Solar models from Upstage are now available in Amazon SageMaker JumpStart

This blog post is co-written with Hwalsuk Lee at Upstage.

Today, we’re excited to announce that the Solar foundation model developed by Upstage is now available for customers using Amazon SageMaker JumpStart. Solar is a large language model (LLM) 100% pre-trained with Amazon SageMaker. Its compact size and strong track record make it well suited to purpose-training, which makes it versatile across languages, domains, and tasks.

You can now use the Solar Mini Chat and Solar Mini Chat – Quant pretrained models within SageMaker JumpStart. SageMaker JumpStart is the machine learning (ML) hub of SageMaker that provides access to foundation models in addition to built-in algorithms to help you quickly get started with ML.

In this post, we walk through how to discover and deploy the Solar model via SageMaker JumpStart.

What’s the Solar model?

Solar is a compact and powerful model for English and Korean languages. It’s specifically fine-tuned for multi-turn chat purposes, demonstrating enhanced performance across a wide range of natural language processing tasks.

The Solar Mini Chat model is based on Solar 10.7B, which builds on a 32-layer Llama 2 architecture initialized with pre-trained weights from Mistral 7B, a model compatible with the Llama 2 architecture. Fine-tuning for multi-turn chat equips Solar Mini Chat to handle extended conversations more effectively, making it particularly well suited to interactive applications. Solar 10.7B is built with a scaling method called depth up-scaling (DUS), which comprises depth-wise scaling and continued pretraining. DUS allows for a much more straightforward and efficient enlargement of smaller models than other scaling methods such as mixture of experts (MoE).

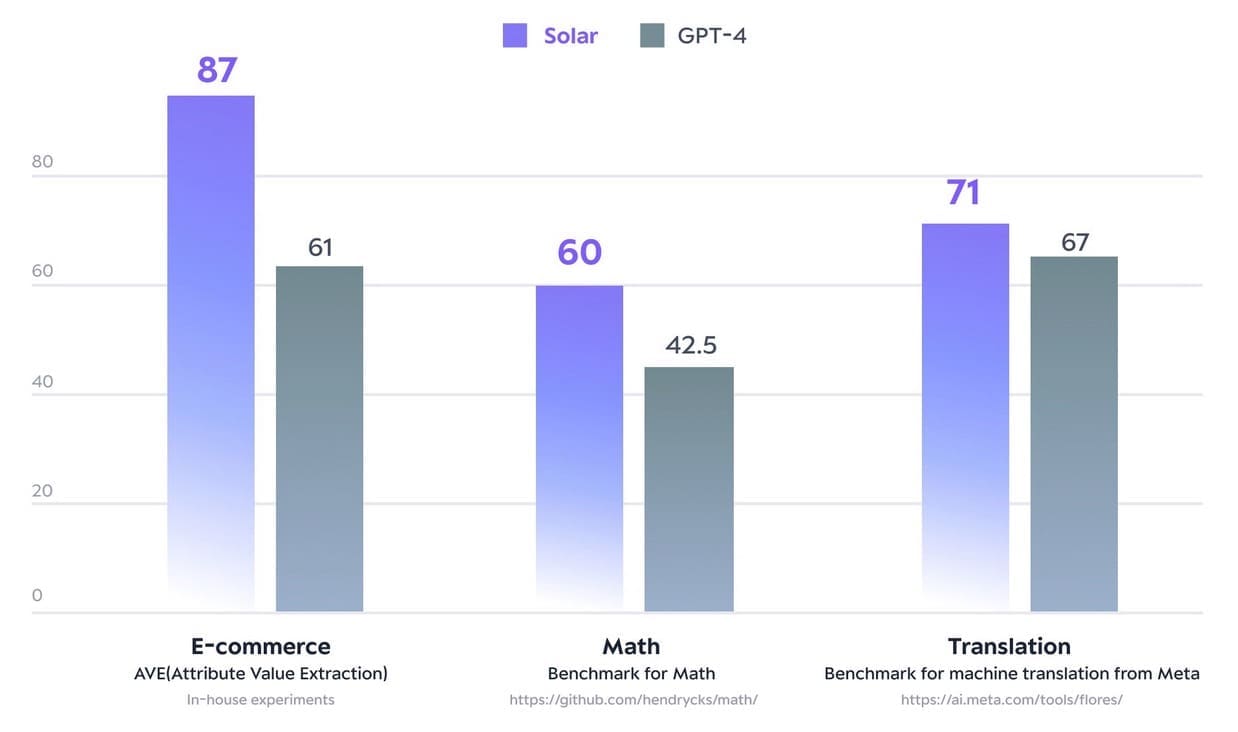
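The depth-wise scaling step of DUS can be sketched as simple index arithmetic. This is a minimal illustration, not Upstage's implementation: it assumes the configuration described in the Solar 10.7B paper (a 32-layer base model, two copies with 8 layers trimmed where they meet, for 48 layers in total), and the function name and parameters are hypothetical. It covers only the layer layout, not the continued pretraining that follows.

```python
def depth_up_scale(num_layers: int, num_removed: int) -> list[int]:
    """Return base-model layer indices in the order they appear in the scaled model."""
    if not 0 <= num_removed < num_layers:
        raise ValueError("num_removed must be in [0, num_layers)")
    # Copy 1: the base model without its final `num_removed` layers.
    first_copy = list(range(0, num_layers - num_removed))
    # Copy 2: a duplicate of the base model without its first `num_removed` layers.
    second_copy = list(range(num_removed, num_layers))
    # Stack the two trimmed copies to form the deeper model.
    return first_copy + second_copy


# Assumed values: 32 base layers, 8 layers removed from each copy.
plan = depth_up_scale(num_layers=32, num_removed=8)
print(len(plan))    # 48
print(plan[22:26])  # [22, 23, 8, 9]
```

The second printed line shows the seam between the two copies: the scaled model runs base layers 0 to 23 and then continues from base layer 8 to 31.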

In December 2023, the Solar 10.7B model reached the top of the Hugging Face Open LLM Leaderboard. Using notably fewer parameters, Solar 10.7B delivers responses comparable to GPT-3.5, but is 2.5 times faster. In addition to topping the Open LLM Leaderboard, purpose-trained Solar 10.7B models outperform GPT-4 on certain domains and tasks.

The following figure illustrates some of these metrics:

source: https://www.upstage.ai/solar-llm

With SageMaker JumpStart, you can deploy two pre-trained models based on Solar 10.7B: Solar Mini Chat and a quantized version of Solar Mini Chat, both optimized for chat applications in English and Korean. The Solar Mini Chat model provides an advanced grasp of Korean language nuances, which significantly elevates user interactions in chat environments. It provides precise responses to user inputs, ensuring clearer communication and more efficient problem resolution in English and Korean chat applications.

Get started with Solar models in SageMaker JumpStart

To get started with Solar models, you can use SageMaker JumpStart, a fully managed ML hub service to deploy pre-built ML models into a production-ready hosted environment. You can access Solar models through SageMaker JumpStart in Amazon SageMaker Studio, a web-based integrated development environment (IDE) where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models.

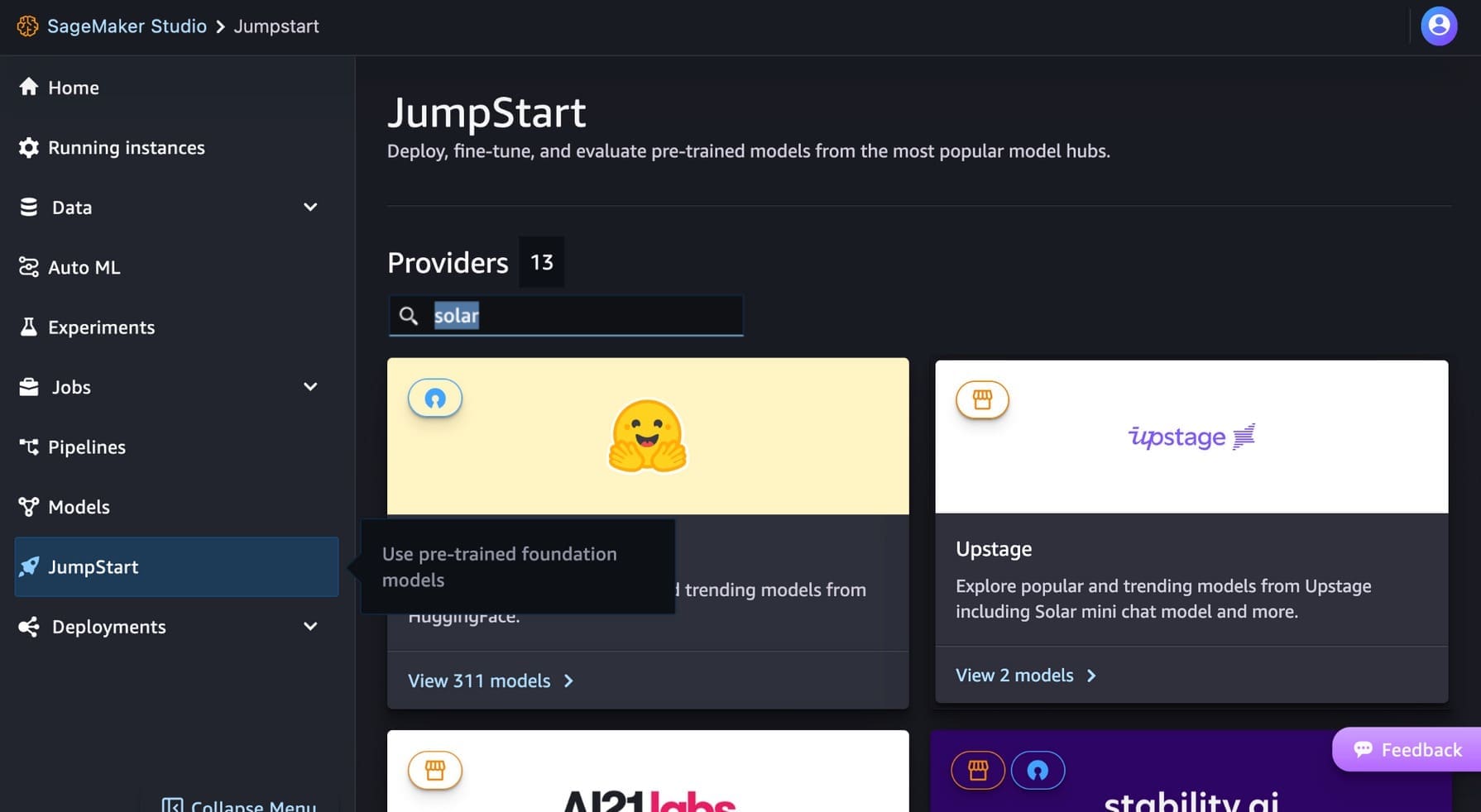

On the SageMaker Studio console, choose JumpStart in the navigation pane. You can enter “solar” in the search bar to find Upstage’s Solar models.

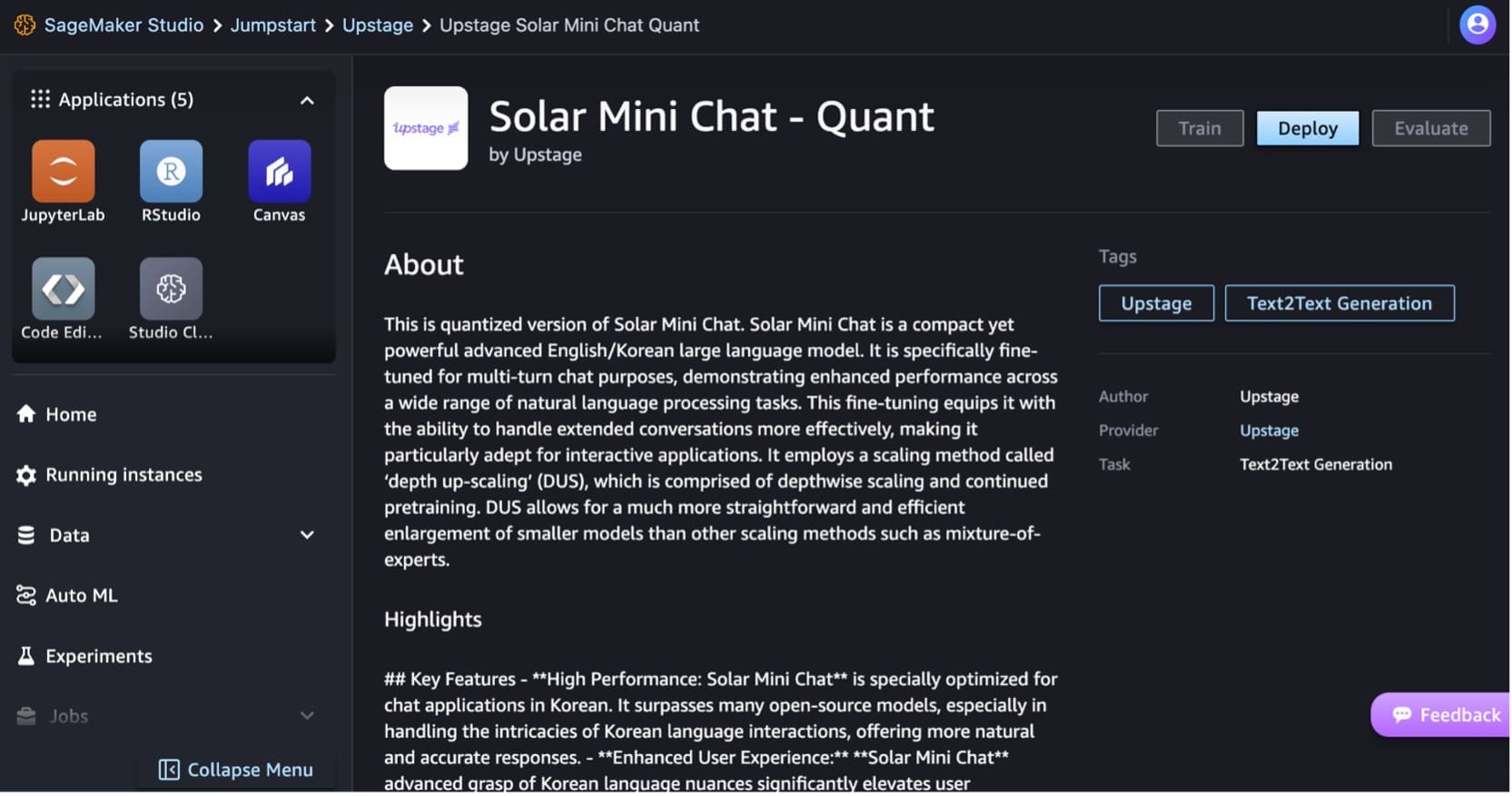

Let’s deploy the Solar Mini Chat – Quant model. Choose the model card to view details about the model such as the license, data used to train, and how to use the model. You will also find a Deploy option, which takes you to a landing page where you can test inference with an example payload.

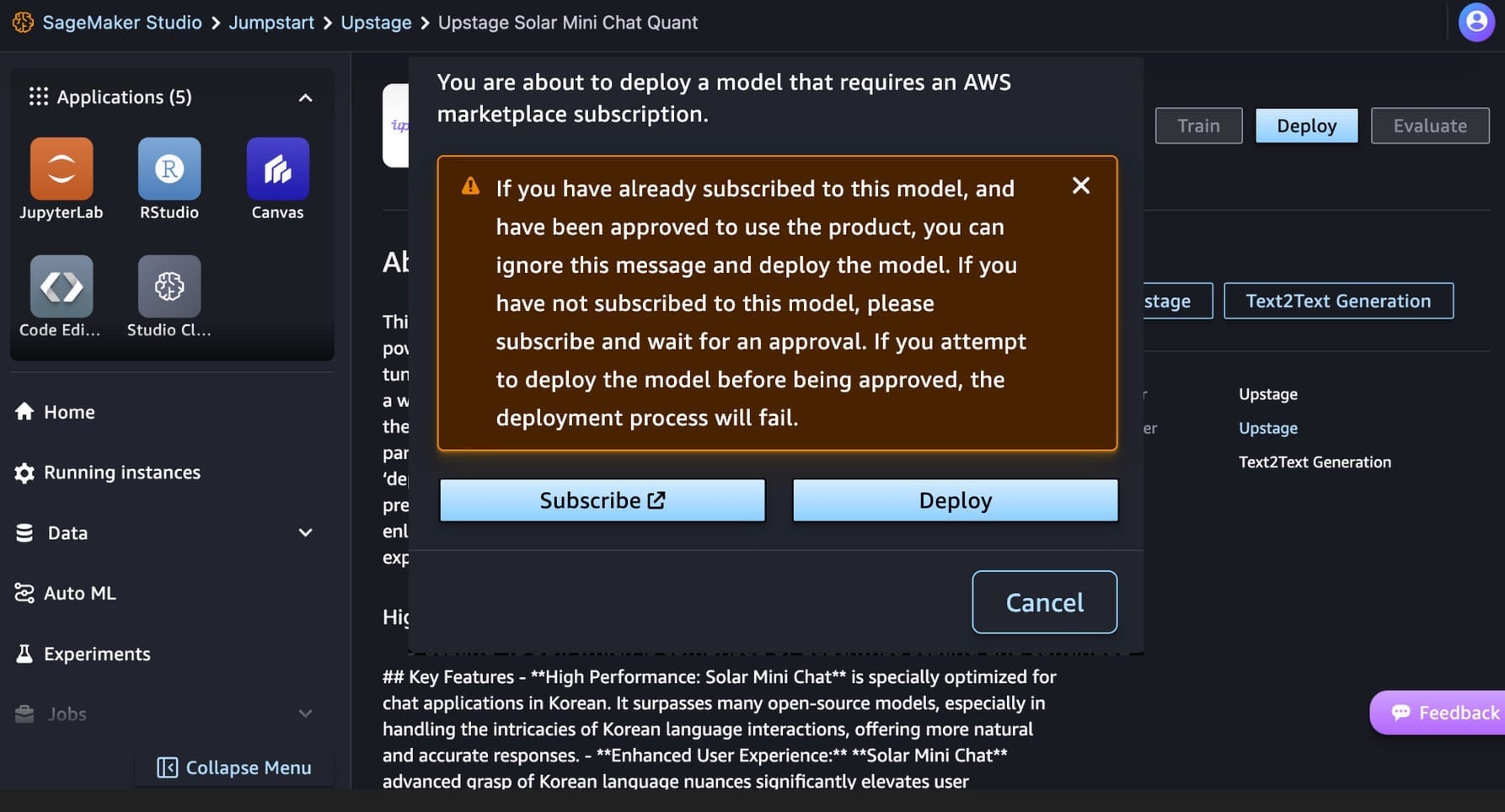

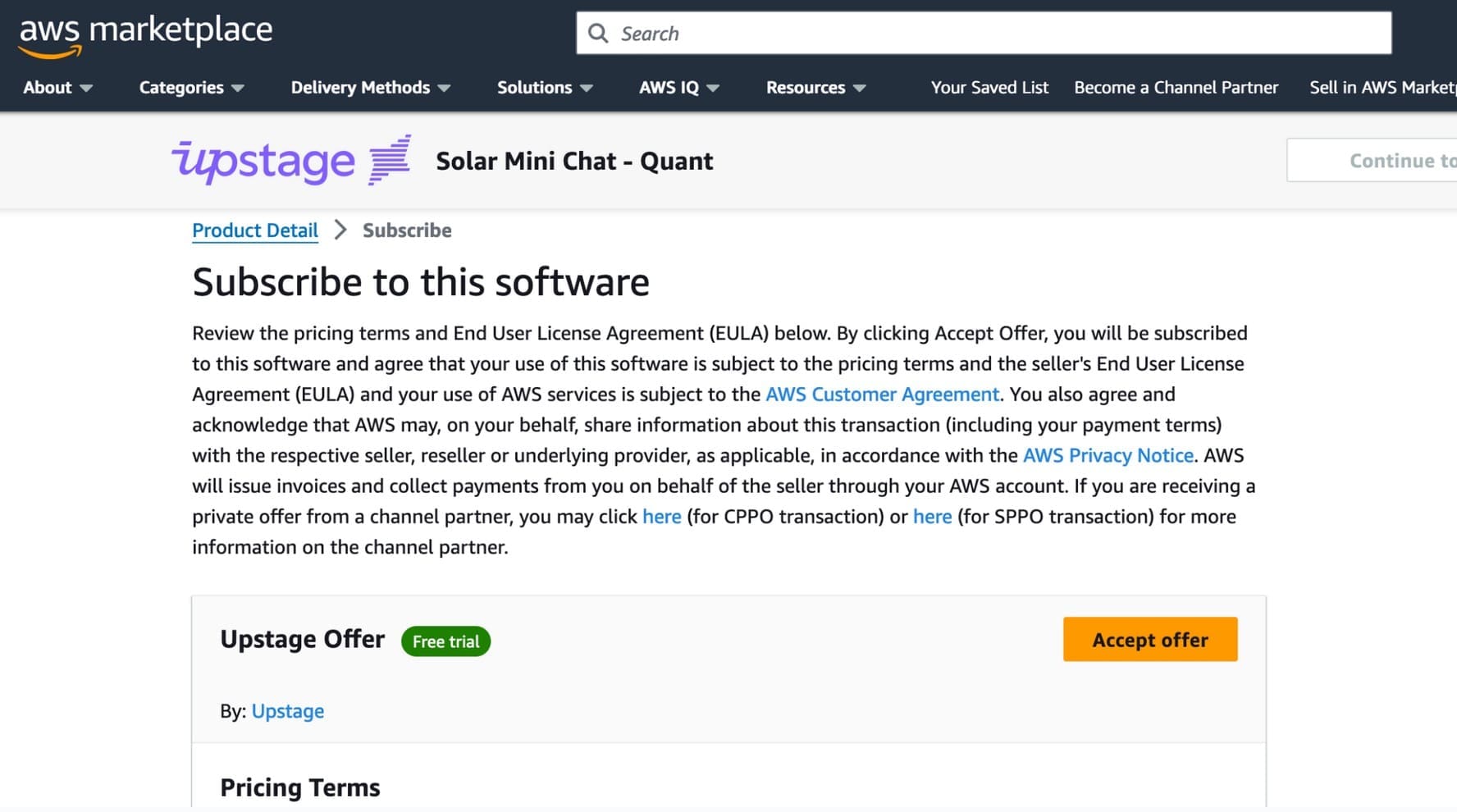

This model requires an AWS Marketplace subscription. If you have already subscribed to this model, and have been approved to use the product, you can deploy the model directly.

If you have not subscribed to this model, choose Subscribe, go to AWS Marketplace, review the pricing terms and End User License Agreement (EULA), and choose Accept offer.

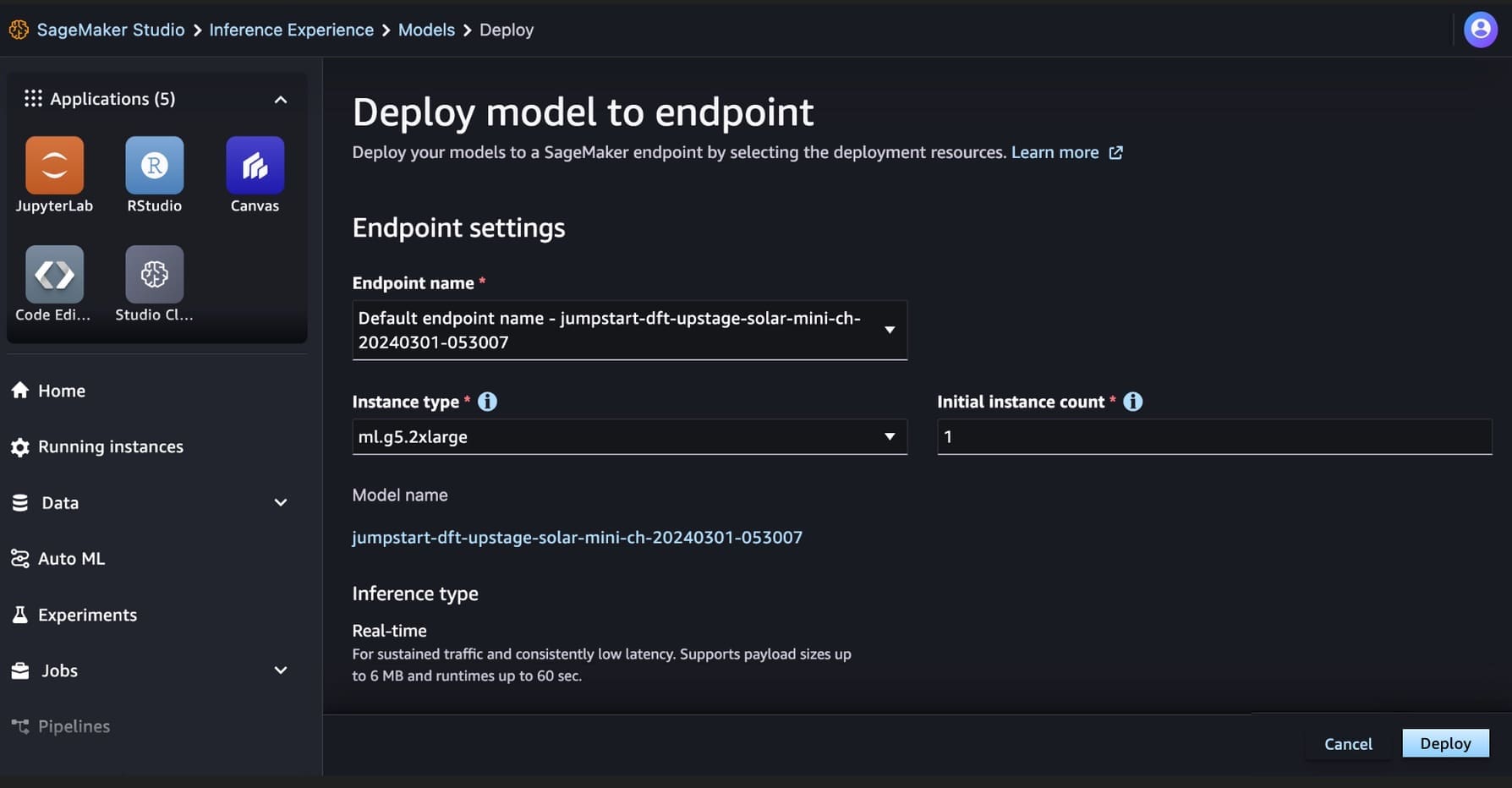

After you’re subscribed to the model, you can deploy your model to a SageMaker endpoint by selecting the deployment resources, such as instance type and initial instance count. Choose Deploy and wait for an endpoint to be created for the model inference. You can select an ml.g5.2xlarge instance as a cheaper option for inference with the Solar model.

When your SageMaker endpoint is successfully created, you can test it through the various SageMaker application environments.
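If you prefer to check from code whether the endpoint is ready, the following is a minimal sketch that polls the endpoint status. It relies on the boto3 SageMaker client's `describe_endpoint` call and its `EndpointStatus` field; the function name, polling interval, and timeout are illustrative assumptions, and the endpoint name is a placeholder.

```python
import time


def wait_until_in_service(sm_client, endpoint_name, poll_seconds=30, timeout_seconds=1800):
    """Poll DescribeEndpoint until the endpoint is InService, fails, or the timeout passes."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointStatus"]
        if status == "InService":
            return status
        if status == "Failed":
            raise RuntimeError(f"Endpoint {endpoint_name} failed to deploy")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Endpoint {endpoint_name} still {status} after {timeout_seconds}s")
        time.sleep(poll_seconds)


# Usage (requires AWS credentials and a real endpoint, so it is left commented out):
# import boto3
# wait_until_in_service(boto3.client("sagemaker"), "<your-endpoint-name>")
```

Passing the client in as an argument keeps the function easy to try with a stub. boto3 also offers a built-in `endpoint_in_service` waiter on the SageMaker client that serves the same purpose.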

Run your code for Solar models in SageMaker Studio JupyterLab

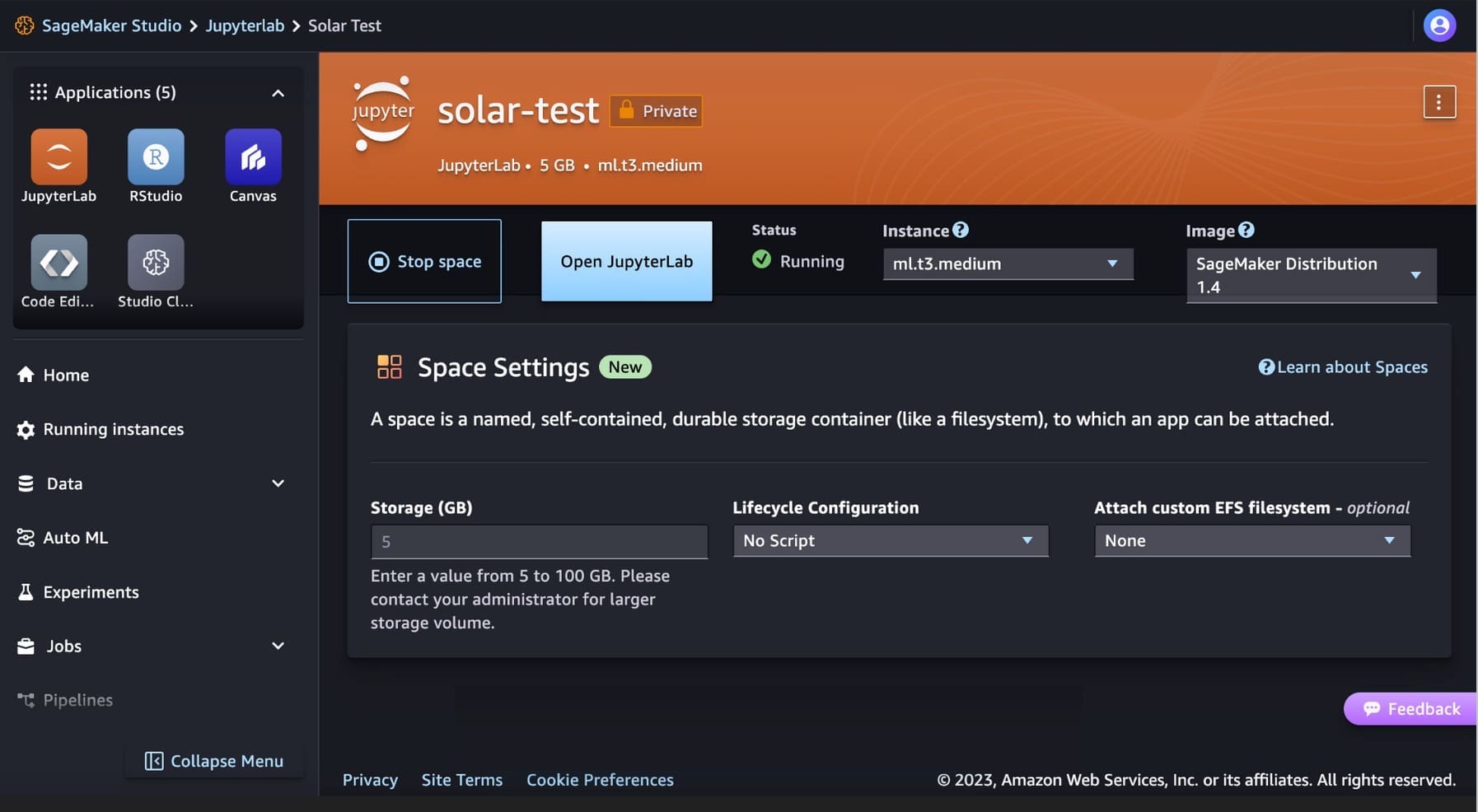

SageMaker Studio supports various application development environments, including JupyterLab, a set of capabilities that augment the fully managed notebook offering. It includes kernels that start in seconds, a preconfigured runtime with popular data science and ML frameworks, and high-performance private block storage. For more information, see SageMaker JupyterLab.

Create a JupyterLab space within SageMaker Studio that manages the storage and compute resources needed to run the JupyterLab application.

You can find the code showing the deployment of Solar models on SageMaker JumpStart, along with an example of how to use the deployed model, in the GitHub repo. The deployment code uses the default instance ml.g5.2xlarge for the Solar Mini Chat – Quant model inference endpoint.

Solar models support a request/response payload compatible with OpenAI’s Chat Completions endpoint. You can test single-turn or multi-turn chat examples with Python.

import json

import boto3

# Get a SageMaker runtime client
sagemaker_runtime = boto3.client("sagemaker-runtime")

# Name of the endpoint you deployed. If you generated it with
# sagemaker.utils.name_from_base(model_name) at deployment time, reuse that
# value here, because calling name_from_base again returns a new name.
endpoint_name = "<your-endpoint-name>"

# Multi-turn chat prompt example
payload = {
    "messages": [
        {
            "role": "system",
            "content": "You are a helpful assistant."
        },
        {
            "role": "user",
            "content": "Can you provide a Python script to merge two sorted lists?"
        },
        {
            "role": "assistant",
            "content": """Sure, here is a Python script to merge two sorted lists:

```python
def merge_lists(list1, list2):
    return sorted(list1 + list2)
```
"""
        },
        {
            "role": "user",
            "content": "Can you provide an example of how to use this function?"
        }
    ]
}

# Get response from the model
response = sagemaker_runtime.invoke_endpoint(
    EndpointName=endpoint_name,
    ContentType="application/json",
    Body=json.dumps(payload),
)
result = json.loads(response["Body"].read().decode())
print(result)

You have successfully performed a real-time inference with the Solar Mini Chat model.

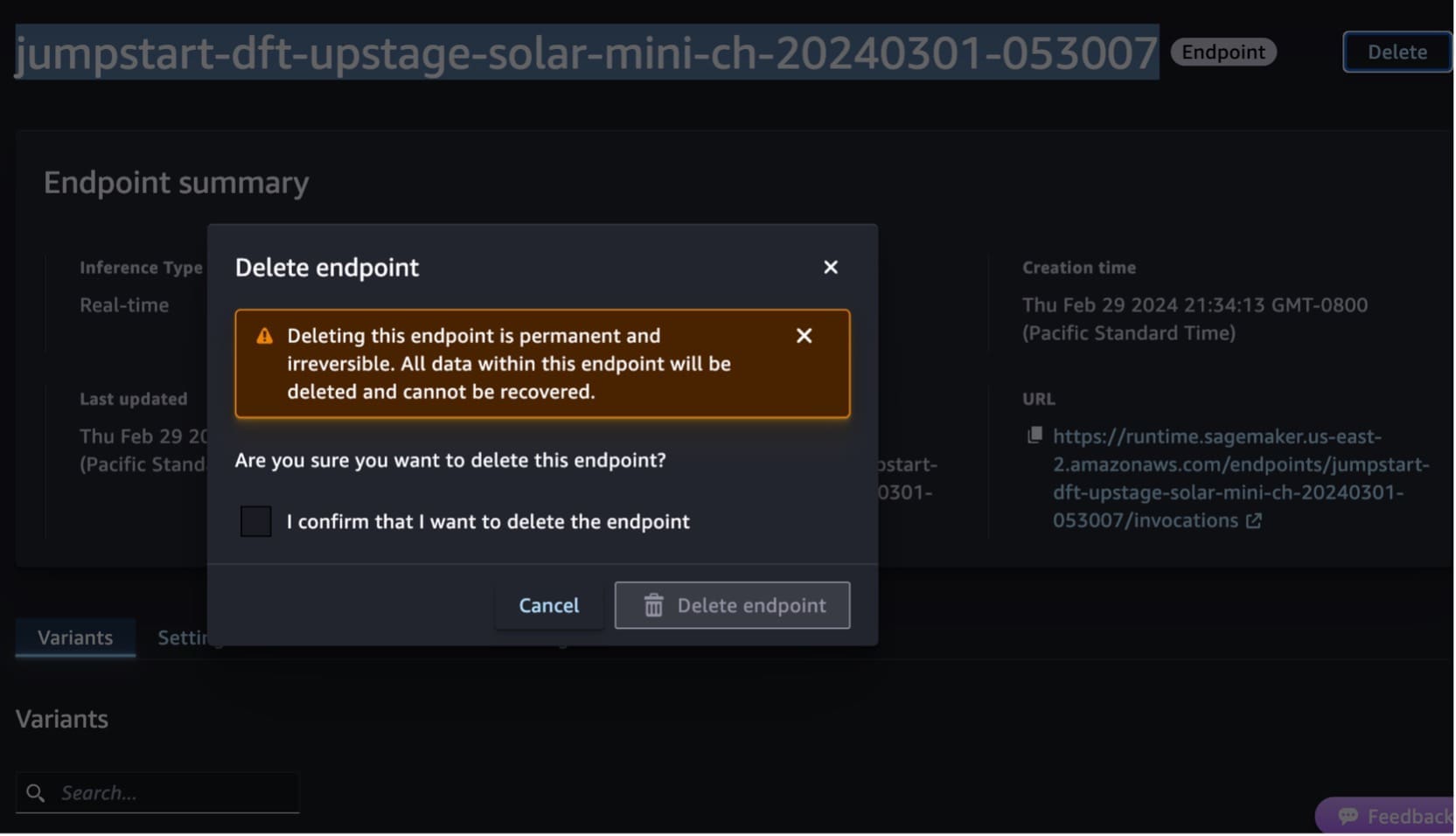
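To continue a multi-turn conversation, you need to pull the assistant's text out of the response and append it to the message list before sending the next user turn. The following is a minimal sketch under one loudly stated assumption: that the response follows the OpenAI chat completion layout, with the text at `choices[0]["message"]["content"]`. Check this against the `result` you printed before relying on it. The helper names and the sample response are hypothetical.

```python
def extract_reply(result: dict) -> str:
    """Return the assistant text from an OpenAI-style chat completion response."""
    # Assumed shape: {"choices": [{"message": {"role": "assistant", "content": "..."}}]}
    return result["choices"][0]["message"]["content"]


def append_turn(messages: list, reply: str, next_user_message: str) -> list:
    """Return a new message list with the reply and the next user message appended."""
    return messages + [
        {"role": "assistant", "content": reply},
        {"role": "user", "content": next_user_message},
    ]


# Stand-in for the parsed endpoint response, so the sketch runs without an endpoint.
sample_result = {
    "choices": [
        {"message": {"role": "assistant", "content": "merge_lists([1, 3], [2, 4])"}}
    ]
}
history = [{"role": "user", "content": "Can you provide an example of how to use this function?"}]
reply = extract_reply(sample_result)
history = append_turn(history, reply, "What is its time complexity?")
print(history[-2]["content"])
```

The updated `history` can then be sent as the `messages` value of the next request.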

Clean up

After you have tested the endpoint, delete the SageMaker inference endpoint and delete the model to avoid incurring charges.

You can also run the following code to delete the endpoint and model in the notebook of SageMaker Studio JupyterLab:

# Delete the endpoint and its endpoint configuration
model.sagemaker_session.delete_endpoint(endpoint_name)
model.sagemaker_session.delete_endpoint_config(endpoint_name)

# Delete the model
model.delete_model()

For more information, see Delete Endpoints and Resources. Additionally, you can shut down the SageMaker Studio resources that are no longer required.

Conclusion

In this post, we showed you how to get started with Upstage’s Solar models in SageMaker Studio and deploy the model for inference. We also showed you how you can run your Python sample code on SageMaker Studio JupyterLab.

Because Solar models are already pre-trained, they can help lower training and infrastructure costs and enable customization for your generative AI applications.

Try it out on the SageMaker JumpStart console or SageMaker Studio console! You can also watch the following video, Try ‘Solar’ with Amazon SageMaker.

This guidance is for informational purposes only. You should still perform your own independent assessment, and take measures to ensure that you comply with your own specific quality control practices and standards, and the local rules, laws, regulations, licenses, and terms of use that apply to you, your content, and the third-party model referenced in this guidance. AWS has no control or authority over the third-party model referenced in this guidance, and does not make any representations or warranties that the third-party model is secure, virus-free, operational, or compatible with your production environment and standards. AWS does not make any representations, warranties, or guarantees that any information in this guidance will result in a particular outcome or result.

About the Authors

Channy Yun is a Principal Developer Advocate at AWS, and is passionate about helping developers build modern applications on the latest AWS services. He is a pragmatic developer and blogger at heart, and he loves community-driven learning and sharing of technology.

Hwalsuk Lee is Chief Technology Officer (CTO) at Upstage. He has worked for Samsung Techwin, NCSOFT, and Naver as an AI Researcher. He is pursuing his PhD in Computer and Electrical Engineering at the Korea Advanced Institute of Science and Technology (KAIST).

Brandon Lee is a Senior Solutions Architect at AWS, and primarily helps large educational technology customers in the Public Sector. He has over 20 years of experience leading application development at global companies and large corporations.
