Ebbey Thomas specializes in strategizing and developing custom AWS Landing Zones with a focus on leveraging Generative AI to enhance cloud infrastructure automation. In his role at AWS Professional Services, Ebbey’s expertise is central to architecting solutions that streamline cloud adoption, ensuring a secure and efficient operational framework for AWS users. He is known for his innovative approach to cloud challenges and his commitment to driving forward the capabilities of cloud services.

Using Agents for Amazon Bedrock to interactively generate infrastructure as code

In the diverse toolkit available for deploying cloud infrastructure, Agents for Amazon Bedrock offers a practical and innovative option for teams looking to enhance their infrastructure as code (IaC) processes. Agents for Amazon Bedrock automates the prompt engineering and orchestration of user-requested tasks. After being configured, an agent builds the prompt and augments it with your company-specific information to provide responses back to the user in natural language.

This solution shows how Amazon Bedrock agents can be configured to accept cloud architecture diagrams, automatically analyze them, and generate Terraform or AWS CloudFormation templates. This solution uses Retrieval Augmented Generation (RAG) to ensure the generated scripts adhere to organizational needs and industry standards. A key feature is the agent’s ability to dynamically interact with users. During the IaC generation process, Amazon Bedrock agents actively probe for additional information by analyzing the provided diagrams and querying the user to fill any gaps. This interaction allows for a more tailored and precise IaC configuration.
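As a rough illustration of the RAG step described above, the following Python sketch retrieves matching guideline text from a knowledge base and folds it into a generation prompt. It assumes a boto3 `bedrock-agent-runtime` client is passed in as `kb_client`; the function names, the prompt wording, and the knowledge base ID are hypothetical and are not taken from the solution's repository.

```python
def retrieve_guidelines(kb_client, knowledge_base_id, query, max_results=3):
    """Return the text of the top matching knowledge base chunks.

    kb_client is assumed to be boto3.client("bedrock-agent-runtime").
    """
    response = kb_client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": max_results}
        },
    )
    return [result["content"]["text"] for result in response["retrievalResults"]]


def build_iac_prompt(architecture_summary, guidelines):
    """Combine the confirmed architecture summary with retrieved guidelines.

    The prompt wording is illustrative only.
    """
    guideline_text = "\n".join(f"- {g}" for g in guidelines)
    return (
        "Generate Terraform code for the architecture described below.\n"
        "Follow these organization guidelines:\n"
        f"{guideline_text}\n\n"
        f"Architecture summary:\n{architecture_summary}\n"
    )
```

Passing the client in as a parameter keeps the retrieval logic easy to exercise with a stub before it is wired to a real knowledge base.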

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading artificial intelligence (AI) companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

In this blog post, we explore how Agents for Amazon Bedrock can be used to generate customized IaC scripts that comply with organization standards, directly from uploaded architecture diagrams. This helps accelerate deployments, reduce errors, and ensure adherence to security guidelines.

Solution overview

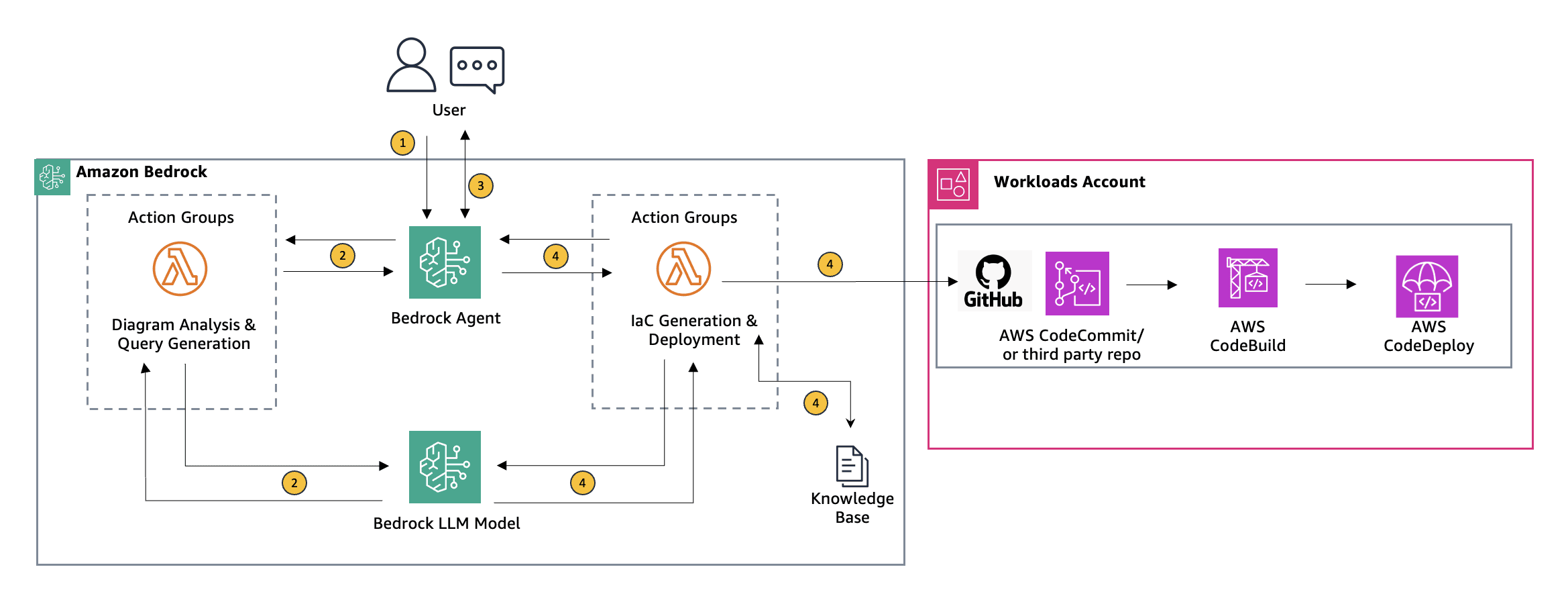

Before we explore the deployment process, let’s walk through the key steps of the architecture as illustrated in Figure 1.

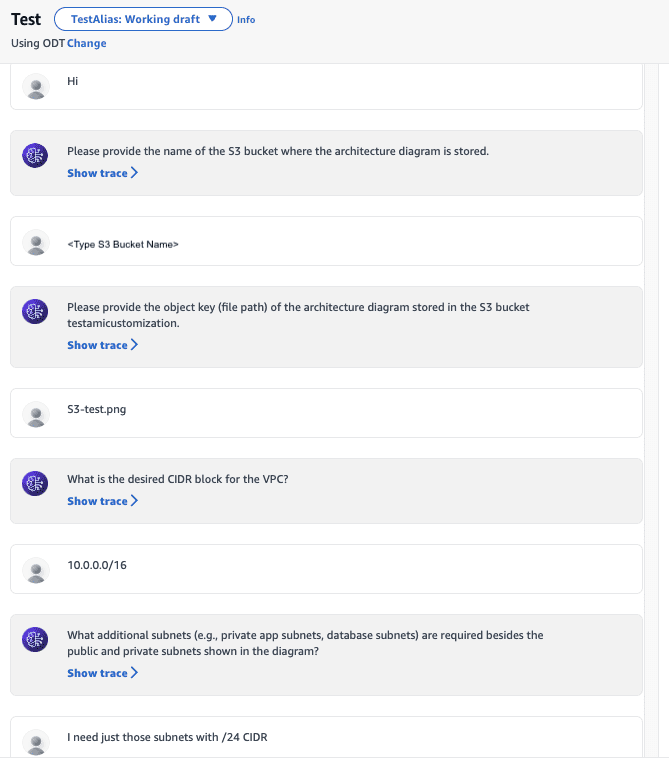

- Initial Input through the Amazon Bedrock chat console: The user begins by entering the name of their Amazon Simple Storage Service (Amazon S3) bucket and the object (key) name where the architecture diagram is stored into the Amazon Bedrock chat console. For instance, if an architecture diagram is saved as s3://testbucket/architecturediagram.png, the user will enter testbucket as the S3 bucket name and architecturediagram.png as the object name.

- Diagram analysis and query generation: The Amazon Bedrock agent forwards the architecture diagram location to an action group that invokes an AWS Lambda function. This function retrieves the architecture diagram from the specified S3 bucket, analyzes it using the Amazon Bedrock model, and produces a summary of the diagram. It also generates questions about any missing components, dependencies, or parameter values that are needed to create IaC for AWS services. This detailed response is then sent back to the agent.

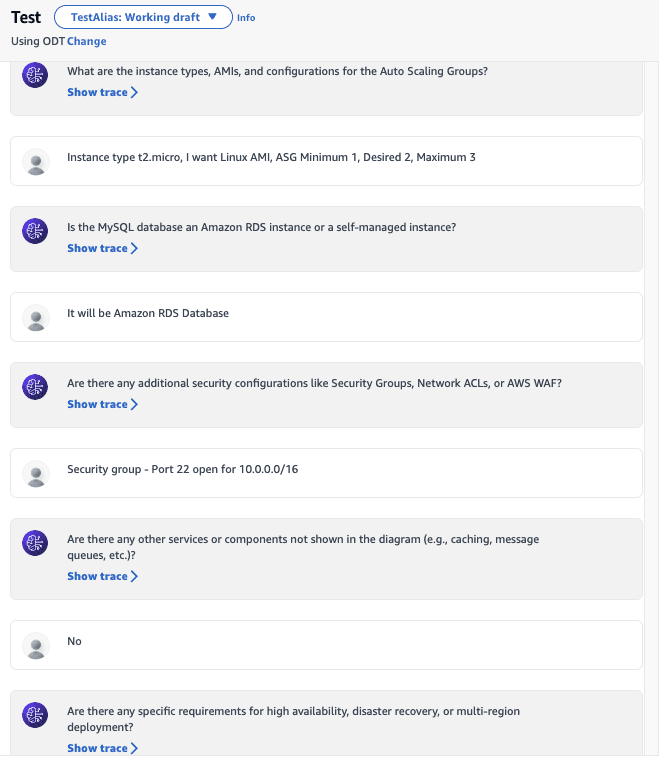

- Interaction and user confirmation: The agent displays the generated questions to the user and records their responses. Next, the agent provides a comprehensive summary of the architecture diagram along with additional inputs provided by the user. Users then have the opportunity to approve this configuration or suggest any necessary adjustments. On receiving confirmation from the user, the agent passes this information to the second action group to generate IaC.

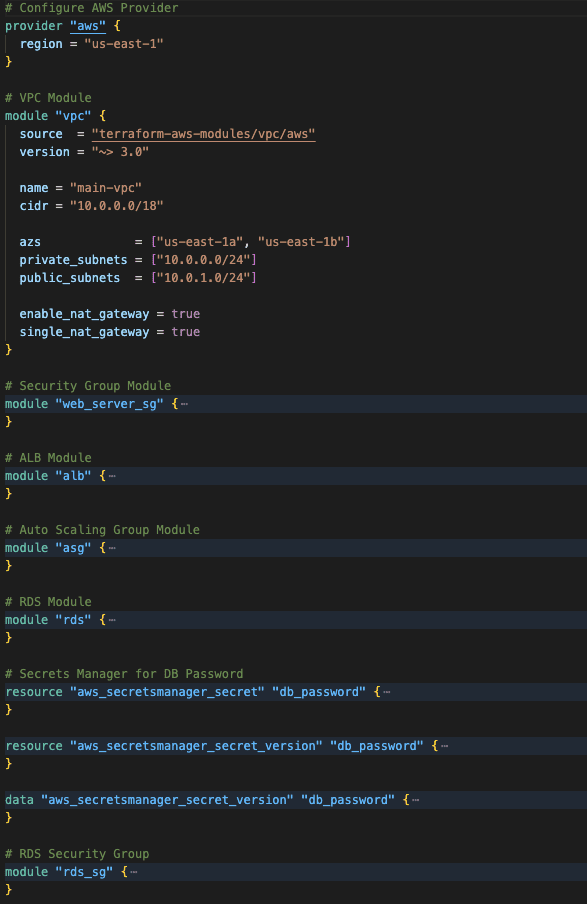

- IaC generation and deployment: The second action group invokes a Lambda function that processes the user’s input data along with organization-specific coding guidelines from Knowledge Bases for Amazon Bedrock to create the IaC. After being generated, the IaC is automatically pushed to a designated GitHub repository.
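The final step above, pushing the generated IaC to GitHub, could be sketched as follows. This is a minimal example that assumes the GitHub REST API "create or update file contents" endpoint and a personal access token; the post does not say which mechanism the repository's Lambda function uses, so the function names and arguments here are hypothetical.

```python
import base64
import json
import urllib.request

GITHUB_API = "https://api.github.com"


def build_github_put_request(owner, repo, path, content, token, message, branch="main"):
    """Build a PUT request that creates a file in a GitHub repository."""
    url = f"{GITHUB_API}/repos/{owner}/{repo}/contents/{path}"
    payload = {
        "message": message,  # commit message
        # The contents endpoint expects the file body as base64 text.
        "content": base64.b64encode(content.encode("utf-8")).decode("ascii"),
        "branch": branch,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


def push_file(request):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Updating a file that already exists additionally requires the current blob `sha` in the payload, which this sketch omits. In a Lambda function, the token would typically come from a secret store rather than from code.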

Prerequisites

You should have the following:

- Understanding of Agents for Amazon Bedrock, prompt engineering, Knowledge Bases for Amazon Bedrock, Lambda functions, and AWS Identity and Access Management (IAM).

- An AWS account with the appropriate IAM permissions to create Amazon Bedrock agents and knowledge bases, Lambda functions, and IAM roles.

- A service role for Agents for Amazon Bedrock.

- A GitHub account with a repository to store the generated Terraform scripts.

Deployment steps

The solution can be used to create IaC (using Terraform or CloudFormation) by inputting the architecture diagram. For the purpose of this blog post, we focus on creating Terraform IaC. There are four steps to deploy the solution.

Step 1: Configure an Amazon Bedrock knowledge base: Configuring a knowledge base (KB) enables you to access information about organization standard Terraform modules. Follow these steps to set up your KB:

- Sign in to the AWS Management Console, open the Amazon Bedrock console, and go to the Knowledge Base section. This is your starting point for creating a new KB.

- Enter a clear and descriptive name that reflects the purpose of your KB, such as Terraform KB.

- Assign an IAM role with the necessary permissions. It’s typically best to let Amazon Bedrock create this role for you to ensure it has the correct permissions.

- Define the data sources by uploading a JSON file to an S3 bucket with encryption enabled for security. This file should contain a structured list of AWS services and Terraform modules. For the JSON structure, use the example provided in the repository.

- Choose the default embeddings model. For most use cases, the Amazon Titan Embeddings G1 – Text model will suffice. It’s pre-configured and ready to use, simplifying the process.

- Use the managed vector store option to let Amazon Bedrock create and manage the vector store for you in Amazon OpenSearch Serverless.

- Select the KB and, in the Data source section, choose Sync to begin data ingestion. A green success banner appears when data ingestion completes successfully.

- Double-check all entered information for accuracy. Pay special attention to the S3 bucket URI and IAM role details.
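The Sync step above can also be triggered programmatically. The following sketch assumes a boto3 `bedrock-agent` client is passed in as `agent_client` and that you already know the knowledge base and data source IDs; the function name and polling defaults are illustrative choices.

```python
import time

# Statuses after which an ingestion job no longer changes.
TERMINAL_STATUSES = ("COMPLETE", "FAILED", "STOPPED")


def sync_data_source(agent_client, knowledge_base_id, data_source_id,
                     poll_seconds=5, max_polls=60):
    """Start an ingestion job and poll until it reaches a terminal status.

    agent_client is assumed to be boto3.client("bedrock-agent").
    """
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    polls = 0
    while job["status"] not in TERMINAL_STATUSES:
        if polls >= max_polls:
            raise TimeoutError("Ingestion job did not finish in time")
        time.sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
        polls += 1
    return job["status"]
```

A returned status of `COMPLETE` corresponds to the success banner in the console; `FAILED` is worth investigating before you configure the agent.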

Step 2: Configure the Amazon Bedrock agent:

- Open the Amazon Bedrock console, select Agents in the left navigation panel, then choose Create Agent.

- Enter agent details including agent name and description (optional).

- Next, grant the agent permissions to AWS services through the IAM service role. This gives your agent access to required services, such as Lambda.

- Select a foundation model from Amazon Bedrock (for example, Anthropic Claude 3 Sonnet).

- To create Terraform code using Agents for Amazon Bedrock, attach the following instruction to the agent:

“Assist users in creating IaC for provided architecture diagram. Ask user for S3 bucket name and object name where the diagram is stored. Upon receiving the information, run analysis-query action group. Give structured summary and ask user only the questions that are received from action group response. Take the answers from the user and give detailed summary to the user. Take approval from user. When approved, give all that information to final draft along with S3 bucket name, object name as input for the iac-deployment action group and run the action group.”
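If you prefer to script this step instead of using the console, a minimal sketch could look like the following. It assumes a boto3 `bedrock-agent` client is passed in as `agent_client`; the agent name, role ARN, description, and model ID are placeholders to replace with your own values.

```python
# The instruction text from this post, passed to the agent unchanged.
AGENT_INSTRUCTION = (
    "Assist users in creating IaC for provided architecture diagram. "
    "Ask user for S3 bucket name and object name where the diagram is stored. "
    "Upon receiving the information, run analysis-query action group. "
    "Give structured summary and ask user only the questions that are received "
    "from action group response. Take the answers from the user and give "
    "detailed summary to the user. Take approval from user. When approved, "
    "give all that information to final draft along with S3 bucket name, "
    "object name as input for the iac-deployment action group and run the "
    "action group."
)


def create_iac_agent(agent_client, name, role_arn,
                     model_id="anthropic.claude-3-sonnet-20240229-v1:0"):
    """Create the agent and return its ID.

    agent_client is assumed to be boto3.client("bedrock-agent");
    model_id is an assumed identifier for Anthropic Claude 3 Sonnet.
    """
    response = agent_client.create_agent(
        agentName=name,
        agentResourceRoleArn=role_arn,
        foundationModel=model_id,
        instruction=AGENT_INSTRUCTION,
        description="Generates Terraform IaC from architecture diagrams",
    )
    return response["agent"]["agentId"]
```

The agent still needs its action groups attached and must be prepared before it can be invoked.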

Step 3: Configure agent action groups: After the initial agent configuration and adding the above instruction to the agent, two action groups need to be added to the agent to create Terraform IaC from an architecture diagram.

- Create an action group linked to a Lambda function (to create a Lambda function, see Getting started with Lambda). This function analyzes the architecture diagram and generates questions about any missing components, dependencies, or parameter values necessary for IaC creation of AWS services. The agent invokes this action group after the user provides the S3 bucket and object details. The responses are then relayed back to the agent, which conducts an interactive session to collect any missing information from the user. See the Lambda code and OpenAPI schema in the repository.

- Establish a second action group tied to a different Lambda function responsible for creating the Terraform code and uploading it to a GitHub repository. This group is invoked only after the user has reviewed and approved the infrastructure configuration. See Lambda code and OpenAPI-schema in the repository.
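To make the first action group more concrete, here is a hedged sketch of what its Lambda function might look like. It is not the code from the repository: the property names `bucket_name` and `object_name`, the prompt text, and the model ID are assumptions. The event and response shapes follow the format that Agents for Amazon Bedrock uses for action groups defined with an OpenAPI schema.

```python
import base64
import json

MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"  # assumed model identifier
PROMPT = (  # illustrative prompt wording
    "Summarize this AWS architecture diagram, then list questions about any "
    "missing components, dependencies, or parameter values needed to write "
    "Terraform for it."
)


def get_properties(event):
    """Flatten parameters and request body properties into one dict."""
    props = {p["name"]: p["value"] for p in event.get("parameters", [])}
    for body in event.get("requestBody", {}).get("content", {}).values():
        for p in body.get("properties", []):
            props[p["name"]] = p["value"]
    return props


def analyze_diagram(s3_client, bedrock_client, bucket, key, media_type="image/png"):
    """Fetch the diagram from S3 and ask the model to describe it."""
    image = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    request = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 2000,
        "messages": [{"role": "user", "content": [
            {"type": "image", "source": {
                "type": "base64", "media_type": media_type,
                "data": base64.b64encode(image).decode("ascii")}},
            {"type": "text", "text": PROMPT},
        ]}],
    }
    response = bedrock_client.invoke_model(modelId=MODEL_ID, body=json.dumps(request))
    return json.loads(response["body"].read())["content"][0]["text"]


def handle_event(event, s3_client, bedrock_client):
    """Run the analysis and wrap it in the response format the agent expects."""
    props = get_properties(event)
    analysis = analyze_diagram(
        s3_client, bedrock_client, props["bucket_name"], props["object_name"])
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": event["apiPath"],
            "httpMethod": event["httpMethod"],
            "httpStatusCode": 200,
            "responseBody": {
                "application/json": {"body": json.dumps({"analysis": analysis})}
            },
        },
    }


def lambda_handler(event, context):
    import boto3  # imported here so the helpers above can be tested without AWS
    return handle_event(event, boto3.client("s3"), boto3.client("bedrock-runtime"))
```

The second action group's function would follow the same event and response pattern, with the analysis call swapped for IaC generation and the repository upload.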

Step 4: Add the action groups to the agent:

- Assign a descriptive name to each action group and detail their functions in the description fields. This helps clarify the purpose of each group within the workflow.

- For each action group, select the appropriate Lambda functions that you set up previously. These functions run the business logic required when an action is invoked. Make sure to choose the correct version of each Lambda function. For additional details, see the section on Action Group Lambda Functions.

- Provide the Amazon S3 URI that links to the API schema for each action group. This schema should include the API’s description, structure, and parameters. The API is crucial for managing the workflow, such as receiving user inputs, invoking Lambda functions to run the process, validating inputs, initiating Terraform module creation, and monitoring the provisioning status. For further guidance, see the section on Action Group OpenAPI Schemas.
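For orientation, the following sketch builds a minimal OpenAPI schema for the diagram analysis action group and uploads it to Amazon S3 so that its URI can be supplied to the agent. The path, operation ID, and property names are hypothetical; use the schemas from the repository for the actual solution. A boto3 S3 client is assumed to be passed in as `s3_client`.

```python
import json


def build_analysis_schema():
    """Return a minimal OpenAPI 3.0 schema for the analysis action group."""
    string_prop = lambda text: {"type": "string", "description": text}
    return {
        "openapi": "3.0.0",
        "info": {
            "title": "Architecture diagram analysis API",
            "version": "1.0.0",
            "description": "Analyzes an architecture diagram stored in Amazon S3.",
        },
        "paths": {
            "/analyze-diagram": {
                "post": {
                    "operationId": "analyzeDiagram",
                    "description": "Summarize the diagram and return follow-up questions.",
                    "requestBody": {
                        "required": True,
                        "content": {"application/json": {"schema": {
                            "type": "object",
                            "properties": {
                                "bucket_name": string_prop("S3 bucket that holds the diagram"),
                                "object_name": string_prop("S3 object key of the diagram"),
                            },
                            "required": ["bucket_name", "object_name"],
                        }}},
                    },
                    "responses": {"200": {
                        "description": "Diagram summary and questions",
                        "content": {"application/json": {"schema": {
                            "type": "object",
                            "properties": {"analysis": string_prop("Summary and questions")},
                        }}},
                    }},
                }
            }
        },
    }


def upload_schema(s3_client, bucket, key, schema):
    """Upload the schema as JSON and return its S3 URI."""
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(schema, indent=2).encode("utf-8"),
        ContentType="application/json",
    )
    return f"s3://{bucket}/{key}"
```

The descriptions matter more than they would in an ordinary API document, because the agent relies on them to decide when to call an operation and which values to pass.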

The following screenshot shows an example of the user interaction with Agents for Amazon Bedrock

The following screenshot shows an example Terraform output
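The same conversation can be driven from code instead of the console. The sketch below assumes a boto3 `bedrock-agent-runtime` client is passed in as `runtime_client` and that the agent has an alias; the function name is hypothetical. Reusing the returned session ID on later calls keeps the agent's questions and your answers in one conversation.

```python
import uuid


def ask_agent(runtime_client, agent_id, agent_alias_id, text, session_id=None):
    """Send one user turn to the agent and return (session_id, reply_text).

    runtime_client is assumed to be boto3.client("bedrock-agent-runtime").
    """
    session_id = session_id or str(uuid.uuid4())
    response = runtime_client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=text,
    )
    parts = []
    # The reply arrives as a stream of events; text is carried in "chunk" events.
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return session_id, "".join(parts)
```

A first call might pass the bucket and object name, and follow-up calls would answer the agent's questions with the same `session_id`.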

Clean up

The services used in this demonstration can incur costs. Complete the following steps to clean up your resources:

- Delete the Lambda functions if they’re no longer required.

- Delete the action groups and the Amazon Bedrock agent that you created.

- Empty and delete the S3 bucket used for storing the architecture diagram.

- Remove the generated Terraform scripts from the GitHub repo.

- Delete the Amazon Bedrock knowledge base if it’s no longer needed.
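Most of these clean-up steps can be scripted. The following sketch assumes boto3 clients for Lambda, `bedrock-agent`, and S3 are passed in, and that all resource names and IDs are known; the function names are hypothetical. It also assumes that deleting the agent removes its action groups, which is worth verifying in your account. It does not touch the GitHub repository.

```python
def empty_and_delete_bucket(s3_client, bucket):
    """Delete every object in the bucket, then the bucket itself."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
    s3_client.delete_bucket(Bucket=bucket)


def clean_up(lambda_client, agent_client, s3_client,
             function_names, agent_id, knowledge_base_id, bucket):
    """Remove the Lambda functions, agent, knowledge base, and diagram bucket."""
    for name in function_names:
        lambda_client.delete_function(FunctionName=name)
    agent_client.delete_agent(agentId=agent_id, skipResourceInUseCheck=True)
    agent_client.delete_knowledge_base(knowledgeBaseId=knowledge_base_id)
    empty_and_delete_bucket(s3_client, bucket)
```

Versioned buckets also need their object versions removed before the bucket can be deleted, and a vector store created for the knowledge base may need to be deleted separately; neither is handled here.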

Conclusion

Agents for Amazon Bedrock uses generative AI to transform architecture diagrams into compliant infrastructure as code (IaC) scripts, such as Terraform configurations and AWS CloudFormation templates, for AWS deployments. This capability helps engineers who are transitioning to the cloud by speeding up cloud adoption while ensuring that deployments adhere to established best practices from the start.

Through the interactive features of Agents for Amazon Bedrock, the automation of IaC generation not only streamlines the initial setup but also improves ongoing operations such as infrastructure management. Although this post concentrates on IaC creation, the interactive capabilities of Agents for Amazon Bedrock can be used across various AWS services, providing a dynamic and comprehensive solution for managing and optimizing cloud infrastructure.

Are you ready to streamline your cloud deployment process with the generative AI of Amazon Bedrock? Start by delving into the Amazon Bedrock User Guide to see how it can facilitate your organization’s transition to the cloud. For specialized assistance, consider engaging with AWS Professional Services to maximize the efficiency and benefits of using Amazon Bedrock. Embrace the potential for a swift, secure, and efficient cloud transformation with Amazon Bedrock. Take the first step today and discover how using generative AI can revolutionize your approach to cloud infrastructure.

About the Author

Akhil Raj Yallamelli is a Cloud Infrastructure Architect at AWS, specializing in optimizing cloud infrastructures for enhanced data security and cost efficiency. He skillfully integrates technical solutions with business strategies to create scalable, reliable, and secure cloud environments. Akhil builds technical solutions focusing on customer business outcomes, incorporating generative AI (Gen AI) technologies to drive innovation. With deep expertise in AWS and a strong background in DevOps methodologies throughout the software development life cycle (SDLC), Akhil leads critical implementation and migration projects. He holds an MS degree in Computer Science. Outside of his professional work, Akhil enjoys watching and playing sports.
