Get the most from Amazon Titan Text Premier

Amazon Titan Text Premier, the latest addition to the Amazon Titan family of large language models (LLMs), is now generally available in Amazon Bedrock. Amazon Titan Text Premier is an advanced, high-performance, and cost-effective LLM engineered for enterprise-grade text generation applications, including optimized performance for Retrieval Augmented Generation (RAG) and agents. The model is built from the ground up following safe, secure, and trustworthy responsible AI practices and excels in delivering exceptional generative artificial intelligence (AI) text capabilities at scale.

Exclusive to Amazon Bedrock, Amazon Titan Text Premier supports a wide range of text-related tasks, including summarization, text generation, classification, question-answering, and information extraction. This new model offers optimized performance for key features such as RAG on Knowledge Bases for Amazon Bedrock and function calling on Agents for Amazon Bedrock. Such integrations enable advanced applications like building interactive AI assistants that use your APIs and interact with your documents.

Why choose Amazon Titan Text Premier?

As of today, the Amazon Titan family of models for text generation allows for context windows from 4K to 32K and a rich set of capabilities around free text and code generation, API orchestration, RAG, and agent-based applications. An overview of these Amazon Titan models is shown in the following table.

| Model | Availability | Context window | Languages | Functionality | Customized fine-tuning |
| --- | --- | --- | --- | --- | --- |
| Amazon Titan Text Lite | GA | 4K | English | Code, rich text | Yes |
| Amazon Titan Text Express | GA (English) | 8K | Multilingual (100+ languages) | Code, rich text, API orchestration | Yes |
| Amazon Titan Text Premier | GA | 32K | English | Enterprise text generation applications, RAG, agents | Yes (preview) |

Amazon Titan Text Premier is an LLM designed for enterprise-grade applications. It is optimized for performance and cost-effectiveness, with a maximum context length of 32,000 tokens. Amazon Titan Text Premier enables the development of custom agents for tasks such as text summarization, generation, classification, question-answering, and information extraction. It also supports the creation of interactive AI assistants that can integrate with existing APIs and data sources. As of today, Amazon Titan Text Premier is also customizable with your own datasets for even better performance with your specific use cases. In our own internal tests, fine-tuned Amazon Titan Text Premier models on various tasks related to instruction tuning and domain adaptation yielded superior results compared to the Amazon Titan Text Premier model baseline, as well as other fine-tuned models. To try out model customization for Amazon Titan Text Premier, contact your AWS account team. By using the capabilities of Amazon Titan Text Premier, organizations can streamline workflows and enhance their operations and customer experiences through advanced language AI.

As highlighted in the AWS News Blog launch post, Amazon Titan Text Premier has demonstrated strong performance on a range of public benchmarks that assess critical enterprise-relevant capabilities. Notably, Amazon Titan Text Premier achieved a score of 92.6% on the HellaSwag benchmark, which evaluates common-sense reasoning, outperforming competitor models. Additionally, Amazon Titan Text Premier showed strong results on reading comprehension (89.7% on RACE-H) and multi-step reasoning (77.9 F1 score on the DROP benchmark). Across diverse tasks like instruction following, representation of questions in 57 subjects, and BIG-Bench Hard, Amazon Titan Text Premier has consistently delivered comparable performance to other providers, highlighting its broad intelligence and utility for enterprise applications. However, we encourage our customers to benchmark the model’s performance on their own specific datasets and use cases because actual performance may vary. Conducting thorough testing and evaluation is crucial to ensure the model meets the unique requirements of each enterprise.

How do you get started with Amazon Titan Text Premier?

Amazon Titan Text Premier is generally available in Amazon Bedrock in the US East (N. Virginia) AWS Region.

To enable access to Amazon Titan Text Premier, on the Amazon Bedrock console, choose Model access on the bottom left pane. On the Model access overview page, choose Manage model access in the upper right corner and enable access to Amazon Titan Text Premier.

With Amazon Titan Text Premier available through the Amazon Bedrock serverless experience, you can easily access the model using a single API and without managing any infrastructure. You can use the model either through the Amazon Bedrock REST API or the AWS SDK using the InvokeModel API or Converse API. In the code example below, we define a simple function “call_titan” which uses the boto3 “bedrock-runtime” client to invoke the Amazon Titan Text Premier model.
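A minimal sketch of what such a `call_titan` helper could look like with the InvokeModel API is shown next. The request and response fields follow the Amazon Titan text format; the default generation values, the `build_request` helper, and the optional `client` argument (handy for testing without AWS credentials) are our own assumptions for illustration.

```python
import json

# Model ID for Amazon Titan Text Premier in Amazon Bedrock.
MODEL_ID = "amazon.titan-text-premier-v1:0"


def build_request(prompt, max_tokens=512, temperature=0.7, top_p=0.9):
    """Build the JSON body that Titan text models expect for InvokeModel.

    The default values here are illustrative assumptions, not recommendations.
    """
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": top_p,
            "stopSequences": [],
        },
    })


def call_titan(prompt, client=None, **generation_kwargs):
    """Invoke Amazon Titan Text Premier and return the generated text."""
    if client is None:
        import boto3  # imported lazily so build_request works without boto3
        client = boto3.client("bedrock-runtime", region_name="us-east-1")
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request(prompt, **generation_kwargs),
        accept="application/json",
        contentType="application/json",
    )
    # The response body is a stream containing a JSON document.
    payload = json.loads(response["body"].read())
    return payload["results"][0]["outputText"]
```

With model access enabled in US East (N. Virginia), a call such as `call_titan("Summarize the benefits of RAG in two sentences.")` should return the generated text as a string.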

With a maximum context length of 32K tokens, Amazon Titan Text Premier has been specifically optimized for enterprise use cases, such as building RAG and agent-based applications with Knowledge Bases for Amazon Bedrock and Agents for Amazon Bedrock. The model training data includes examples for tasks like summarization, Q&A, and conversational chat and has been optimized for integration with Knowledge Bases for Amazon Bedrock and Agents for Amazon Bedrock. The optimization includes training the model to handle the nuances of these features, such as their specific prompt formats.

Sample RAG and agent-based application using Knowledge Bases for Amazon Bedrock and Agents for Amazon Bedrock

Amazon Titan Text Premier offers high-quality RAG through integration with Knowledge Bases for Amazon Bedrock. With a knowledge base, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data for RAG. You can now choose Amazon Titan Text Premier with Knowledge Bases for Amazon Bedrock to implement question-answering and summarization tasks over your company’s proprietary data.

Evaluating a high-quality RAG system on research papers with Amazon Titan Text Premier using Knowledge Bases for Amazon Bedrock

To demonstrate how Amazon Titan Text Premier can be used in RAG-based applications, we ingested recent research papers (which are linked in the resources section) and articles related to LLMs to construct a knowledge base using Amazon Bedrock and Amazon OpenSearch Serverless. Learn more about how you can do this on your own here. This collection (see the references section for the full list) of papers and articles covers a wide range of topics, including benchmarking tools, distributed training techniques, surveys of LLMs, prompt engineering methods, scaling laws, quantization approaches, security considerations, self-improvement algorithms, and efficient training procedures. As LLMs continue to progress rapidly and find widespread use, it is crucial to have a comprehensive and up-to-date knowledge repository that can facilitate informed decision-making, foster innovation, and enable responsible development of these powerful AI systems. By grounding the answers from a RAG model on this Amazon Bedrock knowledge base, we can ensure that the responses are backed by authoritative and relevant research, enhancing their accuracy, trustworthiness, and potential for real-world impact.

The following video showcases the capabilities of Knowledge Bases for Amazon Bedrock when used with Amazon Titan Text Premier. The knowledge base was constructed using the research papers and articles we discussed earlier. When models available on Amazon Bedrock, such as Amazon Titan Text Premier, are asked about research on avocados or more relevant research about AI training methods, they can confidently answer without using any sources. In this particular example, the answers may even be wrong. The video shows how Knowledge Bases for Amazon Bedrock and Amazon Titan Text Premier can be used to ground answers based on recent research. With this setup, when asked, “What does recent research have to say about the health benefits of eating avocados?” the system correctly acknowledges that it does not have access to information related to this query within its knowledge base, which focuses on LLMs and related areas. However, when prompted with “What is codistillation?” the system provides a detailed response grounded in the information found in the source chunks displayed.

This demonstration effectively illustrates the knowledge-grounded nature of Knowledge Bases for Amazon Bedrock and its ability to provide accurate and well-substantiated responses based on the curated research content when used with models like Amazon Titan Text Premier. By grounding the responses in authoritative sources, the system ensures that the information provided is reliable, up-to-date, and relevant to the specific domain of LLMs and related areas. Amazon Bedrock also allows users to edit retriever and model parameters and instructions in the prompt template to further customize how sources are used and how answers are generated, as shown in the following screenshot. This approach not only enhances the credibility of the responses but also promotes transparency by explicitly displaying the source material that informs the system’s output.
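The same grounded question answering can be reached programmatically. The following is a minimal sketch, assuming a knowledge base already exists and using the `retrieve_and_generate` call on the boto3 `bedrock-agent-runtime` client; the `ask_knowledge_base` helper name and the knowledge base ID you pass in are hypothetical placeholders.

```python
# ARN of Amazon Titan Text Premier as a foundation model in us-east-1.
MODEL_ARN = (
    "arn:aws:bedrock:us-east-1::foundation-model/"
    "amazon.titan-text-premier-v1:0"
)


def ask_knowledge_base(question, knowledge_base_id, client=None):
    """Return (answer, source_chunks) for a question grounded in a knowledge base."""
    if client is None:
        import boto3  # imported lazily so the function can be tested with a stub
        client = boto3.client("bedrock-agent-runtime", region_name="us-east-1")
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": MODEL_ARN,
            },
        },
    )
    answer = response["output"]["text"]
    # Collect the text of every retrieved chunk cited in the answer.
    sources = [
        reference["content"]["text"]
        for citation in response.get("citations", [])
        for reference in citation.get("retrievedReferences", [])
    ]
    return answer, sources
```

Returning the source chunks alongside the answer mirrors what the console shows, so callers can display or log the material that informed each response.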

Build a human resources (HR) assistant with Amazon Titan Text Premier using Knowledge Bases for Amazon Bedrock and Agents for Amazon Bedrock

The following video describes the workflow and architecture of creating an assistant with Amazon Titan Text Premier.

The workflow consists of the following steps:

- Input query – Users provide natural language inputs to the agent.

- Preprocessing step – During preprocessing, the agent validates, contextualizes, and categorizes user input. The user input (or task) is interpreted by the agent using the chat history, instructions, and underlying FM specified during agent creation. The agent’s instructions are descriptive guidelines outlining the agent’s intended actions. Also, you can configure advanced prompts, which allow you to boost your agent’s precision by employing more detailed configurations and offering manually selected examples for few-shot prompting. This method allows you to enhance the model’s performance by providing labeled examples associated with a particular task.

- Action groups – Action groups are a set of APIs and corresponding business logic whose OpenAPI schema is defined as JSON files stored in Amazon Simple Storage Service (Amazon S3). The schema allows the agent to reason around the function of each API. Each action group can specify one or more API paths whose business logic is run through the AWS Lambda function associated with the action group.

In this sample application, the agent has multiple actions associated within an action group, such as looking up and updating the data around the employee’s time off in an Amazon Athena table, sending Slack and Outlook messages to teammates, generating images using Amazon Titan Image Generator, and making a knowledge base query to get the relevant details.

- Knowledge Bases for Amazon Bedrock lookup as an action – Knowledge Bases for Amazon Bedrock provides fully managed RAG to supply the agent with access to your data. You first configure the knowledge base by specifying a description that instructs the agent when to use your knowledge base. Then, you point the knowledge base to your Amazon S3 data source. Finally, you specify an embedding model and choose to use your existing vector store or allow Amazon Bedrock to create the vector store on your behalf. Once configured, each data source sync creates vector embeddings of your data, which the agent can use to return information to the user or augment subsequent FM prompts.

In this sample application, we use Amazon Titan Text Embeddings as an embedding model along with the default OpenSearch Serverless vector database to store our embeddings. The knowledge base contains the employer’s relevant HR documents, such as parental leave policy, vacation policy, payment slips, and more.

- Orchestration – During orchestration, the agent develops a rationale with the logical steps of which action group API invocations and knowledge base queries are needed to generate an observation that can be used to augment the base prompt for the underlying FM. This ReAct style of prompting serves as the input for activating the FM, which then anticipates the optimal sequence of actions to complete the user’s task.

In this sample application, the agent processes the employee’s query, breaks it down into a series of subtasks, determines the proper sequence of steps, and finally executes the appropriate actions and knowledge searches on the fly.

- Postprocessing – Once all orchestration iterations are complete, the agent curates a final response. Postprocessing is disabled by default.
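To make the action group step more concrete, the following is a minimal sketch of a Lambda handler for an action group defined with an OpenAPI schema. It reads the `apiPath` and `parameters` fields that Agents for Amazon Bedrock passes in the event and returns the response envelope the agent expects. The `/getTimeOffBalance` path, the `employeeId` parameter, and the in-memory `TIME_OFF_BALANCES` dictionary (standing in for the Athena table) are hypothetical and not taken from the sample application's code.

```python
import json

# Hypothetical stand-in for the Athena table of time-off balances.
TIME_OFF_BALANCES = {"1001": 15}


def get_time_off_balance(employee_id):
    """Look up the remaining vacation days for an employee."""
    return {
        "employeeId": employee_id,
        "daysAvailable": TIME_OFF_BALANCES.get(employee_id, 0),
    }


def lambda_handler(event, context):
    """Route an agent action call to business logic and wrap the result."""
    # Parameters arrive as a list of {"name", "type", "value"} objects.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    api_path = event["apiPath"]

    if api_path == "/getTimeOffBalance":
        status, body = 200, get_time_off_balance(params.get("employeeId", ""))
    else:
        status, body = 404, {"error": f"Unknown API path: {api_path}"}

    # The agent expects the action group, path, and method echoed back.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": api_path,
            "httpMethod": event["httpMethod"],
            "httpStatusCode": status,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```

Routing on `apiPath` lets one Lambda function serve every API path in the action group, which matches the one-function-per-action-group layout described in the steps above.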

The following sections demonstrate test calls on the HR assistant application.

Using Knowledge Bases for Amazon Bedrock

In this test call, the assistant makes a knowledge base call to fetch the relevant information from the documents about HR policies to answer the query, “Can you get me some details about parental leave policy?” The following screenshot shows the prompt query and the reply.

Knowledge Bases for Amazon Bedrock call with GetTimeOffBalance action call and UpdateTimeOffBalance action call

In this test call, the assistant needs to answer the query, “My partner and I are expecting a baby on July 1. Can I take 2 weeks off?” It makes a knowledge base call to fetch the relevant information from the documents and answer questions based on the results. This is followed by making the GetTimeOffBalance action call to check for the available vacation time off balance. In the next query, we ask the assistant to update the database with appropriate values by asking, “Yeah, let’s go ahead and request time off for 2 weeks from July 1–14, 2024.”
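A multi-turn exchange like this one can also be driven from code. The following is a minimal sketch that uses the `invoke_agent` call on the boto3 `bedrock-agent-runtime` client and reuses one session ID so the agent keeps the chat history between the two queries. The `ask_agent` and `new_session_id` helper names are our own, and the agent ID and alias ID you pass in are placeholders for your own agent.

```python
import uuid


def new_session_id():
    """Create a session ID; reuse it across turns to preserve chat history."""
    return str(uuid.uuid4())


def ask_agent(question, agent_id, agent_alias_id, session_id, client=None):
    """Send one user turn to an agent and return its full text reply."""
    if client is None:
        import boto3  # imported lazily so the function can be tested with a stub
        client = boto3.client("bedrock-agent-runtime", region_name="us-east-1")
    response = client.invoke_agent(
        agentId=agent_id,
        agentAliasId=agent_alias_id,
        sessionId=session_id,
        inputText=question,
    )
    # The completion is an event stream; text arrives in "chunk" events.
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)
```

Calling `ask_agent` twice with the same `session_id`, first with the time-off question and then with the follow-up request, lets the agent resolve "2 weeks from July 1" against the earlier turn.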

Amazon Titan Image Generator action call

In this test call, the assistant makes a call to Amazon Titan Image Generator through Agents for Amazon Bedrock actions to generate the corresponding image based on the input query, “Generate a cartoon image of a newborn child with parents.” The following screenshot shows the query and the response, including the generated image.

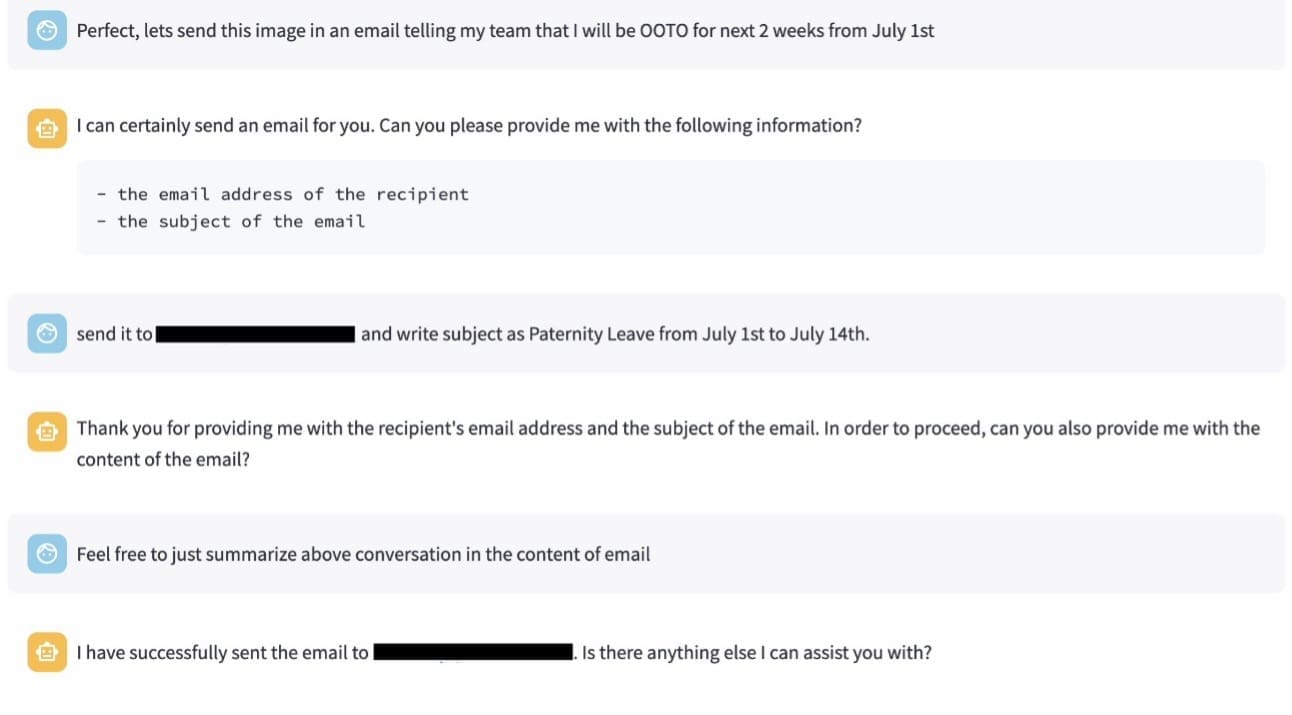
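Behind an action like this, the Lambda function can call the image model through the same `bedrock-runtime` client used for text. The following is a minimal sketch, assuming the `amazon.titan-image-generator-v1` model ID and a 512x512 output; the post does not state which model version or image settings the sample application uses, so treat these values and the `generate_image` helper name as assumptions.

```python
import base64
import json

# Assumed model ID; the sample application may use a different version.
IMAGE_MODEL_ID = "amazon.titan-image-generator-v1"


def generate_image(prompt, client=None, width=512, height=512):
    """Generate one image from a text prompt and return its raw bytes."""
    if client is None:
        import boto3  # imported lazily so the function can be tested with a stub
        client = boto3.client("bedrock-runtime", region_name="us-east-1")
    body = json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": 1,
            "width": width,
            "height": height,
            "cfgScale": 8.0,
        },
    })
    response = client.invoke_model(
        modelId=IMAGE_MODEL_ID,
        body=body,
        accept="application/json",
        contentType="application/json",
    )
    payload = json.loads(response["body"].read())
    # Images are returned as base64-encoded strings.
    return base64.b64decode(payload["images"][0])
```

The returned bytes can be written to a file or uploaded to Amazon S3 so that the agent's reply can link to the image.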

Amazon Simple Notification Service (Amazon SNS) email sending action

In this test call, the assistant makes a call to the emailSender action through Amazon SNS to send an email message, using the query, “Send an email to my team telling them that I will be away for 2 weeks starting July 1.” The following screenshot shows the exchange.

The following screenshot shows the response email.
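An email-sending action of this kind can be a thin wrapper around the Amazon SNS `publish` call. The following is a minimal sketch, assuming an SNS topic with the team's email addresses already subscribed; the `send_team_email` helper name and the topic ARN you pass in are hypothetical.

```python
def send_team_email(subject, message, topic_arn, client=None):
    """Publish a message to an SNS topic and return the SNS message ID."""
    if client is None:
        import boto3  # imported lazily so the function can be tested with a stub
        client = boto3.client("sns", region_name="us-east-1")
    response = client.publish(
        TopicArn=topic_arn,
        # SNS requires email subjects to be shorter than 100 characters.
        Subject=subject[:99],
        Message=message,
    )
    return response["MessageId"]
```

Every confirmed email subscriber on the topic receives the message, so the agent only needs to supply the subject and body text.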

Slack integration

You can set up the Slack message API similarly using Slack Webhooks and integrate it as one of the actions in Amazon Bedrock. For a demo, view the 90-second YouTube video, and refer to GitHub for the code repo.
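As a rough illustration of the Slack action, the following sketch posts a message to a Slack incoming webhook using only the Python standard library. The helper names are our own, the webhook URL must come from your own Slack app configuration, and this is not the code from the linked repository.

```python
import json
import urllib.request


def build_slack_request(webhook_url, text):
    """Build the POST request for a Slack incoming webhook."""
    payload = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_slack_message(webhook_url, text):
    """Send the message and report whether Slack accepted it."""
    request = build_slack_request(webhook_url, text)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status == 200
```

Keeping the request construction separate from the network call makes the action easy to unit test inside the Lambda function that backs it.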

Agent responses might vary with different tries, so make sure to optimize your prompts to make them robust for other use cases.

Conclusion

In this post, we introduced the new Amazon Titan Text Premier model, specifically optimized for enterprise use cases, such as building RAG and agent-based applications. Such integrations enable advanced applications like building interactive AI assistants that use enterprise APIs and interact with your proprietary documents. Now that you know more about Amazon Titan Text Premier and its integrations with Knowledge Bases for Amazon Bedrock and Agents for Amazon Bedrock, we can’t wait to see what you all build with this model.

To learn more about the Amazon Titan family of models, visit the Amazon Titan product page. For pricing details, review Amazon Bedrock pricing. For more examples to get started, check out the Amazon Bedrock workshop repository and Amazon Bedrock samples repository.

About the authors

Anupam Dewan is a Senior Solutions Architect with a passion for Generative AI and its applications in real life. He and his team enable Amazon Builders who build customer-facing applications using generative AI. He lives in the Seattle area, and outside of work loves to go hiking and enjoy nature.

Shreyas Subramanian is a Principal Data Scientist who helps customers use Machine Learning to solve their business challenges on the AWS platform. Shreyas has a background in large scale optimization and Machine Learning, and in the use of Machine Learning and Reinforcement Learning for accelerating optimization tasks.
