Build ultra-low latency multimodal generative AI applications using sticky session routing in Amazon SageMaker

Amazon SageMaker is a fully managed machine learning (ML) service. With SageMaker, data scientists and developers can quickly and confidently build, train, and deploy ML models into a production-ready hosted environment. SageMaker provides a broad selection of ML infrastructure and model deployment options to help meet your ML inference needs. It also helps scale your model deployment, manage models more effectively in production, and reduce operational burden.

Although early large language models (LLMs) were limited to processing text inputs, these AI systems have rapidly evolved to handle a wide range of media types, including images, video, and audio, ushering in the era of multimodal models. Multimodal learning is a type of deep learning that uses multiple modalities of data, such as text, audio, or images. Multimodal inference adds the challenges of large data transfer overhead and slow response times. For instance, in a typical chatbot scenario, users initiate the conversation by providing a multimedia file or a link as the input payload, followed by a back-and-forth dialogue in which they ask questions or seek information related to the initial input. However, transmitting large multimedia files with every request to a model inference endpoint can significantly increase response times, leading to an unsatisfactory user experience. For example, sending a 500 MB input file could potentially add 3–5 seconds to the response time, which is unacceptable for a chatbot aiming to deliver a seamless and responsive interaction.
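To make the 3–5 second figure concrete, the following is a back-of-the-envelope sketch of the transfer time for a payload over a network link. The bandwidth values are assumptions chosen for illustration (the post does not state one), and the calculation ignores protocol overhead and round-trip latency.

```python
def transfer_seconds(payload_megabytes: float, bandwidth_gbps: float) -> float:
    """Time to move a payload over a link, ignoring protocol overhead and latency."""
    payload_bits = payload_megabytes * 1_000_000 * 8
    return payload_bits / (bandwidth_gbps * 1_000_000_000)


# Assumed effective bandwidths in gigabits per second (illustrative only).
for gbps in (0.8, 1.0, 1.25):
    print(f"{gbps} Gbps: {transfer_seconds(500, gbps):.1f} s per request")
```

Under these assumptions, resending the same file on every turn of a conversation pays this cost each time, whereas sending it once per session pays it only on the first request.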

We are announcing the availability of sticky session routing on Amazon SageMaker Inference, which helps customers improve the performance and user experience of their generative AI applications by reusing previously processed information. Amazon SageMaker makes it easier to deploy ML models, including foundation models (FMs), to make inference requests at the best price performance for any use case.

When you enable sticky session routing, all requests from the same session are routed to the same instance, allowing your ML application to reuse previously processed information to reduce latency and improve user experience. This is particularly valuable when you want to use large data payloads or need seamless interactive experiences. Because the state from previous inference requests remains available, you can use this feature to build innovative state-aware AI applications on SageMaker. To do this, you create a session ID with your first request, and then use that session ID to indicate that SageMaker should route all subsequent requests to the same instance. Sessions can also be deleted when done to free up resources for new sessions.

This feature is available in all AWS Regions where SageMaker is available. To learn more about deploying models on SageMaker, see Amazon SageMaker Model Deployment. For more about this feature, refer to Stateful sessions with Amazon SageMaker models.

Solution overview

SageMaker simplifies the deployment of models, enabling chatbots and other applications to use their multimodal capabilities with ease. SageMaker has implemented a robust solution that combines two key strategies: sticky session routing in SageMaker with load balancing, and stateful sessions in TorchServe. Sticky session routing makes sure all requests from a user session are serviced by the same SageMaker server instance. Stateful sessions in TorchServe cache the multimedia data in GPU memory from the session start request and minimize loading and unloading of this data from GPU memory for improved response times.

With this focus on minimizing data transfer overhead and improving response time, our approach makes sure the initial multimedia file is loaded and processed only one time, and subsequent requests within the same session can use the cached data.

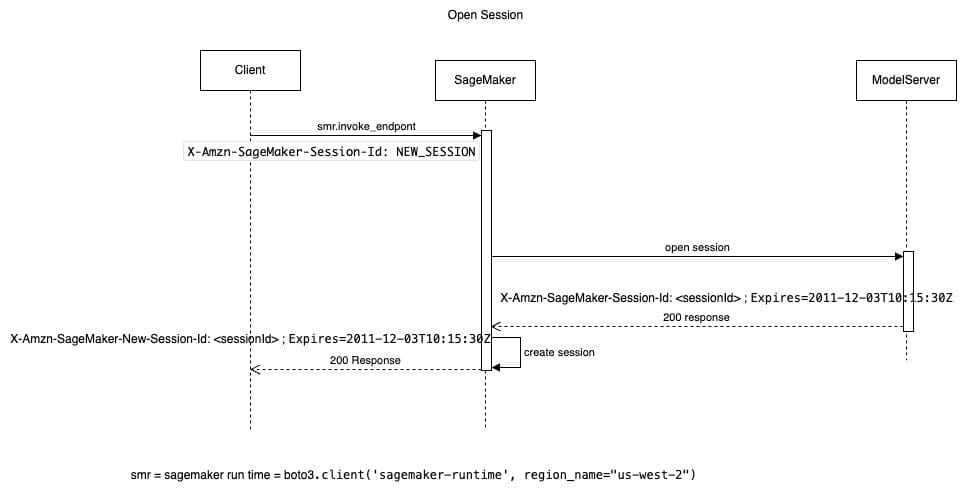

Let’s look at the sequence of events when a client initiates a sticky session on SageMaker:

- In the first request, you call the Boto3 SageMaker runtime invoke_endpoint with

session-id=NEW_SESSIONin the header and a payload indicating an open session type of request. SageMaker then creates a new session and stores the session ID. The router initiates an open session (this API is defined by the client; it could be some other name likestart_session) with the model server, in this case TorchServe, and responds back with 200 OK along with the session ID and time to live (TTL), which is sent back to the client.

- Whenever you need to use the same session to perform subsequent actions, you pass the session ID as part of the

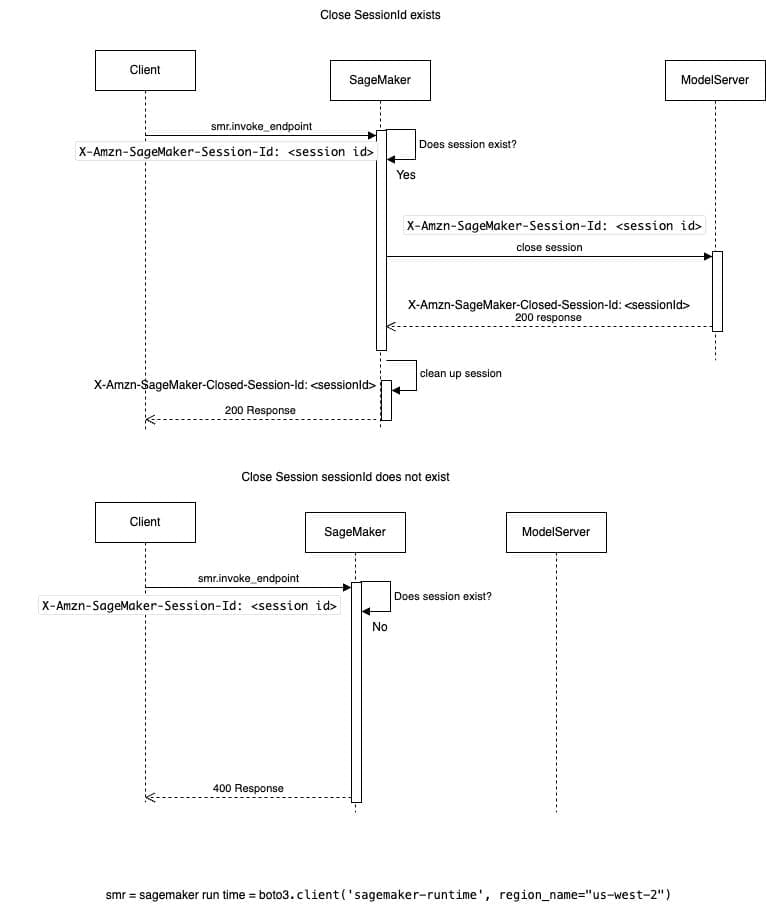

invoke_endpointcall, which allows SageMaker to route all the subsequent requests to the same model server instance. - To close or delete a session, you can use

invoke_endpointwith a payload indicating a close session type of request along with the session ID. The SageMaker router first checks if the session exists. If it does, the router initiates a close session call to the model server, which responds back with a successful 200 OK along with session ID, which is sent back to the client. In the scenario, when the session ID doesn’t exist, the router responds back with a 400 response.
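The sequence above can be sketched from the client side as follows. This is a minimal sketch rather than the code from the example repository: it assumes the Boto3 SageMaker runtime invoke_endpoint call with its SessionId request parameter and the NewSessionId and ClosedSessionId response fields. The payload keys (type, image_url, prompt) and the request type names are hypothetical, because the post says this API is defined by the client. The parser assumes the new session value may carry an expiry suffix after a semicolon and tolerates its absence.

```python
import json
from typing import Any, Optional

NEW_SESSION = "NEW_SESSION"  # special value from the post that starts a session


def parse_new_session_id(header_value: str) -> str:
    """Return the bare session ID, dropping any '; Expires=...' suffix."""
    return header_value.split(";", 1)[0].strip()


def invoke(client: Any, endpoint_name: str, session_id: str, payload: dict) -> dict:
    """Send one JSON request that is tied to a session."""
    return client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",  # assumed content type
        SessionId=session_id,
        Body=json.dumps(payload),
    )


def open_session(client: Any, endpoint_name: str, image_url: str) -> str:
    """First request: ask for a new session and send the image location."""
    # Hypothetical payload shape; the real one is defined by your handler.
    payload = {"type": "open_session", "image_url": image_url}
    response = invoke(client, endpoint_name, NEW_SESSION, payload)
    return parse_new_session_id(response["NewSessionId"])


def ask(client: Any, endpoint_name: str, session_id: str, prompt: str) -> str:
    """Subsequent requests: reuse the session ID so routing stays sticky."""
    payload = {"type": "text_prompt", "prompt": prompt}  # hypothetical keys
    response = invoke(client, endpoint_name, session_id, payload)
    return response["Body"].read().decode("utf-8")


def close_session(client: Any, endpoint_name: str, session_id: str) -> Optional[str]:
    """Final request: ask the endpoint to close the session."""
    payload = {"type": "close_session"}  # hypothetical key and value
    response = invoke(client, endpoint_name, session_id, payload)
    return response.get("ClosedSessionId")
```

To try it against a real endpoint, you would pass a client created with boto3.client("sagemaker-runtime") and your own endpoint name. Keeping the client as a parameter also lets you exercise the flow with a stub object before deploying anything.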

In the following sections, we walk through an example of how you can use sticky routing in SageMaker to achieve stateful model inference. For this post, we use the LLaVA: Large Language and Vision Assistant model. LLaVA is a multimodal model that accepts images and text prompts.

With LLaVA, we upload an image once and then ask questions about it without resending the image with every request. The image is cached in GPU memory rather than CPU memory, so we don’t incur the latency cost of moving it from CPU memory to GPU memory on every call.

We use TorchServe as our model server for this example. TorchServe is a performant, flexible, and easy-to-use tool for serving PyTorch models in production. TorchServe supports a wide array of advanced features, including dynamic batching, microbatching, model A/B testing, streaming, Torch XLA, TensorRT, ONNX, and IPEX. Moreover, it seamlessly integrates PyTorch’s large model solution, PiPPy, enabling efficient handling of large models. Additionally, TorchServe extends its support to popular open-source libraries like DeepSpeed, Accelerate, Fast Transformers, and more, expanding its capabilities even further.

The following are the main steps to deploy the LLaVA model. This section introduces the steps conceptually, so you have a better grasp of the overall deployment workflow before diving into the practical implementation details in the subsequent sections.

Build a TorchServe Docker container and push it to Amazon ECR

The first step is to build a TorchServe Docker container and push it to Amazon Elastic Container Registry (Amazon ECR). Because we’re using a custom model, we use the bring your own container approach. We use one of the AWS provided deep learning containers as our base, namely pytorch-inference:2.3.0-gpu-py311-cu121-ubuntu20.04-sagemaker.

Build TorchServe model artifacts and upload them to Amazon S3

We use torch-model-archiver to gather all the artifacts, like custom handlers, the LLaVA model code, the data types for request and response, model configuration, prediction API, and other utilities. Then we upload the model artifacts to Amazon Simple Storage Service (Amazon S3).

Create the SageMaker endpoint

To create the SageMaker endpoint, complete the following steps:

- To create the model, use the SageMaker Python SDK Model class. As inputs, specify the S3 bucket you created earlier to upload the TorchServe model artifacts and the image_uri of the Docker container you created.

SageMaker expects the session ID in the X-Amzn-SageMaker-Session-Id header; you can specify that header name in the environment properties of the model.

- To deploy the model and create the endpoint, specify the initial instance count to match the load, instance type, and timeouts.

- Lastly, create a SageMaker Python SDK Predictor by passing in the endpoint name.
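The three steps above might look like the following sketch with the SageMaker Python SDK. It is an outline under stated assumptions, not the notebook’s code: the name of the environment property that carries the session header is not given in this post, so it is passed in as a parameter (session_header_env_key) for you to fill in from the example repository, and the timeout values are placeholders.

```python
from typing import Dict

SESSION_HEADER = "X-Amzn-SageMaker-Session-Id"  # header name given in the post


def build_model_env(session_header_env_key: str) -> Dict[str, str]:
    """Environment properties telling the model server which header holds the session ID."""
    return {session_header_env_key: SESSION_HEADER}


def deploy_stateful_endpoint(
    model_data: str,              # S3 URI of the TorchServe model artifacts
    image_uri: str,               # ECR URI of the container you built
    role: str,                    # IAM role ARN used by SageMaker
    endpoint_name: str,
    session_header_env_key: str,  # look this up in the example repository
    instance_type: str,
    initial_instance_count: int = 1,
):
    # Imported here so build_model_env can be used without the SDK installed.
    from sagemaker import Model
    from sagemaker.predictor import Predictor

    model = Model(
        image_uri=image_uri,
        model_data=model_data,
        role=role,
        env=build_model_env(session_header_env_key),
    )
    model.deploy(
        initial_instance_count=initial_instance_count,
        instance_type=instance_type,
        endpoint_name=endpoint_name,
        # Placeholder timeouts in seconds; tune them for your model size.
        container_startup_health_check_timeout=600,
        model_data_download_timeout=600,
    )
    # Create the predictor from the endpoint name, as described in the last step.
    return Predictor(endpoint_name=endpoint_name)
```

Passing the environment key in as an argument keeps the sketch honest about what the post specifies (the header name) and what it leaves to the repository (the property name).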

Run inference

Complete the following steps to run inference:

- Use an open session to send a URL to the image you want to ask questions about.

This is a custom API we have defined for our use case (see inference_api.py). You can define the inputs, outputs, and APIs to suit your business use case. For the session ID header value, use the special string NEW_SESSION to indicate this is the start of a session. The custom handler downloads the image, converts it to a tensor, and caches the tensor in GPU memory. We can do this because we have access to the LLaVA source code; we could also modify the original predict.py file from the LLaVA model to accept a tensor instead of a PIL image. Caching the tensor in GPU memory saves inference time because the image doesn’t have to be moved from CPU memory to GPU memory on every call. If you don’t have access to the model source code, you have to cache the image in CPU memory. Refer to inference_api.py for this source code. The open session API call returns a session ID, which you use for the rest of the calls in this session.

- To send a text prompt, get the session ID from the open session and send it along with the text prompt.

inference_api.py looks up the cached image in GPU memory based on the session ID and uses it for inference. This returns the LLaVA model output as a string.

- Repeat the previous step to send a different text prompt.

- When you’re done with all the text prompts, use the session ID to close the session.

After the session is closed, inference_api.py no longer holds on to the image cached in GPU memory.
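The open, prompt, and close steps above rely on the model server keeping per-session state. The following is a minimal, framework-free sketch of that idea, not the repository’s inference_api.py: the SessionCache class and its method names are hypothetical, the cached object stands in for the image tensor held in GPU memory, and the TTL handling is a simplified assumption.

```python
import time
from typing import Any, Callable, Dict, Tuple


class SessionCache:
    """In-memory store mapping a session ID to data cached when the session opens."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def open(self, session_id: str, data: Any) -> None:
        """Open session: cache the preprocessed input once."""
        self._entries[session_id] = (self._clock() + self._ttl, data)

    def get(self, session_id: str) -> Any:
        """Text prompt: look up the cached input; raises KeyError if unknown or expired."""
        expires_at, data = self._entries[session_id]
        if self._clock() >= expires_at:
            del self._entries[session_id]
            raise KeyError(session_id)
        return data

    def close(self, session_id: str) -> bool:
        """Close session: drop the cached input so the memory can be reused."""
        return self._entries.pop(session_id, None) is not None
```

In a real handler, the value passed to open would be the image tensor already placed on the GPU, and close would release the reference so that memory becomes available for new sessions.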

The source code for this example is in the GitHub repo. You can run the steps using the following notebook.

Prerequisites

Use the following code to deploy an AWS CloudFormation stack that creates an AWS Identity and Access Management (IAM) role to deploy the SageMaker endpoints:

Create a SageMaker notebook instance

Complete the following steps to create a notebook instance for LLaVA model deployment:

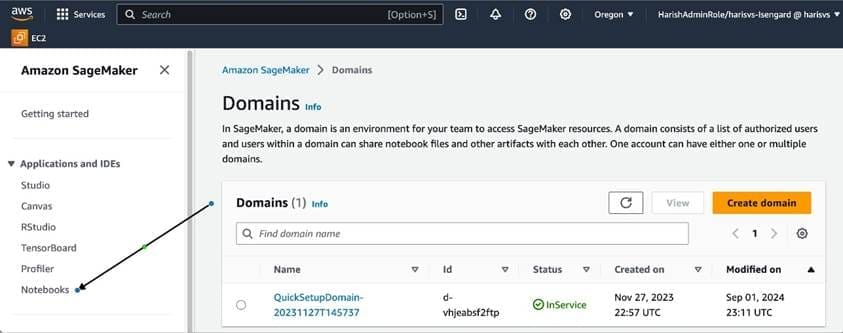

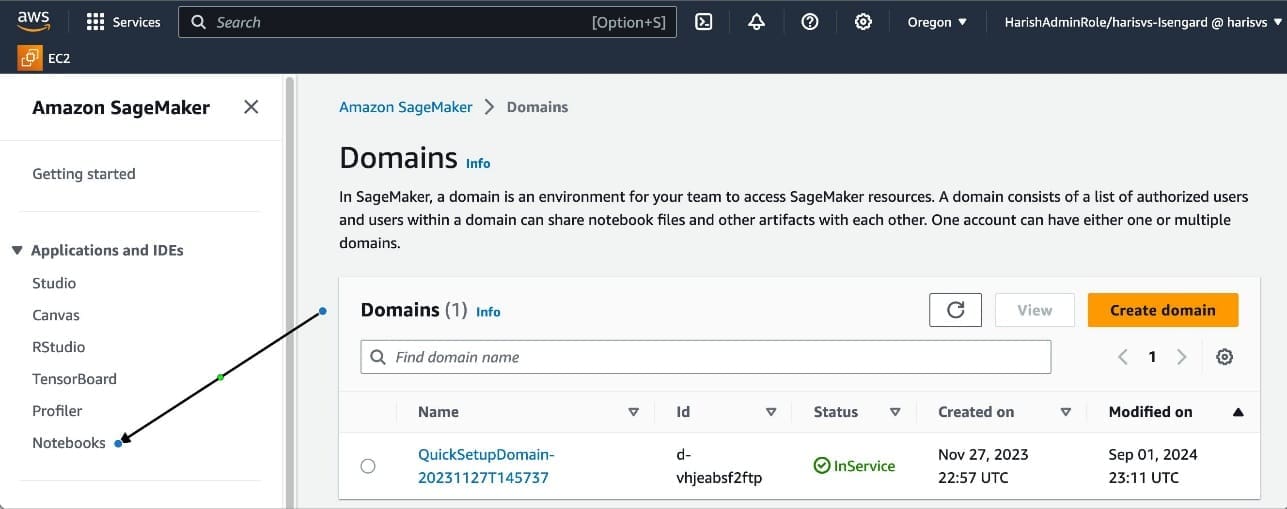

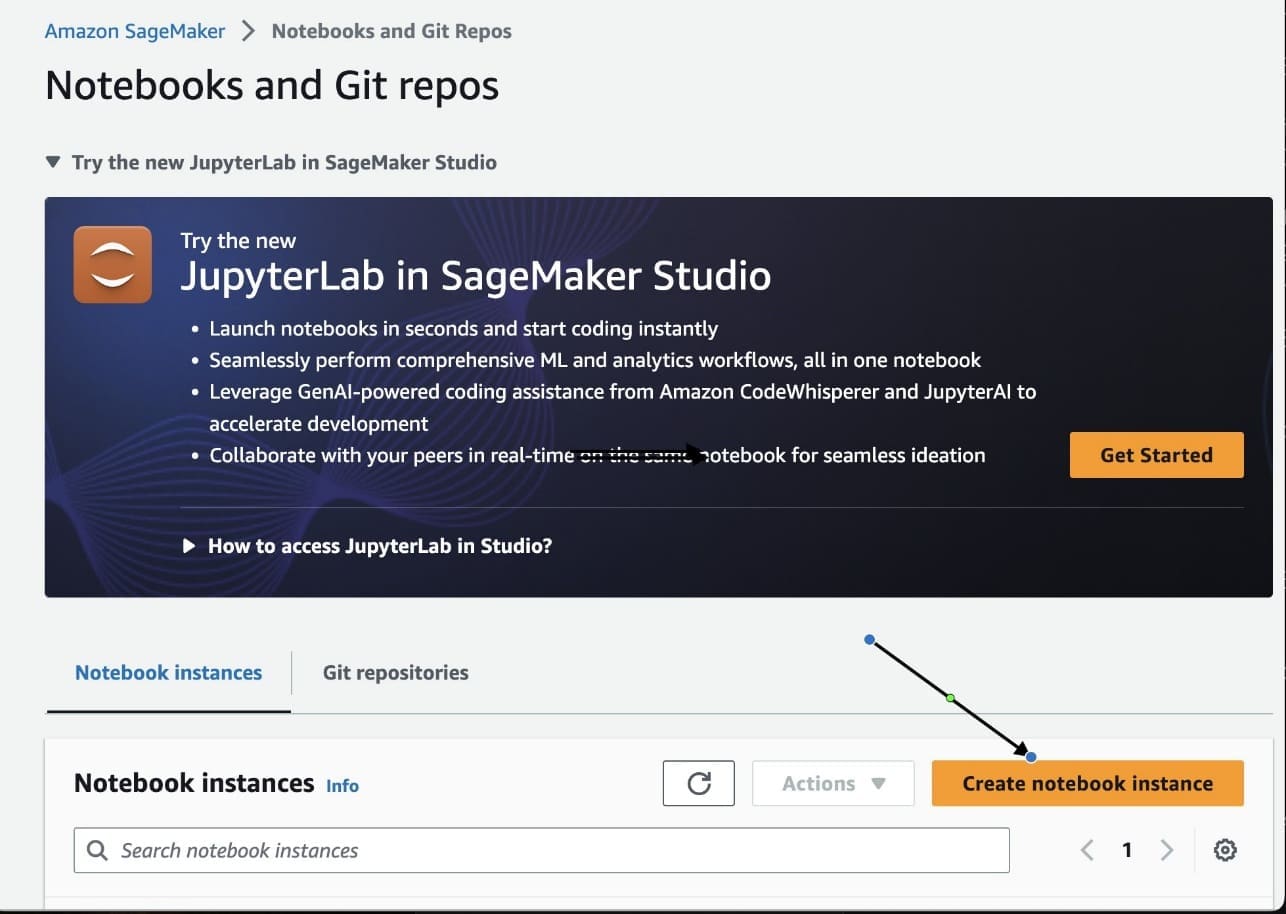

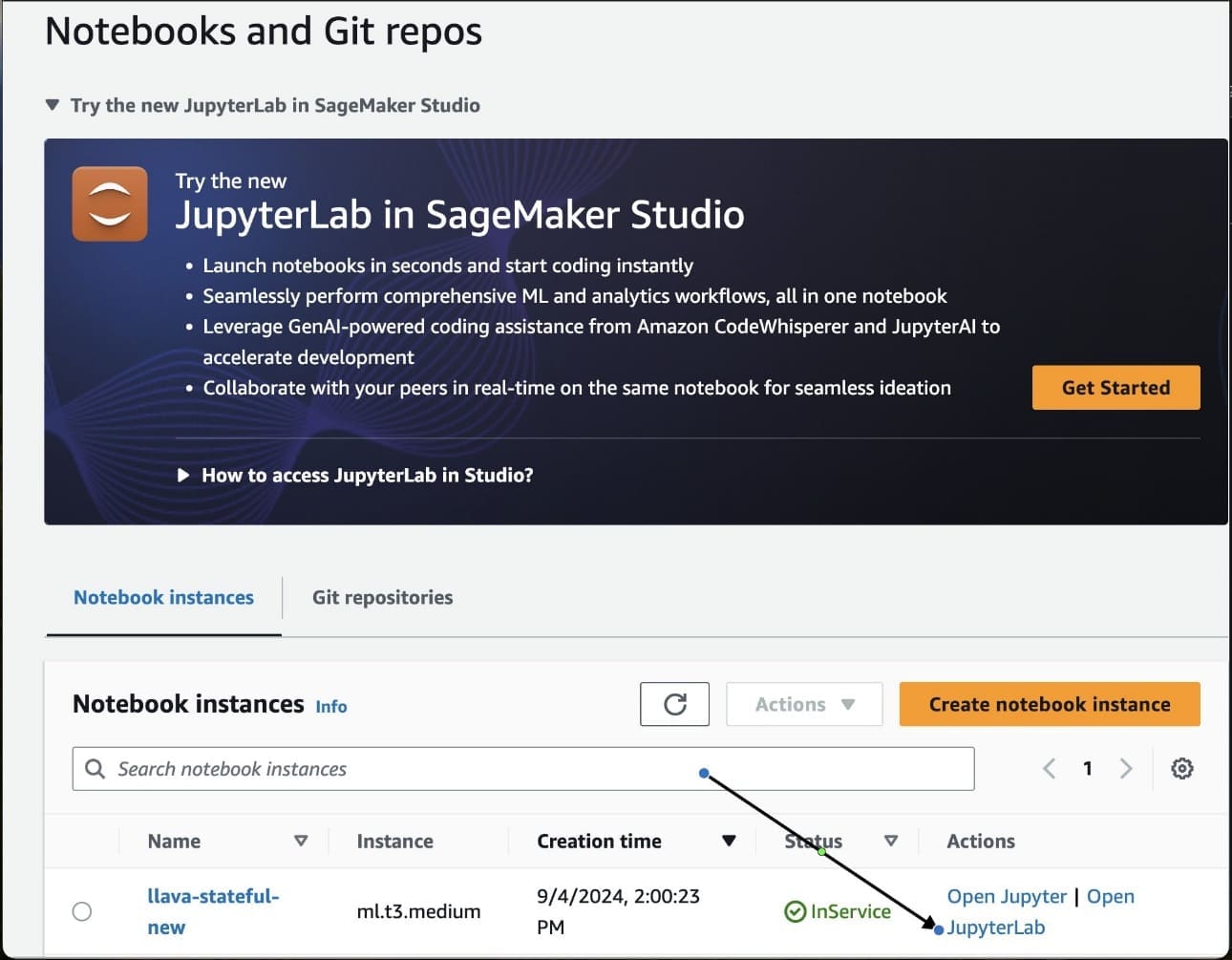

- On the SageMaker console, choose Notebooks in the navigation pane.

- Choose Create notebook instance.

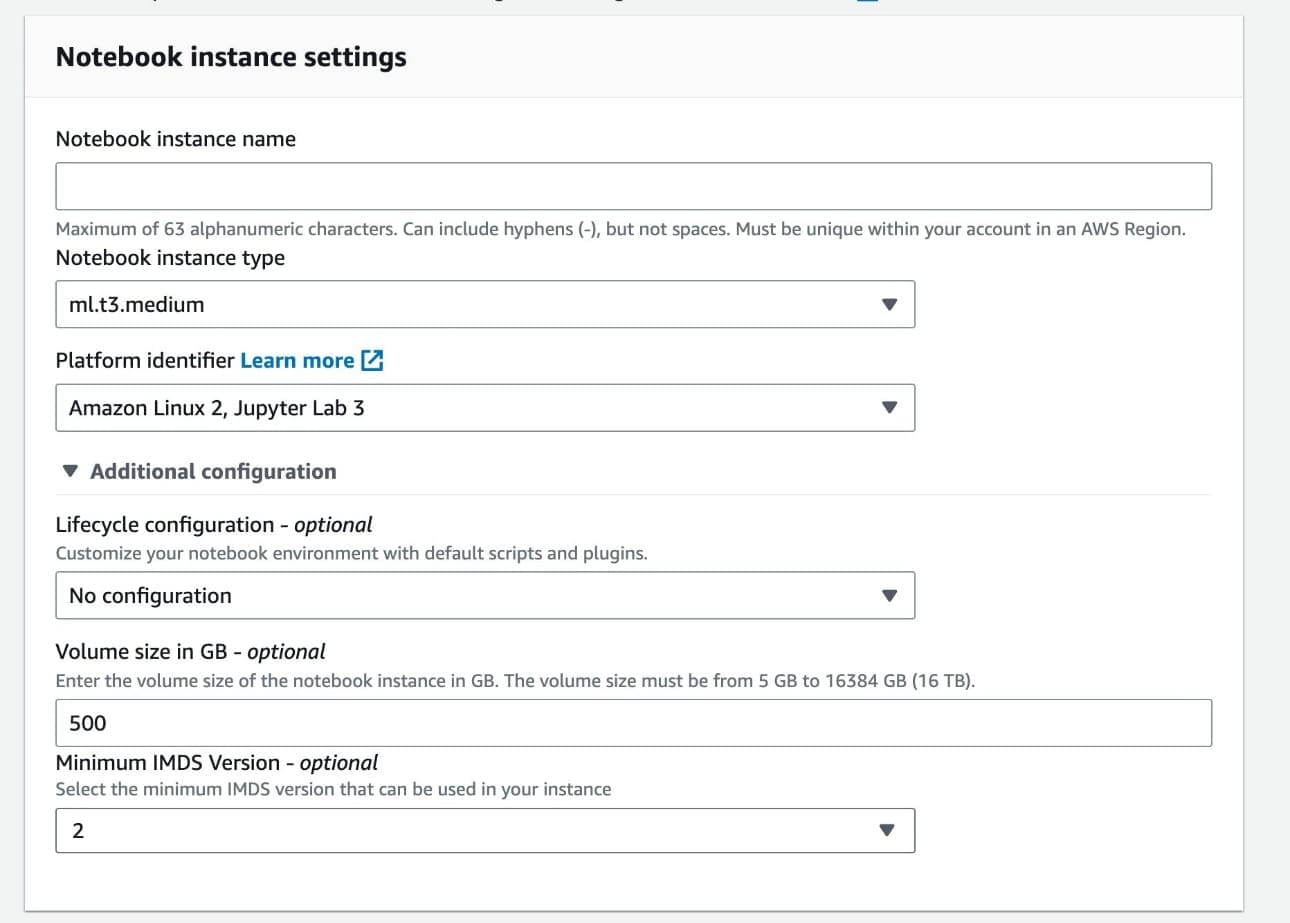

- In the Notebook instance settings section, under Additional configuration, choose at least 500 GB for the storage volume.

- In the Permissions and encryption section, choose to use an existing IAM role, and choose the role you created in the prerequisites (sm-stateful-role-xxx).

You can get the full name of the role on the AWS CloudFormation console, on the Resources tab of the stack sm-stateful-role.

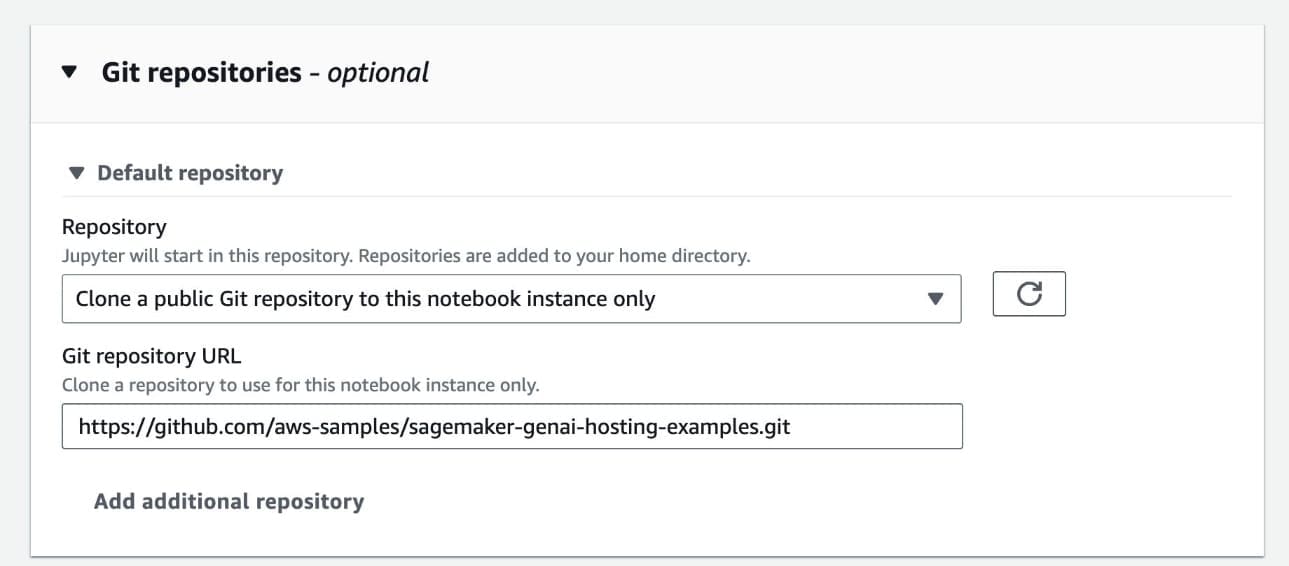

- In the Git repositories section, for Git repository URL, enter https://github.com/aws-samples/sagemaker-genai-hosting-examples.git.

- Choose Create notebook instance.

Run the notebook

When the notebook is ready, complete the following steps:

- On the SageMaker console, choose Notebooks in the navigation pane.

- Choose Open JupyterLab for this new instance.

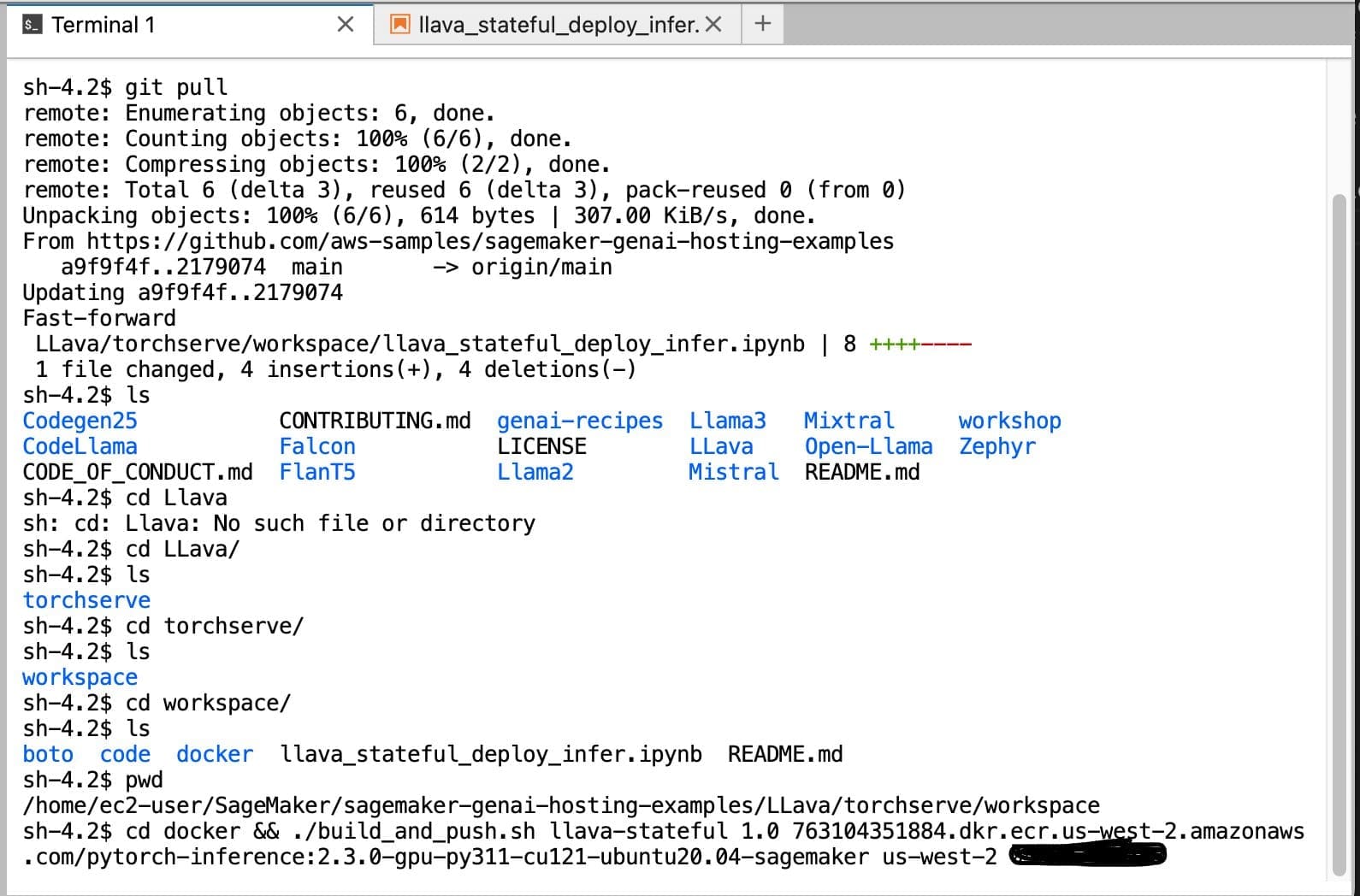

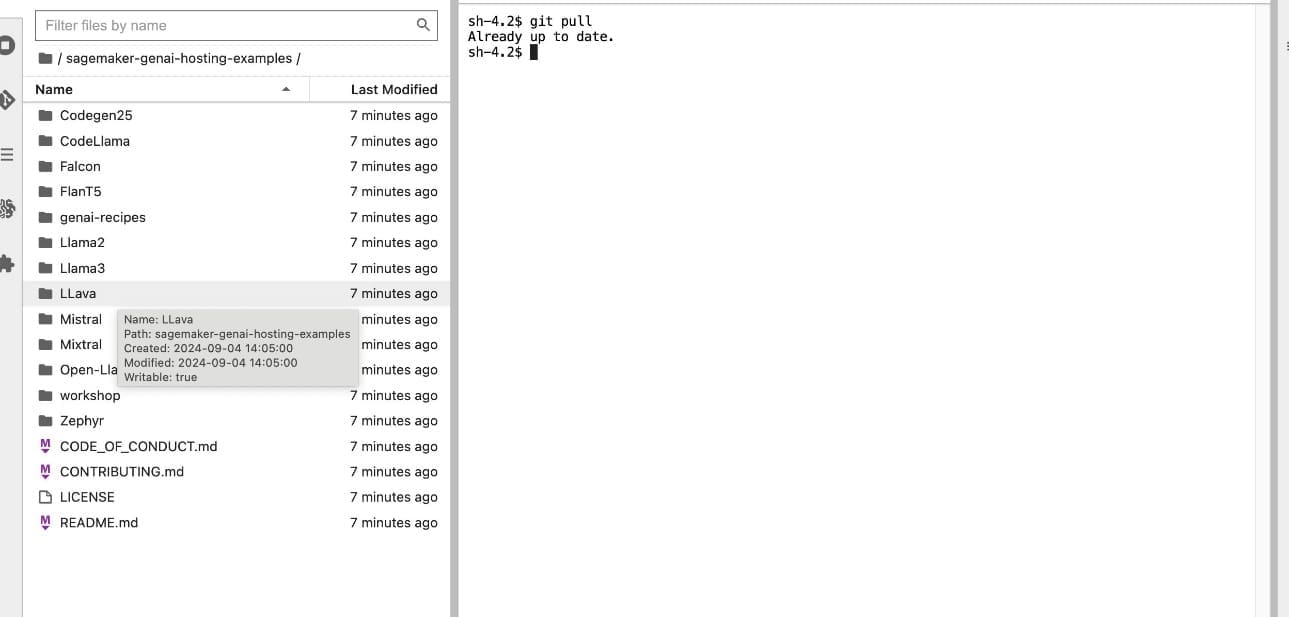

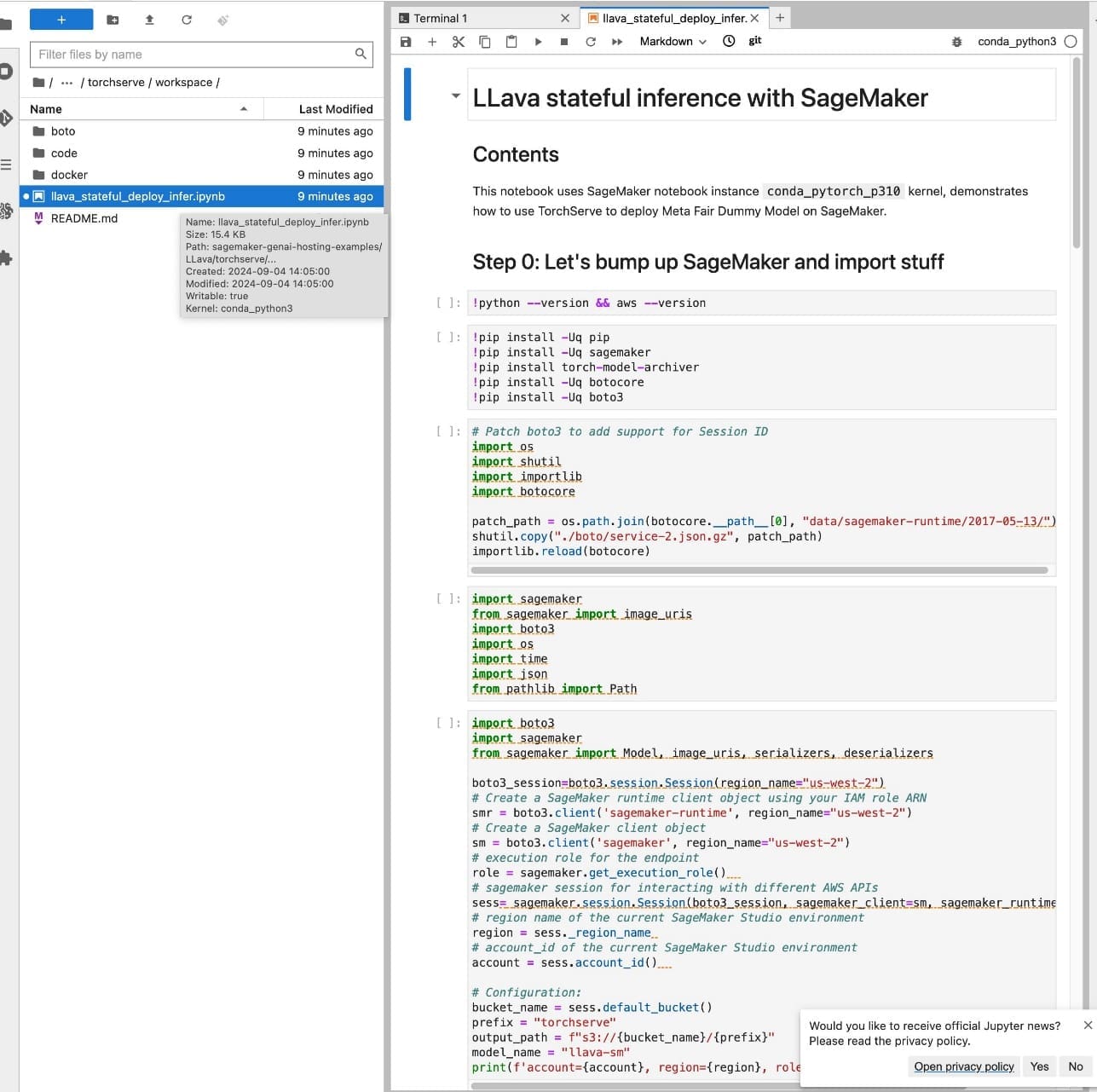

- In JupyterLab, navigate to LLava using the file explorer.

- Navigate to torchserve/workspace/ and open the notebook llava_stateful_deploy_infer.ipynb.

- Run the notebook.

The ./build_and_push.sh script takes approximately 30 minutes to run. You can also run the ./build_and_push.sh script in a terminal for better feedback. Note the input parameters from the previous step and make sure you’re in the right directory (sagemaker-genai-hosting-examples/LLava/torchserve/workspace).

The model.deploy() step also takes 20–30 minutes to complete.

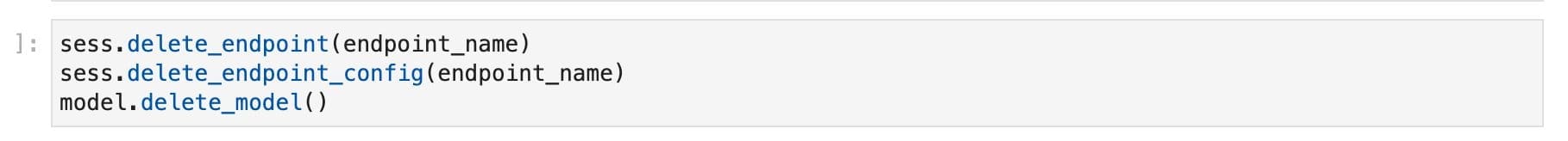

- When you’re done, run the last cleanup cell.

- Additionally, delete the SageMaker notebook instance.

Troubleshooting

When you run ./build_and_push.sh, you might get the following error:

This means you’re not using SageMaker notebooks, and are probably using Amazon SageMaker Studio. Docker is not installed in SageMaker Studio by default.

To open a SageMaker notebook instance instead, follow the steps in the Create a SageMaker notebook instance section of this post.

Conclusion

In this post, we explained how the new sticky routing feature in Amazon SageMaker allows you to achieve ultra-low latency and enhance your end-user experience when serving multimodal models. You can use the provided notebook to create stateful endpoints for your own multimodal models.

Try out this solution for your own use case, and let us know your feedback and questions in the comments.

About the authors

Harish Rao is a senior solutions architect at AWS, specializing in large-scale distributed AI training and inference. He empowers customers to harness the power of AI to drive innovation and solve complex challenges. Outside of work, Harish embraces an active lifestyle, enjoying the tranquility of hiking, the intensity of racquetball, and the mental clarity of mindfulness practices.

Raghu Ramesha is a Senior GenAI/ML Solutions Architect on the Amazon SageMaker Service team. He focuses on helping customers build, deploy, and migrate ML production workloads to SageMaker at scale. He specializes in machine learning, AI, and computer vision domains, and holds a master’s degree in computer science from UT Dallas. In his free time, he enjoys traveling and photography.

Lingran Xia is a software development engineer at AWS. He currently focuses on improving inference performance of machine learning models. In his free time, he enjoys traveling and skiing.

Naman Nandan is a software development engineer at AWS, specializing in enabling large scale AI/ML inference workloads on SageMaker using TorchServe, a project jointly developed by AWS and Meta. In his free time, he enjoys playing tennis and going on hikes.

Li Ning is a senior software engineer at AWS with a specialization in building large-scale AI solutions. As a tech lead for TorchServe, a project jointly developed by AWS and Meta, her passion lies in leveraging PyTorch and AWS SageMaker to help customers embrace AI for the greater good. Outside of her professional endeavors, Li enjoys swimming, traveling, following the latest advancements in technology, and spending quality time with her family.

Frank Liu is a Principal Software Engineer for AWS Deep Learning. He focuses on building innovative deep learning tools for software engineers and scientists. Frank has in-depth knowledge on the infrastructure optimization and Deep Learning acceleration.

Deepika Damojipurapu is a Senior Technical Account Manager at AWS, specializing in distributed AI training and inference. She helps customers unlock the full potential of AWS by providing consultative guidance on architecture and operations, tailored to their specific applications and use cases. When not immersed in her professional responsibilities, Deepika finds joy in spending quality time with her family – exploring outdoors, traveling to new destinations, cooking wholesome meals together, creating cherished memories.

Alan Tan is a Principal Product Manager with SageMaker, leading efforts on large model inference. He’s passionate about applying machine learning to building novel solutions. Outside of work, he enjoys the outdoors.
