Announcing support for Llama 2 and Mistral models and streaming responses in Amazon SageMaker Canvas

Launched in 2021, Amazon SageMaker Canvas is a visual, point-and-click service for building and deploying machine learning (ML) models without the need to write any code. Ready-to-use Foundation Models (FMs) available in SageMaker Canvas enable customers to use generative AI for tasks such as content generation and summarization.

We are thrilled to announce the latest updates to Amazon SageMaker Canvas, which bring exciting new generative AI capabilities to the platform. With support for Meta Llama 2 and Mistral AI models and the launch of streaming responses, SageMaker Canvas continues to empower everyone who wants to get started with generative AI without writing a single line of code. In this post, we discuss these updates and their benefits.

Introducing Meta Llama 2 and Mistral models

Llama 2 is a cutting-edge foundation model by Meta that offers improved scalability and versatility for a wide range of generative AI tasks. Users have reported that Llama 2 is capable of engaging in meaningful and coherent conversations, generating new content, and extracting answers from existing notes. Llama 2 is among the state-of-the-art large language models (LLMs) available today for the open source community to build their own AI-powered applications.

Mistral AI, a leading French AI start-up, has developed Mistral 7B, a powerful language model with 7.3 billion parameters. Mistral models have been very well received by the open-source community thanks to their use of grouped-query attention (GQA) for faster inference, which makes them highly efficient and lets them perform comparably to models with two or three times as many parameters.

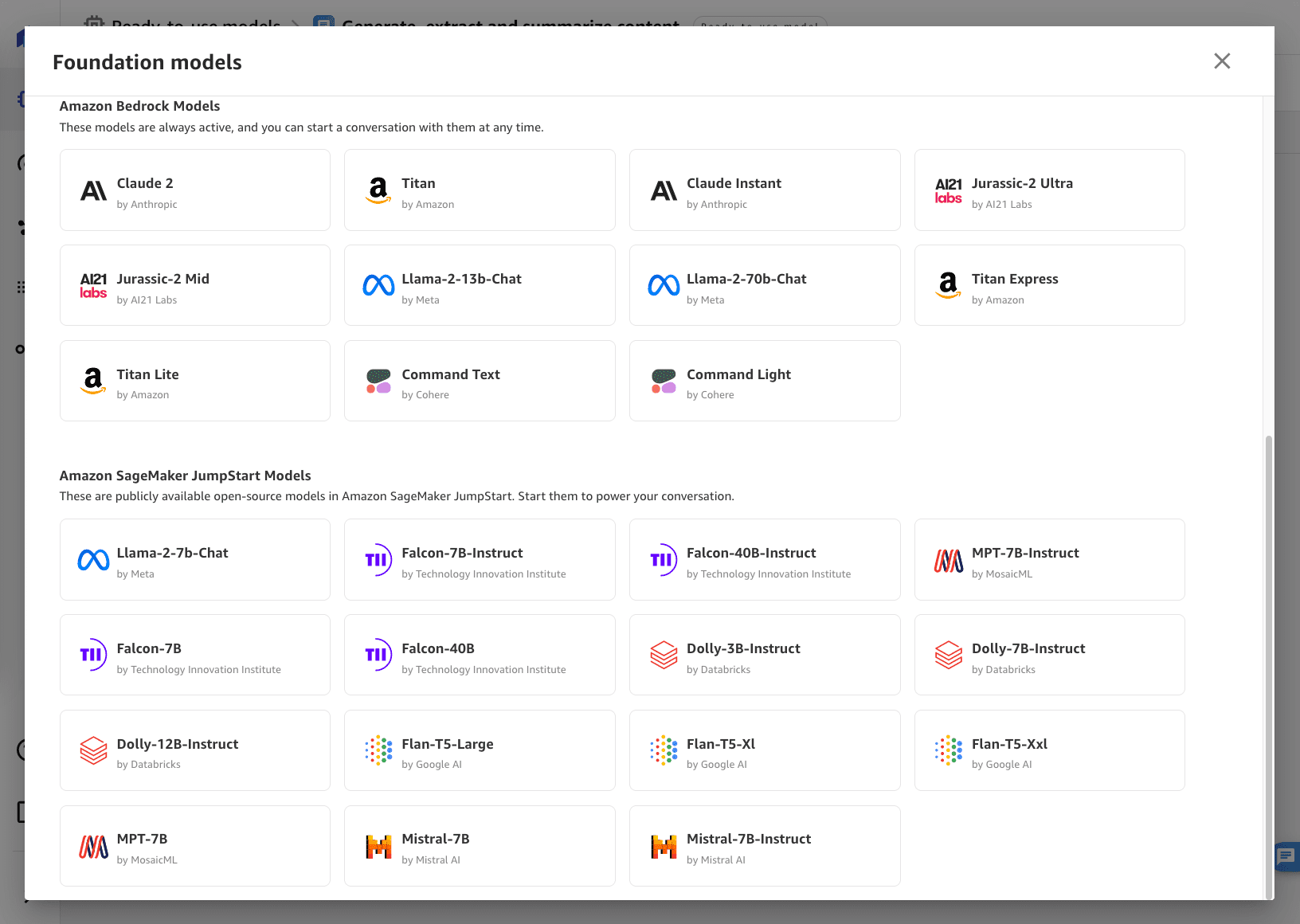

Today, we are excited to announce that SageMaker Canvas now supports three Llama 2 model variants and two Mistral 7B variants:

- Llama-2-13B-chat and Llama-2-70B-chat, powered by Amazon Bedrock

- Llama-2-7b-Chat, powered by Amazon SageMaker JumpStart

- Mistral-7B and Mistral-7B-Chat, powered by Amazon SageMaker JumpStart

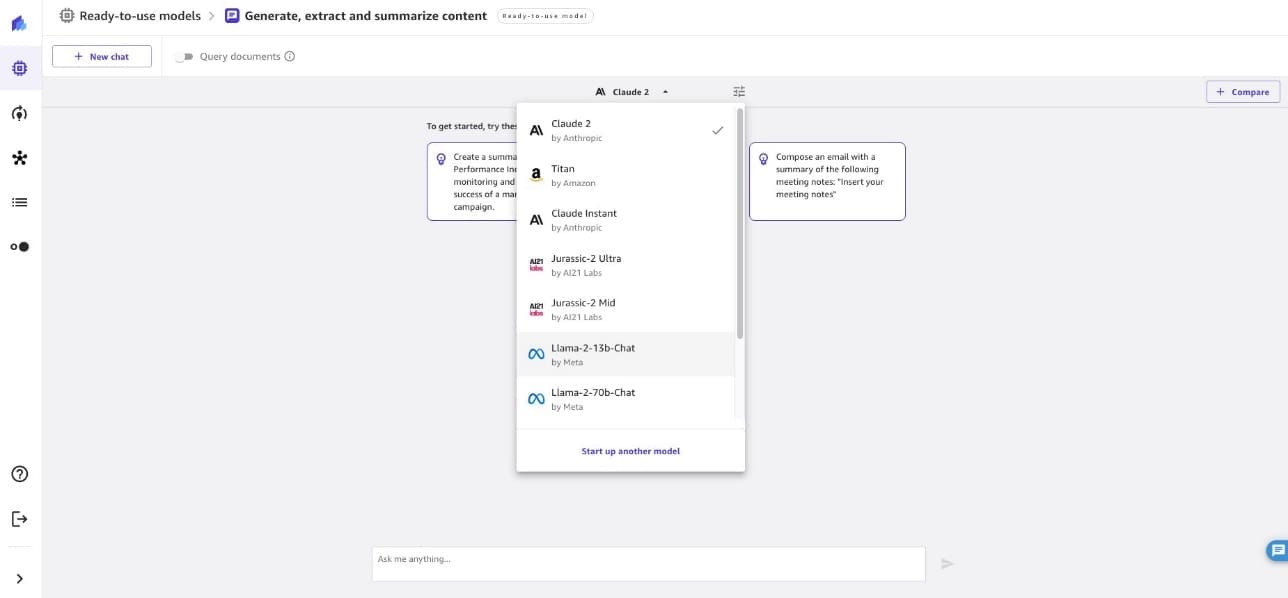

To test these models, navigate to the SageMaker Canvas Ready-to-use models page, then choose Generate, extract and summarize content. This is where you’ll find the SageMaker Canvas GenAI chat experience. Here, you can use any model from Amazon Bedrock or SageMaker JumpStart by selecting it from the model drop-down menu.

In our case, we choose one of the Llama 2 models. Now you can provide your input or query. As you send the input, SageMaker Canvas forwards your input to the model.

Choosing which of the models available in SageMaker Canvas best fits your use case requires taking into account information about the models themselves. The Llama-2-70B-chat model is bigger (70 billion parameters, compared to 13 billion for Llama-2-13B-chat), which means that its performance is generally higher than that of the smaller model, at the cost of slightly higher latency and a higher cost per token. Mistral-7B performs comparably to Llama-2-7B or Llama-2-13B; however, it is hosted on Amazon SageMaker. This means that the pricing model is different, moving from a dollar-per-token pricing model to a dollar-per-hour model. The latter can be more cost-effective when you have a significant number of requests per hour and consistent usage at scale. All of the models above can perform well on a variety of use cases, so our suggestion is to evaluate which model best solves your problem, considering output, throughput, and cost trade-offs.
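To make the per-token versus per-hour trade-off concrete, the following is a minimal sketch of a break-even calculation. Every number in it (the price per 1,000 tokens, the hourly price, and the tokens per request) is a hypothetical placeholder chosen for illustration, not an actual AWS price, and the function names are our own. Check the current Amazon Bedrock and Amazon SageMaker pricing pages before drawing conclusions.

```python
# Rough comparison of per-token and per-hour pricing.
# All prices and volumes used below are HYPOTHETICAL placeholders.

HOURS_PER_MONTH = 730  # assumption: average number of hours in a month


def per_token_monthly_cost(requests_per_hour, tokens_per_request, price_per_1k_tokens):
    """Monthly cost when you pay for every token processed."""
    tokens = requests_per_hour * tokens_per_request * HOURS_PER_MONTH
    return tokens / 1000 * price_per_1k_tokens


def per_hour_monthly_cost(price_per_hour):
    """Monthly cost when you pay for an endpoint that is always running."""
    return price_per_hour * HOURS_PER_MONTH


def break_even_requests_per_hour(tokens_per_request, price_per_1k_tokens, price_per_hour):
    """Requests per hour at which both pricing models cost the same."""
    cost_per_request = tokens_per_request / 1000 * price_per_1k_tokens
    return price_per_hour / cost_per_request


if __name__ == "__main__":
    # Hypothetical inputs: 1,000 tokens per request, $0.002 per 1K tokens,
    # and $1.00 per hour for a hosted endpoint.
    threshold = break_even_requests_per_hour(1000, 0.002, 1.0)
    print(f"Break-even at roughly {threshold:.0f} requests per hour")
    for rate in (100, 1000):
        token_cost = per_token_monthly_cost(rate, 1000, 0.002)
        hour_cost = per_hour_monthly_cost(1.0)
        print(f"{rate} req/h: per-token ${token_cost:.2f}, per-hour ${hour_cost:.2f}")
```

Under these made-up inputs, traffic below the break-even rate favors per-token pricing and sustained traffic above it favors per-hour pricing, which is the pattern described above. The sketch ignores latency, output quality, and idle time, so treat it as a starting point only.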

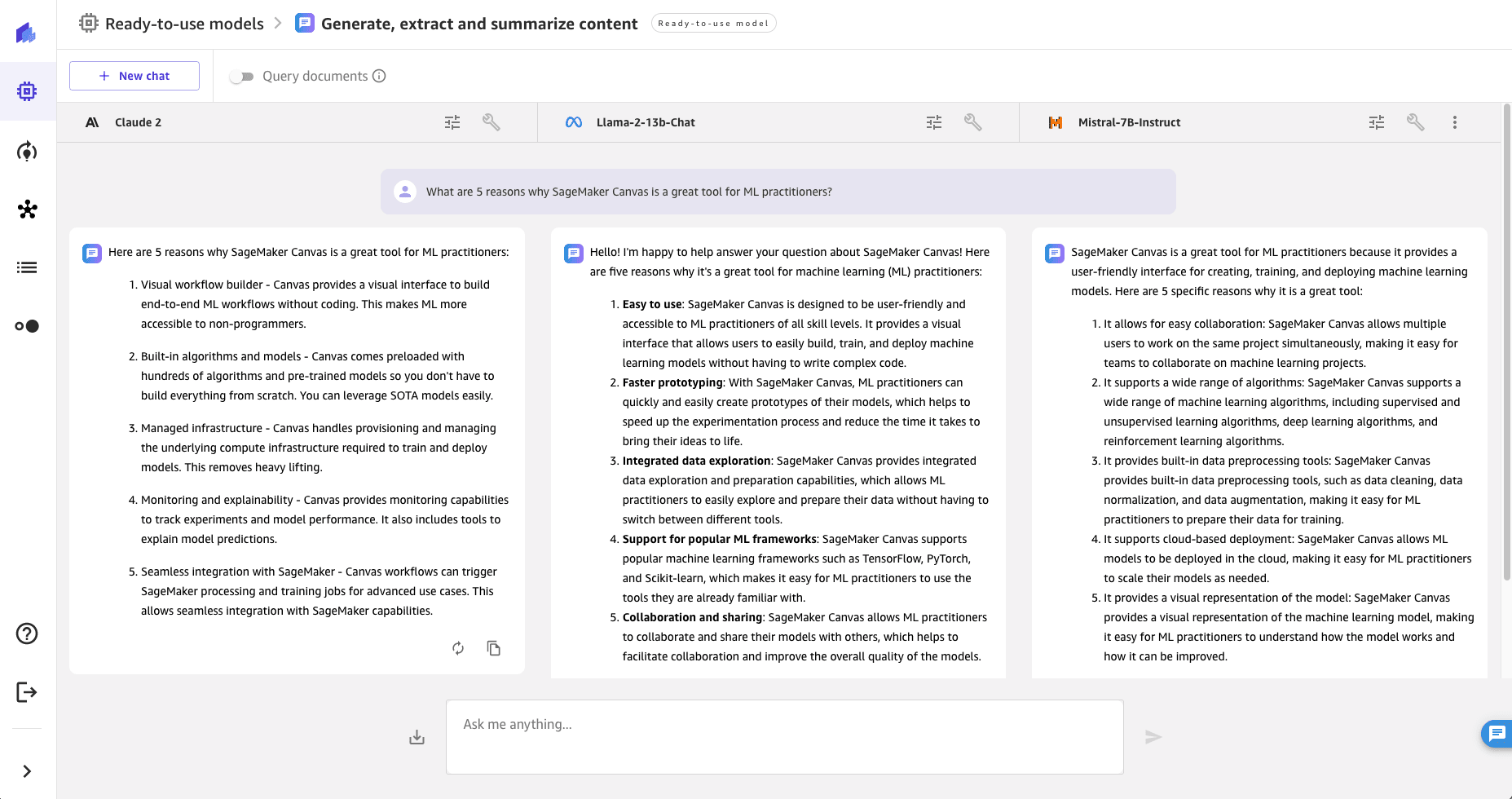

If you’re looking for a straightforward way to compare how models behave, SageMaker Canvas natively provides this capability in the form of model comparisons. You can select up to three different models and send the same query to all of them at once. SageMaker Canvas then gets the responses from each of the models and shows them in a side-by-side chat UI. To do this, choose Compare and select the other models to compare against.

Introducing response streaming: Real-time interactions and enhanced performance

One of the key advancements in this release is the introduction of streamed responses. Streaming provides a richer experience that better reflects a chat: the response appears as the model generates it, rather than only after the full answer is complete. This immediate feedback creates a more natural conversation flow and makes the chat feel more interactive and responsive.

With this feature, you can now interact with your AI models in real time, receiving instant responses and enabling seamless integration into a variety of applications and workflows. All models that can be queried in SageMaker Canvas—from Amazon Bedrock and SageMaker JumpStart—can stream responses to the user.
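For readers curious about what streaming means in practice, here is a small, self-contained sketch of the general idea. It is not how SageMaker Canvas is implemented and does not call any AWS API. The names `stream_chunks` and `render_streamed` are hypothetical, and the model is simulated by slicing a fixed string into chunks.

```python
from typing import Iterable, Iterator


def stream_chunks(text: str, chunk_size: int = 8) -> Iterator[str]:
    """Simulate a model that yields its answer piece by piece."""
    for start in range(0, len(text), chunk_size):
        yield text[start:start + chunk_size]


def render_streamed(chunks: Iterable[str]) -> str:
    """Show each chunk as soon as it arrives and return the full text."""
    shown = []
    for chunk in chunks:
        # Printing without a newline mimics text appearing in a chat window.
        print(chunk, end="", flush=True)
        shown.append(chunk)
    print()
    return "".join(shown)


if __name__ == "__main__":
    answer = "Streaming shows partial output while the rest is still being generated."
    render_streamed(stream_chunks(answer))
```

The key design point is that the consumer handles each chunk as it arrives instead of waiting for the whole response, so the user sees the first words almost immediately even when the full answer takes longer to produce.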

Get started today

Whether you’re building a chatbot, recommendation system, or virtual assistant, the Llama 2 and Mistral models combined with streamed responses bring enhanced performance and interactivity to your projects.

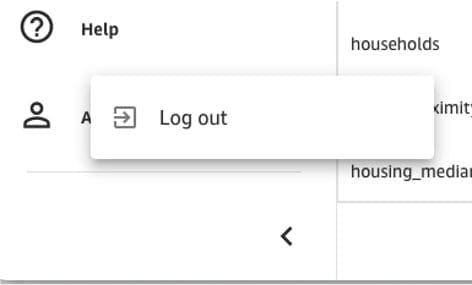

To use the latest features of SageMaker Canvas, make sure to delete and recreate the app. To do that, log out from the app by choosing Log out, then open SageMaker Canvas again. You should now see the new models and the latest features. Logging out of the SageMaker Canvas application also releases all resources used by the workspace instance, which avoids unintended additional charges.

Conclusion

To get started with the new streamed responses for the Llama 2 and Mistral models in SageMaker Canvas, visit the SageMaker console and explore the intuitive interface. To learn more about how SageMaker Canvas and generative AI can help you achieve your business goals, refer to Empower your business users to extract insights from company documents using Amazon SageMaker Canvas and Generative AI and Overcoming common contact center challenges with generative AI and Amazon SageMaker Canvas.

If you want to learn more about SageMaker Canvas features and deep dive on other ML use cases, check out the other posts available in the SageMaker Canvas category of the AWS ML Blog. We can’t wait to see the amazing AI applications you will create with these new capabilities!

About the authors

Davide Gallitelli is a Senior Specialist Solutions Architect for AI/ML. He is based in Brussels and works closely with customers all around the globe that are looking to adopt Low-Code/No-Code Machine Learning technologies, and Generative AI. He has been a developer since he was very young, starting to code at the age of 7. He started learning AI/ML at university, and has fallen in love with it since then.

Dan Sinnreich is a Senior Product Manager at AWS, helping to democratize low-code/no-code machine learning. Previous to AWS, Dan built and commercialized enterprise SaaS platforms and time-series models used by institutional investors to manage risk and construct optimal portfolios. Outside of work, he can be found playing hockey, scuba diving, and reading science fiction.
