Code Llama 70B is now available in Amazon SageMaker JumpStart

Today, we are excited to announce that Code Llama foundation models, developed by Meta, are available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. Code Llama is a state-of-the-art large language model (LLM) capable of generating code and natural language about code from both code and natural language prompts. You can try out this model with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms, models, and ML solutions so you can quickly get started with ML. In this post, we walk through how to discover and deploy the Code Llama model via SageMaker JumpStart.

Code Llama

Code Llama is a model released by Meta that is built on top of Llama 2. This state-of-the-art model is designed to improve productivity for programming tasks for developers by helping them create high-quality, well-documented code. The models excel in Python, C++, Java, PHP, C#, TypeScript, and Bash, and have the potential to save developers’ time and make software workflows more efficient.

It comes in three variants, engineered to cover a wide variety of applications: the foundational model (Code Llama), a Python specialized model (Code Llama Python), and an instruction-following model for understanding natural language instructions (Code Llama Instruct). All Code Llama variants come in four sizes: 7B, 13B, 34B, and 70B parameters. The 7B and 13B base and instruct variants support infilling based on surrounding content, making them ideal for code assistant applications. The models were designed using Llama 2 as the base and then trained on 500 billion tokens of code data, with the Python specialized version trained on an incremental 100 billion tokens. The Code Llama models provide stable generations with up to 100,000 tokens of context. All models are trained on sequences of 16,000 tokens and show improvements on inputs with up to 100,000 tokens.

The model is made available under the same community license as Llama 2.

Foundation models in SageMaker

SageMaker JumpStart provides access to a range of models from popular model hubs, including Hugging Face, PyTorch Hub, and TensorFlow Hub, which you can use within your ML development workflow in SageMaker. Recent advances in ML have given rise to a new class of models known as foundation models, which typically contain billions of parameters and are adaptable to a wide category of use cases, such as text summarization, digital art generation, and language translation. Because these models are expensive to train, customers want to use existing pre-trained foundation models and fine-tune them as needed, rather than train these models themselves. SageMaker provides a curated list of models that you can choose from on the SageMaker console.

You can find foundation models from different model providers within SageMaker JumpStart, enabling you to get started with foundation models quickly. You can find foundation models based on different tasks or model providers, and easily review model characteristics and usage terms. You can also try out these models using a test UI widget. When you want to use a foundation model at scale, you can do so without leaving SageMaker by using pre-built notebooks from model providers. Because the models are hosted and deployed on AWS, you can rest assured that your data, whether used for evaluating or using the model at scale, is never shared with third parties.

Discover the Code Llama model in SageMaker JumpStart

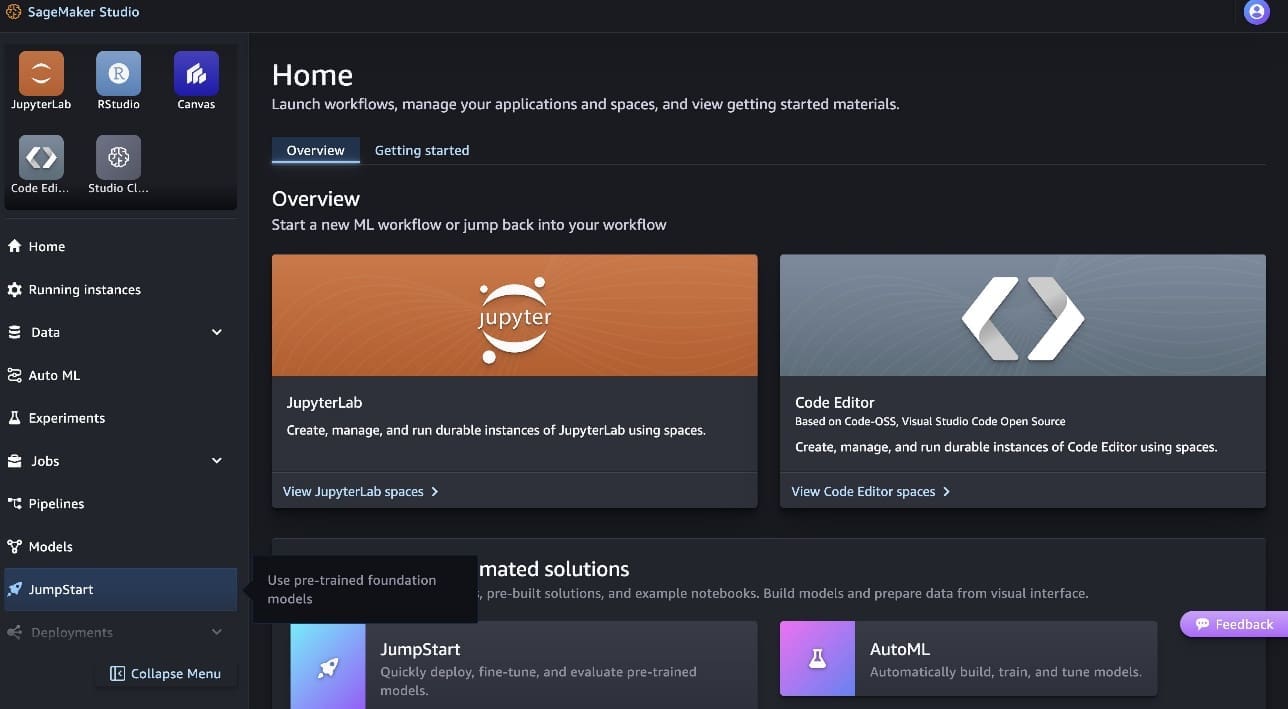

To deploy the Code Llama 70B model, complete the following steps in Amazon SageMaker Studio:

- On the SageMaker Studio home page, choose JumpStart in the navigation pane.

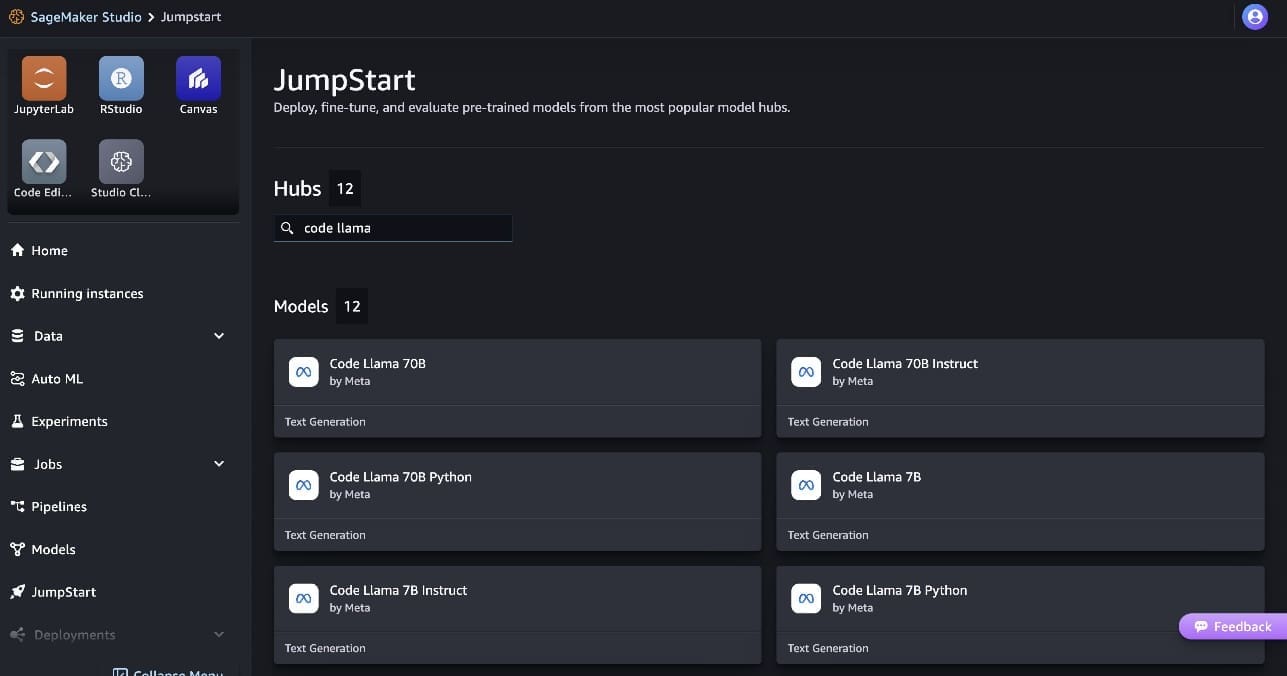

- Search for Code Llama models and choose the Code Llama 70B model from the list of models shown.

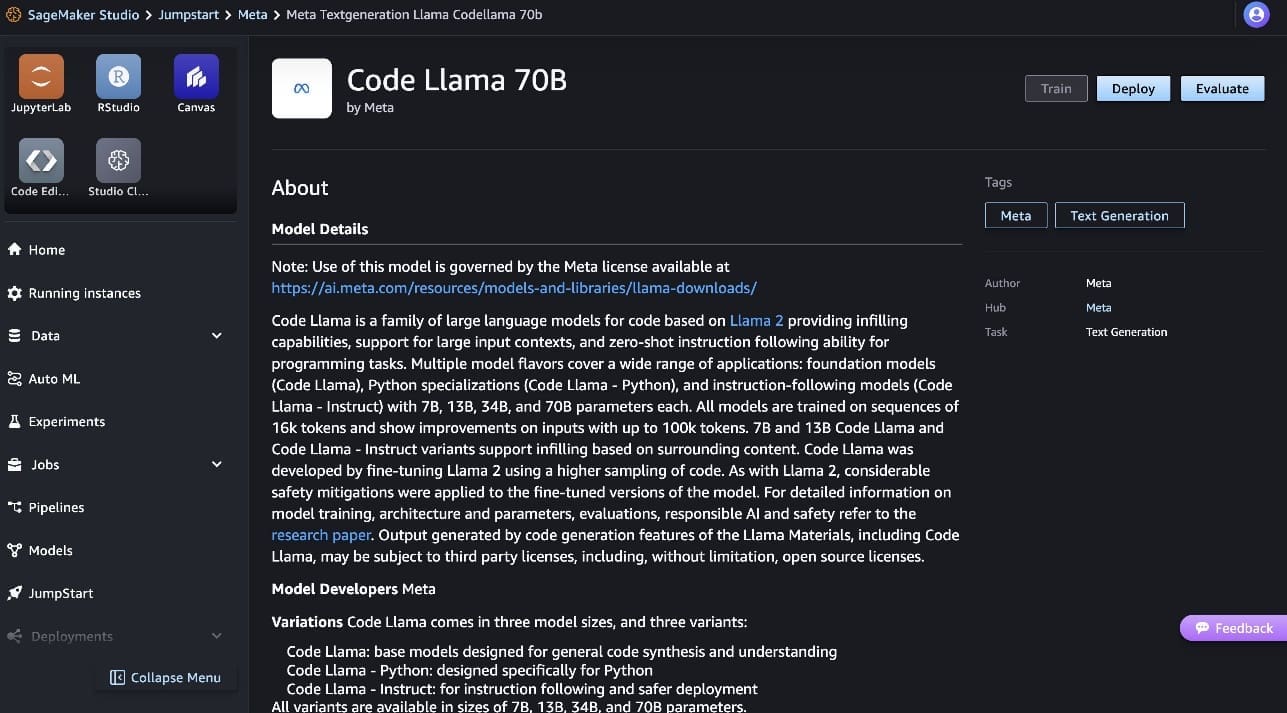

You can find more information about the model on the Code Llama 70B model card.

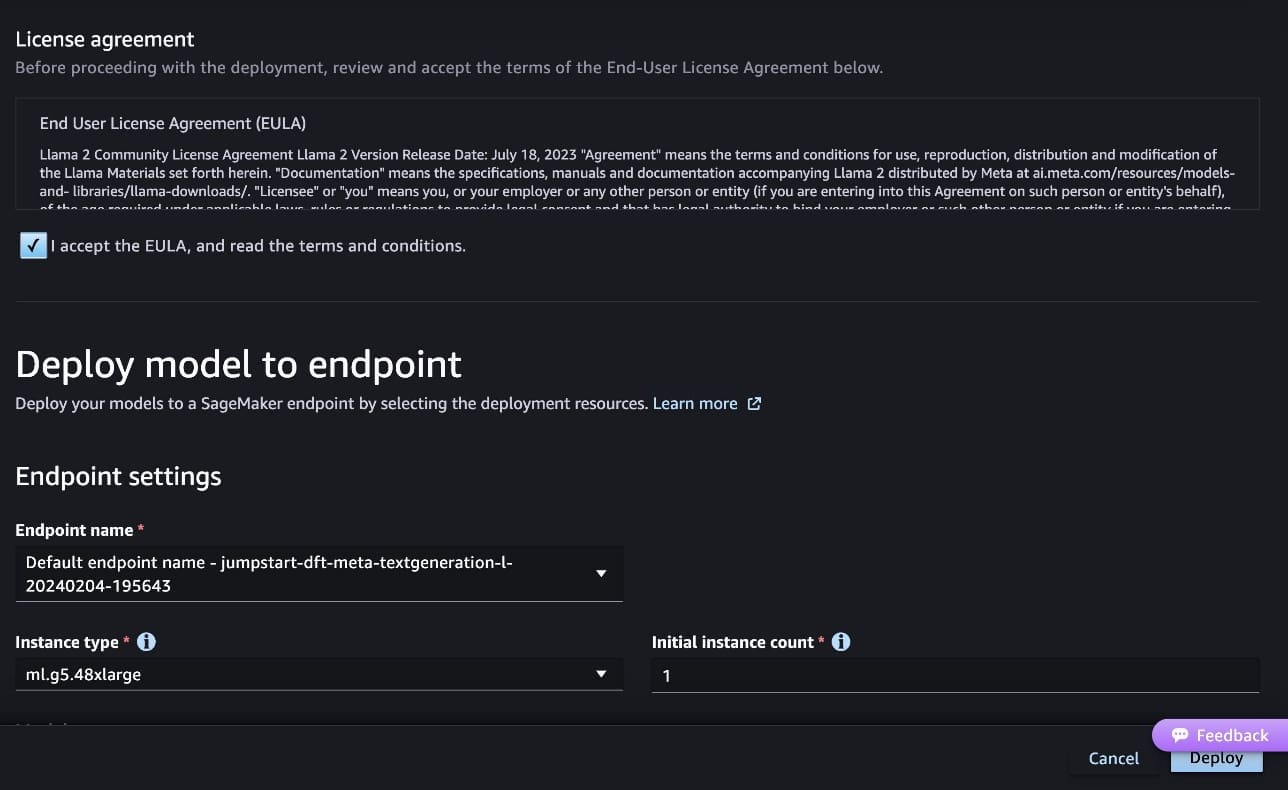

The following screenshot shows the endpoint settings. You can change the options or use the default ones.

- Accept the End User License Agreement (EULA) and choose Deploy.

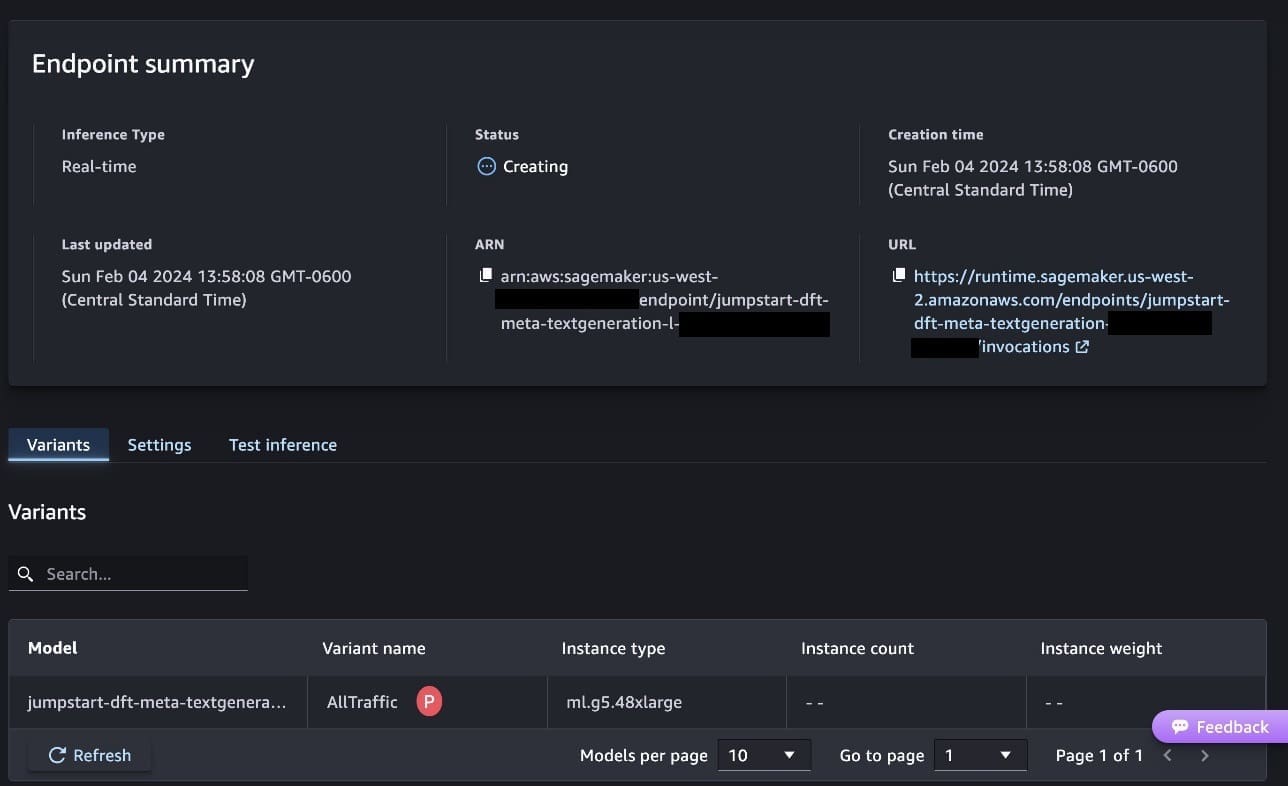

This will start the endpoint deployment process, as shown in the following screenshot.

Deploy the model with the SageMaker Python SDK

Alternatively, you can deploy through the example notebook by choosing Open Notebook on the model detail page of Classic Studio. The example notebook provides end-to-end guidance on how to deploy the model for inference and clean up resources.

To deploy using the notebook, we start by selecting an appropriate model, specified by the model_id. You can deploy any of the selected models on SageMaker with the following code:
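A minimal sketch of the deployment call, assuming the JumpStart model ID `meta-textgeneration-llama-codellama-70b` (confirm the exact ID on the model card) and an execution role and Region already configured for the SageMaker Python SDK. The wrapper `deploy_code_llama` is a hypothetical helper, not part of the SDK; it refuses to deploy unless the EULA is explicitly accepted.

```python
# Assumed JumpStart model ID for Code Llama 70B; verify it on the model card.
MODEL_ID = "meta-textgeneration-llama-codellama-70b"


def deploy_code_llama(model_id: str = MODEL_ID, accept_eula: bool = False):
    """Deploy a JumpStart model and return its predictor (hypothetical helper)."""
    if not accept_eula:
        # Fail early, before any AWS call is made.
        raise ValueError("Set accept_eula=True after reviewing the license agreement.")

    # Imported here so this module loads even where the SDK is not installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # Uses the default instance type and VPC configuration for this model.
    return model.deploy(accept_eula=accept_eula)
```

Calling `predictor = deploy_code_llama(accept_eula=True)` creates a billable endpoint, so run it only when you are ready to test the model.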

This deploys the model on SageMaker with default configurations, including default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel. Note that by default, accept_eula is set to False. You need to set accept_eula=True to deploy the endpoint successfully. By doing so, you accept the user license agreement and acceptable use policy as mentioned earlier. You can also download the license agreement.

Invoke a SageMaker endpoint

After the endpoint is deployed, you can carry out inference by using Boto3 or the SageMaker Python SDK. In the following code, we use the SageMaker Python SDK to call the model for inference and print the response:
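A minimal sketch of the call, assuming `predictor` is the object returned by the deployment step, that the request body has `inputs` and `parameters` keys, and that the response is a list whose first item carries a `generated_text` field. The response shape is an assumption; adjust the lookup if your endpoint returns something different. `query_endpoint` is a hypothetical helper name.

```python
def print_response(payload: dict, response) -> None:
    """Print the prompt followed by the generated text.

    Assumes response[0]["generated_text"] holds the model output.
    """
    print(payload["inputs"])
    print(f"> {response[0]['generated_text']}")
    print("\n==================================\n")


def query_endpoint(predictor, payload: dict):
    """Send the payload to the endpoint, print the result, and return it."""
    response = predictor.predict(payload)
    print_response(payload, response)
    return response
```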

The function print_response takes the request payload and the model response and prints the output. Code Llama supports many parameters while performing inference:

- max_length – The model generates text until the output length (which includes the input context length) reaches max_length. If specified, it must be a positive integer.
- max_new_tokens – The model generates text until the output length (excluding the input context length) reaches max_new_tokens. If specified, it must be a positive integer.
- num_beams – This specifies the number of beams used in beam search. If specified, it must be an integer greater than or equal to num_return_sequences.
- no_repeat_ngram_size – The model ensures that a sequence of words of length no_repeat_ngram_size is not repeated in the output sequence. If specified, it must be a positive integer greater than 1.
- temperature – This controls the randomness in the output. Higher temperature results in an output sequence with low-probability words, and lower temperature results in an output sequence with high-probability words. If temperature is 0, it results in greedy decoding. If specified, it must be a positive float.
- early_stopping – If True, text generation is finished when all beam hypotheses reach the end of sentence token. If specified, it must be Boolean.
- do_sample – If True, the model samples the next word as per the likelihood. If specified, it must be Boolean.
- top_k – In each step of text generation, the model samples from only the top_k most likely words. If specified, it must be a positive integer.
- top_p – In each step of text generation, the model samples from the smallest possible set of words with cumulative probability top_p. If specified, it must be a float between 0 and 1.
- return_full_text – If True, the input text will be part of the output generated text. If specified, it must be Boolean. The default value for it is False.
- stop – If specified, it must be a list of strings. Text generation stops if any one of the specified strings is generated.

You can specify any subset of these parameters while invoking an endpoint. Next, we show an example of how to invoke an endpoint with these arguments.
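The constraints listed above can be checked on the client before a request is sent. The following is a sketch only: `build_payload` is a hypothetical helper that validates a few of the parameters and assumes the request body uses `inputs` and `parameters` keys.

```python
def build_payload(prompt: str, **parameters) -> dict:
    """Build a request body, validating some of the inference parameters."""
    for name in ("max_length", "max_new_tokens", "top_k"):
        value = parameters.get(name)
        if value is None:
            continue
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, int) or value <= 0:
            raise ValueError(f"{name} must be a positive integer")

    top_p = parameters.get("top_p")
    if top_p is not None and not 0 <= top_p <= 1:
        raise ValueError("top_p must be a float between 0 and 1")

    stop = parameters.get("stop")
    if stop is not None and not (
        isinstance(stop, list) and all(isinstance(s, str) for s in stop)
    ):
        raise ValueError("stop must be a list of strings")

    return {"inputs": prompt, "parameters": parameters}
```

For example, `build_payload("def fib(n):", max_new_tokens=128, temperature=0.2, top_p=0.9)` returns a dictionary that can be passed to the predictor, while `build_payload("def fib(n):", top_p=1.5)` raises a `ValueError`.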

Code completion

The following examples demonstrate how to perform code completion where the expected endpoint response is the natural continuation of the prompt.

We first run the following code:
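A sketch of a completion request, under stated assumptions: the prompt is illustrative rather than taken from the example notebook, the parameter values are arbitrary starting points, and the response is assumed to be a list whose first item has a `generated_text` field. `complete_code` is a hypothetical helper.

```python
# Illustrative prompt: an unfinished function for the model to continue.
COMPLETION_PROMPT = '''\
def is_palindrome(text: str) -> bool:
    """Return True if text reads the same forwards and backwards."""
'''


def complete_code(predictor, prompt: str = COMPLETION_PROMPT) -> str:
    """Return the prompt joined with the model's continuation."""
    payload = {
        "inputs": prompt,
        # A low temperature keeps the continuation close to the most likely code.
        "parameters": {"max_new_tokens": 256, "temperature": 0.2, "top_p": 0.9},
    }
    response = predictor.predict(payload)
    # return_full_text defaults to False, so the prompt is prepended here.
    return prompt + response[0]["generated_text"]
```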

We get the following output:

For our next example, we run the following code:

We get the following output:

Code generation

The following examples show Python code generation using Code Llama.

We first run the following code:
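One way to phrase a generation request is to describe the task in a comment and let the model write the function body. The sketch below is an illustration, not the notebook's code: `build_generation_payload` is a hypothetical helper, and the payload keys and parameter values are assumptions.

```python
def build_generation_prompt(task: str, signature: str) -> str:
    """Turn a task description into a comment-plus-signature prompt."""
    comment = "\n".join(f"# {line}" for line in task.strip().splitlines())
    return f"{comment}\n{signature}\n"


def build_generation_payload(task: str, signature: str, max_new_tokens: int = 512) -> dict:
    """Build a request body asking the model to implement the described function."""
    return {
        "inputs": build_generation_prompt(task, signature),
        "parameters": {"max_new_tokens": max_new_tokens, "temperature": 0.2, "top_p": 0.9},
    }
```

A payload such as `build_generation_payload("Read a CSV file and return its rows as dictionaries.", "def read_rows(path: str) -> list[dict]:")` can then be sent with `predictor.predict(payload)`.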

We get the following output:

For our next example, we run the following code:

We get the following output:

These are some of the examples of code-related tasks using Code Llama 70B. You can use the model to generate even more complicated code. We encourage you to try it using your own code-related use cases and examples!

Clean up

After you have tested the endpoints, make sure you delete the SageMaker inference endpoints and the model to avoid incurring charges. Use the following code:
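A minimal sketch of the cleanup, assuming `predictor` is the object returned when the endpoint was deployed. `clean_up` is a hypothetical wrapper around two SageMaker Python SDK predictor methods.

```python
def clean_up(predictor) -> None:
    """Delete the model and then the endpoint behind a deployed predictor."""
    # Delete the model first, while the endpoint and its configuration still exist.
    predictor.delete_model()
    predictor.delete_endpoint()
```

After this runs, you can confirm on the SageMaker console that no endpoints remain in service.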

Conclusion

In this post, we introduced Code Llama 70B on SageMaker JumpStart. Code Llama 70B is a state-of-the-art model for generating code from natural language prompts as well as code. You can deploy the model with a few simple steps in SageMaker JumpStart and then use it to carry out code-related tasks such as code completion and code generation. As a next step, try using the model with your own code-related use cases and data.

About the authors

Dr. Kyle Ulrich is an Applied Scientist with the Amazon SageMaker JumpStart team. His research interests include scalable machine learning algorithms, computer vision, time series, Bayesian non-parametrics, and Gaussian processes. His PhD is from Duke University and he has published papers in NeurIPS, Cell, and Neuron.

Dr. Farooq Sabir is a Senior Artificial Intelligence and Machine Learning Specialist Solutions Architect at AWS. He holds PhD and MS degrees in Electrical Engineering from the University of Texas at Austin and an MS in Computer Science from Georgia Institute of Technology. He has over 15 years of work experience and also likes to teach and mentor college students. At AWS, he helps customers formulate and solve their business problems in data science, machine learning, computer vision, artificial intelligence, numerical optimization, and related domains. Based in Dallas, Texas, he and his family love to travel and go on long road trips.

June Won is a product manager with SageMaker JumpStart. He focuses on making foundation models easily discoverable and usable to help customers build generative AI applications. His experience at Amazon also includes mobile shopping application and last mile delivery.
