How LotteON built dynamic A/B testing for their personalized recommendation system

This post is co-written with HyeKyung Yang, Jieun Lim, and SeungBum Shim from LotteON.

LotteON is transforming itself into an online shopping platform that provides customers with an unprecedented shopping experience based on its in-store and online shopping expertise. Rather than simply selling products, LotteON aims to let customers experience them through its platform.

LotteON has been providing various forms of personalized recommendation services throughout the LotteON customer journey and across its platform, from its main page to its shopping cart and order completion pages. Through the development of new, high-performing models and continuous experimentation, they’re providing customers with personalized recommendations, improving CTR (click-through rate) metrics and increasing customer satisfaction.

In this post, we show you how LotteON implemented dynamic A/B testing for their personalized recommendation system.

The dynamic A/B testing system monitors user reactions to the recommended item lists, such as product clicks, in real time. It dynamically assigns the most responsive of multiple recommendation models to enhance the customer experience with the recommendation list. The solution is built on Amazon SageMaker and other AWS services, and this post shares implementation know-how and practical use cases from its deployment.

Defining the business problem

In general, there are two types of A/B testing that are useful for measuring the performance of a new model: offline testing and online testing. Offline testing evaluates the performance of a new model based on past data. Online A/B testing, also known as split testing, is a method used to compare two versions of a webpage, or in LotteON’s case, two recommendation models, to determine which one performs better. A key strength of online A/B testing is its ability to provide empirical evidence based on user behavior and preferences. This evidence-based approach to selecting a recommendation model reduces guesswork and subjectivity in optimizing both click-through rates and sales.

A typical online A/B test serves two models in a certain ratio (such as 5:5) for a fixed period of time (for example, a day or a week). When one model performs better than the other, the lower performing model is still served for the duration of the experiment, regardless of its impact on the business. To improve this, LotteON turned to dynamic A/B testing, which evaluates the performance of models in real time and dynamically updates the ratios at which each model is served, so that better performing models are served more often. To implement dynamic A/B testing, they used the multi-armed bandit (MAB) algorithm, which performs real-time optimizations.

LotteON’s dynamic A/B testing automatically selects the model that drives the highest click-through rate (CTR) on their site. To build their dynamic A/B testing solution, LotteON used AWS services such as Amazon SageMaker and AWS Lambda. By doing so, they were able to reduce the time and resources that would otherwise be required for traditional forms of A/B testing. This frees up their scientists to focus more of their time on model development and training.

Solution and implementation details

The MAB algorithm takes its name from the problem of maximizing winnings across casino slot machines (one-armed bandits). MAB is widely used to re-rank news or products, where each selection (arm) is an item. LotteON’s use differs in what is selected: in this implementation, each arm is a recommendation model. There are various MAB algorithms, such as ε-greedy and Thompson sampling.

The ε-greedy algorithm balances exploration and exploitation by choosing the best-known option most of the time, but randomly exploring other options with a small probability ε. Thompson sampling defines a beta distribution for each option, with parameters alpha (α) representing the number of successes so far and beta (β) representing the number of failures. As the algorithm collects more observations, alpha and beta are updated, shifting the distributions toward the true success rate. The algorithm then randomly samples from these distributions to decide which option to try next, balancing exploitation of the best-performing options to date with exploration of less-tested options. In this way, MAB learns which model is best based on actual outcomes.
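To make the contrast concrete, the following is a minimal sketch of both selection rules, not LotteON’s code. The function names, the `stats` layout (arm name mapped to clicks and non-clicks), and the uniform Beta(1, 1) prior are assumptions made for illustration.

```python
import random


def epsilon_greedy(stats, epsilon=0.1, rng=random):
    """Pick the arm with the best observed CTR, exploring with probability epsilon.

    stats maps arm name -> (clicks, non_clicks).
    """
    if rng.random() < epsilon:
        # Explore: choose uniformly among all arms.
        return rng.choice(sorted(stats))
    # Exploit: choose the arm with the highest observed click-through rate.
    return max(stats, key=lambda arm: stats[arm][0] / max(1, sum(stats[arm])))


def thompson_sample(stats, rng=random):
    """Draw one CTR sample per arm from its beta distribution; pick the largest."""
    draws = {
        # Adding 1 to each parameter is a uniform Beta(1, 1) prior (an assumption).
        arm: rng.betavariate(clicks + 1, non_clicks + 1)
        for arm, (clicks, non_clicks) in stats.items()
    }
    return max(draws, key=draws.get)
```

With ε-greedy, the weaker arm keeps a fixed share of exploration traffic, whereas Thompson sampling reduces that share on its own as the beta distributions narrow.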

LotteON evaluated both ε-greedy and Thompson sampling, considering how evenly each algorithm balanced exposure opportunities among the models under test, and decided to use Thompson sampling. Based on the number of clicks obtained, they were able to identify the more efficient model. For a hands-on workshop on dynamic A/B testing with MAB and Thompson sampling algorithms, see Dynamic A/B Testing on Amazon Personalize & SageMaker Workshop. LotteON’s goal was to serve recommendations in real time from the models with the highest CTR.

Each option (arm) was configured as a model, with the alpha value set to the model’s number of clicks and the beta value set to its number of non-clicks. To apply the MAB algorithm to actual services, they introduced the bTS (batched Thompson sampling) method, which processes Thompson sampling on a batch basis. Specifically, they evaluated models based on traffic over a certain period of time (24 hours), and updated parameters at a certain time interval (1 hour).
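One way to read the batch scheme is as a trailing window that is recomputed at every hourly update. The sketch below follows that reading. The row layout, the integer hour index, and the function name are hypothetical, and the post does not state whether LotteON’s window is trailing or fixed.

```python
from collections import defaultdict

WINDOW_HOURS = 24  # evaluation window mentioned in the post


def bts_parameters(hourly_rows, now_hour, window_hours=WINDOW_HOURS):
    """Aggregate hourly counts in the trailing window into alpha and beta per model.

    hourly_rows: iterable of (hour, model_id, impressions, clicks), where hour
    is an integer hour index (an illustrative schema, not LotteON's).
    """
    totals = defaultdict(lambda: [0, 0])  # model_id -> [clicks, impressions]
    for hour, model_id, impressions, clicks in hourly_rows:
        # Keep only rows from the last `window_hours` hours, including now_hour.
        if now_hour - window_hours < hour <= now_hour:
            totals[model_id][0] += clicks
            totals[model_id][1] += impressions
    # alpha = clicks, beta = non-clicks (impressions minus clicks).
    return {m: {"alpha": c, "beta": i - c} for m, (c, i) in totals.items()}
```

Calling this function once per hour with the current hour index would yield the parameters that the sampling step reads until the next update.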

In the handler part of the Lambda function, a bTS operation is performed that reflects the parameter values for each model (arm), and the click probabilities of the two models are calculated. The ID of the model with the highest probability of clicks is then selected. One thing to keep in mind when conducting dynamic A/B testing is not to start Thompson sampling right away. You should allow warm-up time for sufficient exploration. To avoid prematurely determining the winner due to small parameter values at the beginning of the test, you must collect an adequate number of impressions or click-metrics.
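The warm-up advice can be sketched as a guard in front of the sampling step. This is an illustration only: the threshold of 1,000 impressions per model, the function name, and the choice to serve models uniformly at random during warm-up are assumptions, not values from LotteON’s system.

```python
import random

MIN_IMPRESSIONS_PER_ARM = 1000  # hypothetical warm-up threshold


def choose_model(params, min_impressions=MIN_IMPRESSIONS_PER_ARM, rng=random):
    """Return (model_id, phase) for one request.

    params maps model_id -> {"alpha": clicks, "beta": non_clicks}.
    """
    # An arm's impressions are its clicks plus its non-clicks.
    warming_up = any(
        p["alpha"] + p["beta"] < min_impressions for p in params.values()
    )
    if warming_up:
        # Too little data: serve models uniformly so every arm is explored.
        return rng.choice(sorted(params)), "warm-up"
    # Enough data: one beta draw per model, highest sampled CTR wins.
    draws = {
        m: rng.betavariate(p["alpha"] + 1, p["beta"] + 1)
        for m, p in params.items()
    }
    return max(draws, key=draws.get), "thompson"
```

Returning the phase alongside the model ID makes it possible to log when the test switched from warm-up to Thompson sampling.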

Dynamic A/B test architecture

The following figure shows the architecture for the dynamic A/B test that LotteON implemented.

The architecture in the preceding figure shows the data flow of Dynamic A/B testing and consists of the following four decoupled components:

1. MAB serving flow

Step 1: The user accesses LotteON’s recommendation page.

Step 2: The recommendations API checks MongoDB for ongoing experiments that match the recommendation section code and, if an experiment is active, sends an API request with the member ID and section code to Amazon API Gateway.

Step 3: If the API Gateway cache holds a model code for the request, API Gateway immediately returns it to the recommendation API. Otherwise, API Gateway passes the received data to Lambda.

Step 4: The Lambda function checks the experiment type (that is, dynamic A/B test or online static A/B test) in MongoDB and runs the corresponding algorithm. If the experiment type is dynamic A/B test, the function retrieves the alpha (number of clicks) and beta (number of non-clicks) values required for Thompson sampling from MongoDB and runs the Thompson sampling algorithm. The Lambda function then returns the selected model’s identifier to Amazon API Gateway.

Step 5: API Gateway provides the selected model’s identifier to the recommendation API and caches the selected model’s identifier for a certain period of time.

Step 6: The recommendation API calls the model inference server (that is, the SageMaker endpoint) using the selected model’s identifier to receive a recommendation list and provides it to the user’s recommendation web page.

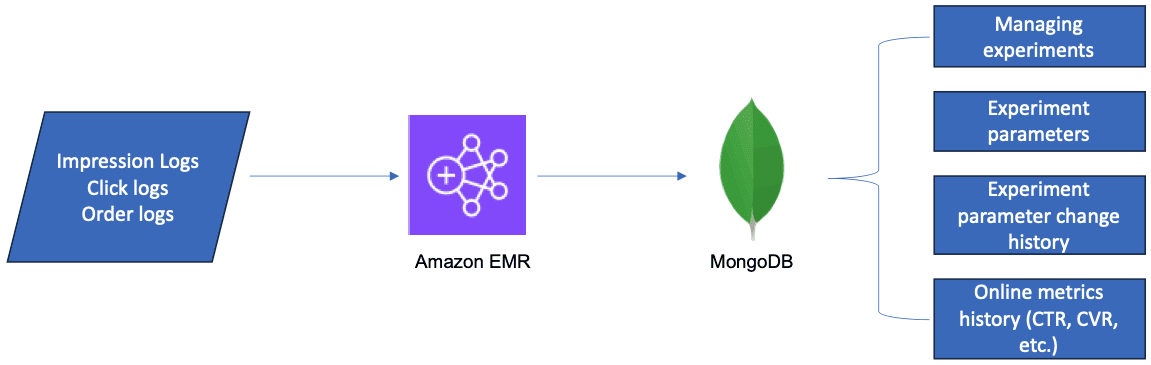

2. The flow of an alpha and beta parameter update

Step 1: The system powering LotteON’s recommendation page stores real-time logs in Amazon S3.

Step 2: Amazon EMR downloads the logs stored in Amazon S3.

Step 3: Amazon EMR processes the data and updates the alpha and beta parameter values to MongoDB for use in the Thompson sampling algorithm.

3. The flow of business metrics monitoring

Step 1: Streamlit pulls experimental business metrics from MongoDB to visualize.

Step 2: Monitor efficiency metrics such as CTR per model over time.

4. The flow of system operation monitoring

Step 1: When a recommendation API call occurs, API Gateway and Lambda are invoked, and Amazon CloudWatch logs are produced.

Step 2: Check system operation metrics using CloudWatch and AWS X-Ray dashboards based on CloudWatch logs.

Implementation Details 1: MAB serving flow mainly involving API Gateway and Lambda

The APIs that serve MAB results (that is, the selected model) are implemented using the serverless services Lambda and API Gateway. Let’s take a look at the implementation and settings.

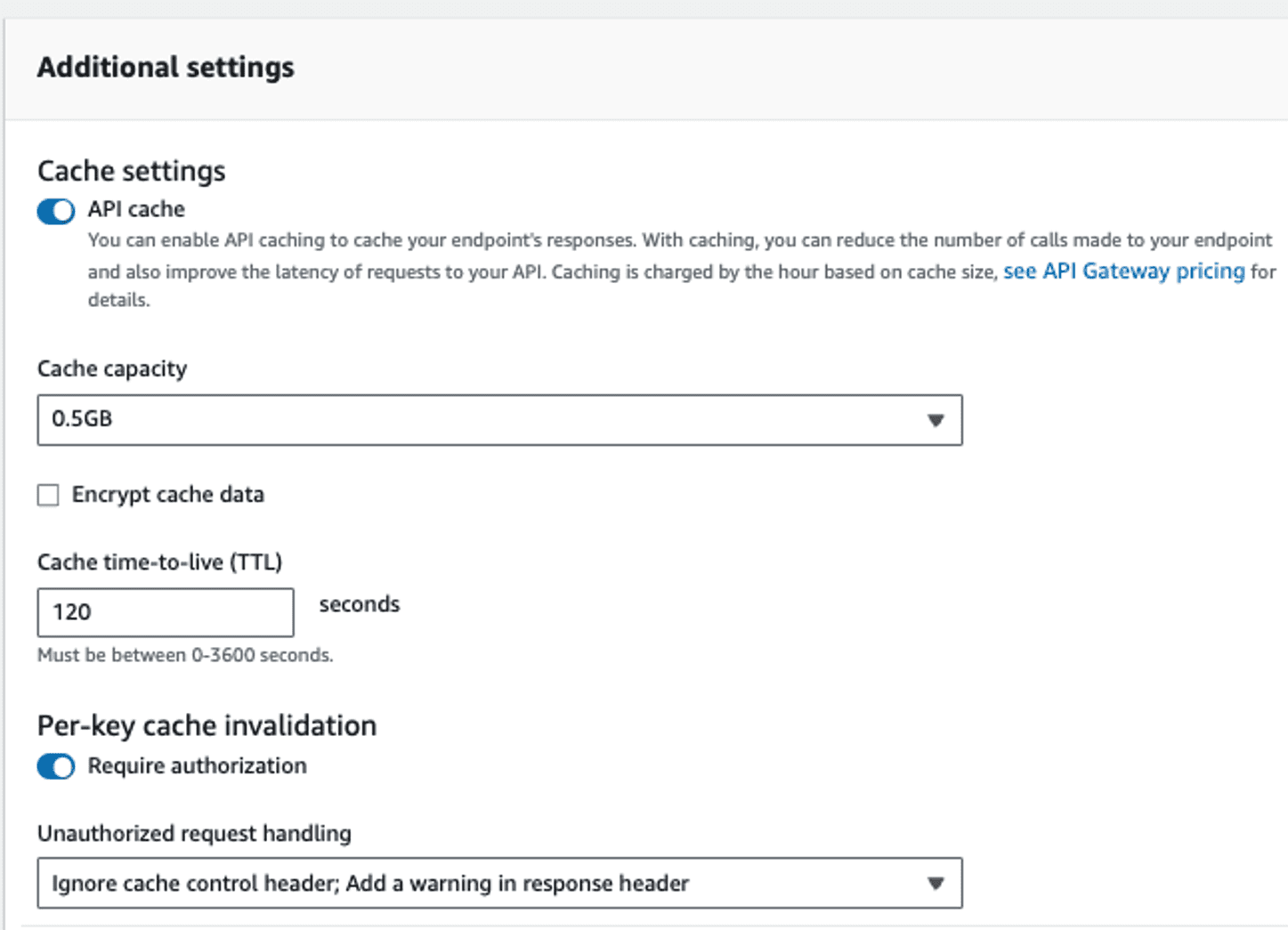

1. API Gateway configuration

When a LotteON user enters the recommended product area, the member ID, section code, and other values are passed to API Gateway as GET parameters. Because API Gateway caches responses by these parameters, the selected model is reused for inference for a certain period of time.

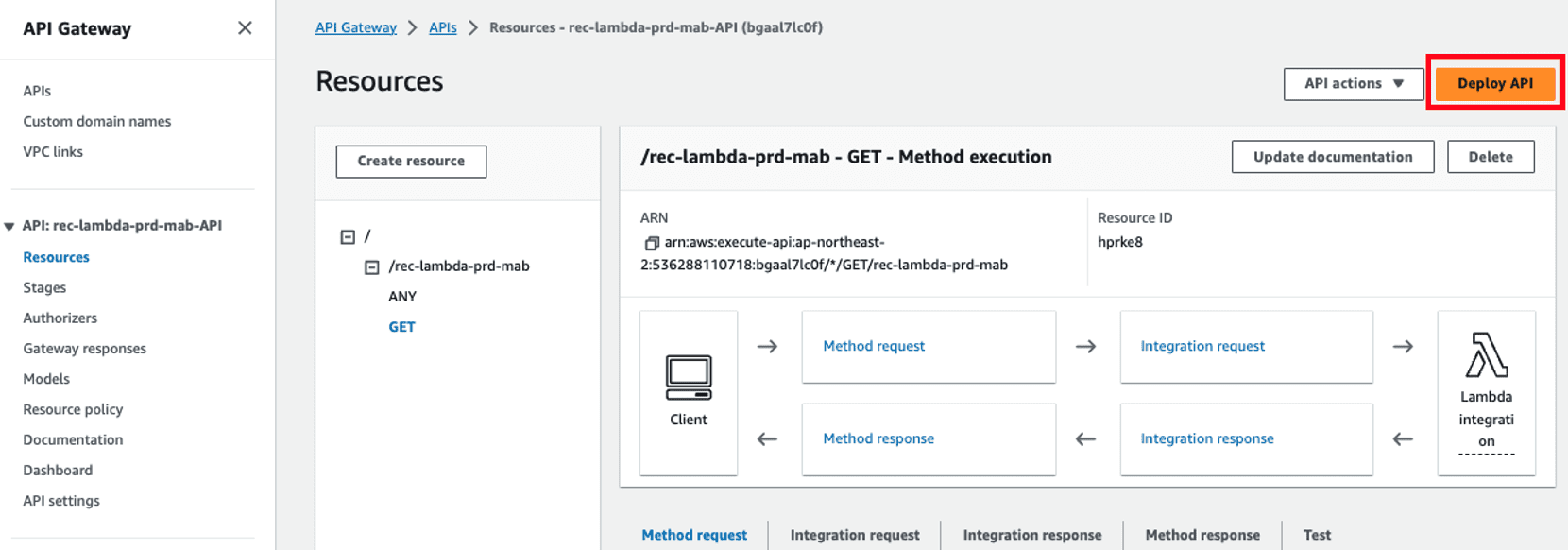

2. API Gateway cache settings

Setting up a cache in API Gateway is straightforward. To set up the cache, first enable it by selecting the appropriate checkbox under the Settings tab for your chosen stage. After it’s activated, you can define the cache time-to-live (TTL), which is the duration in seconds that cached data remains valid. This value can be set anywhere up to a maximum of 3,600 seconds.

The API Gateway caching feature is limited to the parameters of GET requests. To use caching for a particular parameter, add it as a query string parameter on the GET method of the resource, and then select the Enable API Cache option. You must deploy your API using the deploy action in the API Gateway console for the caching function to take effect.

After the cache is set, the same model is used for inference on specific customers until the TTL has elapsed. Following that, or when the recommendation section is first exposed, API Gateway will call Lambda with the MAB function implemented.

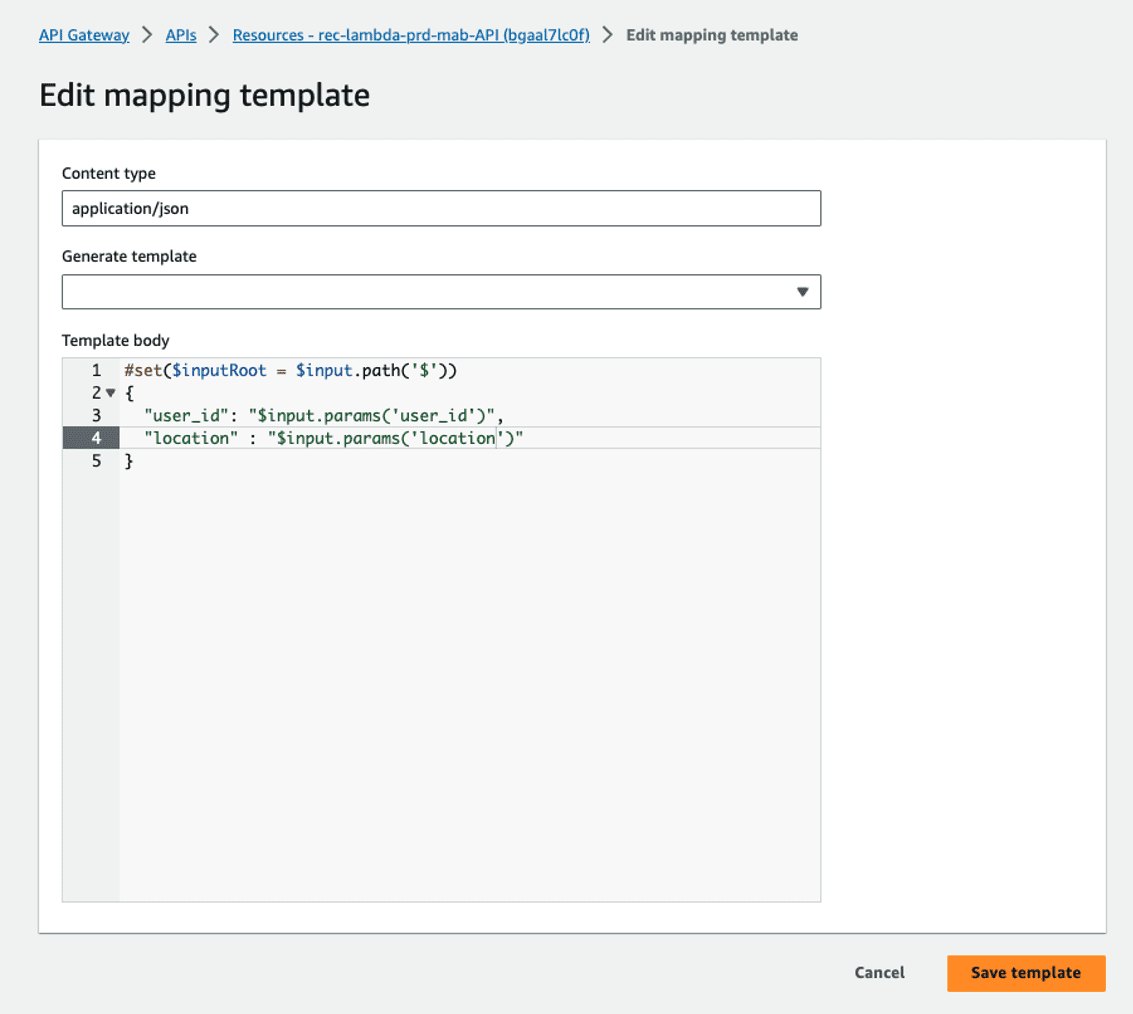
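The same stage-level cache settings can also be applied programmatically. The following sketch builds the patch operations for the boto3 API Gateway `update_stage` call. The helper name, the 0.5 GB cache size, the 300-second TTL, and the choice to apply the settings to all methods through the `/*/*` path are assumptions for illustration, not LotteON’s configuration.

```python
MAX_TTL_SECONDS = 3600  # upper bound on the cache TTL noted above


def cache_patch_operations(ttl_seconds, cache_size_gb="0.5"):
    """Build update_stage patch operations that enable the stage cache."""
    if not 0 <= ttl_seconds <= MAX_TTL_SECONDS:
        raise ValueError(f"TTL must be between 0 and {MAX_TTL_SECONDS} seconds")
    return [
        # Provision the cache cluster for the stage.
        {"op": "replace", "path": "/cacheClusterEnabled", "value": "true"},
        {"op": "replace", "path": "/cacheClusterSize", "value": cache_size_gb},
        # "/*/*" applies the method-level settings to every resource and method.
        {"op": "replace", "path": "/*/*/caching/enabled", "value": "true"},
        {"op": "replace", "path": "/*/*/caching/ttlInSeconds",
         "value": str(ttl_seconds)},
    ]


# Applying the operations requires boto3 and AWS credentials.
# The IDs below are placeholders:
# import boto3
# boto3.client("apigateway").update_stage(
#     restApiId="<rest-api-id>",
#     stageName="<stage-name>",
#     patchOperations=cache_patch_operations(300),
# )
```

A shorter TTL lets traffic follow parameter updates more quickly, while a longer TTL keeps a customer on the same model for longer and reduces Lambda invocations.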

3. Add an API Gateway mapping template

When a Lambda handler function is invoked, it can receive the HTTPS request details from API Gateway as an event parameter. To provide a Lambda function with more detailed information, you can enhance the event payload using a mapping template in API Gateway. This template is part of the integration request setup, which defines how incoming requests are mapped to the format that the Lambda function expects.

The specified parameters are then passed to the Lambda function in its event parameter, where the handler can read them.
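As an illustration of how the two pieces fit together, the sketch below pairs a mapping template with a handler that reads the mapped fields. The parameter names `member_id` and `section_code`, the helper `parse_event`, and the response shape are hypothetical and are not taken from LotteON’s code. The template uses the API Gateway `$input.params()` function to copy request parameters into the event.

```python
# Hypothetical mapping template (content type application/json) for the
# integration request. It is shown as a string for reference only.
MAPPING_TEMPLATE = """{
  "member_id": "$input.params('member_id')",
  "section_code": "$input.params('section_code')"
}"""


def parse_event(event):
    """Extract and validate the fields that the mapping template adds."""
    member_id = str(event.get("member_id", "")).strip()
    section_code = str(event.get("section_code", "")).strip()
    if not member_id or not section_code:
        raise ValueError("member_id and section_code are required")
    return member_id, section_code


def lambda_handler(event, context):
    """Lambda entry point; model selection is omitted in this sketch."""
    member_id, section_code = parse_event(event)
    return {"member_id": member_id, "section_code": section_code}
```

Validating the fields at the top of the handler keeps a malformed request from reaching the sampling logic.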

4. Lambda for Dynamic A/B Test

Lambda receives a member ID and section code as event parameter values. The Lambda function uses the received section code to run the MAB algorithm. For a dynamic A/B test, the function gets the model (arm) settings and the aggregated results. After updating the alpha and beta values from the aggregated results according to bTS, it obtains the probability of a click for each model from that model’s beta distribution and returns the model with the maximum value. For example, given model A and model B, where model B has a higher probability of producing a click-through event, model B is returned.

The overall implementation using the bTS algorithm was based on the Dynamic A/B testing for machine learning models with Amazon SageMaker MLOps projects post.

Implementation details 2: Alpha and beta parameter update

A product recommendation list is displayed to the LotteON user. When the user clicks on a specific product in the recommendation list, that data is captured and logged to Amazon S3. As shown in the following figure, LotteON used Amazon EMR to run Spark jobs that periodically pulled the logged data from Amazon S3, processed the data, and inserted the results into MongoDB.

The results generated at this stage play a key role in determining the distribution used in MAB, so the impression and click data were examined in detail.

Note: Before updating the alpha and beta parameters in bTS, verify the integrity and completeness of log data, including impressions and clicks from the recommendation section.
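A check of this kind could be run on the aggregated counts before they are written as alpha and beta. The sketch below is one possible form. The row schema and function name are hypothetical, and the rule that clicks cannot exceed impressions is an assumption that follows from computing beta as impressions minus clicks.

```python
def validate_counts(rows):
    """Split aggregated rows into (valid, rejected) before updating parameters.

    rows: iterable of dicts with model_id, impressions, and clicks
    (an illustrative schema).
    """
    valid, rejected = [], []
    for row in rows:
        impressions = row.get("impressions")
        clicks = row.get("clicks")
        ok = (
            bool(row.get("model_id"))
            and isinstance(impressions, int)
            and isinstance(clicks, int)
            # More clicks than impressions would make beta negative.
            and 0 <= clicks <= impressions
        )
        (valid if ok else rejected).append(row)
    return valid, rejected
```

Rejected rows can be logged and the update skipped for that interval, so that a partial log delivery does not distort the distributions.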

Implementation details 3: Business metrics monitoring

To assess the most effective model, it’s essential to monitor business metrics during A/B testing. For this purpose, a dashboard was developed using Streamlit on an Amazon Elastic Compute Cloud (Amazon EC2) environment.

Streamlit is a Python library that can be used to create web apps for data analysis. LotteON added the Python packages required for the dashboard to the requirements.txt file, specifying Streamlit version 1.14.1, and installed them from that file.

The default port provided by Streamlit is 8501, so you must add an inbound custom TCP rule for port 8501 to the EC2 instance’s security group to allow browser access to the Streamlit app.

When setup is complete, use the streamlit run pythoncode.py command in the terminal, where pythoncode.py is the Python script containing the Streamlit code to run the application. This command launches the Streamlit web interface for the specified application.

LotteON created a dashboard based on Streamlit. The dashboard monitors simple business metrics, such as each model’s trend over time and the daily and real-time winning models, as shown in the following figure.

The dashboard allowed LotteON to analyze the business metrics of the model and check the service status in real time. It also monitored the effectiveness of model version updates and reduced the time to check the service impact of the retraining pipeline.
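A dashboard of this kind can be quite small. The following sketch computes a cumulative CTR series per model and plots it with Streamlit’s `st.line_chart`. The data layout and function names are assumptions, and this is not LotteON’s dashboard code. Streamlit is imported inside `render` so that the helper function has no dependency on it.

```python
def cumulative_ctr(hourly):
    """Return the running CTR per model.

    hourly maps model_id -> list of (impressions, clicks) per hour
    (an illustrative layout).
    """
    result = {}
    for model_id, rows in hourly.items():
        shown = clicked = 0
        series = []
        for impressions, clicks in rows:
            shown += impressions
            clicked += clicks
            # Guard against division by zero before any impressions arrive.
            series.append(clicked / shown if shown else 0.0)
        result[model_id] = series
    return result


def render(hourly):
    """Draw the chart; intended to be called from a Streamlit script."""
    import streamlit as st  # lazy import: only needed when rendering

    st.title("Dynamic A/B test: cumulative CTR")
    # One line per model; each list must have the same length.
    st.line_chart(cumulative_ctr(hourly))
```

Calling `render(...)` at the bottom of pythoncode.py with data loaded from the metrics store, and then running the streamlit run command described earlier, would display the chart.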

The following shows an enlarged view of the cumulative CTR of the two models (EXP-01-APS002-01 model A, EXP-01-NCF-01 model B) on the testing day. Let’s take a look at each model to see what that means. Model A provided customers with 29,274 recommendation lists that received 1,972 product clicks and generated a CTR of 6.7 percent (1,972/29,274).

Model B, on the other hand, served 7,390 recommendation lists, received 430 product clicks, and generated a CTR of 5.8 percent (430/7,390). The alpha and beta parameters of each model, the number of clicks and the number of non-clicks respectively, were used to set its beta distribution. Model A’s alpha parameter was 1,972 (number of clicks) and its beta parameter was 27,302 (number of non-clicks [29,274 – 1,972]). Model B’s alpha parameter was 430 (number of clicks) and its beta parameter was 6,960 (number of non-clicks [7,390 – 430]). The larger the X-axis value at the peak of a model’s beta distribution, the better that model’s performance (CTR).

In the following figure, model A (EXP-01-APS002-01) shows better performance because it’s further to the right in relation to the X axis. This is also consistent with the CTR rates of 6.7 percent and 5.8 percent.

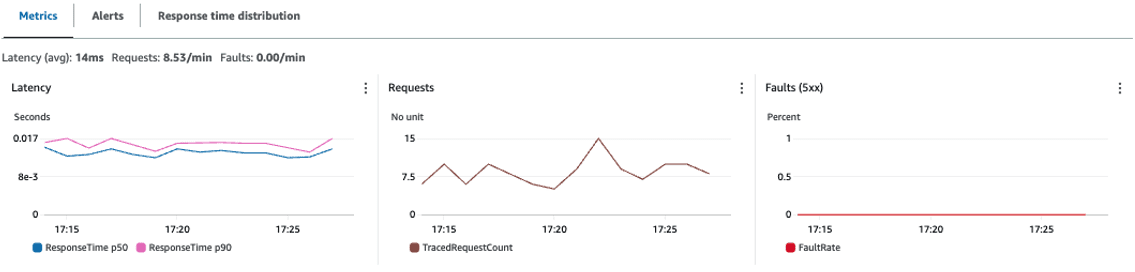
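The comparison in the figure can be approximated numerically from the impressions and clicks quoted for each model. The sketch below computes each distribution’s mean and a Monte Carlo estimate of the probability that model A’s CTR exceeds model B’s. The function names, the number of draws, and the fixed seed are choices made for illustration.

```python
import random


def beta_mean(alpha, beta):
    """Mean of a beta distribution, which equals the observed CTR here."""
    return alpha / (alpha + beta)


def prob_a_beats_b(a, b, draws=20000, seed=0):
    """Estimate P(CTR_A > CTR_B) by sampling both beta distributions."""
    rng = random.Random(seed)
    wins = sum(
        rng.betavariate(*a) > rng.betavariate(*b) for _ in range(draws)
    )
    return wins / draws


# (alpha, beta) = (clicks, impressions - clicks), from the counts quoted above.
model_a = (1972, 29274 - 1972)
model_b = (430, 7390 - 430)

print(f"mean CTR A: {beta_mean(*model_a):.3f}")
print(f"mean CTR B: {beta_mean(*model_b):.3f}")
print(f"P(A > B):   {prob_a_beats_b(model_a, model_b):.3f}")
```

The means reproduce the 6.7 percent and 5.8 percent CTRs. The estimated probability comes out above 0.99, which matches model A’s curve sitting further to the right with little overlap.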

Implementation details 4: System operation monitoring with CloudWatch and AWS X-Ray

You can enable CloudWatch settings, custom access logging, and AWS X-Ray tracing features from the Logs/Tracing tab in the API Gateway menu.

CloudWatch settings and custom access logging

In the configuration step, you can change the CloudWatch Logs type to set the logging level. After enabling detailed metrics, you can check metrics such as the number of 400 errors and 500 errors. By enabling custom access logs, you can check which IP addresses accessed the API and how.

Additionally, the retention period for CloudWatch Logs must be specified separately on the CloudWatch page to avoid storing them indefinitely.

If you select API Gateway from the CloudWatch Explorer list, you can view the number of API calls, latency, and cache hits and misses on a dashboard. Calculate the cache hit rate with the following formula to check the effectiveness of the cache.

- Cache Hit Rate = CacheHitCount / (CacheHitCount + CacheMissCount)

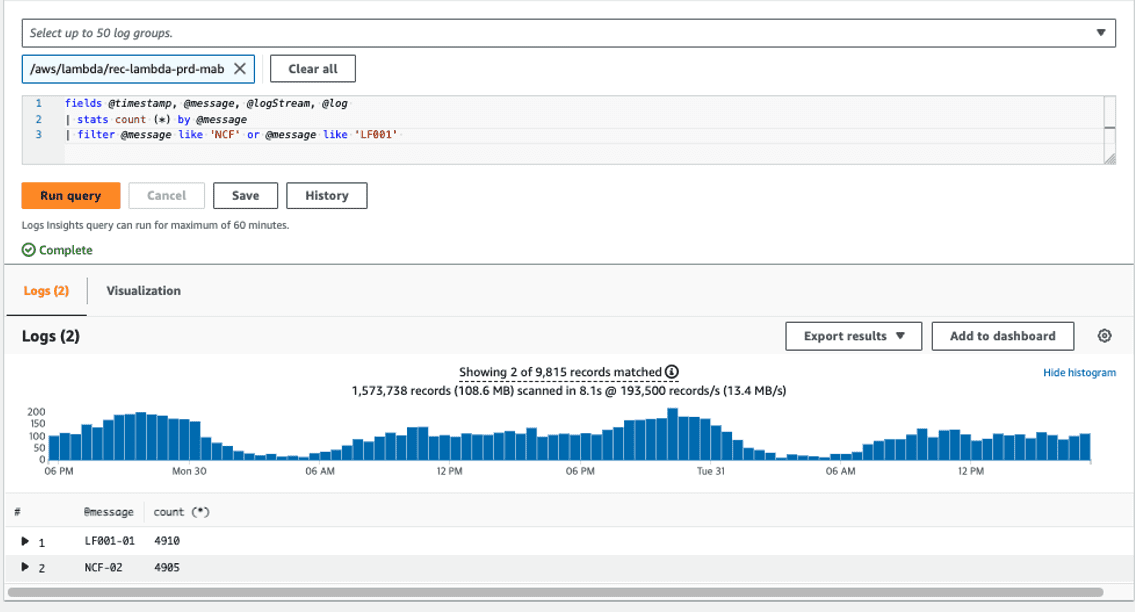
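The cache hit rate formula above can be applied directly to the CacheHitCount and CacheMissCount metrics. In this sketch, `sum_datapoints` assumes a response shaped like the output of the CloudWatch `get_metric_statistics` call with the Sum statistic. Both function names are hypothetical.

```python
def sum_datapoints(response):
    """Total the Sum statistic across the datapoints of a metric response."""
    return sum(dp["Sum"] for dp in response.get("Datapoints", []))


def cache_hit_rate(hit_count, miss_count):
    """CacheHitCount / (CacheHitCount + CacheMissCount); 0.0 with no traffic."""
    total = hit_count + miss_count
    return hit_count / total if total else 0.0


# Example with made-up responses for the two metrics:
hits = sum_datapoints({"Datapoints": [{"Sum": 600.0}, {"Sum": 300.0}]})
misses = sum_datapoints({"Datapoints": [{"Sum": 100.0}]})
print(f"cache hit rate: {cache_hit_rate(hits, misses):.1%}")
```

A low hit rate relative to the configured TTL can indicate that the cache key parameters vary more than expected across requests.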

By selecting Lambda as the log group in the CloudWatch Logs Insights menu, you can verify the actual model code returned by Lambda, where MAB is performed, to check whether the sampling logic is working and branch processing is being performed.

As shown in the preceding image, LotteON observed how often the two models were called by the Lambda function during the A/B test. Specifically, the model labeled LF001-01 (the champion model) was invoked 4,910 times, while the model labeled NCF-02 (the challenger model) was invoked 4,905 times. These numbers represent the degree to which each model was selected in the experiment.

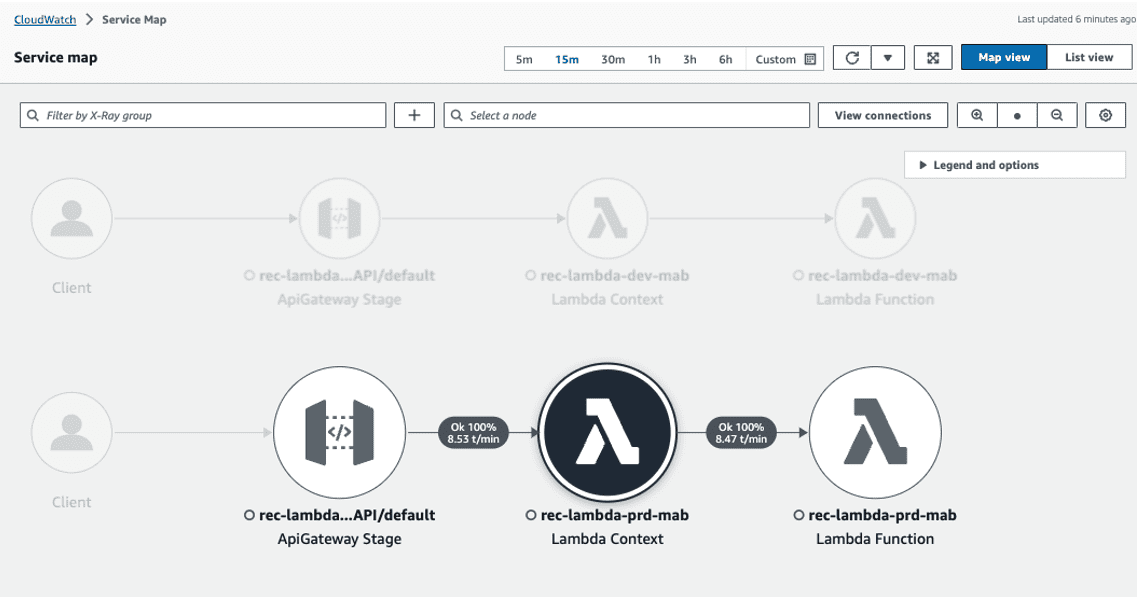

AWS X-Ray

If you enable the X-Ray trace feature, trace data is sent from the enabled AWS service to X-Ray and the visualized API service flow can be monitored from the service map menu in the X-Ray section of the CloudWatch page.

As shown in the preceding figure, you can easily track and monitor latency, the number of calls, and the count of each HTTP status for each service section by choosing the API Gateway icon and each Lambda node.

There was no need to store performance metrics for a long time because most Lambda function metrics are analyzed within a week and aren’t used afterward. Because data from X-Ray is stored for 30 days by default, which is enough time to use the metrics, the data was used without changing the storage cycle. (For more information, see the AWS X-Ray FAQs.)

Conclusion

In this post, we explained how LotteON built and uses a dynamic A/B testing environment. Through this project, LotteON was able to test model performance online in various ways by combining dynamic A/B testing with the MAB function. The environment supports comparing different types of recommendation models as well as different versions of the same model, which makes online testing easier.

In addition, data scientists could concentrate on improving model performance and training because they could check business metrics and system monitoring instantly. The dynamic A/B testing system was initially developed and applied to the LotteON main page, and then expanded to the main page recommendation tab and product detail recommendation section. Because the system is able to evaluate online performance without significantly reducing the click-through rate of existing models, we have been able to conduct more experiments without impacting users.

Dynamic A/B Test exercises can also be found in AWS Workshop – Dynamic A/B Testing on Amazon Personalize & SageMaker.

About the Authors

HyeKyung Yang is a research engineer in the Lotte E-commerce Recommendation Platform Development Team and is in charge of developing ML/DL recommendation models by analyzing and utilizing various data and developing a dynamic A/B test environment.

Jieun Lim is a data engineer in the Lotte E-commerce Recommendation Platform Development Team and is in charge of operating LotteON’s personalized recommendation system and developing personalized recommendation models and dynamic A/B test environments.

SeungBum Shim is a data engineer in the Lotte E-commerce Recommendation Platform Development Team, responsible for discovering ways to use and improve recommendation-related products through LotteON data analysis, and developing MLOps pipelines and ML/DL recommendation models.

Jesam Kim is an AWS Solutions Architect and helps enterprise customers adopt and troubleshoot cloud technologies and provides architectural design and technical support to address their business needs and challenges, especially in AIML areas such as recommendation services and generative AI.

Gonsoo Moon is an AWS AI/ML Specialist Solutions Architect and provides AI/ML technical support. His main role is to collaborate with customers to solve their AI/ML problems based on various use cases and production experience in AI/ML.
