Read graphs, diagrams, tables, and scanned pages using multimodal prompts in Amazon Bedrock

Large language models (LLMs) have progressed from reading only text to reading and understanding graphs, diagrams, tables, and images. In this post, we discuss how to use LLMs from Amazon Bedrock to not only extract text, but also understand the information available in images.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API. It also provides a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Solution overview

In this post, we demonstrate how to use models on Amazon Bedrock to retrieve information from images, tables, and scanned documents. We provide the following examples:

- Performing object classification and object detection tasks

- Reading and querying graphs

- Reading flowcharts and architecture diagrams (such as an AWS architecture diagram) and converting them to text

We also explore specific use cases where Amazon Bedrock can help you generate information from an image:

- Identify the action in the image

- Name a product and extract metadata to generate a tagline and description

- Generate a real estate listing for a property

- Generate a recipe from an image of a dish

Perform object classification and object detection tasks using an LLM

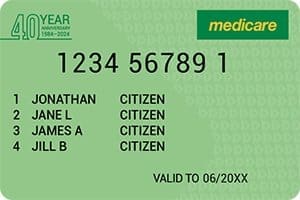

At the time of writing, the best algorithms for object classification and detection are based on convolutional neural networks. However, LLMs such as Anthropic’s Claude 3 Sonnet on Amazon Bedrock can also perform these tasks using zero-shot prompting, a technique in which you give the model a task without providing examples or training for that specific task. In the following example, we show how to classify an image based on the classes that we pass in the prompt. The following image is a sample Australian Medicare card.

We use the following prompt with Anthropic’s Claude 3 Sonnet to classify the image:

This is one of the documents that a customer seeking a loan has uploaded. The image can belong to one of the following four classes : ID, bank statement, application form or utility bill. Identify what the class of this document is and give me just the class name. I don’t need any other information for now

We get the following response from the LLM:

Based on the image provided, the class of this document appears to be an ID card or identification document. The image shows what seems to be a Medicare card or similar government-issued ID card displaying names and an identification number.

It has correctly identified the class; however, a response in JSON would make it more straightforward to process that response. We add the following to the end of the prompt:

provide the response in json format with the key as “class” and the value as the class of the document

We get the following response:

{
  "class": "ID"
}

You can now read the JSON response using a library of your choice, such as the Python JSON library.
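As a minimal sketch of that step, the following Python uses the standard json module to pull the JSON object out of the model's reply and check the value against the four classes listed in the prompt. The function names and the case-insensitive matching are our own illustrative choices, not part of Amazon Bedrock. The scan for the first decodable object is there because a model may wrap the JSON in extra prose.

```python
import json
import re

# The four classes named in the classification prompt
ALLOWED_CLASSES = ("ID", "bank statement", "application form", "utility bill")


def extract_json_object(model_text: str) -> dict:
    """Return the first JSON object found anywhere in the model's reply."""
    decoder = json.JSONDecoder()
    # Try decoding from every "{" until one yields a complete object.
    for match in re.finditer(r"\{", model_text):
        try:
            obj, _ = decoder.raw_decode(model_text, match.start())
        except json.JSONDecodeError:
            continue
        if isinstance(obj, dict):
            return obj
    raise ValueError("no JSON object found in model reply")


def parse_document_class(model_text: str) -> str:
    """Read the "class" key and map it onto one of the allowed classes."""
    data = extract_json_object(model_text)
    label = str(data.get("class", "")).strip()
    for allowed in ALLOWED_CLASSES:
        if label.lower() == allowed.lower():
            return allowed
    raise ValueError(f"unexpected class: {label!r}")


if __name__ == "__main__":
    print(parse_document_class('{\n"class": "ID"\n}'))
```

Rejecting values outside the allowed list gives downstream code a fixed vocabulary to branch on, even if the model phrases its answer slightly differently.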

In this next example, we use Meta’s Llama 3.2 90B Vision model to identify an object in an image. The following image is of a gearbox.

We use the following prompt to identify the gearbox from the image:

identify the entire machine part in this image. Give just the name of the machine part in a JSON format with the key as “name” and the value as the name of the machine part

The following screenshot shows the prompt and the answer the model gives.
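Outside the console, an image and a prompt like the ones in this section can also be sent programmatically. The following is a hedged sketch that uses the Converse API through boto3. The model IDs, the Region, and the helper names are assumptions for illustration, so confirm which model IDs or inference profiles are enabled in your account before using them.

```python
from pathlib import Path

# Assumed model identifiers; verify them for your account and Region.
CLAUDE_3_SONNET = "anthropic.claude-3-sonnet-20240229-v1:0"
LLAMA_3_2_90B_VISION = "us.meta.llama3-2-90b-instruct-v1:0"

# File extensions mapped to the image formats the Converse API accepts
IMAGE_FORMATS = {".png": "png", ".jpg": "jpeg", ".jpeg": "jpeg",
                 ".gif": "gif", ".webp": "webp"}


def build_image_message(image_bytes: bytes, image_format: str, prompt: str) -> dict:
    """Build one user turn containing an image block followed by a text block."""
    return {
        "role": "user",
        "content": [
            {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
            {"text": prompt},
        ],
    }


def ask_about_image(image_path: str, prompt: str,
                    model_id: str = CLAUDE_3_SONNET,
                    region: str = "us-east-1") -> str:
    """Send the image and prompt to the model and return the text of its reply."""
    import boto3  # imported lazily so build_image_message works without the SDK

    path = Path(image_path)
    image_format = IMAGE_FORMATS[path.suffix.lower()]
    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(
        modelId=model_id,
        messages=[build_image_message(path.read_bytes(), image_format, prompt)],
        inferenceConfig={"maxTokens": 512, "temperature": 0},
    )
    return response["output"]["message"]["content"][0]["text"]
```

For example, calling ask_about_image("gearbox.png", prompt, model_id=LLAMA_3_2_90B_VISION) would send a local file (the file name here is hypothetical) to the Llama model, assuming AWS credentials and model access are already configured.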

Read and query graphs

In this next example, we show how to read a graph and then interpolate and extrapolate values in the graph. The following graph shows the population growth for a few countries (source).

We pass this image to Anthropic’s Claude 3 Sonnet along with the following prompt:

From this graph create a table for the population of India, with the first column as the year and the second column as the population in millions. Create a row for every 5 years starting from 1950 to 2025.

We ask it to create a row for every 5 years, so the model must interpolate values. We also ask it to extend the table until 2025, and because the data is only until 2021, the model will have to extrapolate the values. We get the following response:

Here is a table for the population of India, with the first column as the year and the second column as the population in millions, for every 5 years starting from 1950 to 2025:

| Year | Population (in millions) |
|------|--------------------------|
| 1950 | 357.6 |
| 1955 | 390.5 |
| 1960 | 427.8 |
| 1965 | 468.0 |
| 1970 | 510.9 |
| 1975 | 556.9 |
| 1980 | 605.7 |
| 1985 | 658.0 |
| 1990 | 713.8 |
| 1995 | 773.0 |
| 2000 | 835.6 |
| 2005 | 901.7 |
| 2010 | 971.5 |
| 2015 | 1045.0 |
| 2020 | 1122.0 |
| 2025 | 1202.4 |

The extrapolation may not be accurate, and this is where a more traditional machine learning (ML) approach would be helpful.
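One simple way to cross-check the extrapolated row is to parse the table the model returned and fit a trend to the observed years yourself. The following sketch does this with an ordinary least-squares line in plain Python. The choice of a linear fit over the rows from 2005 to 2020 is our assumption for illustration, and a real forecast would likely call for a more careful model.

```python
# Last rows of the table returned by the model (values copied from the response)
TABLE_EXCERPT = """
| Year | Population (in millions) |
|------|--------------------------|
| 2005 | 901.7 |
| 2010 | 971.5 |
| 2015 | 1045.0 |
| 2020 | 1122.0 |
| 2025 | 1202.4 |
"""


def parse_markdown_table(table: str) -> list[tuple[int, float]]:
    """Turn a two-column Markdown table into (year, value) pairs."""
    rows = []
    for line in table.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if len(cells) != 2:
            continue
        try:
            rows.append((int(cells[0]), float(cells[1])))
        except ValueError:
            continue  # header or separator row
    return rows


def fit_line(points: list[tuple[int, float]]) -> tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def extrapolate(points: list[tuple[int, float]], year: int) -> float:
    """Predict the value for a year from a line fitted to the given points."""
    slope, intercept = fit_line(points)
    return slope * year + intercept


if __name__ == "__main__":
    rows = parse_markdown_table(TABLE_EXCERPT)
    observed = [r for r in rows if r[0] <= 2020]  # the graph's data ends in 2021
    print(round(extrapolate(observed, 2025), 1), "vs model value", dict(rows)[2025])
```

With these four rows, the fitted line gives roughly 1,194 million for 2025 against the model's 1,202.4, which shows how far a plain trend line and the LLM's estimate can drift apart.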

You can also ask the model to combine its own knowledge with the information in the graph. The following prompt asks for the 2021 population of each country in this graph, along with each country’s capital:

Can you create a table for 2021 with the first column as the country name, the second column as population in millions and third column as the capital of that country?

The following screenshot shows the response.

The LLM has filled in the table based on the graph and its own knowledge about the capital of each country.

You can try out something harder as well. The following image is a weather map from the

You can ask the LLM a specific question, such as the following:

This is a precipitation map for Australia. Is it raining in Sydney right now?

You don’t have to tell the LLM where Sydney is or that the image is for rainfall.

We get the following response:

Based on the precipitation map shown, there does not appear to be any significant rainfall occurring over the Sydney area, which is located on the eastern coast of the Australian continent. The map uses contour lines and colors to represent precipitation levels, but the region around Sydney has no shaded areas or contour lines indicating rainfall at this particular timeframe depicted by the map. Therefore, the available data suggests that it is likely not raining in Sydney at the time represented by this precipitation map.

There are a couple of interesting observations from this use case:

- Anthropic’s Claude 3 Sonnet can read maps

- The model is able to read overlays on a map

- Phrases such as “region around Sydney” show that the model doesn’t need to work with exact information but can use an approximation, just as humans do

Read flowcharts and architecture diagrams

You can read AWS architecture diagrams using the Meta Llama 3.2 90B Vision model. The following is an example architecture diagram for modernizing applications with microservices using Amazon Elastic Kubernetes Service (Amazon EKS).

We use the following prompt to read this diagram:

The steps in this diagram are explained using numbers 1 to 11. The numbers are shown in blue squares. Can you explain the diagram using the numbers 1 to 11 and an explanation of what happens at each of those steps?

The following screenshot shows the response that we get from the LLM (truncated for brevity).

Furthermore, you can use this diagram to ask follow-up questions:

Why do we need a network load balancer in this architecture

The following screenshot shows the response from the model.

As you can see, the LLM acts as your advisor now for questions related to this architecture.
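When you script this kind of follow-up instead of using the console, the earlier turns have to be sent again with each request, because the Converse API takes the whole conversation as its messages list. The following sketch shows one way to keep that history. Here client is assumed to be a boto3 bedrock-runtime client, and the helper names and the maxTokens value are illustrative.

```python
def add_text_turn(messages: list, role: str, text: str) -> list:
    """Return a new history with one more text-only turn appended."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unknown role: {role!r}")
    # Assumption: the conversation must alternate between user and assistant turns.
    if messages and messages[-1]["role"] == role:
        raise ValueError("user and assistant turns are expected to alternate")
    return messages + [{"role": role, "content": [{"text": text}]}]


def ask_follow_up(client, model_id: str, history: list, question: str):
    """Ask a follow-up question about an image that is already in the history.

    history holds the first user turn (image plus prompt) and the model's
    reply. Returns the updated history and the text of the new answer.
    """
    messages = add_text_turn(history, "user", question)
    response = client.converse(
        modelId=model_id,
        messages=messages,
        inferenceConfig={"maxTokens": 1024},
    )
    reply = response["output"]["message"]
    return messages + [reply], reply["content"][0]["text"]
```

Because the diagram stays in the first user turn, a question such as the load balancer one above can be asked without uploading the image again.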

However, we’re not limited to using generative AI for only software engineering. You can also read diagrams and images from engineering, architecture, and healthcare.

For this example, we use a process diagram taken from Wikipedia.

To find out what this process diagram is for and to describe the process, you can use the following prompt:

Can you name the process shown in the example. Also describe the process using numbered steps and go from left to right.

The following screenshot shows the response.

The LLM has done a good job figuring out that the diagram is for the Haber process to produce ammonia. It also describes the steps of the process.

Identify actions in an image

You can identify and classify the actions taking place in an image. The model identifies actions more accurately because it also analyzes contextual information, such as the surrounding objects, the environment, and the positions of individuals or entities within the image. By combining these visual cues and contextual elements, Anthropic’s Claude 3 Sonnet can make informed decisions about the nature of the actions being performed and give a fuller description of the scene.

The following is an example where we can not only classify the action of the player but also provide feedback to the player comparing the action to a professional player.

We provide the model the following image of a tennis player. The image was generated using the Stability AI (SDXL 1.0) model on Amazon Bedrock.

The following screenshot shows the prompt and the model’s response.

Name a product and extract metadata to generate a tagline and description

In the field of marketing and product development, coming up with a perfect product name and creative promotional content can be challenging. With the image-to-text capabilities of Anthropic’s Claude 3 Sonnet, you can upload the image of the product and the model can generate a unique product name and craft taglines to suit the target audience.

For this example, we provide the following image of a sneaker to the model (the image was generated using the Stability AI (SDXL 1.0) model on Amazon Bedrock).

The following screenshot shows the prompt.

The following screenshot shows the model’s response.

In the retail and ecommerce domain, you can also use Anthropic’s Claude 3 Sonnet to extract detailed product information from the images for inventory management.

For example, we use the prompt shown in the following screenshot.

The following screenshot shows the model’s response.

Create a real estate listing for a property

You can upload images of a property floor plan and pictures of the interior and exterior of the house and then get a description to use in a real estate listing. This can increase the creativity and productivity of real estate agents when they advertise properties. Architects could also use this mechanism to explain a floor plan to customers.

We provide the following example floor plan to the model.

The following screenshot shows the prompt.

The following screenshot shows the response.
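If you want to send the floor plan together with interior and exterior photos from code, one option is to place several image blocks in a single user message. The following sketch builds such a message in the Converse API format. The function name and the "Image N" labels are our own illustrative choices, and limits on image count and size vary by model, so treat this as a starting point.

```python
from pathlib import Path

# File extensions mapped to the image formats the Converse API accepts
IMAGE_FORMATS = {".png": "png", ".jpg": "jpeg", ".jpeg": "jpeg",
                 ".gif": "gif", ".webp": "webp"}


def build_listing_message(image_paths: list, prompt: str) -> dict:
    """Build one user turn holding several labeled images followed by the prompt."""
    content = []
    for index, image_path in enumerate(image_paths, start=1):
        path = Path(image_path)
        image_format = IMAGE_FORMATS.get(path.suffix.lower())
        if image_format is None:
            raise ValueError(f"unsupported image type: {path.name}")
        # A short text label before each image lets the prompt refer to it by number.
        content.append({"text": f"Image {index}: {path.name}"})
        content.append(
            {"image": {"format": image_format, "source": {"bytes": path.read_bytes()}}}
        )
    content.append({"text": prompt})
    return {"role": "user", "content": content}
```

The returned dictionary can be passed as the only element of the messages list in a converse call on a boto3 bedrock-runtime client.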

Generate a recipe from the image of a dish

You can also use Anthropic’s Claude 3 Sonnet to create a recipe based on a picture of a dish. However, out of the box, the model can identify only dishes that are represented in its training data. Factors such as ingredient substitutions, cooking techniques, and cultural variations in cuisine can pose significant challenges.

For example, we provide the following image of a cake to the model to extract the recipe. The image was generated using the Stability AI model (SDXL 1.0) on Amazon Bedrock.

The following screenshot shows the prompt.

The model successfully identifies the dish as Black Forest cake and creates a detailed recipe. The recipe may not reproduce the exact cake shown in the image, but it does get close to a Black Forest cake.

Conclusion

FMs such as Anthropic’s Claude 3 Sonnet and Meta’s Llama 3.2 90B Vision, available on Amazon Bedrock, have demonstrated impressive capabilities in image processing. These FMs unlock a range of powerful features, including image classification, optical character recognition (OCR), and the ability to interpret complex visuals such as graphs and architectural blueprints. Such innovations offer novel solutions to challenging problems, from searching through scanned document archives to generating image-inspired text content and converting visual information into structured data.

To start using these capabilities for your specific needs, we recommend exploring the chat playground feature on Amazon Bedrock, which allows you to interact with and extract information from images.

About the Authors

Mithil Shah is a Principal AI/ML Solution Architect at Amazon Web Services. He helps commercial and public sector customers use AI/ML to achieve their business outcomes. He is currently helping customers build chatbots and search functionality using LLM agents and RAG.


Santosh Kulkarni is a Senior Solutions Architect at Amazon Web Services specializing in AI/ML. He is passionate about generative AI and is helping customers unlock business potential and drive actionable outcomes with machine learning at scale. Outside of work, he enjoys reading and traveling.

