Design multi-agent orchestration with reasoning using Amazon Bedrock and open source frameworks

As generative AI capabilities evolve, successful business adoption hinges on the development of robust problem-solving capabilities. At the forefront of this transformation are agentic systems, which harness the power of foundation models (FMs) to tackle complex, real-world challenges. By seamlessly integrating multiple agents, these innovative solutions enable autonomous collaboration, decision-making, and efficient problem-solving in diverse environments. Empirical research conducted by Amazon Web Services (AWS) scientists in conjunction with academic researchers has demonstrated significant strides in enhancing the reasoning capabilities of agents through collaboration on competitive tasks.

This post provides step-by-step instructions for creating a collaborative multi-agent framework with reasoning capabilities to decouple business applications from FMs. It demonstrates how to combine Amazon Bedrock Agents with open source multi-agent frameworks, enabling collaborations and reasoning among agents to dynamically execute various tasks. The exercise will guide you through the process of building a reasoning orchestration system using Amazon Bedrock, Amazon Bedrock Knowledge Bases, Amazon Bedrock Agents, and FMs. We also explore the integration of Amazon Bedrock Agents with open source orchestration frameworks LangGraph and CrewAI for dispatching and reasoning.

AWS has introduced a multi-agent collaboration capability for Amazon Bedrock, enabling developers to build, deploy, and manage multiple AI agents working together on complex tasks. This feature allows for the creation of specialized agents that handle different aspects of a process, coordinated by a supervisor agent that breaks down requests, delegates tasks, and consolidates outputs. This approach improves task success rates, accuracy, and productivity, especially for complex, multi-step tasks.
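To make the supervisor pattern concrete, the following is a framework-agnostic sketch written for illustration only. It is not the Amazon Bedrock multi-agent collaboration API: the `Supervisor` class, its keyword-based `decompose` step, and the two specialist lambdas are hypothetical stand-ins for FM-driven agents. It shows the three supervisor responsibilities named above: breaking a request down, delegating subtasks, and consolidating outputs.

```python
from typing import Callable, Dict, List, Tuple

# A specialist agent is modeled as a function from a subtask to a result string.
Specialist = Callable[[str], str]


class Supervisor:
    """Hypothetical supervisor: breaks a request down, delegates, and consolidates."""

    def __init__(self, specialists: Dict[str, Specialist]):
        self.specialists = specialists

    def decompose(self, request: str) -> List[Tuple[str, str]]:
        # Stand-in for FM-based planning: split the request on " and " and route
        # each part to the first specialist whose name appears in it.
        subtasks = []
        for part in request.split(" and "):
            part = part.strip()
            for name in self.specialists:
                if name in part:
                    subtasks.append((name, part))
                    break
        return subtasks

    def run(self, request: str) -> str:
        # Delegate each subtask to its specialist, then consolidate the outputs.
        results = [self.specialists[name](task) for name, task in self.decompose(request)]
        return "\n".join(results)


supervisor = Supervisor({
    "flight": lambda task: f"[flight agent] handled: {task}",
    "hotel": lambda task: f"[hotel agent] handled: {task}",
})
print(supervisor.run("book a flight to Seattle and reserve a hotel downtown"))
```

In a real system the decomposition and consolidation steps would themselves be FM calls; the keyword routing here only keeps the sketch deterministic.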

For the example code and demonstration discussed in this post, refer to the agentic-orchestration GitHub repository and this AWS Workshop. You can also refer to the GitHub repo for Amazon Bedrock multi-agent collaboration code samples.

Key characteristics of an agentic service

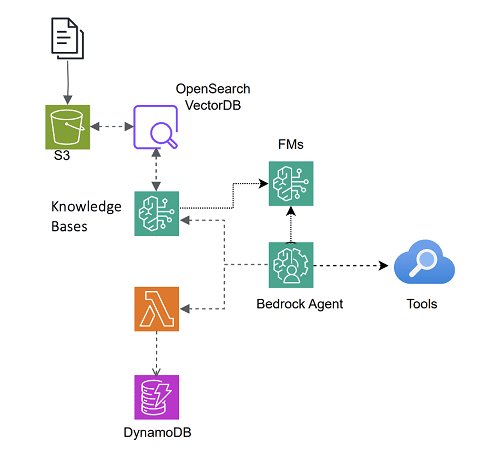

In the context of generative AI, an “agent” is an autonomous function that can interact with its environment, gather data, and make decisions to execute complex tasks and achieve predefined goals. Generative AI agents are autonomous, goal-oriented systems that use FMs, such as large language models (LLMs), to interact with and adapt to their environments. These agents excel in planning, problem-solving, and decision-making, using techniques such as chain-of-thought prompting to break down complex tasks. They can self-reflect, improve their processes, and expand their capabilities through tool use and collaboration with other AI models. These agents can operate independently or collaboratively, executing tasks across various domains while continuously adapting to new information and changing circumstances. Agents can boost creativity and produce content at scale, and by automating repetitive tasks they free humans to focus on strategic work, which can lead to cost savings. The following diagram shows the high-level architecture of the solution.

To implement an agent on AWS, you can use the Amazon Bedrock Agents Boto3 client, as demonstrated in the following code example. After the required AWS Identity and Access Management (IAM) role is created for the agent, call the create_agent API. This API requires an agent name, an FM identifier, and an instruction string. Optionally, you can also provide an agent description. The newly created agent is not yet prepared for use; it must be prepared (with the prepare_agent API) before it can be invoked. We focus on preparing the agent and then using it to invoke actions and interact with other APIs. Use the following code example to obtain your agent ID, which you need for all subsequent operations on the agent.

```python
# Use the Python boto3 SDK to interact with the Amazon Bedrock Agents service
import boto3

bedrock_agent_client = boto3.client('bedrock-agent')

# Create a new Amazon Bedrock agent; replace the <...> placeholders with your own values
response = bedrock_agent_client.create_agent(
    agentName='<agent-name>',  # customized text string
    agentResourceRoleArn='<agent-role-arn>',  # IAM role assigned to the agent
    description='<agent-description>',  # customized text string
    idleSessionTTLInSeconds=1800,
    foundationModel='<foundation-model-id>',  # e.g. "anthropic.claude-3-sonnet-20240229-v1:0"
    instruction='<agent-instruction>',  # agent instruction text string
)

# The agent ID is required for later operations on the agent, such as prepare_agent
agent_id = response['agent']['agentId']
```

Multi-agent pipelines for inter-agent collaboration

Multi-agent pipelines are orchestrated processes within AI systems that involve multiple specialized agents working together to accomplish complex tasks. Within a pipeline, agents are arranged in sequential order, with different agents handling specific subtasks or roles within the overall workflow. Agents interact with each other, often through a shared “scratchpad” or messaging system, allowing them to exchange information and build upon each other’s work. Each agent maintains its own state, which can be updated with new information as the flow progresses. Complex projects are broken down into manageable subtasks, which are then distributed among the specialized agents. The workflow includes clearly defined processes for how tasks should be orchestrated, facilitating efficient task distribution and alignment with objectives. These processes can govern both inter-agent interactions and intra-agent operations (such as how an agent interacts with tools or processes outputs). Agents can be assigned specific roles (for example, retriever or injector) to tackle different aspects of a problem.
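The shared-scratchpad idea can be sketched in a few lines of plain Python. This is a minimal illustration, assuming each agent is a function that reads the shared dictionary and returns the keys it wants to add; `planner`, `writer`, and `editor` are hypothetical placeholders for FM-backed agents and are not CrewAI APIs.

```python
from typing import Callable, Dict, List

Scratchpad = Dict[str, str]
PipelineAgent = Callable[[Scratchpad], Scratchpad]


def planner(pad: Scratchpad) -> Scratchpad:
    # Reads the topic and writes an outline for the next agent.
    return {"outline": f"Outline for {pad['topic']}: intro, key points, call to action"}


def writer(pad: Scratchpad) -> Scratchpad:
    # Builds on the planner's output.
    return {"draft": f"Draft based on [{pad['outline']}]"}


def editor(pad: Scratchpad) -> Scratchpad:
    # Polishes the writer's draft.
    return {"final": pad["draft"].replace("Draft", "Edited article")}


def run_pipeline(agents: List[PipelineAgent], pad: Scratchpad) -> Scratchpad:
    # Agents run strictly in order; each agent's updates are merged into the
    # shared scratchpad so that later agents can read earlier results.
    for agent in agents:
        pad = {**pad, **agent(pad)}
    return pad


result = run_pipeline([planner, writer, editor], {"topic": "multi-agent systems"})
print(result["final"])
```

Because the order is fixed in the list, there is no way for the editor to send work back to the writer; that limitation is what motivates the graph-based approach.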

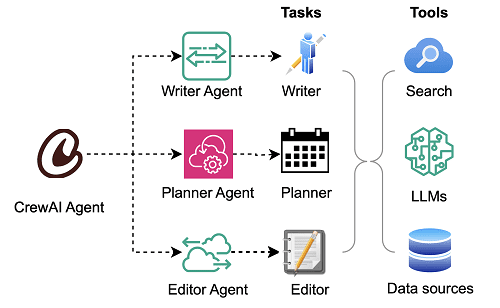

As a practical example, consider a multi-agent pipeline for blog writing, implemented with the multi-agent framework CrewAI. To create a multi-agent pipeline with CrewAI, first define the individual agents that will participate in the pipeline. The agents in the following example are a Planner Agent, a Writer Agent, and an Editor Agent. Next, arrange these agents into a pipeline, specifying the order of task execution and how data flows between them. CrewAI provides mechanisms for agents to pass information to each other and coordinate their actions. The modular and scalable design of CrewAI makes it well suited for developing both simple and sophisticated multi-agent AI applications. The following diagram shows this multi-agent pipeline.

```python
from crewai import Agent, Task, Crew, Process

# Create a blog writing multi-agent pipeline, which is composed of a planner, a writer, and an editor agent
# This code snippet shows only the planner agent, which calls web search tools
# and Amazon Bedrock for the LLM
class blogAgents():
    def __init__(self, topic, model_id):
        self.topic = topic
        self.model_id = model_id

    def planner(self, topic, model_id):
        return Agent(
            role="Content Planner",
            goal=f"""Plan engaging and factually accurate content on {topic}.""",
            backstory=f"""You're working on planning a blog article about the topic: {topic}.\n
You collect information by searching the web for the latest developments that directly relate to the {topic}.\n
You help the audience learn something to make informed decisions regarding {topic}.\n
Your work is the basis for the Content Writer to write an article on this {topic}.""",
            allow_delegation=False,
            tools=[],  # placeholder: the web search tool(s), elided in the original
            llm=None,  # placeholder: the Amazon Bedrock LLM for model_id, elided in the original
            verbose=True,
        )

    # ... writer and editor agents (elided)


# Create the associated blog agent tasks, which are composed of planner, writer, and editor tasks
# This code snippet shows only the planner task
class blogTasks():
    def __init__(self, topic, model_id):
        self.topic = topic
        self.model_id = model_id

    def plan(self, planner, topic, model_id):
        return Task(
            description=(
                f"""1. Prioritize the latest trends, key players, and noteworthy news on {topic}.\n
2. Identify the target audience, considering their interests and pain points.\n
3. Develop a detailed content outline including an introduction, key points, and a call to action.\n
4. Include SEO keywords and relevant data or sources."""
            ),
            expected_output=f"""Convey the latest developments on the {topic} with sufficient depth as a domain expert.\n
Create a comprehensive content plan document with an outline, audience analysis,
SEO keywords, and resources.""",
            agent=planner,
        )

    # ... write and edit tasks (elided)


# Define planner agent and planning task. This runs inside a method of the calling
# class (hence self.topic); agents and tasks are blogAgents and blogTasks instances
# created in the elided code, as are the writer/editor agents and their tasks
planner_agent = agents.planner(self.topic, self.model_id)
plan_task = tasks.plan(planner_agent, self.topic, self.model_id)
# ...

# Define an agentic pipeline to chain the agents and associated tasks
# with service components, embedding engine, and execution process
crew = Crew(
    agents=[planner_agent, writer_agent, editor_agent],
    tasks=[plan_task, write_task, edit_task],
    verbose=True,
    memory=True,
    embedder={
        "provider": "huggingface",
        "config": {"model": "sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2"},
    },
    cache=True,
    process=Process.sequential,  # Sequential process will have tasks executed one after the other
)

result = crew.kickoff()
```

As demonstrated in this code example, multi-agent pipelines are generally simple linear structures that are easy to set up and understand. They have a clear sequential flow of tasks from one agent to the next and work well for straightforward workflows with a defined order of operations. However, the pipeline structure can be less flexible for complex, nonlinear agent interactions, which makes it less able to handle branching logic or cycles. This can make it less efficient for problems that require back-and-forth between agents. The next section addresses a graph framework for multi-agent systems, which lends itself better to more complex scenarios.

Multi-agent graph framework for asynchronous orchestration and reasoning

A multi-agent framework offers significant potential for intelligent, dynamic problem-solving that enables collaborative, specialized task execution. While these systems can enhance inference accuracy and response efficiency by dynamically activating and coordinating agents, they also present critical challenges, including potential bias, limited reasoning capabilities, and the need for robust oversight. Effective multi-agent frameworks require careful design considerations such as clear leadership, dynamic team construction, effective information sharing, planning mechanisms like chain-of-thought prompting, memory systems for contextual learning, and strategic orchestration of specialized language models. As the technology evolves, balancing agent autonomy with human oversight and ethical safeguards will be crucial to unlocking the full potential of these intelligent systems while mitigating potential risks.

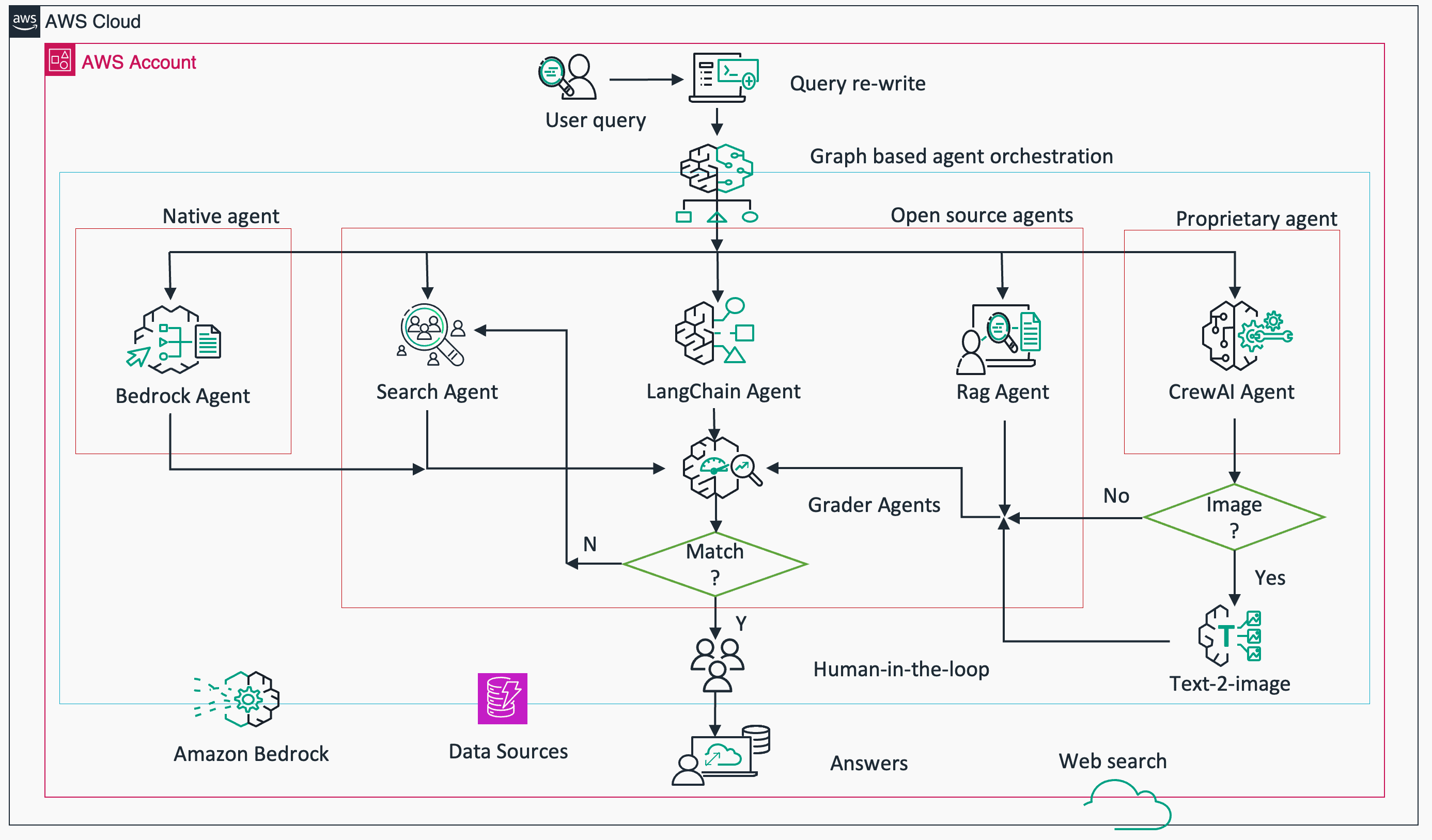

A multi-agent graph framework is a system that models the interactions and relationships between multiple autonomous agents using a graph-based representation. In this type of framework, agents are represented as nodes in the graph, with each agent having its own set of capabilities, goals, and decision-making processes. The edges in the graph represent the interactions, communications, or dependencies between the agents. These can include things like information sharing, task delegation, negotiation, or coordination. The graph structure allows for the modeling of complex, dynamic relationships between agents, including cycles, feedback loops, and hierarchies. The following diagram shows this architecture.
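What a graph adds over a linear pipeline is conditional edges and cycles. The following minimal plain-Python orchestrator is written for illustration and is not LangGraph, although its `add_node` and `add_edge` calls mirror the shape of the LangGraph calls used later in this post. The `Graph` class, the node names, the approval rule, and the `max_steps` guard are all assumptions. It models a writer/editor feedback loop that repeats until the editor approves.

```python
from typing import Callable, Dict, Union

State = Dict[str, object]
Node = Callable[[State], State]
Router = Callable[[State], str]
END = "__end__"


class Graph:
    """Hypothetical graph orchestrator: agents are nodes, interactions are edges."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, Union[str, Router]] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Union[str, Router]) -> None:
        # dst is either a fixed node name or a function that picks the next node.
        self.edges[src] = dst

    def run(self, entry: str, state: State, max_steps: int = 20) -> State:
        current = entry
        for _ in range(max_steps):  # guard against cycles that never terminate
            state = {**state, **self.nodes[current](state)}
            nxt = self.edges.get(current, END)  # a node without an edge ends the run
            current = nxt(state) if callable(nxt) else nxt
            if current == END:
                return state
        raise RuntimeError("max_steps exceeded")


def writer(state: State) -> State:
    revision = int(state.get("revision", 0)) + 1
    return {"revision": revision, "draft": f"draft v{revision}"}


def editor(state: State) -> State:
    # Hypothetical review rule: approve from the second revision onward.
    return {"approved": int(state["revision"]) >= 2}


def route_after_editor(state: State) -> str:
    return END if state["approved"] else "writer"  # cycle back on rejection


graph = Graph()
graph.add_node("writer", writer)
graph.add_node("editor", editor)
graph.add_edge("writer", "editor")
graph.add_edge("editor", route_after_editor)
final = graph.run("writer", {})
print(final["draft"], final["revision"])
```

The `max_steps` bound is a deliberate design choice: once cycles are allowed, a faulty routing rule could otherwise loop forever.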

The graph-based approach provides a flexible and scalable way to represent the structure of multi-agent systems, making it easier to analyze, simulate, and reason about the emergent behaviors that arise from agent interactions. The following code snippet illustrates the process of building a graph framework designed for multi-agent orchestration using LangGraph. This framework is essential for managing and coordinating the interactions between multiple agents within a system, promoting efficient and effective communication and collaboration. Notably, it emphasizes the plug-and-play feature, which allows for dynamic changes and the flexibility to accommodate third-party agents. Frameworks with this capability can seamlessly adapt to new requirements and integrate with external systems, enhancing their overall versatility and usability.

```python
from langgraph.graph import StateGraph, END

# ... MultiAgentState, the node functions, decide_to_search, and the
# checkpointer `memory` are defined in the elided code

# Create a graph to orchestrate multiple agents (i.e. nodes)
orch = StateGraph(MultiAgentState)
orch.add_node("rewrite_agent", rewrite_node)
orch.add_node('booking_assistant', bedrock_agent_node)
orch.add_node('blog_writer', blog_writer_node)
orch.add_node("router_agent", router_node)
orch.add_node('search_expert', search_expert_node)
# ... other nodes, including RAG_agent, human, and text2image_generation (elided)

# Create edges to connect agents to form a graph
orch.set_entry_point("rewrite_agent")
orch.add_edge('rewrite_agent', 'router_agent')
orch.add_conditional_edges(
    "RAG_agent",
    decide_to_search,  # returns one of the keys in the mapping below
    {
        "to_human": "human",
        "do_search": "search_expert",
    },
)
orch.add_edge('blog_writer', 'text2image_generation')
# ... remaining edges (elided)

# Compile the graph for agentic orchestration, pausing before the human node
graph = orch.compile(checkpointer=memory, interrupt_before=['human'])
```
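The snippet above elides the node functions. The following sketch shows one way a node such as `bedrock_agent_node`, and a routing function such as `decide_to_search`, could be written; it is not the code from the repository. It assumes the graph state is a dictionary with hypothetical `question`, `session_id`, `answer`, and `context` keys, uses the Boto3 `prepare_agent`, `get_agent`, and `invoke_agent` operations, and takes the clients as parameters so it can be exercised with stubs. The routing rule is a placeholder.

```python
import time
import uuid


def prepare_and_wait(agent_client, agent_id, poll_seconds=2.0, timeout_seconds=120.0):
    """Call prepare_agent on a draft agent, then poll get_agent until it is PREPARED."""
    agent_client.prepare_agent(agentId=agent_id)
    deadline = time.monotonic() + timeout_seconds
    while time.monotonic() < deadline:
        status = agent_client.get_agent(agentId=agent_id)["agent"]["agentStatus"]
        if status == "PREPARED":
            return status
        if status == "FAILED":
            raise RuntimeError(f"Agent {agent_id} failed to prepare")
        time.sleep(poll_seconds)
    raise TimeoutError(f"Agent {agent_id} was not prepared within {timeout_seconds}s")


def make_bedrock_agent_node(runtime_client, agent_id, alias_id):
    """Return a graph node that forwards state['question'] to an Amazon Bedrock agent."""

    def bedrock_agent_node(state):
        response = runtime_client.invoke_agent(
            agentId=agent_id,
            agentAliasId=alias_id,
            # Reusing a session ID keeps the agent's conversation context;
            # a new UUID starts a fresh session.
            sessionId=state.get("session_id") or str(uuid.uuid4()),
            inputText=state["question"],
        )
        # invoke_agent streams its reply as events; join the text chunks and skip others.
        answer = "".join(
            event["chunk"]["bytes"].decode("utf-8")
            for event in response["completion"]
            if "chunk" in event
        )
        # A node returns only the state keys it updates.
        return {"answer": answer}

    return bedrock_agent_node


def decide_to_search(state):
    """Hypothetical routing rule for the RAG_agent conditional edge."""
    # Search the web when retrieval found no context; otherwise hand off to a human.
    return "do_search" if not state.get("context") else "to_human"
```

With real clients, you could pass `boto3.client('bedrock-agent')` to `prepare_and_wait`, pass `boto3.client('bedrock-agent-runtime')` and an agent alias ID (for example, one returned by `create_agent_alias`) to `make_bedrock_agent_node`, and then register the returned function with `orch.add_node('booking_assistant', ...)`.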

The multi-agent graph approach is particularly useful for domains where complex, dynamic interactions between autonomous entities need to be modeled and analyzed, such as robotics, logistics, and social networks. Compared with the linear multi-agent pipelines approach, the multi-agent graph-based approach has several advantages and disadvantages, which are summarized in the following section.

Advantages and limitations

The emergence of agentic services represents a transformative approach to system design. Unlike conventional AI models that adhere to fixed, predetermined workflows, agentic systems are characterized by their capacity to collaborate, adapt, and make decisions in real time. This transition from passive to active AI opens up exciting opportunities and presents unique design challenges for developers and architects. Central to agentic services is the notion of agentic reasoning, which embodies a flexible, iterative problem-solving methodology that reflects human cognitive processes. By integrating design patterns such as reflection, self-improvement, and tool utilization, we can develop AI agents that are capable of ongoing enhancement and broader functionality across various domains.

Agentic services, although promising, face several limitations that must be addressed for their successful production implementation. The complexity of managing multiple autonomous agents, especially as their numbers and scope increase, poses a significant challenge in maintaining system coherence and stability. Additionally, the emergent behaviors of these systems can be difficult to predict and understand, hindering transparency and interpretability, which are crucial for building trust and accountability. Safety and robustness are paramount concerns because unintended behaviors or failures could have far-reaching consequences, necessitating robust safeguards and error-handling mechanisms. As agentic services scale up, maintaining efficient performance becomes increasingly challenging, requiring optimized resource utilization and load balancing. Finally, the lack of widely adopted standards and protocols for agent-based systems creates interoperability issues, making it difficult to integrate these services with existing infrastructure. Addressing these limitations is essential for the widespread adoption and success of agentic services in various domains.

Advantages:

- More flexible representation of agent interactions using a graph structure

- Better suited for complex workflows with nonlinear agent communication

- Can more easily represent cycles and branching logic between agents

- Potentially more scalable for large multi-agent systems

- Clearer visualization of overall agent system structure

Disadvantages:

- More complex initial setup compared to linear pipelines

- Can require more upfront planning to design the graph structure

- Can require extra resource usage and longer response times

Next steps

In the next phase of multi-agent orchestration, our focus will be on enhancing the reasoning, reflection, and self-correction capabilities of our agents. This involves developing advanced algorithms (such as tree-of-thoughts (ToT) prompting, Monte Carlo tree search (MCTS), and others) that allow agents to learn from their peer interactions, adapt to new situations, and correct their behaviors based on feedback. Additionally, we’re working on creating a production-ready framework that can accommodate a variety of agentic services. This framework will be designed to be flexible and scalable, enabling seamless integration of different types of agents and services. These efforts are currently underway, and we’ll provide a detailed update on our progress in the next blog post. Stay tuned for more insights into our innovative approach to multi-agent orchestration.

Conclusion

Multi-agent orchestration and reasoning represent a significant leap forward in generative AI production adoption, offering unprecedented potential for complex problem-solving and decision-making while decoupling your applications from individual FMs. It’s also crucial to acknowledge and address the limitations, including scalability challenges, long latency, and potential incompatibility among different agents. As we look to the future, enhancing the reasoning (both within individual agents and across agents), reflection, and self-correction capabilities of our agents will be paramount. This will involve developing more sophisticated algorithms for metacognition, improving inter-agent communication protocols, and implementing robust error detection and correction mechanisms.

For the example code and demonstration discussed in this post, refer to the agentic-orchestration GitHub repository and this AWS Workshop. You can also refer to the GitHub repo for Amazon Bedrock multi-agent collaboration code samples.

The authors wish to express their gratitude to Mark Roy, Maria Laderia Tanke, and Max Iguer for their insightful contributions, as well as to Nausheen Sayed for her relentless coordination.

About the authors

Alfred Shen is a Senior GenAI Specialist at AWS. He has been working in Silicon Valley, holding technical and managerial positions in diverse sectors including healthcare, finance, and high-tech. He is a dedicated applied AI/ML researcher, concentrating on agentic solutions and multimodality.

Anya Derbakova is a Senior Startup Solutions Architect at AWS, specializing in Healthcare and Life Science technologies. A University of North Carolina graduate, she previously worked as a Principal Developer at Blue Cross Blue Shield Association. Anya is recognized for her contributions to AWS professional development, having been featured on the AWS Developer Podcast and participating in multiple educational series. She co-hosted a six-part mini-series on AWS Certification Exam Prep, focusing on cost-optimized cloud architecture strategies. Additionally, she was instrumental in the “Get Schooled on…Architecting” podcast, which provided comprehensive preparation for the AWS Solutions Architect Exam.
