Build verifiable explainability into financial services workflows with Automated Reasoning checks for Amazon Bedrock Guardrails

Foundational models (FMs) and generative AI are transforming how financial service institutions (FSIs) operate their core business functions. AWS FSI customers, including NASDAQ, State Bank of India, and Bridgewater, have used FMs to reimagine their business operations and deliver improved outcomes.

FMs are probabilistic in nature and produce a range of outcomes. Though these models can produce sophisticated outputs through the interplay of pre-training, fine-tuning, and prompt engineering, their decision-making process remains less transparent than classical predictive approaches. Although emerging techniques such as tool use and Retrieval Augmented Generation (RAG) aim to enhance transparency, they too rely on probabilistic mechanisms—whether in retrieving relevant context or selecting appropriate tools. Even methods such as attention visualization and prompt tracing produce probabilistic insights rather than deterministic explanations.

AWS customers operating in regulated industries such as insurance, banking, payments, and capital markets, where decision transparency is paramount, want to launch FM-powered applications with the same confidence of traditional, deterministic software. To address these challenges, we’re introducing Automated Reasoning checks in Amazon Bedrock Guardrails (preview.) Automated Reasoning checks can detect hallucinations, suggest corrections, and highlight unstated assumptions in the response of your generative AI application. More importantly, Automated Reasoning checks can explain why a statement is accurate using mathematically verifiable, deterministic formal logic.

To use Automated Reasoning checks, you first create an Automated Reasoning policy by encoding a set of logical rules and variables from available source documentation. Automated Reasoning checks can then validate that the questions (prompts) and the FM-suggested answers are consistent with the rules defined in the Automated Reasoning policy using sound mathematical techniques. This fundamentally changes the approach to a solution’s transparency in FM applications, adding a deterministic verification for process-oriented workflows common in FSI organizations.

In this post, we explore how Automated Reasoning checks work through various common FSI scenarios such as insurance legal triaging, underwriting rules validation, and claims processing.

What is Automated Reasoning and how does it help?

Automated Reasoning is a field of computer science focused on mathematical proof and logical deduction—similar to how an auditor might verify financial statements or how a compliance officer makes sure that regulatory requirements are met. Rather than using probabilistic approaches such as traditional machine learning (ML), Automated Reasoning tools rely on mathematical logic to definitively verify compliance with policies and provide certainty (under given assumptions) about what a system will or won’t do. Automated Reasoning checks in Amazon Bedrock Guardrails is the first offering from a major cloud provider in the generative AI space.

The following financial example serves as an illustration.

Consider a basic trading rule: “If a trade is over $1 million AND the client is not tier-1 rated, THEN additional approval is required.”

An Automated Reasoning system would analyze this rule by breaking it down into logical components:

- Trade value > $1,000,000

- Client rating ≠ tier-1

- Result: Additional approval required

When presented with a scenario, the system can provide a deterministic (yes or no) answer about whether additional approval is needed, along with the exact logical path it used to reach that conclusion. For instance:

- Scenario A – $1.5M trade, tier-2 client → Additional approval required (Both conditions met)

- Scenario B – $2M trade, tier-1 client → No additional approval (Second condition not met)

What makes Automated Reasoning different is its fundamental departure from probabilistic approaches common in generative AI. At its core, Automated Reasoning provides deterministic outcomes where the same input consistently produces the same output, backed by verifiable proof chains that trace each conclusion to its original rules. This mathematical certainty, based on formal logic rather than statistical inference, enables complete verification of possible scenarios within defined rules (and under given assumptions).

FSIs regularly apply Automated Reasoning to verify regulatory compliance, validate trading rules, manage access controls, and enforce policy frameworks. However, it’s important to understand its limitations. Automated Reasoning can’t predict future events or handle ambiguous situations, nor can it learn from new data such as ML models. It requires precise, formal definition of rules and isn’t suitable for subjective decisions that require human judgment. This is where the combination of generative AI and Automated Reasoning come into play.

As institutions seek to integrate generative AI into their decision-making processes, Amazon Bedrock Guardrails Automated Reasoning checks provides a way to incorporate Automated Reasoning into the generative AI workflow. Automated Reasoning checks deliver deterministic verification of model outputs against documented rules, complete with audit trails and mathematical proof of policy adherence. This capability makes it particularly valuable for regulated processes where accuracy and governance are essential, such as risk assessment, compliance monitoring, and fraud detection. Most importantly, through its deterministic rule-checking and explainable audit trails, Automated Reasoning checks effectively address one of the major barriers to generative AI adoption: model hallucination, where models generate unreliable or unfaithful responses to the given task.

Using Automated Reasoning checks for Amazon Bedrock in financial services

A great candidate for applying Automated Reasoning in FSI is in scenarios where a process or workflow can be translated into a set of logical rules. Hard-coding rules as programmatic functions provides deterministic outcomes, but it becomes complex to maintain and requires highly structured inputs, potentially compromising the user experience. Alternatively, using an FM as the decision engine offers flexibility but introduces uncertainty. This is because FMs operate as black boxes where the internal reasoning process remains opaque and difficult to audit. In addition, the FM’s potential to hallucinate or misinterpret inputs means that conclusions would require human verification to verify accuracy.

Solution overview

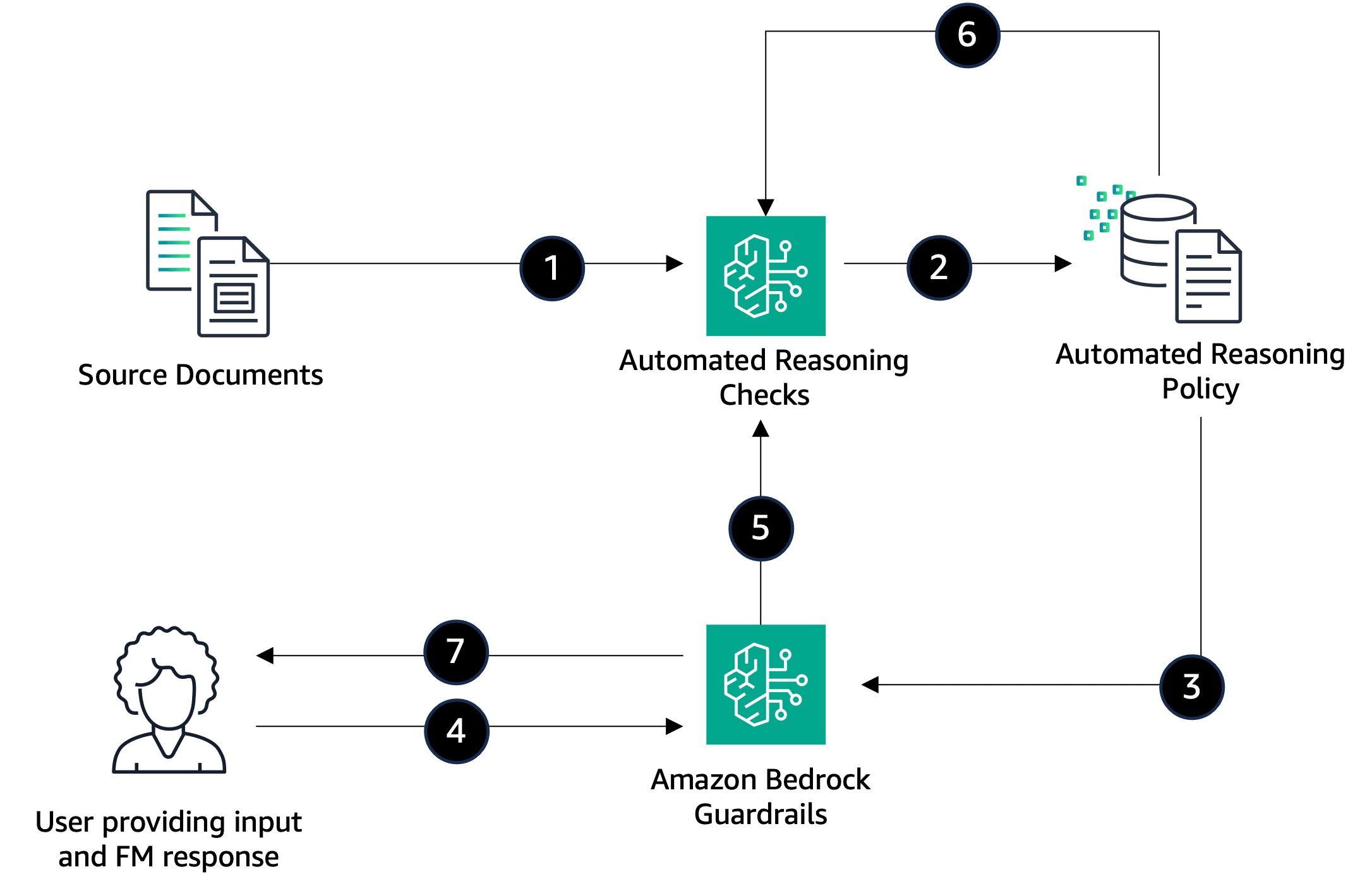

This is where Automated Reasoning checks come into play. The following diagram demonstrates the workflow to combine generative AI and Automated Reasoning to incorporate both methods.

The following steps explain the workflow in detail:

- The source document along with the intent instructions are passed to the Automated Reasoning checks service to build the rules and variables and create an Automated Reasoning checks policy.

- An Automated Reasoning checks policy is created and versioned.

- An Automated Reasoning checks policy and version is associated with an Amazon Bedrock guardrail.

- An ApplyGuardrail API call is made with the question and an FM response to the associated Amazon Bedrock guardrail.

- The Automated Reasoning checks model is triggered with the inputs from the ApplyGuardrail API, building logical representation of the input and FM response.

- An Automated Reasoning check is completed based on the created rules and variables from the source document and the logical representation of the inputs.

- The results of the Automated Reasoning check are shared with the user along with what rules, variables, and variable values were used in its determination, plus suggestions on what would make the assertion valid.

Prerequisites

Before you build your first Automated Reasoning check for Amazon Bedrock Guardrails, make sure you have the following:

- An AWS account that provides access to AWS services, including Amazon Bedrock.

- The new Automated Reasoning checks safeguard is available today in preview in Amazon Bedrock Guardrails in the US West (Oregon) AWS Region. Make sure that you have access to the Automated Reasoning checks preview within Amazon Bedrock. To request access to the preview today, contact your AWS account team. To learn more, visit Amazon Bedrock Guardrails.

- An AWS Identity and Access Management (IAM) user set up for the Amazon Bedrock API and appropriate permissions added to the IAM user

Solution walkthrough

To build an Automated Reasoning check for Amazon Bedrock Guardrails, follow these steps:

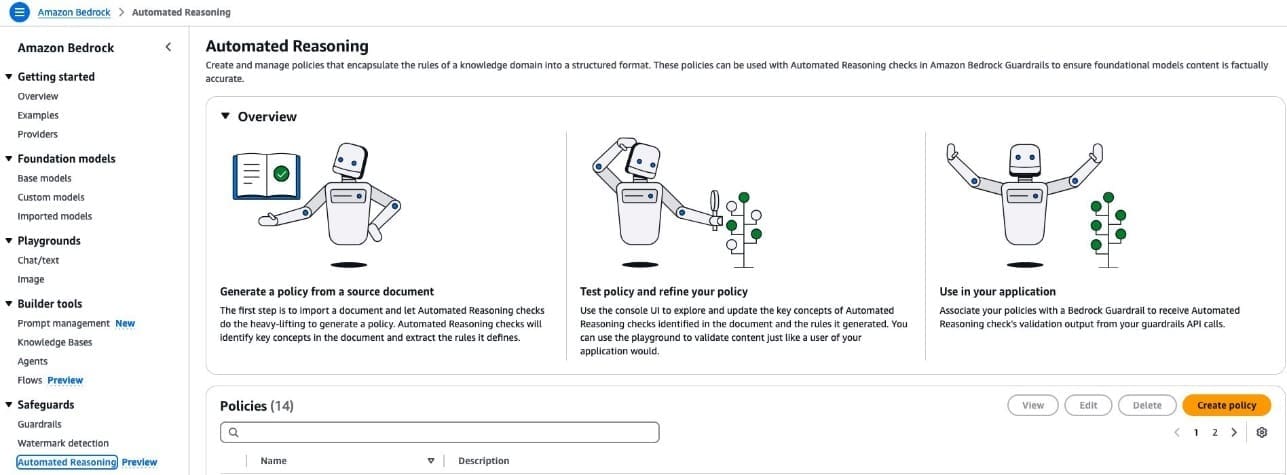

- On the Amazon Bedrock console, under Safeguards in the navigation pane, select Automated Reasoning.

- Choose Create policy, as shown in the following screenshot.

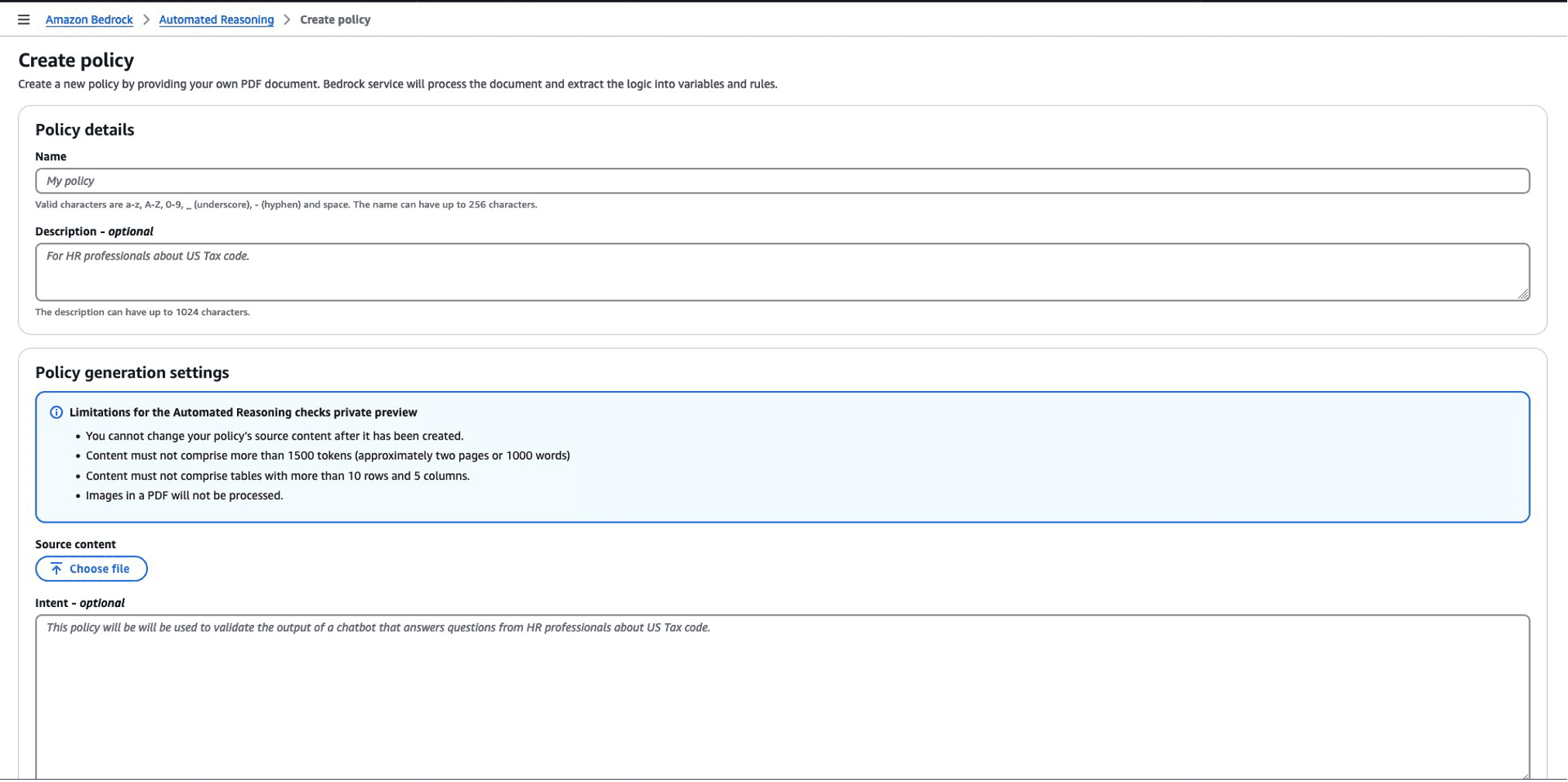

- On the Create policy section, shown in the following screenshot, enter the following inputs:

- Name – Name of the Automated Reasoning checks policy.

- Description – Description of the Automated Reasoning checks policy.

- Source content – The document to create the rules and variables from. You need to upload a document in PDF format.

- Intent – Instructions on how to approach the creation of the rules and variables.

The following sections dive into some example uses of Automated Reasoning checks.

Automated Reasoning checks for insurance underwriting rules validation

Consider a scenario for an auto insurance company’s underwriting rules validation process.

Underwriting is a fundamental function within the insurance industry, serving as the foundation for risk assessment and management. Underwriters are responsible for evaluating insurance applications, determining the level of risk associated with each applicant, and making decisions on whether to accept or reject the application based on the insurer’s guidelines and risk appetite.

One of the key challenges in underwriting is the process of rule validations, which is the verification that the information provided in the documents adheres to the insurer’s underwriting guidelines. This is a complex task that deals with unstructured data and varying document formats.

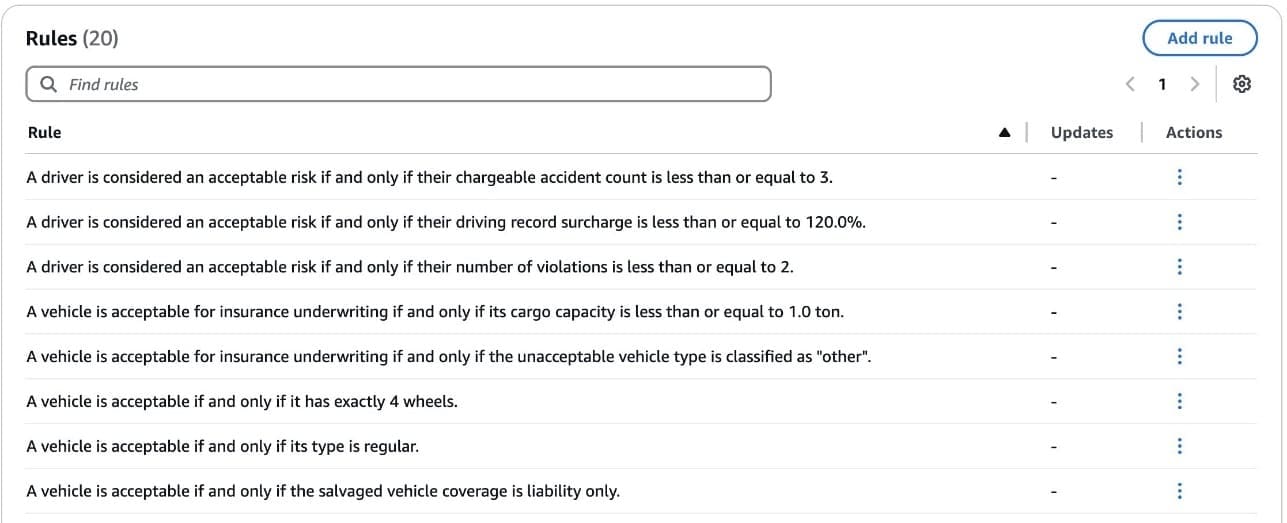

This example uses an auto insurance company’s underwriting rules guideline document. A typical underwriting manual can have rules to define unacceptable drivers, unacceptable vehicles, and other definitions, as shown in the following example:

Unacceptable drivers

- Drivers with 3 or more DUIs.

- For new business or additional drivers, drivers with 3 or more accidents, regardless of fault.

- Drivers with more than 2 major violations.

- Drivers with more than 3 chargeable accidents.

- Military personnel not stationed in California.

- Drivers 75 and older without a completed company Physician’s Report form.

- Any driver disclosing physical or mental conditions that might affect the driver’s ability to safely operate a motor vehicle may be required to complete a company Physician’s Report form to verify their ability to drive. In addition, if in the course of an investigation we discover an undisclosed medical concern, a completed company Physician’s Report form will be required.

- Any unlisted or undisclosed driver that is a household member or has regular use of a covered vehicle.

Unacceptable Vehicles

- Vehicles principally garaged outside the state of California.

- Vehicles with more or less than 4 wheels.

- Vehicles with cargo capacity over 1 ton.

- Motor vehicles not eligible to be licensed for highway use.

- Taxicabs, limousines, emergency vehicles, escort vehicles, and buses.

- Vehicles used for pickup or delivery of goods at any time including pizzas, magazines, and newspapers.

- Vehicles used for public livery, conveyance, and company fleets.

- Vehicles made available to unlisted drivers for any use including business use such as sales, farming, or artisan use (for example, pooled vehicles).

- Vehicles used to transport nursery or school children, migrant workers, or hotel or motel guests.

- Vehicles with permanent or removable business-solicitation logos or advertising.

- Vehicles owned or leased by a partnership or corporation.

- Step vans, panel vans, dump trucks, flatbed trucks, amphibious vehicles, dune buggies, motorcycles, scooters, motor homes, travel trailers, micro or kit cars, antique or classic vehicles, custom, rebuilt, altered or modified vehicles.

- Physical damage coverage for vehicles with an ISO symbol of more than 20 for model year 2010 and earlier or ISO symbol 41 for model year 2011 and later.

- Liability coverage for vehicles with an ISO symbol of more than 25 for vehicles with model year 2010 and earlier or ISO symbol 59 for model year 2011 and later.

- Salvaged vehicles for comprehensive and collision coverage. Liability only policies for salvaged vehicles are acceptable.

- Physical damage coverage for vehicles over 15 years old for new business or for vehicles added during the policy term.

For this example, we entered the following inputs for the Automated Reasoning check:

- Name – Auto Policy Rule Validation.

- Description – A policy document outlining the rules and criteria that define unacceptable drivers and unacceptable vehicles.

- Source content – A document describing the companies’ underwriting manual and guidelines. You can copy and paste the example provided and create a PDF document. Upload this document as your source content.

- Intent – Create a logical model for auto insurance underwriting policy approval. An underwriter associate will provide the driver profile and type of vehicle and ask whether a policy can be written for this potential customer. The underwriting guideline document uses a list of unacceptable driver profiles and unacceptable vehicles. Make sure to create a separate rule for each unacceptable condition listed in the document, and create a variable to capture whether the driver is an acceptable risk or not. A customer that doesn’t violate any rule is acceptable. Here is an example: ” Is the risk acceptable for a driver with the following profile? A driver has 4 car accidents, uses the car as a Uber-Taxi, and has 3 DUIs”. The model should determine: “The driver has unacceptable risks. Driving a taxi is an unacceptable risk. The driver has multiple DUIs.”

The model creates rules and variables from the source content. Depending on the size of the source content, this process may take more than 10 minutes.

The process of rule and variable creation is probabilistic in nature, and we highly recommend that you edit the created rules and variables to align better with your source content.

After the process is complete, a set of rules and variables will be created and can be reviewed and edited.

The following screenshots show an extract of the rules and variables created by the Automated Reasoning checks feature. The actual policy will have more rules and variables that can be viewed in Amazon Bedrock, but we’re not showing them here due to space limits.

The Automated Reasoning checks policy must be associated to an Amazon Bedrock guardrail. For more information, refer to Create a guardrail.

Test the policy

To test this policy, we considered a hypothetical scenario with an FM-generated response to validate.

Question: Is the risk acceptable for a driver with the following profile? Has 2 chargeable accidents in a span of 10 years. Driving records show a negligent driving charge and one DUI.

Answer: Driver has unacceptable risk. Number of chargeable accidents count is 2.

After entering the question and answer inputs, choose Submit, as shown in the following screenshot.

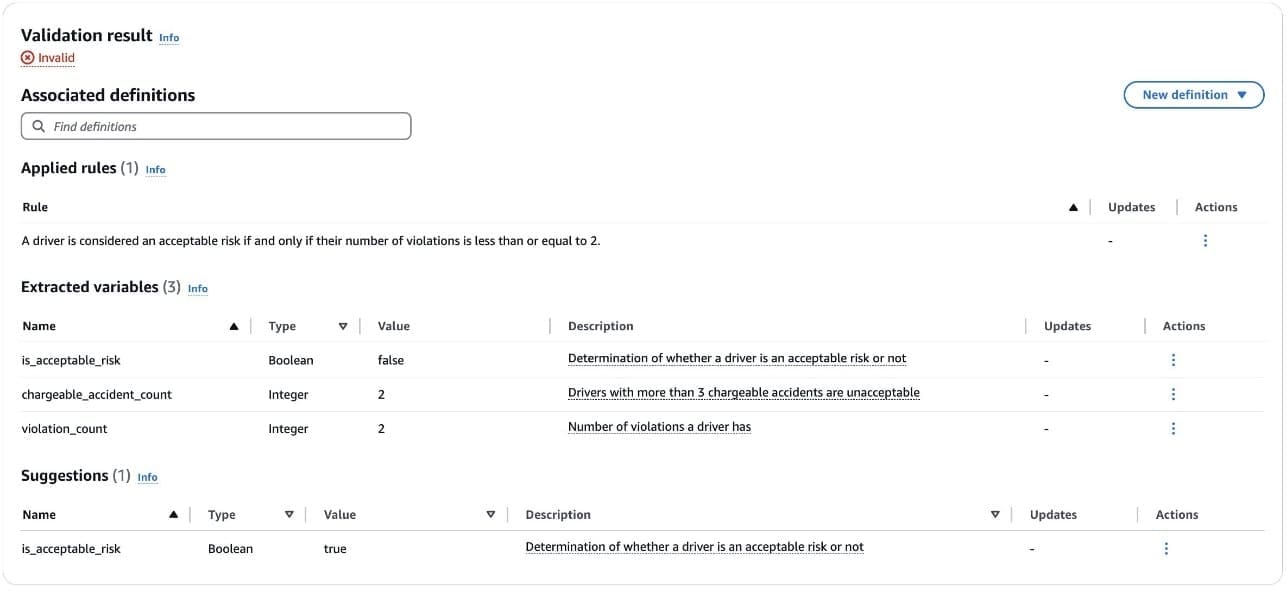

The Automated Reasoning check returned as Invalid, as shown in the following screenshot. The components shown in the screenshot are as follows:

- Validation result – This is the Automated Reasoning checks validation output. This conclusion is reached by computing the extracted variable assignments against the rules defined in the Automated Reasoning policy.

- Applied rules – These are the rules that were used to reach the validation result for this finding.

- Extracted variables – This list shows how Automated Reasoning checks interpreted the input Q&A and used it to assign values to variables in the Automated Reasoning policy. These variable values are computed against the rules in the policy to reach the validation result.

- Suggestions – When the validation result is invalid, this list shows a set of variable assignments that would make the conclusion valid. When the validation result is valid, this list shows a list of assignments that are necessary for the result to hold; these are unstated assumptions in the answer. You can use these values alongside the rules to generate a string that provides feedback to your FM.

The model evaluated the answer against the Automated Reasoning logical rules, and in this scenario the following rule was triggered:

“A driver is considered an acceptable risk if and only if their number of violations is less than or equal to 2.”

The Extracted variables value for violation_count is 2, and the is_acceptable_risk variable was set to false, which is wrong according to the Automated Reasoning logic. Therefore, the answer isn’t valid.

The suggested value for is_acceptable_risk is true.

Here is an example with a revised answer.

Question: Is the risk acceptable for a driver with the following profile? Has 2 chargeable accidents in a span of 10 years. Driving records show a negligent driving charge and one DUI.

Answer: Driver has acceptable risk.

Because no rules were violated, the Automated Reasoning logic determines the assertion is Valid, as shown in the following screenshot.

Automated Reasoning checks for insurance legal triaging

For the next example, consider a scenario where an underwriter is evaluating whether a long-term care (LTC) claim requires legal intervention.

For this example, we entered the following inputs:

- Name – Legal LTC Triage

- Description – A workflow document outlining the criteria, process, and requirements for referring LTC claims to legal investigation

- Source content – A document describing your LTC legal triaging process. You need to upload your own legal LTC triage document in PDF format. This document should outline the criteria, process, and requirements for referring LTC claims to legal investigation.

- Intent – Create a logical model that validates compliance requirements for LTC claims under legal investigation. The model must evaluate individual policy conditions including benefit thresholds, care durations, and documentation requirements that trigger investigations. It should verify timeline constraints, proper sequencing of actions, and policy limits. Each requirement must be evaluated independently, where a single violation results in noncompliance. For example: “A claim has two care plan amendments within 90 days, provider records covering 10 months, and a review meeting at 12 days. Is this compliant?” The model should determine: “Not compliant because: multiple amendments require investigation, provider records must cover 12 months, and review meetings must be within 10 days.”

The process of rule and variable creation is probabilistic in nature, and we highly recommend that you edit the created rules and variables to align better with your source content.

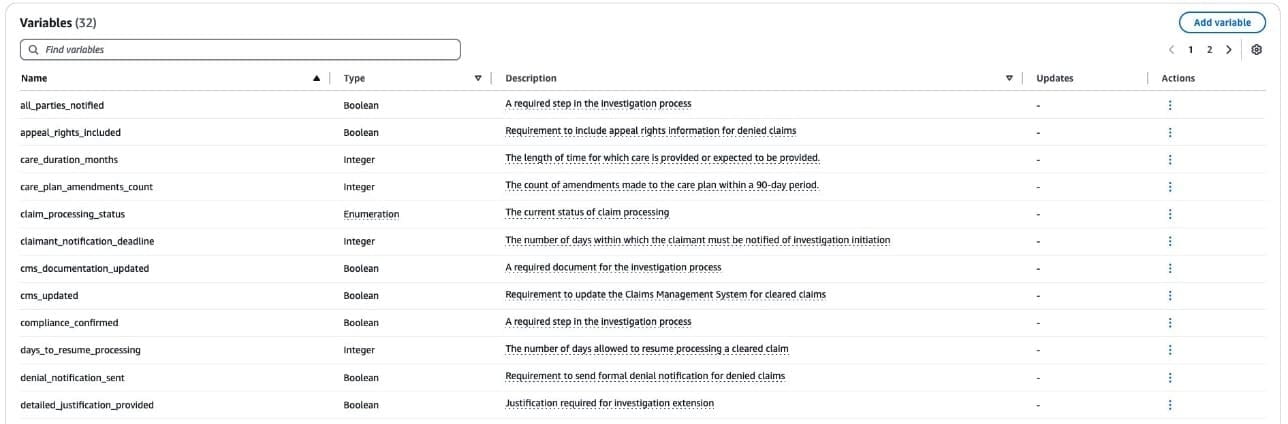

After the process is complete, a set of rules and variables will be created. To review and edit a rule or variable, select the more options icon under Actions and then choose Edit. The following screenshots show the Rules and Variables screens.

Test the policy

From here we can test out our Automated Reasoning checks in the test playground. Note: to do this, the Automated Reasoning checks policy must be associated to an Amazon Bedrock guardrail.To test this policy, we posed the following hypothetical scenario with an FM-generated response for the Automated Reasoning checks policy to validate.

Question: A claim with care duration of 28 months, no documentation irregularities, and total projected benefit value of $200,000 has been submitted. Does this require legal investigation?

Answer: This claim does not require legal investigation because the total projected benefit value is below $250,000 and there are no documentation irregularities.

After completing the check, the Automated Reasoning tool produces the validation result, which for this example was Invalid, as shown in the following screenshot. This means the FM generated response violates one or more rules from the generated Automated Reasoning checks policy.

The rule that was triggered was the following:

“A claim is flagged for legal investigation if and only if there are documentation irregularities, or the total projected benefit exceeds $250,000, or the care duration is more than 24 months, or the number of care plan amendments within a 90-day period is greater than 1.”

Based on our input the model determined our variable inputs to be:

| Name | Type | Value | Description | |

| 1 | total_projected_benefit | Real number | 200,000 | The total projected monetary value of benefits for a long-term care claim |

| 2 | flag_for_legal_investigation | Boolean | FALSE | Indicates whether a claim should be flagged for legal investigation based on the specified criteria |

| 3 | has_documentation_irregularities | Boolean | FALSE | Presence of irregularities in the care provider’s documentation |

| 4 | care_duration_months | Integer | 28 | The length of time for which care is provided or expected to be provided |

From this, we can determine where exactly our rule was found INVALID. Our input had care_duration_months > 24 months, and flag_for_legal_investigation was set as FALSE. This invalidated our rule.

In the suggestions, we observe that for our original Q&A to be correct, we’d have to have flag_for_legal_investigation as TRUE, along with the total_projected_benefit being 200,000.

We can validate whether the suggestion will yield a VALID response by adjusting our answer to the original question to the following.

“This claim does require legal investigation even though the total projected benefit value is below $250,000 and there are no documentation irregularities.”

As shown in the following screenshot, no rules were triggered. However, what changed is our extracted variables and our suggestions.

Now that the assertion is valid, we have the other requirements as unstated assumptions according to our rules to make sure that this is a VALID response. We can use suggestions to modify our response to the end user with more granular detail.

Automated Reasoning checks for insurance claims processing

The final example demonstrates an Automated Reasoning checks example for claims processing.

Claims processing is another fundamental function within insurance companies, and it’s the process used by policy holders to exercise their policy to get compensation for an event (a car accident, for example). Claims processors work to validate the claim and the beneficiaries, determine the amount of compensation, and work to settle the claim. This process includes verification of the people involved, proof of the incident, and a host of legal guidelines that they’re required to follow.

One of the key issues in claims processing is validating the claim and the parties involved. In this example, we use Automated Reasoning checks to provide recommendations to individuals attempting to file a claim in the case of a house fire.

As in the previous examples, we create an Automated Reasoning guardrail policy as follows:

- Name – Home Owners Insurance Claims Policy

- Description – This policy is used for the validation of homeowners’ insurance claims and includes the processes and procedures needed to file a claim.

- Source content – A document describing the companies’ homeowners’ insurance claims process. This document should outline the necessary processes and procedures needed to file a claim.

- Intent – Create a logical model that validates the requirements for homeowner claims. The model must evaluate individual policy conditions, including benefit thresholds, durations, and documentation requirements needed for the creation of a claim. It should verify timeline constraints, proper sequencing of actions, and policy limits. Each requirement must be evaluated independently, where any single violation results in noncompliance. For example: “I had a fire at my house. What documents do I need in order to file a claim?” The model should determine: “You will need to provide a fire department report, police report, photos, and your policy number.”

The following screenshots show an extract of the rules and variables created by the Automated Reasoning checks feature. The actual policy will have more rules and variables that can be viewed in Amazon Bedrock, but we’re not showing them due to space limits.

Test the policy

To test this policy, we considered a hypothetical scenario with an FM-generated response to validate.

Question: I had a fire at my house. What documents do I need to file a claim?

Answer: You provide a report from the fire department, a police report, photos, and policy number.

In this case, the Automated Reasoning check returned as Valid, as shown in the following screenshot. Automated Reasoning checks validated that the answer is correct and aligns to the provided claims processing document.

Conclusion

In this post, we demonstrated that Automated Reasoning checks solve a core challenge within FMs: the ability to verifiably demonstrate the reasoning for decision-making. By incorporating Automated Reasoning checks into our workflow, we were able to validate a complex triage scenario and determine the exact reason for why a decision was made. Automated Reasoning is deterministic, meaning that with the same ruleset, same variables, and same input and FM response, the determination will be reproducible. This means you can the reproduce findings for compliance or regulatory reporting.

Automated Reasoning checks in Amazon Bedrock Guardrails empowers financial service professionals to work more effectively with generative AI by providing deterministic validation of FM responses for decision-oriented documents. This enhances human decision-making by reducing hallucination risk and creating reproducible, explainable safeguards that help professionals better understand and trust FM-generated insights.

The new Automated Reasoning checks safeguard is available today in preview in Amazon Bedrock Guardrails in the US West (Oregon) AWS Region. We invite you to build your first Automated Reasoning checks. For detailed guidance, visit our documentation and code examples in our GitHub repo. Please share your experiences in the comments or reach out to the authors with questions. Happy building!

About the Authors

Alfredo Castillo is a Senior Solutions Architect at AWS, where he works with Financial Services customers on all aspects of internet-scale distributed systems, and specializes in Machine learning, Natural Language Processing, Intelligent Document Processing, and GenAI. Alfredo has a background in both electrical engineering and computer science. He is passionate about family, technology, and endurance sports.

Alfredo Castillo is a Senior Solutions Architect at AWS, where he works with Financial Services customers on all aspects of internet-scale distributed systems, and specializes in Machine learning, Natural Language Processing, Intelligent Document Processing, and GenAI. Alfredo has a background in both electrical engineering and computer science. He is passionate about family, technology, and endurance sports.

Andy Hall is a Senior Solutions Architect with AWS and is focused on helping Financial Services customers with their digital transformation to AWS. Andy has helped companies to architect, migrate, and modernize large-scale applications to AWS. Over the past 30 years, Andy has led efforts around Software Development, System Architecture, Data Processing, and Development Workflows for large enterprises.

Andy Hall is a Senior Solutions Architect with AWS and is focused on helping Financial Services customers with their digital transformation to AWS. Andy has helped companies to architect, migrate, and modernize large-scale applications to AWS. Over the past 30 years, Andy has led efforts around Software Development, System Architecture, Data Processing, and Development Workflows for large enterprises.

Raj Pathak is a Principal Solutions Architect and Technical advisor to Fortune 50 and Mid-Sized FSI (Banking, Insurance, Capital Markets) customers across Canada and the United States. Raj specializes in Machine Learning with applications in Generative AI, Natural Language Processing, Intelligent Document Processing, and MLOps.

Raj Pathak is a Principal Solutions Architect and Technical advisor to Fortune 50 and Mid-Sized FSI (Banking, Insurance, Capital Markets) customers across Canada and the United States. Raj specializes in Machine Learning with applications in Generative AI, Natural Language Processing, Intelligent Document Processing, and MLOps.

Leave a Reply