Knowledge Bases for Amazon Bedrock now supports metadata filtering to improve retrieval accuracy

At AWS re:Invent 2023, we announced the general availability of Knowledge Bases for Amazon Bedrock. With Knowledge Bases for Amazon Bedrock, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data using a fully managed Retrieval Augmented Generation (RAG) model.

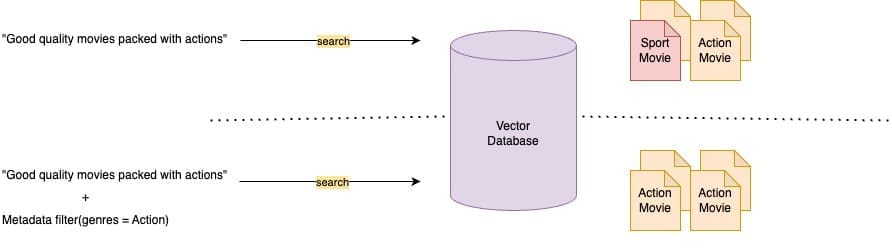

For RAG-based applications, the accuracy of the generated responses from FMs depends on the context provided to the model. Contexts are retrieved from vector stores based on user queries. With hybrid search, a recently released feature of Knowledge Bases for Amazon Bedrock, you can combine semantic search with keyword search. However, in many situations, you may need to retrieve documents created in a defined period or tagged with certain categories. To refine the search results, you can filter based on document metadata to improve retrieval accuracy, which in turn leads to more relevant FM generations aligned with your interests.

In this post, we discuss the new custom metadata filtering feature in Knowledge Bases for Amazon Bedrock, which you can use to improve search results by pre-filtering your retrievals from vector stores.

Metadata filtering overview

Prior to the release of metadata filtering, all semantically relevant chunks up to the pre-set maximum would be returned as context for the FM to use to generate a response. Now, with metadata filters, you can retrieve not just any semantically relevant chunks but a well-defined subset of those relevant chunks, based on the applied metadata filters and associated values.

With this feature, you can now supply a custom metadata file (each up to 10 KB) for each document in the knowledge base. You can apply filters to your retrievals, instructing the vector store to pre-filter based on document metadata and then search for relevant documents. This way, you have control over the retrieved documents, especially if your queries are ambiguous. For example, you can use legal documents with similar terms for different contexts, or movies that have a similar plot released in different years. Reducing the number of chunks that are searched also brings performance advantages, such as fewer CPU cycles and a lower cost of querying the vector store, on top of the improvement in accuracy.

To use the metadata filtering feature, you need to provide metadata files alongside the source data files. Each metadata file has the same name as its source data file plus a .metadata.json suffix. Metadata values can be strings, numbers, or Booleans. The following is an example of the metadata file content:
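As a minimal sketch of that layout, the following Python snippet builds a metadata document and derives the sidecar file name. It assumes attributes are wrapped in a top-level `metadataAttributes` object, as described in the Amazon Bedrock user guide. The helper names, the attribute names and values, and the reading of 10 KB as 10,240 bytes are illustrative assumptions, not taken from the sample dataset.

```python
import json

MAX_METADATA_BYTES = 10 * 1024  # assumption: "10 KB" read as 10,240 bytes


def metadata_filename(source_filename: str) -> str:
    """Return the sidecar name: the source file name plus .metadata.json."""
    return f"{source_filename}.metadata.json"


def build_metadata(attributes: dict) -> str:
    """Serialize attributes under the metadataAttributes key."""
    for key, value in attributes.items():
        # Only string, number, and Boolean values are supported.
        if not isinstance(value, (str, int, float, bool)):
            raise TypeError(f"unsupported type for {key!r}: {type(value).__name__}")
    body = json.dumps({"metadataAttributes": attributes}, indent=2)
    if len(body.encode("utf-8")) > MAX_METADATA_BYTES:
        raise ValueError("metadata file exceeds the 10 KB limit")
    return body


# Hypothetical attributes for a hypothetical source file.
example = build_metadata({"genres": "Strategy", "year": 2023, "multiplayer": True})
print(metadata_filename("game_001.csv"))  # game_001.csv.metadata.json
print(example)
```

Validating the value types and size before upload is a design choice in this sketch; it surfaces problems locally rather than during ingestion.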

The metadata filtering feature of Knowledge Bases for Amazon Bedrock is available in AWS Regions US East (N. Virginia) and US West (Oregon).

The following are common use cases for metadata filtering:

- Document chatbot for a software company – This allows users to find product information and troubleshooting guides. Filters on the operating system or application version, for example, can help avoid retrieving obsolete or irrelevant documents.

- Conversational search of an organization’s application – This allows users to search through documents, kanbans, meeting recording transcripts, and other assets. Using metadata filters on work groups, business units, or project IDs, you can personalize the chat experience and improve collaboration. An example would be, “What is the status of project Sphinx and risks raised,” where users can filter documents for a specific project or source type (such as email or meeting documents).

- Intelligent search for software developers – This allows developers to look for information about a specific release. Filters on the release version or document type (such as code, API reference, or issue) can help pinpoint relevant documents.

Solution overview

In the following sections, we demonstrate how to prepare a dataset to use as a knowledge base, and then query with metadata filtering. You can query using either the AWS Management Console or SDK.

Prepare a dataset for Knowledge Bases for Amazon Bedrock

For this post, we use a sample dataset about fictional video games to illustrate how to ingest and retrieve metadata using Knowledge Bases for Amazon Bedrock. If you want to follow along in your own AWS account, download the file.

If you want to add metadata to your documents in an existing knowledge base, create the metadata files with the expected filename and schema, then skip to the step to sync your data with the knowledge base to start the incremental ingestion.

In our sample dataset, each game’s document is a separate CSV file (for example, s3://$bucket_name/video_game/$game_id.csv) with the following columns:

title, description, genres, year, publisher, score

Each game’s metadata has the suffix .metadata.json (for example, s3://$bucket_name/video_game/$game_id.csv.metadata.json) with the following schema:
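One way to produce such files is to derive them from the CSV rows. The sketch below is an assumption about which columns are worth exposing as filterable attributes (`genres`, `year`, `publisher`, `score`); the function name and the sample row are invented for illustration, and the sample dataset's actual schema may differ.

```python
import csv
import io
import json


def row_to_metadata(row: dict) -> dict:
    """Map one CSV row to a metadata document (assumed attribute choice)."""
    return {
        "metadataAttributes": {
            "genres": row["genres"],
            # Stored as numbers so comparisons such as year >= 2023 are numeric.
            "year": int(row["year"]),
            "publisher": row["publisher"],
            "score": float(row["score"]),
        }
    }


# Hypothetical row that follows the column layout listed above.
sample_csv = io.StringIO(
    "title,description,genres,year,publisher,score\n"
    "Example Game,An invented entry,Strategy,2023,example_publisher,8.5\n"
)
row = next(csv.DictReader(sample_csv))
print(json.dumps(row_to_metadata(row), indent=2))
```

The resulting JSON would be written next to the source file with the .metadata.json suffix before syncing.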

Create a knowledge base for Amazon Bedrock

For instructions to create a new knowledge base, see Create a knowledge base. For this example, we use the following settings:

- On the Set up data source page, under Chunking strategy, select No chunking, because you’ve already preprocessed the documents in the previous step.

- In the Embeddings model section, choose Titan G1 Embeddings – Text.

- In the Vector database section, choose Quick create a new vector store. The metadata filtering feature is available for all supported vector stores.

Synchronize the dataset with the knowledge base

After you create the knowledge base, and your data files and metadata files are in an Amazon Simple Storage Service (Amazon S3) bucket, you can start the incremental ingestion. For instructions, see Sync to ingest your data sources into the knowledge base.
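If you prefer to start the sync programmatically, a sketch along the following lines may help. It assumes a boto3 `bedrock-agent` client and uses its `start_ingestion_job` and `get_ingestion_job` calls; the function name, the polling interval, and the placeholder IDs are hypothetical.

```python
import time


def sync_data_source(bedrock_agent, knowledge_base_id, data_source_id, poll_seconds=10):
    """Start an ingestion job and poll until it leaves the running states."""
    job = bedrock_agent.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]
    while job["status"] in ("STARTING", "IN_PROGRESS"):
        time.sleep(poll_seconds)
        job = bedrock_agent.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job


# Usage (requires boto3 and AWS credentials; the IDs are placeholders):
# import boto3
# job = sync_data_source(boto3.client("bedrock-agent"), "KB_ID", "DATA_SOURCE_ID")
# print(job["status"])
```

Passing the client in as an argument keeps the helper easy to exercise with a stub before running it against a real account.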

Query with metadata filtering on the Amazon Bedrock console

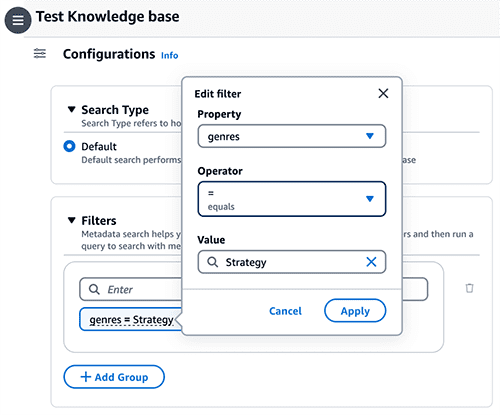

To use the metadata filtering options on the Amazon Bedrock console, complete the following steps:

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

- Choose the knowledge base you created.

- Choose Test knowledge base.

- Choose the Configurations icon, then expand Filters.

- Enter a condition using the format: key = value (for example, genres = Strategy) and press Enter.

- To change the key, value, or operator, choose the condition.

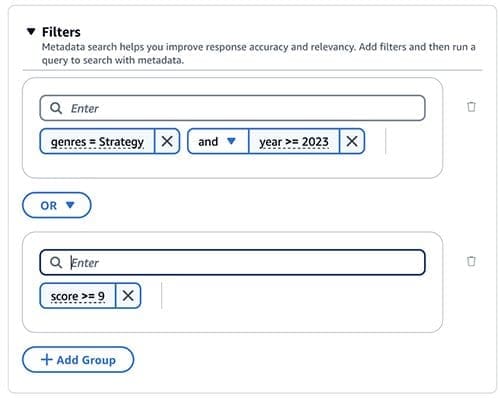

- Continue with the remaining conditions (for example, (genres = Strategy AND year >= 2023) OR (rating >= 9))

- When finished, enter your query in the message box, then choose Run.

For this post, we enter the query “A strategy game with cool graphic released after 2023.”

Query with metadata filtering using the SDK

To use the SDK, first create the client for the Agents for Amazon Bedrock runtime:
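With the AWS SDK for Python (Boto3), client creation might look like the following sketch. The service name `bedrock-agent-runtime` is the Boto3 identifier for this runtime; the wrapper function and its default Region (US East, N. Virginia) are illustrative choices.

```python
def create_runtime_client(region_name="us-east-1"):
    """Create the Agents for Amazon Bedrock runtime client."""
    # Imported lazily so this module still loads where boto3 is not installed.
    import boto3

    return boto3.client("bedrock-agent-runtime", region_name=region_name)


# Usage (requires boto3 and AWS credentials):
# bedrock_agent_runtime = create_runtime_client()
```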

Then construct the filter (the following are some examples):
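The sketches below express filters as plain Python dictionaries in the shape the retrieval filter accepts: an operator name mapped to a `key` and `value`, with `andAll` and `orAll` grouping two or more conditions. The keys mirror the console examples earlier in this post and must match attribute names that actually exist in your metadata files.

```python
# A single condition: genres = Strategy
single_filter = {"equals": {"key": "genres", "value": "Strategy"}}

# Two conditions joined with AND: genres = Strategy AND year >= 2023
and_filter = {
    "andAll": [
        {"equals": {"key": "genres", "value": "Strategy"}},
        {"greaterThanOrEquals": {"key": "year", "value": 2023}},
    ]
}

# Nested groups: (genres = Strategy AND year >= 2023) OR (rating >= 9)
nested_filter = {
    "orAll": [
        and_filter,
        {"greaterThanOrEquals": {"key": "rating", "value": 9}},
    ]
}
```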

Pass the filter to retrievalConfiguration of the Retrieve API or RetrieveAndGenerate API:
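As a sketch of both calls with Boto3, the filter goes under `vectorSearchConfiguration` inside `retrievalConfiguration`. The wrapper function names, the `top_k` default, and the placeholder IDs in the usage comment are assumptions for illustration; `client` is expected to be a `bedrock-agent-runtime` client.

```python
def retrieve_with_filter(client, knowledge_base_id, query, metadata_filter, top_k=5):
    """Call the Retrieve API with a metadata filter and return the results list."""
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={
            "vectorSearchConfiguration": {
                "numberOfResults": top_k,
                "filter": metadata_filter,
            }
        },
    )
    return response["retrievalResults"]


def generate_with_filter(client, knowledge_base_id, model_arn, query, metadata_filter):
    """Call the RetrieveAndGenerate API with a metadata filter and return the answer text."""
    response = client.retrieve_and_generate(
        input={"text": query},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
                "retrievalConfiguration": {
                    "vectorSearchConfiguration": {"filter": metadata_filter}
                },
            },
        },
    )
    return response["output"]["text"]


# Usage (requires boto3, AWS credentials, and real IDs in place of the placeholders):
# import boto3
# client = boto3.client("bedrock-agent-runtime")
# strategy = {"equals": {"key": "genres", "value": "Strategy"}}
# for result in retrieve_with_filter(client, "KB_ID", "A strategy game", strategy):
#     print(result["content"]["text"])
```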

The following table lists a few responses with different metadata filtering conditions.

| Query | Metadata Filtering | Retrieved Documents | Observations |
| --- | --- | --- | --- |
| “A strategy game with cool graphic released after 2023” | Off | Viking Saga: The Sea Raider (year: 2023, genres: Strategy); Medieval Castle: Siege and Conquest (year: 2022, genres: Strategy); Cybernetic Revolution: Rise of the Machines (year: 2022, genres: Strategy) | 2/5 games meet the condition (genres = Strategy and year >= 2023) |
| | On | Viking Saga: The Sea Raider (year: 2023, genres: Strategy); Fantasy Kingdoms: Chronicles of Eldoria (year: 2023, genres: Strategy) | 2/2 games meet the condition (genres = Strategy and year >= 2023) |

In addition to custom metadata, you can also filter using S3 prefixes (which is a built-in metadata, so you don’t need to provide any metadata files). For example, if you organize the game documents into prefixes by publisher (for example, s3://$bucket_name/video_game/$publisher/$game_id.csv), you can filter with the specific publisher (for example, neo_tokyo_games) using the following syntax:
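A sketch of such a prefix filter follows. It assumes the built-in source URI attribute is exposed under the key `x-amz-bedrock-kb-source-uri` and matched with the `startsWith` operator; the helper name and the bucket name are hypothetical.

```python
def s3_prefix_filter(bucket_name: str, publisher: str) -> dict:
    """Build a filter that keeps only documents stored under one publisher prefix."""
    return {
        "startsWith": {
            "key": "x-amz-bedrock-kb-source-uri",
            "value": f"s3://{bucket_name}/video_game/{publisher}/",
        }
    }


# "example-bucket" stands in for your own bucket name.
publisher_filter = s3_prefix_filter("example-bucket", "neo_tokyo_games")
print(publisher_filter)
```

The trailing slash in the prefix is deliberate in this sketch, so that a publisher named `neo_tokyo_games` does not also match a prefix such as `neo_tokyo_games_2`.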

Clean up

To clean up your resources, complete the following steps:

- Delete the knowledge base:

  - On the Amazon Bedrock console, choose Knowledge bases under Orchestration in the navigation pane.

  - Choose the knowledge base you created.

  - Take note of the AWS Identity and Access Management (IAM) service role name in the Knowledge base overview section.

  - In the Vector database section, take note of the collection ARN.

  - Choose Delete, then enter delete to confirm.

- Delete the vector database:

  - On the Amazon OpenSearch Service console, choose Collections under Serverless in the navigation pane.

  - In the search bar, enter the collection ARN you noted earlier.

  - Select the collection and choose Delete.

  - Enter confirm in the confirmation prompt, then choose Delete.

- Delete the IAM service role:

  - On the IAM console, choose Roles in the navigation pane.

  - Search for the role name you noted earlier.

  - Select the role and choose Delete.

  - Enter the role name in the confirmation prompt and delete the role.

- Delete the sample dataset:

  - On the Amazon S3 console, navigate to the S3 bucket you used.

  - Select the prefix and files, then choose Delete.

  - Enter permanently delete in the confirmation prompt to delete.

Conclusion

In this post, we covered the metadata filtering feature in Knowledge Bases for Amazon Bedrock. You learned how to add custom metadata to documents and use them as filters while retrieving and querying the documents using the Amazon Bedrock console and the SDK. This helps improve context accuracy, making query responses even more relevant while achieving a reduction in cost of querying the vector database.

For additional resources, refer to the following:

- User guide: Knowledge bases for Amazon Bedrock

- YouTube video: Use RAG to improve responses in generative AI application

- GitHub repo code samples: Amazon Bedrock Knowledge Base – Samples for building RAG workflows

About the Authors

Corvus Lee is a Senior GenAI Labs Solutions Architect based in London. He is passionate about designing and developing prototypes that use generative AI to solve customer problems. He also keeps up with the latest developments in generative AI and retrieval techniques by applying them to real-world scenarios.

Ahmed Ewis is a Senior Solutions Architect at AWS GenAI Labs, helping customers build generative AI prototypes to solve business problems. When not collaborating with customers, he enjoys playing with his kids and cooking.

Chris Pecora is a Generative AI Data Scientist at Amazon Web Services. He is passionate about building innovative products and solutions while also focusing on customer-obsessed science. When not running experiments and keeping up with the latest developments in GenAI, he loves spending time with his kids.
