Intelligent document processing with AWS AI services in the insurance industry: Part 1

The goal of intelligent document processing (IDP) is to help your organization make faster and more accurate decisions by applying AI to process your paperwork. This two-part series highlights the AWS AI technologies that insurance companies can use to speed up their business processes. These AI technologies can be used across insurance use cases such as claims, underwriting, customer correspondence, contracts, and dispute resolution. This series focuses on a claims processing use case in the insurance industry; for more information about the fundamental concepts of the AWS IDP solution, refer to the following two-part series.

Claims processing consists of multiple checkpoints in a workflow that reviews a claim, verifies its authenticity, and determines the correct financial responsibility before the claim is adjudicated. If a claim passes all these checkpoints without issues, the insurance company approves it and processes any payment. Otherwise, the company may require additional supporting information to adjudicate the claim. This process is often manual, which makes it expensive, error-prone, and time-consuming. Insurance customers can use AWS AI services to automate the document processing pipeline for claims processing.

In this two-part series, we take you through how you can automate and intelligently process documents at scale using AWS AI services for an insurance claims processing use case.

Intelligent document processing with AWS AI and Analytics services in the insurance industry

Solution overview

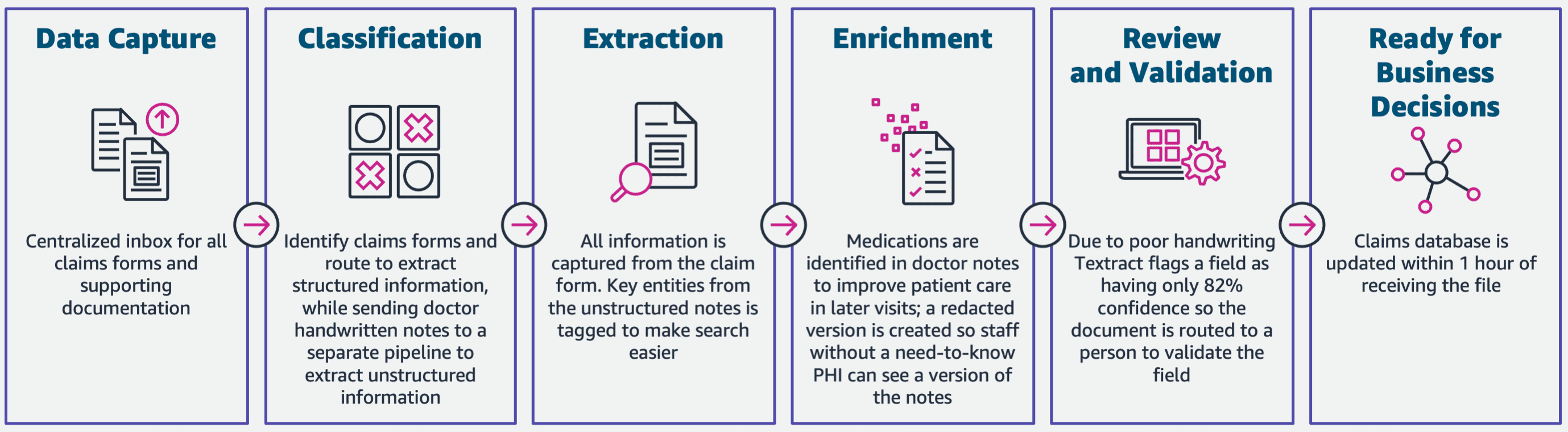

The following diagram represents each stage that we typically see in an IDP pipeline. We walk through each of these stages and how they connect to the steps involved in a claims application process, starting from when an application is submitted, to investigating and closing the application. In this post, we cover the technical details of the data capture, classification, and extraction stages. In Part 2, we expand the document extraction stage and continue to document enrichment, review and verification, and extend the solution to provide analytics and visualizations for a claims fraud use case.

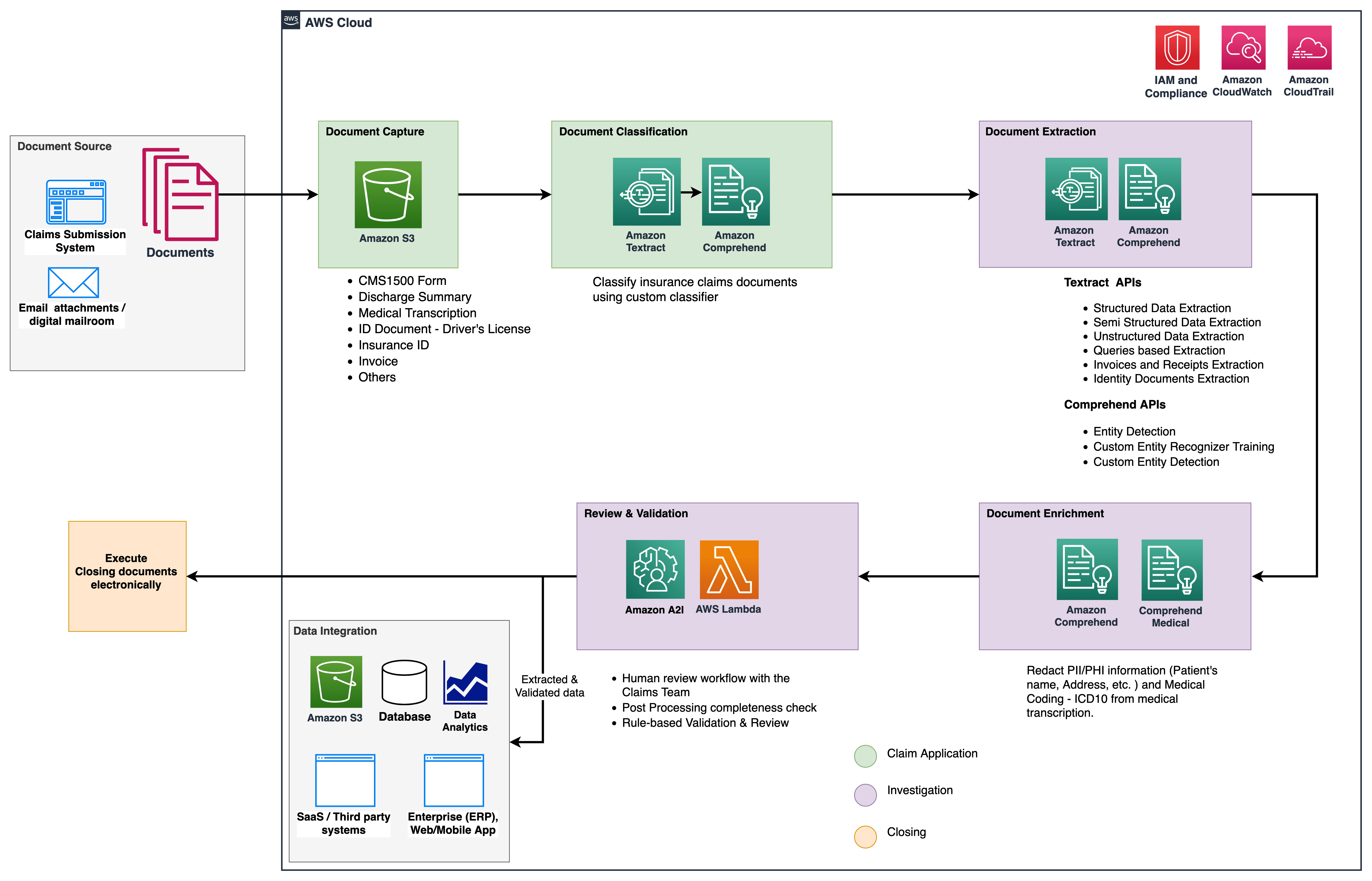

The following architecture diagram shows the different AWS services used during the phases of the IDP pipeline according to different stages of a claims processing application.

The solution uses the following key services:

- Amazon Textract is a machine learning (ML) service that automatically extracts text, handwriting, and data from scanned documents. It goes beyond simple optical character recognition (OCR) to identify, understand, and extract data from forms and tables. Amazon Textract uses ML to read and process any type of document, accurately extracting text, handwriting, tables, and other data with no manual effort.

- Amazon Comprehend is a natural language processing (NLP) service that uses ML to extract insights from text. Amazon Comprehend can detect entities such as person, location, date, quantity, and more. It can also detect the dominant language, detect personally identifiable information (PII), and classify documents into their relevant classes.

- Amazon Augmented AI (Amazon A2I) is an ML service that makes it easy to build the workflows required for human review. Amazon A2I brings human review to all developers, removing the undifferentiated heavy lifting associated with building human review systems or managing large numbers of human reviewers. Amazon A2I integrates with both Amazon Textract and Amazon Comprehend, so you can introduce human review or validation within the IDP workflow.

Prerequisites

In the following sections, we walk through the different services relating to the first three phases of the architecture, i.e., the data capture, classification and extraction phases.

Refer to our GitHub repository for full code samples along with the document samples in the claims processing packet.

Data capture phase

Claims and their supporting documents can arrive through various channels, such as fax, email, an admin portal, and more. You can store these documents in highly scalable and durable storage such as Amazon Simple Storage Service (Amazon S3). The documents can be of various file types, such as PDF, JPEG, PNG, and TIFF, and they can vary in format and layout.

Classification phase

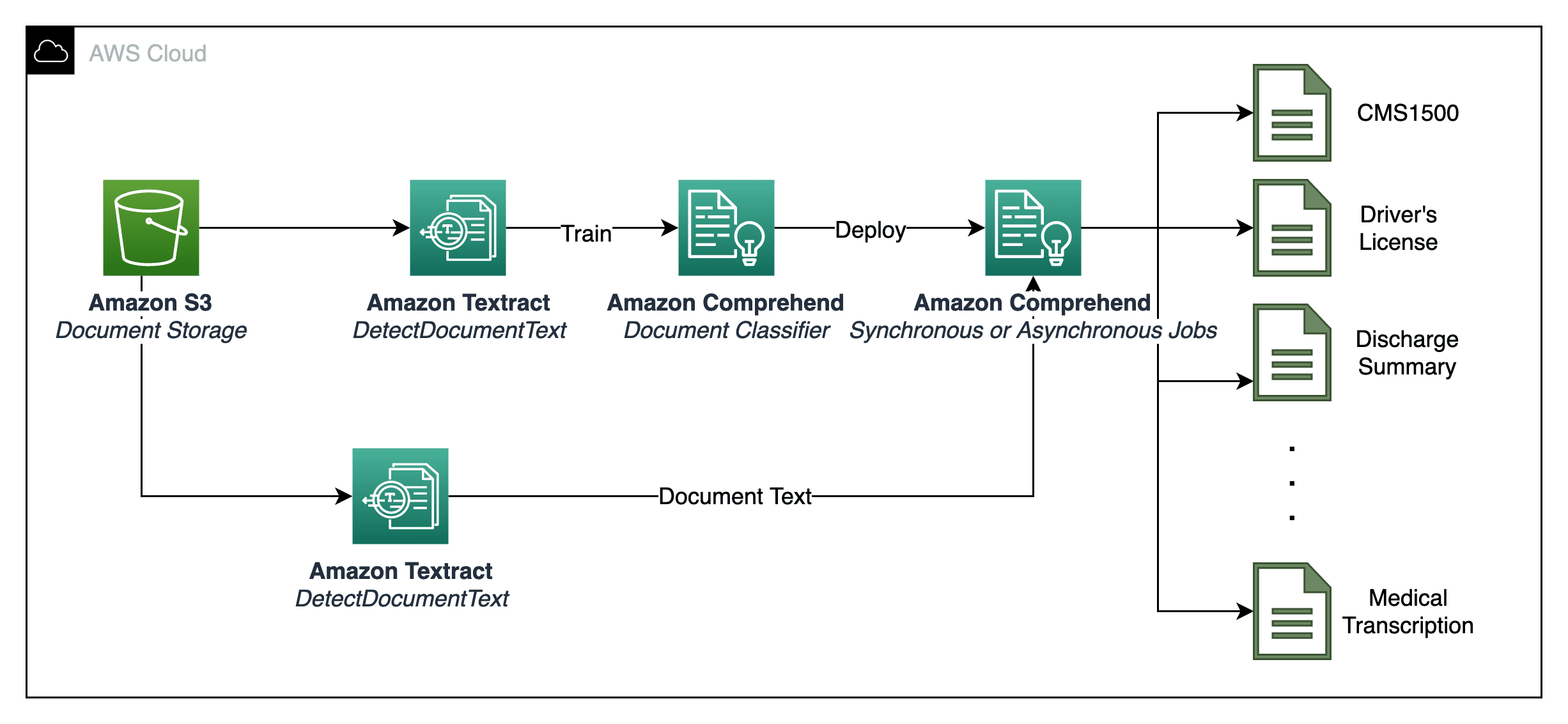

In the document classification stage, we combine Amazon Textract with Amazon Comprehend: Amazon Textract extracts the text of each document stored during the data capture stage, and Amazon Comprehend uses that text as context to classify the document. We use custom classification in Amazon Comprehend to organize documents into the classes that we defined for the claims processing packet. Custom classification is also helpful for automating the document verification process and identifying any documents missing from the packet. There are two steps in custom classification, as shown in the architecture diagram:

- Extract text using Amazon Textract from all the documents in the data storage to prepare training data for the custom classifier.

- Train an Amazon Comprehend custom classification model (also called a document classifier) to recognize the classes of interest based on the text content.
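The first step can be sketched as follows. This is a minimal example, not the code from the GitHub repository: it assumes boto3 and the synchronous Amazon Textract `DetectDocumentText` operation, and the function names, bucket, and object key are hypothetical. It joins the detected lines into a single string and writes one labeled CSV row, the two-column (label, text) layout that Amazon Comprehend accepts for multi-class training data.

```python
import csv
import io


def detect_blocks(bucket, key):
    """Call Textract DetectDocumentText on one S3 object and return its blocks."""
    import boto3  # imported here so the helpers below work without the AWS SDK

    textract = boto3.client("textract")
    response = textract.detect_document_text(
        Document={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    return response["Blocks"]


def blocks_to_text(blocks):
    """Join the text of every LINE block, in the order Textract returned them."""
    return " ".join(b["Text"] for b in blocks if b["BlockType"] == "LINE")


def training_row(label, blocks):
    """Format one document as a 'label,text' CSV row for classifier training."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow([label, blocks_to_text(blocks)])
    return buffer.getvalue()
```

Filtering on `LINE` blocks avoids counting each word twice, because Textract also returns every word as its own `WORD` block.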

After the Amazon Comprehend custom classification model is trained, we can use the real-time endpoint to classify documents. Amazon Comprehend returns all classes of documents with a confidence score linked to each class in an array of key-value pairs (Doc_name – Confidence_score). We recommend going through the detailed document classification sample code on GitHub.
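As a rough illustration of the real-time classification call, the sketch below assumes boto3 and the Amazon Comprehend `ClassifyDocument` operation against a custom endpoint. The endpoint ARN, the class names, and the helper functions are hypothetical; the detailed sample on GitHub remains the reference.

```python
def classify_text(text, endpoint_arn):
    """Send document text to a Comprehend custom classification endpoint."""
    import boto3  # imported here so the helpers below work without the AWS SDK

    comprehend = boto3.client("comprehend")
    return comprehend.classify_document(Text=text, EndpointArn=endpoint_arn)


def best_class(response):
    """Return the (name, score) pair with the highest confidence score."""
    top = max(response["Classes"], key=lambda c: c["Score"])
    return top["Name"], top["Score"]


def missing_documents(required_classes, predicted_classes):
    """List the required document classes that no submitted document matched."""
    return sorted(set(required_classes) - set(predicted_classes))
```

Comparing the predicted classes against the list of classes a complete packet should contain is one simple way to flag missing documents.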

Extraction phase

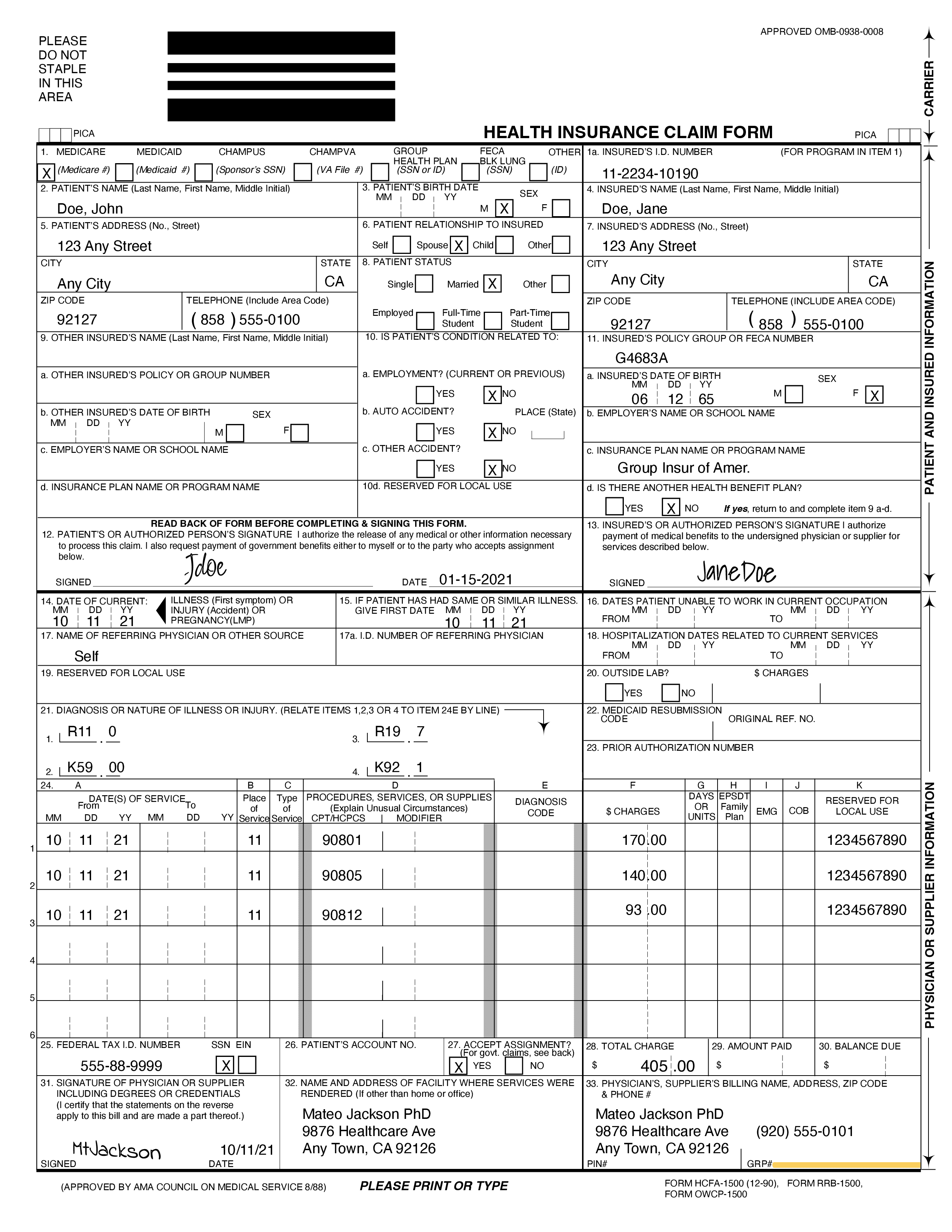

In the extraction phase, we extract data from documents using Amazon Textract and Amazon Comprehend. For this post, we use the following sample documents in the claims processing packet: a Centers for Medicare & Medicaid Services (CMS)-1500 claim form, a driver’s license and insurance ID, and an invoice.

Extract data from a CMS-1500 claim form

The CMS-1500 form is the standard claim form used by a non-institutional provider or supplier to bill Medicare carriers.

It’s important to process the CMS-1500 form accurately; otherwise, errors can slow down the claims process or delay payment by the carrier. With the Amazon Textract AnalyzeDocument API, we can speed up extraction and improve its accuracy, pulling text from the document so that we can understand the details within the claim form. The following is a sample document of a CMS-1500 claim form.

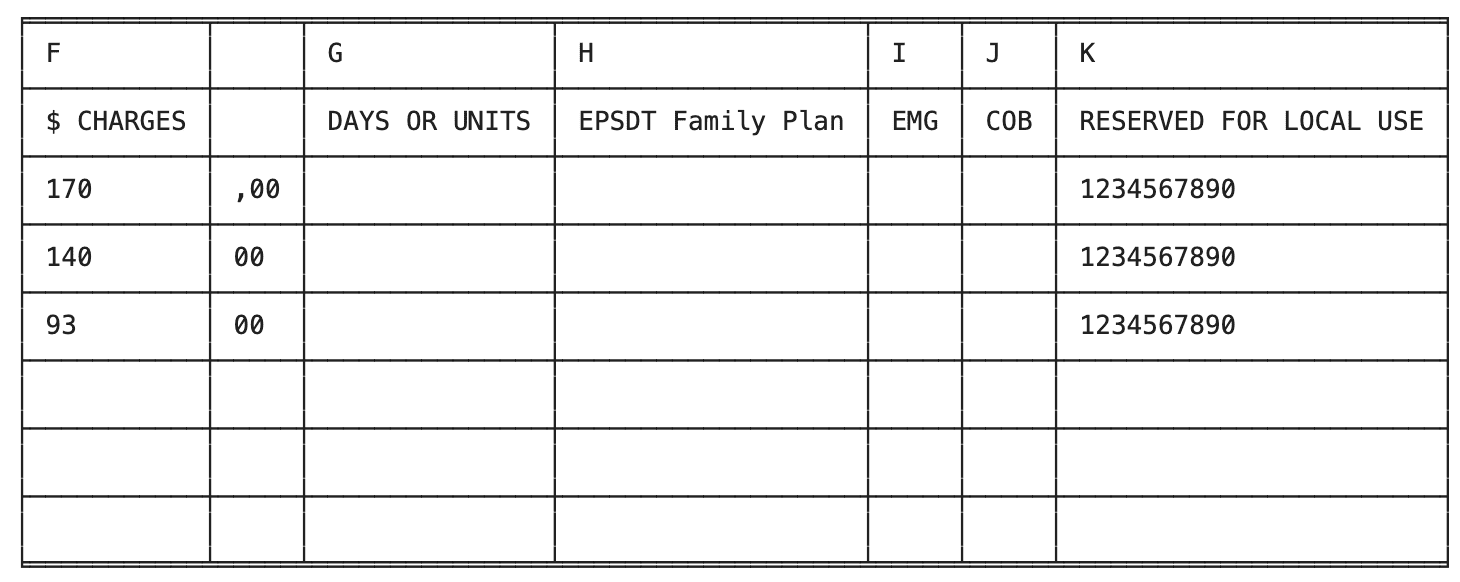

We now use the AnalyzeDocument API to extract two FeatureTypes, FORMS and TABLES, from the document:
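A minimal sketch of such a call, assuming boto3 and a claim form already stored in Amazon S3. The bucket, object key, and function names are hypothetical. The second helper summarizes the returned block types, which is a quick way to confirm that both features produced output.

```python
from collections import Counter


def analyze_claim_form(bucket, key):
    """Run Textract AnalyzeDocument with the FORMS and TABLES feature types."""
    import boto3  # imported here so the helper below works without the AWS SDK

    textract = boto3.client("textract")
    return textract.analyze_document(
        Document={"S3Object": {"Bucket": bucket, "Name": key}},
        FeatureTypes=["FORMS", "TABLES"],
    )


def block_type_counts(response):
    """Count the blocks in an AnalyzeDocument response by BlockType."""
    return Counter(block["BlockType"] for block in response["Blocks"])
```

In the summary, `KEY_VALUE_SET` blocks come from the FORMS feature, and `TABLE` and `CELL` blocks come from the TABLES feature.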

The following results have been shortened for better readability. For more detailed information, see our GitHub repo.

The FORMS extraction is identified as key-value pairs.
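To make the key-value structure concrete, the following sketch pairs keys with values from the `Blocks` list of an AnalyzeDocument response. In that response, each key is a `KEY_VALUE_SET` block that points to its value block through a `VALUE` relationship, and both point to their words through `CHILD` relationships. The helper names are hypothetical.

```python
def block_text(block, blocks_by_id):
    """Join the words (or checkbox status) that a block points to via CHILD."""
    parts = []
    for relationship in block.get("Relationships", []):
        if relationship["Type"] != "CHILD":
            continue
        for child_id in relationship["Ids"]:
            child = blocks_by_id[child_id]
            if child["BlockType"] == "WORD":
                parts.append(child["Text"])
            elif child["BlockType"] == "SELECTION_ELEMENT":
                parts.append(child["SelectionStatus"])
    return " ".join(parts)


def extract_key_values(blocks):
    """Map each form key's text to the text of the value it is linked to."""
    blocks_by_id = {block["Id"]: block for block in blocks}
    pairs = {}
    for block in blocks:
        is_key = (
            block["BlockType"] == "KEY_VALUE_SET"
            and "KEY" in block.get("EntityTypes", [])
        )
        if not is_key:
            continue
        values = []
        for relationship in block.get("Relationships", []):
            if relationship["Type"] == "VALUE":
                for value_id in relationship["Ids"]:
                    values.append(block_text(blocks_by_id[value_id], blocks_by_id))
        pairs[block_text(block, blocks_by_id)] = " ".join(v for v in values if v)
    return pairs
```

A key whose field was left blank on the form maps to an empty string. If the same key text appears twice on a page, this sketch keeps only the last occurrence.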

The TABLES extraction contains cells, merged cells, and column headers within a detected table in the claim form.
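The table structure can be walked in a similar way. In this sketch, each `TABLE` block lists its `CELL` blocks through a `CHILD` relationship, and each cell carries a 1-based `RowIndex` and `ColumnIndex`. The helper names are hypothetical, and merged cells are ignored to keep the example short.

```python
def cell_text(cell, blocks_by_id):
    """Join the WORD children of a table cell into a single string."""
    words = []
    for relationship in cell.get("Relationships", []):
        if relationship["Type"] == "CHILD":
            for child_id in relationship["Ids"]:
                child = blocks_by_id[child_id]
                if child["BlockType"] == "WORD":
                    words.append(child["Text"])
    return " ".join(words)


def tables_as_rows(blocks):
    """Return every detected table as a list of rows of cell text."""
    blocks_by_id = {block["Id"]: block for block in blocks}
    tables = []
    for block in blocks:
        if block["BlockType"] != "TABLE":
            continue
        cells = {}
        for relationship in block.get("Relationships", []):
            if relationship["Type"] != "CHILD":
                continue
            for cell_id in relationship["Ids"]:
                cell = blocks_by_id[cell_id]
                if cell["BlockType"] == "CELL":
                    position = (cell["RowIndex"], cell["ColumnIndex"])
                    cells[position] = cell_text(cell, blocks_by_id)
        if not cells:
            continue
        row_count = max(row for row, _ in cells)
        column_count = max(column for _, column in cells)
        tables.append([
            [cells.get((row, column), "") for column in range(1, column_count + 1)]
            for row in range(1, row_count + 1)
        ])
    return tables
```

Each table comes back as a rectangular grid, with an empty string wherever a cell has no text.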

Extract data from ID documents

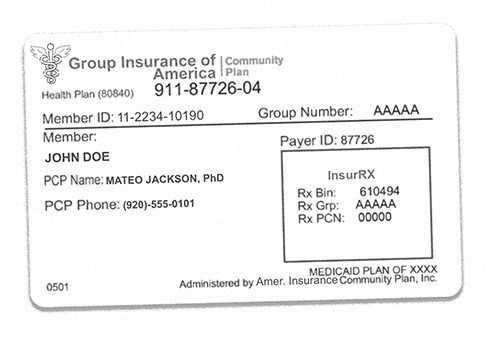

For identity documents like an insurance ID, which can have different layouts, we can use the Amazon Textract AnalyzeDocument API. We use the FeatureType FORMS as the configuration for the AnalyzeDocument API to extract the key-value pairs from the insurance ID (see the following sample):

Run the following code:
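A minimal sketch of this call, assuming boto3 and an insurance ID image read from a local file (the path and function names are hypothetical). Unlike the claim form, only the FORMS feature type is requested here, and the image is passed as raw bytes rather than as an S3 object.

```python
def forms_request(image_bytes):
    """Build AnalyzeDocument arguments that request only key-value pairs."""
    return {
        "Document": {"Bytes": image_bytes},
        "FeatureTypes": ["FORMS"],
    }


def analyze_insurance_id(path):
    """Read a local image and run Textract AnalyzeDocument with FORMS."""
    import boto3  # imported here so forms_request works without the AWS SDK

    with open(path, "rb") as image_file:
        image_bytes = image_file.read()
    textract = boto3.client("textract")
    return textract.analyze_document(**forms_request(image_bytes))
```

The key-value pairs are returned as `KEY_VALUE_SET` blocks in the response's `Blocks` list.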

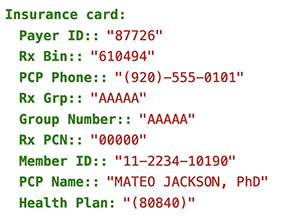

We get the key-value pairs in the result array, as shown in the following screenshot.

For ID documents like a US driver’s license or US passport, Amazon Textract provides specialized support to automatically extract key terms without the need for templates or formats, unlike what we saw earlier for the insurance ID example. With the AnalyzeID API, businesses can quickly and accurately extract information from ID documents that have different templates or formats. The AnalyzeID API returns two categories of data types:

- Key-value pairs available on the ID such as date of birth, date of issue, ID number, class, and restrictions

- Implied fields on the document that may not have explicit keys associated with them, such as name, address, and issuer

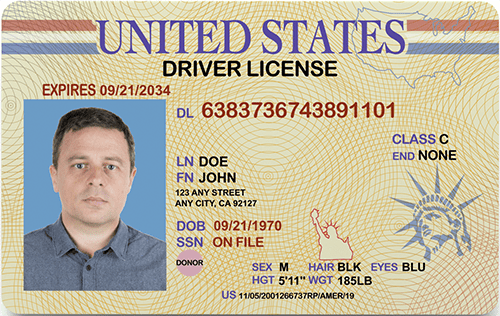

We use the following sample US driver’s license from our claims processing packet.

Run the following code:
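A minimal sketch of an AnalyzeID call, assuming boto3 and a driver's license image stored in Amazon S3. The bucket, object key, and helper names are hypothetical. The helpers flatten the first identity document into a dictionary and then separate the fields that actually carry a value.

```python
def analyze_license(bucket, key):
    """Run Textract AnalyzeID on a single ID image stored in S3."""
    import boto3  # imported here so the helpers below work without the AWS SDK

    textract = boto3.client("textract")
    return textract.analyze_id(
        DocumentPages=[{"S3Object": {"Bucket": bucket, "Name": key}}]
    )


def id_fields(response):
    """Map each normalized field type of the first ID to its detected text."""
    document = response["IdentityDocuments"][0]
    return {
        field["Type"]["Text"]: field["ValueDetection"]["Text"]
        for field in document["IdentityDocumentFields"]
    }


def populated_fields(fields):
    """Keep only the fields for which AnalyzeID found a non-empty value."""
    return {name: value for name, value in fields.items() if value}
```

Filtering out empty values is useful because the response can include field types that aren't printed on a particular ID.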

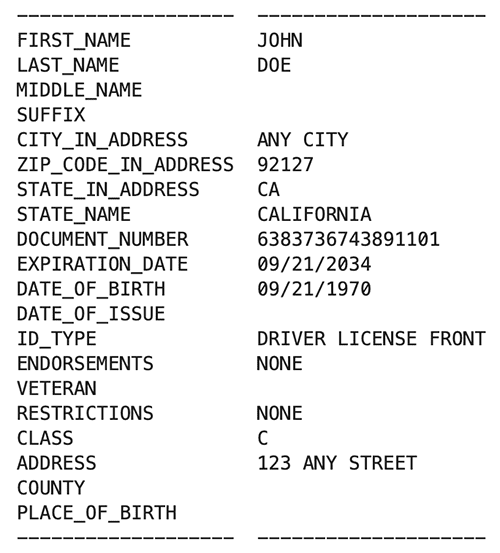

The following screenshot shows our result.

From the results screenshot, you can observe that certain keys are present that don’t appear on the driver’s license itself. For example, Veteran is not a key found on this license; however, it’s a pre-populated key that AnalyzeID supports, because licenses differ between states.

Extract data from invoices and receipts

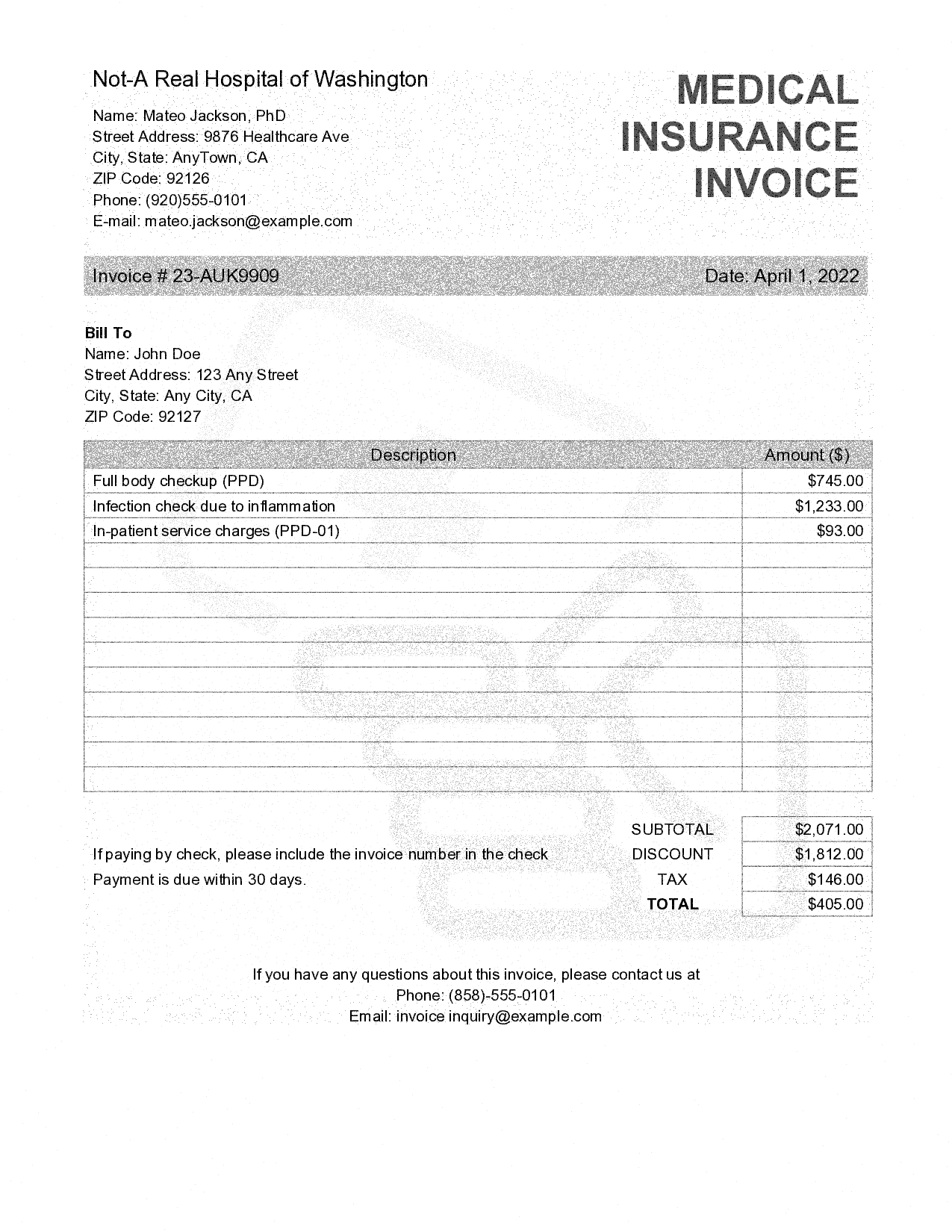

Similar to the AnalyzeID API, the AnalyzeExpense API provides specialized support for invoices and receipts to extract relevant information such as vendor name, subtotal and total amounts, and more from any format of invoice documents. You don’t need any template or configuration for extraction. Amazon Textract uses ML to understand the context of ambiguous invoices as well as receipts.

The following is a sample medical insurance invoice.

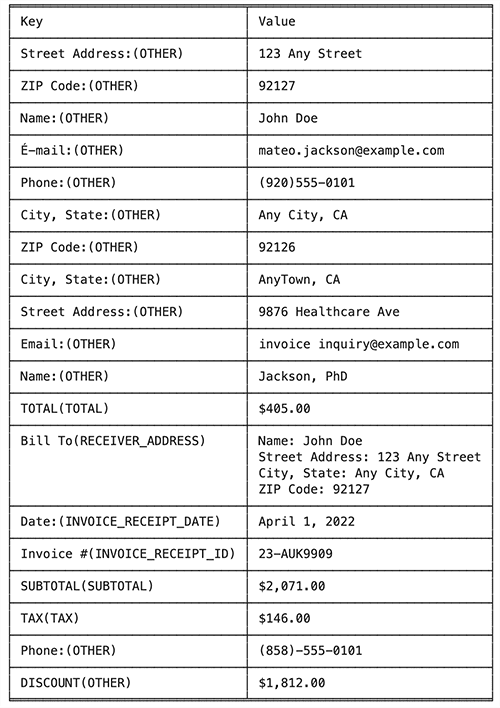

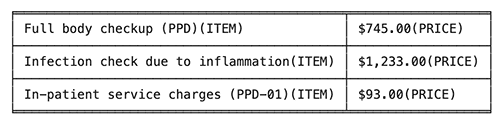

We use the AnalyzeExpense API to see a list of standardized fields. Fields that aren’t recognized as standard fields are categorized as OTHER:
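A minimal sketch of an AnalyzeExpense call, assuming boto3 and an invoice stored in Amazon S3. The bucket, object key, and helper names are hypothetical. Summary fields are returned as a list of tuples rather than a dictionary, because several fields can share the type `OTHER`.

```python
def analyze_invoice(bucket, key):
    """Run Textract AnalyzeExpense on an invoice or receipt stored in S3."""
    import boto3  # imported here so the helpers below work without the AWS SDK

    textract = boto3.client("textract")
    return textract.analyze_expense(
        Document={"S3Object": {"Bucket": bucket, "Name": key}}
    )


def summary_fields(response):
    """List (type, label, value) for every summary field in the response."""
    rows = []
    for document in response["ExpenseDocuments"]:
        for field in document.get("SummaryFields", []):
            rows.append((
                field["Type"]["Text"],
                field.get("LabelDetection", {}).get("Text", ""),
                field.get("ValueDetection", {}).get("Text", ""),
            ))
    return rows


def line_items(response):
    """Return each line item as a dict of field type to detected text."""
    items = []
    for document in response["ExpenseDocuments"]:
        for group in document.get("LineItemGroups", []):
            for item in group.get("LineItems", []):
                items.append({
                    field["Type"]["Text"]: field.get("ValueDetection", {}).get("Text", "")
                    for field in item.get("LineItemExpenseFields", [])
                })
    return items
```

The label is the text printed on the document next to the value, which helps identify fields typed as `OTHER`.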

We get the following list of fields as key-value pairs (see screenshot on the left) and the entire row of individual line items purchased (see screenshot on the right) in the results.

Conclusion

In this post, we showcased the common challenges in claims processing, and how we can use AWS AI services to automate an intelligent document processing pipeline to automatically adjudicate a claim. We saw how to classify documents into various document classes using an Amazon Comprehend custom classifier, and how to use Amazon Textract to extract data from unstructured, semi-structured, structured, and specialized document types.

In Part 2, we expand on the extraction phase with Amazon Textract. We also use Amazon Comprehend pre-defined entities and custom entities to enrich the data, and show how to extend the IDP pipeline to integrate with analytics and visualization services for further processing.

We recommend reviewing the security sections of the Amazon Textract, Amazon Comprehend, and Amazon A2I documentation and following the guidelines provided. To learn more about the pricing of the solution, review the pricing details of Amazon Textract, Amazon Comprehend, and Amazon A2I.

About the Authors

Chinmayee Rane is an AI/ML Specialist Solutions Architect at Amazon Web Services. She is passionate about applied mathematics and machine learning. She focuses on designing intelligent document processing solutions for AWS customers. Outside of work, she enjoys salsa and bachata dancing.

Sonali Sahu is leading the Intelligent Document Processing AI/ML Solutions Architect team at Amazon Web Services. She is a passionate technophile and enjoys working with customers to solve complex problems using innovation. Her core area of focus is artificial intelligence and machine learning for intelligent document processing.

Tim Condello is a Senior AI/ML Specialist Solutions Architect at Amazon Web Services. His focus is natural language processing and computer vision. Tim enjoys taking customer ideas and turning them into scalable solutions.
