Cost-effective data preparation for machine learning using SageMaker Data Wrangler

Amazon SageMaker Data Wrangler is a capability of Amazon SageMaker that makes it faster for data scientists and engineers to prepare high-quality features for machine learning (ML) applications via a visual interface. Data Wrangler reduces the time it takes to aggregate and prepare data for ML from weeks to minutes. With Data Wrangler, you can simplify the process of data preparation and feature engineering, and complete each step of the data preparation workflow, including data selection, cleansing, exploration, and visualization from a single visual interface.

In this post, we dive into different aspects of data preparation and the associated features of Data Wrangler to understand the cost components of data preparation and how Data Wrangler offers a cost-effective approach to data preparation. We also cover cost optimization best practices to further reduce data preparation costs in Data Wrangler.

Overview of exploratory data analysis (EDA) and data preparation in Data Wrangler

To understand the cost-effectiveness of Data Wrangler, it’s important to look at the different aspects of the EDA and data preparation phase of ML. This post doesn’t compare different platforms or services for EDA; instead, it walks through the steps in EDA, their cost considerations, and how Data Wrangler facilitates EDA in a cost-effective way.

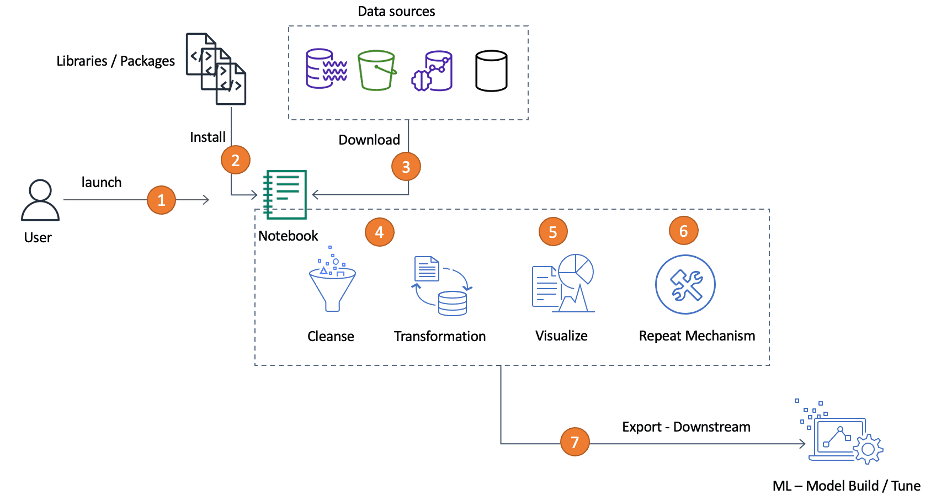

The typical EDA experience of a data scientist consists of the following steps:

- Launch a Jupyter notebook instance to carry out EDA.

- Import required packages for data analysis and visualization.

- Import the data from multiple sources.

- Carry out transformations such as handling missing values and outliers, one-hot encoding, balancing data, and more to clean the data and make it ready for modeling.

- Visualize the data.

- Create mechanisms to repeat the steps.

- Export processed data for downstream analytics or ML.

These steps are complex and differ in their compute and memory requirements, so you need the flexibility to run each step on appropriately sized resources. You also need an integrated system that can import data from multiple sources, along with a mechanism to repeat or reuse the EDA steps you already built on larger, similar, or different datasets as required by your downstream ML pipeline.

EDA cost considerations

The following are some of the cost considerations for EDA:

Compute

- Some EDA environments require data in a certain format. In such cases, you need to process the data to the format accepted by the EDA environment. For example, if the environment accepts only CSV format but you have data in Parquet or another format, you have to convert your dataset to CSV format. Reformatting data requires compute.

- Not all environments have the flexibility to change compute or memory configuration with the click of a button. Without that flexibility, you may have to size the environment for the most compute- and memory-intensive transformation you perform.

Storage and data transfer

- Data in multiple sources has to be collected. If only selected sources are supported by the EDA environment, you may have to move your data from different sources to that single supported source, which increases both storage and data transfer cost.

Labor cost and expertise

- Managing the EDA platform and the underlying compute infrastructure involves expertise, effort, and cost. When you manage the infrastructure, you carry the operational burden of managing operating systems and applications, such as provisioning, patching, and upgrading. You also need to identify data issues quickly: if you don’t validate the data before building your model, you waste resources as well as engineering time.

- Note that EDA requires data science expertise and experience working with data.

- Additionally, some EDA environments don’t offer a point-and-click interface and require you to write code to explore, visualize, and transform data, which involves labor cost.

Operations cost

- To move the data from the source through transformations and on to downstream ML pipelines, you may have to repeat the EDA steps from the beginning, starting with fetching the data, in each phase of EDA. This is time-consuming and carries a cumulative labor cost. If you can reuse the transformed data from the previous step, the cost doesn’t accumulate.

- Having an easy mechanism to repeat the same set of EDA steps on similar or incremental datasets saves time as well as cost from a people and compute resources perspective.
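As a minimal sketch of what such a repeat mechanism can look like outside of any particular tool, the following Python snippet defines an ordered list of steps once and applies it to two datasets. The helper names (`drop_rows_missing`, `add_absolute`, `run_steps`) and the sample data are hypothetical and purely illustrative; this is not how Data Wrangler implements its flows.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Step = Callable[[List[Row]], List[Row]]


def drop_rows_missing(column: str) -> Step:
    """Return a step that removes rows where `column` is None."""
    def step(rows: List[Row]) -> List[Row]:
        return [r for r in rows if r.get(column) is not None]
    return step


def add_absolute(column: str, new_column: str) -> Step:
    """Return a step that adds `new_column` holding abs(`column`)."""
    def step(rows: List[Row]) -> List[Row]:
        return [{**r, new_column: abs(r[column])} for r in rows]
    return step


def run_steps(rows: List[Row], steps: List[Step]) -> List[Row]:
    """Apply each step in order, feeding the output of one into the next."""
    for step in steps:
        rows = step(rows)
    return rows


# The recipe is defined once ...
RECIPE: List[Step] = [
    drop_rows_missing("amount"),
    add_absolute("amount", "amount_abs"),
]

# ... and reused on the original dataset and on an incremental one.
january = [{"amount": -5.0}, {"amount": None}, {"amount": 3.0}]
february = [{"amount": 7.5}, {"amount": -1.0}]

january_clean = run_steps(january, RECIPE)
february_clean = run_steps(february, RECIPE)
print(january_clean)
print(february_clean)
```

Keeping the steps as data (a list) rather than as inline notebook cells is what makes the same preparation cheap to rerun when new data arrives.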

Let’s see how Data Wrangler facilitates EDA or data preparation in a cost-effective manner with regard to these different areas.

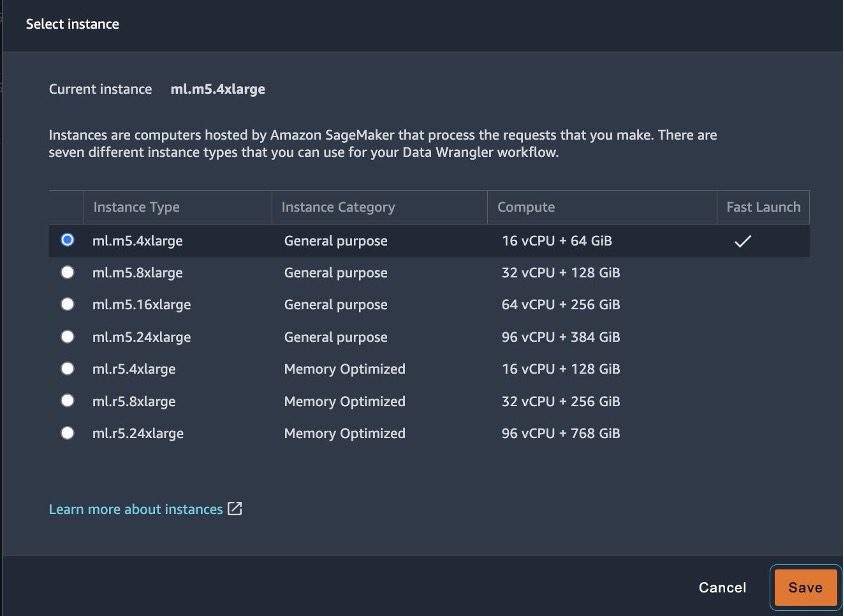

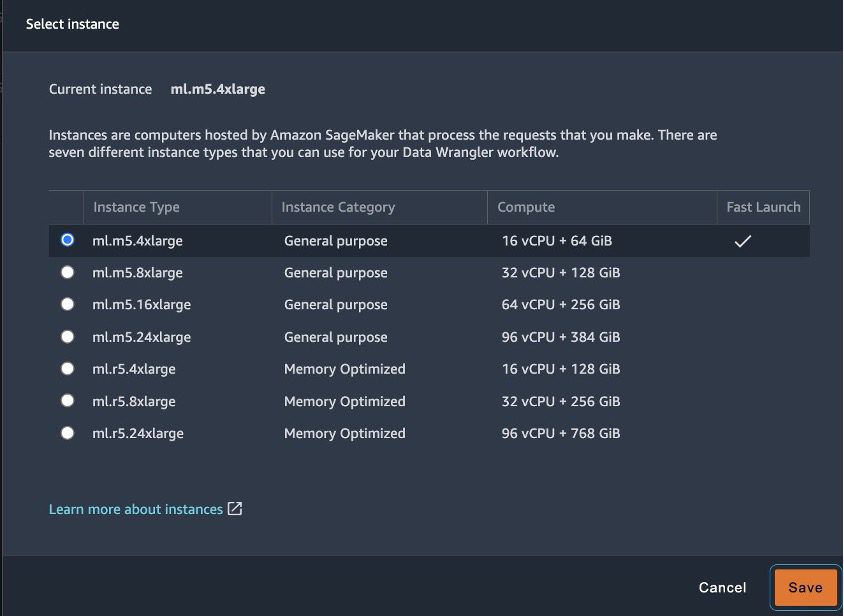

Compute

When you carry out EDA on a notebook, you may not have the flexibility to scale the compute or memory on demand, which may force you to run the transformation and visualizations in an oversized environment. If you have an undersized environment, you may run into out of memory issues. In Data Wrangler, you can choose a smaller instance type for certain transformations or analysis and then upscale the instance to a larger type and carry out complex transformations. When the complex transformation is complete, you can downscale the Data Wrangler instance to a smaller instance type. This gives you the flexibility to scale your compute based on the transformation requirements.

Data Wrangler supports a variety of instance types, and you can choose the right one for your workload, thereby eliminating the costs of oversized or undersized environments.

Storage and data transfer

In this section, we discuss some of the cost considerations for storage and data transfer.

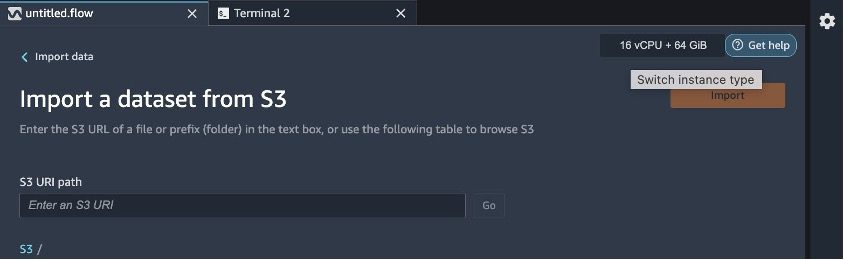

Import

Data for ML is often available from multiple sources and in different formats. With Data Wrangler, you can import data from the following data sources: Amazon Simple Storage Service (Amazon S3), Amazon Athena, Amazon Redshift, AWS Lake Formation, Amazon SageMaker Feature Store and Snowflake. Data can be in any of the following formats: CSV, Parquet, JSON, and Optimized Row Columnar (ORC), and more data formats will be added based on customer demand. Because the important data sources are already supported in Data Wrangler, data can be directly imported from the respective sources, and you only pay for the GB-month of provisioned storage. For more information, refer to Amazon SageMaker Pricing.

All the iterative data exploration, data transformation, and visualization can be carried out within Data Wrangler itself. This eliminates further data movement compared to other environments where you may have to move the data to different locations for ingestion, transformation, and processing. From a cost perspective, this eliminates duplicate data storage and reduces data movement.

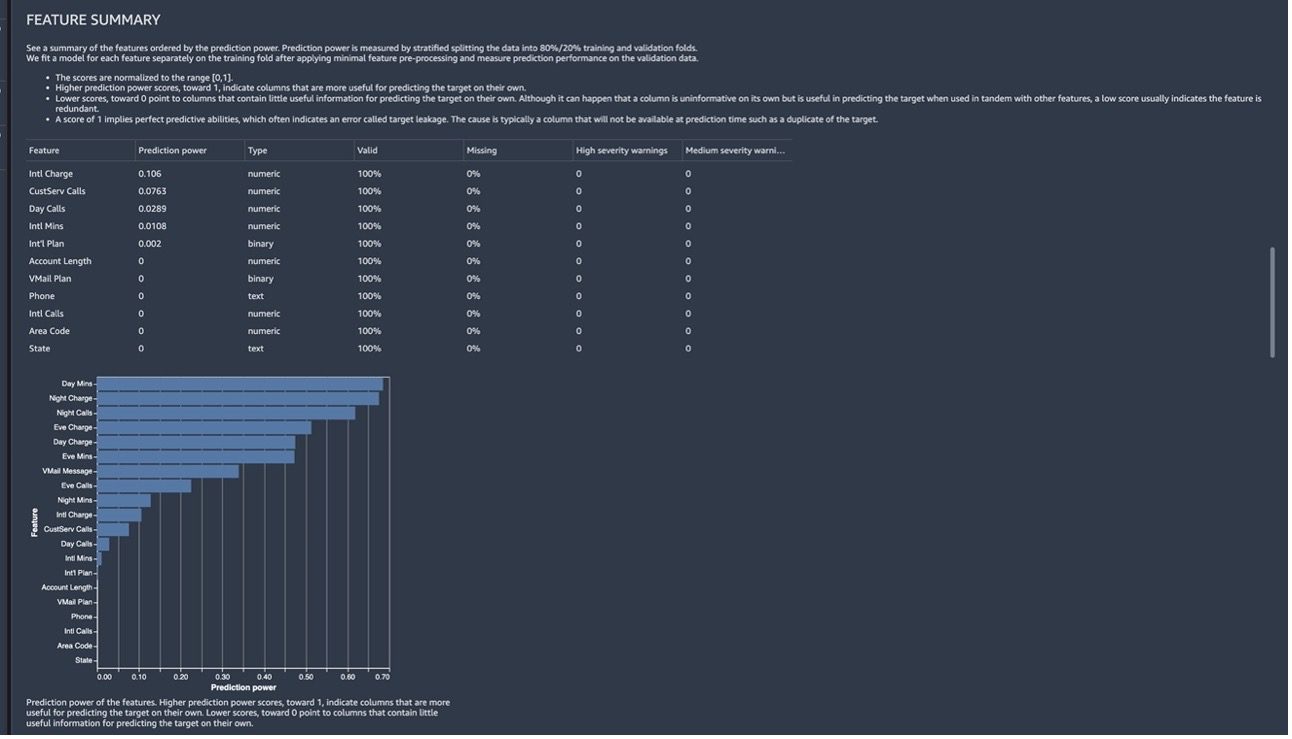

Data quality cost

If you don’t identify bad data and correct it early, it will become a costly problem to solve later. The Data Quality and Insights Report helps you eliminate this problem. You can use the Data Quality and Insights Report to perform an analysis of your data to gain insights into your dataset, such as the number of missing values and the number of outliers. If you have issues with your data, such as target leakage or imbalance, the insights report can bring those issues to your attention. As soon as you import your data, you can run an insights report with a click of a button. This reduces the effort of importing libraries and writing code to get the required insights on the dataset, which reduces the labor cost and expertise required.

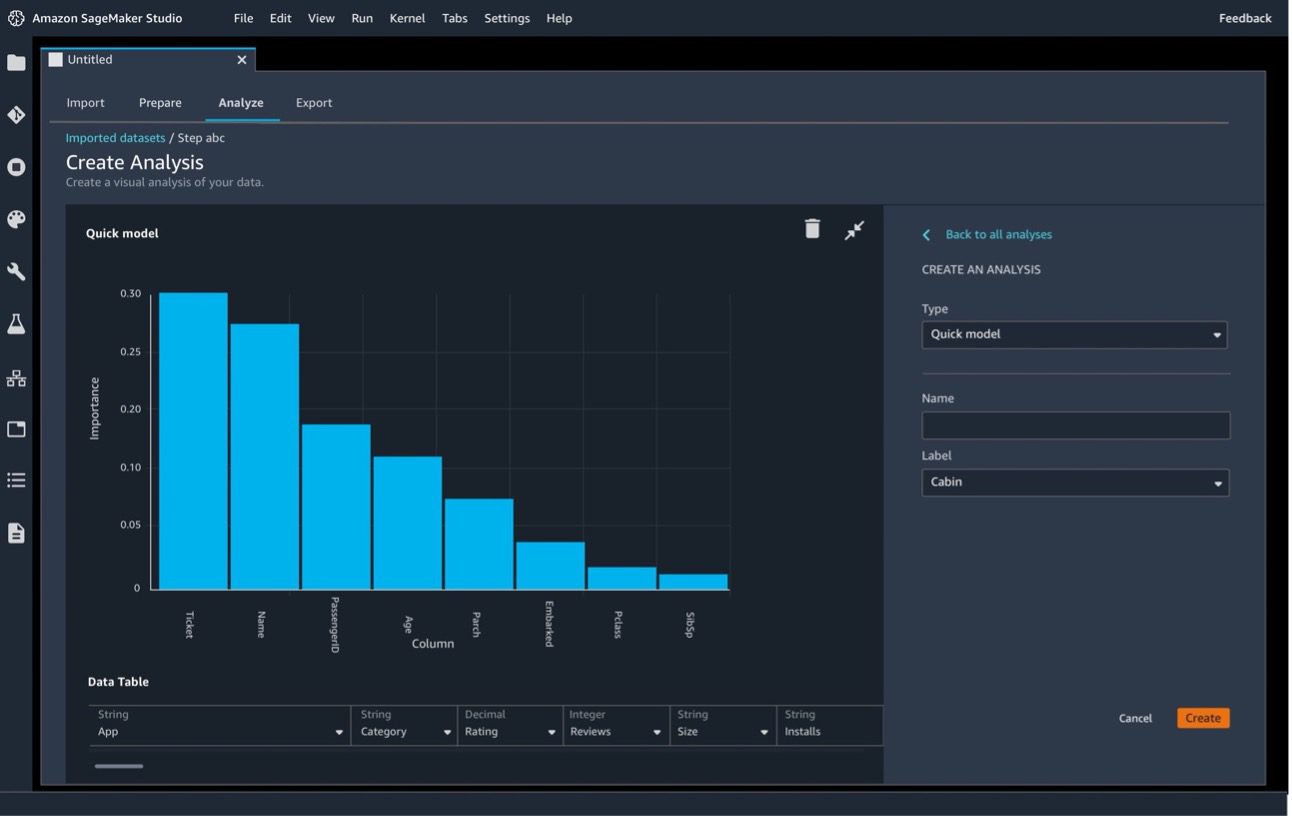
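To illustrate the kind of checks such a report saves you from hand-coding, the following sketch counts missing values and flags outliers with the common 1.5 × IQR rule, using only the Python standard library. The rule, the function names, and the sample column are assumptions made for illustration; the report’s own detection methods may differ.

```python
import statistics
from typing import List, Optional


def count_missing(values: List[Optional[float]]) -> int:
    """Count entries that are None (treated here as missing)."""
    return sum(1 for v in values if v is None)


def iqr_outliers(values: List[Optional[float]], k: float = 1.5) -> List[float]:
    """Return present values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    present = sorted(v for v in values if v is not None)
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in present if v < low or v > high]


# Hypothetical "age" column with two missing entries and one implausible value.
ages = [34.0, 29.0, None, 41.0, 38.0, 250.0, None, 31.0, 36.0, 33.0, 40.0]

print("missing:", count_missing(ages))
print("outliers:", iqr_outliers(ages))
```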

When you create the Data Quality and Insights Report, Data Wrangler gives you the option to select a target column (the column that you’re trying to predict). When you choose a target column, Data Wrangler automatically creates a target column analysis. It also ranks the features in the order of their predictive power (see the following screenshot). This contributes to the direct business benefit of high-quality features for the downstream ML process.

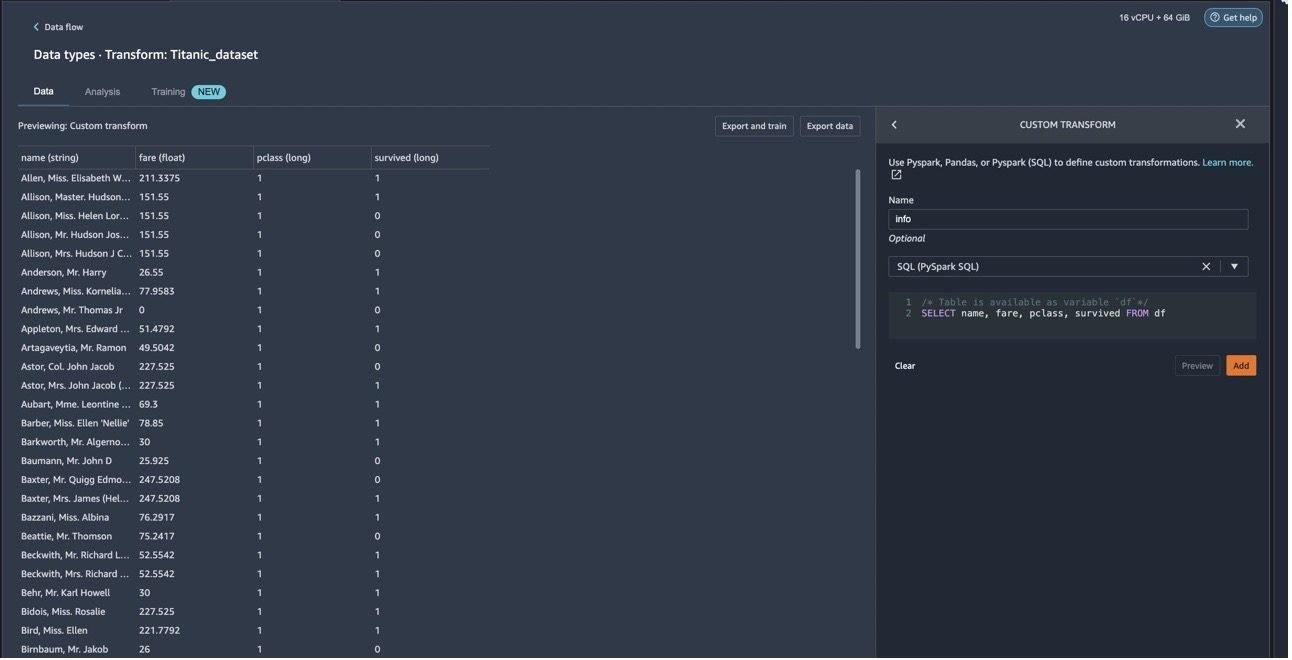

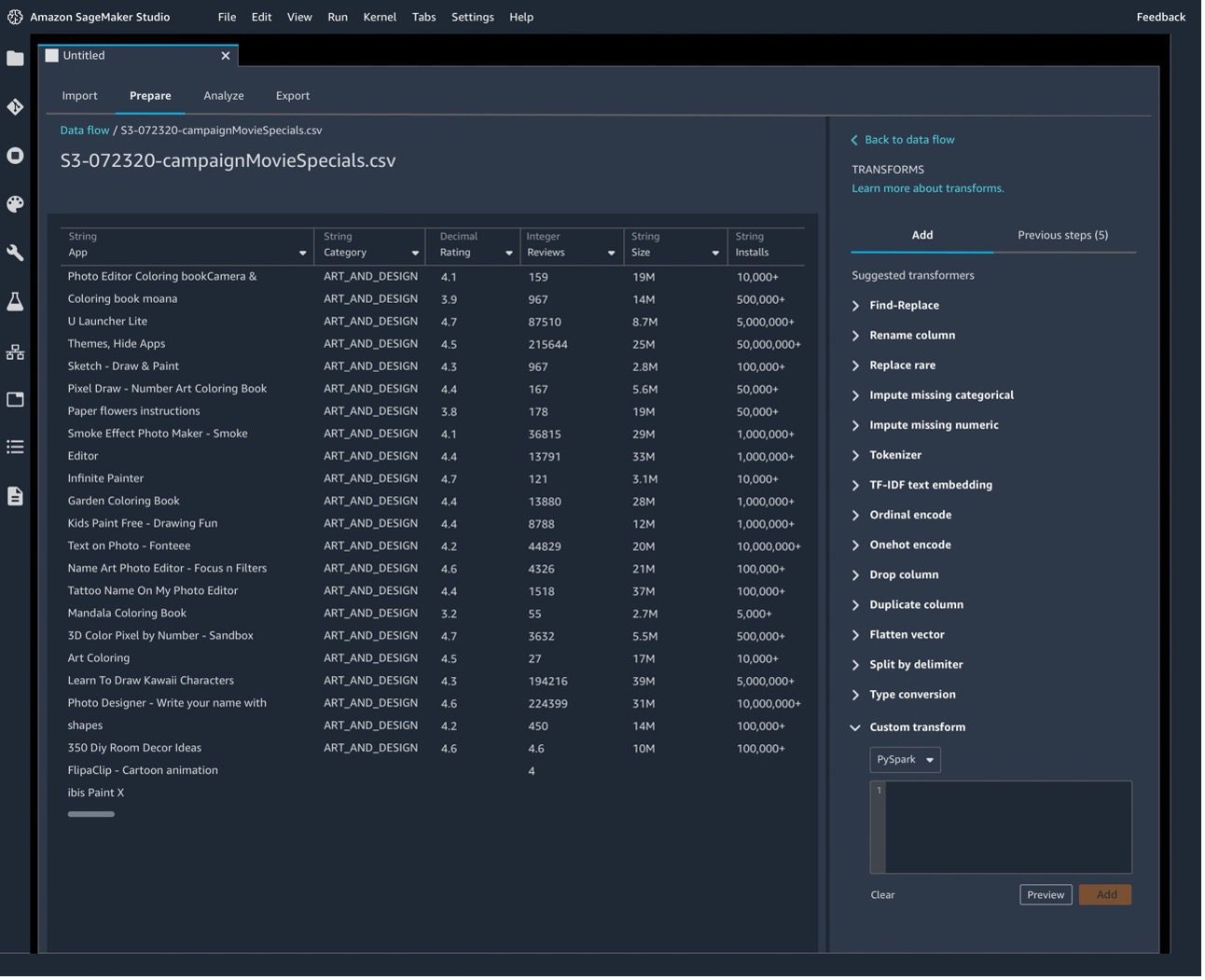

Transformation

If your EDA tool supports only certain transformations, you may need to move the data to a different environment to carry out the custom transformations such as Spark jobs. Data Wrangler supports custom transformations, which can be written in PySpark, Pandas, and SQL (see the following screenshot for an example). They’re developer friendly and all seamlessly packaged into one place, reducing data movement and saving cost associated with data transfer and storage.

You may also need to carry out mathematical operations on your datasets, such as taking an absolute value of a column. If your EDA tool doesn’t support mathematical operations, you may have to carry out the operations externally, which requires additional effort and cost. Some tools might support mathematical operations on the dataset but require you to import libraries, which involves additional effort. In Data Wrangler, you can also use a custom formula to define a new column using a Spark SQL expression to query data in the current data frame without incurring any additional cost for custom transformations or custom queries.

Labor cost and expertise

Managing the EDA platform and the underlying compute infrastructure involves expertise, effort, and cost. Data Wrangler offers a selection of over 300 preconfigured data transformations written in PySpark, so you can process datasets up to hundreds of gigabytes efficiently without having to worry about writing code to transform the data. You can use transformations such as convert column type, one-hot encoding, impute missing data with mean or median, rescale columns, and date/time embeddings to transform your data into formats that the models can use without writing a single line of code. This reduces time and effort, thereby reducing labor cost.
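For a sense of the code these preconfigured transforms replace, here is a small hand-written sketch of two of them, median imputation and one-hot encoding, in plain Python. The function names and sample columns are hypothetical, and the built-in transforms run on PySpark rather than on Python lists, so treat this only as an illustration of the logic.

```python
import statistics
from typing import Dict, List, Optional


def impute_median(values: List[Optional[float]]) -> List[float]:
    """Replace None entries with the median of the present values."""
    median = statistics.median(v for v in values if v is not None)
    return [median if v is None else v for v in values]


def one_hot(values: List[str]) -> List[Dict[str, int]]:
    """Encode each value as 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]


# Hypothetical columns.
incomes = [52000.0, None, 61000.0, 48000.0]
plans = ["basic", "pro", "basic", "free"]

imputed = impute_median(incomes)
encoded = one_hot(plans)
print(imputed)
print(encoded[0])
```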

Data Wrangler offers a point-and-click interface to visualize and validate data (see the following screenshot). No data engineering or analytics expertise is required because all the data preparation can be done via simple point and click.

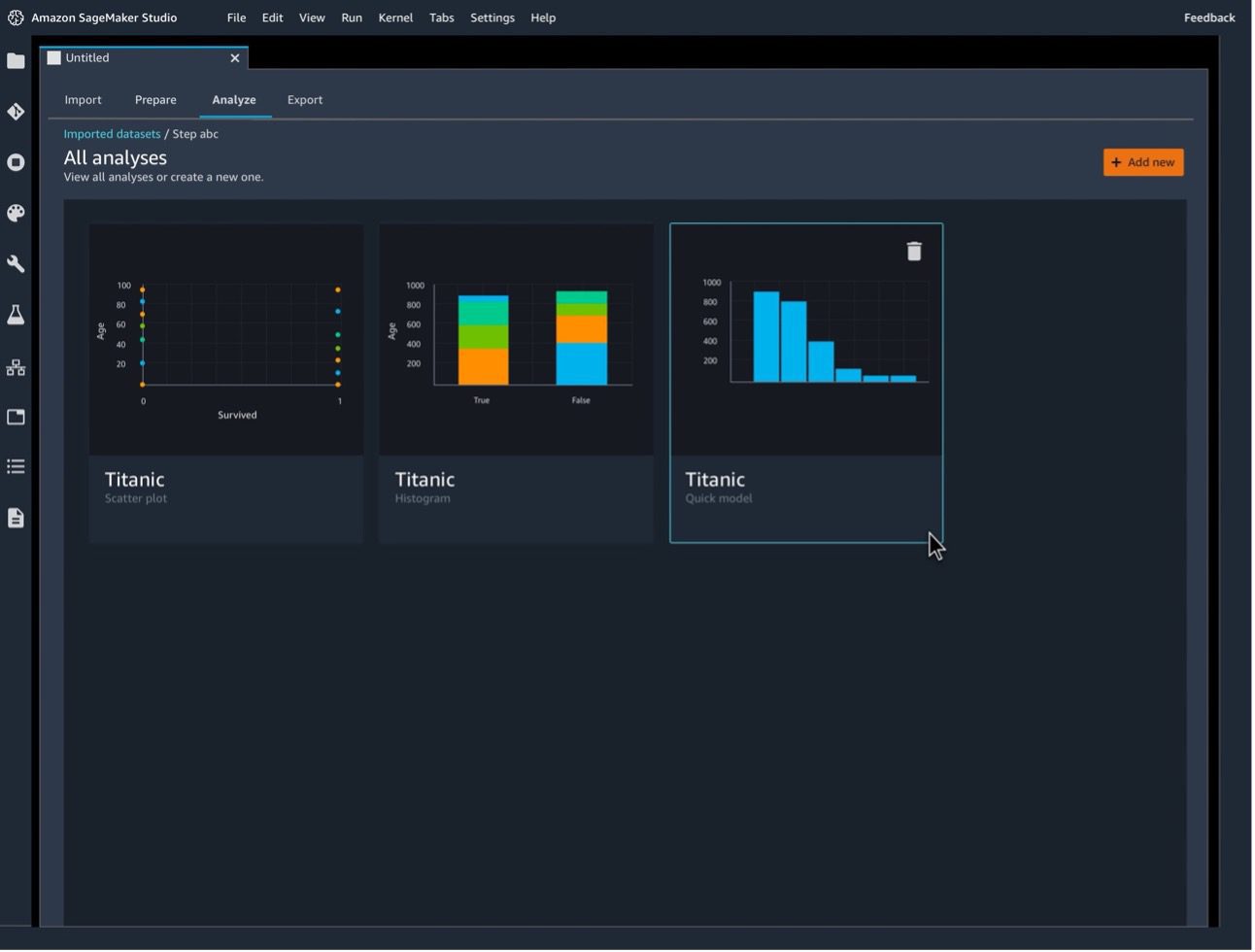

Visualization

Data Wrangler helps you understand your data and identify potential errors and extreme values with a set of robust preconfigured visualization templates. You don’t need to be familiar with, or spend additional time importing, any external libraries or dependencies to carry out the visualizations. Histograms, scatter plots, box and whisker plots, line plots, and bar charts are all available (see the following screenshots for some examples). Templates such as histograms make it simple to create and edit your own visualizations without writing code.

Validation

Data Wrangler enables you to quickly identify inconsistencies in your data preparation workflow and diagnose issues before models are deployed into production (see the following screenshot). You can quickly identify whether your prepared data will result in an accurate model, so you can determine if additional feature engineering is needed to improve performance. Because all of this occurs before the model building phase, you avoid the labor cost of building a model with low performance metrics and then returning to apply additional transformations. The validation also results in the business benefit of better-quality features.

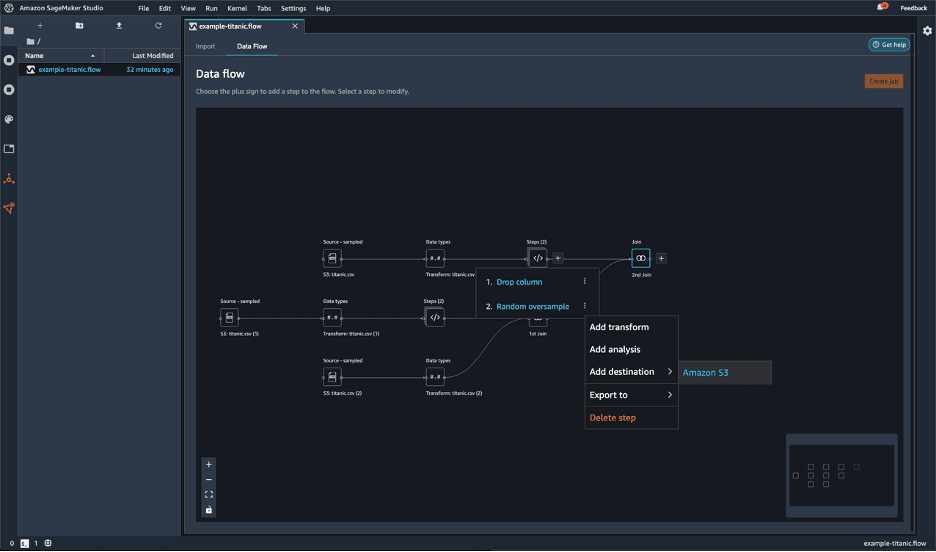

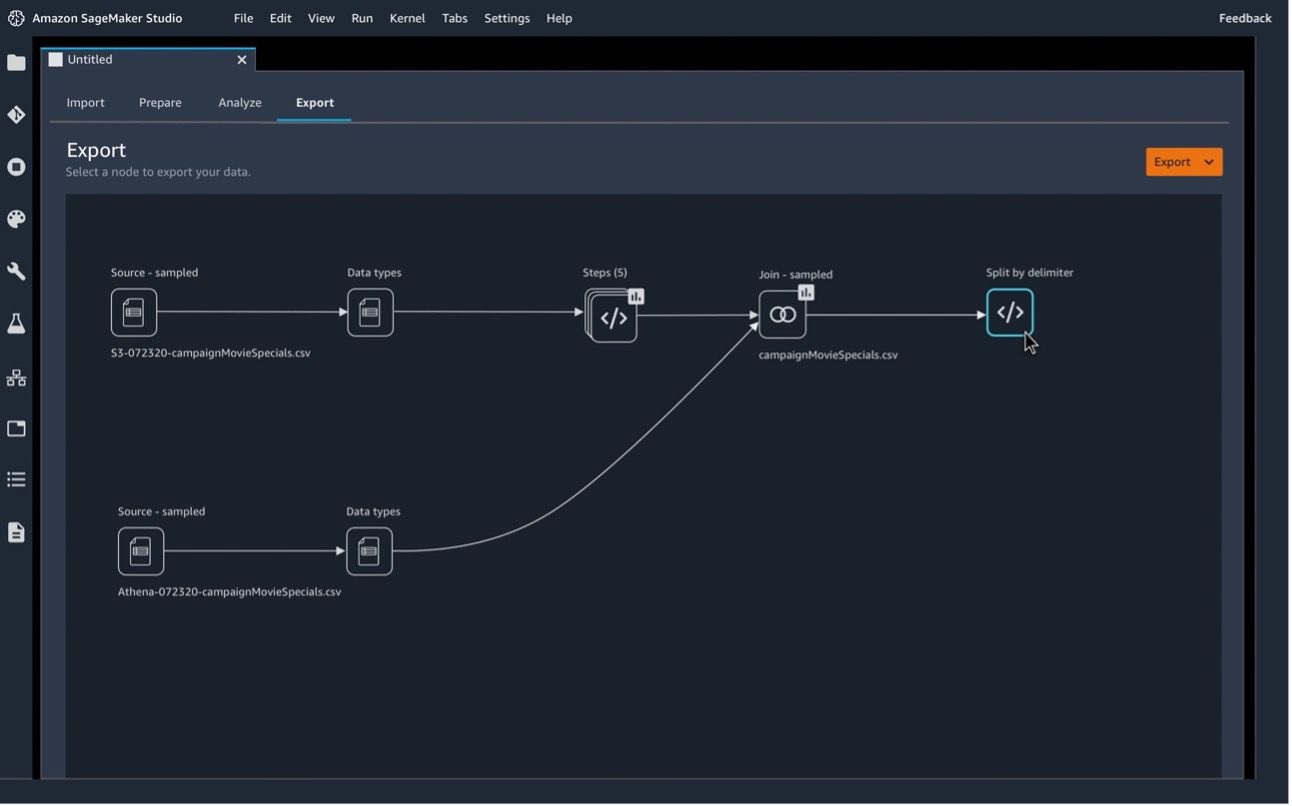

Build scalable data preparation pipelines

When you carry out EDA, you have to build data preparation pipelines that can scale with datasets (see the following screenshot). This is important for repetition as well as downstream ML processes. Typically, customers use Spark for its distributed, scalable, and in-memory processing nature; however, this requires a lot of Spark expertise. Setting up a Spark environment is time-consuming and requires expertise for optimal configuration. With Data Wrangler, you can create data processing jobs and export to Amazon S3 and Amazon SageMaker Feature Store purely via the visual interface without having to generate, run, or manage Jupyter notebooks, which facilitates scalable data preparation pipelines without any Spark expertise. For more information, refer to Launch processing jobs with a few clicks using Amazon SageMaker Data Wrangler.

Operations cost

Integration may not be a direct cost benefit; however, there are indirect cost benefits when you work in an integrated environment such as SageMaker. Because Data Wrangler is integrated with AWS services, you can export your data preparation workflow to a Data Wrangler job notebook, an Amazon SageMaker Pipelines notebook, or a code script, or launch an Amazon SageMaker Autopilot training experiment. You can also create a Data Wrangler processing job with one click without needing to set up and manage infrastructure to carry out repetitive steps or automation in an ML workflow.

In your Data Wrangler flow, you can export some or all of the transformations that you made to your data processing pipelines. When you export your data flow, you’re charged for the AWS resources that you use. From a cost perspective, exporting the transformation gives you the ability to repeat the transformation on additional datasets with no incremental effort.

With Data Wrangler, you can export all the transformations that you made to a dataset to a destination node with just a few clicks. This allows you to create data processing jobs and export to Amazon S3 purely via the visual interface without having to generate, run, or manage Jupyter notebooks, thereby enhancing the low-code experience.

Data Wrangler allows you to export your data preparation steps or data flow into different environments. Data Wrangler has seamless integration with other AWS services and features, such as the following:

- SageMaker Feature Store – You can engineer your model features using Data Wrangler and then ingest into your feature store, which is a centralized store for features and their associated metadata

- SageMaker Pipelines – You can use the data flow exported from Data Wrangler in SageMaker pipelines, which are used to build and deploy large-scale ML workflows

- Amazon S3 – You can export the data to Amazon S3 and use it to create Data Wrangler jobs

- Python – Finally, you can export all the steps in your data flow to a Python file, which you can manually integrate into any data processing workflow.

Such tight integration helps reduce effort, time, expertise, and cost.

Cost optimization best practices

In this section, we discuss best practices to further optimize cost in Data Wrangler.

Update Data Wrangler to the latest release

When you update Data Wrangler to the latest release, you get all the latest features, security, and overall optimizations made to Data Wrangler, which may improve its cost-effectiveness.

Use built-in Data Wrangler transformers

Use the built-in Data Wrangler transformers over custom Pandas transforms when processing larger and wider datasets.

Choose the right instance type for your Data Wrangler flow

There are two families of ml instance types supported for Data Wrangler: m5 and r5. m5 instances are general purpose instances that provide a balance between compute and memory, whereas r5 instances are designed to deliver fast performance to process large datasets in memory.

We recommend choosing an instance that is best optimized around your workloads. For example, the r5.8xlarge might have a higher price than the m5.4xlarge, but the r5.8xlarge might be better optimized for your workloads. With better optimized instances, you can run your data flows in less time at lower cost.
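The reasoning is simple arithmetic: the cost of a run is the hourly price multiplied by the runtime, so a pricier instance wins whenever it shortens the run by more than its price premium. The following sketch works through that comparison. The hourly prices and runtimes are hypothetical placeholders, not actual SageMaker rates or benchmark results; substitute the current prices for your Region and your own measured runtimes.

```python
def run_cost(price_per_hour: float, runtime_hours: float) -> float:
    """Total cost of one run: hourly price multiplied by runtime."""
    return price_per_hour * runtime_hours


# HYPOTHETICAL numbers for illustration only (not real prices or timings).
candidates = {
    "ml.m5.4xlarge": {"price_per_hour": 1.00, "runtime_hours": 3.0},
    "ml.r5.8xlarge": {"price_per_hour": 2.50, "runtime_hours": 1.0},
}

costs = {name: run_cost(**spec) for name, spec in candidates.items()}
cheapest = min(costs, key=costs.get)

for name, cost in costs.items():
    print(f"{name}: ${cost:.2f} per run")
print("Lowest total cost:", cheapest)
```

With these placeholder figures, the instance with the higher hourly price is the cheaper choice per run because it finishes three times faster.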

Process larger and wider datasets

For datasets larger than tens of gigabytes, we recommend using built-in transforms, or sampling data on import to run custom Pandas transforms interactively. The post Process larger and wider datasets with Amazon SageMaker Data Wrangler shares findings from two benchmark tests that demonstrate how to do this.

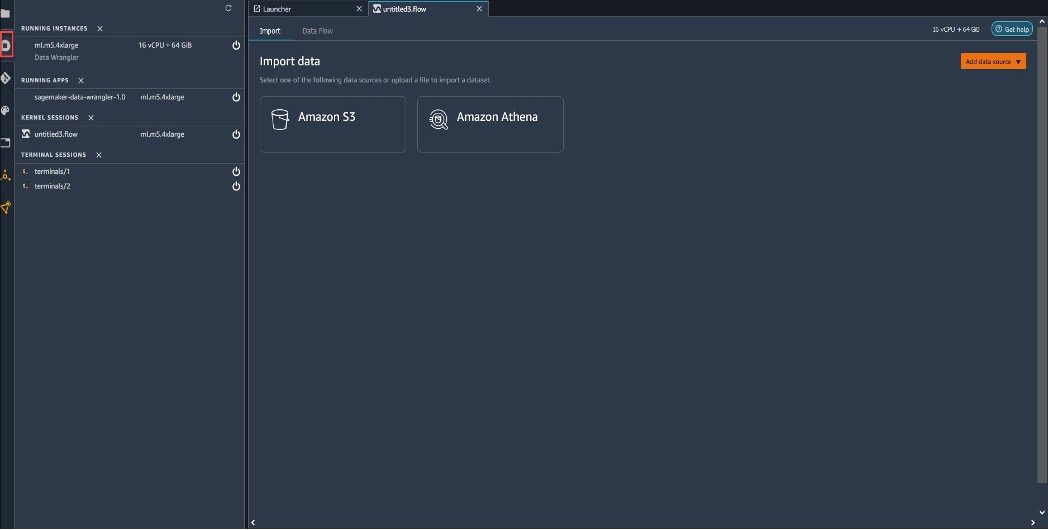
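To show why sampling keeps interactive work cheap, the following sketch draws a fixed-size uniform sample from a stream of rows in a single pass (reservoir sampling), so memory use depends on the sample size rather than the dataset size. This is an illustration of the general idea only; in Data Wrangler you configure sampling in the import settings, and the function name, seed, and generated CSV here are assumptions.

```python
import csv
import io
import random
from typing import Dict, Iterable, List


def reservoir_sample(rows: Iterable[Dict[str, str]], k: int, seed: int = 0) -> List[Dict[str, str]]:
    """Return k rows chosen uniformly at random using one pass over `rows`."""
    rng = random.Random(seed)
    sample: List[Dict[str, str]] = []
    for i, row in enumerate(rows):
        if i < k:
            sample.append(row)          # fill the reservoir first
        else:
            j = rng.randint(0, i)       # inclusive on both ends
            if j < k:
                sample[j] = row         # replace with probability k / (i + 1)
    return sample


# A stand-in for a large CSV file: 1,000 generated rows.
csv_text = "id,value\n" + "\n".join(f"{i},{i * 2}" for i in range(1000))
reader = csv.DictReader(io.StringIO(csv_text))

sample = reservoir_sample(reader, k=50)
print(len(sample), "rows sampled")
```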

Shut down unused instances

You are charged for all running instances. To avoid incurring additional charges, manually shut down the instances that you aren’t using. To shut down an instance that is running, complete the following steps:

- On your data flow page, choose the instance icon in the navigation pane under Running instances.

- Choose Shut down.

If you shut down an instance used to run a flow, you temporarily can’t access the flow. If you get an error when opening a flow whose instance you previously shut down, wait approximately 5 minutes and try opening it again.

When you’re not using Data Wrangler, it’s important to shut down the instance on which it runs to avoid incurring additional fees. For more information, refer to Shut Down Data Wrangler.

For information about shutting down Data Wrangler resources automatically, refer to Save costs by automatically shutting down idle resources within Amazon SageMaker Studio.

Export

When you export your Data Wrangler flow or transformations, you can use cost allocation tags to organize and manage the costs of those resources. You create these tags for your user profile and Data Wrangler automatically applies them to the resources used to export the data flow. For more information, see Using Cost Allocation Tags.

Pricing

Data Wrangler pricing has three components: Data Wrangler instances, Data Wrangler jobs, and ML storage. You can perform all the steps for EDA or data preparation within Data Wrangler and you pay for the instance, jobs, and storage pricing based on usage or consumption, with no upfront or licensing fees. For more information, refer to On-Demand Pricing.
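As a rough way to reason about a bill, you can sum the three components, each computed as usage multiplied by its rate. The following sketch does exactly that. All rates and usage figures are hypothetical placeholders, and the `Rates`, `Usage`, and `estimate_bill` names are invented for this example; refer to the pricing page for actual rates and billing granularity.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Rates:
    instance_per_hour: float      # interactive Data Wrangler instance
    job_per_hour: float           # Data Wrangler processing job
    storage_per_gb_month: float   # ML storage


@dataclass
class Usage:
    instance_hours: float
    job_hours: float
    storage_gb_months: float


def estimate_bill(usage: Usage, rates: Rates) -> Dict[str, float]:
    """Return the cost of each pricing component plus their total."""
    parts = {
        "instances": usage.instance_hours * rates.instance_per_hour,
        "jobs": usage.job_hours * rates.job_per_hour,
        "storage": usage.storage_gb_months * rates.storage_per_gb_month,
    }
    parts["total"] = sum(parts.values())
    return parts


# HYPOTHETICAL rates and usage, for illustration only.
rates = Rates(instance_per_hour=1.00, job_per_hour=1.25, storage_per_gb_month=0.10)
usage = Usage(instance_hours=20, job_hours=4, storage_gb_months=50)

bill = estimate_bill(usage, rates)
for component, cost in bill.items():
    print(f"{component}: ${cost:.2f}")
```

Because instance hours are typically the component you control most directly, shutting down idle instances tends to have the most immediate effect on the total.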

Conclusion

In this post, we reviewed different cost aspects of EDA and data preparation to discover how feature-rich and integrated Data Wrangler reduces the time it takes to aggregate and prepare data for ML use cases from weeks to minutes, thereby facilitating cost-effective data preparation for ML. We also inspected the pricing components of Data Wrangler and best practices for cost optimization when using Data Wrangler for your ML data preparation requirements.

For more information, see the following resources:

- Prepare ML Data with Amazon SageMaker Data Wrangler

- Accelerate data preparation with data quality and insights in Amazon SageMaker Data Wrangler

- Amazon SageMaker Studio

- Save costs by automatically shutting down idle resources within Amazon SageMaker Studio

- Process larger and wider datasets with Amazon SageMaker Data Wrangler

- Using Cost Allocation Tags

About the Authors

Rajakumar Sampathkumar is a Principal Technical Account Manager at AWS, providing customer guidance on business-technology alignment and supporting the reinvention of their cloud operation models and processes. He is passionate about cloud and machine learning. Raj is also a machine learning specialist and works with AWS customers to design, deploy, and manage their AWS workloads and architectures.

Rahul Nabera is a Data Analytics Consultant in AWS Professional Services. His current work focuses on enabling customers to build their data and machine learning workloads on AWS. In his spare time, he enjoys playing cricket and volleyball.
