Interactive data prep widget for notebooks powered by Amazon SageMaker Data Wrangler

According to a 2020 survey of data scientists conducted by Anaconda, data preparation is one of the critical steps in machine learning (ML) and data analytics workflows, and often very time consuming for data scientists. Data scientists spend about 66% of their time on data preparation and analysis tasks, including loading (19%), cleaning (26%), and visualizing data (21%).

Amazon SageMaker Studio is the first fully integrated development environment (IDE) for ML. With a single click, data scientists and developers can quickly spin up Studio notebooks to explore datasets and build models. If you prefer a GUI-based, interactive interface, you can use Amazon SageMaker Data Wrangler, which offers over 300 built-in visualizations, analyses, and transformations to efficiently process data backed by Spark without writing a single line of code.

Data Wrangler now offers a built-in data preparation capability in Amazon SageMaker Studio Notebooks that allows ML practitioners to visually review data characteristics, identify issues, and remediate data-quality problems—in just a few clicks directly within the notebooks.

In this post, we show you how the Data Wrangler data prep widget automatically generates key visualizations on top of a Pandas data frame to understand data distribution, detect data quality issues, and surface data insights such as outliers for each feature. It helps you interact with the data and discover insights that may go unnoticed with ad hoc querying. It also recommends transformations to remediate data quality issues, lets you apply those transformations in the UI, and automatically generates the corresponding code in notebook cells. This feature is available in all Regions where SageMaker Studio is available.

Solution overview

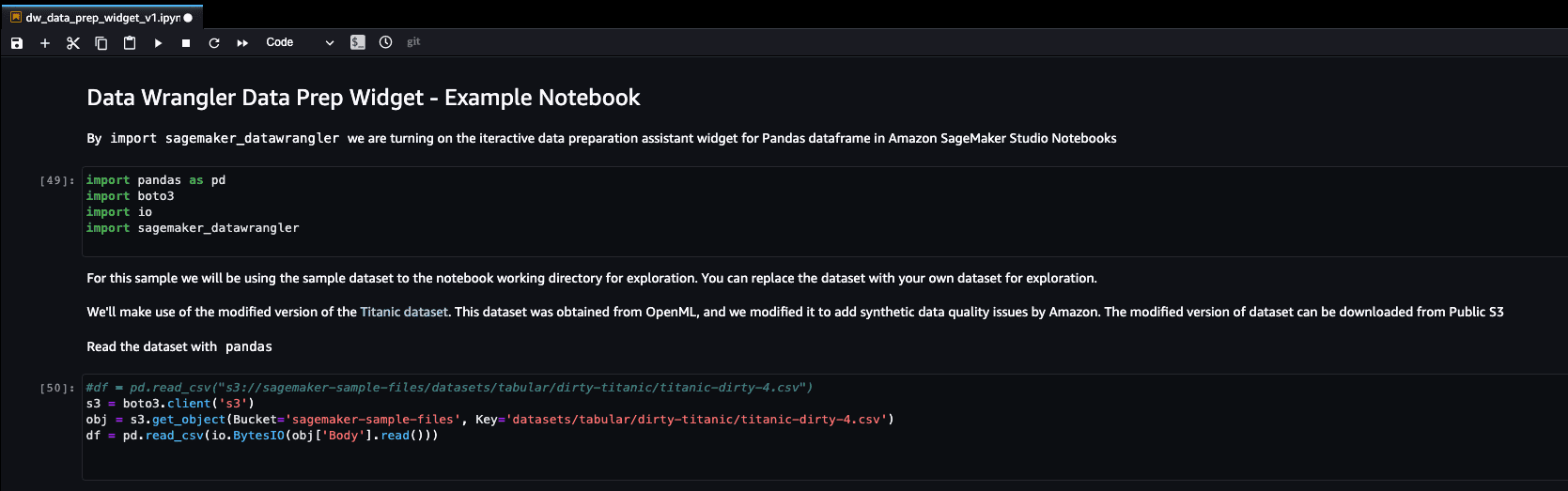

Let’s further understand how this new widget makes data exploration significantly easier and provides a seamless experience to improve the overall data preparation experience for data engineers and practitioners. For our use case, we use a modified version of the Titanic dataset, a popular dataset in the ML community, which has now been added as a sample dataset so you can get started with SageMaker Data Wrangler quickly. The original dataset was obtained from OpenML, and Amazon modified it to add synthetic data quality issues for this demo. You can download the modified version of the dataset from the public S3 path s3://sagemaker-sample-files/datasets/tabular/dirty-titanic/titanic-dirty-4.csv.

Prerequisites

To get hands-on experience with all the features described in this post, complete the following prerequisites:

- Ensure that you have an AWS account, secure access to log in to the account via the AWS Management Console, and AWS Identity and Access Management (IAM) permissions to use Amazon SageMaker and Amazon Simple Storage Service (Amazon S3) resources.

- Use the sample dataset from public S3 path s3://sagemaker-sample-files/datasets/tabular/dirty-titanic/titanic-dirty-4.csv or alternatively upload it to an S3 bucket in your account.

- Onboard to a SageMaker domain and access Studio to use notebooks. For instructions, refer to Onboard to Amazon SageMaker Domain. If you’re using existing Studio, upgrade to the latest version of Studio.

Enable the data exploration widget

When you work with Pandas data frames in a Studio notebook, you can manually enable the data exploration widget so that new visualizations are displayed by default on top of each column. The widget shows a histogram for numerical data and a bar chart for other types of data. These representations allow you to quickly comprehend the data distribution and discover missing values and outliers without having to write boilerplate methods for each and every column. You can hover over the bar in each visual to get a quick understanding of the distribution.

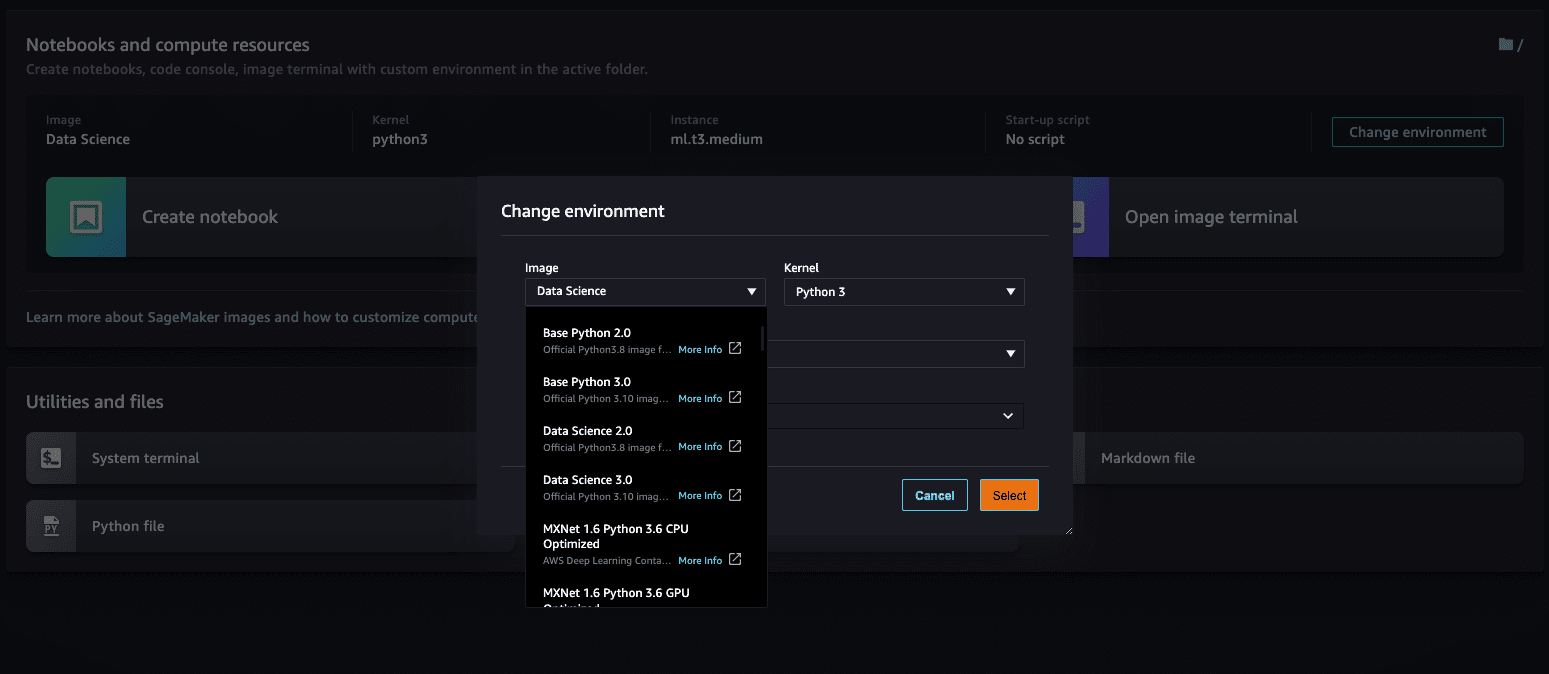

Open Studio and create a new Python 3 notebook. Make sure to choose the Data Science 3.0 image from SageMaker images by choosing the Change environment button.

The data exploration widget is available in the following images. For the list of default SageMaker images, refer to Available Amazon SageMaker Images.

- Python 3 (Data Science) with Python 3.7

- Python 3 (Data Science 2.0) with Python 3.8

- Python 3 (Data Science 3.0) with Python 3.10

- Spark Analytics 1.0 and 2.0

To use this widget, import the sagemaker_datawrangler library. Load the modified version of the Titanic dataset from s3://sagemaker-sample-files/datasets/tabular/dirty-titanic/titanic-dirty-4.csv and read the CSV with the Pandas library:
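The import and load step described above might look like the following minimal sketch. It assumes that sagemaker_datawrangler is the importable module name and that it is only present in the supported Studio images, so the import is guarded here. Reading an s3:// path with Pandas also assumes the s3fs package is installed. The load_dataset helper and the tiny inline sample are hypothetical and exist only so the sketch runs anywhere.

```python
import io

import pandas as pd

try:
    # Assumption: importing this module is what enables the widget in Studio.
    import sagemaker_datawrangler  # noqa: F401
except ImportError:
    # Outside a supported Studio image the widget is simply unavailable.
    sagemaker_datawrangler = None

DATASET_URI = (
    "s3://sagemaker-sample-files/datasets/tabular/"
    "dirty-titanic/titanic-dirty-4.csv"
)


def load_dataset(source=DATASET_URI):
    """Read the CSV into a Pandas data frame (hypothetical helper)."""
    return pd.read_csv(source)


# Tiny inline sample so the sketch runs without S3 access.
sample_csv = io.StringIO("age,survived\n22,1\n,0\n")
sample_df = load_dataset(sample_csv)
```

In a Studio notebook, you would call load_dataset() with no arguments to read the sample file from S3, and then evaluate the resulting data frame in a cell.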

Visualize the data

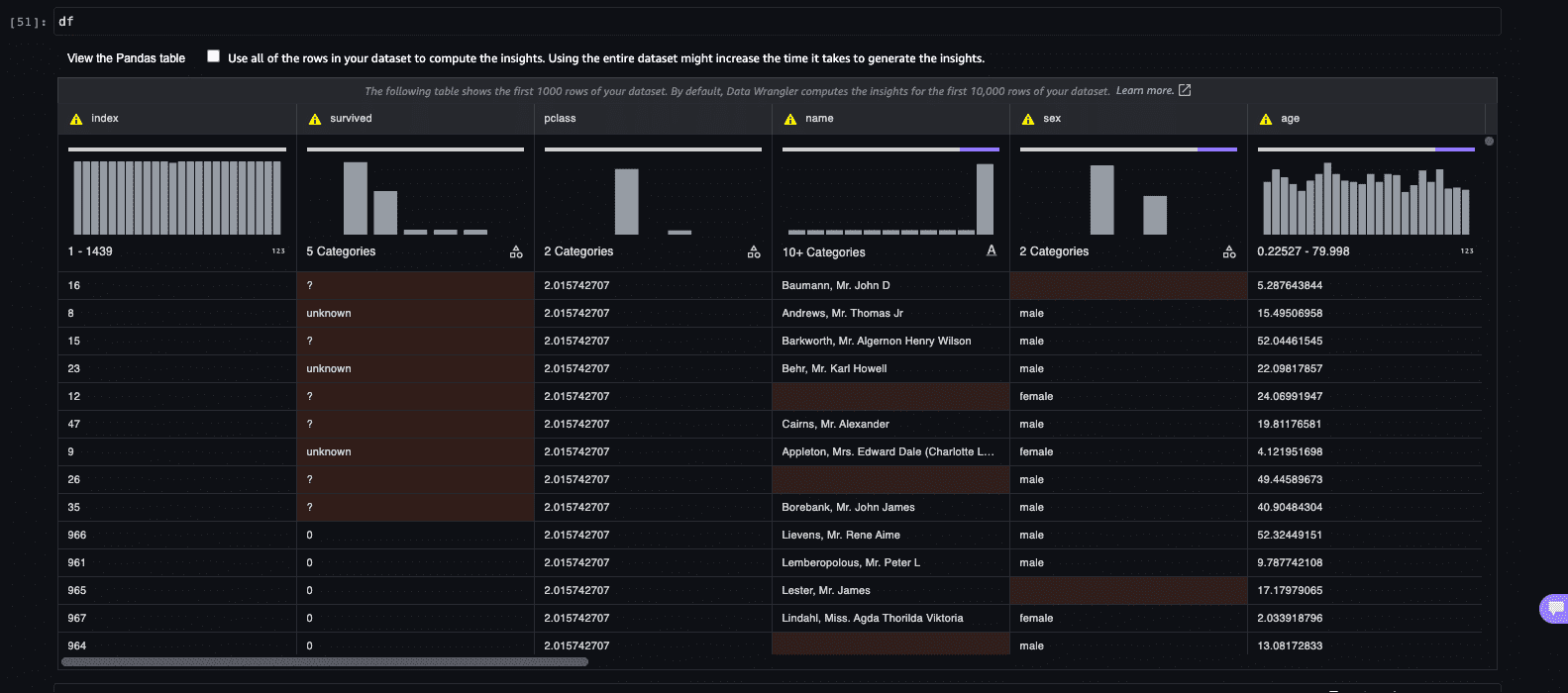

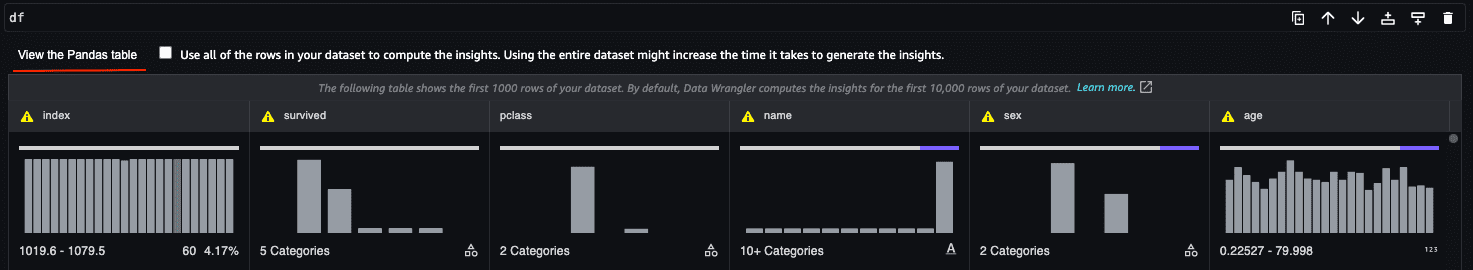

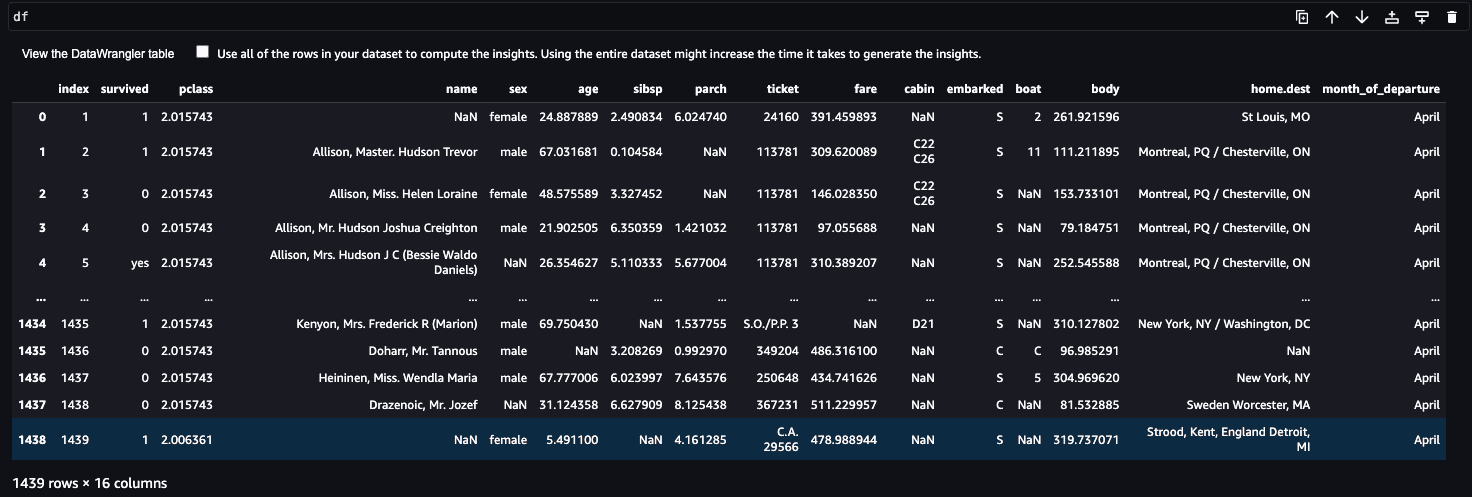

After the data is loaded into the Pandas data frame, you can view it by just using df or display(df). Along with listing the rows, the data prep widget produces insights, visualizations, and advice on data quality. You don’t need to write any additional code to generate feature and target insights, distribution information, or data quality checks. You can choose the data frame table’s header to view the statistical summary showing the data quality warnings, if any.

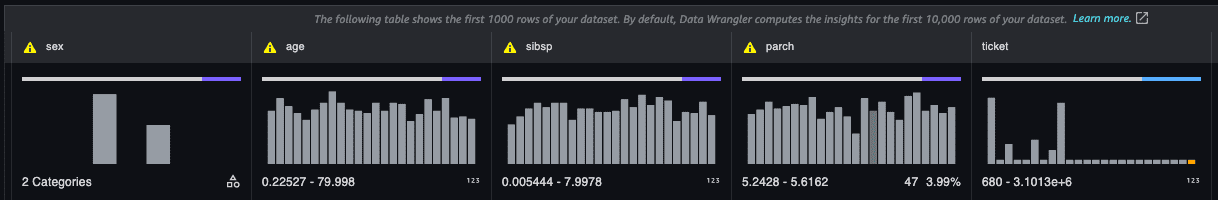

Each column shows a bar chart or histogram based on the data type. By default, the widget samples up to 10,000 observations for generating meaningful insights. It also provides the option to run the insight analysis on the entire dataset.
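To get a feel for what working on a capped sample means, the following sketch draws at most 10,000 rows from a data frame with Pandas. It is an illustration only and not the widget's internal sampling logic. The sample_for_insights helper, the seed, and the synthetic data frames are hypothetical.

```python
import pandas as pd

MAX_ROWS = 10_000  # cap mentioned in the text


def sample_for_insights(df, max_rows=MAX_ROWS, seed=0):
    """Return df unchanged if it is small, else a random sample of max_rows rows."""
    if len(df) <= max_rows:
        return df
    return df.sample(n=max_rows, random_state=seed)


# Synthetic frames standing in for a large and a small dataset.
big_df = pd.DataFrame({"value": range(25_000)})
small_df = pd.DataFrame({"value": range(100)})

sampled_big = sample_for_insights(big_df)
sampled_small = sample_for_insights(small_df)
```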

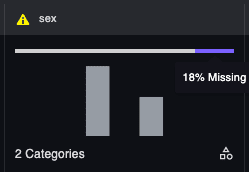

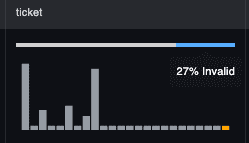

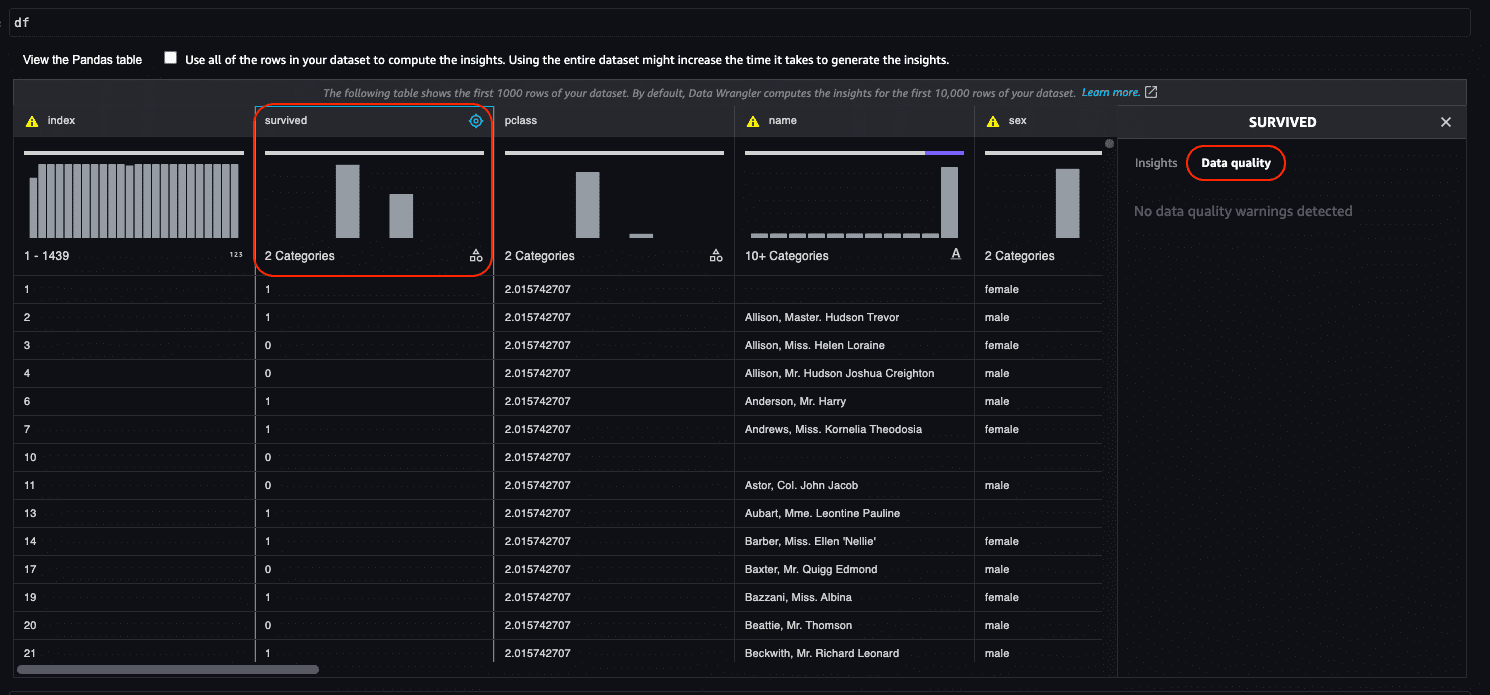

As shown in the following screenshot, this widget identifies whether a column has categorical or quantitative data.

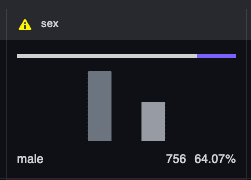

For categorical data, the widget generates a bar chart with all the categories. In the following screenshot, for example, the column Sex shows the categories found in the data. You can hover over a bar (male in this case) to see the details of that category, like the total number of rows with the value male and its share of the total visualized dataset (64.07% in this example). The widget also highlights the total percentage of missing values in a different color for categorical data. For quantitative data like the ticket column, it shows the distribution along with the percentage of invalid values.
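The per-category counts and shares that appear on hover can be approximated by hand with Pandas, which helps when you want to double-check a number. The following is a minimal sketch on a small made-up sample, not the widget's own computation.

```python
import pandas as pd

# Small made-up sample; the real dataset has many more rows.
sex = pd.Series(["male", "male", "female", None, "male"], name="sex")

# Count each category, keeping missing values as their own bucket.
counts = sex.value_counts(dropna=False)

# Convert counts to percentages of all rows, including missing ones.
shares = (counts / len(sex) * 100).round(2)

# Percentage of rows where the value is missing.
missing_pct = round(float(sex.isna().mean()) * 100, 2)
```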

If you want to see a standard Pandas visualization in the notebook, you can choose View the Pandas table and toggle between the widget and the Pandas representation, as shown in the following screenshot.

To get more detailed insights about the data in the column, choose the column’s header to open a side panel dedicated to the column. Here you can observe two tabs: Insights and Data quality.

In the following sections, we explore these two options in more detail.

Insights

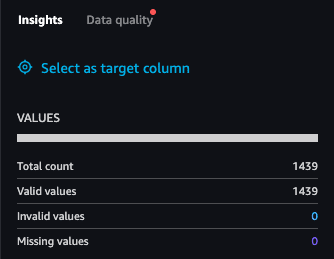

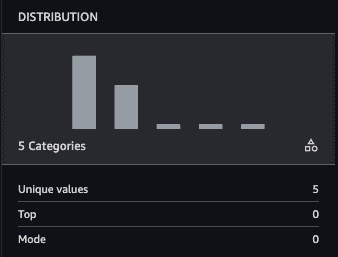

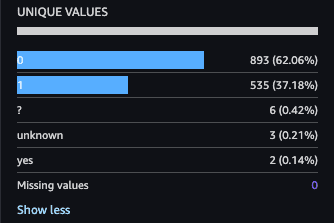

The Insights tab provides details with descriptions for each column. This section lists aggregated statistics, such as the mode, the number of unique values, and ratios and counts for missing or invalid values, and it visualizes the data distribution with a histogram or a bar chart. In the following screenshots, you can check out the data insights and distribution information displayed with easily understandable visualizations generated for the selected column survived.
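For comparison, a few of these aggregate statistics can be computed directly with Pandas. The sketch below is an approximation under stated assumptions: column_summary is a hypothetical helper, the sample values are made up, and it does not attempt to reproduce how the widget decides that a value is invalid.

```python
import pandas as pd


def column_summary(col):
    """Collect a few aggregate statistics for one column (hypothetical helper)."""
    modes = col.mode(dropna=True)
    return {
        "mode": modes.iloc[0] if not modes.empty else None,
        "unique": int(col.nunique(dropna=True)),
        "missing_count": int(col.isna().sum()),
        "missing_ratio": float(col.isna().mean()),
    }


# Made-up values that mimic a target column with inconsistent labels.
survived = pd.Series(["0", "1", "0", None, "0", "unknown"], name="survived")
summary = column_summary(survived)
```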

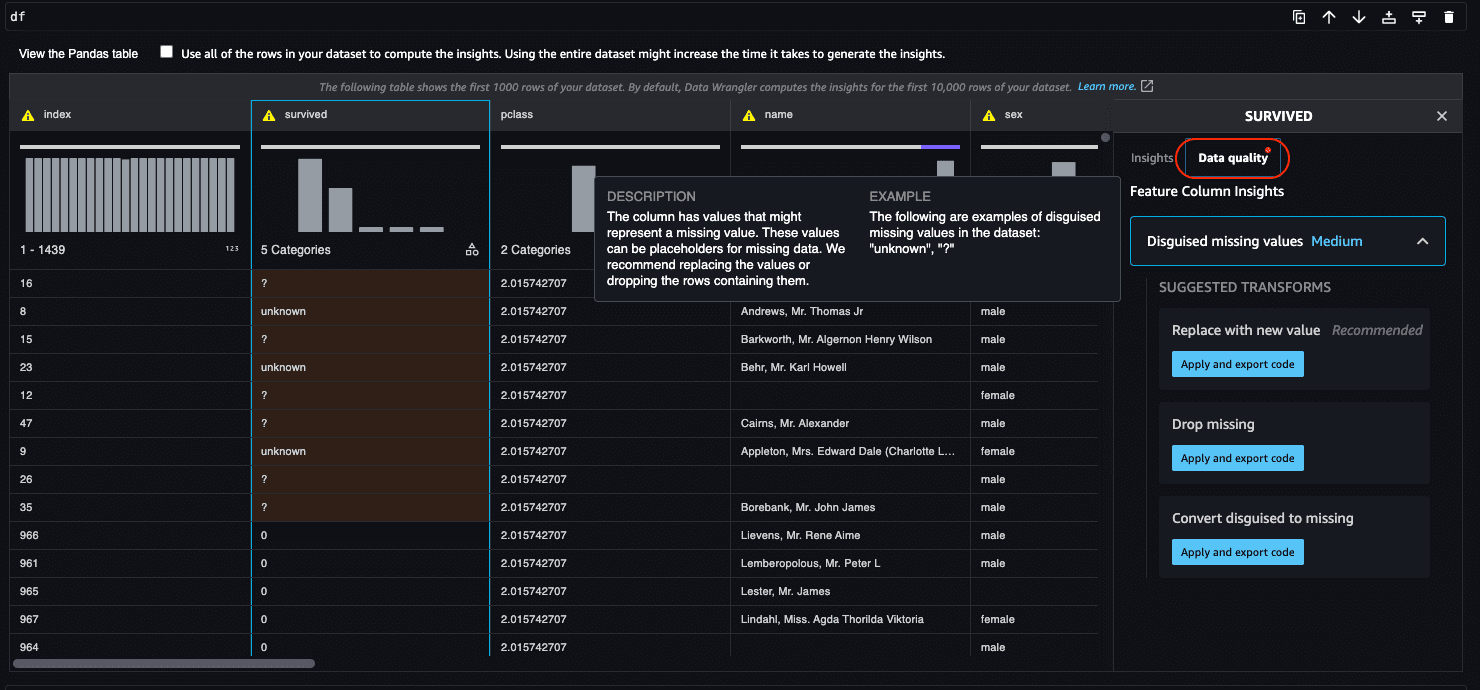

Data quality

The Studio data prep widget highlights identified data quality issues with a warning sign in the header. The widget can identify a broad spectrum of data quality issues, from the basics (missing values, constant columns, and so on) to more ML-specific ones (target leakage, features with a low predictive score, and so on). The widget highlights the cells causing the data quality issue and reorganizes the rows to put the problematic cells at the top. To remedy a data quality issue, the widget provides several transforms that you can apply at the click of a button.

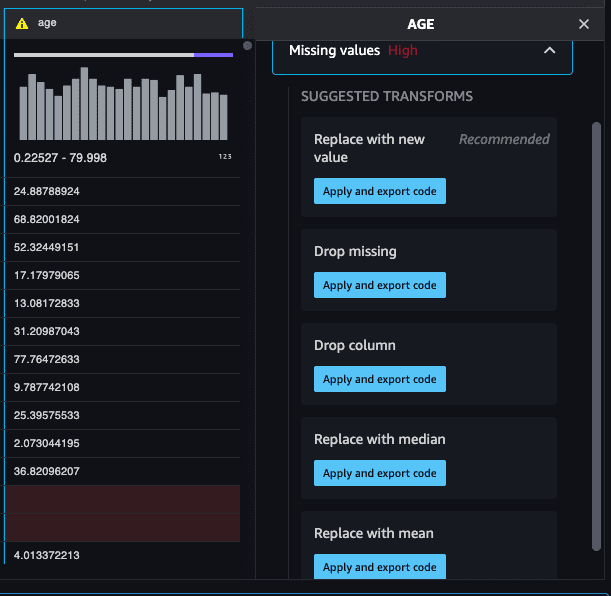

To explore the data quality section, choose the column header, and in the side panel, choose the Data quality tab. You should see the following in your Studio environment.

Let’s look at the different options available on the Data quality tab. For this example, we choose the age column, which is detected as a quantitative column based on the data. As we can see in the following screenshot, the widget suggests different types of transformations that you could apply, including the most common actions, such as Replace with new value, Drop missing, Replace with median, or Replace with mean. You can choose any of those for your dataset based on the use case (the ML problem you’re trying to solve). It also gives you the Drop column option if you want to remove the feature altogether.

When you choose Apply and export code, the transform is applied to a deep copy of the data frame. After the transform is applied successfully, the data table is refreshed with the insights and visualizations. The transform code is generated after the existing cell in the notebook. You can run this exported code later on to apply the transformation to your datasets, and extend it as per your needs. You can customize the transformation by directly modifying the generated code. If we apply the Drop missing option to the age column, the following transformation code is applied to the dataset, and code is also generated in a cell below the widget:
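The Drop missing transform can be expressed in Pandas along the following lines. This is a sketch of what such code might look like, not a verbatim copy of the widget's output. The three-row data frame is made up, and the output_df name is an assumption.

```python
import pandas as pd

# Made-up stand-in for the Titanic data frame.
df = pd.DataFrame({"age": [22.0, None, 35.0], "survived": [1, 0, 1]})

# Work on a deep copy so the original data frame is left untouched.
output_df = df.copy(deep=True)

# Drop missing for column age: keep only rows where age is present.
output_df = output_df[output_df["age"].notnull()]
```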

The following is another example of a code snippet for Replace with median:
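A Replace with median transform might be written as in the following sketch. As before, this approximates the idea rather than reproducing the generated code, and the sample values and the output_df name are assumptions.

```python
import pandas as pd

# Made-up stand-in for the Titanic data frame.
df = pd.DataFrame({"age": [20.0, None, 30.0, 40.0]})

# Work on a deep copy so the original data frame is left untouched.
output_df = df.copy(deep=True)

# Replace with median for column age: fill gaps with the column median,
# which is computed while skipping the missing values themselves.
median_age = output_df["age"].median(skipna=True)
output_df["age"] = output_df["age"].fillna(median_age)
```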

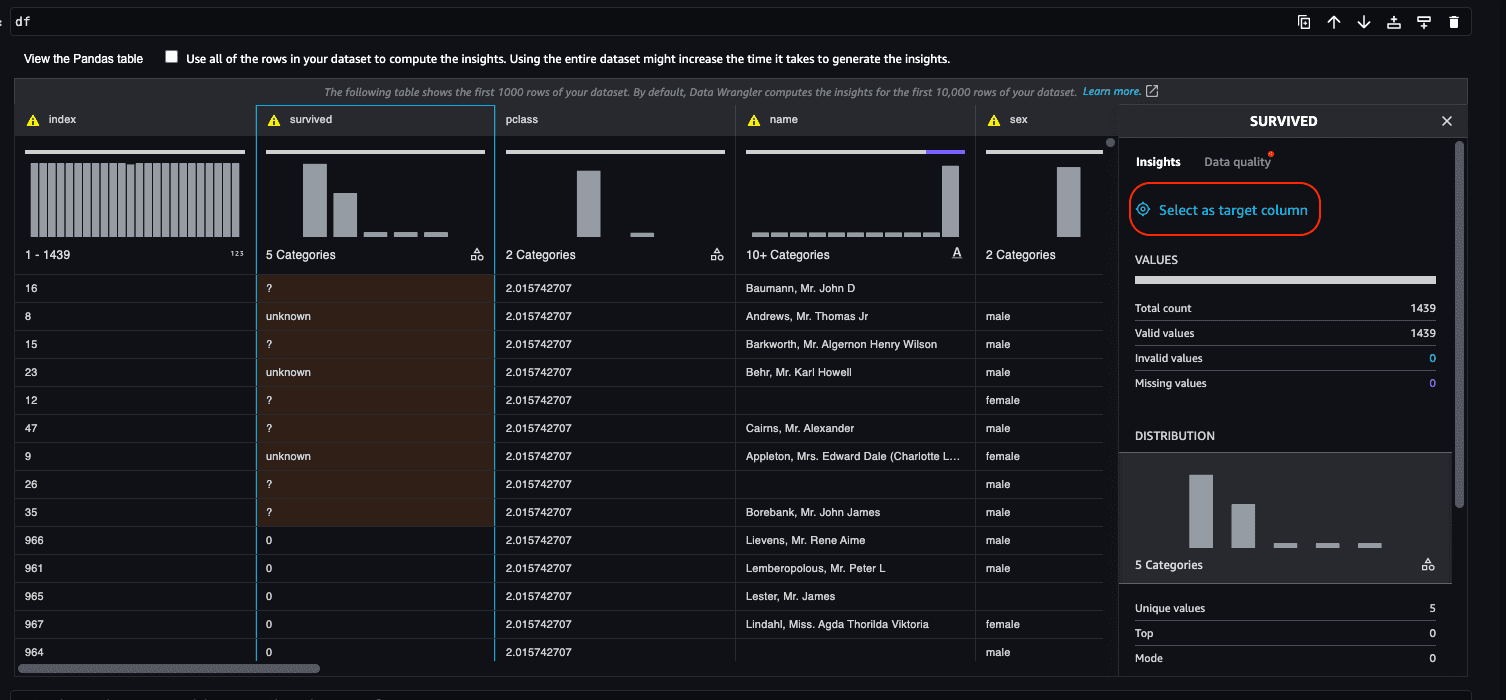

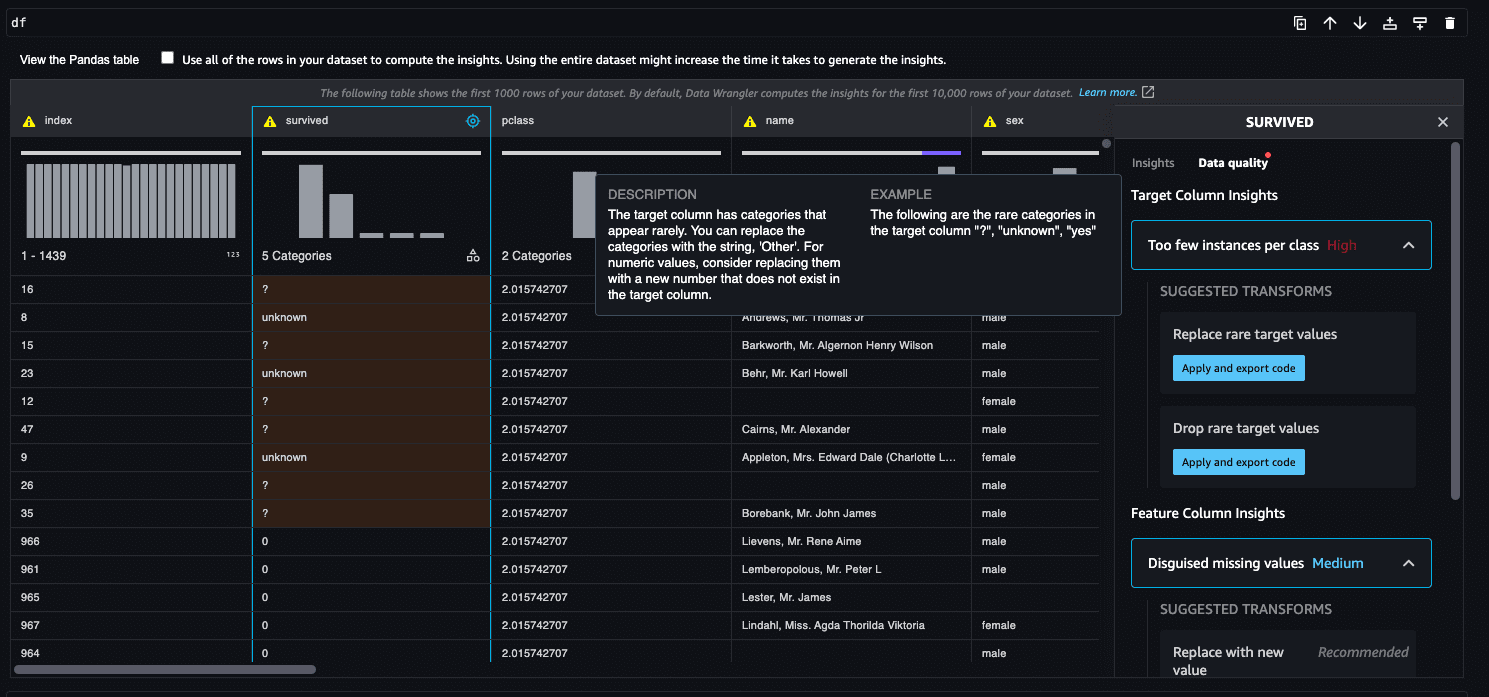

Now let’s look at the data prep widget’s target insight capability. Assume you want to use the survived feature to predict if a passenger will survive. Choose the survived column header. In the side panel, choose Select as target column. Ideally, the survived feature should have only two classes, yes (1) or no (0), so that a model can classify whether a passenger survived the Titanic disaster. However, due to data inconsistencies in the chosen target column, the survived feature has the values 0, 1, ?, unknown, and yes.

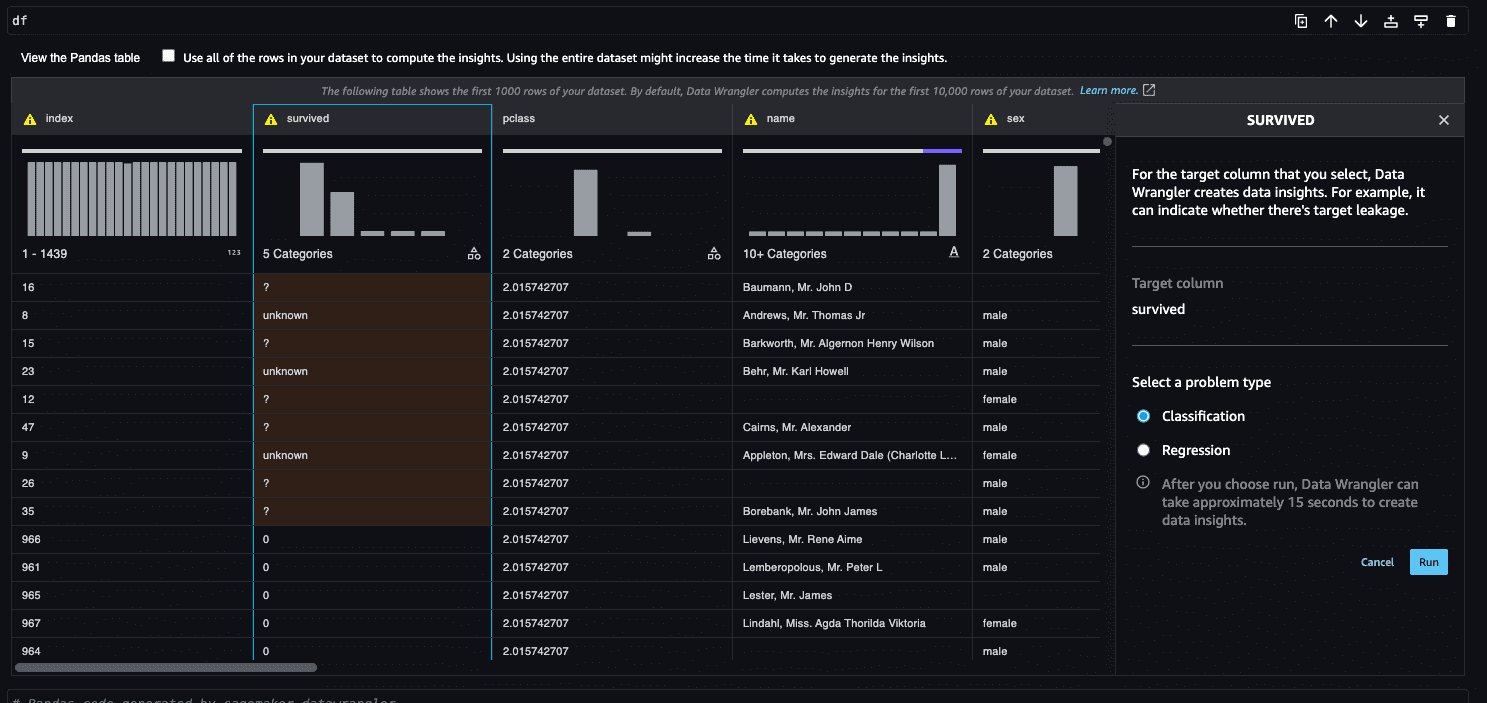

Choose the problem type based on the selected target column, which can be either Classification or Regression. For the survived column, the problem type is classification. Choose Run to generate insights for the target column.

The data prep widget lists the target column insights with recommendations and sample explanations to solve the issues with the target column data quality. It also automatically highlights the anomalous data in the column.

We choose the recommended transform Drop rare target values, because there are fewer observations for the rare target values.

The chosen transform is applied to the Pandas data frame, and the uncommon target values are removed from the survived column. See the following code:
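Dropping the rare target values can be sketched in Pandas as follows. The list of labels to drop comes from the inconsistent values named earlier in this post (?, unknown, and yes). The sample data frame and the variable names are assumptions, and the rule the widget uses to decide which labels count as rare is not reproduced here.

```python
import pandas as pd

# Made-up target column containing the inconsistent labels from this post.
df = pd.DataFrame({"survived": ["0", "1", "?", "1", "unknown", "0", "yes"]})

# Work on a deep copy so the original data frame is left untouched.
output_df = df.copy(deep=True)

# Drop rare target values for column survived.
rare_target_labels_to_drop = ["?", "unknown", "yes"]
output_df = output_df[~output_df["survived"].isin(rare_target_labels_to_drop)]
```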

The results of the applied transform are immediately visible on the data frame. To track the data preparation activities applied using the data prep widget, the transformation code is also generated in the following notebook cell.

Conclusion

In this post, we provided guidance on how the Studio data prep widget can help you analyze data distributions, explore data quality insights generated by the tool, and uncover potential issues such as outliers for each critical feature. Better data quality helps you train high-quality models, and the widget removes the undifferentiated heavy lifting by allowing you to transform data in the user interface and generate code for the notebook cells automatically. You can then use this code in your MLOps pipelines to build reproducibility, avoid wasting time on repetitive tasks, and reduce compatibility problems by speeding up the construction and deployment of data wrangling pipelines.

If you’re new to SageMaker Data Wrangler or Studio, refer to Get Started with SageMaker Data Wrangler. If you have any questions related to this post, please add them in the comments section.

About the Authors

Parth Patel is a Solutions Architect at AWS in the San Francisco Bay Area. Parth guides customers to accelerate their journey to the cloud and help them adopt and grow on the AWS Cloud successfully. He focuses on machine learning, environmental sustainability, and application modernization.

Isha Dua is a Senior Solutions Architect based in the San Francisco Bay Area. She helps AWS Enterprise customers grow by understanding their goals and challenges, and guiding them on how they can architect their applications in a cloud-native manner while making sure they are resilient and scalable. She’s passionate about machine learning technologies and environmental sustainability.

Hariharan Suresh is a Senior Solutions Architect at AWS. He is passionate about databases, machine learning, and designing innovative solutions. Prior to joining AWS, Hariharan was a product architect, core banking implementation specialist, and developer, and worked with BFSI organizations for over 11 years. Outside of technology, he enjoys paragliding and cycling.

Dani Mitchell is an AI/ML Specialist Solutions Architect at Amazon Web Services. He is focused on Computer Vision use cases and helping customers across EMEA to accelerate their ML journey.
