Use your own training scripts and automatically select the best model using hyperparameter optimization in Amazon SageMaker

The success of any machine learning (ML) pipeline depends not just on the quality of the model used, but also on the ability to train and iterate on this model. One of the key ways to improve an ML model is to choose better tunable parameters, known as hyperparameters. The process of searching for them is known as hyperparameter optimization (HPO). However, tuning manually is often cumbersome because of the size of the search space, which can involve thousands of training iterations.

This post shows how Amazon SageMaker enables you to not only bring your own model algorithm using script mode, but also use the built-in HPO algorithm. You will learn how to easily output the evaluation metric of choice to Amazon CloudWatch, from which you can extract this metric to guide the automatic HPO algorithm. You can then create an HPO tuning job that orchestrates several training jobs and associated compute resources. Upon completion, you can see the best training job according to the evaluation metric.

Solution overview

We walk through the following steps:

- Use SageMaker script mode to bring our own model on top of an AWS-managed container.

- Refactor our training script to print out our evaluation metric.

- Find the metric in CloudWatch Logs.

- Extract the metric from CloudWatch.

- Use HPO to select the best model by tuning on this evaluation metric.

- Monitor the HPO and find the best training job.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account.

- An Amazon Simple Storage Service (Amazon S3) bucket. For instructions on creating a bucket, see Step 1: Create your first S3 bucket.

- An AWS Identity and Access Management (IAM) role. To build and run an ML model using SageMaker, you must provide an IAM role that grants SageMaker permission to access Amazon S3 in your account to fetch the training and test datasets.

- A SageMaker notebook instance. For instructions, see Create a Notebook Instance. Attach the IAM role you created for SageMaker to this notebook instance.

Use custom algorithms on an AWS-managed container

Refer to Bring your own model with Amazon SageMaker script mode for a more detailed look at bringing a custom model into SageMaker using an AWS-managed container.

We use the MNIST dataset for this example. MNIST is a widely used dataset for handwritten digit classification, consisting of 70,000 labeled 28×28 pixel grayscale images of handwritten digits. The dataset is split into 60,000 training images and 10,000 test images, containing 10 classes (one for each digit).

- Open your notebook instance and run the following command to download the mnist.py file:

Before we get and store the data, let’s create a SageMaker session. We should also specify the S3 bucket and prefix to use for training and model data. The bucket should be within the same Region as the notebook instance, training, and hosting. The following code uses the default bucket if it already exists, or creates a new one if it doesn’t. We also must include the IAM role ARN to give training and hosting access to your data. We use get_execution_role() to get the IAM role that you created for your notebook instance.

- Create a session with the following code:
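The session setup described above can be sketched as follows. This is a minimal sketch, assuming the SageMaker Python SDK (`sagemaker`) is installed and the code runs on a notebook instance with an attached IAM role. The prefix value and the helper names `s3_location` and `create_sagemaker_context` are illustrative assumptions, not taken from the original notebook.

```python
def s3_location(bucket: str, prefix: str) -> str:
    """Build the S3 URI under which training and model data are stored."""
    return f"s3://{bucket}/{prefix.strip('/')}"


def create_sagemaker_context(prefix: str = "sagemaker/DEMO-pytorch-mnist"):
    """Return (session, bucket, prefix, role) for the rest of the walkthrough.

    The SDK is imported inside the function so that this module can be
    loaded on a machine without the SDK or AWS credentials.
    """
    import sagemaker
    from sagemaker import get_execution_role

    session = sagemaker.Session()
    bucket = session.default_bucket()  # default bucket, created if it does not exist
    role = get_execution_role()        # IAM role attached to the notebook instance
    return session, bucket, prefix, role
```

On a notebook instance, `session, bucket, prefix, role = create_sagemaker_context()` would give the objects used in the later steps.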

- Now let’s get the data, store it in our local folder /data, and upload it to Amazon S3:

We can now create an estimator to set up the PyTorch training job. We don’t focus on the actual training code here (mnist.py) in great detail. Let’s look at how we can easily invoke this training script to initialize a training job.

- In the following code, we include an entry point script called mnist.py that contains our custom training code:
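A minimal sketch of such an estimator, assuming the SageMaker Python SDK is installed. Only the entry point name mnist.py comes from the text. The framework version, instance type, and the `epochs` script argument are assumptions chosen for illustration, and `build_estimator` is a hypothetical helper name.

```python
# Constructor arguments for the PyTorch estimator. Every value except
# entry_point is an assumption made for this sketch.
ESTIMATOR_KWARGS = {
    "entry_point": "mnist.py",          # our custom training script
    "framework_version": "1.8.0",       # assumed PyTorch version of the managed container
    "py_version": "py3",
    "instance_count": 1,
    "instance_type": "ml.c5.2xlarge",   # assumed instance type
    "hyperparameters": {"epochs": 1},   # assumes mnist.py accepts --epochs
}


def build_estimator(role: str):
    """Create a PyTorch estimator that runs mnist.py on an AWS-managed container."""
    from sagemaker.pytorch import PyTorch  # requires the SageMaker Python SDK

    return PyTorch(role=role, **ESTIMATOR_KWARGS)
```

Keeping the arguments in a plain dictionary makes it easy to review the configuration before any AWS resources are touched.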

- To ensure that this training job has been configured correctly, with working training code, we can start a training job by fitting it to the data we uploaded to Amazon S3. SageMaker ensures our data is available in the local file system, so our training script can just read the data from disk:
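The upload and the first training run can be sketched together as follows. The sketch assumes `session` is a `sagemaker.Session` and `estimator` is the estimator created earlier. The helper name `upload_and_fit`, the local folder name, and the channel name `training` are assumptions about how mnist.py expects its input.

```python
def upload_and_fit(session, estimator, bucket, prefix, local_dir="data"):
    """Upload the local dataset to Amazon S3 and run one training job on it."""
    # Session.upload_data returns the S3 URI of the uploaded data.
    inputs = session.upload_data(path=local_dir, bucket=bucket, key_prefix=prefix)
    # The channel name "training" is an assumption about what mnist.py reads.
    estimator.fit({"training": inputs})
    return inputs
```

The returned S3 URI can be reused later as the input of the tuning job.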

However, we’re not creating a single training job. We use the automatic model tuning capability of SageMaker through the use of a hyperparameter tuning job. Model tuning is completely agnostic to the actual model algorithm. For more information on all the hyperparameters that you can tune, refer to Perform Automatic Model Tuning with SageMaker.

For each hyperparameter that we want to optimize, we have to define the following:

- A name

- A type (parameters can be integer, continuous, or categorical)

- A range of values to explore

- A scaling type (linear, logarithmic, reverse logarithmic, or auto); this lets us control how a specific parameter range will be explored
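To see why the scaling type matters, the following pure-Python illustration compares linear and logarithmic sampling over the learning rate range 0.001 to 0.1. It is a conceptual sketch only and makes no claim about how SageMaker samples internally. All function names are hypothetical.

```python
import math
import random


def sample_linear(low, high, rng):
    """Draw a value uniformly from the interval between low and high."""
    return rng.uniform(low, high)


def sample_logarithmic(low, high, rng):
    """Draw a value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def share_below(samples, threshold):
    """Fraction of samples that fall below the threshold."""
    return sum(s < threshold for s in samples) / len(samples)


rng = random.Random(0)
low, high = 0.001, 0.1
linear = [sample_linear(low, high, rng) for _ in range(10_000)]
logarithmic = [sample_logarithmic(low, high, rng) for _ in range(10_000)]

# Linear scaling rarely visits the lowest decade (0.001 to 0.01), whereas
# logarithmic scaling spends about half of its samples there.
print(f"linear < 0.01:      {share_below(linear, 0.01):.2f}")
print(f"logarithmic < 0.01: {share_below(logarithmic, 0.01):.2f}")
```

For a parameter such as the learning rate, whose useful values span several orders of magnitude, logarithmic scaling explores the small values far more thoroughly.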

We must also define the metric we’re optimizing for. It can be any numerical value as long as it’s visible in the training log and you can pass a regular expression to extract it.

If we look at line 181 in mnist.py, we can see how we print to the logger:

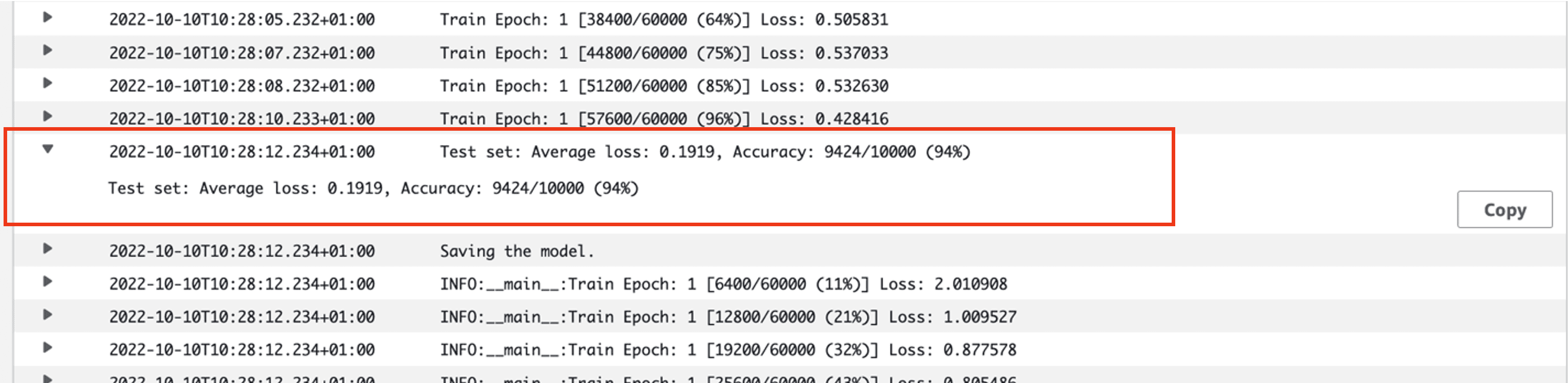
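In text form, a logging call of this kind might look like the following sketch. The exact wording of the log line is an assumption modeled on the common PyTorch MNIST example, not a copy of line 181, and `report_test_metrics` is a hypothetical helper name.

```python
import logging
import sys

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)
# Write to stdout so that the line ends up in the training job log.
logger.addHandler(logging.StreamHandler(sys.stdout))


def report_test_metrics(test_loss: float, correct: int, total: int) -> str:
    """Log the evaluation metrics on a single line and return that line."""
    message = "Test set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)".format(
        test_loss, correct, total, 100.0 * correct / total
    )
    logger.info(message)
    return message
```

What matters for HPO is that the metric appears in a stable, predictable format, so that a regular expression can pick it out later.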

In fact, we can see this output in the logs of the training job we just ran. When we open the log group /aws/sagemaker/TrainingJobs on the CloudWatch console, we should see a log stream whose name begins with pytorch-training-, followed by a timestamp and a generated name.

The following screenshot highlights the log we’re looking for.

Let’s now start on building our hyperparameter tuning job.

- As mentioned, we must first define some information about the hyperparameters, in the hyperparameter_ranges object, as follows:
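A minimal sketch of this object, assuming the SageMaker Python SDK is installed. The learning rate bounds come from the text. The list of batch sizes is an assumption, because the original values are not given in the text, and `build_hyperparameter_ranges` is a hypothetical helper name.

```python
LR_RANGE = (0.001, 0.1)                # learning rate bounds, as stated in the text
BATCH_SIZES = [32, 64, 128, 256, 512]  # assumed discrete values


def build_hyperparameter_ranges():
    """Return the hyperparameter_ranges object expected by the tuner."""
    from sagemaker.tuner import CategoricalParameter, ContinuousParameter

    return {
        "lr": ContinuousParameter(*LR_RANGE),
        "batch-size": CategoricalParameter(BATCH_SIZES),
    }
```

The dictionary keys must match the argument names that the training script accepts.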

Here we define our two hyperparameters. The learning rate (lr) is a continuous parameter in the range 0.001 to 0.1. The batch size (batch-size) is a categorical parameter with the preceding discrete values.

Next, we specify the objective metric that we’d like to tune and its definition. This includes the regular expression (regex) needed to extract that metric from the CloudWatch logs of the training job that we previously saw. We also specify a descriptive name average test loss and the objective type as Minimize, so the hyperparameter tuning seeks to minimize the objective metric when searching for the best hyperparameter setting.

- Specify the metric with the following code:
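A sketch of the metric definition, together with a local check of the regular expression. The metric name and objective type come from the text. The regex and the sample log line are assumptions that must match whatever your training script actually prints.

```python
import re

OBJECTIVE_METRIC_NAME = "average test loss"
OBJECTIVE_TYPE = "Minimize"
METRIC_DEFINITIONS = [
    # The capture group is the numeric value that is used as the metric.
    {"Name": OBJECTIVE_METRIC_NAME, "Regex": r"Test set: Average loss: ([0-9\.]+)"}
]


def extract_metric(log_line: str):
    """Apply the regex locally to preview what would be captured from the log."""
    match = re.search(METRIC_DEFINITIONS[0]["Regex"], log_line)
    return float(match.group(1)) if match else None


# Assumed example of a line written by the training script.
sample = "Test set: Average loss: 0.0480, Accuracy: 9837/10000 (98%)"
print(extract_metric(sample))
```

Testing the regex against a real line copied from CloudWatch Logs before launching the tuning job is a cheap way to catch a pattern that never matches.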

Now we’re ready to create our HyperparameterTuner object. In addition to the objective metric name, type, and definition, we pass in the hyperparameter_ranges object and the estimator we previously created. We also specify the number of jobs we want to run in total, along with the number that should run in parallel. We have chosen the maximum number of jobs as 9, but you would typically opt for a much higher number (such as 50) for optimal performance.

- Create the HyperparameterTuner object with the following code:
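A minimal sketch of the tuner, assuming the SageMaker Python SDK is installed. The job counts (9 in total, 3 in parallel), the metric name, and the objective type come from the text, while `build_tuner` and `TUNER_SETTINGS` are hypothetical names.

```python
TUNER_SETTINGS = {
    "objective_metric_name": "average test loss",
    "objective_type": "Minimize",
    "max_jobs": 9,           # total number of training jobs
    "max_parallel_jobs": 3,  # number of training jobs that run at the same time
}


def build_tuner(estimator, hyperparameter_ranges, metric_definitions):
    """Create the HyperparameterTuner from the objects defined in earlier steps."""
    from sagemaker.tuner import HyperparameterTuner

    return HyperparameterTuner(
        estimator=estimator,
        hyperparameter_ranges=hyperparameter_ranges,
        metric_definitions=metric_definitions,
        **TUNER_SETTINGS,
    )
```

To the best of our knowledge, the constructor also accepts a `strategy` argument that defaults to Bayesian search. Check the SDK reference before relying on other values.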

Before we start the tuning job, it’s worth noting how the combinations of hyperparameters are determined. To get good results, you need to choose the right ranges to explore. By default, the Bayesian search strategy is used, described further in How Hyperparameter Tuning works.

With Bayesian optimization, hyperparameter tuning is treated as a regression problem. To solve this regression problem, the tuner makes guesses about which hyperparameter combinations will get the best results, and runs training jobs to test these values. It then uses regression to choose the next set of hyperparameter values to test. There is a clear exploit/explore trade-off that the search strategy makes here. It can choose hyperparameter values close to the combination that resulted in the best previous training job to incrementally improve performance. Or, it may choose values further away, to explore a new range of values that isn’t yet well understood.

You may specify other search strategies, however. The following strategies are supported in SageMaker:

- Grid search – Tries every possible combination among the range of hyperparameters that is specified.

- Random search – Tries random combinations among the range of values specified. It doesn’t depend on the results of previous training jobs, so you can run the maximum number of concurrent training jobs without affecting the performance of the tuning.

- Hyperband search – Uses both intermediate and final results of training jobs to reallocate epochs to well-utilized hyperparameter configurations, and automatically stops those that underperform.
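The difference between grid search and random search can be illustrated in a few lines of pure Python. This is a conceptual sketch, not SageMaker's implementation. The search space values and the function names are made up for the example.

```python
import itertools
import random

# Illustrative search space with discrete candidate values.
SEARCH_SPACE = {
    "lr": [0.001, 0.01, 0.1],
    "batch-size": [32, 64, 128],
}


def grid_search(space):
    """Return every combination of the listed values."""
    names = list(space)
    return [dict(zip(names, combo)) for combo in itertools.product(*space.values())]


def random_search(space, n_trials, seed=0):
    """Return n_trials combinations, each drawn independently at random."""
    rng = random.Random(seed)
    return [
        {name: rng.choice(values) for name, values in space.items()}
        for _ in range(n_trials)
    ]


print(len(grid_search(SEARCH_SPACE)), "grid combinations")
print(random_search(SEARCH_SPACE, n_trials=4))
```

Because each random trial is drawn without looking at earlier results, all trials could run in parallel. The grid grows multiplicatively with every added hyperparameter.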

You may also explore bringing your own algorithm, as explained in Bring your own hyperparameter optimization algorithm on Amazon SageMaker.

- We then launch training on the tuner object itself (not the estimator), calling .fit() and passing in the S3 path to our train and test dataset:
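A minimal sketch of this call, assuming `tuner` is a HyperparameterTuner and `inputs` is the S3 URI of the uploaded data. The helper name `launch_tuning_job` and the channel name `training` are assumptions.

```python
def launch_tuning_job(tuner, inputs, channel="training"):
    """Start the tuning job by calling fit on the tuner, not on the estimator."""
    # The tuner launches the individual training jobs on our behalf.
    tuner.fit({channel: inputs})
```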

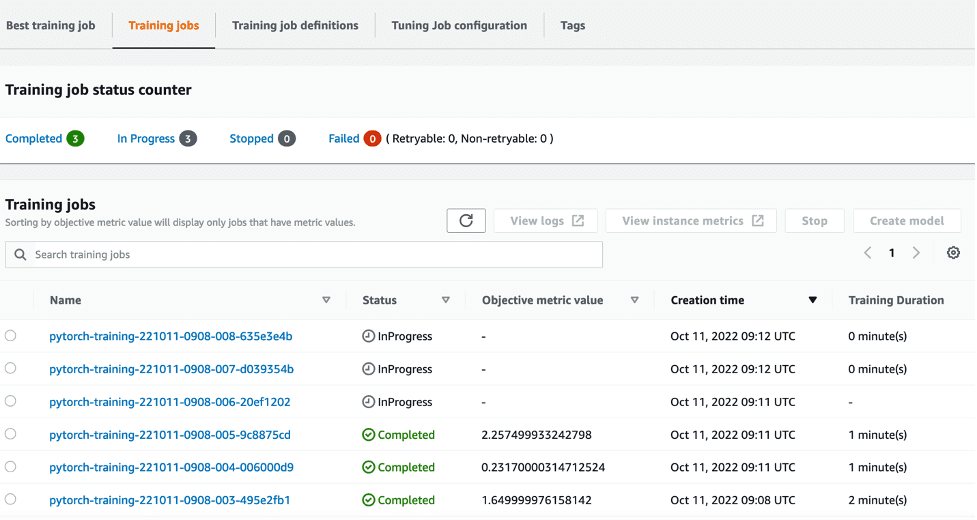

We can then follow the progress of our tuning job on the SageMaker console, on the Hyperparameter tuning jobs page. The tuning job spins up the underlying compute resources necessary by orchestrating each individual training run and its associated compute.

Then it’s easy to see all the individual training jobs that have been completed or are in progress, along with their associated objective metric value. In the following screenshot, we can see the first batch of training jobs is complete, which contains three in total according to our specified max_parallel_jobs value of 3. Upon completion, we can find the best training job—the one that minimizes average test loss—on the Best training job tab.
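The selection of the best training job can also be reproduced in code. The following sketch picks the best job from a list of training job summaries. The summary shape is assumed to follow the SageMaker API response for listing the training jobs of a tuning job, and the job names and values are made up. If you use the SageMaker Python SDK, the tuner object should also expose a `best_training_job()` method that returns the name directly.

```python
METRIC_KEY = "FinalHyperParameterTuningJobObjectiveMetric"


def best_training_job(summaries, objective_type="Minimize"):
    """Return the summary with the best final objective metric, or None."""
    # Jobs that are still running have no final metric yet, so skip them.
    finished = [s for s in summaries if METRIC_KEY in s]
    if not finished:
        return None
    pick = min if objective_type == "Minimize" else max
    return pick(finished, key=lambda s: s[METRIC_KEY]["Value"])


# Made-up summaries for illustration only.
summaries = [
    {"TrainingJobName": "job-a", METRIC_KEY: {"MetricName": "average test loss", "Value": 0.061}},
    {"TrainingJobName": "job-b", METRIC_KEY: {"MetricName": "average test loss", "Value": 0.048}},
    {"TrainingJobName": "job-c"},  # still in progress
]
print(best_training_job(summaries)["TrainingJobName"])
```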

Clean up

To avoid incurring future charges, delete the resources you initialized. These are the S3 bucket, IAM role, and SageMaker notebook instance.

Conclusion

In this post, we discussed how we can bring our own model into SageMaker, and then use automated hyperparameter optimization to select the best training job. We used the popular MNIST dataset to look at how we can specify a custom objective metric for the HPO job to optimize. By extracting this objective metric from CloudWatch, and specifying various hyperparameter values, we can easily launch and monitor the HPO job.

If you need more information, or want to see how our customers are using HPO, refer to Amazon SageMaker Automatic Model Tuning. Adapt your own model for automated hyperparameter optimization in SageMaker today.

About the author

Sam Price is a Professional Services Consultant specializing in AI/ML and data analytics at Amazon Web Services. He works closely with public sector customers in healthcare and life sciences to solve challenging problems. When not doing this, Sam enjoys playing guitar and tennis, and seeing his favorite indie bands.
