Deploy Amazon SageMaker Autopilot models to serverless inference endpoints

Amazon SageMaker Autopilot automatically builds, trains, and tunes the best machine learning (ML) models based on your data, while allowing you to maintain full control and visibility. Autopilot can also deploy trained models to real-time inference endpoints automatically.

If you have workloads with spiky or unpredictable traffic patterns that can tolerate cold starts, deploying the model to a serverless inference endpoint can be more cost-efficient than keeping a real-time endpoint running.

Amazon SageMaker Serverless Inference is a purpose-built inference option, ideal for workloads that have unpredictable traffic patterns and can tolerate cold starts. Unlike a real-time inference endpoint, which is backed by a long-running compute instance, a serverless endpoint provisions resources on demand with built-in auto scaling. Serverless endpoints scale automatically based on the number of incoming requests and scale down to zero when there are no incoming requests, helping you minimize your costs.

In this post, we show how to deploy Autopilot trained models to serverless inference endpoints using the Boto3 libraries for Amazon SageMaker.

Autopilot training modes

Before creating an Autopilot experiment, you can either let Autopilot select the training mode automatically, or you can select the training mode manually.

Autopilot currently supports three training modes:

- Auto – Based on dataset size, Autopilot automatically chooses either ensembling or HPO mode. For datasets larger than 100 MB, Autopilot chooses HPO; otherwise, it chooses ensembling.

- Ensembling – Autopilot uses AutoGluon's ensembling technique, which is based on model stacking, to produce an optimal predictive model.

- Hyperparameter optimization (HPO) – Autopilot finds the best version of a model by tuning hyperparameters using Bayesian optimization or multi-fidelity optimization while running training jobs on your dataset. HPO mode selects the algorithms most relevant to your dataset and selects the best range of hyperparameters to tune your models.
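To make the Auto rule concrete, here is a small sketch. The helper name `auto_mode_choice` and the threshold constant are illustrative, not part of any SDK; the string values are the ones the `Mode` field of `AutoMLJobConfig` accepts in the `create_auto_ml_job` API.

```python
# Hypothetical helper mirroring the documented Auto-mode rule:
# datasets larger than 100 MB -> HPO, otherwise -> ensembling.
HPO_THRESHOLD_MB = 100

# Values accepted by the "Mode" field of AutoMLJobConfig.
TRAINING_MODES = ("AUTO", "ENSEMBLING", "HYPERPARAMETER_TUNING")


def auto_mode_choice(dataset_size_mb: float) -> str:
    """Return the Mode value that the Auto rule would resolve to."""
    if dataset_size_mb < 0:
        raise ValueError("dataset size cannot be negative")
    if dataset_size_mb > HPO_THRESHOLD_MB:
        return "HYPERPARAMETER_TUNING"
    return "ENSEMBLING"
```

For example, a 5.8 MB CSV resolves to `ENSEMBLING`, and a 250 MB dataset resolves to `HYPERPARAMETER_TUNING`.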

To learn more about Autopilot training modes, refer to Training modes.

Solution overview

In this post, we use the UCI Bank Marketing dataset to predict if a client will subscribe to a term deposit offered by the bank. This is a binary classification problem.

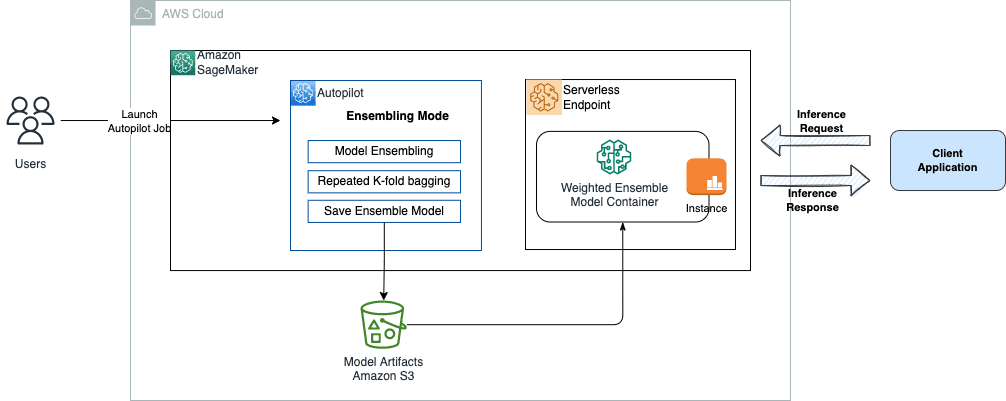

We launch two Autopilot jobs using the Boto3 libraries for SageMaker. The first job uses ensembling as the training mode. We then deploy the single ensemble model it generates to a serverless endpoint and send inference requests to this hosted endpoint.

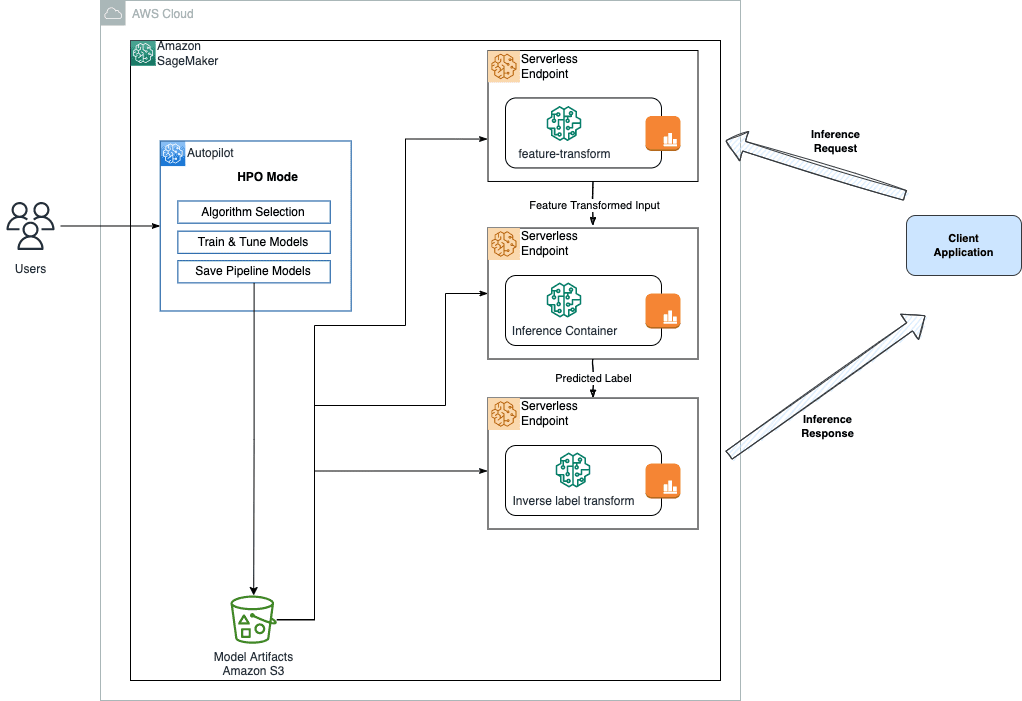

The second job uses the HPO training mode. For classification problem types, Autopilot generates three inference containers. We extract these three inference containers and deploy them to separate serverless endpoints. Then we send inference requests to these hosted endpoints.

For more information about regression and classification problem types, refer to Inference container definitions for regression and classification problem types.

We can also launch Autopilot jobs from the Amazon SageMaker Studio UI. If you launch jobs from the UI, make sure to turn off the Auto deploy option in the Deployment and Advanced settings section. Otherwise, Autopilot will deploy the best candidate to a real-time endpoint.

Prerequisites

Ensure you have the latest version of Boto3 and the SageMaker Python packages installed:

pip install -U boto3 sagemaker

We need the SageMaker package version 2.110.0 or later and Boto3 version 1.24.84 or later.
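If you want to verify these minimums from Python, a sketch like the following can help. It reads installed versions with the standard library's `importlib.metadata`. The helper names and the simple numeric version parsing are assumptions of this sketch; it ignores pre-release tags such as `rc1`.

```python
from importlib import metadata

# Minimum versions stated in this post.
MIN_VERSIONS = {"sagemaker": "2.110.0", "boto3": "1.24.84"}


def parse_version(version: str) -> tuple:
    """Turn '2.110.0' into (2, 110, 0), keeping only leading digits per part."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def meets_minimum(installed: str, required: str) -> bool:
    """Compare versions after padding with zeros so '2.110' equals '2.110.0'."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_installed(min_versions=MIN_VERSIONS) -> dict:
    """Map each package to True/False; a missing package counts as False."""
    results = {}
    for name, minimum in min_versions.items():
        try:
            results[name] = meets_minimum(metadata.version(name), minimum)
        except metadata.PackageNotFoundError:
            results[name] = False
    return results
```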

Launch an Autopilot job with ensembling mode

To launch an Autopilot job using the SageMaker Boto3 libraries, we use the create_auto_ml_job API. We pass AutoMLJobConfig, InputDataConfig, and AutoMLJobObjective, among other parameters, as inputs to create_auto_ml_job. See the following code:
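A minimal sketch of that call for the ensembling job follows. The S3 URIs, job name, and role ARN are placeholders, and the target column name `y` (the label column in the UCI Bank Marketing CSV) is an assumption about how your data is prepared. The `F1` objective is also an assumed choice, and the helper names are hypothetical. Setting `Mode` to `ENSEMBLING` in `AutoMLJobConfig` selects the ensembling training mode.

```python
def build_automl_job_request(job_name, s3_input_uri, s3_output_uri, role_arn,
                             target_column="y", mode="ENSEMBLING",
                             completion_criteria=None):
    """Assemble keyword arguments for create_auto_ml_job (placeholders throughout)."""
    job_config = {"Mode": mode}
    if completion_criteria:
        job_config["CompletionCriteria"] = dict(completion_criteria)
    return {
        "AutoMLJobName": job_name,
        "InputDataConfig": [
            {
                "DataSource": {
                    "S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": s3_input_uri}
                },
                "TargetAttributeName": target_column,
            }
        ],
        "OutputDataConfig": {"S3OutputPath": s3_output_uri},
        "ProblemType": "BinaryClassification",
        "AutoMLJobObjective": {"MetricName": "F1"},
        "AutoMLJobConfig": job_config,
        "RoleArn": role_arn,
    }


def launch_automl_job(sm_client, request):
    """Submit the job; sm_client is e.g. boto3.client("sagemaker")."""
    response = sm_client.create_auto_ml_job(**request)
    return response["AutoMLJobArn"]


# Example usage (placeholders; not executed here):
# import boto3
# request = build_automl_job_request(
#     "bank-ensembling-job", "s3://your-bucket/bank/train/",
#     "s3://your-bucket/bank/output/", "arn:aws:iam::111122223333:role/YourRole")
# job_arn = launch_automl_job(boto3.client("sagemaker"), request)
```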

When the job completes, Autopilot reports the best model in the BestCandidate field, which contains the InferenceContainers required to deploy the model to inference endpoints. To get the BestCandidate for the preceding job, we use the describe_auto_ml_job function:
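One way to wait for the job and read `BestCandidate` is to poll `describe_auto_ml_job` until `AutoMLJobStatus` reaches a terminal state. This is a sketch: the polling interval and the helper name are assumptions. The `sleep` parameter exists only so the loop can be tested without actually waiting.

```python
import time

# AutoMLJobStatus values after which the job no longer changes.
TERMINAL_STATUSES = {"Completed", "Failed", "Stopped"}


def wait_for_best_candidate(sm_client, job_name, poll_seconds=60, sleep=time.sleep):
    """Poll describe_auto_ml_job until the job ends, then return BestCandidate."""
    while True:
        job = sm_client.describe_auto_ml_job(AutoMLJobName=job_name)
        status = job["AutoMLJobStatus"]
        if status in TERMINAL_STATUSES:
            break
        sleep(poll_seconds)

    if status != "Completed":
        reason = job.get("FailureReason", "no reason reported")
        raise RuntimeError(f"Autopilot job {job_name} ended as {status}: {reason}")

    # best["InferenceContainers"] lists the containers to deploy
    # (each with Image, ModelDataUrl, and Environment).
    best = job["BestCandidate"]
    return best
```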

Deploy the trained model

We now deploy the preceding inference container to a serverless endpoint. First, we create a model from the inference container. Then we create an endpoint configuration in which we specify the model name along with the MemorySizeInMB and MaxConcurrency values for the serverless endpoint. Finally, we create an endpoint from that endpoint configuration.

We recommend choosing your endpoint’s memory size according to your model size. The memory size should be at least as large as your model size. Your serverless endpoint has a minimum RAM size of 1024 MB (1 GB), and the maximum RAM size you can choose is 6144 MB (6 GB).

The memory sizes you can choose are 1024 MB, 2048 MB, 3072 MB, 4096 MB, 5120 MB, or 6144 MB.
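As a rough rule of thumb, you can map your model artifact size to the smallest allowed memory size that fits it. The helper below is hypothetical. Treat the artifact size as a lower bound, because the loaded model and the serving container typically need extra memory; the optional `headroom` factor lets you account for that.

```python
# Memory sizes a serverless endpoint accepts, in MB (1 GB steps from 1 GB to 6 GB).
SERVERLESS_MEMORY_SIZES_MB = (1024, 2048, 3072, 4096, 5120, 6144)


def pick_memory_size_mb(model_size_mb: float, headroom: float = 1.0) -> int:
    """Return the smallest allowed memory size >= model_size_mb * headroom.

    headroom > 1.0 reserves extra room for the loaded model and serving stack.
    """
    if model_size_mb < 0 or headroom < 1.0:
        raise ValueError("model size must be >= 0 and headroom must be >= 1.0")
    needed = model_size_mb * headroom
    for size in SERVERLESS_MEMORY_SIZES_MB:
        if size >= needed:
            return size
    raise ValueError(f"{needed:.0f} MB exceeds the 6144 MB serverless maximum")
```

For example, a 1,500 MB model maps to 2048 MB, or to 3072 MB with `headroom=1.5`.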

To help determine whether a serverless endpoint is the right deployment option from a cost and performance perspective, we encourage you to refer to the SageMaker Serverless Inference Benchmarking Toolkit, which tests different endpoint configurations and compares the optimal one against a comparable real-time hosting instance.

Note that serverless endpoints accept only single-model (single-container) inference containers. Autopilot in ensembling mode generates a single model, so we can deploy this model container as is to the endpoint. See the following code:
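The following sketch performs the three steps described earlier (`create_model`, `create_endpoint_config` with a `ServerlessConfig`, and `create_endpoint`) for one container taken from `BestCandidate["InferenceContainers"]`. The naming scheme, the default memory and concurrency values, and the helper name are assumptions. `sm_client` is expected to be a Boto3 SageMaker client such as `boto3.client("sagemaker")`. The function optionally blocks on the `endpoint_in_service` waiter until the endpoint is InService.

```python
def deploy_serverless(sm_client, base_name, container, role_arn,
                      memory_size_mb=2048, max_concurrency=5, wait=True):
    """Create model, serverless endpoint config, and endpoint for one container.

    container is one entry of BestCandidate["InferenceContainers"].
    Returns the endpoint name.
    """
    model_name = f"{base_name}-model"  # hypothetical naming scheme
    config_name = f"{base_name}-config"
    endpoint_name = f"{base_name}-endpoint"

    # 1. Register the Autopilot container as a SageMaker model.
    sm_client.create_model(
        ModelName=model_name,
        ExecutionRoleArn=role_arn,
        PrimaryContainer={
            "Image": container["Image"],
            "ModelDataUrl": container["ModelDataUrl"],
            "Environment": container.get("Environment", {}),
        },
    )

    # 2. A ServerlessConfig (instead of an instance type) makes the variant serverless.
    sm_client.create_endpoint_config(
        EndpointConfigName=config_name,
        ProductionVariants=[
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "ServerlessConfig": {
                    "MemorySizeInMB": memory_size_mb,
                    "MaxConcurrency": max_concurrency,
                },
            }
        ],
    )

    # 3. Create the endpoint and optionally block until it is InService.
    sm_client.create_endpoint(EndpointName=endpoint_name, EndpointConfigName=config_name)
    if wait:
        sm_client.get_waiter("endpoint_in_service").wait(EndpointName=endpoint_name)
    return endpoint_name
```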

When the serverless inference endpoint is InService, we can test it by sending an inference request and observing the predictions. The following diagram illustrates the architecture of this setup.

Note that we can send raw data as a payload to the endpoint. The ensemble model generated by Autopilot automatically incorporates all required feature-transform and inverse-label transform steps, along with the algorithm model and packages, into a single model.

Send inference request to the trained model

Use the following code to send an inference request to your model trained using ensembling mode:
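A minimal sketch, assuming the endpoint accepts a single CSV row with no header and no target column, with columns in the same order as the training data. `runtime_client` is expected to be `boto3.client("sagemaker-runtime")`, and the helper names are hypothetical. The response body is returned as text; its exact format depends on the container's default output settings.

```python
import csv
import io


def to_csv_row(values):
    """Serialize one record as a CSV line without header or trailing newline."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(values)
    return buffer.getvalue().rstrip("\r\n")


def predict(runtime_client, endpoint_name, values):
    """Send one raw CSV record to the endpoint and return the decoded response."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=to_csv_row(values).encode("utf-8"),
    )
    # Body is a streaming object; read it fully and decode it.
    return response["Body"].read().decode("utf-8").strip()


# Example usage (illustrative values; not executed here):
# import boto3
# runtime = boto3.client("sagemaker-runtime")
# row = [56, "housemaid", "married", "basic.4y", "no", "no", "no", "telephone",
#        "may", "mon", 261, 1, 999, 0, "nonexistent", 1.1, 93.994, -36.4, 4.857, 5191.0]
# print(predict(runtime, "bank-ens-endpoint", row))
```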

Launch an Autopilot Job with HPO mode

In HPO mode, for CompletionCriteria, besides MaxRuntimePerTrainingJobInSeconds and MaxAutoMLJobRuntimeInSeconds, we can also specify MaxCandidates to limit the number of candidates an Autopilot job generates. These parameters are optional; we set them here only to limit the job runtime for this demonstration. See the following code:
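As a sketch, the HPO job can reuse the same `create_auto_ml_job` request as the ensembling job, with a new job name and an `AutoMLJobConfig` like the one below. The specific limits are illustrative values chosen to keep a demonstration short, not recommendations, and the helper name is hypothetical.

```python
def hpo_job_config(max_candidates=10, max_runtime_per_job_s=600, max_job_runtime_s=3600):
    """AutoMLJobConfig for HPO mode, with optional limits to keep a demo short."""
    for value in (max_candidates, max_runtime_per_job_s, max_job_runtime_s):
        if not isinstance(value, int) or value <= 0:
            raise ValueError("completion limits must be positive integers")
    return {
        "Mode": "HYPERPARAMETER_TUNING",
        "CompletionCriteria": {
            "MaxCandidates": max_candidates,
            "MaxRuntimePerTrainingJobInSeconds": max_runtime_per_job_s,
            "MaxAutoMLJobRuntimeInSeconds": max_job_runtime_s,
        },
    }
```

You would pass the result as `AutoMLJobConfig=hpo_job_config()` to `create_auto_ml_job`, alongside `AutoMLJobName`, `InputDataConfig`, `OutputDataConfig`, `ProblemType`, `AutoMLJobObjective`, and `RoleArn`.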

To get the BestCandidate for the preceding job, we can again use the describe_auto_ml_job function:

Deploy the trained model

Autopilot in HPO mode for the classification problem type generates three inference containers.

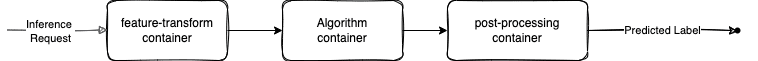

The first container handles the feature-transform steps. Next, the algorithm container generates the predicted_label with the highest probability. Finally, the post-processing inference container performs an inverse transform on the predicted label and maps it to the original label. For more information, refer to Inference container definitions for regression and classification problem types.

We extract these three inference containers and deploy them to separate serverless endpoints. For inference, we invoke the endpoints in sequence: we send the payload first to the feature-transform container, then pass its output to the algorithm container, and finally pass the algorithm container's output to the post-processing container, which outputs the predicted label.

The following diagram illustrates the architecture of this setup.

We extract the three inference containers from the BestCandidate with the following code:
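A minimal sketch of extracting the containers and deploying each to its own serverless endpoint follows. It assumes `best_candidate` is the `BestCandidate` dictionary returned by `describe_auto_ml_job` and `sm_client` is a Boto3 SageMaker client. The `-c0`, `-c1`, `-c2` naming scheme, the default sizes, and the helper name are hypothetical. The function issues all create calls before waiting, so the three deployments proceed in parallel. It returns endpoint names in container order, which is the order needed for inference.

```python
def deploy_candidate_containers(sm_client, best_candidate, base_name, role_arn,
                                memory_size_mb=2048, max_concurrency=5, wait=True):
    """Deploy each inference container to its own serverless endpoint.

    Returns endpoint names in the same order as BestCandidate["InferenceContainers"]
    (feature transform, algorithm, inverse label transform for classification).
    """
    endpoint_names = []
    for index, container in enumerate(best_candidate["InferenceContainers"]):
        prefix = f"{base_name}-c{index}"  # hypothetical naming scheme
        model_name, config_name = f"{prefix}-model", f"{prefix}-config"
        endpoint_name = f"{prefix}-endpoint"

        sm_client.create_model(
            ModelName=model_name,
            ExecutionRoleArn=role_arn,
            PrimaryContainer={
                "Image": container["Image"],
                "ModelDataUrl": container["ModelDataUrl"],
                "Environment": container.get("Environment", {}),
            },
        )
        sm_client.create_endpoint_config(
            EndpointConfigName=config_name,
            ProductionVariants=[{
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "ServerlessConfig": {"MemorySizeInMB": memory_size_mb,
                                     "MaxConcurrency": max_concurrency},
            }],
        )
        sm_client.create_endpoint(EndpointName=endpoint_name,
                                  EndpointConfigName=config_name)
        endpoint_names.append(endpoint_name)

    # Wait only after all create calls so the endpoints come up in parallel.
    if wait:
        waiter = sm_client.get_waiter("endpoint_in_service")
        for name in endpoint_names:
            waiter.wait(EndpointName=name)
    return endpoint_names
```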

Send inference request to the trained model

For inference, we send the payload in sequence: first to the feature-transform container, then to the model container, and finally to the inverse-label transform container.

See the following code:
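The chained calls can be sketched as a loop over the endpoint names in container order, feeding each response body into the next request. One assumption here deserves care: the sketch forwards each response's `ContentType` as the next request's `ContentType`. That works only if each container accepts the format the previous one emits, so check the `Environment` settings of your `InferenceContainers` (for example, `SAGEMAKER_DEFAULT_INVOCATIONS_ACCEPT`) and adjust if needed. The helper name is hypothetical.

```python
def invoke_chain(runtime_client, endpoint_names, payload, content_type="text/csv"):
    """Send payload through endpoints in order, feeding each output to the next.

    Assumes each container accepts the content type the previous one returns,
    and that the last container returns text (the predicted label).
    """
    body = payload.encode("utf-8") if isinstance(payload, str) else payload
    for name in endpoint_names:
        response = runtime_client.invoke_endpoint(
            EndpointName=name, ContentType=content_type, Body=body
        )
        body = response["Body"].read()
        # Forward the format this container produced as the next request's type.
        content_type = response.get("ContentType", content_type)
    return body.decode("utf-8").strip()


# Example usage (not executed here), with names from the deployment step:
# import boto3
# label = invoke_chain(boto3.client("sagemaker-runtime"),
#                      ["bank-hpo-c0-endpoint", "bank-hpo-c1-endpoint",
#                       "bank-hpo-c2-endpoint"],
#                      "56,housemaid,married,basic.4y,no,no,no,telephone,may,mon,"
#                      "261,1,999,0,nonexistent,1.1,93.994,-36.4,4.857,5191.0")
```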

The full implementation of this example is available in the following Jupyter notebook.

Clean up

To clean up resources, you can delete the created serverless endpoints, endpoint configs, and models:
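A sketch of that cleanup, assuming `sm_client` is a Boto3 SageMaker client. For each endpoint, it looks up the endpoint configuration and model names with `describe_endpoint` and `describe_endpoint_config`, then deletes the endpoint, its configuration, and its models. The helper name is hypothetical.

```python
def delete_serverless_resources(sm_client, endpoint_names):
    """Delete each endpoint plus the endpoint config and models behind it."""
    for endpoint_name in endpoint_names:
        # Resolve the config and model names before the endpoint disappears.
        config_name = sm_client.describe_endpoint(
            EndpointName=endpoint_name)["EndpointConfigName"]
        variants = sm_client.describe_endpoint_config(
            EndpointConfigName=config_name)["ProductionVariants"]
        model_names = [variant["ModelName"] for variant in variants]

        sm_client.delete_endpoint(EndpointName=endpoint_name)
        sm_client.delete_endpoint_config(EndpointConfigName=config_name)
        for model_name in model_names:
            sm_client.delete_model(ModelName=model_name)
```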

Conclusion

In this post, we showed how to deploy Autopilot-generated models, trained in both ensembling and HPO modes, to serverless inference endpoints. This solution helps you use cost-efficient, fully managed ML services like Autopilot to generate models quickly from raw data, and then deploy them to fully managed serverless inference endpoints with built-in auto scaling to reduce costs.

We encourage you to try this solution with a dataset relevant to your business KPIs. You can refer to the solution implemented in a Jupyter notebook in the GitHub repo.

Additional references

- If you’re new to Autopilot, we encourage you to refer to Get started with Amazon SageMaker Autopilot.

- To determine the optimal configuration for your serverless endpoint from a cost and performance perspective, we encourage you to explore our Serverless Inference Benchmarking Toolkit. For more information, refer to Introducing the Amazon SageMaker Serverless Inference Benchmarking Toolkit.

- To learn more about Autopilot training modes, refer to Amazon SageMaker Autopilot is up to eight times faster with new ensemble training mode powered by AutoGluon.

- For an overview on how to deploy an XGBoost model to a serverless inference endpoint, we encourage you to refer to this example notebook.

About the Author

Praveen Chamarthi is a Senior AI/ML Specialist with Amazon Web Services. He is passionate about AI/ML and all things AWS. He helps customers across the Americas to scale, innovate, and operate ML workloads efficiently on AWS. In his spare time, Praveen loves to read and enjoys sci-fi movies.
