LightOn Lyra-fr model is now available on Amazon SageMaker

We are thrilled to announce the availability of the LightOn Lyra-fr foundation model for customers using Amazon SageMaker. LightOn is a leader in building foundation models specializing in European languages. Lyra-fr is a state-of-the-art French language model that can be used to build conversational AI, copywriting tools, text classifiers, semantic search, and more. You can easily try out this model and use it with Amazon SageMaker JumpStart. JumpStart is the machine learning (ML) hub of SageMaker that provides access to foundation models in addition to built-in algorithms and end-to-end solution templates to help you quickly get started with ML.

In this post, we demonstrate how to use the Lyra-fr model in SageMaker.

Foundation models

Foundation models typically have billions of parameters, are trained on massive datasets, and can be adapted to a wide range of use cases. The best-known foundation models today are used to summarize articles, create digital art, and generate code from simple text instructions. These models are expensive to train, so customers prefer to start from existing pre-trained foundation models and fine-tune them as needed rather than train their own. SageMaker provides a curated list of models that you can choose from on the SageMaker console and test directly in the web interface. When you want to use a foundation model at scale, you can do so without leaving SageMaker by using pre-built notebooks from model providers. Because the models are hosted and deployed on AWS, you can rest assured that your data, whether used to evaluate a model or to run it at scale, is never shared with third parties.

Lyra-fr is the largest French language model available on the market today. It is a 10-billion-parameter model, trained and made accessible by LightOn. Lyra-fr was trained on a large curated corpus of French text, and it can write human-like text and solve complex tasks such as classification, question answering, and summarization, all while maintaining a reasonable inference speed of 1–2 seconds for an average request. You can describe the task you want to perform in natural language, and Lyra-fr generates responses at the level of a native French speaker. Lyra-fr offers business-ready intelligence primitives, such as steerable generation and text classification, in just a few lines of code. For more challenging tasks, you can improve performance with few-shot learning: include a couple of input-output examples in the prompt.
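
To make few-shot prompting concrete, here is a minimal Python sketch that assembles an instruction, a few input-output pairs, and a new input into a single prompt string. The function name build_few_shot_prompt and the "Entrée"/"Sortie" labels are illustrative assumptions, not a format prescribed by LightOn; the resulting string is simply the text you would send to the model, which then continues after the final "Sortie :".

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Combine an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    # Each example is shown as an input/output pair; the labels are an
    # illustrative choice (hypothetical), not a format required by Lyra-fr.
    for source, target in examples:
        lines.append(f"Entrée : {source}")
        lines.append(f"Sortie : {target}")
        lines.append("")
    # The new input ends with an empty "Sortie :" so the model fills it in.
    lines.append(f"Entrée : {query}")
    lines.append("Sortie :")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Traduis chaque mot en anglais.",
    [("chat", "cat"), ("chien", "dog")],
    "cheval",
)
print(prompt)
```

Keeping the examples and the query in the same layout is the point of the technique: the model infers the task from the pattern rather than from the instruction alone.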

Using Lyra-fr on SageMaker

We’ll walk you through using the Lyra-fr model in three simple steps:

- Discover – Find the Lyra-fr model on the AWS Management Console for SageMaker.

- Test – Test the model using the web interface.

- Deploy – Use a notebook to deploy and test the advanced capabilities of the model.

Discover

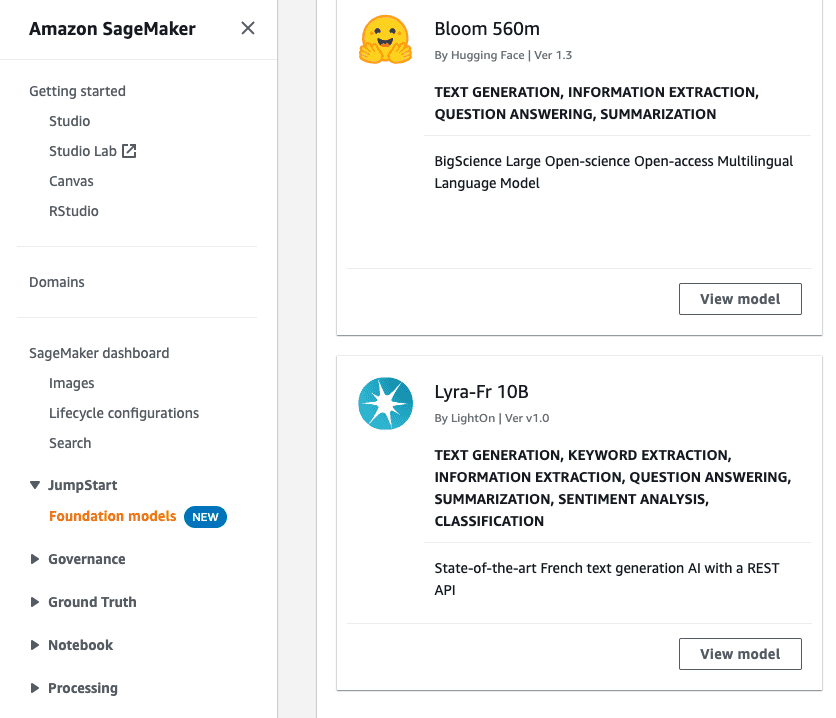

To make it easy to discover foundation models like Lyra-fr, we have consolidated all the foundation models in one place. To find the Lyra-fr model:

- Sign in to the AWS Management Console for SageMaker.

- On the left navigation panel, you should see a section called JumpStart with Foundation models under it. Request access to this feature if you don’t have access yet.

- Once your account is allowlisted, you will see a list of models on the right. This is where you will find the Lyra-fr 10B model.

- Choose View model to see the full model card with additional options.

Test

A common use case is to run ad hoc tests to make sure the model meets your needs. You can test the Lyra-fr model directly from the SageMaker console. In this example, we use a simple text prompt that asks the model to generate a list of article ideas on the topic of “watercolor” (“l’aquarelle” in French).

- From the model card shown in the previous section, select Try out model. This will open a new tab with the test interface.

- On this interface, provide the text input you would like to pass to the model. You can also tune any parameters you would like using the sliders on the right. Once you’re satisfied, select Generate text.

Note that foundation models and their output are from the model provider, and AWS is not responsible for the content or accuracy therein.

Deploy

Text generation models work best when you provide examples of the output you want the model to produce. This is called few-shot learning. We demonstrate this capability using the Lyra-fr sample notebook, which shows how to deploy the Lyra-fr model on SageMaker, summarize and generate text, and use few-shot learning.

The notebook also includes examples of sending inference requests either directly as JSON or through the Lyra Python SDK. The Lyra Python SDK takes care of formatting the input, calling the endpoint, and unpacking the output. There is one class per endpoint: Create, Analyze, Select, Embed, Compare, and Tokenize. Note that this example uses an ml.p4d.24xlarge instance. If the default quota for this GPU instance type in your AWS account is 0, you need to request a quota increase before deploying.
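
The sketch below shows what sending a JSON request directly involves, which is the work the Lyra Python SDK hides. It uses the real boto3 `sagemaker-runtime` operation `invoke_endpoint`, but the request schema (a "text" field plus a "params" object), the endpoint name, and the `n_tokens` parameter are hypothetical placeholders; the sample notebook documents the payload the Lyra-fr endpoint actually expects. A small stub client stands in for boto3 so the code runs without an AWS account.

```python
import io
import json


def build_request(text: str, **params) -> bytes:
    """Serialize the prompt and generation parameters as a JSON request body."""
    # Hypothetical schema: check the LightOn sample notebook for the field
    # names the Lyra-fr endpoint actually expects.
    return json.dumps({"text": text, "params": params}).encode("utf-8")


def invoke_lyra(runtime_client, endpoint_name: str, text: str, **params) -> dict:
    """Call a SageMaker endpoint with a JSON body and decode the JSON reply."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=build_request(text, **params),
    )
    # The response payload is a streaming body: read it fully, then parse it.
    return json.loads(response["Body"].read())


class StubRuntime:
    """Offline stand-in for boto3.client("sagemaker-runtime"), for local testing."""

    def invoke_endpoint(self, **kwargs):
        self.last_request = kwargs
        return {"Body": io.BytesIO(b'{"completions": ["Bonjour !"]}')}


# Against a real endpoint, pass boto3.client("sagemaker-runtime") instead.
stub = StubRuntime()
reply = invoke_lyra(stub, "lyra-fr-endpoint", "Dis bonjour.", n_tokens=8)
print(reply)
```

Passing the client in as an argument, rather than creating it inside the function, keeps the request formatting testable offline.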

SageMaker offers a managed notebook experience through SageMaker Studio. For details on how to set up SageMaker Studio, see the Amazon SageMaker Developer Guide. In this demo, we clone the sample notebook’s GitHub repo into SageMaker Studio, but the notebook works in other environments as well.

Let’s take a look at how to run the notebook:

- Go to the model card described in the Discover section of this post, and select View notebook. A new tab opens in GitHub with the Lyra-fr notebook.

- In GitHub, select lightonmuse-sagemaker-sdk; this will bring you to the repo. Select the Code button and copy the HTTPS URL.

- Open SageMaker Studio, select Clone a Repository, and paste the URL you copied.

- Navigate to the Lyra-fr notebook using the file browser on the left.

- This notebook runs end to end without additional input and cleans up the resources it creates. Let’s look at the “using Create for sentiment analysis” example. It uses the Lyra Python SDK and demonstrates few-shot learning by giving the model a few examples of text labeled positive (positifs), negative (négatifs), or mixed (mitigés).

- You can see that, with the Lyra Python SDK, all you have to do is provide the name of the SageMaker endpoint and the input. The SDK handles all the parsing, formatting, and setup for you.

- Running this prompt, the model classifies the last statement as positive.
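
The sentiment example follows a general pattern: labeled examples in the prompt, then a step that maps the model’s free-text completion back to one of the known labels. The Python sketch below illustrates that pattern only; the review texts, the "Avis"/"Sentiment" layout, and the function names are hypothetical and are not taken from the sample notebook, and the completion string passed to parse_label is made up rather than real model output. Matching on the label prefix means plural forms such as “positifs” or “mitigés” still resolve to a label.

```python
from typing import Optional

LABELS = ("positif", "négatif", "mitigé")

# Hypothetical labeled examples, one per class.
EXAMPLES = [
    ("Le service était rapide et le plat délicieux.", "positif"),
    ("La chambre était sale et le personnel désagréable.", "négatif"),
    ("Le musée est superbe, mais l'attente était interminable.", "mitigé"),
]


def build_sentiment_prompt(text: str) -> str:
    """Build a few-shot classification prompt ending where the label should go."""
    lines = ["Classe chaque avis comme positif, négatif ou mitigé.", ""]
    for review, label in EXAMPLES:
        lines.append(f"Avis : {review}")
        lines.append(f"Sentiment : {label}")
        lines.append("")
    lines.append(f"Avis : {text}")
    lines.append("Sentiment :")
    return "\n".join(lines)


def parse_label(completion: str) -> Optional[str]:
    """Map the first generated line to a known label, or None if none matches."""
    stripped = completion.strip()
    if not stripped:
        return None
    # The model may keep generating new "Avis" lines; only the first line counts.
    first_line = stripped.splitlines()[0].strip().lower()
    for label in LABELS:
        if first_line.startswith(label):
            return label
    return None


prompt = build_sentiment_prompt("Un film magnifique, je le recommande.")
print(parse_label(" Positif\nAvis : ..."))
```

Returning None for unrecognized output, instead of guessing, makes it easy to count how often the model strays from the label set.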

Clean up

After you finish testing, make sure you delete the SageMaker inference endpoint and the model to avoid incurring further charges.
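
If you deployed outside the notebook, or the notebook’s cleanup step did not run, a minimal Python sketch like the following removes the endpoint, its endpoint configuration, and the models it serves. The boto3 `sagemaker` operations used here (`describe_endpoint`, `describe_endpoint_config`, `delete_endpoint`, `delete_endpoint_config`, `delete_model`) are real; the endpoint name and the stub client are placeholders so the sketch runs offline.

```python
from typing import List


def delete_endpoint_resources(sm_client, endpoint_name: str) -> List[str]:
    """Delete a SageMaker endpoint, its endpoint config, and the models it serves."""
    # Look up the config and model names before anything is deleted.
    config_name = sm_client.describe_endpoint(EndpointName=endpoint_name)["EndpointConfigName"]
    variants = sm_client.describe_endpoint_config(EndpointConfigName=config_name)["ProductionVariants"]
    model_names = [v["ModelName"] for v in variants if "ModelName" in v]

    # Deleting the endpoint stops the hosting instance, the main source of charges.
    sm_client.delete_endpoint(EndpointName=endpoint_name)
    sm_client.delete_endpoint_config(EndpointConfigName=config_name)
    for model_name in model_names:
        sm_client.delete_model(ModelName=model_name)
    return [endpoint_name, config_name, *model_names]


class StubSageMaker:
    """Offline stand-in for boto3.client("sagemaker") that records delete calls."""

    def __init__(self):
        self.calls = []

    def describe_endpoint(self, EndpointName):
        return {"EndpointConfigName": EndpointName + "-config"}

    def describe_endpoint_config(self, EndpointConfigName):
        return {"ProductionVariants": [{"ModelName": "lyra-fr-model"}]}

    def delete_endpoint(self, EndpointName):
        self.calls.append(("delete_endpoint", EndpointName))

    def delete_endpoint_config(self, EndpointConfigName):
        self.calls.append(("delete_endpoint_config", EndpointConfigName))

    def delete_model(self, ModelName):
        self.calls.append(("delete_model", ModelName))


# With real resources, pass boto3.client("sagemaker") and your endpoint's name.
stub = StubSageMaker()
deleted = delete_endpoint_resources(stub, "lyra-fr-endpoint")
print(deleted)
```

Reading the config and model names from the endpoint itself avoids having to remember the names the notebook generated at deployment time.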

Conclusion

In this post, we showed you how to discover, test, and deploy the Lyra-fr model using Amazon SageMaker. Request access to try out the foundation model in SageMaker today, and let us know your feedback!

About the authors

Iacopo Poli is the CTO of LightOn, responsible for strategic technical choices for the company in building very large language models and offering them to the public. He is passionate about democratization of Machine Learning through intuitive interfaces. In his spare time, he enjoys the quest for the best restaurants in Paris.

Alan Tan is a Senior Product Manager with SageMaker, leading efforts on large model inference. He’s passionate about applying machine learning to the area of analytics. Outside of work, he enjoys the outdoors.