Build a GNN-based real-time fraud detection solution using the Deep Graph Library without using external graph storage

Fraud detection is an important problem that has applications in financial services, social media, ecommerce, gaming, and other industries. This post presents an implementation of a fraud detection solution using the Relational Graph Convolutional Network (RGCN) model to predict the probability that a transaction is fraudulent through both the transductive and inductive inference modes. You can deploy our implementation to an Amazon SageMaker endpoint as a real-time fraud detection solution, without requiring external graph storage or orchestration, thereby significantly reducing the deployment cost of the model.

Businesses looking for a fully-managed AWS AI service for fraud detection can also use Amazon Fraud Detector, which you can use to identify suspicious online payments, detect new account fraud, prevent trial and loyalty program abuse, or improve account takeover detection.

Solution overview

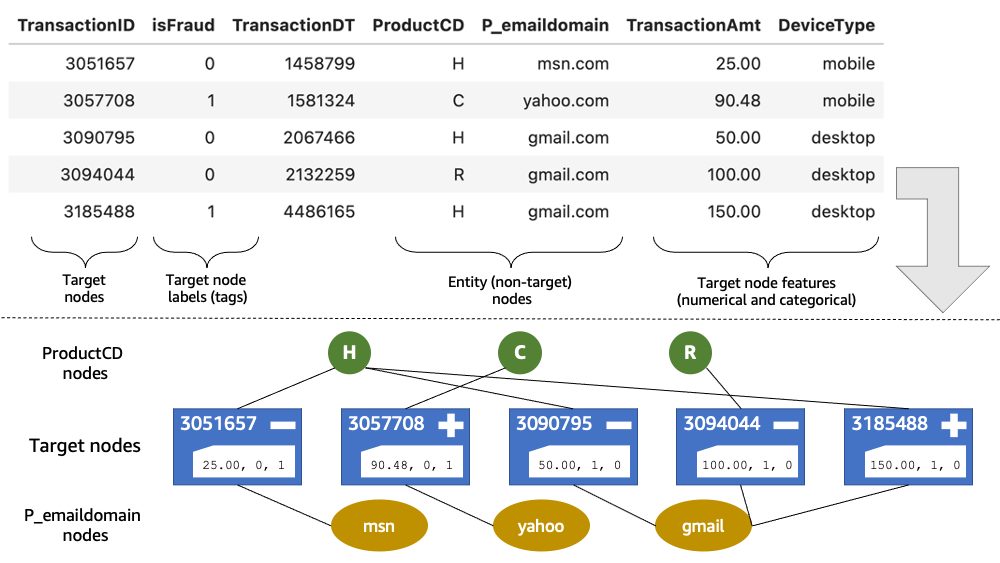

The following diagram describes an exemplar financial transaction network that includes different types of information. Each transaction contains information like device identifiers, Wi-Fi IDs, IP addresses, physical locations, telephone numbers, and more. We represent the transaction datasets through a heterogeneous graph that contains different types of nodes and edges. Then, the fraud detection problem is handled as a node classification task on this heterogeneous graph.

Graph neural networks (GNNs) have shown great promise in tackling fraud detection problems, outperforming popular supervised learning methods like gradient-boosted decision trees or fully connected feed-forward networks on benchmarking datasets. In a typical fraud detection setup, during the training phase, a GNN model is trained on a set of labeled transactions. Each training transaction is provided with a binary label denoting whether it is fraudulent. This trained model can then be used to detect fraudulent transactions among a set of unlabeled transactions during the inference phase. Two modes of inference exist: transductive and inductive (which we discuss in more detail later in this post).

GNN-based models, like RGCN, can take advantage of topological information, combining both graph structure and features of nodes and edges to learn a meaningful representation that distinguishes malicious transactions from legitimate transactions. RGCN can effectively learn to represent different types of nodes and edges (relations) via heterogeneous graph embedding. In the preceding diagram, each transaction is being modeled as a target node, and several entities associated with each transaction get modeled as non-target node types, like ProductCD and P_emaildomain. Target nodes have numerical and categorical features assigned, whereas other node types are featureless. The RGCN model learns an embedding for each non-target node type. For the embedding of a target node, a convolutional operation is used to compute its embedding using its features and neighborhood embeddings. In the rest of the post, we use the terms GNN and RGCN interchangeably.

It’s worth noting that alternative strategies, such as treating the non-target entities as features and one-hot-encoding them, would often be infeasible because of the large cardinalities of these entities. Conversely, encoding them as graph entities enables the GNN model to take advantage of the implicit topology in the entity relationships. For example, transactions that share a phone number with known fraudulent transactions are more likely to be fraudulent too.

The graph representation employed by GNNs creates some complexity in their implementation. This is especially true for applications such as fraud detection, in which the graph representation may get augmented during inference with newly added nodes that correspond to entities not known during model training. This inference scenario is usually referred to as inductive mode. In contrast, transductive mode is a scenario that assumes the graph representation constructed during model training won’t change during inference. GNN models are often evaluated in transductive mode by constructing graph representations from a combined set of training and test examples, while masking test labels during back-propagation. This ensures the graph representation is static, and therefore the GNN model doesn’t require implementation of operations to extend the graph with new nodes during inference. Unfortunately, a static graph representation can’t be assumed when detecting fraudulent transactions in a real-world setting. Therefore, support for inductive inference is required when deploying GNN models for fraud detection to production environments.
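The label masking used in transductive evaluation can be illustrated with a small sketch. It is plain Python rather than DGL or PyTorch code, and the function name `masked_bce` and the toy values are assumptions made for illustration, not part of the released model.

```python
import math

def masked_bce(probs, labels, train_mask):
    """Mean binary cross-entropy over nodes where train_mask is True.

    Test nodes stay in the graph, so they still take part in message
    passing, but their labels never contribute to the loss.
    """
    losses = [
        -(y * math.log(p) + (1 - y) * math.log(1 - p))
        for p, y, keep in zip(probs, labels, train_mask)
        if keep
    ]
    if not losses:
        raise ValueError("train_mask selects no nodes")
    return sum(losses) / len(losses)

# Four transaction nodes: the first two are training nodes, the last two test nodes.
probs = [0.9, 0.2, 0.7, 0.4]          # hypothetical model outputs
labels = [1, 0, 1, 0]
train_mask = [True, True, False, False]

loss = masked_bce(probs, labels, train_mask)
```

Changing the labels of the two masked nodes leaves `loss` unchanged, which is the property that keeps test labels out of training.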

In addition, detecting fraudulent transactions in real time is crucial, especially in business cases where there is only one chance of stopping illegal activities. For example, fraudulent users can behave maliciously just once with an account and never use the same account again. Real-time inference on GNN models introduces additional complexity to the implementation. It is often necessary to implement subgraph extraction operations to support real-time inference. The subgraph extraction operation is needed to reduce inference latency when graph representation is large and performing inference on the entire graph becomes prohibitively expensive. An algorithm for real-time inductive inference with an RGCN model runs as follows:

1. Given a batch of transactions and a trained RGCN model, extend the graph representation with entities from the batch.

2. Initialize the embedding vectors of new non-target nodes with the mean embedding vector of their respective node type.

3. Extract a subgraph induced by the k-hop out-neighborhood of the target nodes from the batch.

4. Perform inference on the subgraph and return prediction scores for the batch’s target nodes.

5. Clean up the graph representation by removing newly added nodes (this step ensures the memory requirement for model inference stays constant).
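The five steps above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the released implementation: `ToyGraph`, `predict_batch`, and `toy_score` are hypothetical names, the graph is a dictionary of neighbor sets instead of a DGL heterograph, and the scoring function is a stand-in for the forward pass of a trained RGCN.

```python
from collections import defaultdict

class ToyGraph:
    """Minimal in-memory graph; a stand-in for a DGL heterograph."""
    def __init__(self):
        self.neighbors = defaultdict(set)  # node -> adjacent nodes
        self.embedding = {}                # entity node -> embedding vector
        self.node_type = {}                # entity node -> node type name

    def add_edge(self, txn, entity):
        # Store both directions so hops can go transaction -> entity -> transaction.
        self.neighbors[txn].add(entity)
        self.neighbors[entity].add(txn)

    def remove_node(self, node):
        for other in self.neighbors.pop(node, set()):
            self.neighbors[other].discard(node)
        self.embedding.pop(node, None)
        self.node_type.pop(node, None)

def mean_embedding(graph, ntype):
    vecs = [graph.embedding[n] for n, t in graph.node_type.items() if t == ntype]
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def k_hop_nodes(graph, seeds, k):
    seen, frontier = set(seeds), set(seeds)
    for _ in range(k):
        frontier = {m for n in frontier for m in graph.neighbors[n]} - seen
        seen |= frontier
    return seen

def predict_batch(graph, batch, k, score_fn):
    """batch maps a transaction id to a list of (node_type, entity_id) pairs."""
    added, type_means = [], {}
    for txn, entities in batch.items():
        added.append(txn)                      # Step 1: extend the graph
        for ntype, ent in entities:
            node = (ntype, ent)
            if node not in graph.node_type:    # Step 2: mean-fill unseen entities
                if ntype not in type_means:
                    type_means[ntype] = mean_embedding(graph, ntype)
                graph.node_type[node] = ntype
                graph.embedding[node] = type_means[ntype]
                added.append(node)
            graph.add_edge(txn, node)
    sub_nodes = k_hop_nodes(graph, batch, k)   # Step 3: k-hop subgraph
    scores = {txn: score_fn(graph, txn, sub_nodes) for txn in batch}  # Step 4
    for node in added:                         # Step 5: clean up
        graph.remove_node(node)
    return scores

def toy_score(graph, txn, sub_nodes):
    # Placeholder model: average the first embedding component of neighbors.
    vals = [graph.embedding[n][0] for n in graph.neighbors[txn] if n in sub_nodes]
    return sum(vals) / len(vals)

g = ToyGraph()
for ent, vec in {("card", "c1"): [1.0, 0.0], ("card", "c2"): [3.0, 2.0]}.items():
    g.node_type[ent] = ent[0]
    g.embedding[ent] = vec
g.add_edge("t1", ("card", "c1"))
g.add_edge("t2", ("card", "c2"))

batch = {"t3": [("card", "c1")], "t4": [("card", "c9")]}  # c9 is unseen
scores = predict_batch(g, batch, k=2, score_fn=toy_score)
```

In this toy run, the unseen entity `c9` receives the mean of the two known `card` embeddings, and after the call the graph holds exactly the nodes it held before, which is what keeps memory use constant across batches.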

The key contribution of this post is to present an RGCN model implementing the real-time inductive inference algorithm. You can deploy our RGCN implementation to a SageMaker endpoint as a real-time fraud detection solution. Our solution doesn’t require external graph storage or orchestration, and significantly reduces the deployment cost of the RGCN model for fraud detection tasks. The model also implements transductive inference mode, enabling us to carry out experiments to compare model performance in inductive and transductive modes. The model code and notebooks with experiments can be accessed from the AWS Examples GitHub repo.

This post builds on the post Build a GNN-based real-time fraud detection solution using Amazon SageMaker, Amazon Neptune, and the Deep Graph Library. The previous post built an RGCN-based real-time fraud detection solution using SageMaker, Amazon Neptune, and the Deep Graph Library (DGL). The prior solution used a Neptune database as external graph storage, required AWS Lambda for orchestration of real-time inference, and only included experiments in transductive mode.

The RGCN model introduced in this post implements all operations of the real-time inductive inference algorithm using only the DGL as a dependency, and doesn’t require external graph storage or orchestration for deployment.

We first evaluate the performance of the RGCN model in transductive and inductive modes on a benchmark dataset. As expected, model performance in inductive mode is slightly lower than in transductive mode. We also study the effect of hyperparameter k on model performance. The hyperparameter k controls the number of hops performed to extract a subgraph in Step 3 of the real-time inference algorithm. Higher values of k will produce larger subgraphs and can lead to better inference performance at the expense of higher latency. As such, we also conduct timing experiments to evaluate the feasibility of the RGCN model for a real-time application.

Dataset

We use the IEEE-CIS fraud dataset, the same dataset that was used in the previous post. The dataset contains over 590,000 transaction records that have a binary fraud label (the isFraud column). The data is split into two tables: transaction and identity. However, not all transaction records have corresponding identity information. We join the two tables on the TransactionID column, which leaves us with a total of 144,233 transaction records. We sort the table by transaction timestamp (the TransactionDT column) and create an 80/20 percentage split by time, producing 115,386 and 28,847 transactions for training and testing, respectively.
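As a rough illustration of the time-based split, the following sketch sorts records by timestamp and cuts at 80%. The helper name `split_by_time` and the synthetic records are assumptions for illustration; truncating the cut index to an integer is also an assumption, although it reproduces the reported counts.

```python
def split_by_time(records, train_fraction=0.8, time_key="TransactionDT"):
    """Sort by timestamp, then cut so the earliest records form the training set."""
    ordered = sorted(records, key=lambda r: r[time_key])
    cut = int(len(ordered) * train_fraction)
    return ordered[:cut], ordered[cut:]

# Synthetic stand-in with the same row count as the joined table.
# Timestamps are deliberately stored in reverse order to exercise the sort.
records = [{"TransactionID": i, "TransactionDT": 144_233 - i} for i in range(144_233)]
train, test = split_by_time(records)
print(len(train), len(test))
```

Splitting by time rather than at random means every test transaction occurs after every training transaction, which mirrors how a deployed model only ever scores future transactions.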

For more details on the dataset and how to format it to suit the input requirement of the DGL, refer to Detecting fraud in heterogeneous networks using Amazon SageMaker and Deep Graph Library.

Graph construction

We use the TransactionID column to generate target nodes. We use the following columns to generate 11 types of non-target nodes:

- card1 through card6
- ProductCD
- addr1 and addr2
- P_emaildomain and R_emaildomain

We use 38 columns as categorical features of target nodes:

- M1 through M9
- DeviceType and DeviceInfo
- id_12 through id_38

We use 382 columns as numerical features of target nodes:

- TransactionAmt
- dist1 and dist2
- id_01 through id_11
- C1 through C14
- D1 through D15
- V1 through V339

Our graph constructed from the training transactions contains 217,935 nodes and 2,653,878 edges.
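To make the construction more concrete, the sketch below turns transaction rows into one edge list per non-target node type. The helper `build_edge_lists`, the example rows, and the choice to skip missing values are assumptions for illustration; the released code may handle these details differently.

```python
from collections import defaultdict

# The 11 columns used as non-target node types.
NODE_COLUMNS = (
    [f"card{i}" for i in range(1, 7)]
    + ["ProductCD", "addr1", "addr2", "P_emaildomain", "R_emaildomain"]
)

def build_edge_lists(rows):
    """Return {column: [(TransactionID, entity_value), ...]}, skipping missing values."""
    edges = defaultdict(list)
    for row in rows:
        for col in NODE_COLUMNS:
            value = row.get(col)
            if value is not None:
                edges[col].append((row["TransactionID"], value))
    return dict(edges)

# Two made-up transactions that share a card1 value.
rows = [
    {"TransactionID": 1, "card1": 1001, "ProductCD": "W", "P_emaildomain": "example.com"},
    {"TransactionID": 2, "card1": 1001, "ProductCD": "C", "P_emaildomain": None},
]
edges = build_edge_lists(rows)
```

Both transactions connect to the same `card1` node, so information can flow between them through that shared entity during message passing.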

Hyperparameters

Other parameters are set to match the parameters reported in the previous post. The following snippet illustrates training the RGCN model in transductive and inductive modes:

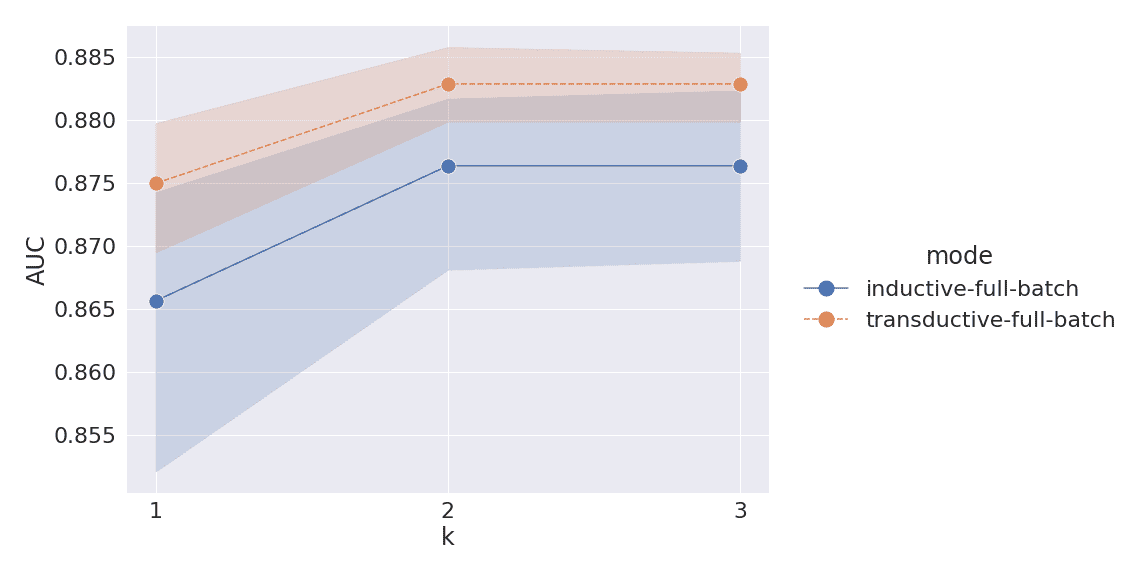

Inductive vs. transductive mode

We perform five trials for inductive and five trials for transductive mode. For each trial, we train an RGCN model and save it to disk, obtaining 10 models. We evaluate each model on test examples while increasing the number of hops (parameter k) used to extract a subgraph for inference, setting k to 1, 2, and 3. We predict on all test examples at once, and compute the ROC AUC score for each trial. The following plot shows the mean and 95% confidence intervals of AUC scores.

We can see that performance in transductive mode is slightly higher than in inductive mode. For k=2, mean AUC scores for inductive and transductive modes are 0.876 and 0.883, respectively. This is expected because the RGCN model is able to learn embeddings of all entity nodes in transductive mode, including those in the test set. In contrast, inductive mode only allows the model to learn embeddings of entity nodes that are present in the training examples, and therefore some nodes have to be mean-filled during inference. At the same time, the drop in performance between transductive and inductive modes is not significant, and even in inductive mode, the RGCN model achieves good performance with an AUC of 0.876. We also observe that model performance doesn’t improve for values of k>2. This implies that setting k=2 would extract a sufficiently large subgraph during inference, resulting in optimal performance. This observation is also confirmed by our next experiment.

It’s also worth noting that, for transductive mode, our model’s AUC of 0.883 is higher than the corresponding AUC of 0.870 reported in the previous post. We use more columns as numerical and categorical features of target nodes, which can explain the higher AUC score. We also note that the experiments in the previous post only performed a single trial.

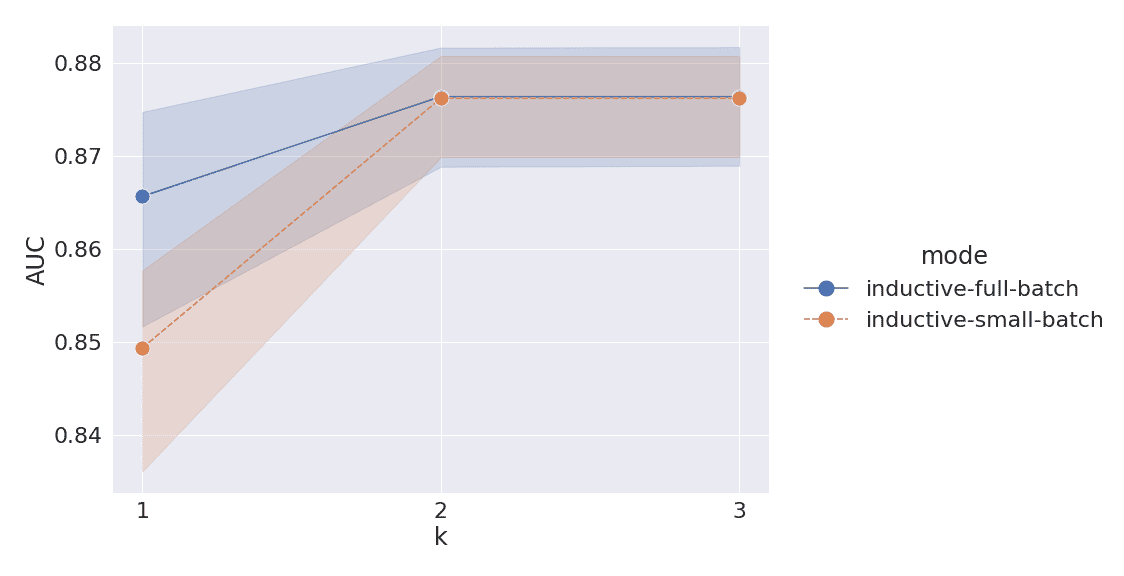
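For readers who want to reproduce this kind of summary, the following sketch computes a ROC AUC score and a mean with a 95% confidence interval across trials. How the intervals in the plot were computed isn't stated in this post, so the t-interval here is an assumption, and the trial scores are made-up numbers rather than results from our experiments.

```python
import math
import statistics

def roc_auc(labels, scores):
    """ROC AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def mean_ci95(values, t_crit=2.776):
    """Mean and half-width of a 95% t-interval.

    The default t_crit is the two-sided critical value for five
    trials (four degrees of freedom).
    """
    mean = statistics.mean(values)
    half = t_crit * statistics.stdev(values) / math.sqrt(len(values))
    return mean, half

auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])

# Made-up AUC scores for five trials, for illustration only.
trial_aucs = [0.70, 0.72, 0.74, 0.76, 0.78]
mean, half = mean_ci95(trial_aucs)
```

The pairwise AUC computation is quadratic in the number of examples, so for a full test set a library routine would be the practical choice.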

Inference on a small batch

For this experiment, we evaluate the RGCN model in a small batch inference setting. We use five models that were trained in inductive mode in the previous experiment. We compare performance of these models when predicting in two settings: full and small batch inference. For full batch inference, we predict on the entire test set, as was done in the previous experiment. For small batch inference, we predict in small batches by partitioning the test set into 28 batches of equal size with approximately 1,000 transactions in each batch. We compute AUC scores for both settings using different values of k. The following plot shows the mean and 95% confidence intervals for full and small batch inference settings.

We observe that performance for small batch inference when k=1 is lower than for full batch. However, small batch inference performance matches full batch when k>1. This can be attributed to much smaller subgraphs being extracted for small batches. We confirm this by comparing subgraph sizes with the size of the entire graph constructed from the training transactions. We compare graph sizes in terms of number of nodes. For k=1, the average subgraph size for small batch inference is less than 2% of the training graph. And for full batch inference when k=1, the subgraph size is 22%. When k=2, subgraph sizes for small and full batch inference are 54% and 64%, respectively. Finally, subgraph sizes for both inference settings reach 100% for k=3. In other words, when k>1, the subgraph for a small batch becomes sufficiently large, enabling small batch inference to reach the same performance as full batch inference.

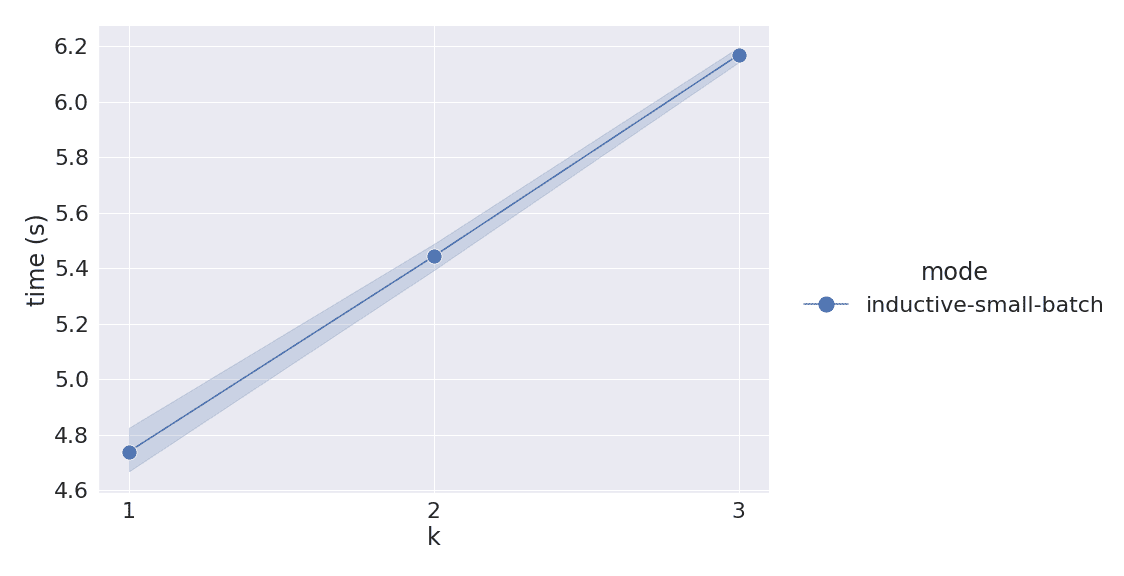
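The partitioning into 28 batches can be sketched as follows. The helper `partition` and the use of contiguous, near-equal batches are assumptions for illustration; the exact scheme used in the experiments may differ.

```python
def partition(items, num_batches):
    """Split items into contiguous batches whose sizes differ by at most one."""
    base, extra = divmod(len(items), num_batches)
    batches, start = [], 0
    for i in range(num_batches):
        size = base + (1 if i < extra else 0)
        batches.append(items[start:start + size])
        start += size
    return batches

# Stand-in ids for the 28,847 test transactions.
test_ids = list(range(28_847))
batches = partition(test_ids, 28)
sizes = sorted({len(b) for b in batches})
```

Because 28,847 isn't divisible by 28, the batches hold either 1,030 or 1,031 transactions each.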

We also record prediction latency for every batch. We perform our experiments on an ml.r5.12xlarge instance, but you can use a smaller instance with 64 GB of memory to run the same experiments. The following plot shows the mean and 95% confidence intervals of small batch prediction latencies for different values of k.

The latency includes all five steps of the real-time inductive inference algorithm. We see that when k=2, predicting on 1,030 transactions takes 5.4 seconds on average, resulting in a throughput of 190 transactions per second. This confirms that the RGCN model implementation is suitable for real-time fraud detection. We also note that the previous post did not provide hard latency values for their implementation.
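The throughput figure follows directly from the batch size and the mean latency. The sketch below shows that arithmetic together with a simple way to time a call; the helpers `timed` and `throughput` are hypothetical names, not part of the released code.

```python
import time

def timed(fn, *args, **kwargs):
    """Run fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start

def throughput(num_transactions, seconds):
    """Transactions processed per second."""
    return num_transactions / seconds

# Figures reported above for k=2: 1,030 transactions in 5.4 seconds on average.
tps = throughput(1030, 5.4)
print(f"{tps:.1f} transactions per second")
```

To measure latency for your own model, you would wrap the per-batch prediction call in `timed` and average the elapsed times across batches.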

Conclusion

The RGCN model released with this post implements the algorithm for real-time inductive inference, and doesn’t require external graph storage or orchestration. The parameter k in Step 3 of the algorithm specifies the number of hops performed to extract the subgraph for inference, and results in a trade-off between model accuracy and prediction latency. We used the IEEE-CIS fraud dataset in our experiments, and empirically validated that the optimal value of parameter k for this dataset is 2, achieving an AUC score of 0.876 and prediction latency of less than 6 seconds per 1,000 transactions.

This post provided a step-by-step process for training and evaluating an RGCN model for real-time fraud detection. The included model class implements methods for the entire model lifecycle, including serialization and deserialization methods. This enables the model to be used for real-time fraud detection. You can train the model as a PyTorch SageMaker estimator and then deploy it to a SageMaker endpoint by using the following notebook as a template. The endpoint is able to predict fraud on small batches of raw transactions in real time. You can also use Amazon SageMaker Inference Recommender to select the best instance type and configuration for the inference endpoint based on your workloads.

For more information about this topic and implementation, we encourage you to explore and test our scripts on your own. You can access the notebooks and related model class code from the AWS Examples GitHub repo.

About the Authors

Dmitriy Bespalov is a Senior Applied Scientist at the Amazon Machine Learning Solutions Lab, where he helps AWS customers across different industries accelerate their AI and cloud adoption.

Ryan Brand is an Applied Scientist at the Amazon Machine Learning Solutions Lab. He has specific experience in applying machine learning to problems in healthcare and life sciences. In his free time, he enjoys reading history and science fiction.

Yanjun Qi is a Senior Applied Science Manager at the Amazon Machine Learning Solutions Lab. She innovates and applies machine learning to help AWS customers speed up their AI and cloud adoption.
