How to measure the pull-based knowledge cycle

Last month I described a “Pull cycle” for knowledge. Let’s now look at the measures we can introduce to that cycle.

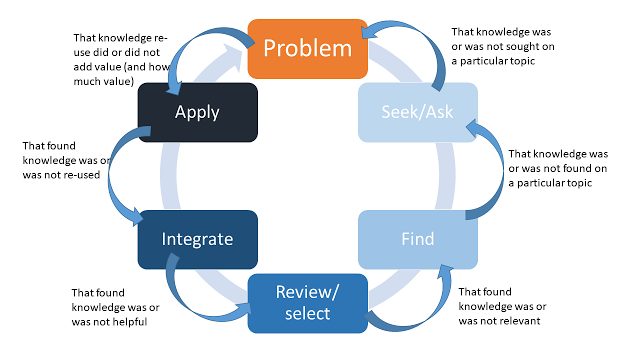

You can find a description of the cycle here. This is a cycle based on knowledge demand (unlike the supply-side cycles you normally see), and it includes the following steps:

- The cycle starts with a problem, and the identification of the need for knowledge to solve the problem (the “need to know”)

- The first step is to seek that knowledge: to search online, and to ask others

- Seeking/asking is followed by finding

- However, we generally tend to “over-find”. Unless we are lucky, or there is a very good KM system, we find more than we need, so the next step is to review the results and select those that seem most relevant in the context of the problem.

- This found knowledge then needs to be integrated into what is already known about the problem, and integrated into solutions, approaches, procedures and plans.

- Finally the integrated knowledge needs to be applied to the problem.

Some of the people who responded to this post on LinkedIn pointed out that the cycle could break at certain points. In the picture above, I have added monitoring and feedback loops to each step, and acknowledged that each step could succeed or fail. The metrics would work like this:

- Once a problem has been identified, you can measure whether any asking or seeking happened. You would need to interview people in the business to do this, unless there is a process of KM planning in the organisation, in which case you could interrogate the KM plans. You can also monitor the topics that people are asking about or searching for. For example, you can analyse questions in a community of practice, queries to a helpdesk, or search terms from the corporate search engine logs. These will give you some idea of what people are looking for, which you can treat as the knowledge needs within the organisation: the needs that knowledge supply has to match.

- After the question has been asked, you can measure whether people found the knowledge they needed. This is easy to do with questions to a CoP; unanswered questions in a CoP forum are pretty obvious (you still have to check whether the question was answered offline or by direct message), and may identify knowledge gaps in the organisation. It is not so easy to measure the success of searches of a knowledge base. However, if you do, you can identify gaps in the knowledge base where knowledge needs are not being met, and this can trigger the creation of new knowledge assets or articles.

- After knowledge has been found you can ask for feedback on whether the knowledge was relevant or not. In reality this feedback will often be merged with the previous step. You can also ask for feedback on the quality of the knowledge you found. In the context of a knowledge base, this feedback will identify knowledge assets or knowledge articles which need to be updated or improved. In the context of a CoP, this can also help identify areas where existing knowledge is not enough.

- After good and relevant knowledge has been found, you can look for feedback on whether the knowledge was actually applied. This will help identify the most applicable and useful knowledge assets and articles in a knowledge base, and in a CoP will help demonstrate that knowledge shared in the CoP is of real value. The CoP moderator or facilitator can try to nudge members who ask questions to feed back to the community whether the answers have been applied.

- Finally, you can look for feedback on how much difference the knowledge has made, and how much value was realised by solving the problem. This allows you to track the value of the entire pull cycle and the entire knowledge management framework. Looking for this feedback will be hard work for someone in the KM team, and you will receive a lot of push-back from the organisation, but there are many examples where organisations have demonstrated measurable value from shared knowledge (see my collection of value stories). Often you can reduce this to discrete examples of shared knowledge (see here, here and here for example), or to the value of a specific collection (see the Accenture example, and Domestic and General). Just because it’s hard to get a value figure doesn’t mean you shouldn’t try.
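As a rough illustration of the search-log analysis in the first two steps, here is a minimal Python sketch. It assumes a hypothetical export of search logs as records with a query, a result count and a flag for whether the user clicked a result; real search engines log different fields, so the `SearchRecord` fields, the `min_searches` threshold and the "no click means not found" rule are all assumptions. Query frequency stands in for knowledge demand, and frequently searched topics that never led to a click are flagged as possible gaps.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class SearchRecord:
    """One row of a hypothetical search-log export (field names are assumptions)."""
    query: str
    result_count: int
    clicked: bool


def normalise(query: str) -> str:
    # Lower-case and collapse whitespace so "Pump  Seals" and "pump seals" count together.
    return " ".join(query.lower().split())


def demand_and_gaps(records, min_searches=2):
    """Return (topics ranked by search volume, topics that look like knowledge gaps).

    A topic is flagged as a possible gap when it was searched at least
    `min_searches` times and no search for it returned results AND got a click.
    """
    demand = Counter()
    satisfied = Counter()
    for r in records:
        q = normalise(r.query)
        demand[q] += 1
        if r.result_count > 0 and r.clicked:
            satisfied[q] += 1
    gaps = sorted(q for q, n in demand.items()
                  if n >= min_searches and satisfied[q] == 0)
    return demand.most_common(), gaps


# Hypothetical log entries, for illustration only.
log = [
    SearchRecord("pump seals", 12, True),
    SearchRecord("Pump  Seals", 12, False),
    SearchRecord("bid template", 0, False),
    SearchRecord("bid template", 0, False),
    SearchRecord("lessons learned wells", 3, False),
]
top, gaps = demand_and_gaps(log)
print(top[0])  # ('pump seals', 2)
print(gaps)    # ['bid template']
```

A click is only a weak proxy for "found what I needed"; pairing it with the explicit relevance and quality feedback described above gives a more reliable picture.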

Many of these monitoring and feedback loops are well developed in customer-focused KM approaches such as KCS (Knowledge Centred Support), but any KM approach can apply them as part of its own KM metrics framework.

It is through the feedback and metrics associated with the steps that you can tell whether KM is actually working, and if not, where the cycle is broken.
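One way to make “where the cycle is broken” concrete is to turn the counts from each feedback loop into step-by-step pass rates. The Python sketch below assumes you have already collected, for one period, how many problems reached each measured step; the step labels loosely follow the metrics described above, and the counts are hypothetical. The weakest link is simply the transition with the lowest pass rate, which is where you would look first.

```python
# Measured steps of the pull cycle, in order (labels are illustrative).
STEPS = ["problem identified", "asked or searched", "found", "relevant", "applied"]


def step_pass_rates(counts):
    """Fraction of items reaching each step that also reached the next step."""
    rates = {}
    for prev, nxt in zip(STEPS, STEPS[1:]):
        reached = counts.get(prev, 0)
        # Guard against division by zero when a step has no recorded items.
        rates[f"{prev} -> {nxt}"] = counts.get(nxt, 0) / reached if reached else 0.0
    return rates


def weakest_link(counts):
    """Name of the transition with the lowest pass rate."""
    rates = step_pass_rates(counts)
    return min(rates, key=rates.get)


# Hypothetical counts for one quarter, for illustration only.
counts = {
    "problem identified": 200,
    "asked or searched": 120,
    "found": 90,
    "relevant": 70,
    "applied": 35,
}
for step, rate in step_pass_rates(counts).items():
    print(f"{step}: {rate:.0%}")
print("weakest link:", weakest_link(counts))  # weakest link: relevant -> applied
```

Pass rates between adjacent steps are used rather than a single end-to-end figure because they point to a specific break in the cycle; the end-to-end rate (here 35 of 200 problems) tells you only that something is leaking.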
