Deploy pre-trained models on AWS Wavelength with 5G edge using Amazon SageMaker JumpStart

With the advent of high-speed 5G mobile networks, enterprises are better positioned than ever to harness the convergence of telecommunications networks and the cloud. As one of the most prominent use cases to date, machine learning (ML) at the edge has allowed enterprises to deploy ML models closer to their end customers to reduce latency and increase the responsiveness of their applications. As an example, smart venue solutions can use near-real-time computer vision for crowd analytics over 5G networks, all while minimizing investment in on-premises hardware networking equipment. Retailers can deliver more frictionless experiences on the go with natural language processing (NLP), real-time recommendation systems, and fraud detection. Even ground and aerial robotics can use ML to unlock safer, more autonomous operations.

To reduce the barrier to entry of ML at the edge, this post demonstrates how to deploy a pre-trained model from Amazon SageMaker to AWS Wavelength, all in fewer than 100 lines of code, to reduce model inference latency for 5G network-based applications.

Solution overview

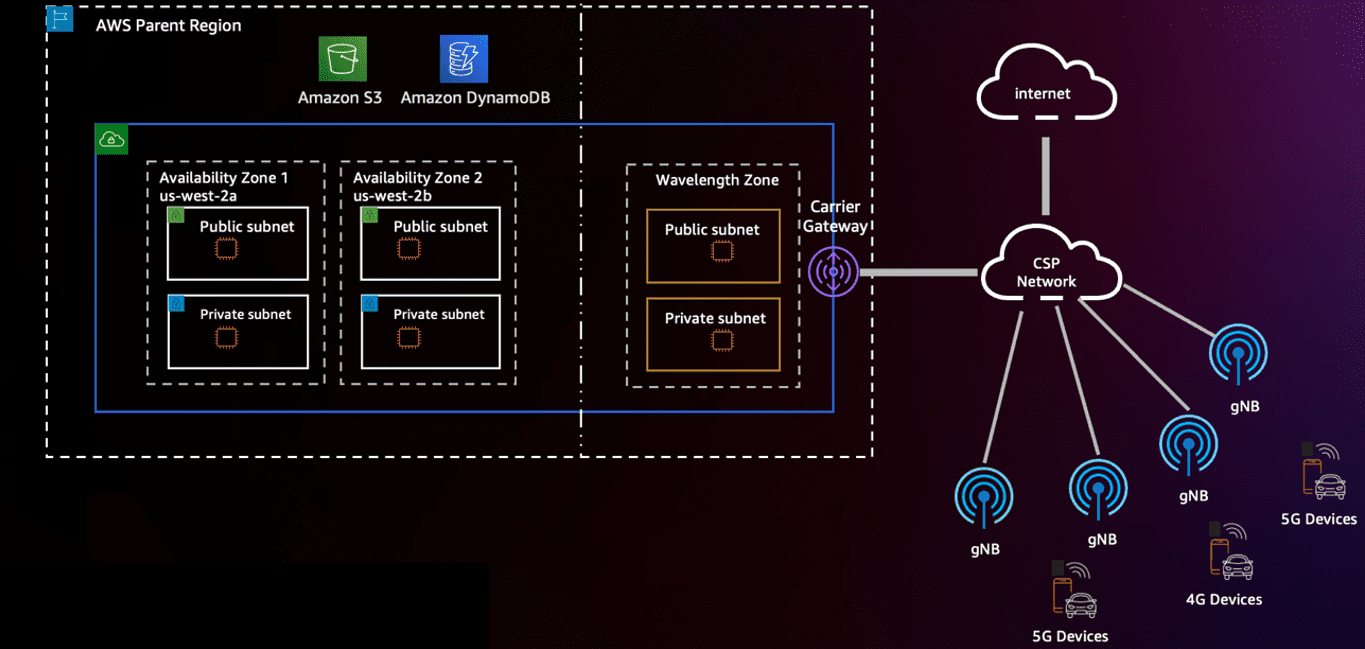

Across AWS’s rapidly expanding global infrastructure, AWS Wavelength brings the power of cloud compute and storage to the edge of 5G networks, unlocking more performant mobile experiences. With AWS Wavelength, you can extend your virtual private cloud (VPC) to Wavelength Zones corresponding to the telecommunications carrier’s network edge in 29 cities across the globe. The following diagram shows an example of this architecture.

You can opt in to the Wavelength Zones within a given Region via the AWS Management Console or the AWS Command Line Interface (AWS CLI). To learn more about deploying geo-distributed applications on AWS Wavelength, refer to Deploy geo-distributed Amazon EKS clusters on AWS Wavelength.

Building on the fundamentals discussed in that post, we use ML at the edge as a sample workload to deploy to AWS Wavelength. Specifically, we deploy a pre-trained model from Amazon SageMaker JumpStart.

SageMaker is a fully managed ML service that allows developers to easily deploy ML models into their AWS environments. Although AWS offers a number of options for model training, from AWS Marketplace models to SageMaker built-in algorithms, there are also a number of techniques to deploy open-source ML models.

JumpStart provides access to hundreds of built-in algorithms with pre-trained models that can be seamlessly deployed to SageMaker endpoints. From predictive maintenance and computer vision to autonomous driving and fraud detection, JumpStart supports a variety of popular use cases with one-click deployment on the console.

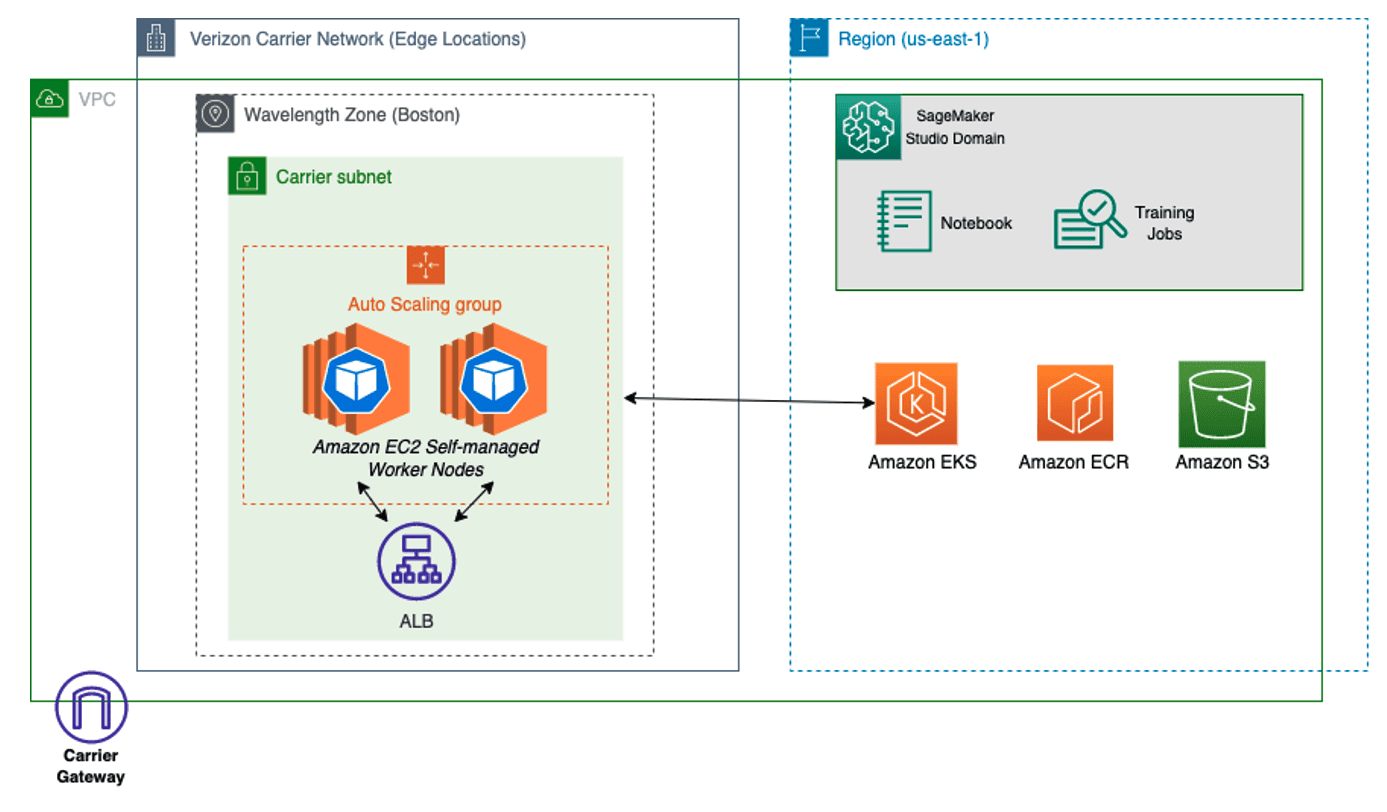

Because SageMaker is not natively supported in Wavelength Zones, we demonstrate how to extract the model artifacts from the Region and re-deploy to the edge. To do so, you use Amazon Elastic Kubernetes Service (Amazon EKS) clusters and node groups in Wavelength Zones, followed by creating a deployment manifest with the container image generated by JumpStart. The following diagram illustrates this architecture.

Prerequisites

To make this as easy as possible, ensure that your AWS account has Wavelength Zones enabled. Note that this integration is only available in us-east-1 and us-west-2, and you will be using us-east-1 for the duration of the demo.

To opt in to AWS Wavelength, complete the following steps:

- On the Amazon VPC console, choose Zones under Settings and choose US East (Verizon) / us-east-1-wl1.

- Choose Manage.

- Select Opted in.

- Choose Update zones.
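The same opt-in can be scripted through the AWS CLI, as mentioned earlier. The following is a minimal sketch, assuming the AWS CLI is installed and configured with credentials; the helper names `opt_in_command` and `opt_in` are hypothetical and only wrap the `aws ec2 modify-availability-zone-group` command.

```python
import subprocess


def opt_in_command(zone_group, region):
    """Build the AWS CLI argument list that opts an account in to a zone group."""
    return [
        "aws", "ec2", "modify-availability-zone-group",
        "--region", region,
        "--group-name", zone_group,
        "--opt-in-status", "opted-in",
    ]


def opt_in(zone_group="us-east-1-wl1", region="us-east-1"):
    """Run the opt-in command (requires the AWS CLI and valid credentials)."""
    subprocess.run(opt_in_command(zone_group, region), check=True)


# Print the command instead of running it, so nothing changes in your account.
print(" ".join(opt_in_command("us-east-1-wl1", "us-east-1")))
```

Calling `opt_in()` runs the command for the us-east-1-wl1 zone group used in this demo.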

Create AWS Wavelength infrastructure

Before you convert the local SageMaker model inference endpoint to a Kubernetes deployment, you need an EKS cluster in a Wavelength Zone. To create one, deploy an Amazon EKS cluster with an AWS Wavelength node group. To learn more, you can visit this guide on the AWS Containers Blog or Verizon’s 5GEdgeTutorials repository for one such example.

Next, using an AWS Cloud9 environment or the interactive development environment (IDE) of your choice, download the requisite SageMaker packages and Docker Compose, which SageMaker local mode relies on to run the JumpStart model container.

Create model artifacts using JumpStart

First, make sure that you have an AWS Identity and Access Management (IAM) execution role for SageMaker. To learn more, visit SageMaker Roles.

- Using this example, create a file called train_model.py that uses the SageMaker Software Development Kit (SDK) to retrieve a pre-built model (replace the role placeholder with the Amazon Resource Name (ARN) of your SageMaker execution role). In this file, you deploy a model locally using the instance_type attribute in the model.deploy() function, which starts a Docker container within your IDE using all requisite model artifacts you defined.

- Next, set infer_model_id to the ID of the SageMaker model that you would like to use. For a complete list, refer to Built-in Algorithms with pre-trained Model Table. In our example, we use the Bidirectional Encoder Representations from Transformers (BERT) model, commonly used for natural language processing.

- Run the train_model.py script to retrieve the JumpStart model artifacts and deploy the pre-trained model to your local machine.

If this step succeeds, the output lists three artifacts in order: the base image for TensorFlow inference, the inference script that serves the model, and the artifacts containing the trained model. Although you could create a custom Docker image with these artifacts, another approach is to let SageMaker local mode create the Docker image for you. In the subsequent steps, we extract the container image running locally and push it to Amazon Elastic Container Registry (Amazon ECR), and push the model artifact separately to Amazon Simple Storage Service (Amazon S3).
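For reference, the train_model.py script described in the preceding steps might look like the following. This is a sketch rather than the original listing: it assumes the SageMaker Python SDK is installed with its local-mode extras, that Docker is running, and that the model ID shown is the BERT variant you want. The role placeholder, the model ID, and the helper names `endpoint_base_name` and `deploy_locally` are assumptions made for illustration.

```python
"""Hypothetical sketch of train_model.py using SageMaker local mode."""

# Assumption: placeholder for the ARN of your SageMaker execution role.
AWS_ROLE = "<your-sagemaker-execution-role-arn>"
# Assumption: one JumpStart BERT text-classification model ID.
INFER_MODEL_ID = "tensorflow-tc-bert-en-uncased-L-12-H-768-A-12-2"
# "*" asks JumpStart for the latest available model version.
INFER_MODEL_VERSION = "*"


def endpoint_base_name(model_id):
    """Return the base name used for the locally deployed model."""
    return f"jumpstart-example-{model_id}"


def deploy_locally(role_arn, model_id=INFER_MODEL_ID, model_version=INFER_MODEL_VERSION):
    """Retrieve the JumpStart artifacts and start a local inference container."""
    # Imported here so this file still loads where the SDK is not installed.
    from sagemaker import image_uris, model_uris, script_uris
    from sagemaker.model import Model
    from sagemaker.predictor import Predictor
    from sagemaker.utils import name_from_base

    # 1. Base inference image (a CPU instance type selects the CPU image).
    image_uri = image_uris.retrieve(
        region=None,
        framework=None,
        image_scope="inference",
        model_id=model_id,
        model_version=model_version,
        instance_type="ml.m5.xlarge",
    )
    # 2. Inference script that serves the model.
    source_uri = script_uris.retrieve(
        model_id=model_id, model_version=model_version, script_scope="inference"
    )
    # 3. Artifacts containing the trained model.
    model_uri = model_uris.retrieve(
        model_id=model_id, model_version=model_version, model_scope="inference"
    )

    model = Model(
        image_uri=image_uri,
        source_dir=source_uri,
        model_data=model_uri,
        entry_point="inference.py",
        role=role_arn,
        predictor_cls=Predictor,
        name=name_from_base(endpoint_base_name(model_id)),
    )
    # instance_type="local" runs the container on this machine via Docker.
    return model.deploy(initial_instance_count=1, instance_type="local")
```

Calling `deploy_locally(AWS_ROLE)` after replacing the placeholder starts the container; the three retrieved URIs correspond to the three artifacts described above.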

Convert local mode artifacts to remote Kubernetes deployment

Now that you have confirmed that SageMaker is working locally, let’s extract the deployment manifest from the running container. Complete the following steps:

Identify the location of the SageMaker local mode deployment manifest: To do so, search the temporary directories under /tmp for the most recently modified file named docker-compose.yaml.

docker_manifest=$(find /tmp/tmp* -name "docker-compose.yaml" -printf '%T+ %p\n' | sort | tail -n 1 | cut -d' ' -f2-)

echo $docker_manifest

Identify the location of the SageMaker local mode model artifacts: Next, find the underlying volume mounted to the local SageMaker inference container, which will be used in each EKS worker node after we upload the artifact to Amazon S3.

model_local_volume=$(grep -A1 -w "volumes:" $docker_manifest | tail -n 1 | tr -d ' ' | awk -F: '{print $1}' | cut -c 2-)

echo $model_local_volume

# Returns something like: /tmp/tmpcr4bu_a7

Create local copy of running SageMaker inference container: Next, we’ll find the currently running container image running our machine learning inference model and make a copy of the container locally. This ensures that we have our own copy of the container image to pull from Amazon ECR.

mkdir sagemaker-container

# Find container ID of running SageMaker Local container
container_id=$(docker ps --format "{{.ID}} {{.Image}}" | grep "tensorflow" | awk '{print $1}')

# Retrieve the files of the container locally
docker cp $container_id:/ sagemaker-container/

Before acting on the model_local_volume, which we’ll push to Amazon S3, push a copy of the running Docker image, now in the sagemaker-container directory, to Amazon Elastic Container Registry. Be sure to replace region, aws_account_id, docker_image_id and my-repository:tag or follow the Amazon ECR user guide. Also, be sure to take note of the final ECR Image URL (aws_account_id.dkr.ecr.region.amazonaws.com/my-repository:tag), which we will use in our EKS deployment.
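To make the placeholder substitution concrete, the following sketch assembles the ECR image URL in the format shown above and prints the usual login, tag, and push commands. The helper names and the sample values are hypothetical; the commands follow the standard Amazon ECR push workflow rather than a listing from this post.

```python
def ecr_image_uri(aws_account_id, region, repository, tag):
    """Return the ECR image URL in the form account.dkr.ecr.region.amazonaws.com/repo:tag."""
    return f"{aws_account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def push_commands(docker_image_id, aws_account_id, region, repository, tag):
    """Return the shell commands that log in to ECR, then tag and push the image."""
    uri = ecr_image_uri(aws_account_id, region, repository, tag)
    registry = uri.split("/", 1)[0]
    return [
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        f"docker tag {docker_image_id} {uri}",
        f"docker push {uri}",
    ]


# Sample values only; substitute your own account ID, image ID, and repository.
for command in push_commands("0123456789ab", "123456789012", "us-east-1", "my-repository", "tag"):
    print(command)
```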

Now that we have an ECR image corresponding to the inference endpoint, create a new Amazon S3 bucket and copy the SageMaker Local artifacts (model_local_volume) to this bucket. In parallel, create an AWS Identity and Access Management (IAM) policy that provides Amazon EC2 instances access to read objects within the bucket. Be sure to replace any placeholder values, such as the bucket name, with your own.
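As an illustration of the read-only access described above, the following sketch builds an IAM policy document for a given bucket. The bucket name is a placeholder and the helper name `s3_read_policy` is hypothetical; the resulting JSON could be used as the document for a policy such as sagemaker-demo-app-s3, which is referenced in the cleanup section.

```python
import json


def s3_read_policy(bucket_name):
    """Return an IAM policy document that allows listing and reading one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                # Reading applies to the objects inside the bucket.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


# Assumption: "my-example-bucket" stands in for your globally unique bucket name.
print(json.dumps(s3_read_policy("my-example-bucket"), indent=2))
```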

Next, to ensure that each EC2 instance pulls a copy of the model artifact on launch, edit the user data for your EKS worker nodes. In your user data script, ensure that each node retrieves the model artifacts using the S3 API at launch. Be sure to replace any placeholder values with your own.
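The user data change can be sketched as a small generator for the relevant script fragment. It assumes the AWS CLI is available on the worker node image and that the artifacts should land in /tmp/model, the hostPath directory used later in this post; the helper name and bucket name are hypothetical, and the fragment would be merged with whatever node bootstrap logic your user data already contains.

```python
def model_sync_user_data(bucket_name, model_dir="/tmp/model"):
    """Return a shell fragment that copies the model artifacts from S3 at launch."""
    lines = [
        "#!/bin/bash",
        "set -euo pipefail",
        # Create the directory that the pod later mounts as a hostPath volume.
        f"mkdir -p {model_dir}",
        # Copy every object in the bucket into the model directory.
        f"aws s3 cp s3://{bucket_name}/ {model_dir} --recursive",
    ]
    return "\n".join(lines) + "\n"


# Assumption: "my-example-bucket" stands in for your bucket name.
print(model_sync_user_data("my-example-bucket"))
```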

Now, you can inspect the existing Docker manifest and translate it to Kubernetes-friendly manifest files using Kompose, a well-known conversion tool. Note: if you get a version compatibility error, change the version attribute in line 27 of docker-compose.yaml to “2”.

After running Kompose, you’ll see four new files: a Deployment object, Service object, PersistentVolumeClaim object, and NetworkPolicy object. You now have everything you need to begin your foray into Kubernetes at the edge!
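The version workaround and the conversion step can be sketched as follows. The helper names are hypothetical, and the sketch assumes that `version` is a top-level key of the Compose file and that the kompose binary is on your PATH; `kompose convert -f` is the standard conversion command.

```python
import re


def set_compose_version(compose_text, version="2"):
    """Rewrite the top-level version attribute of a Compose file's text."""
    return re.sub(r"(?m)^version:.*$", f"version: '{version}'", compose_text, count=1)


def kompose_command(compose_path):
    """Build the argument list that converts a Compose file to Kubernetes manifests."""
    return ["kompose", "convert", "-f", compose_path]


# A shortened, made-up Compose file to show the rewrite.
sample = "services:\n  algo-1-ow3nv:\n    image: example\nversion: '2.3'\n"
print(set_compose_version(sample))
print(" ".join(kompose_command("docker-compose.yaml")))
```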

Deploy SageMaker model artifacts

Make sure you have kubectl and aws-iam-authenticator downloaded to your AWS Cloud9 IDE. If not, follow the installation guide for each tool.

Now, complete the following steps:

Modify the service/algo-1-ow3nv object to switch the service type from ClusterIP to NodePort. In our example, we have selected port 30007 as our NodePort.
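The change to the Service object can be sketched as a small transformation on the manifest, represented here as a Python dictionary. The sample manifest is an assumption: it uses port 8080, the port SageMaker inference containers conventionally listen on, and a Kompose-style selector label. Because kubectl also accepts JSON manifests, the patched dictionary can be written out with `json.dumps`.

```python
import copy
import json


def to_node_port(service, node_port=30007):
    """Return a copy of a Service manifest switched from ClusterIP to NodePort."""
    # Kubernetes allocates NodePorts from 30000-32767 by default.
    if not 30000 <= node_port <= 32767:
        raise ValueError("node_port is outside the default NodePort range")
    patched = copy.deepcopy(service)
    patched["spec"]["type"] = "NodePort"
    patched["spec"]["ports"][0]["nodePort"] = node_port
    return patched


# Assumption: a simplified version of the Service that Kompose generates.
sample_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "algo-1-ow3nv"},
    "spec": {
        "type": "ClusterIP",
        "selector": {"io.kompose.service": "algo-1-ow3nv"},
        "ports": [{"name": "8080", "port": 8080, "targetPort": 8080}],
    },
}

print(json.dumps(to_node_port(sample_service), indent=2))
```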

Next, you must allow the NodePort in the security group for your node. To do so, retrieve the security group ID and allow-list the NodePort.
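One way to allow-list the port is the `aws ec2 authorize-security-group-ingress` command. The sketch below only builds and prints that command; the security group ID and source CIDR are placeholder values, and the helper name is hypothetical. Choose a CIDR that matches the clients you expect to reach the node.

```python
import ipaddress


def authorize_ingress_command(group_id, port, cidr):
    """Build the AWS CLI argument list that opens a TCP port to a CIDR range."""
    # Raises ValueError if the CIDR is malformed.
    ipaddress.ip_network(cidr)
    return [
        "aws", "ec2", "authorize-security-group-ingress",
        "--group-id", group_id,
        "--protocol", "tcp",
        "--port", str(port),
        "--cidr", cidr,
    ]


# Placeholder values; substitute your node's security group ID and client range.
print(" ".join(authorize_ingress_command("sg-0123456789abcdef0", 30007, "203.0.113.0/24")))
```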

Next, modify the algo-1-ow3nv-deployment.yaml manifest to mount the /tmp/model hostPath directory to the container. Replace the container image reference with the ECR image URL you noted earlier.
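The edit to the Deployment can be sketched in the same dictionary form. This is an illustration under assumptions: the container mount path /opt/ml/model follows the usual SageMaker container convention, the volume name model-volume is made up, and the function replaces any volumes Kompose generated with a single hostPath volume.

```python
import copy


def mount_host_model_dir(deployment, image, host_path="/tmp/model", mount_path="/opt/ml/model"):
    """Return a copy of a Deployment that uses the given image and a hostPath volume."""
    patched = copy.deepcopy(deployment)
    pod_spec = patched["spec"]["template"]["spec"]
    container = pod_spec["containers"][0]
    container["image"] = image
    # Mount the node directory that the user data script populated from S3.
    container["volumeMounts"] = [{"name": "model-volume", "mountPath": mount_path}]
    pod_spec["volumes"] = [{"name": "model-volume", "hostPath": {"path": host_path}}]
    return patched


# Assumption: a heavily shortened Deployment in the shape Kompose produces.
sample_deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "algo-1-ow3nv"},
    "spec": {"template": {"spec": {"containers": [{"name": "algo-1-ow3nv", "image": "local"}]}}},
}

patched_deployment = mount_host_model_dir(
    sample_deployment,
    "123456789012.dkr.ecr.us-east-1.amazonaws.com/my-repository:tag",
)
print(patched_deployment["spec"]["template"]["spec"]["volumes"])
```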

With the manifest files you created from Kompose, use kubectl apply to apply the configs to your cluster.

Connect to the 5G edge model

To connect to your model, complete the following steps:

On the Amazon EC2 console, retrieve the carrier IP of the EKS worker node, or use the AWS CLI to query the carrier IP address directly.
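If you take the AWS CLI route, the carrier IP appears in the `Association.CarrierIp` field of each network interface returned by `aws ec2 describe-network-interfaces`. The following sketch parses that JSON output; the helper name is hypothetical and the sample payload is made up and shortened.

```python
import json


def carrier_ips(describe_output):
    """Extract carrier IP addresses from describe-network-interfaces JSON output."""
    data = json.loads(describe_output)
    ips = []
    for interface in data.get("NetworkInterfaces", []):
        carrier_ip = interface.get("Association", {}).get("CarrierIp")
        if carrier_ip:
            ips.append(carrier_ip)
    return ips


# Made-up, shortened payload in the shape the CLI returns.
sample_output = json.dumps({
    "NetworkInterfaces": [
        {"NetworkInterfaceId": "eni-0123456789abcdef0",
         "Association": {"CarrierIp": "203.0.113.10"}},
        {"NetworkInterfaceId": "eni-0fedcba9876543210"},
    ]
})

print(carrier_ips(sample_output))
```

To produce the input, you could filter by instance, for example with `--filters Name=attachment.instance-id,Values=<instance-id>`.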

Now, with the carrier IP address extracted, you can connect to the model directly using the NodePort. Create a file called invoke.py to invoke the BERT model directly by providing a text-based input that will be run against a sentiment analyzer to determine whether the tone was positive or negative.

The output should indicate whether the model classified the input text as positive or negative.
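The invoke.py script described above might look like the following sketch, which uses only the Python standard library. It rests on several assumptions: the container serves requests on the standard SageMaker /invocations path, accepts raw text with the application/x-text content type, returns a JSON body with a probabilities list, and orders the classes as negative then positive. The function names are hypothetical.

```python
import json
import urllib.request


def build_request(carrier_ip, text, node_port=30007):
    """Build the HTTP POST request sent to the model through the NodePort."""
    return urllib.request.Request(
        url=f"http://{carrier_ip}:{node_port}/invocations",
        data=text.encode("utf-8"),
        headers={"Content-Type": "application/x-text"},
        method="POST",
    )


def predicted_label(response_body, labels=("negative", "positive")):
    """Map the highest-probability class index to a label (order is an assumption)."""
    probabilities = json.loads(response_body)["probabilities"]
    best_index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best_index]


def invoke(carrier_ip, text):
    """Send the text to the edge endpoint and return the predicted sentiment."""
    with urllib.request.urlopen(build_request(carrier_ip, text), timeout=10) as response:
        return predicted_label(response.read())
```

Calling `invoke("<carrier-ip>", "your input text")` from a device on the carrier network sends the request to the model at the edge.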

Clean up

To destroy all application resources created, delete the AWS Wavelength worker nodes, the EKS control plane, and all the resources created within the VPC. Additionally, delete the ECR repo used to host the container image, the S3 buckets used to host the SageMaker model artifacts, and the sagemaker-demo-app-s3 IAM policy.

Conclusion

In this post, we demonstrated a novel approach to deploying SageMaker models to the network edge using Amazon EKS and AWS Wavelength. To learn about Amazon EKS best practices on AWS Wavelength, refer to Deploy geo-distributed Amazon EKS clusters on AWS Wavelength. Additionally, to learn more about JumpStart, visit the Amazon SageMaker JumpStart Developer Guide or the JumpStart Available Model Table.

About the Authors

Robert Belson is a Developer Advocate in the AWS Worldwide Telecom Business Unit, specializing in AWS Edge Computing. He focuses on working with the developer community and large enterprise customers to solve their business challenges using automation, hybrid networking and the edge cloud.

Mohammed Al-Mehdar is a Senior Solutions Architect in the Worldwide Telecom Business Unit at AWS. His main focus is to help enable customers to build and deploy Telco and Enterprise IT workloads on AWS. Prior to joining AWS, Mohammed worked in the Telco industry for over 13 years, and he brings a wealth of experience in the areas of LTE Packet Core, 5G, IMS and WebRTC. Mohammed holds a bachelor’s degree in Telecommunications Engineering from Concordia University.

Evan Kravitz is a software engineer at Amazon Web Services, working on SageMaker JumpStart. He enjoys cooking and going on runs in New York City.

Justin St. Arnauld is an Associate Director – Solution Architects at Verizon for the Public Sector with over 15 years of experience in the IT industry. He is a passionate advocate for the power of edge computing and 5G networks and is an expert in developing innovative technology solutions that leverage these technologies. Justin is particularly enthusiastic about the capabilities offered by Amazon Web Services (AWS) in delivering cutting-edge solutions for his clients. In his free time, Justin enjoys keeping up-to-date with the latest technology trends and sharing his knowledge and insights with others in the industry.
