Announcing New Tools for Building with Generative AI on AWS

The seeds of a machine learning (ML) paradigm shift have existed for decades, but with the ready availability of scalable compute capacity, a massive proliferation of data, and the rapid advancement of ML technologies, customers across industries are transforming their businesses. Just recently, generative AI applications like ChatGPT have captured widespread attention and imagination. We are truly at an exciting inflection point in the widespread adoption of ML, and we believe most customer experiences and applications will be reinvented with generative AI.

AI and ML have been a focus for Amazon for over 20 years, and many of the capabilities customers use with Amazon are driven by ML. Our e-commerce recommendations engine is driven by ML; the paths that optimize robotic picking routes in our fulfillment centers are driven by ML; and our supply chain, forecasting, and capacity planning are informed by ML. Prime Air (our drones) and the computer vision technology in Amazon Go (our physical retail experience that lets consumers select items off a shelf and leave the store without having to formally check out) use deep learning. Alexa, powered by more than 30 different ML systems, helps customers billions of times each week to manage smart homes, shop, get information and entertainment, and more. We have thousands of engineers at Amazon committed to ML, and it’s a big part of our heritage, current ethos, and future.

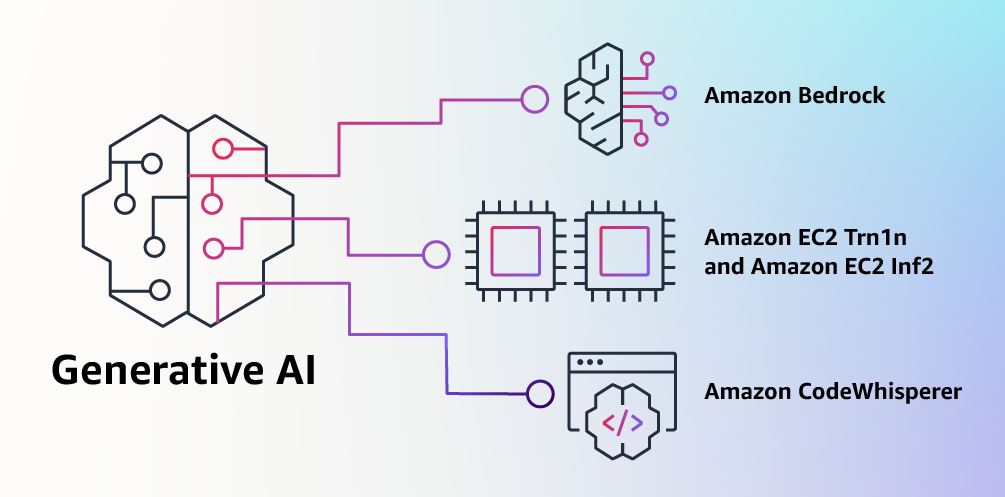

At AWS, we have played a key role in democratizing ML and making it accessible to anyone who wants to use it, including more than 100,000 customers of all sizes and industries. AWS has the broadest and deepest portfolio of AI and ML services at all three layers of the stack. We’ve invested and innovated to offer the most performant, scalable infrastructure for cost-effective ML training and inference; developed Amazon SageMaker, which is the easiest way for all developers to build, train, and deploy models; and launched a wide range of services that allow customers to add AI capabilities like image recognition, forecasting, and intelligent search to applications with a simple API call. This is why customers like Intuit, Thomson Reuters, AstraZeneca, Ferrari, Bundesliga, 3M, and BMW, as well as thousands of startups and government agencies around the world, are transforming themselves, their industries, and their missions with ML. We take the same democratizing approach to generative AI: we work to take these technologies out of the realm of research and experiments and extend their availability far beyond a handful of startups and large, well-funded tech companies. That’s why today I’m excited to announce several new innovations that will make it easy and practical for our customers to use generative AI in their businesses.

Building with Generative AI on AWS

Generative AI and foundation models

Generative AI is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. Like all AI, generative AI is powered by ML models: in this case, very large models that are pre-trained on vast amounts of data and commonly referred to as Foundation Models (FMs). Recent advancements in ML (specifically the invention of the transformer-based neural network architecture) have led to the rise of models that contain billions of parameters or variables. To give a sense of the change in scale, the largest pre-trained model in 2019 had 330M parameters. Now, the largest models have more than 500B parameters, a 1,600x increase in size in just a few years. Today’s FMs, such as the large language models (LLMs) GPT-3.5 or BLOOM, and the text-to-image model Stable Diffusion from Stability AI, can perform a wide range of tasks that span multiple domains, like writing blog posts, generating images, solving math problems, engaging in dialog, and answering questions based on a document. The size and general-purpose nature of FMs make them different from traditional ML models, which typically perform specific tasks, like analyzing text for sentiment, classifying images, and forecasting trends.

FMs can perform so many more tasks because they contain such a large number of parameters that make them capable of learning complex concepts. And through their pre-training exposure to internet-scale data in all its various forms and myriad of patterns, FMs learn to apply their knowledge within a wide range of contexts. While the capabilities and resulting possibilities of a pre-trained FM are amazing, customers get really excited because these generally capable models can also be customized to perform domain-specific functions that are differentiating to their businesses, using only a small fraction of the data and compute required to train a model from scratch. The customized FMs can create a unique customer experience, embodying the company’s voice, style, and services across a wide variety of consumer industries, like banking, travel, and healthcare. For instance, a financial firm that needs to auto-generate a daily activity report for internal circulation using all the relevant transactions can customize the model with proprietary data, which will include past reports, so that the FM learns how these reports should read and what data was used to generate them.

The potential of FMs is incredibly exciting, but we are still in the very early days. While ChatGPT has been the first broad generative AI experience to catch customers’ attention, most folks studying generative AI have quickly come to realize that several companies have been working on FMs for years, and there are several different FMs available, each with unique strengths and characteristics. As we’ve seen over the years with fast-moving technologies, and in the evolution of ML, things change rapidly. We expect new architectures to arise in the future, and this diversity of FMs will set off a wave of innovation. We’re already seeing entirely new application experiences. AWS customers have asked us how they can take advantage of what is out there today (and what is likely coming tomorrow) and quickly begin using FMs and generative AI within their businesses and organizations to drive new levels of productivity and transform their offerings.

Announcing Amazon Bedrock and Amazon Titan models, the easiest way to build and scale generative AI applications with FMs

Customers have told us there are a few big things standing in their way today. First, they need a straightforward way to find and access high-performing FMs that give outstanding results and are best-suited for their purposes. Second, customers want integration into applications to be seamless, without having to manage huge clusters of infrastructure or incur large costs. Finally, customers want it to be easy to take the base FM, and build differentiated apps using their own data (a little data or a lot). Since the data customers want to use for customization is incredibly valuable IP, they need it to stay completely protected, secure, and private during that process, and they want control over how their data is shared and used.

We took all of that feedback from customers, and today we are excited to announce Amazon Bedrock, a new service that makes FMs from AI21 Labs, Anthropic, Stability AI, and Amazon accessible via an API. Bedrock is the easiest way for customers to build and scale generative AI-based applications using FMs, democratizing access for all builders. Bedrock will offer the ability to access a range of powerful FMs for text and images—including Amazon’s Titan FMs, which consist of two new LLMs we’re also announcing today—through a scalable, reliable, and secure AWS managed service. With Bedrock’s serverless experience, customers can easily find the right model for what they’re trying to get done, get started quickly, privately customize FMs with their own data, and easily integrate and deploy them into their applications using the AWS tools and capabilities they are familiar with (including integrations with Amazon SageMaker ML features like Experiments to test different models and Pipelines to manage their FMs at scale) without having to manage any infrastructure.

Bedrock customers can choose from some of the most cutting-edge FMs available today. This includes the Jurassic-2 family of multilingual LLMs from AI21 Labs, which follow natural language instructions to generate text in Spanish, French, German, Portuguese, Italian, and Dutch. Claude, Anthropic’s LLM, can perform a wide variety of conversational and text processing tasks and is based on Anthropic’s extensive research into training honest and responsible AI systems. Bedrock also makes it easy to access Stability AI’s suite of text-to-image foundation models, including Stable Diffusion (the most popular of its kind), which is capable of generating unique, realistic, high-quality images, art, logos, and designs.

One of the most important capabilities of Bedrock is how easy it is to customize a model. Customers simply point Bedrock at a few labeled examples in Amazon S3, and the service can fine-tune the model for a particular task without having to annotate large volumes of data (as few as 20 examples is enough). Imagine a content marketing manager who works at a leading fashion retailer and needs to develop fresh, targeted ad and campaign copy for an upcoming new line of handbags. To do this, they provide Bedrock a few labeled examples of their best performing taglines from past campaigns, along with the associated product descriptions, and Bedrock will automatically start generating effective social media, display ad, and web copy for the new handbags. None of the customer’s data is used to train the underlying models, and since all data is encrypted and does not leave a customer’s Virtual Private Cloud (VPC), customers can trust that their data will remain private and confidential.

Bedrock is now in limited preview, and customers like Coda are excited about how fast their development teams have gotten up and running. Shishir Mehrotra, Co-founder and CEO of Coda, says, “As a longtime happy AWS customer, we’re excited about how Amazon Bedrock can bring quality, scalability, and performance to Coda AI. Since all our data is already on AWS, we are able to quickly incorporate generative AI using Bedrock, with all the security and privacy we need to protect our data built-in. With over tens of thousands of teams running on Coda, including large teams like Uber, the New York Times, and Square, reliability and scalability are really important.”

We have been previewing Amazon’s new Titan FMs with a few customers before we make them available more broadly in the coming months. We’ll initially have two Titan models. The first is a generative LLM for tasks such as summarization, text generation (for example, creating a blog post), classification, open-ended Q&A, and information extraction. The second is an embeddings LLM that translates text inputs (words, phrases or possibly large units of text) into numerical representations (known as embeddings) that contain the semantic meaning of the text. While this LLM will not generate text, it is useful for applications like personalization and search because by comparing embeddings the model will produce more relevant and contextual responses than word matching. In fact, Amazon.com’s product search capability uses a similar embeddings model among others to help customers find the products they’re looking for. To continue supporting best practices in the responsible use of AI, Titan FMs are built to detect and remove harmful content in the data, reject inappropriate content in the user input, and filter the models’ outputs that contain inappropriate content (such as hate speech, profanity, and violence).
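To make the embeddings idea above concrete, here is a minimal sketch of ranking items by comparing embeddings instead of matching words. The three-dimensional vectors are hand-made, hypothetical values chosen for illustration; they are not output from a Titan model, and real embeddings have far more dimensions. The function names are also assumptions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(query_vec, items):
    """Return (name, score) pairs sorted from most to least similar to the query."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in items.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical embeddings for three catalog items (illustrative values only).
catalog = {
    "leather handbag": [0.9, 0.1, 0.2],
    "canvas tote bag": [0.7, 0.3, 0.4],
    "running shoes": [0.1, 0.9, 0.4],
}

# Hypothetical embedding for the query "purse", which shares no words
# with any catalog entry but sits close to the bag items in vector space.
query = [0.88, 0.12, 0.2]

ranking = rank_by_similarity(query, catalog)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

With these toy vectors, the two bag items score well above the shoes even though the query shares no words with them, which is the behavior that plain word matching cannot provide.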

Bedrock makes the power of FMs accessible to companies of all sizes. Because it will be easy for all developers to use, companies can accelerate the use of ML across their organizations and build their own generative AI applications. We think Bedrock will be a massive step forward in democratizing FMs, and our partners like Accenture, Deloitte, Infosys, and Slalom are building practices to help enterprises go faster with generative AI. Independent Software Vendors (ISVs) like C3 AI and Pega are excited to leverage Bedrock for easy access to its great selection of FMs with all of the security, privacy, and reliability they expect from AWS.

Announcing the general availability of Amazon EC2 Trn1n instances powered by AWS Trainium and Amazon EC2 Inf2 instances powered by AWS Inferentia2, the most cost-effective cloud infrastructure for generative AI

Whatever customers are trying to do with FMs (running them, building them, or customizing them), they need the most performant, cost-effective infrastructure that is purpose-built for ML. Over the last five years, AWS has been investing in our own silicon to push the envelope on performance and price performance for demanding workloads like ML training and inference, and our AWS Trainium and AWS Inferentia chips offer the lowest cost for training models and running inference in the cloud. This ability to maximize performance and control costs by choosing the optimal ML infrastructure is why leading AI startups, like AI21 Labs, Anthropic, Cohere, Grammarly, Hugging Face, Runway, and Stability AI, run on AWS.

Trn1 instances, powered by Trainium, can deliver up to 50% savings on training costs over any other EC2 instance, and are optimized to distribute training across multiple servers connected with 800 Gbps of second-generation Elastic Fabric Adapter (EFA) networking. Customers can deploy Trn1 instances in UltraClusters that can scale up to 30,000 Trainium chips (more than 6 exaflops of compute) located in the same AWS Availability Zone with petabit scale networking. Many AWS customers, including Helixon, Money Forward, and the Amazon Search team, use Trn1 instances to help reduce the time required to train the largest-scale deep learning models from months to weeks or even days while lowering their costs. 800 Gbps is a lot of bandwidth, but we have continued to innovate to deliver more, and today we are announcing the general availability of new, network-optimized Trn1n instances, which offer 1600 Gbps of network bandwidth and are designed to deliver 20% higher performance over Trn1 for large, network-intensive models.

Today, most of the time and money spent on FMs goes into training them. This is because many customers are only just starting to deploy FMs into production. However, in the future, when FMs are deployed at scale, most costs will be associated with running the models and doing inference. While you typically train a model periodically, a production application can be constantly generating predictions, known as inferences, potentially generating millions per hour. And these predictions need to happen in real-time, which requires very low-latency and high-throughput networking. Alexa is a great example with millions of requests coming in every minute, which accounts for 40% of all compute costs.

Because we knew that most of the future ML costs would come from running inferences, we prioritized inference-optimized silicon when we started investing in new chips a few years ago. In 2018, we announced Inferentia, the first purpose-built chip for inference. Every year, Inferentia helps Amazon run trillions of inferences and has saved companies like Amazon over a hundred million dollars in capital expense already. The results are impressive, and we see many opportunities to keep innovating as workloads will only increase in size and complexity as more customers integrate generative AI into their applications.

That’s why we’re announcing today the general availability of Inf2 instances powered by AWS Inferentia2, which are optimized specifically for large-scale generative AI applications with models containing hundreds of billions of parameters. Inf2 instances deliver up to 4x higher throughput and up to 10x lower latency compared to the prior generation Inferentia-based instances. They also have ultra-high-speed connectivity between accelerators to support large-scale distributed inference. These capabilities drive up to 40% better inference price performance than other comparable Amazon EC2 instances and the lowest cost for inference in the cloud. Customers like Runway are seeing up to 2x higher throughput with Inf2 than comparable Amazon EC2 instances for some of their models. This high-performance, low-cost inference will enable Runway to introduce more features, deploy more complex models, and ultimately deliver a better experience for the millions of creators using Runway.

Announcing the general availability of Amazon CodeWhisperer, free for individual developers

We know that building with the right FMs and running generative AI applications at scale on the most performant cloud infrastructure will be transformative for customers. The new wave of experiences will also be transformative for users. With generative AI built in, users will be able to have more natural and seamless interactions with applications and systems. Think of how we can unlock our mobile phones just by looking at them, without needing to know anything about the powerful ML models that make this feature possible.

One area where we foresee the use of generative AI growing rapidly is in coding. Software developers today spend a significant amount of their time writing code that is pretty straightforward and undifferentiated. They also spend a lot of time trying to keep up with a complex and ever-changing tool and technology landscape. All of this leaves developers less time to develop new, innovative capabilities and services. Developers try to overcome this by copying and modifying code snippets from the web, which can result in inadvertently copying code that doesn’t work, contains security vulnerabilities, or doesn’t track usage of open source software. And, ultimately, searching and copying still takes time away from the good stuff.

Generative AI can take this heavy lifting out of the equation by “writing” much of the undifferentiated code, allowing developers to build faster while freeing them up to focus on the more creative aspects of coding. This is why, last year, we announced the preview of Amazon CodeWhisperer, an AI coding companion that uses an FM under the hood to radically improve developer productivity by generating code suggestions in real time based on developers’ comments in natural language and prior code in their Integrated Development Environment (IDE). Developers can simply tell CodeWhisperer to do a task, such as “parse a CSV string of songs,” and ask it to return a structured list based on values such as artist, title, and highest chart rank. CodeWhisperer provides a productivity boost by generating an entire function that parses the string and returns the list as specified. Developer response to the preview has been overwhelmingly positive, and we continue to believe that helping developers code could end up being one of the most powerful uses of generative AI we’ll see in the coming years. During the preview, we ran a productivity challenge, and participants who used CodeWhisperer completed tasks 57% faster, on average, and were 27% more likely to complete them successfully than those who didn’t use CodeWhisperer. This is a giant leap forward in developer productivity, and we believe this is only the beginning.
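As an illustration of the “parse a CSV string of songs” task described above, a function of that kind might look like the sketch below. It is hand-written for this post and is not actual CodeWhisperer output. The function name, the column order (artist, title, highest chart rank), and the sample data are all assumptions.

```python
import csv
import io

def parse_songs(csv_text):
    """Parse rows of 'artist,title,highest_chart_rank' into a list of dicts.

    Assumes there is no header row and that the rank column is an integer.
    """
    reader = csv.reader(io.StringIO(csv_text))
    songs = []
    for row in reader:
        if not row:  # csv.reader yields an empty list for blank lines
            continue
        artist, title, rank = row
        songs.append({
            "artist": artist.strip(),
            "title": title.strip(),
            "highest_chart_rank": int(rank),
        })
    return songs

# Hypothetical sample input; the quoted field shows that embedded commas are handled.
sample = 'Artist A,First Song,3\nArtist B,"Second, Song",1\n'
songs = parse_songs(sample)
print(songs)
```

Using the standard `csv` module rather than splitting on commas keeps quoted titles that contain commas intact.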

Today, we’re excited to announce the general availability of Amazon CodeWhisperer for Python, Java, JavaScript, TypeScript, and C#—plus ten new languages, including Go, Kotlin, Rust, PHP, and SQL. CodeWhisperer can be accessed from IDEs such as VS Code, IntelliJ IDEA, AWS Cloud9, and many more via the AWS Toolkit IDE extensions. CodeWhisperer is also available in the AWS Lambda console. In addition to learning from the billions of lines of publicly available code, CodeWhisperer has been trained on Amazon code. We believe CodeWhisperer is now the most accurate, fastest, and most secure way to generate code for AWS services, including Amazon EC2, AWS Lambda, and Amazon S3.

Developers aren’t truly going to be more productive if code suggested by their generative AI tool contains hidden security vulnerabilities or fails to handle open source responsibly. CodeWhisperer is the only AI coding companion with built-in security scanning (powered by automated reasoning) for finding and suggesting remediations for hard-to-detect vulnerabilities, such as those in the Open Worldwide Application Security Project (OWASP) Top 10, those that don’t meet crypto library best practices, and others. To help developers code responsibly, CodeWhisperer filters out code suggestions that might be considered biased or unfair, and CodeWhisperer is the only coding companion that can filter and flag code suggestions that resemble open source code that customers may want to reference or license for use.

We know generative AI is going to change the game for developers, and we want it to be useful to as many as possible. This is why CodeWhisperer is free for all individual users with no qualifications or time limits for generating code! Anyone can sign up for CodeWhisperer with just an email account and become more productive within minutes. You don’t even have to have an AWS account. For business users, we’re offering a CodeWhisperer Professional Tier that includes administration features like single sign-on (SSO) with AWS Identity and Access Management (IAM) integration, as well as higher limits on security scanning.

Building powerful applications like CodeWhisperer is transformative for developers and all our customers. We have a lot more coming, and we are excited about what you will build with generative AI on AWS. Our mission is to make it possible for developers of all skill levels and for organizations of all sizes to innovate using generative AI. This is just the beginning of what we believe will be the next wave of ML powering new possibilities for you.

Resources

Check out the following resources to learn more about generative AI on AWS and these announcements:

- Explore generative AI on AWS

- An introduction to generative AI: Read what Werner Vogels, Amazon.com CTO, has to say about generative AI

- Demystifying generative AI: Listen to Werner Vogels and me discuss the impact of generative AI on businesses

- Learn about Amazon Bedrock, the easiest way to build and scale generative AI applications with FMs

- Learn about Amazon Titan, high-performing FMs from Amazon to innovate responsibly

- Discover the new AWS Trainium-based Trn1n instance and the AWS Inferentia-based Inf2 instance

- Learn how you can use Amazon CodeWhisperer as your coding companion

About the author

Swami Sivasubramanian is Vice President of Data and Machine Learning at AWS. In this role, Swami oversees all AWS Database, Analytics, and AI & Machine Learning services. His team’s mission is to help organizations put their data to work with a complete, end-to-end data solution to store, access, analyze, visualize, and predict.
