Accelerate protein structure prediction with the ESMFold language model on Amazon SageMaker

Proteins drive many biological processes, such as enzyme activity, molecular transport, and cellular support. The three-dimensional structure of a protein provides insight into its function and how it interacts with other biomolecules. Experimental methods to determine protein structure, such as X-ray crystallography and NMR spectroscopy, are expensive and time-consuming.

In contrast, recently developed computational methods can rapidly and accurately predict the structure of a protein from its amino acid sequence. These methods are critical for proteins that are difficult to study experimentally, such as membrane proteins, the targets of many drugs. One well-known example is AlphaFold, a deep learning-based algorithm celebrated for its accurate predictions.

ESMFold is another highly accurate, deep learning-based method developed to predict a protein's structure from its amino acid sequence. ESMFold uses a large protein language model (pLM) as a backbone and operates end to end. Unlike AlphaFold2, it doesn’t need a lookup or Multiple Sequence Alignment (MSA) step, nor does it rely on external databases to generate predictions. Instead, the development team trained the model on millions of protein sequences from UniRef. During training, the model developed attention patterns that elegantly represent the evolutionary interactions between amino acids in the sequence. Using a pLM instead of an MSA makes predictions up to 60 times faster than other state-of-the-art models.

In this post, we use the pre-trained ESMFold model from Hugging Face with Amazon SageMaker to predict the heavy chain structure of trastuzumab, a monoclonal antibody first developed by Genentech for the treatment of HER2-positive breast cancer. Quickly predicting the structure of this protein could be useful if researchers wanted to test the effect of sequence modifications. This could potentially lead to improved patient survival or fewer side effects.

This post provides an example Jupyter notebook and related scripts in the following GitHub repository.

Prerequisites

We recommend running this example in an Amazon SageMaker Studio notebook running the PyTorch 1.13 Python 3.9 CPU-optimized image on an ml.r5.xlarge instance type.

Visualize the experimental structure of trastuzumab

To begin, we use the biopython library and a helper script to download the trastuzumab structure from the RCSB Protein Data Bank:
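The download step might look like the following minimal sketch. It assumes biopython's `PDBList` class; the helper script from the post isn't shown here, so `download_pdb` and `keep_chains` are hypothetical stand-ins rather than the authors' code.

```python
from pathlib import Path


def download_pdb(pdb_id, out_dir="data"):
    """Download a PDB-format entry from RCSB and return its local path."""
    # Lazy import: biopython is only needed when a download is requested.
    from Bio.PDB import PDBList

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    local_path = PDBList().retrieve_pdb_file(pdb_id, pdir=out_dir, file_format="pdb")
    return Path(local_path)


def keep_chains(pdb_text, chains):
    """Return only the ATOM/HETATM records whose chain ID is in `chains`."""
    kept = [
        line
        for line in pdb_text.splitlines()
        # In the fixed-width PDB format the chain ID sits in column 22 (index 21).
        if line.startswith(("ATOM", "HETATM")) and len(line) > 21 and line[21] in chains
    ]
    return "\n".join(kept + ["END"])


# Example usage (requires network access):
#   path = download_pdb("1N8Z")
#   heavy_chain_only = keep_chains(path.read_text(), {"B"})
```

Filtering by chain ID is one simple way to isolate the heavy chain (Chain B) for later comparison.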

Next, we use the py3Dmol library to visualize the structure as an interactive 3D visualization:
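A minimal sketch of the visualization, assuming py3Dmol's `view`, `addModel`, `setStyle`, and `zoomTo` calls. The chain-to-color mapping (A = light chain, B = heavy chain, C = HER2) is an assumption about the 1N8Z chain IDs, and `chain_styles` and `show_structure` are hypothetical helper names.

```python
# Assumed chain IDs in 1N8Z: A = light chain, B = heavy chain, C = HER2 antigen.
CHAIN_COLORS = {"A": "orange", "B": "blue", "C": "green"}


def chain_styles(colors):
    """Build (selector, style) pairs that color each chain as a cartoon."""
    return [
        ({"chain": chain}, {"cartoon": {"color": color}})
        for chain, color in colors.items()
    ]


def show_structure(pdb_text, colors=CHAIN_COLORS, width=600, height=400):
    """Return an interactive py3Dmol view of the structure, colored by chain."""
    # Lazy import: py3Dmol is only needed inside a notebook session.
    import py3Dmol

    view = py3Dmol.view(width=width, height=height)
    view.addModel(pdb_text, "pdb")
    for selector, style in chain_styles(colors):
        view.setStyle(selector, style)
    view.zoomTo()
    return view


# In a notebook cell: show_structure(open("data/experimental.pdb").read()).show()
```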

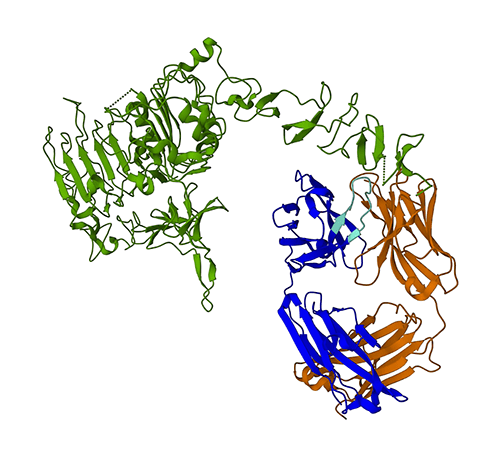

The preceding figure represents the 3D protein structure 1N8Z from the Protein Data Bank (PDB). In this image, the trastuzumab light chain is displayed in orange, the heavy chain is blue (with the variable region in light blue), and the HER2 antigen is green.

We’ll first use ESMFold to predict the structure of the heavy chain (Chain B) from its amino acid sequence. Then, we will compare the prediction to the experimentally determined structure shown above.

Predict the trastuzumab heavy chain structure from its sequence using ESMFold

Let’s use the ESMFold model to predict the structure of the heavy chain and compare it to the experimental result. To start, we’ll use a pre-built notebook environment in Studio that comes with several important libraries, like PyTorch, pre-installed. Although we could use an accelerated instance type to improve the performance of our notebook analysis, we’ll instead use a non-accelerated instance and run the ESMFold prediction on a CPU.

First, we load the pre-trained ESMFold model and tokenizer from Hugging Face Hub:
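As a sketch, loading could look like this. It assumes the `facebook/esmfold_v1` checkpoint on the Hugging Face Hub and the `EsmForProteinFolding` class from the transformers library; `load_esmfold` is a hypothetical wrapper name.

```python
# Assumed Hub identifier for the pre-trained ESMFold checkpoint.
MODEL_ID = "facebook/esmfold_v1"


def load_esmfold(model_id=MODEL_ID):
    """Download (or load from cache) the ESMFold tokenizer and model."""
    # Lazy import so this module can be inspected without transformers installed.
    from transformers import AutoTokenizer, EsmForProteinFolding

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = EsmForProteinFolding.from_pretrained(model_id)
    return tokenizer, model


# Example usage (downloads several GB of weights on first run):
#   tokenizer, model = load_esmfold()
```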

Next, we copy the model to our device (CPU in this case) and set some model parameters:
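One way to express this step is sketched below. The chunk size of 64 is an assumed value chosen to limit memory use, not a setting confirmed by the post, and `prepare_model` is a hypothetical helper.

```python
def prepare_model(model, device="cpu", chunk_size=64):
    """Move ESMFold to `device` and apply settings suited to CPU inference."""
    # Keep the language-model weights in full precision; half precision is
    # generally only useful on a GPU.
    model.esm = model.esm.float()
    model = model.to(device)
    # Smaller chunks lower peak memory in the folding trunk at some speed cost.
    model.trunk.set_chunk_size(chunk_size)
    # Switch off dropout and other training-only behavior.
    model.eval()
    return model


# Example usage: model = prepare_model(model, device="cpu")
```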

To prepare the protein sequence for analysis, we need to tokenize it. This translates the amino acid symbols (EVQLV…) into a numerical format that the ESMFold model can understand (6,19,5,10,19,…):
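To make the mapping concrete, here is a small sketch. The pure-Python `encode` function is only an illustration: it assumes an alphabetical-by-name residue ordering, which reproduces the IDs quoted above. The `tokenize` function shows the equivalent call on a Hugging Face tokenizer, assuming special tokens are disabled.

```python
# Assumed residue order; it matches the example IDs quoted in the text.
RESIDUE_ORDER = "ARNDCQEGHILKMFPSTWYV"
RESIDUE_TO_ID = {aa: i for i, aa in enumerate(RESIDUE_ORDER)}


def encode(sequence):
    """Illustrative encoder: map one-letter amino acid codes to integer IDs."""
    return [RESIDUE_TO_ID[aa] for aa in sequence.upper()]


def tokenize(tokenizer, sequence):
    """Tokenize one sequence with a Hugging Face tokenizer, returning a tensor."""
    return tokenizer([sequence], return_tensors="pt", add_special_tokens=False)[
        "input_ids"
    ]


print(encode("EVQLV"))  # [6, 19, 5, 10, 19]
```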

Next, we copy the tokenized input to the model, make a prediction, and save the result to a file:
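A minimal sketch of this step, assuming the model is an `EsmForProteinFolding` instance (whose `output_to_pdb` method converts raw outputs to PDB text). The function names and the `data/prediction.pdb` default are illustrative.

```python
from pathlib import Path


def predict_structure(model, tokenized_input):
    """Run ESMFold on tokenized input and return the prediction as PDB text."""
    # Lazy import: torch is only needed when a prediction is actually run.
    import torch

    device = next(model.parameters()).device
    with torch.no_grad():  # inference only, so skip gradient tracking
        output = model(tokenized_input.to(device))
    # output_to_pdb returns one PDB string per input sequence.
    return model.output_to_pdb(output)[0]


def save_pdb(pdb_text, out_path="data/prediction.pdb"):
    """Write PDB text to disk, creating the parent directory if needed."""
    out_path = Path(out_path)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    out_path.write_text(pdb_text)
    return out_path


# Example usage: save_pdb(predict_structure(model, tokenized_input))
```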

This takes about 3 minutes on a non-accelerated instance type, like an r5.

We can check the accuracy of the ESMFold prediction by comparing it to the experimental structure. We do this using the US-Align tool developed by the Zhang Lab at the University of Michigan:
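The comparison could be scripted roughly as follows. This sketch assumes a `USalign` executable on the PATH and its tabular output mode (`-outfmt 2`), in which a header line beginning with `#` is followed by tab-separated rows. Both function names are hypothetical.

```python
import subprocess


def run_usalign(structure_1, structure_2, executable="USalign"):
    """Align two structure files with US-align and return its tabular output."""
    result = subprocess.run(
        [executable, structure_1, structure_2, "-outfmt", "2"],
        capture_output=True,
        text=True,
        check=True,  # raise if US-align exits with an error
    )
    return result.stdout


def parse_usalign_table(stdout):
    """Parse tab-separated US-align output into a list of row dictionaries."""
    lines = [line for line in stdout.splitlines() if line.strip()]
    # The column names are on the first line, prefixed with '#'.
    header = lines[0].lstrip("#").split("\t")
    return [
        dict(zip(header, line.split("\t")))
        for line in lines[1:]
        if not line.startswith("#")
    ]


# Example usage:
#   rows = parse_usalign_table(
#       run_usalign("data/prediction.pdb", "data/experimental.pdb")
#   )
```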

| PDBchain1 | PDBchain2 | TM-Score |
| --- | --- | --- |
| data/prediction.pdb:A | data/experimental.pdb:B | 0.802 |

The template modeling score (TM-score) is a metric for assessing the similarity of protein structures. A score of 1.0 indicates a perfect match. Scores above 0.7 indicate that the proteins share the same backbone structure, and scores above 0.9 indicate that they are functionally interchangeable for downstream use. With a TM-score of 0.802, the ESMFold prediction would likely be appropriate for applications like structure scoring or ligand binding experiments, but may not be suitable for use cases like molecular replacement that require extremely high accuracy.
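For intuition, the standard TM-score formula can be sketched in a few lines. This function scores a single, already-superimposed alignment; tools like US-align additionally search for the superposition that maximizes the score. The 0.5 Å floor used for very short chains is an assumption.

```python
def tm_score(distances, target_length):
    """Score one alignment from per-residue distances (in angstroms).

    `distances` holds the distance between each aligned residue pair and
    `target_length` is the length of the reference protein.
    """
    if target_length > 21:
        # Length-dependent scale that makes scores comparable across sizes.
        d0 = 1.24 * (target_length - 15) ** (1 / 3) - 1.8
    else:
        d0 = 0.5  # assumed floor for very short chains
    return sum(1 / (1 + (d / d0) ** 2) for d in distances) / target_length


print(tm_score([0.0] * 120, 120))  # 1.0 for a perfect overlap
```

Because each aligned pair contributes at most 1 and the sum is divided by the reference length, unaligned residues pull the score below 1.0 even when the aligned part fits well.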

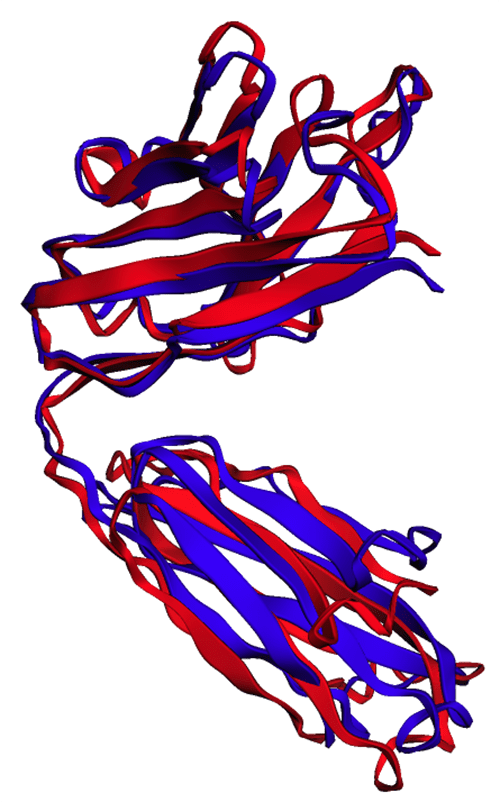

We can validate this result by visualizing the aligned structures. The two structures show a high, but not perfect, degree of overlap. Protein structure prediction is a rapidly evolving field and many research teams are developing ever-more accurate algorithms!

Deploy ESMFold as a SageMaker inference endpoint

Running model inference in a notebook is fine for experimentation, but what if you need to integrate your model with an application? Or an MLOps pipeline? In this case, a better option is to deploy your model as an inference endpoint. In the following example, we’ll deploy ESMFold as a SageMaker real-time inference endpoint on an accelerated instance. SageMaker real-time endpoints provide a scalable, cost-effective, and secure way to deploy and host machine learning (ML) models. With automatic scaling, you can adjust the number of instances running the endpoint to meet the demands of your application, optimizing costs and ensuring high availability.

The pre-built SageMaker container for Hugging Face makes it easy to deploy deep learning models for common tasks. However, for novel use cases like protein structure prediction, we need to define a custom inference.py script to load the model, run the prediction, and format the output. This script includes much of the same code we used in our notebook. We also create a requirements.txt file to define some Python dependencies for our endpoint to use. You can see the files we created in the GitHub repository.
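As a rough illustration, not the script from the repository, an inference.py for the Hugging Face container could override `model_fn` and `predict_fn` as shown below. The sketch assumes the model artifacts are unpacked into `model_dir` and that requests arrive as JSON with an `inputs` field. `clean_sequence` is a hypothetical validation helper.

```python
VALID_RESIDUES = set("ACDEFGHIKLMNPQRSTVWY")


def clean_sequence(sequence):
    """Strip whitespace, upper-case, and reject non-standard residues."""
    cleaned = "".join(sequence.split()).upper()
    unknown = set(cleaned) - VALID_RESIDUES
    if not cleaned or unknown:
        raise ValueError(f"Unsupported sequence; unexpected residues: {sorted(unknown)}")
    return cleaned


def model_fn(model_dir):
    """Load the tokenizer and model once, when the endpoint container starts."""
    import torch
    from transformers import AutoTokenizer, EsmForProteinFolding

    device = "cuda" if torch.cuda.is_available() else "cpu"
    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = EsmForProteinFolding.from_pretrained(model_dir).to(device)
    model.eval()
    return {"model": model, "tokenizer": tokenizer}


def predict_fn(data, bundle):
    """Fold the submitted sequence and return the structure as PDB text."""
    import torch

    model, tokenizer = bundle["model"], bundle["tokenizer"]
    sequence = clean_sequence(data["inputs"])
    input_ids = tokenizer(
        [sequence], return_tensors="pt", add_special_tokens=False
    )["input_ids"]
    with torch.no_grad():
        output = model(input_ids.to(model.device))
    return model.output_to_pdb(output)[0]
```

Validating the sequence before tokenizing gives callers a clear error instead of a failure deep inside the model.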

As the earlier figure of the aligned structures shows, the experimental (blue) and predicted (red) structures of the trastuzumab heavy chain are very similar, but not identical.

After we’ve created the necessary files in the code directory, we deploy our model using the SageMaker HuggingFaceModel class. This uses a pre-built container to simplify the process of deploying Hugging Face models to SageMaker. Note that it may take 10 minutes or more to create the endpoint, depending on the availability of ml.g4dn instance types in our Region.
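A deployment call might look like the following sketch. The framework versions, the `ml.g4dn.2xlarge` instance size, and the `code` directory layout are assumptions for illustration. `model_data` is the S3 URI of your packaged model and `role` is your SageMaker execution role.

```python
# Assumed container versions; pick a combination your Region supports.
FRAMEWORK_VERSIONS = {
    "transformers_version": "4.26",
    "pytorch_version": "1.13",
    "py_version": "py39",
}


def deploy_esmfold(model_data, role, instance_type="ml.g4dn.2xlarge"):
    """Create a real-time endpoint that serves ESMFold with a custom script."""
    # Lazy import: the SageMaker SDK is only needed at deployment time.
    from sagemaker.huggingface import HuggingFaceModel

    model = HuggingFaceModel(
        model_data=model_data,        # S3 URI of model.tar.gz
        role=role,                    # IAM role assumed by the endpoint
        entry_point="inference.py",   # custom inference handlers
        source_dir="code",            # also holds requirements.txt
        **FRAMEWORK_VERSIONS,
    )
    return model.deploy(initial_instance_count=1, instance_type=instance_type)


# Example usage:
#   predictor = deploy_esmfold("s3://<bucket>/<prefix>/model.tar.gz", role)
```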

When the endpoint deployment is complete, we can resubmit the protein sequence and display the first few rows of the prediction:
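The request and a quick look at the result can be sketched as follows. The sketch assumes the endpoint accepts a JSON body with an `inputs` field and returns PDB-format text, which depends on how inference.py is written. Both function names are hypothetical.

```python
def parse_atoms(pdb_text, limit=None):
    """Parse fixed-width ATOM records into dictionaries, one per atom."""
    rows = []
    for line in pdb_text.splitlines():
        if not line.startswith("ATOM"):
            continue
        rows.append(
            {
                "id": int(line[6:11]),
                "atom": line[12:16].strip(),
                "residue": line[17:20].strip(),
                "chain": line[21],
                "seq_id": int(line[22:26]),
                "x": float(line[30:38]),
                "y": float(line[38:46]),
                "z": float(line[46:54]),
                # ESMFold writes its pLDDT confidence into the B-factor column.
                "plddt": float(line[60:66]),
            }
        )
        if limit is not None and len(rows) >= limit:
            break
    return rows


def first_atoms_from_endpoint(predictor, sequence, n=5):
    """Submit a sequence to the endpoint and return the first n predicted atoms."""
    pdb_text = predictor.predict({"inputs": sequence})
    return parse_atoms(pdb_text, limit=n)
```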

Because we deployed our endpoint to an accelerated instance, the prediction should only take a few seconds. Each row in the result corresponds to a single atom and includes the amino acid identity, three spatial coordinates, and a pLDDT score representing the prediction confidence at that location.

| PDB_GROUP | ID | ATOM_LABEL | RES_ID | CHAIN_ID | SEQ_ID | CARTN_X | CARTN_Y | CARTN_Z | OCCUPANCY | PLDDT | ATOM_ID |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| ATOM | 1 | N | GLU | A | 1 | 14.578 | -19.953 | 1.47 | 1 | 0.83 | N |
| ATOM | 2 | CA | GLU | A | 1 | 13.166 | -19.595 | 1.577 | 1 | 0.84 | C |
| ATOM | 3 | C | GLU | A | 1 | 12.737 | -18.693 | 0.423 | 1 | 0.86 | C |
| ATOM | 4 | CB | GLU | A | 1 | 12.886 | -18.906 | 2.915 | 1 | 0.8 | C |
| ATOM | 5 | O | GLU | A | 1 | 13.417 | -17.715 | 0.106 | 1 | 0.83 | O |
| ATOM | 6 | CG | GLU | A | 1 | 11.407 | -18.694 | 3.2 | 1 | 0.71 | C |
| ATOM | 7 | CD | GLU | A | 1 | 11.141 | -18.042 | 4.548 | 1 | 0.68 | C |
| ATOM | 8 | OE1 | GLU | A | 1 | 12.108 | -17.805 | 5.307 | 1 | 0.68 | O |
| ATOM | 9 | OE2 | GLU | A | 1 | 9.958 | -17.767 | 4.847 | 1 | 0.61 | O |
| ATOM | 10 | N | VAL | A | 2 | 11.678 | -19.063 | -0.258 | 1 | 0.87 | N |
| ATOM | 11 | CA | VAL | A | 2 | 11.207 | -18.309 | -1.415 | 1 | 0.87 | C |

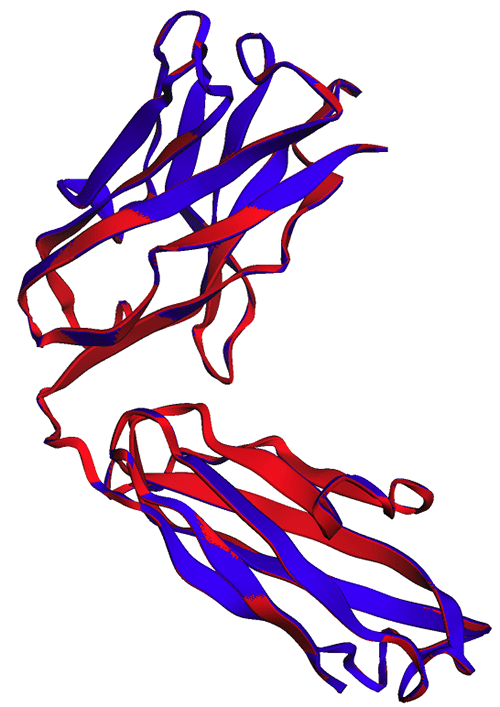

Using the same method as before, we see that the notebook and endpoint predictions are identical.

| PDBchain1 | PDBchain2 | TM-Score |
| --- | --- | --- |
| data/endpoint_prediction.pdb:A | data/prediction.pdb:A | 1.0 |

As observed in the preceding figure, the ESMFold predictions generated in-notebook (red) and by the endpoint (blue) show perfect alignment.

Clean up

To avoid further charges, we delete our inference endpoint and test data:
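A cleanup routine could be sketched like this, assuming `predictor` is the object returned when the endpoint was deployed and that test files live in a local `data` directory. `clean_up` is a hypothetical helper name.

```python
import shutil


def clean_up(predictor, data_dir="data"):
    """Delete the SageMaker model and endpoint, then remove local test data."""
    # Delete the model first: the SDK looks up model names through the
    # endpoint configuration, which is removed along with the endpoint.
    predictor.delete_model()
    predictor.delete_endpoint()
    shutil.rmtree(data_dir, ignore_errors=True)


# Example usage: clean_up(predictor)
```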

Summary

Computational protein structure prediction is a critical tool for understanding the function of proteins. In addition to basic research, algorithms like AlphaFold and ESMFold have many applications in medicine and biotechnology. The structural insights generated by these models help us better understand how biomolecules interact. This can then lead to better diagnostic tools and therapies for patients.

In this post, we show how to deploy the ESMFold protein language model from Hugging Face Hub as a scalable inference endpoint using SageMaker. For more information about deploying Hugging Face models on SageMaker, refer to Use Hugging Face with Amazon SageMaker. You can also find more protein science examples in the Awesome Protein Analysis on AWS GitHub repo. Please leave us a comment if there are any other examples you’d like to see!

About the Authors

Brian Loyal is a Senior AI/ML Solutions Architect in the Global Healthcare and Life Sciences team at Amazon Web Services. He has more than 17 years’ experience in biotechnology and machine learning, and is passionate about helping customers solve genomic and proteomic challenges. In his spare time, he enjoys cooking and eating with his friends and family.

Shamika Ariyawansa is an AI/ML Specialist Solutions Architect in the Global Healthcare and Life Sciences team at Amazon Web Services. He passionately works with customers to accelerate their AI and ML adoption by providing technical guidance and helping them innovate and build secure cloud solutions on AWS. Outside of work, he loves skiing and off-roading.

Yanjun Qi is a Senior Applied Science Manager at the AWS Machine Learning Solution Lab. She innovates and applies machine learning to help AWS customers speed up their AI and cloud adoption.
