Harness large language models in fake news detection

Fake news, defined as news that conveys or incorporates false, fabricated, or deliberately misleading information, has been around since at least the emergence of the printing press. The rapid spread of fake news and disinformation online not only deceives the public, but can also have a profound impact on society, politics, the economy, and culture. Examples include:

- Cultivating distrust in the media

- Undermining the democratic process

- Spreading false or discredited science (for example, the anti-vax movement)

Advances in artificial intelligence (AI) and machine learning (ML) have made developing tools for creating and sharing fake news even easier. Early examples include advanced social bots and automated accounts that supercharge the initial stage of spreading fake news. In general, it is not trivial for the public to determine whether such accounts are people or bots. In addition, social bots are not illegal tools, and many companies legally purchase them as part of their marketing strategy. Therefore, it’s not easy to curb the use of social bots systematically.

Recent advances in the field of generative AI make it possible to produce textual content at an unprecedented pace with the help of large language models (LLMs). LLMs are generative AI text models with over 1 billion parameters that facilitate the synthesis of high-quality text.

In this post, we explore how you can use LLMs to tackle the prevalent issue of detecting fake news. We suggest that LLMs are sufficiently advanced for this task, especially if improved prompt techniques such as Chain-of-Thought and ReAct are used in conjunction with tools for information retrieval.

We illustrate this by creating a LangChain application that, given a piece of news, informs the user whether the article is true or fake using natural language. The solution also uses Amazon Bedrock, a fully managed service that makes foundation models (FMs) from Amazon and third-party model providers accessible through the AWS Management Console and APIs.

LLMs and fake news

The fake news phenomenon started evolving rapidly with the advent of the internet and, more specifically, social media (Nielsen et al., 2017). On social media, fake news can be shared quickly in a user’s network, leading the public to form the wrong collective opinion. In addition, people often propagate fake news impulsively, ignoring the factuality of the content if the news resonates with their personal norms (Tsipursky et al., 2018). Research in social science has suggested that cognitive bias (confirmation bias, bandwagon effect, and choice-supportive bias) is one of the most pivotal factors in making irrational decisions in both the creation and consumption of fake news (Kim et al., 2021). This also implies that news consumers tend to share and consume information that strengthens their existing beliefs.

The power of generative AI to produce textual and rich content at an unprecedented pace aggravates the fake news problem. An example worth mentioning is deepfake technology—combining various images on an original video and generating a different video. Besides the disinformation intent that human actors bring to the mix, LLMs add a whole new set of challenges:

- Factual errors – LLMs have an increased risk of producing factual errors due to the nature of their training and their ability to be creative while generating the next words in a sentence. LLM training is based on repeatedly presenting a model with incomplete input, then using ML training techniques until it correctly fills in the gaps, thereby learning language structure and a language-based world model. Consequently, although LLMs are great pattern matchers and re-combiners (“stochastic parrots”), they fail at a number of simple tasks that require logical reasoning or mathematical deduction, and can hallucinate answers. In addition, temperature is one of the LLM input parameters that controls the behavior of the model when generating the next word in a sentence. With a higher temperature, the model is more likely to pick lower-probability words, producing a more random response.

- Lengthy – Generated texts tend to be lengthy and lack a clearly defined granularity for individual facts.

- Lack of fact-checking – There is no standardized tooling available for fact-checking during the process of text generation.
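The effect of temperature mentioned in the first item can be illustrated with a small, self-contained sketch. It applies a temperature-scaled softmax to a set of made-up next-token scores and prints how the probabilities change. The token names and scores are hypothetical and not taken from any real model, and actual LLM sampling involves further steps that are not shown here.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this formula")
    scaled = [score / temperature for score in logits]
    # Subtract the maximum for numerical stability before exponentiating.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical next-token candidates and scores, for illustration only.
tokens = ["Paris", "Lyon", "Mars"]
logits = [4.0, 2.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 3) for tok, p in zip(tokens, probs)})
```

At the low temperature almost all probability sits on the highest-scoring token, whereas at the high temperature the unlikely candidates receive a noticeably larger share, which is where more random (and potentially less factual) continuations come from.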

Overall, the combination of human psychology and limitations of AI systems has created a perfect storm for the proliferation of fake news and misinformation online.

Solution overview

LLMs are demonstrating outstanding capabilities in language generation, understanding, and few-shot learning. They are trained on a vast corpus of text from the internet, where quality and accuracy of extracted natural language may not be assured.

In this post, we provide a solution to detect fake news based on both the Chain-of-Thought and ReAct (Reasoning and Acting) prompt approaches. First, we discuss those two prompt engineering techniques, then we show their implementation using LangChain and Amazon Bedrock.

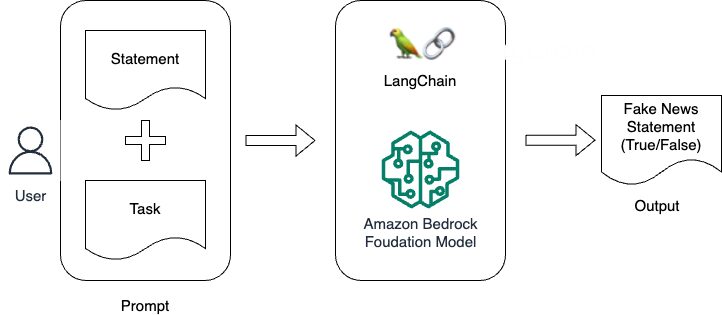

The following architecture diagram outlines the solution for our fake news detector.

Architecture diagram for fake news detection.

We use a subset of the FEVER dataset containing a statement and the ground truth about the statement indicating false, true, or unverifiable claims (Thorne J. et al., 2018).
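To make the data format concrete, the sketch below maps FEVER-style records to the three outcomes mentioned above. It assumes the publicly documented FEVER JSON Lines layout with claim and label fields and the label values SUPPORTS, REFUTES, and NOT ENOUGH INFO. The two sample records are invented for illustration and are not taken from the dataset, and the helper names are hypothetical.

```python
import json

# Assumed mapping from FEVER labels to the verdicts used in this post.
LABEL_TO_VERDICT = {
    "SUPPORTS": "TRUE",
    "REFUTES": "FALSE",
    "NOT ENOUGH INFO": "UNVERIFIABLE",
}


def load_statements(jsonl_text):
    """Parse JSON Lines text into (claim, verdict) pairs."""
    pairs = []
    for line in jsonl_text.splitlines():
        line = line.strip()
        if not line:
            continue  # Skip blank lines between records.
        record = json.loads(line)
        pairs.append((record["claim"], LABEL_TO_VERDICT[record["label"]]))
    return pairs


# Invented sample records in the assumed FEVER layout.
sample = """
{"id": 1, "label": "SUPPORTS", "claim": "Oslo is the capital of Norway."}
{"id": 2, "label": "REFUTES", "claim": "Copenhagen is the capital of Sweden."}
"""

for claim, verdict in load_statements(sample):
    print(verdict, "-", claim)
```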

The workflow can be broken down into the following steps:

- The user selects one of the statements to check whether it is fake or true.

- The statement and the fake news detection task are incorporated into the prompt.

- The prompt is passed to LangChain, which invokes the FM in Amazon Bedrock.

- Amazon Bedrock returns a response to the user request with the statement True or False.
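The steps above can be sketched end to end in a few lines. This is a minimal illustration rather than the solution's actual code: the task wording, the function names, and the invoke_model callable (a stand-in for the LangChain and Amazon Bedrock call) are all assumptions, and a lambda replaces the model so the example runs offline.

```python
import re

# Illustrative task wording; not the prompt used in the post's repository.
TASK = (
    "You are a fact checker. Decide whether the statement below is factually "
    "correct. Answer with exactly one word: TRUE or FALSE.\n\nStatement: {statement}"
)


def build_prompt(statement):
    """Combine the detection task with the selected statement."""
    return TASK.format(statement=statement.strip())


def parse_verdict(response):
    """Return the first standalone TRUE or FALSE in the response, if any."""
    match = re.search(r"\b(TRUE|FALSE)\b", response.upper())
    return match.group(1) if match else None


def check_statement(statement, invoke_model):
    """Run the workflow; invoke_model stands in for the LangChain + Bedrock call."""
    return parse_verdict(invoke_model(build_prompt(statement)))


# Stand-in model so the sketch runs offline; it always answers "False.".
print(check_statement("The Moon is made of cheese.", lambda prompt: "False."))
```

Because parse_verdict simply takes the first match, it works best when the prompt constrains the model to a one-word answer; free-form responses would need more careful parsing.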

In this post, we use the Claude v2 model from Anthropic (anthropic.claude-v2). Claude is a generative LLM based on Anthropic’s research into creating reliable, interpretable, and steerable AI systems. Created using techniques like constitutional AI and harmlessness training, Claude excels at thoughtful dialogue, content creation, complex reasoning, creativity, and coding. However, by using Amazon Bedrock and our solution architecture, we also have the flexibility to choose among other FMs provided by Amazon, AI21 Labs, Cohere, and Stability AI.

You can find the implementation details in the following sections. The source code is available in the GitHub repository.

Prerequisites

For this tutorial, you need an AWS account and a bash terminal with Python 3.9 or higher installed on Linux, macOS, or Windows Subsystem for Linux.

We also recommend using either an Amazon SageMaker Studio notebook, an AWS Cloud9 instance, or an Amazon Elastic Compute Cloud (Amazon EC2) instance.

Deploy fake news detection using the Amazon Bedrock API

The solution uses the Amazon Bedrock API, which can be accessed using the AWS Command Line Interface (AWS CLI), the AWS SDK for Python (Boto3), or an Amazon SageMaker notebook. Refer to the Amazon Bedrock User Guide for more information. For this post, we use the Amazon Bedrock API via the AWS SDK for Python.

Set up Amazon Bedrock API environment

To set up your Amazon Bedrock API environment, complete the following steps:

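- Download the latest Boto3 or upgrade it (for example, with pip install --upgrade boto3).

- Make sure you configure the AWS credentials using the aws configure command or pass them to the Boto3 client.

- Install the latest version of LangChain (for example, with pip install --upgrade langchain).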

You can now test your setup using the following Python shell script. The script instantiates the Amazon Bedrock client using Boto3. Next, we call the list_foundation_models API to get the list of foundation models available for use.
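A minimal sketch of such a check is shown below. It assumes the Boto3 bedrock client and the modelSummaries and modelId fields of the list_foundation_models response. The function name and the default Region are illustrative choices, so adjust the Region to one where you have Amazon Bedrock access.

```python
def list_model_ids(bedrock_client=None, region_name="us-east-1"):
    """Return the model IDs reported by the Amazon Bedrock API."""
    if bedrock_client is None:
        # Imported lazily so the function can also be exercised with a stub client.
        import boto3

        # Assumption: us-east-1 is only an example Region.
        bedrock_client = boto3.client("bedrock", region_name=region_name)
    response = bedrock_client.list_foundation_models()
    return [summary["modelId"] for summary in response["modelSummaries"]]


# Usage (requires AWS credentials and Amazon Bedrock access in the Region):
#     for model_id in list_model_ids():
#         print(model_id)
```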

After successfully running the preceding command, you should get the list of FMs from Amazon Bedrock.

LangChain as a prompt chaining solution

To detect fake news for a given sentence, we follow the zero-shot Chain-of-Thought reasoning process (Wei J. et al., 2022), which is composed of the following steps:

- Initially, the model attempts to create a statement about the news prompted.

- The model creates a bullet point list of assertions.

- For each assertion, the model determines if the assertion is true or false. Note that using this methodology, the model relies exclusively on its internal knowledge (weights computed in the pre-training phase) to reach a verdict. The information is not verified against any external data at this point.

- Given the facts, the model answers TRUE or FALSE for the given statement in the prompt.

To achieve these steps, we use LangChain, a framework for developing applications powered by language models. This framework allows us to augment the FMs by chaining together various components to create advanced use cases. In this solution, we use the built-in SimpleSequentialChain in LangChain to create a simple sequential chain. This is very useful, because we can take the output from one chain and use it as the input to another.

Amazon Bedrock is integrated with LangChain, so you only need to pass the model_id when instantiating the Amazon Bedrock object. If needed, the model inference parameters can be provided through the model_kwargs argument, such as:

- maxTokenCount – The maximum number of tokens in the generated response

- stopSequences – The stop sequence used by the model

- temperature – A value that ranges between 0–1, with 0 being the most deterministic and 1 being the most creative

- topP – A value that ranges between 0–1, and is used to control token choices based on the probability of the potential choices
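Inference parameter names differ between model providers, so the dictionary passed as model_kwargs has to match the chosen model. The sketch below assumes the Anthropic Claude text-completion names (max_tokens_to_sample, stop_sequences, temperature, top_p); check the Amazon Bedrock model parameter reference for the model you select. The helper names and default values are illustrative, and the LangChain import path is an assumption that depends on the installed LangChain version.

```python
def claude_model_kwargs(max_tokens=512, temperature=0.0, top_p=0.9, stop_sequences=None):
    """Build an inference-parameter dict using assumed Claude parameter names."""
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0 and 1")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    return {
        "max_tokens_to_sample": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "stop_sequences": list(stop_sequences or ["\n\nHuman:"]),
    }


def make_bedrock_llm(model_id="anthropic.claude-v2", **overrides):
    """Instantiate LangChain's Bedrock wrapper with the parameters above."""
    # Assumption: LangChain releases from around the time of this post expose
    # the class at this path; newer releases moved it to a separate package.
    from langchain.llms import Bedrock

    return Bedrock(model_id=model_id, model_kwargs=claude_model_kwargs(**overrides))


print(claude_model_kwargs(temperature=0.2))
```

A low temperature is a reasonable starting point for a classification-style task such as this one, because it makes repeated runs of the same prompt more consistent.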

If this is the first time you are using an Amazon Bedrock foundation model, make sure you request access to the model, which in our case is claude-v2 from Anthropic, by selecting it from the list of models on the Model access page on the Amazon Bedrock console.

The following function defines the Chain-of-Thought prompt chain we mentioned earlier for detecting fake news. The function takes the Amazon Bedrock object (llm) and the user prompt (q) as arguments. LangChain’s PromptTemplate functionality is used here to predefine a recipe for generating prompts.
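The chaining idea can also be sketched without any external dependency. The example below mirrors what a simple sequential chain does (each step's output becomes the next step's input) using plain Python, where llm is any callable that maps a prompt string to a completion string, such as a thin wrapper around the Amazon Bedrock object. The prompt wording, the STEPS list, and the fake_llm stand-in are assumptions for illustration and are not the templates from the post's repository; in the actual solution, LangChain's PromptTemplate and SimpleSequentialChain play these roles.

```python
# Illustrative prompt templates; the wording is an assumption.
STEPS = [
    "Here is a statement:\n{input}\nRestate it as a clear, self-contained claim.",
    "Here is a claim:\n{input}\nList, as bullet points, the assertions it makes.",
    "Here are some assertions:\n{input}\nFor each one, say whether it is true or false and why.",
    "Here are checked assertions:\n{input}\nGiven these, is the original statement "
    "TRUE or FALSE? Answer TRUE only if every assertion is true.",
]


def run_chain(llm, q, templates=STEPS):
    """Feed q through each prompt in turn, passing every output to the next step."""
    text = q
    for template in templates:
        text = llm(template.format(input=text))
    return text


# Offline stand-in that records prompts and returns canned text.
calls = []


def fake_llm(prompt):
    calls.append(prompt)
    return "TRUE" if "TRUE or FALSE" in prompt else f"step {len(calls)} output"


print(run_chain(fake_llm, "Oslo is the capital of Norway."))
```

Note that every step relies only on what the model already knows, which is exactly the limitation the fact-verification step in this approach carries.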

The following code calls the function we defined earlier and provides the answer. The statement is TRUE or FALSE. TRUE means that the statement provided contains correct facts, and FALSE means that the statement contains at least one incorrect fact.

An example of a statement and model response is provided in the following output:

ReAct and tools

In the preceding example, the model correctly identified that the statement is false. However, submitting the query again can expose the model’s inability to reliably judge the correctness of facts. The model doesn’t have the tools to verify the truthfulness of statements beyond its own training memory, so subsequent runs of the same prompt can lead it to mislabel fake statements as true. In the following code, you have a different run of the same example:

One technique for improving truthfulness is ReAct. ReAct (Yao S. et al., 2023) is a prompt technique that augments the foundation model with an agent’s action space. In this post, as well as in the ReAct paper, the action space implements information retrieval using search, lookup, and finish actions from a simple Wikipedia web API.

The reason behind using ReAct in comparison to Chain-of-Thought is to use external knowledge retrieval to augment the foundation model to detect if a given piece of news is fake or true.
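The reasoning-and-acting loop can be illustrated with a small, dependency-free sketch. It is a simplification under stated assumptions: only Search and Finish actions are handled (Lookup is omitted), the Action: Name[argument] text format follows the convention of the ReAct paper, and both the scripted model and the one-entry lookup table are offline stand-ins rather than a real LLM or the Wikipedia API. All function and variable names are hypothetical.

```python
import re

ACTION_RE = re.compile(r"Action:\s*(Search|Finish)\[(.*?)\]")


def react_loop(llm, tools, question, max_steps=5):
    """Alternate model reasoning with tool calls until the model emits Finish[...]."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break  # The model produced no parsable action.
        action, argument = match.groups()
        if action == "Finish":
            return argument, transcript
        # Run the requested tool and feed the result back as an observation.
        observation = tools[action](argument)
        transcript += f"Observation: {observation}\n"
    return None, transcript


# Offline stand-ins: a scripted model and a one-entry lookup table.
script = iter([
    "Thought: I should look up Norway.\nAction: Search[Norway]",
    "Thought: The evidence supports the statement.\nAction: Finish[TRUE]",
])
facts = {"Norway": "The capital of Norway is Oslo."}
tools = {"Search": lambda query: facts.get(query, "No results.")}

answer, transcript = react_loop(
    lambda prompt: next(script), tools, "Is it true that Oslo is the capital of Norway?"
)
print(answer)
```

The key difference from the Chain-of-Thought chain is the Observation line: the verdict is conditioned on retrieved text instead of on the model's internal knowledge alone.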

In this post, we use LangChain’s implementation of ReAct through the agent ZERO_SHOT_REACT_DESCRIPTION. We modify the previous function to implement ReAct and use Wikipedia through the load_tools function from langchain.agents.

We also need to install the wikipedia Python package (for example, with pip install wikipedia).

Below is the new code:
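A minimal sketch of this wiring follows. It assumes the load_tools, initialize_agent, and AgentType names from langchain.agents as available in LangChain releases from around the time of this post; newer releases deprecate this interface, so treat the imports as version-dependent. The task wording and the function names are illustrative, and running the agent requires the langchain and wikipedia packages plus access to Amazon Bedrock.

```python
# Illustrative task wording; not the prompt used in the post's repository.
REACT_TASK = (
    "Use the available tools to check the facts in the following statement, "
    "then answer TRUE if it is factually correct or FALSE otherwise.\n"
    "Statement: {statement}"
)


def build_question(statement):
    """Wrap the statement in the fact-checking task given to the agent."""
    return REACT_TASK.format(statement=statement.strip())


def run_react_detector(llm, statement):
    """Run a zero-shot ReAct agent equipped with a Wikipedia tool."""
    # Assumption: these names match the installed LangChain version.
    from langchain.agents import AgentType, initialize_agent, load_tools

    tools = load_tools(["wikipedia"], llm=llm)
    agent = initialize_agent(
        tools,
        llm,
        agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
        verbose=True,  # Print the Thought / Action / Observation trace.
        handle_parsing_errors=True,  # Recover from malformed model output.
    )
    return agent.run(build_question(statement))


print(build_question("Oslo is the capital of Norway."))
```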

The following is the output of the preceding function given the same statement used before:

Clean up

To save costs, delete all the resources you deployed as part of the tutorial. If you launched AWS Cloud9 or an EC2 instance, you can delete it via the console or using the AWS CLI. Similarly, you can delete the SageMaker notebook you may have created via the SageMaker console.

Limitations and related work

The field of fake news detection is actively researched in the scientific community. In this post, we used the Chain-of-Thought and ReAct techniques, and in evaluating them, we focused only on classification accuracy (whether a given statement is correctly labeled as true or false). We haven’t considered other important aspects such as response speed, nor have we extended the solution to additional knowledge sources besides Wikipedia.
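For reference, classification accuracy in this sense is simply the fraction of statements whose predicted verdict matches the ground truth. The sketch below shows the calculation. The function name is hypothetical, and the predictions and labels are made-up values for illustration, not results from our experiments.

```python
def accuracy(predictions, labels):
    """Return the fraction of predictions that match the ground-truth labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("at least one example is required")
    # Compare case-insensitively and ignore surrounding whitespace.
    correct = sum(
        p.strip().upper() == l.strip().upper() for p, l in zip(predictions, labels)
    )
    return correct / len(labels)


# Made-up verdicts for four statements, for illustration only.
predicted = ["TRUE", "FALSE", "TRUE", "FALSE"]
expected = ["TRUE", "FALSE", "FALSE", "FALSE"]
print(accuracy(predicted, expected))
```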

Although this post focused on two techniques, Chain-of-Thought and ReAct, an extensive body of work has explored how LLMs can detect, eliminate, or mitigate fake news. Lee et al. proposed the use of an encoder-decoder model using NER (named entity recognition) to mask the named entities in order to ensure that the token masked actually uses the knowledge encoded in the language model. Chern et al. developed FacTool, which uses Chain-of-Thought principles to extract claims from the prompt and then collect relevant evidence for the claims. The LLM then judges the factuality of each claim given the retrieved evidence. Du E. et al. present a complementary approach where multiple LLMs propose and debate their individual responses and reasoning processes over multiple rounds in order to arrive at a common final answer.

Based on the literature, we see that the effectiveness of LLMs in detecting fake news increases when the LLMs are augmented with external knowledge and multi-agent conversation capability. However, these approaches are more computationally complex because they require multiple model calls and interactions, longer prompts, and lengthy network layer calls. Ultimately, this complexity translates into an increased overall cost. We recommend assessing the cost-to-performance ratio before deploying similar solutions in production.

Conclusion

In this post, we delved into how to use LLMs to tackle the prevalent issue of fake news, which is one of the major challenges of our society nowadays. We started by outlining the challenges presented by fake news, with an emphasis on its potential to sway public sentiment and cause societal disruptions.

We then introduced the concept of LLMs as advanced AI models that are trained on a substantial quantity of data. Due to this extensive training, these models boast an impressive understanding of language, enabling them to produce human-like text. With this capacity, we demonstrated how LLMs can be harnessed in the battle against fake news by using two different prompt techniques, Chain-of-Thought and ReAct.

We underlined how LLMs can facilitate fact-checking services on an unparalleled scale, given their capability to process and analyze vast amounts of text swiftly. This potential for real-time analysis can lead to early detection and containment of fake news. We illustrated this by creating a Python script that, given a statement, highlights to the user whether the article is true or fake using natural language.

We concluded by underlining the limitations of the current approach and ended on a hopeful note, stressing that, with the correct safeguards and continuous enhancements, LLMs could become indispensable tools in the fight against fake news.

We’d love to hear from you. Let us know what you think in the comments section, or use the issues forum in the GitHub repository.

Disclaimer: The code provided in this post is meant for educational and experimentation purposes only. It should not be relied upon to detect fake news or misinformation in real-world production systems. No guarantees are made about the accuracy or completeness of fake news detection using this code. Users should exercise caution and perform due diligence before utilizing these techniques in sensitive applications.

To get started with Amazon Bedrock, visit the Amazon Bedrock console.

About the authors

Anamaria Todor is a Principal Solutions Architect based in Copenhagen, Denmark. She saw her first computer when she was 4 years old and never let go of computer science, video games, and engineering since. She has worked in various technical roles, from freelancer, full-stack developer, to data engineer, technical lead, and CTO, at various companies in Denmark, focusing on the gaming and advertising industries. She has been at AWS for over 3 years, working as a Principal Solutions Architect, focusing mainly on life sciences and AI/ML. Anamaria has a bachelor’s in Applied Engineering and Computer Science, a master’s degree in Computer Science, and over 10 years of AWS experience. When she’s not working or playing video games, she’s coaching girls and female professionals in understanding and finding their path through technology.

Marcel Castro is a Senior Solutions Architect based in Oslo, Norway. In his role, Marcel helps customers with architecture, design, and development of cloud-optimized infrastructure. He is a member of the AWS Generative AI Ambassador team with the goal to drive and support EMEA customers on their generative AI journey. He holds a PhD in Computer Science from Sweden and a master’s and bachelor’s degree in Electrical Engineering and Telecommunications from Brazil.
