Operationalize LLM Evaluation at Scale using Amazon SageMaker Clarify and MLOps services

In the last few years, large language models (LLMs) have risen to prominence as outstanding tools capable of understanding, generating, and manipulating text with unprecedented proficiency. Their potential applications span from conversational agents to content generation and information retrieval, holding the promise of revolutionizing all industries. However, harnessing this potential while ensuring the responsible and effective use of these models hinges on the critical process of LLM evaluation. An evaluation is a task used to measure the quality and responsibility of the output of an LLM or generative AI service. Evaluating LLMs is motivated not only by the desire to understand a model’s performance but also by the need to implement responsible AI, to mitigate the risk of providing misinformation or biased content, and to minimize the generation of harmful, unsafe, malicious, and unethical content. Furthermore, evaluating LLMs can also help mitigate security risks, particularly in the context of prompt data tampering. For LLM-based applications, it is crucial to identify vulnerabilities and implement safeguards that protect against potential breaches and unauthorized manipulations of data.

By providing essential tools for evaluating LLMs with a straightforward configuration and one-click approach, Amazon SageMaker Clarify LLM evaluation capabilities grant customers access to most of the aforementioned benefits. With these tools in hand, the next challenge is to integrate LLM evaluation into the Machine Learning and Operations (MLOps) lifecycle to achieve automation and scalability in the process. In this post, we show you how to integrate Amazon SageMaker Clarify LLM evaluation with Amazon SageMaker Pipelines to enable LLM evaluation at scale. Additionally, we provide a code example in this GitHub repository that enables users to conduct parallel multi-model evaluation at scale, using examples such as Llama2-7b-f, Falcon-7b, and fine-tuned Llama2-7b models.

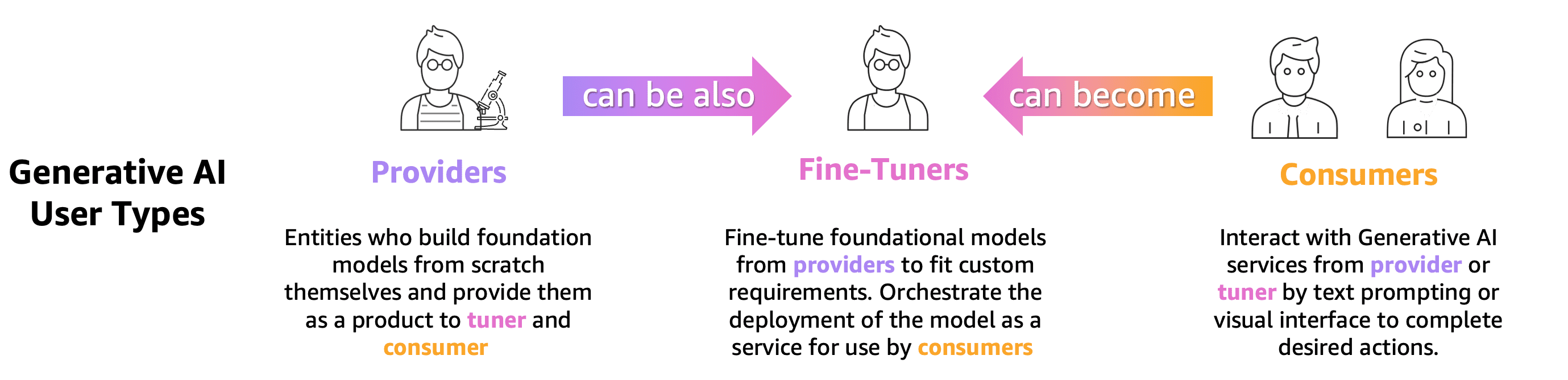

Who needs to perform LLM evaluation?

Anyone who trains, fine-tunes or simply uses a pre-trained LLM needs to accurately evaluate it to assess the behavior of the application powered by that LLM. Based on this tenet, we can classify generative AI users who need LLM evaluation capabilities into 3 groups as shown in the following figure: model providers, fine-tuners, and consumers.

- Foundation model (FM) providers train models that are general-purpose. These models can be used for many downstream tasks, such as feature extraction or content generation. Each trained model needs to be benchmarked against many tasks, not only to assess its performance but also to compare it with other existing models, to identify areas that need improvement, and finally to keep track of advancements in the field. Model providers also need to check for the presence of any biases to ensure the quality of the starting dataset and the correct behavior of their model. Gathering evaluation data is vital for model providers. Furthermore, these data and metrics must be collected to comply with upcoming regulations. ISO 42001, the Biden Administration Executive Order, and the EU AI Act aim to develop standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy. For example, the EU AI Act calls for providing information on which datasets are used for training and what compute power is required to run the model, reporting model results against public or industry-standard benchmarks, and sharing the results of internal and external testing.

- Model fine-tuners want to solve specific tasks (e.g. sentiment classification, summarization, question answering) and to adapt pre-trained models to domain-specific tasks. They need evaluation metrics generated by model providers to select the right pre-trained model as a starting point.

They need to evaluate their fine-tuned models against their desired use-case with task-specific or domain-specific datasets. Frequently, they must curate and create their private datasets since publicly available datasets, even those designed for a specific task, may not adequately capture the nuances required for their particular use case.

Fine-tuning is faster and cheaper than a full training and requires faster operative iteration for deployment and testing because many candidate models are usually generated. Evaluating these models allows continuous model improvement, calibration and debugging. Note that fine-tuners can become consumers of their own models when they develop real world applications.

- Model consumers or model deployers serve and monitor general purpose or fine-tuned models in production, aiming to enhance their applications or services through the adoption of LLMs. The first challenge they have is to ensure that the chosen LLM aligns with their specific needs, cost, and performance expectations. Interpreting and understanding the model’s outputs is a persistent concern, especially when privacy and data security are involved (e.g. for auditing risk and compliance in regulated industries, such as the financial sector). Continuous model evaluation is critical to prevent propagation of bias or harmful content. By implementing a robust monitoring and evaluation framework, model consumers can proactively identify and address regression in LLMs, ensuring that these models maintain their effectiveness and reliability over time.

How to perform LLM evaluation

Effective model evaluation involves three fundamental components: one or more FMs or fine-tuned models to evaluate, the input datasets (prompts, conversations, or regular inputs), and the evaluation logic.

To select the models for evaluation, different factors must be considered, including data characteristics, problem complexity, available computational resources, and the desired outcome. The input datastore provides the data necessary for training, fine-tuning, and testing the selected model. It’s vital that this datastore is well-structured, representative, and of high quality, as the model’s performance heavily depends on the data it learns from. Lastly, the evaluation logic defines the criteria and metrics used to assess the model’s performance.

Together, these three components form a cohesive framework that ensures the rigorous and systematic assessment of machine learning models, ultimately leading to informed decisions and improvements in model effectiveness.
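The way the three components fit together can be sketched in a few lines of Python. This is a minimal illustration, not the FMEval API: `Example`, `exact_match`, `evaluate`, and `toy_model` are hypothetical names, and the toy model is a stand-in for a deployed LLM endpoint.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Example:
    prompt: str  # model input
    target: str  # expected (reference) output


def exact_match(prediction: str, target: str) -> float:
    """Evaluation logic: 1.0 if the normalized strings are equal, else 0.0."""
    return float(prediction.strip().lower() == target.strip().lower())


def evaluate(
    model: Callable[[str], str],
    dataset: Iterable[Example],
    metric: Callable[[str, str], float] = exact_match,
) -> float:
    """Run every prompt through the model and average the metric."""
    scores = [metric(model(ex.prompt), ex.target) for ex in dataset]
    return sum(scores) / len(scores) if scores else 0.0


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM endpoint: a fixed lookup table."""
    answers = {"Capital of France?": "Paris", "2 + 2?": "5"}
    return answers.get(prompt, "")


dataset = [Example("Capital of France?", "paris"), Example("2 + 2?", "4")]
score = evaluate(toy_model, dataset)
print(score)
```

Swapping any one component (a different model callable, another dataset, or a different metric function) leaves the other two untouched, which is the property that makes the evaluation easy to automate later.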

Model evaluation techniques are still an active field of research. Many public benchmarks and frameworks, such as GLUE, SuperGLUE, HELM, MMLU, and BIG-bench, were created by the research community in the last few years to cover a wide range of tasks and scenarios. These benchmarks have leaderboards that can be used to compare and contrast evaluated models. Benchmarks such as HELM also aim to assess metrics beyond accuracy measures like precision or F1 score. The HELM benchmark includes metrics for fairness, bias, and toxicity, which are equally important in the overall model evaluation score.

All these benchmarks include a set of metrics that measure how the model performs on a certain task. The most famous and most common metrics are ROUGE (Recall-Oriented Understudy for Gisting Evaluation), BLEU (BiLingual Evaluation Understudy), and METEOR (Metric for Evaluation of Translation with Explicit ORdering). Those metrics serve as a useful tool for automated evaluation, providing quantitative measures of lexical similarity between generated and reference text. However, they do not capture the full breadth of human-like language generation, which includes semantic understanding, context, and stylistic nuances. For example, HELM doesn’t provide evaluation details relevant to specific use cases, solutions for testing custom prompts, or results that non-experts can easily interpret, because the process can be costly, hard to scale, and limited to specific tasks.
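To make "lexical similarity" concrete, the following sketch computes a ROUGE-1-style unigram overlap. It is a simplified illustration under stated assumptions (lowercasing and whitespace tokenization only, with no stemming or other normalization that real ROUGE implementations apply), and `unigram_overlap` is a hypothetical helper name.

```python
from collections import Counter


def unigram_overlap(candidate: str, reference: str) -> dict:
    """ROUGE-1-style precision, recall, and F1 over lowercased whitespace tokens."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped count of shared tokens
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


reference = "the cat sat on the mat"

# Same words in a different order: the meaning changes, the score does not.
same_words = unigram_overlap("the mat sat on the cat", reference)

# A loose paraphrase: close in meaning, but only one token ("on") is shared.
paraphrase = unigram_overlap("a feline rested on a rug", reference)

print(same_words["f1"], round(paraphrase["f1"], 3))
```

The reordered sentence gets a perfect score while the paraphrase scores low, which is the gap between lexical overlap and semantic understanding that motivates complementary metrics and human review.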

Furthermore, achieving human-like language generation often requires a human in the loop to bring qualitative assessments and human judgement that complement the automated accuracy metrics. Human evaluation is a valuable method for assessing LLM outputs, but it can also be subjective and prone to bias because different human evaluators may have diverse opinions and interpretations of text quality. Human evaluation can also be resource-intensive and costly, demanding significant time and effort.

Let’s dive deep into how Amazon SageMaker Clarify seamlessly connects the dots, aiding customers in conducting thorough model evaluation and selection.

LLM evaluation with Amazon SageMaker Clarify

Amazon SageMaker Clarify helps customers automate metrics and evaluation methods by providing a framework to evaluate LLMs and LLM-based services such as Amazon Bedrock. The metrics include, but are not limited to, accuracy, robustness, toxicity, stereotyping, and factual knowledge for automated evaluation, and style, coherence, and relevance for human-based evaluation. As a fully-managed service, SageMaker Clarify simplifies the use of open-source evaluation frameworks within Amazon SageMaker. Customers can select relevant evaluation datasets and metrics for their scenarios and extend them with their own prompt datasets and evaluation algorithms. SageMaker Clarify delivers evaluation results in multiple formats to support different roles in the LLM workflow. Data scientists can analyze detailed results with SageMaker Clarify visualizations in Notebooks, SageMaker Model Cards, and PDF reports. Meanwhile, operations teams can use Amazon SageMaker Ground Truth to review and annotate high-risk items that SageMaker Clarify identifies, for example because of stereotyping, toxicity, escaped PII, or low accuracy.

Annotations and reinforcement learning are subsequently employed to mitigate potential risks. Human-friendly explanations of the identified risks expedite the manual review process, thereby reducing costs. Summary reports offer business stakeholders comparative benchmarks between different models and versions, facilitating informed decision-making.

The following figure shows the framework to evaluate LLMs and LLM-based services:

Amazon SageMaker Clarify LLM evaluation is an open-source Foundation Model Evaluation (FMEval) library developed by AWS to help customers easily evaluate LLMs. All the functionalities have also been incorporated into Amazon SageMaker Studio to enable LLM evaluation for its users. In the following sections, we introduce the integration of Amazon SageMaker Clarify LLM evaluation capabilities with SageMaker Pipelines to enable LLM evaluation at scale by using MLOps principles.

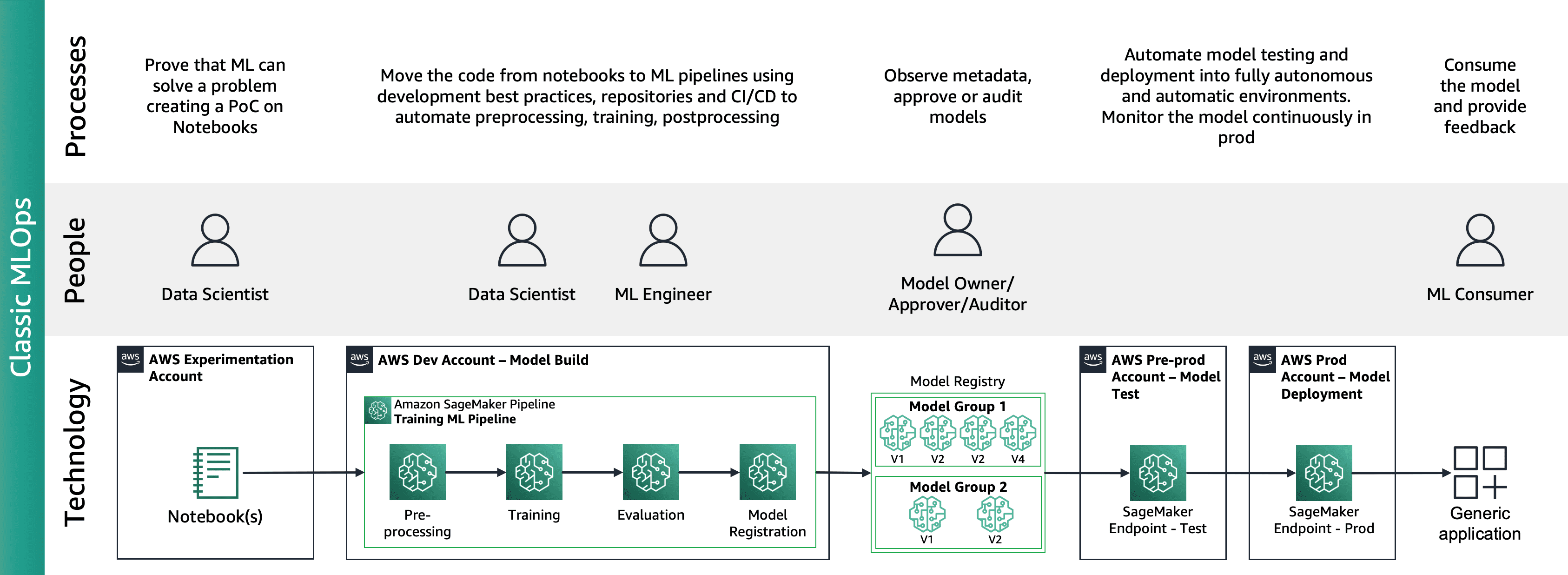

Amazon SageMaker MLOps lifecycle

As the post “MLOps foundation roadmap for enterprises with Amazon SageMaker” describes, MLOps is the combination of processes, people, and technology to productionize ML use cases efficiently.

The following figure shows the end-to-end MLOps lifecycle:

A typical journey starts with a data scientist creating a proof-of-concept (PoC) notebook to prove that ML can solve a business problem. Throughout the Proof of Concept (PoC) development, it falls to the data scientist to convert the business Key Performance Indicators (KPIs) into machine learning model metrics, such as precision or false-positive rate, and utilize a limited test dataset to evaluate these metrics. Data scientists collaborate with ML engineers to transition code from notebooks to repositories, creating ML pipelines using Amazon SageMaker Pipelines, which connect various processing steps and tasks, including pre-processing, training, evaluation, and post-processing, all while continually incorporating new production data. Deployment of Amazon SageMaker Pipelines relies on repository interactions and CI/CD pipeline activation. The ML pipeline maintains top-performing models, container images, evaluation results, and status information in a model registry, where model stakeholders assess performance and decide on progression to production based on performance results and benchmarks, followed by activation of another CI/CD pipeline for staging and production deployment. Once in production, ML consumers utilize the model via application-triggered inference through direct invocation or API calls, with feedback loops to model owners for ongoing performance evaluation.

Amazon SageMaker Clarify and MLOps integration

Following the MLOps lifecycle, fine-tuners or users of open-source models productionize fine-tuned models or FMs using Amazon SageMaker JumpStart and MLOps services, as described in Implementing MLOps practices with Amazon SageMaker JumpStart pre-trained models. This led to a new domain for foundation model operations (FMOps) and LLM operations (LLMOps), as described in FMOps/LLMOps: Operationalize generative AI and differences with MLOps.

The following figure shows end-to-end LLMOps lifecycle:

In LLMOps the main differences compared to MLOps are model selection and model evaluation involving different processes and metrics. In the initial experimentation phase, the data scientists (or fine-tuners) select the FM that will be used for a specific Generative AI use case.

This often results in the testing and fine-tuning of multiple FMs, some of which may yield comparable results. After the selection of the model(s), prompt engineers are responsible for preparing the necessary input data and expected output for evaluation (e.g. input prompts comprising input data and query) and for defining metrics like similarity and toxicity. In addition to these metrics, data scientists or fine-tuners must validate the outcomes and choose the appropriate FM not only on precision metrics, but also on other capabilities like latency and cost. Then, they can deploy a model to a SageMaker endpoint and test its performance on a small scale. While the experimentation phase may involve a straightforward process, transitioning to production requires customers to automate the process and enhance the robustness of the solution. Therefore, we need to dive deeper into how to automate evaluation, enabling testers to perform efficient evaluation at scale and implementing real-time monitoring of model input and output.

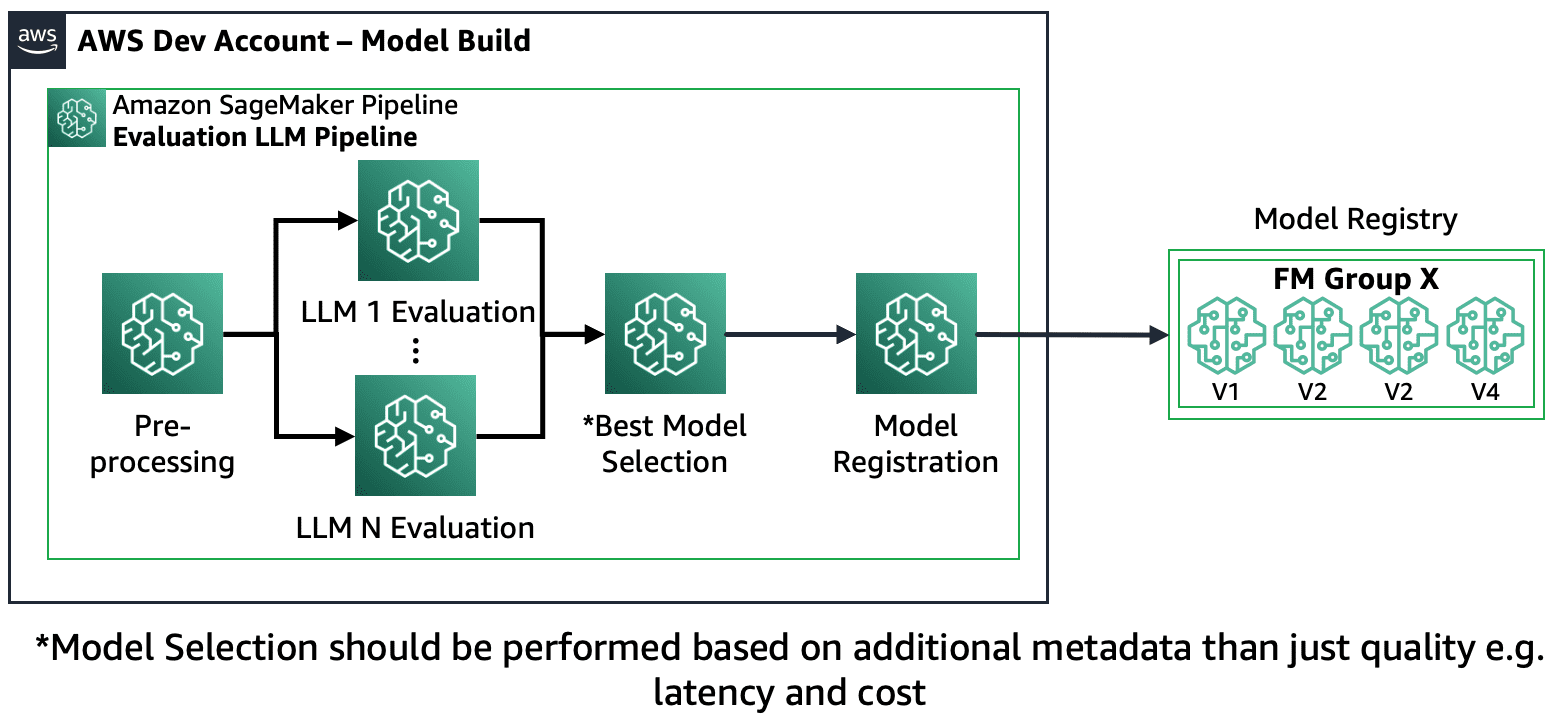

Automate FM evaluation

Amazon SageMaker Pipelines automate all the phases of preprocessing, FM fine-tuning (optionally), and evaluation at scale. Given the models selected during experimentation, prompt engineers need to cover a larger set of cases by preparing many prompts and storing them in a designated storage repository called a prompt catalog. For more information, refer to FMOps/LLMOps: Operationalize generative AI and differences with MLOps. Then, Amazon SageMaker Pipelines can be structured as follows:

Scenario 1 – Evaluate multiple FMs: In this scenario, the FMs can cover the business use case without fine-tuning. The Amazon SageMaker Pipeline consists of the following steps: data pre-processing, parallel evaluation of multiple FMs, model comparison and selection based on accuracy and other properties like cost or latency, and registration of the selected model artifacts and metadata.

The following diagram illustrates this architecture.

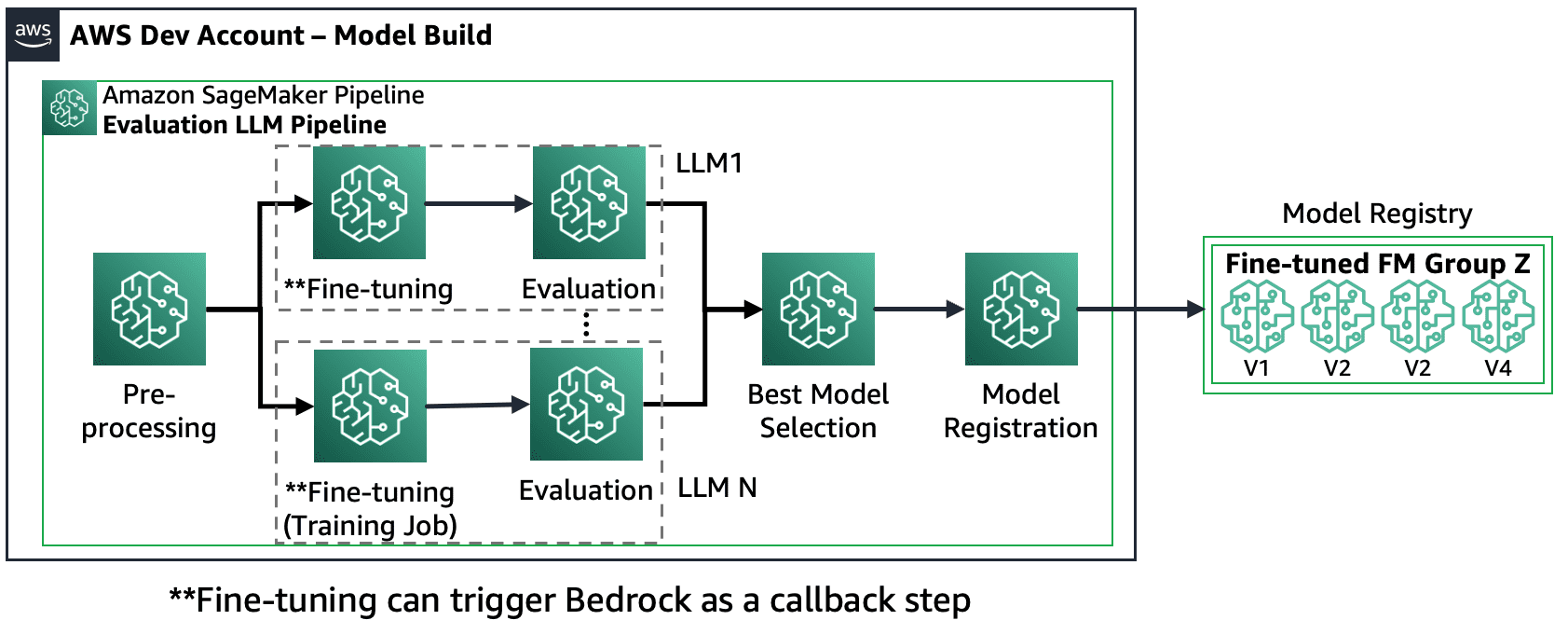

Scenario 2 – Fine-tune and evaluate multiple FMs: In this scenario, the Amazon SageMaker Pipeline is structured much like Scenario 1, but it runs in parallel both fine-tuning and evaluation steps for each FM. The best fine-tuned model will be registered to the Model Registry.

The following diagram illustrates this architecture.

Scenario 3 – Evaluate multiple FMs and fine-tuned FMs: This scenario is a combination of evaluating general purpose FMs and fine-tuned FMs. In this case, the customers want to check if a fine-tuned model can perform better than a general-purpose FM.

The following figure shows the resulting SageMaker Pipeline steps.

Note that model registration follows two patterns: (a) store an open-source model and artifacts or (b) store a reference to a proprietary FM. For more information, refer to FMOps/LLMOps: Operationalize generative AI and differences with MLOps.

Solution overview

To accelerate your journey into LLM evaluation at scale, we created a solution that implements the scenarios using both Amazon SageMaker Clarify and the new Amazon SageMaker Pipelines SDK. The code example, including datasets, source notebooks and SageMaker Pipelines (steps and ML pipeline), is available on GitHub. To develop this example solution, we have used two FMs: Llama2 and Falcon-7B. In this post, our primary focus is on the key elements of the SageMaker Pipeline solution that pertain to the evaluation process.

Evaluation configuration: To standardize the evaluation procedure, we have created a YAML configuration file (evaluation_config.yaml) that contains the necessary details for the evaluation process, including the dataset, the model(s), and the algorithms to be run during the evaluation step of the SageMaker Pipeline. The following example illustrates the configuration file:

```yaml
pipeline:
  name: "llm-evaluation-multi-models-hybrid"

dataset:
  dataset_name: "trivia_qa_sampled"
  input_data_location: "evaluation_dataset_trivia.jsonl"
  dataset_mime_type: "jsonlines"
  model_input_key: "question"
  target_output_key: "answer"

models:
  - name: "llama2-7b-f"
    model_id: "meta-textgeneration-llama-2-7b-f"
    model_version: "*"
    endpoint_name: "llm-eval-meta-textgeneration-llama-2-7b-f"
    deployment_config:
      instance_type: "ml.g5.2xlarge"
      num_instances: 1
    evaluation_config:
      output: '[0].generation.content'
      content_template: [[{"role":"user", "content": "PROMPT_PLACEHOLDER"}]]
      inference_parameters:
        max_new_tokens: 100
        top_p: 0.9
        temperature: 0.6
      custom_attributes:
        accept_eula: True
      prompt_template: "$feature"
    cleanup_endpoint: True

  - name: "falcon-7b"
    ...

  - name: "llama2-7b-finetuned"
    ...
    finetuning:
      train_data_path: "train_dataset"
      validation_data_path: "val_dataset"
      parameters:
        instance_type: "ml.g5.12xlarge"
        num_instances: 1
        epoch: 1
        max_input_length: 100
        instruction_tuned: True
        chat_dataset: False
    ...

algorithms:
  - algorithm: "FactualKnowledge"
    module: "fmeval.eval_algorithms.factual_knowledge"
    config: "FactualKnowledgeConfig"
    target_output_delimiter: ""
```

Evaluation step: The new SageMaker Pipeline SDK provides users the flexibility to define custom steps in the ML workflow using the ‘@step’ Python decorator. Therefore, the users need to create a basic Python script that conducts the evaluation, as follows:

```python
import importlib

import boto3
import markdown
from sagemaker.serializers import JSONSerializer

# parse_s3_url(url) -> (bucket, key) is a helper that is not shown in this listing.


def evaluation(data_s3_path, endpoint_name, data_config, model_config, algorithm_config, output_data_path,):
    from fmeval.data_loaders.data_config import DataConfig
    from fmeval.model_runners.sm_jumpstart_model_runner import JumpStartModelRunner
    from fmeval.reporting.eval_output_cells import EvalOutputCell
    from fmeval.constants import MIME_TYPE_JSONLINES

    # Download the evaluation dataset to the local file system
    s3_client = boto3.client("s3")
    bucket, object_key = parse_s3_url(data_s3_path)
    s3_client.download_file(bucket, object_key, "dataset.jsonl")

    config = DataConfig(
        dataset_name=data_config["dataset_name"],
        dataset_uri="dataset.jsonl",
        dataset_mime_type=MIME_TYPE_JSONLINES,
        model_input_location=data_config["model_input_key"],
        target_output_location=data_config["target_output_key"],
    )

    # Build the request body template: the quoted placeholder becomes $prompt
    evaluation_config = model_config["evaluation_config"]
    content_dict = {
        "inputs": evaluation_config["content_template"],
        "parameters": evaluation_config["inference_parameters"],
    }
    serializer = JSONSerializer()
    serialized_data = serializer.serialize(content_dict)
    content_template = serialized_data.replace('"PROMPT_PLACEHOLDER"', "$prompt")
    print(content_template)

    js_model_runner = JumpStartModelRunner(
        endpoint_name=endpoint_name,
        model_id=model_config["model_id"],
        model_version=model_config["model_version"],
        output=evaluation_config["output"],
        content_template=content_template,
        custom_attributes="accept_eula=true",
    )

    eval_output_all = []
    s3_resource = boto3.resource("s3")
    output_bucket, output_index = parse_s3_url(output_data_path)

    # Run every configured algorithm and store one HTML report per algorithm
    for algorithm in algorithm_config:
        algorithm_name = algorithm["algorithm"]
        module = importlib.import_module(algorithm["module"])
        algorithm_class = getattr(module, algorithm_name)
        algorithm_config_class = getattr(module, algorithm["config"])
        eval_algo = algorithm_class(algorithm_config_class(target_output_delimiter=algorithm["target_output_delimiter"]))
        eval_output = eval_algo.evaluate(model=js_model_runner, dataset_config=config, prompt_template=evaluation_config["prompt_template"], save=True,)

        print(f"eval_output: {eval_output}")
        eval_output_all.append(eval_output)

        html = markdown.markdown(str(EvalOutputCell(eval_output[0])))
        file_index = (output_index + "/" + model_config["name"] + "_" + eval_algo.eval_name + ".html")
        s3_object = s3_resource.Object(bucket_name=output_bucket, key=file_index)
        s3_object.put(Body=html)

    eval_result = {"model_config": model_config, "eval_output": eval_output_all}
    print(f"eval_result: {eval_result}")

    return eval_result
```

SageMaker Pipeline: After creating the necessary steps, such as data preprocessing, model deployment and model evaluation, the user needs to link the steps together by using SageMaker Pipeline SDK. The new SDK automatically generates the workflow by interpreting the dependencies between different steps when a SageMaker Pipeline creation API is invoked as shown in the following example:

```python
import os
import argparse
from datetime import datetime

import sagemaker
from sagemaker.workflow.pipeline import Pipeline
from sagemaker.workflow.function_step import step
from sagemaker.workflow.step_outputs import get_step

# Import the necessary steps
from steps.preprocess import preprocess
from steps.evaluation import evaluation
from steps.cleanup import cleanup
from steps.deploy import deploy

from lib.utils import ConfigParser
from lib.utils import find_model_by_name

if __name__ == "__main__":
    os.environ["SAGEMAKER_USER_CONFIG_OVERRIDE"] = os.getcwd()

    sagemaker_session = sagemaker.session.Session()

    # Define data location either by providing it as an argument or by using the default bucket
    default_bucket = sagemaker_session.default_bucket()
    parser = argparse.ArgumentParser()
    parser.add_argument("-input-data-path", "--input-data-path", dest="input_data_path", default=f"s3://{default_bucket}/llm-evaluation-at-scale-example", help="The S3 path of the input data",)
    parser.add_argument("-config", "--config", dest="config", default="", help="The path to .yaml config file",)
    args = parser.parse_args()

    # Initialize configuration for data, model, and algorithm
    if args.config:
        config = ConfigParser(args.config).get_config()
    else:
        config = ConfigParser("pipeline_config.yaml").get_config()

    evaluation_exec_id = datetime.now().strftime("%Y_%m_%d_%H_%M_%S")
    pipeline_name = config["pipeline"]["name"]
    dataset_config = config["dataset"]  # Get dataset configuration
    input_data_path = args.input_data_path + "/" + dataset_config["input_data_location"]
    output_data_path = (args.input_data_path + "/output_" + pipeline_name + "_" + evaluation_exec_id)

    print("Data input location:", input_data_path)
    print("Data output location:", output_data_path)

    algorithms_config = config["algorithms"]  # Get algorithms configuration

    model_config = find_model_by_name(config["models"], "llama2-7b")
    model_id = model_config["model_id"]
    model_version = model_config["model_version"]
    evaluation_config = model_config["evaluation_config"]
    endpoint_name = model_config["endpoint_name"]

    model_deploy_config = model_config["deployment_config"]
    deploy_instance_type = model_deploy_config["instance_type"]
    deploy_num_instances = model_deploy_config["num_instances"]

    # Construct the steps
    processed_data_path = step(preprocess, name="preprocess")(input_data_path, output_data_path)

    endpoint_name = step(deploy, name=f"deploy_{model_id}")(model_id, model_version, endpoint_name, deploy_instance_type, deploy_num_instances,)

    evaluation_results = step(evaluation, name=f"evaluation_{model_id}", keep_alive_period_in_seconds=1200)(processed_data_path, endpoint_name, dataset_config, model_config, algorithms_config, output_data_path,)

    last_pipeline_step = evaluation_results

    if model_config["cleanup_endpoint"]:
        # Clean up only after the evaluation step has finished
        cleanup_step = step(cleanup, name=f"cleanup_{model_id}")(model_id, endpoint_name)
        get_step(cleanup_step).add_depends_on([evaluation_results])
        last_pipeline_step = cleanup_step

    # Define the SageMaker Pipeline
    pipeline = Pipeline(
        name=pipeline_name,
        steps=[last_pipeline_step],
    )

    # Build and run the SageMaker Pipeline
    pipeline.upsert(role_arn=sagemaker.get_execution_role())
    # pipeline.upsert(role_arn="arn:aws:iam::<...>:role/service-role/AmazonSageMaker-ExecutionRole-<...>")

    pipeline.start()
```

The example implements the evaluation of a single FM by pre-processing the initial data set, deploying the model, and running the evaluation. The generated pipeline directed acyclic graph (DAG) is shown in the following figure.
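One detail of the evaluation step shown earlier is worth spelling out: the request body template is built by serializing the payload to JSON and swapping the quoted `"PROMPT_PLACEHOLDER"` for a bare `$prompt` variable. The sketch below reproduces that transformation with the standard library only. It makes two assumptions: `json.dumps` stands in for `JSONSerializer().serialize`, and the model runner later fills `$prompt` with a JSON-encoded string. The prompt text is made up for illustration.

```python
import json
from string import Template

# Values mirroring the YAML example above.
content_dict = {
    "inputs": [[{"role": "user", "content": "PROMPT_PLACEHOLDER"}]],
    "parameters": {"max_new_tokens": 100, "top_p": 0.9, "temperature": 0.6},
}

# Stand-in for JSONSerializer().serialize(content_dict): JSON text of the payload.
serialized = json.dumps(content_dict)

# Replace the placeholder together with its quotes, leaving a bare $prompt.
content_template = serialized.replace('"PROMPT_PLACEHOLDER"', "$prompt")
print(content_template)

# Assumption: $prompt is substituted with a JSON-encoded string, so quotes or
# newlines inside the prompt cannot break the request body.
prompt = 'Who wrote "Hamlet"?'
payload = Template(content_template).substitute(prompt=json.dumps(prompt))

request = json.loads(payload)  # the filled-in template is valid JSON again
print(request["inputs"][0][0]["content"])
```

Removing the surrounding quotes matters: if only the text inside the quotes were replaced, a prompt containing a double quote would produce an invalid JSON body.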

Following a similar approach and by using and tailoring the example in Fine-tune LLaMA 2 models on SageMaker JumpStart, we created the pipeline to evaluate a fine-tuned model, as shown in the following figure.

By using the previous SageMaker Pipeline steps as “Lego” blocks, we developed the solution for Scenario 1 and Scenario 3, as shown in the following figures. Specifically, the GitHub repository enables the user to evaluate multiple FMs in parallel or to perform more complex evaluation combining evaluation of both foundation and fine-tuned models.

Additional functionalities available in the repository include the following:

- Dynamic evaluation step generation: Our solution generates all the necessary evaluation steps dynamically based on the configuration file to enable users to evaluate any number of models. We have extended the solution to support an easy integration of new types of models, such as Hugging Face or Amazon Bedrock.

- Prevent endpoint redeployment: If an endpoint is already in place, we skip the deployment process. This allows the user to re-use endpoints with FMs for evaluation, resulting in cost savings and reduced deployment time.

- Endpoint cleanup: After the evaluation completes, the SageMaker Pipeline decommissions the deployed endpoints. This functionality can be extended to keep the best model endpoint alive.

- Model selection step: We have added a model selection step placeholder that requires the business logic of the final model selection, including criteria such as cost or latency.

- Model registration step: The best model can be registered into Amazon SageMaker Model Registry as a new version of a specific model group.

- Warm pool: SageMaker managed warm pools let you retain and reuse provisioned infrastructure after the completion of a job to reduce latency for repetitive workloads.
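The model selection step in the list above is a placeholder for business logic. One possible shape for that logic is sketched here, purely as an assumption: hard constraints on accuracy and latency filter the candidates, then the most accurate one wins, with cost as the tie-breaker. `Candidate` and `select_model` are hypothetical names, and the numbers are made up for illustration, not measurements.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    accuracy: float              # evaluation score in [0, 1]
    latency_ms: float            # mean latency per request
    cost_per_1k_requests: float  # estimated serving cost


def select_model(
    candidates: List[Candidate],
    min_accuracy: float = 0.0,
    max_latency_ms: float = float("inf"),
) -> Optional[Candidate]:
    """Filter by hard constraints, then prefer accuracy and break ties on cost."""
    eligible = [
        c for c in candidates
        if c.accuracy >= min_accuracy and c.latency_ms <= max_latency_ms
    ]
    if not eligible:
        return None
    return max(eligible, key=lambda c: (c.accuracy, -c.cost_per_1k_requests))


# Illustrative values only.
candidates = [
    Candidate("model-a", accuracy=0.62, latency_ms=900, cost_per_1k_requests=4.0),
    Candidate("model-b", accuracy=0.62, latency_ms=450, cost_per_1k_requests=2.5),
    Candidate("model-c", accuracy=0.70, latency_ms=2500, cost_per_1k_requests=9.0),
]

best_overall = select_model(candidates)
best_fast = select_model(candidates, max_latency_ms=1000)
print(best_overall.name, best_fast.name)
```

Returning `None` when no candidate passes the constraints lets a pipeline stop before the registration step instead of registering a model that misses the requirements.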

The following figure illustrates these capabilities and a multi-model evaluation example that the users can create easily and dynamically using our solution in this GitHub repository.

We intentionally kept the data preparation out of scope because it will be described in depth in a different post, including prompt catalog designs, prompt templates, and prompt optimization. For more information and related component definitions, refer to FMOps/LLMOps: Operationalize generative AI and differences with MLOps.

Conclusion

In this post, we focused on how to automate and operationalize LLM evaluation at scale using Amazon SageMaker Clarify LLM evaluation capabilities and Amazon SageMaker Pipelines. In addition to theoretical architecture designs, we have example code in this GitHub repository (featuring Llama2 and Falcon-7B FMs) to enable customers to develop their own scalable evaluation mechanisms.

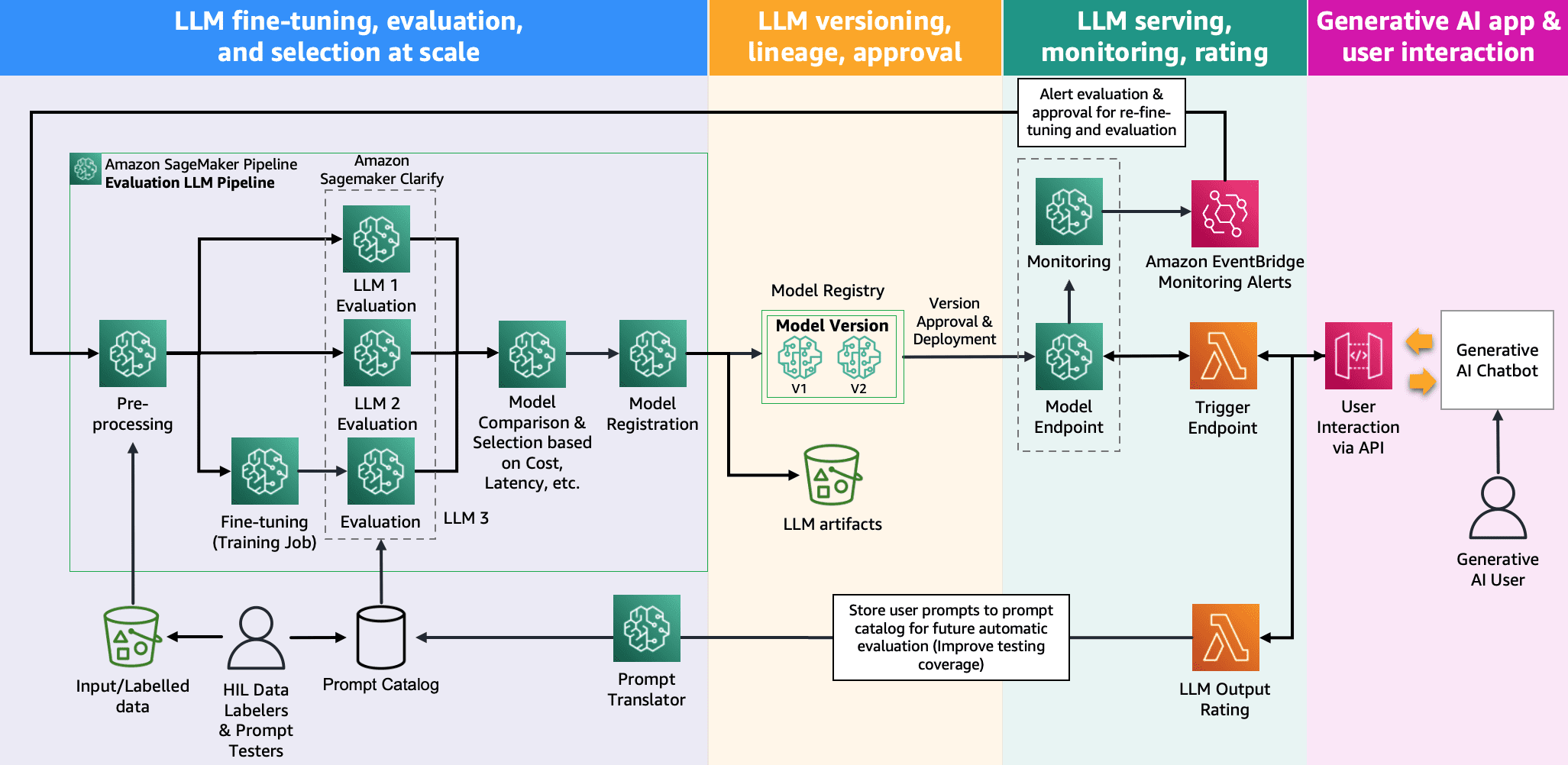

The following illustration shows model evaluation architecture.

In this post, we focused on operationalizing LLM evaluation at scale, as shown on the left side of the illustration. In the future, we’ll focus on developing examples that fulfill the end-to-end lifecycle of FMs to production by following the guidelines described in FMOps/LLMOps: Operationalize generative AI and differences with MLOps. This includes LLM serving, monitoring, and storing of output ratings that will eventually trigger automatic re-evaluation and fine-tuning and, lastly, using humans in the loop to work on labeled data or the prompt catalog.

About the authors

Dr. Sokratis Kartakis is a Principal Machine Learning and Operations Specialist Solutions Architect for Amazon Web Services. Sokratis focuses on enabling enterprise customers to industrialize their Machine Learning (ML) and generative AI solutions by exploiting AWS services and shaping their operating model, i.e. MLOps/FMOps/LLMOps foundations, and transformation roadmap leveraging best development practices. He has spent 15+ years on inventing, designing, leading, and implementing innovative end-to-end production-level ML and AI solutions in the domains of energy, retail, health, finance, motorsports etc.


Jagdeep Singh Soni is a Senior Partner Solutions Architect at AWS based in the Netherlands. He uses his passion for DevOps, GenAI and builder tools to help both system integrators and technology partners. Jagdeep applies his application development and architecture background to drive innovation within his team and promote new technologies.


Dr. Riccardo Gatti is a Senior Startup Solution Architect based in Italy. He is a technical advisor for customers, helping them grow their business by selecting the right tools and technologies to innovate, scale fast and go global in minutes. He has always been passionate about machine learning and generative AI, having studied and applied these technologies across different domains throughout his working career. He is host and editor for the AWS Italian podcast “Casa Startup”, dedicated to stories of startup founders and new technological trends.

