Improve LLM performance with human and AI feedback on Amazon SageMaker for Amazon Engineering

The Amazon EU Design and Construction (Amazon D&C) team is the engineering team designing and constructing Amazon warehouses. The team navigates a large volume of documents and locates the right information to make sure the warehouse design meets the highest standards. In the post A generative AI-powered solution on Amazon SageMaker to help Amazon EU Design and Construction, we presented a question answering bot solution using a Retrieval Augmented Generation (RAG) pipeline with a fine-tuned large language model (LLM) for Amazon D&C to efficiently retrieve accurate information from a large volume of unorganized documents, and provide timely and high-quality services in their construction projects. The Amazon D&C team implemented the solution in a pilot for Amazon engineers and collected user feedback.

In this post, we share how we analyzed the feedback data, identified the accuracy and hallucination limitations of the RAG pipeline, and used the human evaluation scores to train the model through reinforcement learning. To increase the number of training samples for better learning, we also used another LLM to generate feedback scores. This method addressed the RAG limitations and further improved the bot response quality. We present the reinforcement learning process and the benchmarking results to demonstrate the LLM performance improvement. The solution uses Amazon SageMaker JumpStart as the core service for model deployment, fine-tuning, and reinforcement learning.

Collect feedback from Amazon engineers in a pilot project

After developing the solution described in A generative AI-powered solution on Amazon SageMaker to help Amazon EU Design and Construction, the Amazon D&C team deployed the solution and ran a pilot project with Amazon engineers. The engineers accessed the pilot system through a web application built with Streamlit and connected to the RAG pipeline. In the pipeline, we used Amazon OpenSearch Service for the vector database, and deployed a fine-tuned Mistral-7B-Instruct model on Amazon SageMaker.

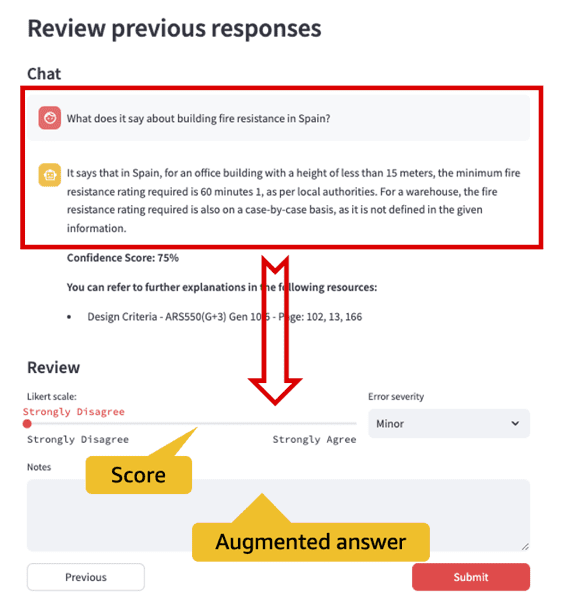

One of the key objectives of the pilot is to collect feedback from Amazon engineers and use the feedback to further reduce LLM hallucinations. To achieve this, we developed a feedback collection module in the UI, as shown in the following figure, and stored the web session information and user feedback in Amazon DynamoDB. Through the feedback collection UI, Amazon engineers can select from five satisfaction levels: strongly disagree, disagree, neutral, agree, and strongly agree, corresponding to the feedback scores from 1–5. They can also provide a better answer to the question or comment on why the LLM response is not satisfactory.

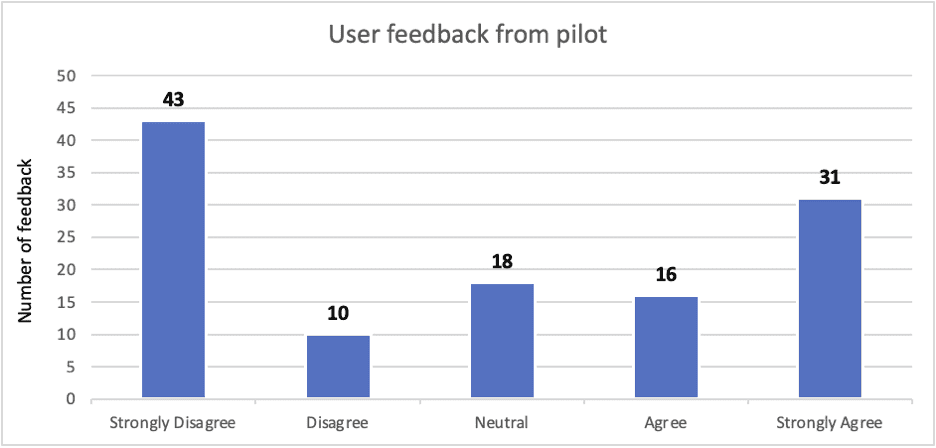
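The feedback record described above can be sketched as a plain dictionary. This is a minimal illustration, not the team's actual schema: the attribute names (`feedback_id`, `session_id`, `user_notes`, and so on) and the helper `build_feedback_item` are hypothetical, and only the five satisfaction levels and their 1 to 5 scores come from the post.

```python
import time
import uuid

# Satisfaction levels offered in the feedback UI, mapped to scores 1-5.
SATISFACTION_SCORES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def build_feedback_item(session_id, question, bot_answer, level, notes=""):
    """Build one feedback record. All attribute names are hypothetical."""
    score = SATISFACTION_SCORES[level.strip().lower()]
    return {
        "feedback_id": str(uuid.uuid4()),  # unique key for the record
        "session_id": session_id,          # web session the feedback belongs to
        "timestamp": int(time.time()),     # epoch seconds
        "question": question,
        "bot_answer": bot_answer,
        "score": score,
        "user_notes": notes,               # optional better answer or comment
    }


item = build_feedback_item(
    session_id="session-001",
    question="How many car parking spaces do we have?",
    bot_answer="Based on the given context, we don't have enough information.",
    level="Strongly Disagree",
    notes="This is specified on page 21 of design criteria section 01 13 10",
)
```

A dictionary of this shape could then be written to a DynamoDB table, for example with the boto3 `Table.put_item(Item=item)` call.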

During the pilot, users provided 118 feedback responses. The following chart shows the distribution from negative to positive. Answers marked with negative feedback (disagree and strongly disagree) account for 45% of the total (53 out of 118). Some of the errors (about 10% of negative feedback and 7.5% of overall responses) can be addressed by user education and prompt engineering. Additionally, we can address the issue with LLM fine-tuning and reinforcement learning, described in the next section.

Some users simply left a note, such as “Great!” for the strongly agree answers, or “Doesn’t answer the question,” for the strongly disagree answers. Other users provided scores and explained in their notes how they judged the LLM answers. In the following example, the user pointed to the specific document and content needed to correct the LLM hallucination.

| Question | How many car parking spaces do we have? |

| Bot Answer | Based on the given context, we don’t have enough information to determine the number of car parking spaces. The context discusses the minimum size of parking spaces and the requirements for external lighting levels, but it does not provide a specific number of parking spaces. **You can refer to further explanations in the following resources:** * ARS GEN 10.0/05.01.02. Design Criteria & Appendices/Performance Package AR Sortable Design Criteria v20.1.1.pdf – page: 10 * ARS GEN 10.0/05.01.02. Design Criteria & Appendices/Performance Package AR Sortable Design Criteria v20.1.1.pdf – page: 79 |

| User score | Strongly Disagree |

| User notes | This is specified on page 21 of design criteria section 01 13 10 |

Improve bot response with supervised fine-tuning and reinforcement learning

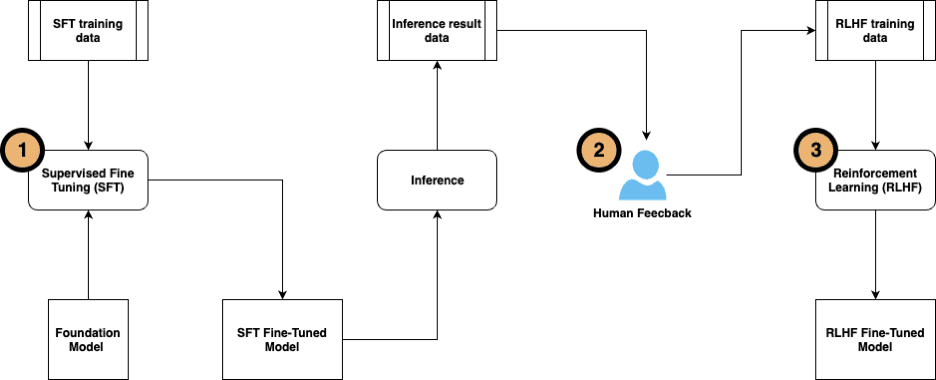

The solution consists of three steps of fine-tuning:

- Conduct supervised fine-tuning using labeled data. This method was described in A generative AI-powered solution on Amazon SageMaker to help Amazon EU Design and Construction.

- Collect user feedback to label the question-answer pairs for further LLM tuning.

- When the training data is ready, further tune the model using reinforcement learning from human feedback (RLHF).

RLHF is widely used throughout generative artificial intelligence (AI) and LLM applications. It incorporates human feedback in the reward function and trains the model with a reinforcement learning algorithm to maximize rewards, which makes the model perform tasks in a way that is more aligned with human goals. The following diagram shows the pipeline of the steps.

We tested the methodology using the Amazon D&C documents with a Mistral-7B model on SageMaker JumpStart.

Supervised fine-tuning

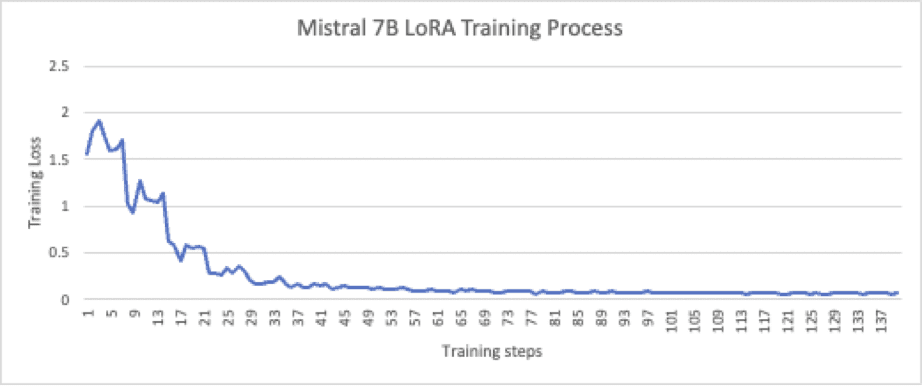

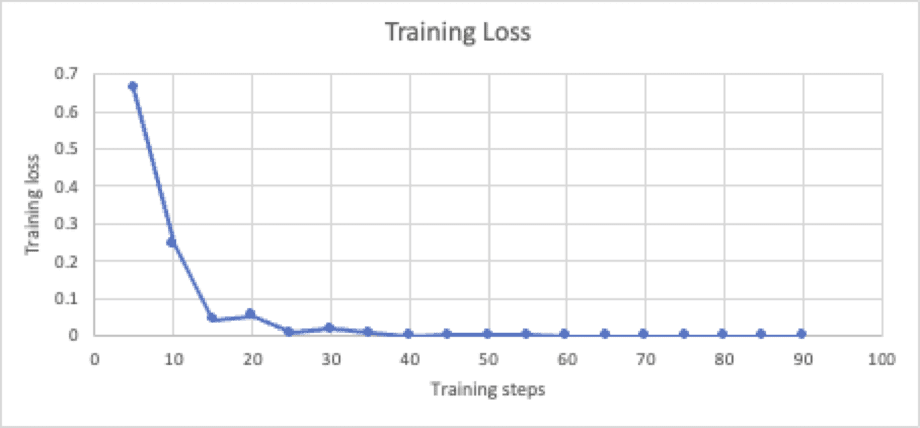

In the previous post, we demonstrated how the fine-tuned Falcon-7B model outperforms the RAG pipeline and improves the quality and accuracy of QA bot response. For this post, we performed supervised fine-tuning on the Mistral-7B model. The supervised fine-tuning used the PEFT/LoRA technique (LoRA_r = 512, LoRA_alpha = 1024) on 436,207,616 parameters (5.68% of the total 7,677,964,288 parameters). The training was conducted on a p3.8x node with 137 samples synthetically generated by an LLM and validated by humans; the process converges well after 20 epochs, as shown in the following figure.
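The trainable parameter count above can be checked with a short calculation. The post does not say which modules carried the LoRA adapters; the sketch below assumes they were attached to the four attention projections (query, key, value, output) in every layer, and uses the published Mistral-7B dimensions (hidden size 4096, 8 key-value heads of size 128, 32 layers). Under that assumption, the arithmetic reproduces the reported figure.

```python
def lora_params(d_in, d_out, r):
    """A LoRA adapter adds two matrices: (d_in x r) and (r x d_out)."""
    return r * (d_in + d_out)


# Published Mistral-7B dimensions (assumed to apply to the model in the post).
HIDDEN = 4096        # hidden size
KV_DIM = 8 * 128     # 8 key-value heads x head dimension 128
LAYERS = 32

# LoRA settings reported in the post.
LORA_R = 512
LORA_ALPHA = 1024

# Assumption: adapters on the q, k, v, and o projections only.
per_layer = (
    lora_params(HIDDEN, HIDDEN, LORA_R)    # query projection
    + lora_params(HIDDEN, KV_DIM, LORA_R)  # key projection
    + lora_params(HIDDEN, KV_DIM, LORA_R)  # value projection
    + lora_params(HIDDEN, HIDDEN, LORA_R)  # output projection
)
trainable = per_layer * LAYERS

TOTAL_PARAMS = 7_677_964_288  # total reported in the post
fraction = trainable / TOTAL_PARAMS
scaling = LORA_ALPHA / LORA_R  # LoRA update is scaled by alpha / r

print(f"trainable={trainable:,} fraction={fraction:.2%} scaling={scaling}")
```

With these settings, the adapter update is scaled by `alpha / r = 2`, the same ratio used later in the reinforcement learning step (64 / 32).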

The fine-tuned model was validated on 274 samples, and the inference results were compared with the reference answers using a semantic similarity score. The score is 0.8100, which is higher than the score of 0.6419 from the traditional RAG.
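A semantic similarity score of this kind is commonly computed as the cosine similarity between an embedding of the model answer and an embedding of the reference answer, averaged over the validation set. The post does not name the embedding model or the exact metric, so the following is only a sketch under that assumption; the vectors are toy values standing in for real sentence embeddings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an empty embedding as dissimilar
    return dot / (norm_a * norm_b)


def mean_similarity(embedding_pairs):
    """Average similarity over (model_answer, reference_answer) embedding pairs."""
    scores = [cosine_similarity(a, b) for a, b in embedding_pairs]
    return sum(scores) / len(scores)


# Toy embeddings: one identical pair and one orthogonal pair.
toy_pairs = [
    ([1.0, 0.0], [1.0, 0.0]),
    ([1.0, 0.0], [0.0, 1.0]),
]
average = mean_similarity(toy_pairs)
```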

Collect human and AI feedback for reinforcement learning

RLHF requires a sufficient number of high-quality training samples labeled by subject matter experts (SMEs). Poor-quality human labels are likely to leave the model performing worse after RLHF training than the original model. SMEs’ time is also a scarce resource in any organization; reviewing hundreds or thousands of LLM responses and providing feedback requires a significant time investment from SMEs that may not have a clear return on investment.

To address this challenge, we adopted the reinforcement learning from AI feedback (RLAIF) method. RLAIF employs an AI assistant (another LLM), rather than humans, to provide evaluation scores. In this hybrid learning approach, the learning agent refines its actions based not only on the interaction with a human but also on feedback provided by another AI model. It is much more scalable to provide sufficient training data for reinforcement learning, and much less subjective because the training is not dependent on particular preferences from a small group of SMEs. The SMEs may still be involved, but only to supervise the process and examine the AI feedback quality. This tremendously reduces the SMEs’ workload, because the AI-generated scores and labels are much less complicated for SMEs to analyze, filter, and sort.

In this use case, we provide the following prompt to an Anthropic Claude 2 model to generate the evaluation scores as the training samples for the reinforcement learning step:

The Claude 2 model generates an evaluation score and a comment on why the score is given, as shown in the following example.

| Question | What is meant by ‘Fit Out Works’ in this context? |

| Reference Answer | Fit Out Works refers to Works carried out under the construction contract by or on behalf of Amazon via the Developer up to First Receive Date from a notional Developer Shell & Core Base-build position. These Works include Amazon’s required Fit-Outs, big-box installations, and custom |

| QA Bot response | Fit Out Works refers to Works carried out under the construction contract by or on behalf of Amazon via the Developer up to First Receive Date from a notional Developer Shell & Core Base-build position. |

| AI score | 4.5 |

| AI comment | The machine answer provides the key information from the standard answer – that Fit Out Works refers to Works carried out under the construction contract by or on behalf of Amazon via the Developer up to First Receive Date from a notional Developer Shell & Core Base-build position. The additional details about including Amazon’s required Fit-Outs, big-box installations, and customizations are relevant and help provide further context, so I’ve scored the machine answer 4.5 out of 5. It captures the essence of the standard answer and provides some useful extra details. |

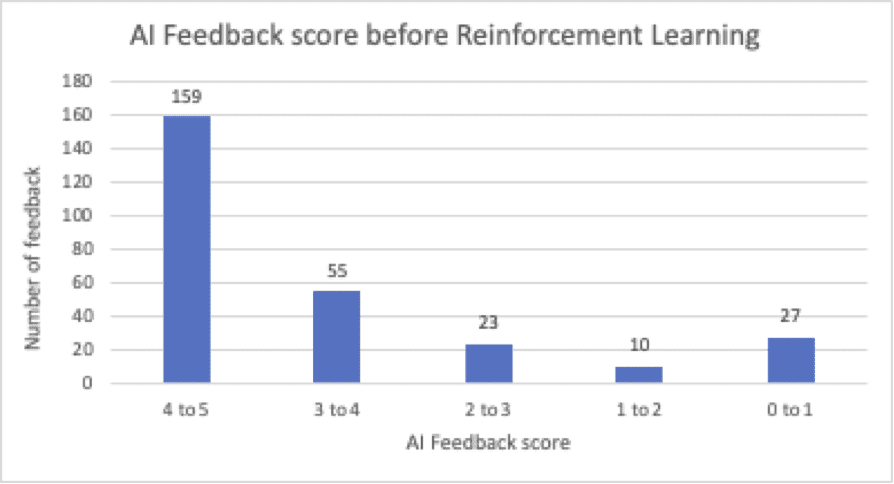

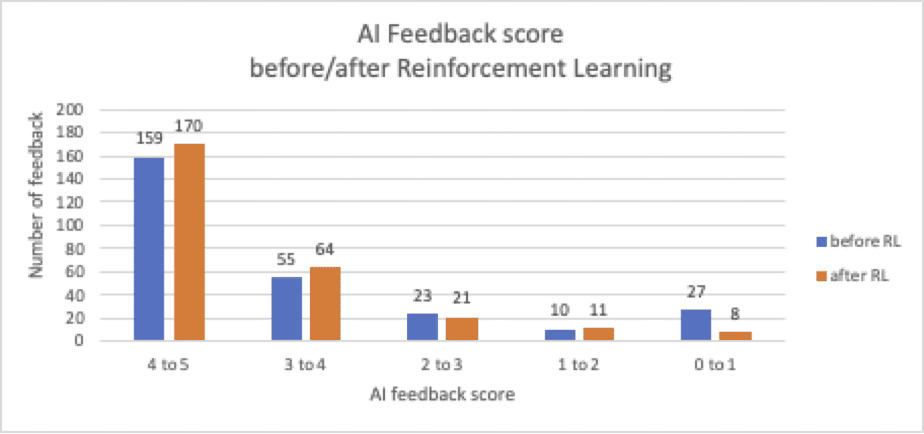

Out of the 274 validation questions, the supervised fine-tuned model generated 159 responses that have AI scores greater than 4. We observed 60 answers with scores lower than 3, so there is room to improve the overall response quality.

The Amazon Engineering SMEs validated this AI feedback and acknowledged the benefits of using AI scores. Without AI feedback, the SMEs would need some time to review and analyze each LLM response to identify the cut-off answers and hallucinations, and to judge whether the LLM is returning correct contents and key concepts. AI feedback provides AI scores automatically and enables the SMEs to use filtering, sorting, and grouping to validate the scores and identify trends in the responses. This reduces the average SME’s review time by 80%.
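The filtering, sorting, and grouping that the SMEs apply to the AI scores can be sketched in a few lines. The record layout (`question`, `ai_score`) and the band names are hypothetical; only the thresholds (scores greater than 4 and lower than 3) come from the post, and the records are toy data.

```python
from collections import Counter


def score_band(score):
    """Group an AI score using the thresholds mentioned in the post."""
    if score > 4:
        return "high"
    if score < 3:
        return "low"
    return "middle"


def triage(records):
    """Count responses per band and list low-scoring ones, worst first."""
    bands = Counter(score_band(r["ai_score"]) for r in records)
    review_queue = sorted(
        (r for r in records if r["ai_score"] < 3),
        key=lambda r: r["ai_score"],
    )
    return bands, review_queue


# Toy records standing in for AI-scored validation responses.
toy_records = [
    {"question": "Q1", "ai_score": 4.5},
    {"question": "Q2", "ai_score": 2.0},
    {"question": "Q3", "ai_score": 3.5},
    {"question": "Q4", "ai_score": 1.0},
]
bands, review_queue = triage(toy_records)
```

Reviewing the `review_queue` first lets an SME concentrate on the responses most likely to contain cut-off answers or hallucinations.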

Reinforcement learning from human and AI feedback

When the training samples are ready, we use the proximal policy optimization (PPO) algorithm to perform reinforcement learning. PPO uses a policy gradient method, which takes small steps to update the policy in the learning process, so that the learning agents can reliably reach the optimal policy network. This makes the training process more stable and reduces the possibility of divergence.
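The "small steps" behavior comes from PPO's clipped surrogate objective, which stops the objective from rewarding policy changes that move the probability ratio too far from 1. The following is a minimal single-sample sketch of that objective; the clip range `epsilon=0.2` is a commonly used default and an assumption here, not a value reported in the post.

```python
def clipped_surrogate(ratio, advantage, epsilon=0.2):
    """PPO clipped objective for one sample.

    ratio: new_policy_prob / old_policy_prob for the sampled action.
    advantage: estimated advantage of that action.
    """
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    # Taking the minimum removes any incentive to push the ratio
    # outside [1 - epsilon, 1 + epsilon].
    return min(ratio * advantage, clipped_ratio * advantage)


# A large ratio with a positive advantage is capped at (1 + epsilon) * advantage.
capped_gain = clipped_surrogate(ratio=1.5, advantage=1.0)

# A small ratio with a negative advantage is capped at (1 - epsilon) * advantage.
capped_loss = clipped_surrogate(ratio=0.5, advantage=-1.0)
```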

During the training, we first use the human- and AI-labeled data to build a reward model, which is used to guide the weight updates in the learning process. For this use case, we select a distilroberta-base reward model and train it on samples in the following format:

[Instruction, Chosen_response, Rejected_response]

The following is an example of a training record.

| Instruction | According to the context, what is specified for inclusive and accessible design? |

| Chosen_response | BREEAM Credit HEA06 – inclusive and accessible design – The building is designed to be fit for purpose, appropriate and accessible by all potential users. An access strategy is developed in line with the BREEAM Check list A3 |

| Rejected_response | The context states that |
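Records in this format can be assembled from scored responses to the same instruction by treating the higher-scored response as chosen and the lower-scored one as rejected. The post does not describe how the pairs were built, so this is only one plausible sketch; the function name and the `min_gap` threshold are hypothetical, and the responses are toy strings.

```python
from itertools import combinations


def build_preference_pairs(instruction, scored_responses, min_gap=1.0):
    """Turn (response, score) tuples into chosen/rejected training records.

    min_gap is a hypothetical threshold that skips pairs whose scores
    are too close to express a clear preference.
    """
    pairs = []
    for (resp_a, score_a), (resp_b, score_b) in combinations(scored_responses, 2):
        if abs(score_a - score_b) < min_gap:
            continue
        if score_a > score_b:
            chosen, rejected = resp_a, resp_b
        else:
            chosen, rejected = resp_b, resp_a
        pairs.append(
            {
                "Instruction": instruction,
                "Chosen_response": chosen,
                "Rejected_response": rejected,
            }
        )
    return pairs


# Toy scored responses for one instruction.
toy_pairs = build_preference_pairs(
    "What is specified for inclusive and accessible design?",
    [("complete answer", 4.5), ("cut-off answer", 3.0), ("good answer", 4.0)],
)
```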

The reward model is trained with a learning rate of 1e-5. As shown in the following chart, the training converges well after 10 epochs.
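Reward models trained on chosen and rejected pairs commonly minimize a pairwise ranking loss, which is small when the chosen response receives a higher reward than the rejected one. The post does not state the loss used, so the following sketch of that common formulation is an assumption; the reward values are toy numbers.

```python
import math


def pairwise_reward_loss(reward_chosen, reward_rejected):
    """Pairwise ranking loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Written as softplus(-margin) in a numerically stable form.
    """
    margin = reward_chosen - reward_rejected
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# The loss is log(2) when the model cannot tell the responses apart,
# and shrinks as the chosen response is scored further above the rejected one.
undecided = pairwise_reward_loss(0.0, 0.0)
correct_order = pairwise_reward_loss(2.0, 0.0)
wrong_order = pairwise_reward_loss(0.0, 2.0)
```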

Then the reward model is used for reinforcement learning. For this use case, we use PEFT/LoRA to accelerate the learning, with LoRA_r set to 32 and LoRA_alpha set to 64. The PPO-based training uses a learning rate of 1.41e-5 for three epochs. Reinforcement training is highly volatile and very sensitive to the label data and feedback data. To observe the learning process, we use the reward_mean metric, the average reward obtained from the environment (here, the reward model).

After RLAIF, the share of bot responses with positive scores (above 3) increases from 78.1% to 85.5%, and the share with negative scores (below 3) decreases from 21.9% to 14.5%. Overall, the average AI feedback score increases by about 8%, from 3.9 to 4.2.
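The reported improvement can be checked with a short calculation using only the figures above: the relative gain in the average score and the change in the share of positive responses in percentage points.

```python
def relative_change(before, after):
    """Relative change from before to after, as a fraction of before."""
    return (after - before) / before


# Average AI feedback score before and after RLAIF.
score_gain = relative_change(3.9, 4.2)

# Share of responses with positive scores, in percentage points.
positive_gain_points = 85.5 - 78.1

print(f"average score gain: {score_gain:.1%}")
print(f"positive share gain: {positive_gain_points:.1f} points")
```

The relative gain works out to about 7.7%, which the post rounds to 8%.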

You can see the improvement in the following example.

| Question | For floor areas being tiled, what screed is needed? |

| Reference | Concrete Screed flooring is applicable only in floor-tiled areas. Refer to drawing series A-32 to see where floor-tiles are applicable. |

| Bot response before RLAIF | Concrete Screed flooring is applicable only in |

| AI score before RLAIF | 3 |

| Bot response after RLAIF | Concrete Screed flooring is applicable only in areas being tiled. Refer to drawing series A-32 to see where tile flooring is applicable. |

| AI score after RLAIF | 4.5 |

Conclusion

This post is a continuation of our work with the Amazon EU Design and Construction team as discussed in A generative AI-powered solution on Amazon SageMaker to help Amazon EU Design and Construction. In this post, we showed how we generated human and AI feedback data to fine-tune the Mistral-7B model with reinforcement learning. The model after RLAIF provided better performance for Amazon Engineering’s question answering bot, improving the AI feedback score by 8%. In the Amazon D&C team’s pilot project, using RLAIF reduced the validation workload for SMEs by an estimated 80%. As the next step, we will scale up this solution by connecting with Amazon Engineering’s data infrastructure, and design a framework to automate the continuous learning process with a human in the loop. We will also further improve the AI feedback quality by tuning the prompt template.

Through this process, we learned how to further improve the quality and performance of question answering tasks through RLHF and RLAIF.

- Human validation and augmentation are essential to provide accurate and responsible outputs from LLMs. The human feedback can be used in RLHF to further improve the model response.

- RLAIF automates the evaluation and learning cycle. The AI-generated feedback is less subjective because it doesn’t depend on a particular preference from a small pool of SMEs.

- RLAIF is more scalable to improve the bot quality through continued reinforcement learning while minimizing the efforts required from SMEs. It is especially useful for developing domain-specific generative AI solutions within large organizations.

- This process should be done on a regular basis, especially when new domain data is available to be covered by the solution.

In this use case, we used SageMaker JumpStart to test multiple LLMs and experiment with multiple LLM training approaches. It significantly accelerates the AI feedback and learning cycle with maximized efficiency and quality. For your own project, you can introduce the human-in-the-loop approach to collect your users’ feedback, or generate AI feedback using another LLM. Then you can follow the three-step process defined in this post to fine-tune your models using RLHF and RLAIF. We recommend experimenting with the methods using SageMaker JumpStart to speed up the process.

About the Authors

Yunfei Bai is a Senior Solutions Architect at AWS. With a background in AI/ML, data science, and analytics, Yunfei helps customers adopt AWS services to deliver business results. He designs AI/ML and data analytics solutions that overcome complex technical challenges and drive strategic objectives. Yunfei has a PhD in Electronic and Electrical Engineering. Outside of work, Yunfei enjoys reading and music.

Elad Dwek is a Construction Technology Manager at Amazon. With a background in construction and project management, Elad helps teams adopt new technologies and data-based processes to deliver construction projects. He identifies needs and solutions, and facilitates the development of the bespoke attributes. Elad has an MBA and a BSc in Structural Engineering. Outside of work, Elad enjoys yoga, woodworking, and traveling with his family.

Luca Cerabone is a Business Intelligence Engineer at Amazon. Drawing from his background in data science and analytics, Luca crafts tailored technical solutions to meet the unique needs of his customers, driving them towards more sustainable and scalable processes. Armed with an MSc in Data Science, Luca enjoys engaging in DIY projects, gardening and experimenting with culinary delights in his leisure moments.
