Using Amazon Rekognition to improve bicycle safety

Cycling is a fun way to stay fit, enjoy nature, and connect with friends and acquaintances. However, riding is becoming increasingly dangerous, especially in situations where cyclists and cars share the road. According to the NHTSA, in the United States an average of 883 people on bicycles are killed in traffic crashes each year, and about 45,000 injury-only crashes are reported annually. While bicycle fatalities account for just over 2% of all traffic fatalities in the United States, as a cyclist, it’s still terrifying to be pushed off the road by a large SUV or truck. To better protect themselves, many cyclists are starting to ride with cameras mounted to the front or back of their bicycle. In this blog post, I will demonstrate a machine learning solution that cyclists can use to better identify close calls.

Many US states and countries throughout the world have some sort of 3-feet law. A 3-feet law requires motor vehicles to provide about 3 feet (1 meter) of distance when passing a bicycle. To promote safety on the road, cyclists are increasingly recording their rides, and if they encounter a dangerous situation where they aren’t given an appropriate safe distance, they can provide a video of the encounter to local law enforcement to help correct behavior. However, finding a single encounter in a recording of a multi-hour ride is time consuming and often requires specialized video skills to generate a short clip of the encounter.

To solve some of these problems, I have developed a simple solution using Amazon Rekognition video analysis. Amazon Rekognition can detect labels (essentially objects) and the timestamp of when that object is detected in a video. Amazon Rekognition can be used to quickly find any vehicles that appear in the video of a recorded ride.
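As a rough illustration of what those labels and timestamps look like in practice, the sketch below filters a label detection result down to vehicle instances. It assumes the response shape of the Amazon Rekognition GetLabelDetection API (a `Labels` list whose items carry a `Timestamp` in milliseconds and a `Label` with `Name` and `Instances`). The `vehicle_detections` helper, the 80% confidence threshold, and the sample data are illustrative assumptions, not code from the solution.

```python
# Vehicle labels named in this post; any other label is ignored.
VEHICLE_LABELS = {"Bus", "Car", "Fire Truck", "Pickup Truck", "Truck", "Limo", "Moving Van"}


def vehicle_detections(response, min_confidence=80.0):
    """Return (timestamp_ms, label_name, bounding_box) for each vehicle instance.

    `response` is assumed to have the shape of a GetLabelDetection result page.
    `min_confidence` is an illustrative threshold, not a value from the post.
    """
    detections = []
    for item in response.get("Labels", []):
        label = item["Label"]
        if label["Name"] not in VEHICLE_LABELS:
            continue
        for instance in label.get("Instances", []):
            if instance["Confidence"] >= min_confidence:
                detections.append((item["Timestamp"], label["Name"], instance["BoundingBox"]))
    return detections


# Hand-written sample in the GetLabelDetection response shape (not real output).
sample_response = {
    "Labels": [
        {"Timestamp": 12500, "Label": {"Name": "Car", "Confidence": 97.1, "Instances": [
            {"Confidence": 96.4,
             "BoundingBox": {"Left": 0.55, "Top": 0.40, "Width": 0.35, "Height": 0.45}}]}},
        {"Timestamp": 12500, "Label": {"Name": "Wheel", "Confidence": 91.0, "Instances": []}},
    ]
}

for timestamp_ms, name, box in vehicle_detections(sample_response):
    print(f"{name} at {timestamp_ms / 1000:.1f}s: {box}")
```

Bounding box values are ratios of the frame width and height, so the same logic works regardless of the camera's resolution.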

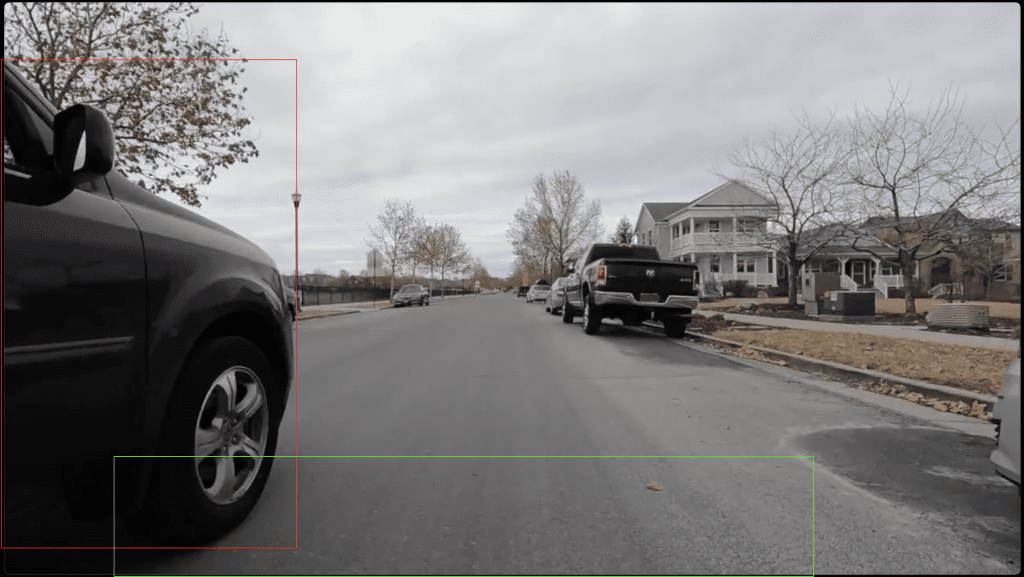

If a cyclist’s camera records a passing vehicle, the solution must then determine if the vehicle is too close to the bicycle, in other words, if the vehicle is within the 3-foot range set by law. If it is, then I want to generate a clip of the encounter, which can be provided to the relevant authorities. The following figure shows the view from a cyclist’s camera with bounding boxes that identify a vehicle that’s passing too close to the bicycle. A box at the bottom of the image shows the approximate 3-foot area around the bicycle.
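One way to picture the "too close" check is as a rectangle overlap test between a vehicle's bounding box and the safe area. The following is a minimal sketch under stated assumptions: boxes use normalized coordinates (fractions of the frame, as Amazon Rekognition bounding boxes do), and the `SAFE_ZONE` values are hypothetical placeholders. The post does not give the real coordinates, which would depend on how the camera is mounted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in normalized frame coordinates (0.0 to 1.0)."""
    left: float
    top: float
    width: float
    height: float

    @property
    def right(self) -> float:
        return self.left + self.width

    @property
    def bottom(self) -> float:
        return self.top + self.height


def boxes_overlap(a: Box, b: Box) -> bool:
    """True when the two rectangles share any interior area."""
    return a.left < b.right and b.left < a.right and a.top < b.bottom and b.top < a.bottom


# Hypothetical safe area at the bottom of the frame; tune for the actual camera.
SAFE_ZONE = Box(left=0.25, top=0.80, width=0.50, height=0.20)

close_pass = Box(left=0.60, top=0.50, width=0.30, height=0.40)    # reaches into the zone
distant_car = Box(left=0.40, top=0.30, width=0.10, height=0.10)   # far up the road

print(boxes_overlap(close_pass, SAFE_ZONE))
print(boxes_overlap(distant_car, SAFE_ZONE))
```

A strict overlap test is the simplest choice. A production check might also require a minimum overlap area or several consecutive frames to reduce false positives.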

Solution overview

The architecture of the solution is shown in the following figure.

The steps of the solution are:

- When a cyclist completes a ride, they upload their MP4 videos from the ride into an Amazon Simple Storage Service (Amazon S3) bucket.

- The bucket has been configured with an S3 event notification that sends object created notifications to an AWS Lambda function.

- The Lambda function starts an AWS Step Functions workflow that begins by calling the Amazon Rekognition Video StartLabelDetection API. The StartLabelDetection API is configured to detect Bus, Car, Fire Truck, Pickup Truck, Truck, Limo, and Moving Van as labels. It ignores other related non-vehicle labels like License Plate, Wheel, Tire, and Car Mirror.

- The Amazon Rekognition API returns a set of JSON identifying the selected labels and timestamps of detected objects.

- This JSON result is sent to a Lambda function to perform the geometry math to determine if a vehicle box overlapped with the bicycle safe area.

- Any detected encounters are passed to AWS Elemental MediaConvert, which can create snippets of video corresponding to the detected encounters, using the CreateJob API.

- MediaConvert creates these videos and uploads them to an S3 bucket.

- Another Lambda function is called to generate pre-signed URLs of the videos. This allows the videos to be temporarily downloaded by anyone with the pre-signed URL.

- Amazon Simple Notification Service (Amazon SNS) sends an email message with links to the pre-signed URLs.
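Two of the steps above can be sketched in Python. The first function builds request parameters for StartLabelDetection that limit results to the vehicle labels listed in this post, using the `GENERAL_LABELS` feature and its `LabelInclusionFilters` setting. The second turns an encounter timestamp into a clip window in the `HH:MM:SS:FF` timecode format that MediaConvert input clipping uses. The function names, the 80% confidence value, the 3-second padding, and the 30 fps frame rate are assumptions for illustration. The solution's actual parameters are not given in the post.

```python
VEHICLE_LABELS = ["Bus", "Car", "Fire Truck", "Pickup Truck", "Truck", "Limo", "Moving Van"]


def label_detection_request(bucket, key, min_confidence=80.0):
    """Build keyword arguments for Rekognition's StartLabelDetection call."""
    return {
        "Video": {"S3Object": {"Bucket": bucket, "Name": key}},
        "MinConfidence": min_confidence,  # illustrative threshold
        "Features": ["GENERAL_LABELS"],
        "Settings": {"GeneralLabels": {"LabelInclusionFilters": VEHICLE_LABELS}},
    }


def to_timecode(ms, fps=30):
    """Convert integer milliseconds to HH:MM:SS:FF, assuming an integer frame rate."""
    total_frames = ms * fps // 1000
    frames = total_frames % fps
    total_seconds = total_frames // fps
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def input_clipping(encounter_ms, padding_ms=3000, fps=30):
    """Return a clip window around an encounter, clamped at the start of the video."""
    start_ms = max(0, encounter_ms - padding_ms)
    end_ms = encounter_ms + padding_ms
    return {
        "StartTimecode": to_timecode(start_ms, fps),
        "EndTimecode": to_timecode(end_ms, fps),
    }


# With boto3 and AWS credentials available, the request could be sent like this:
#   import boto3
#   job = boto3.client("rekognition").start_label_detection(
#       **label_detection_request("my-input-bucket", "ride.MP4"))  # hypothetical names
print(label_detection_request("my-input-bucket", "ride.MP4")["Settings"])
print(input_clipping(12500))
```

The clipping dictionary would sit in the `InputClippings` list of a MediaConvert job input. Timecodes relative to the start of the file assume the input's timecode source is zero-based.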

Prerequisites

To use the solution outlined in this post, you must have:

- An AWS account with appropriate permissions to allow you to deploy AWS CloudFormation stacks

- A video recording in MP4 format with the .MP4 extension using the H.264 codec. The video should be from a front or rear-facing camera, from any off-the-shelf vendor (for example GoPro, DJI, or Cycliq). The maximum file size is 10 GB.
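Before uploading, the file-level prerequisites can be checked locally. This is a small sketch, assuming that 10 GB means 10 × 1024³ bytes and that the extension must match `.MP4` exactly as written above. The post does not say how either is enforced, and the `upload_problems` helper is hypothetical. Verifying the H.264 codec would need a media inspection tool and is left out.

```python
import os
from pathlib import Path

MAX_BYTES = 10 * 1024**3  # assumption: "10 GB" interpreted as 10 GiB


def upload_problems(path, size_bytes=None):
    """Return a list of human-readable problems; an empty list means the file looks fine.

    `size_bytes` can be passed explicitly (useful for testing); otherwise the
    size is read from disk.
    """
    problems = []
    if Path(path).suffix != ".MP4":
        problems.append("file extension is not .MP4")
    if size_bytes is None:
        size_bytes = os.path.getsize(path)
    if size_bytes > MAX_BYTES:
        problems.append("file is larger than 10 GB")
    return problems


print(upload_problems("ride.MP4", size_bytes=4 * 1024**3))
print(upload_problems("ride.mov", size_bytes=11 * 1024**3))
```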

Deploying the solution

- Deploy this solution in your environment or select Launch Stack. The solution deploys in the US East (N. Virginia) us-east-1 AWS Region.

- The Create stack page from the CloudFormation dashboard appears. At the bottom of the page, choose Next.

- On the Specify stack details page, enter the email address where you’d like to receive notifications. Choose Next.

- Select the box that says I acknowledge that AWS CloudFormation might create IAM resources and choose Next. Choose Submit and the installation will begin. The solution takes about 5 minutes to be installed.

- You will receive an email confirming your Amazon SNS subscription. You will not receive emails from the solution unless you confirm your subscription.

- After the stack completes, select the Outputs tab and take note of the bucket name listed under InputBucket.
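If you prefer to read the bucket name programmatically rather than from the console, the stack outputs are also available through the CloudFormation DescribeStacks API. The sketch below extracts a named output from a response of that shape. The `stack_output` helper, the stack name in the comment, and the sample values are hypothetical.

```python
def stack_output(describe_stacks_response, key):
    """Return the value of the stack output named `key`, or raise KeyError."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output["OutputKey"] == key:
                return output["OutputValue"]
    raise KeyError(key)


# With boto3 and AWS credentials available, the response could be fetched like this:
#   import boto3
#   response = boto3.client("cloudformation", region_name="us-east-1").describe_stacks(
#       StackName="my-bike-safety-stack")  # hypothetical stack name
# Hand-written sample in the DescribeStacks response shape (not real output):
response = {
    "Stacks": [
        {"Outputs": [{"OutputKey": "InputBucket", "OutputValue": "example-input-bucket"}]}
    ]
}
print(stack_output(response, "InputBucket"))
```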

Using the solution

To test the solution, I have a sample video where I asked a stunt driver to drive very close to me.

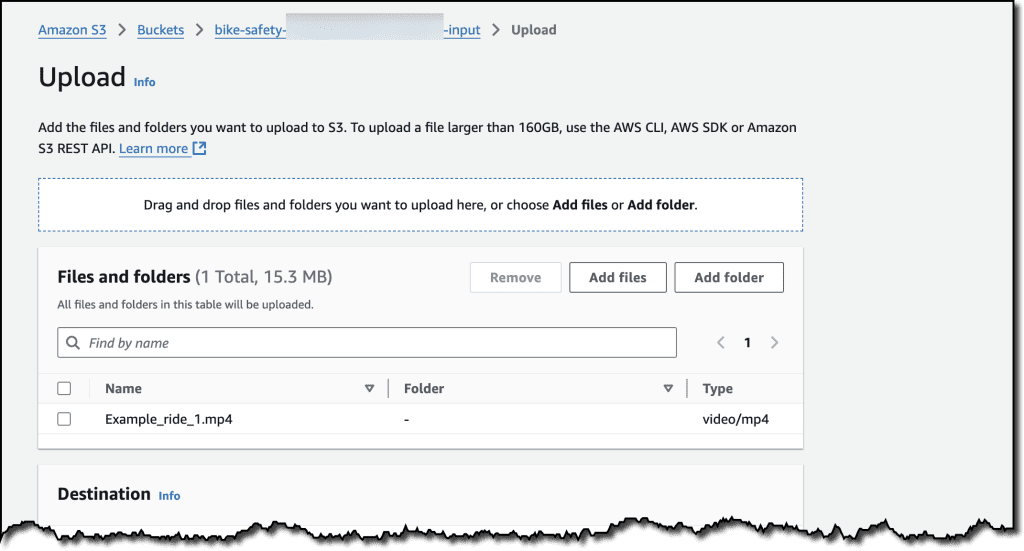

To begin the video processing, I upload the video to the S3 bucket (the InputBucket from the Outputs tab). The bucket has encryption enabled, so under Properties, I choose Specify an encryption key and select Use bucket settings for default encryption. Choosing Upload begins the upload process, as shown in the following figure.
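The same upload can be scripted instead of done in the console. The following is a minimal sketch that takes an S3 client (for example, one created with `boto3.client("s3")`) and uploads the file under its own file name. The `upload_ride` helper and the bucket and path names are hypothetical. No encryption arguments are passed, so the bucket's default encryption settings apply.

```python
from pathlib import Path


def upload_ride(s3_client, bucket, path):
    """Upload a ride video to the input bucket and return the object key used."""
    key = Path(path).name  # assumption: the object key is simply the file name
    s3_client.upload_file(Filename=str(path), Bucket=bucket, Key=key)
    return key


# With boto3 and AWS credentials available:
#   import boto3
#   upload_ride(boto3.client("s3"), "example-input-bucket", "/videos/ride.MP4")
```

Passing the client in as a parameter keeps the function easy to test with a stand-in object.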

After a moment, the Step Functions workflow begins processing. After a few minutes, you will receive an email with links to any encounters identified, as shown in the following figure.
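The links in the email are pre-signed URLs for the generated clips. As a rough sketch of how such links can be produced, the function below calls the S3 client's `generate_presigned_url` method for each clip. The `presigned_clip_urls` helper, the one-hour expiry, and the object names are assumptions. The post does not state how long the solution's links remain valid.

```python
def presigned_clip_urls(s3_client, bucket, keys, expires_in=3600):
    """Return one time-limited download URL per clip object key."""
    return [
        s3_client.generate_presigned_url(
            "get_object",
            Params={"Bucket": bucket, "Key": key},
            ExpiresIn=expires_in,  # seconds; illustrative value
        )
        for key in keys
    ]


# With boto3 and AWS credentials available:
#   import boto3
#   urls = presigned_clip_urls(boto3.client("s3"), "example-output-bucket",
#                              ["encounter-1.mp4", "encounter-2.mp4"])
```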

In my case, it identified two encounters. In the first, I rode too close to a parked car. The second, however, shows the dangerous encounter that I experienced with my stunt driver.

Had this been an actual dangerous encounter, the video clip could be provided to the appropriate authorities to help change behavior and make the road safer for everyone.

Pricing

Because this is a fully serverless solution, you only pay for what you use. With Amazon Rekognition, you pay for the minutes of video that are processed. With MediaConvert, you pay for normalized minutes of video processed, which is each minute of video output with multipliers that apply based on features used. The solution’s use of Lambda, Step Functions, and SNS is minimal and will likely fall under the free tier for most users.

Clean up

To delete the resources created as part of this solution, go to the CloudFormation console, select the stack that was deployed, and choose Delete.

Conclusion

In this example I demonstrated how to use Amazon Rekognition video analysis in a unique scenario. Amazon Rekognition is a powerful computer vision tool that allows you to get insights out of images or video without the overhead of building or managing a machine learning model. Of course, Amazon Rekognition can also handle more advanced use cases than the one I demonstrated here.

I also showed how combining Amazon Rekognition with other serverless services can yield a serverless video processing workflow that, in this case, can help improve the safety of cyclists. While you might not be an avid cyclist, the solution demonstrated here can be extended to a variety of use cases and industries. For example, this solution could be extended to detect wildlife on nature cameras, or you could use Amazon Rekognition streaming video events to detect people and packages in security video.

Get started today by using Amazon Rekognition for your computer vision use case.

About the Author

Mike George is a Principal Solutions Architect at Amazon Web Services (AWS) based in Salt Lake City, Utah. He enjoys helping customers solve their technology problems. His interests include software engineering, security, artificial intelligence (AI), and machine learning (ML).
