Deploying machine learning models as serverless APIs

Machine learning (ML) practitioners gather data, design algorithms, run experiments, and evaluate the results. After you create an ML model, you face another problem: serving predictions at scale cost-effectively.

Serverless technology lets you serve model predictions without managing the underlying infrastructure. Services like AWS Lambda charge only for the time that your code runs, which can significantly reduce costs. Depending on your latency and memory requirements, AWS Lambda can be an excellent choice for deploying ML models. This post shows how to expose your ML model to end users as a serverless API.

| About this blog post | |
|---|---|
| Time to read | 5 minutes |
| Time to complete | 15 minutes |
| Cost to complete | Free Tier or under $1 (at publication time) |
| Learning level | Intermediate (200) |

Prerequisites

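To implement this solution, you must have an AWS account with access to the following services:

- AWS CloudFormation

- AWS Lambda

- AWS CodeBuild

- Amazon S3

- Amazon API Gateway

- Amazon CloudFront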

Overview of solution

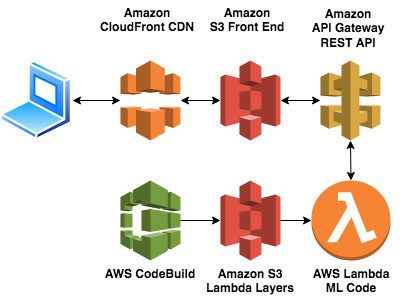

The following diagram illustrates the architecture of this solution.

The solution employs the following services:

- AWS Lambda – Lets you run ML inference code without provisioning or managing servers, and you pay only for the time your code runs. The Lambda function loads a deep learning model and detects objects in an image. Lambda layers contain packaged code that you can import across multiple functions.

- AWS CodeBuild – Runs the commands that install the application's dependencies and upload the Lambda layer packages to Amazon S3. The template builds packages commonly used in ML, including Apache MXNet, Keras-MXNet, scikit-learn, PIL, GluonCV, and GluonNLP. You pay only for the minutes that the build takes to complete.

- Amazon S3 – Stores the layer packages and the front-end site that you use to test a deep learning object detection model.

- Amazon API Gateway – Provides a REST API for your front end to interface with your deep learning Lambda function.

- Amazon CloudFront – Serves your static front-end site from Amazon S3. On this site, you can enter the URL of an image and see which objects the model detects.
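To make the request path concrete (browser to API Gateway to Lambda and back), the following is a minimal sketch of a Python Lambda handler behind the REST API. It assumes the Lambda proxy integration and a front end that POSTs a JSON body with a `url` field. The field name, the `predict` stub, and the permissive CORS header are illustrative assumptions, not the code that the CloudFormation template deploys.

```python
import json


def make_response(status_code, payload):
    """Build a response in the shape the API Gateway Lambda proxy integration expects."""
    return {
        "statusCode": status_code,
        "headers": {
            "Content-Type": "application/json",
            # Assumption: allow any origin so the CloudFront-hosted page can call the API.
            "Access-Control-Allow-Origin": "*",
        },
        "body": json.dumps(payload),
    }


def predict(image_url):
    """Hypothetical stand-in for model inference; a real function would run the
    object detection model on the image and return its detections."""
    return {"url": image_url, "objects": []}


def lambda_handler(event, context):
    """Parse the request body, run inference, and return a JSON response."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return make_response(400, {"error": "request body must be JSON"})
    if not isinstance(body, dict) or not body.get("url"):
        return make_response(400, {"error": "missing 'url' field"})
    return make_response(200, predict(body["url"]))
```

In a real function, the model would typically be loaded at module scope rather than inside `lambda_handler`, so that warm invocations of the same execution environment reuse it instead of reloading it on every request.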

Building the API

Launch the CloudFormation template to build an example serverless ML API in the US East (N. Virginia) Region (us-east-1), or use the launch URL to deploy it in your own Region. You can use this example as a starting point for deploying custom ML models or the model artifacts that many of the built-in Amazon SageMaker algorithms produce. The template also deploys a front end that detects objects in images that users provide.

Launching the API

When you launch the CloudFormation template, you can keep the default settings or customize the deployment by providing different paths for the AWS Lambda inference code and the Amazon S3 front end. In the front-end code, replace the text API_GATEWAY_ENDPOINT_URL with the URL of the API Gateway endpoint that AWS CloudFormation creates.

After you create the CloudFormation stack, you can find the link to the site for detecting custom objects on the Outputs tab on the AWS CloudFormation console.
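If you prefer to read the Outputs tab programmatically, the following sketch calls `describe_stacks` on a boto3 CloudFormation client and turns the stack outputs into a dictionary. The stack name and the output key used when testing it are hypothetical; check the Outputs tab for the keys your stack actually defines.

```python
def stack_outputs(response):
    """Map OutputKey -> OutputValue for the first stack in a describe_stacks response."""
    stacks = response.get("Stacks", [])
    if not stacks:
        raise ValueError("describe_stacks returned no stacks")
    return {o["OutputKey"]: o["OutputValue"] for o in stacks[0].get("Outputs", [])}


def fetch_outputs(stack_name, cfn_client=None):
    """Look up a deployed stack's outputs (requires AWS credentials for a real client)."""
    if cfn_client is None:
        import boto3  # imported lazily so stack_outputs works without the AWS SDK installed

        cfn_client = boto3.client("cloudformation")
    return stack_outputs(cfn_client.describe_stacks(StackName=stack_name))
```

Passing the client in as an argument keeps the parsing logic testable without AWS access.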

The link leads to a simple website where you can enter the URL of an image and see the objects that the model detected. The following screenshot shows an example image with highlighted objects.

In this post, an object detection model from GluonCV performs inference on images. To deploy a custom model, your Lambda function code can download the model, as the preceding object detection example does. Alternatively, you can improve latency by including the model parameter files directly in the AWS Lambda code package, as in the following example.
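The choice between bundling and downloading can be expressed in the function's startup code. The following is a sketch under stated assumptions: it prefers a parameter file bundled in the deployment package and otherwise downloads it from Amazon S3 into `/tmp`, the writable scratch directory in Lambda. The file name `model.params` and the bucket and key arguments are hypothetical.

```python
import os

# Hypothetical name of the model parameter file shipped inside the code package.
BUNDLED_MODEL_FILE = "model.params"


def resolve_model_path(task_root, bucket=None, key=None, tmp_dir="/tmp"):
    """Return a local path to the model parameters.

    A bundled file avoids any network call at cold start. Otherwise the file is
    downloaded from S3 once and reused while the execution environment stays warm.
    """
    bundled = os.path.join(task_root, BUNDLED_MODEL_FILE)
    if os.path.exists(bundled):
        return bundled
    if bucket is None or key is None:
        raise ValueError("no bundled model and no S3 location given")
    local = os.path.join(tmp_dir, os.path.basename(key))
    if not os.path.exists(local):  # a warm environment may already have the file
        import boto3  # only needed on the download path

        boto3.client("s3").download_file(bucket, key, local)
    return local
```

In a handler module, you might call it once at module scope with `os.environ["LAMBDA_TASK_ROOT"]`, the environment variable in which Lambda exposes the function's code directory, so that only cold starts pay for the lookup or download.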

Customizing the API

To deploy a custom model, complete the following steps:

- On the AWS Lambda console, choose the function whose name has the format serverless-ml-Inference-xxxxxxxxxxxx. The function performs inference in fewer than 25 lines of code. Instead of performing object detection, you can upload custom inference code with a custom model trained with an Amazon SageMaker example on GitHub. This model detects whether a sentence has positive or negative sentiment.

- Upload the file inference.zip to the function on the AWS Lambda console. The following screenshot shows the Function code section on the AWS Lambda console.

- Download the front-end interface frontend.html, which presents the sentiment analysis model to users.

- To find the URL for your API Gateway endpoint, on the API Gateway console, choose Machine Learning REST API.

- In the Production Stage Editor section, locate the Invoke URL.

- Open the frontend.html file and replace the text API_GATEWAY_ENDPOINT_URL with the URL for your API Gateway endpoint.
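The placeholder replacement can also be scripted. The following sketch rewrites frontend.html in place. The Invoke URL in the test is a made-up example of the `https://{api-id}.execute-api.{region}.amazonaws.com/{stage}` form that API Gateway uses; the API ID and the stage name are hypothetical.

```python
from pathlib import Path

PLACEHOLDER = "API_GATEWAY_ENDPOINT_URL"


def set_endpoint(html, invoke_url):
    """Return html with every placeholder occurrence replaced by invoke_url."""
    if PLACEHOLDER not in html:
        # Fail loudly rather than silently uploading an unchanged page.
        raise ValueError(f"{PLACEHOLDER} not found; the file may already be updated")
    return html.replace(PLACEHOLDER, invoke_url)


def update_frontend(path, invoke_url):
    """Rewrite the front-end file at path in place."""
    page = Path(path)
    page.write_text(set_endpoint(page.read_text(encoding="utf-8"), invoke_url), encoding="utf-8")
```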

You are now ready to replace the frontend.html file in your S3 bucket with your new file.

- On the Amazon S3 console, choose the bucket whose name has the format serverless-ml-inferenceapp-xxxxxxxxxxxx.

- Drag the new frontend.html file into the bucket.

- Choose Upload.
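The same console steps can be performed with a boto3 S3 client. This sketch assumes that exactly one bucket in the account matches the name prefix shown in the preceding steps and that the page lives at the bucket root as frontend.html, matching the drag-and-drop upload.

```python
def find_bucket(s3_client, prefix="serverless-ml-inferenceapp-"):
    """Return the single bucket whose name starts with prefix."""
    names = [b["Name"] for b in s3_client.list_buckets()["Buckets"] if b["Name"].startswith(prefix)]
    if len(names) != 1:
        raise LookupError(f"expected exactly one bucket starting with {prefix!r}, found {names}")
    return names[0]


def upload_frontend(s3_client, bucket, path="frontend.html", key="frontend.html"):
    """Upload the page with an HTML content type so browsers render it."""
    s3_client.upload_file(path, bucket, key, ExtraArgs={"ContentType": "text/html"})
```

With a real client, usage would look like `s3 = boto3.client("s3")` followed by `upload_frontend(s3, find_bucket(s3))`.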

When you go to the URL for the sample website, it should perform sentiment analysis. See the following screenshot.
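You can also call the API directly, without the web page. The following standard-library sketch assumes that the sentiment function accepts a POST with a JSON body containing a `text` field at the Invoke URL (plus any resource path the front end appends). The field name is an assumption; check frontend.html for the request shape it actually sends.

```python
import json
import urllib.request


def build_request(invoke_url, text):
    """Build a POST request carrying the sentence as JSON (the field name is an assumption)."""
    return urllib.request.Request(
        invoke_url,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze_sentiment(invoke_url, text, timeout=30):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(build_request(invoke_url, text), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```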

Alternatively, now that you have your front-end code and the inference code for AWS Lambda, you can relaunch your CloudFormation template and enter the links to your new files for the BuildCode, FrontendCode, and InferenceCode parameters.
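The relaunch can also be done with the boto3 `create_stack` call. In this sketch, only the three parameter names come from the template; the stack name, template URL, and file links are hypothetical, and `CAPABILITY_IAM` is an assumption based on the template creating IAM roles for its functions (a template with named IAM resources would need `CAPABILITY_NAMED_IAM` instead).

```python
def stack_parameters(build_code, frontend_code, inference_code):
    """Build the Parameters list for create_stack from links to your files."""
    return [
        {"ParameterKey": "BuildCode", "ParameterValue": build_code},
        {"ParameterKey": "FrontendCode", "ParameterValue": frontend_code},
        {"ParameterKey": "InferenceCode", "ParameterValue": inference_code},
    ]


def relaunch(cfn_client, stack_name, template_url, build_code, frontend_code, inference_code):
    """Create a new stack from the template with custom code locations."""
    return cfn_client.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=stack_parameters(build_code, frontend_code, inference_code),
        # Assumption: the template creates IAM roles, which requires this acknowledgment.
        Capabilities=["CAPABILITY_IAM"],
    )
```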

Conclusion

This post showed how to launch the building blocks you need to deploy ML models as serverless APIs. You created a process for building Lambda layers that lets you import ML dependencies into your Lambda functions, and you deployed a sample application with a REST API and a front end. This approach provides a scalable, cost-effective way to serve predictions, so you can focus on building ML models.

Additional Reading

- Seamlessly Scale Predictions with AWS Lambda and MXNet

- How to Deploy Deep Learning Models with AWS Lambda and TensorFlow

- Serving deep learning at Curalate with Apache MXNet, AWS Lambda, and Amazon Elastic Inference

- Build, test, and deploy your Amazon SageMaker inference models to AWS Lambda

About the Author

Anders Christiansen is a Data Scientist with AWS Professional Services, where he helps customers implement machine learning solutions for their businesses.
