Using Amazon Textract with Amazon Augmented AI for processing critical documents

Documents are a primary tool for record keeping, communication, collaboration, and transactions across many industries, including financial, medical, legal, and real estate. For example, millions of mortgage applications and hundreds of millions of tax forms are processed each year. Documents are often unstructured, which means the content’s location or format may vary between two otherwise similar forms. Unstructured documents require time-consuming and complex processes to enable search and discovery, business process automation, and compliance control. When using machine learning (ML) to automate processing of these unstructured documents, you can now build in human reviews to aid in managing sensitive workflows that require human judgment.

Amazon Textract lets you easily extract text and data from virtually any document, and Amazon Augmented AI (Amazon A2I) lets you easily implement human review of machine learning predictions. This post shows how you can take advantage of Amazon Textract and Amazon A2I to automatically extract highly accurate data from both structured and unstructured documents without any ML experience. Amazon Textract is directly integrated with Amazon A2I so you can, for example, easily have humans review low-quality scans or documents with poor handwriting. Amazon A2I provides human reviewers with a web interface with the instructions and tools they need to complete their review tasks.

AWS takes care of building, training, and deploying advanced ML models in a highly available and scalable environment, and you can take advantage of these services with simple-to-use API actions. You can define the conditions under which you need a human reviewer by using the Amazon Textract form data extraction API and Amazon A2I. You can adjust these business conditions at any time to achieve the right balance between accuracy and cost-effectiveness. For example, you can specify that a human review the predictions (or inferences) an ML model makes about the document content if the model is less than 90% confident about its prediction. You can also specify which form fields are important in your documents and send those to human review.
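To make the 90% threshold idea concrete, the following Python sketch scans a list of Amazon Textract Blocks (as returned by the Analyze Document API with the FORMS feature) for any form key whose confidence is below a threshold. It is a local illustration under that assumption, not the logic Amazon A2I uses to evaluate your workflow; the function name needs_human_review and the sample blocks are hypothetical.

```python
from typing import Any


def needs_human_review(blocks: list[dict[str, Any]], threshold: float = 90.0) -> bool:
    """Return True if any form key in a Textract Blocks list is below the threshold.

    Local illustration only: Amazon A2I evaluates the activation conditions of
    your human review workflow itself; this function is not that logic.
    """
    for block in blocks:
        # In AnalyzeDocument FORMS output, keys and values are KEY_VALUE_SET
        # blocks; EntityTypes tells them apart.
        is_key = block.get("BlockType") == "KEY_VALUE_SET" and "KEY" in block.get("EntityTypes", [])
        if is_key and block.get("Confidence", 0.0) < threshold:
            return True
    return False


# Hypothetical sample blocks with only the fields this sketch reads.
sample_blocks = [
    {"BlockType": "KEY_VALUE_SET", "EntityTypes": ["KEY"], "Confidence": 97.5},
    {"BlockType": "KEY_VALUE_SET", "EntityTypes": ["KEY"], "Confidence": 84.2},
]
print(needs_human_review(sample_blocks))  # True: 84.2 is below 90
```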

You can also use Amazon A2I to send a random sample of Amazon Textract predictions to human reviewers. You can use these results to inform stakeholders about the model’s performance and to audit model predictions.

Prerequisites

This post requires you to have completed the following prerequisites:

- Create an IAM role – To create a human review workflow, you need to provide an IAM role that grants Amazon A2I permission to access Amazon S3 both for reading objects to render in a human task UI and for writing the results of the human review. This role also needs an attached trust policy to give Amazon SageMaker permission to assume the role. This allows Amazon A2I to perform actions in accordance with permissions that you attach to the role. For example policies that you can modify and attach to the role you use to create a flow definition, see Enable Flow Definition Creation.

- Configure permission to invoke the Amazon Textract Analyze Document API – You also need to attach the AmazonAugmentedAIFullAccess policy to the IAM user that you use to invoke the Amazon Textract Analyze Document API. For instructions, see Create an IAM User That Can Invoke Amazon Augmented AI Operations.
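As a sketch of these two prerequisites, the following Python builds the trust policy that lets Amazon SageMaker assume the role and attaches the AmazonAugmentedAIFullAccess managed policy to an IAM user. The policy ARN and the helper names are assumptions to verify in the IAM console, iam_client is expected to behave like a boto3 IAM client, and the S3 read/write permissions policy described above is not included.

```python
import json

# Assumed ARN of the AWS managed policy named in the prerequisites.
A2I_FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonAugmentedAIFullAccess"


def sagemaker_trust_policy() -> dict:
    """Trust policy that lets Amazon SageMaker (and so Amazon A2I) assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "sagemaker.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def attach_a2i_policy(iam_client, user_name: str) -> None:
    """Attach AmazonAugmentedAIFullAccess to the IAM user that calls Textract.

    iam_client is expected to behave like boto3.client("iam").
    """
    iam_client.attach_user_policy(UserName=user_name, PolicyArn=A2I_FULL_ACCESS_ARN)


if __name__ == "__main__":
    # JSON for the role's trust relationship (AssumeRolePolicyDocument in the IAM API).
    print(json.dumps(sagemaker_trust_policy(), indent=2))
```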

Step 1: Creating a private work team

A work team is a group of people that you select to review your documents. You can create a work team from a workforce, which is made up of Amazon Mechanical Turk workers, vendor-managed workers, or your own private workers that you invite to work on your tasks. Whichever workforce type you choose, Amazon A2I takes care of sending tasks to workers. For this post, you create a work team using a private workforce and add yourself to the team to preview the Amazon A2I workflow.

To create and manage your private workforce, you can use the Labeling workforces page on the Amazon SageMaker console. In the console, you have the option to create a private workforce by entering worker emails or importing a pre-existing workforce from an Amazon Cognito user pool.

If you already have a work team for Amazon SageMaker Ground Truth, you can use the same work team with Amazon A2I and skip to the following section.

To create your private work team, complete the following steps:

- Navigate to the Labeling workforces page in the Amazon SageMaker console. Ensure that you are in the AWS Region us-east-1 (N. Virginia).

- On the Private tab, choose Create private team.

- Choose Invite new workers by email.

- For this post, enter your email address to work on your document-processing tasks. You can enter a list of up to 50 email addresses, separated by commas, into the Email addresses box.

- Enter an organization name and contact email.

- Choose Create private team.

After you create the private team, you get an email invitation. The following screenshot shows an example email:

After you click the link and change your password, you are registered as a verified worker for this team. The following screenshot shows the updated information on the Private tab.

Your one-person team is now ready, and you can create a human review workflow.

Step 2: Creating a human review workflow

You use a human review workflow to do the following:

- Define the business conditions under which the Amazon Textract predictions of the document content go to a human for review. For example, you can set confidence thresholds for important words in the form that the model must meet. If inference confidence for that word (or form key) falls below your confidence threshold, the form and prediction go for human review.

- Create instructions to help workers complete your document review task.

To create a human review workflow, complete the following steps:

- Navigate to the Human review workflows page in the Augmented AI section of the Amazon SageMaker console: https://console.aws.amazon.com/a2i/home.

- Choose Create human review workflow.

- In the Workflow settings section, for Name, enter a unique workflow name.

- For S3 bucket, enter the S3 bucket where you want to store the human review results. The bucket must be located in the same Region as the workflow. For example, if you create a bucket called a2i-demos, enter the path s3://a2i-demos/.

- For IAM role, choose Create a new role from the drop-down menu. Amazon A2I can create a role automatically for you.

- For S3 buckets you specify, select Specific S3 buckets.

- Enter the S3 bucket you specified earlier; for example, a2i-demos.

- Choose Create. You see a confirmation when role creation is complete, and your role is now pre-populated in the IAM role drop-down menu.

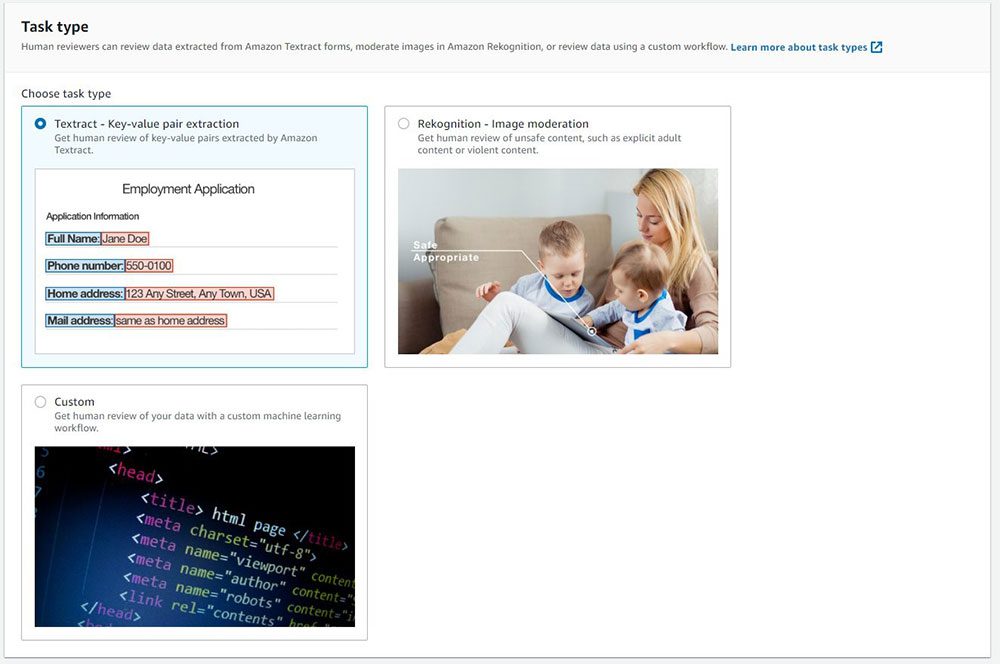

- For Task type, select Amazon Textract – Key-value pair extraction.
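Because the output bucket must be in the same Region as the workflow, you may want to confirm the bucket's Region before creating the workflow. These hypothetical helpers assume a boto3-style S3 client and rely on GetBucketLocation reporting a null LocationConstraint for buckets in us-east-1.

```python
def bucket_region(s3_client, bucket: str) -> str:
    """Return the Region of an S3 bucket.

    GetBucketLocation reports buckets in us-east-1 with a null
    LocationConstraint, so None is mapped to "us-east-1".
    """
    constraint = s3_client.get_bucket_location(Bucket=bucket).get("LocationConstraint")
    return constraint or "us-east-1"


def check_same_region(s3_client, bucket: str, workflow_region: str = "us-east-1") -> None:
    """Raise ValueError if the output bucket is not in the workflow's Region."""
    region = bucket_region(s3_client, bucket)
    if region != workflow_region:
        raise ValueError(f"bucket {bucket!r} is in {region}, workflow is in {workflow_region}")
```

For example, check_same_region(boto3.client("s3"), "a2i-demos") returns quietly when the bucket is in us-east-1, the Region this post uses.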

Next, define the conditions that will trigger a human review.

For this post, you want to trigger a human review if the key Mail Address is identified with a confidence score of less than 99% or not identified by Amazon Textract in the document. For all other keys, a human review starts if a key is identified with a confidence score less than 90%.

- Select Trigger a human review for specific form keys based on the form key confidence score or when specific form keys are missing.

- For Key name, enter Mail Address.

- Set the identification confidence threshold between 0 and 99.

- Set the qualification confidence threshold between 0 and 99.

- Select Trigger a human review for all form keys identified by Amazon Textract with confidence scores in a specific range.

- Set the identification confidence threshold between 0 and 90.

- Set the qualification confidence threshold between 0 and 90.

For model-monitoring purposes, you can also randomly send a specific percentage of pages for human review. This is the third option on the Conditions for invoking human review page: Randomly send a sample of forms to humans for review. This post does not include this condition.
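Behind the console choices, Amazon A2I stores the trigger rules as a JSON activation-conditions document. The following Python sketch builds a document that approximates this post's choices, assuming that identification confidence maps to KeyValueBlockConfidence and qualification confidence to WordBlockConfidence. The "*" wildcard for all keys, the Or grouping, and the absence of a lower bound (the console's "between 0 and ..." ranges may also emit one) are assumptions to check against the Amazon A2I activation-conditions documentation; the optional sampling condition corresponds to the third option that this post does not use.

```python
import json
from typing import Optional


def build_activation_conditions(
    important_key: str = "Mail Address",
    important_key_threshold: float = 99,
    all_keys_threshold: float = 90,
    sample_percentage: Optional[float] = None,
) -> dict:
    """Activation conditions approximating this post's console choices (a sketch)."""
    conditions = [
        # Important key found, but identification (key-value block) or
        # qualification (word block) confidence is below its threshold.
        {
            "ConditionType": "ImportantFormKeyConfidenceCheck",
            "ConditionParameters": {
                "ImportantFormKey": important_key,
                "KeyValueBlockConfidenceLessThan": important_key_threshold,
                "WordBlockConfidenceLessThan": important_key_threshold,
            },
        },
        # Important key not found in the document at all.
        {
            "ConditionType": "MissingImportantFormKey",
            "ConditionParameters": {"ImportantFormKey": important_key},
        },
        # Any key ("*" is assumed to act as a wildcard) below the general threshold.
        {
            "ConditionType": "ImportantFormKeyConfidenceCheck",
            "ConditionParameters": {
                "ImportantFormKey": "*",
                "KeyValueBlockConfidenceLessThan": all_keys_threshold,
                "WordBlockConfidenceLessThan": all_keys_threshold,
            },
        },
    ]
    if sample_percentage is not None:
        # Optional random sampling for model monitoring (not used in this post).
        conditions.append(
            {
                "ConditionType": "Sampling",
                "ConditionParameters": {"RandomSamplingPercentage": sample_percentage},
            }
        )
    # "Or": a human loop starts when any single condition is true.
    return {"Conditions": [{"Or": conditions}]}


if __name__ == "__main__":
    print(json.dumps(build_activation_conditions(), indent=2))
```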

In the next steps, you create a UI template that the worker sees for document review. Amazon A2I provides pre-built templates that workers use to identify key-value pairs in documents.

- In the Worker task template creation section, select Create from a default template.

- For Template name, enter a name.

When you use the default template, you can provide task-specific instructions to help the worker complete your task. For this post, you can enter instructions similar to the default instructions you see in the console.

- Under Task Description, add something similar to “Please review the Key Value pairs in this document”.

- Under Instructions, review the default instructions provided and make modifications as needed.

- In the Workers section, select Private.

- For Private teams, choose the work team you created earlier.

- Choose Create.

You are redirected to the Human review workflows page and see a confirmation message similar to the following screenshot.

- Record your new human review workflow ARN, which you use to configure your human loop in the next section.
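If you later want to script this step, the console workflow corresponds roughly to the Amazon SageMaker CreateFlowDefinition API. The sketch below only assembles the request; it assumes you already have a work team ARN, a worker task template (human task UI) ARN, and an activation-conditions dictionary. The function name, task title, and task description are placeholders, not values the console generates.

```python
import json


def flow_definition_request(
    name: str,
    role_arn: str,
    workteam_arn: str,
    human_task_ui_arn: str,
    s3_output_path: str,
    activation_conditions: dict,
) -> dict:
    """Keyword arguments for CreateFlowDefinition with the Textract task type (a sketch)."""
    return {
        "FlowDefinitionName": name,
        "RoleArn": role_arn,
        "HumanLoopRequestSource": {
            # Built-in integration with Textract AnalyzeDocument forms.
            "AwsManagedHumanLoopRequestSource": "AWS/Textract/AnalyzeDocument/Forms/V1"
        },
        "HumanLoopActivationConfig": {
            "HumanLoopActivationConditionsConfig": {
                # The conditions document is passed as a JSON string.
                "HumanLoopActivationConditions": json.dumps(activation_conditions)
            }
        },
        "HumanLoopConfig": {
            "WorkteamArn": workteam_arn,
            "HumanTaskUiArn": human_task_ui_arn,
            "TaskTitle": "Review key-value pairs",  # placeholder
            "TaskDescription": "Please review the key-value pairs in this document",
            "TaskCount": 1,  # one reviewer per document, matching the one-person team
        },
        "OutputConfig": {"S3OutputPath": s3_output_path},
    }
```

For example, boto3.client("sagemaker").create_flow_definition(**request)["FlowDefinitionArn"] would return the kind of ARN this step asks you to record.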

Step 3: Sending the document to Amazon Textract and Amazon A2I

In this section, you start a human loop using the Amazon Textract API and send a document for human review.

- Upload the following document to your a2i-demos S3 bucket.

The following screenshot shows the file uploaded to the a2i-demos bucket.

- To see the file’s details, choose the file you uploaded.

- To copy the location of the document in Amazon S3, choose Copy path.
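The copied path has the form s3://bucket/key, while the request payload takes the bucket and key as separate values. A small hypothetical helper splits the two; the sample file name below is made up.

```python
def split_s3_uri(uri: str) -> tuple[str, str]:
    """Split a copied path such as s3://a2i-demos/my-form.png into (bucket, key)."""
    prefix = "s3://"
    if not uri.startswith(prefix):
        raise ValueError(f"not an S3 URI: {uri!r}")
    # The bucket is everything before the first "/", the key everything after it.
    bucket, _, key = uri[len(prefix):].partition("/")
    if not bucket or not key:
        raise ValueError(f"S3 URI needs both a bucket and a key: {uri!r}")
    return bucket, key


print(split_s3_uri("s3://a2i-demos/forms/my-form.png"))  # ('a2i-demos', 'forms/my-form.png')
```

Splitting by hand rather than with urllib.parse avoids treating characters such as "?" or "#", which are legal in S3 keys, as URL syntax.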

Call Amazon Textract Analyze Document API operation

This post uses the AWS CLI for the following steps. If you prefer Jupyter, see the sample notebook in the GitHub repo.

You call the Amazon Textract Analyze Document API to do the following:

- Get the inference for the document from Amazon Textract.

- Evaluate the Amazon Textract output against the conditions you specified in the human review workflow.

- Create a human loop (that is, send the document for human review) if your conditions evaluate to true.

For this post, you create the input payload to send to the Amazon Textract Analyze Document API call. In the following code, replace these values:

- {s3_bucket} and {s3_key} with the location of the document image you want to analyze through Amazon Textract and Amazon A2I.

- {human-loop-name} with a unique identifier for this call. This demo uses a2i-textract-demo-1.

- {flow_def_arn} with the human review workflow ARN you created in Step 2.

- Save the preceding text as a JSON file. In this example, the file is saved in /tmp/ and is named textract-a2i-input.json.

- When the input payload is set up, use the AWS CLI to call Amazon Textract. See the following code:
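If you prefer Python to hand-editing JSON, the following sketch assembles an input payload from the placeholder values listed above and saves it to /tmp/textract-a2i-input.json. The field names follow the Analyze Document request syntax as we understand it; the helper names are hypothetical, and the payload requests only the FORMS feature because key-value pairs are what this workflow reviews.

```python
import json
from pathlib import Path


def build_textract_a2i_payload(
    s3_bucket: str, s3_key: str, human_loop_name: str, flow_def_arn: str
) -> dict:
    """AnalyzeDocument request that also asks Amazon A2I to evaluate a human loop."""
    return {
        "Document": {"S3Object": {"Bucket": s3_bucket, "Name": s3_key}},
        # FORMS produces the key-value pairs that the workflow's conditions check.
        "FeatureTypes": ["FORMS"],
        "HumanLoopConfig": {
            "HumanLoopName": human_loop_name,  # must be unique per call
            "FlowDefinitionArn": flow_def_arn,
        },
    }


def save_payload(payload: dict, path: str = "/tmp/textract-a2i-input.json") -> Path:
    """Write the payload as JSON, by default to the path this post uses."""
    out = Path(path)
    out.write_text(json.dumps(payload, indent=2), encoding="utf-8")
    return out
```

The same dictionary can also be passed straight to boto3, for example boto3.client("textract").analyze_document(**payload), instead of going through the CLI.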

The response to this call contains the inference from Amazon Textract and the evaluated activation conditions, which may or may not have led to the creation of a human loop. If a human loop is created, the output contains HumanLoopArn, and you can track its status using the DescribeHumanLoop API. The following code shows the output format of the preceding CLI command:

If a human loop was not created, the output looks like the following and does not contain a HumanLoopArn.

Repeat this step for each document that you want analyzed.
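When processing many documents, you may want to check each response for HumanLoopArn programmatically. The following sketch assumes the ARN sits under HumanLoopActivationOutput in the Analyze Document response and that the loop name is the last path segment of the ARN; both helper names and the sample account ID are hypothetical.

```python
from typing import Any, Optional


def human_loop_arn(response: dict[str, Any]) -> Optional[str]:
    """Return the HumanLoopArn from an AnalyzeDocument response, or None if no loop was created."""
    return response.get("HumanLoopActivationOutput", {}).get("HumanLoopArn")


def human_loop_name(arn: str) -> str:
    """Return the name at the end of a human loop ARN (the part after the last "/")."""
    return arn.rsplit("/", 1)[-1]


example = {
    "HumanLoopActivationOutput": {
        "HumanLoopArn": "arn:aws:sagemaker:us-east-1:111122223333:human-loop/a2i-textract-demo-1"
    }
}
print(human_loop_name(human_loop_arn(example)))  # a2i-textract-demo-1
```

The name can then be passed to DescribeHumanLoop, for example boto3.client("sagemaker-a2i-runtime").describe_human_loop(HumanLoopName=name)["HumanLoopStatus"].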

Step 4: Completing Human Review of your document

To complete a human review of your document, complete the following steps:

- Open the URL in the email you received. You see a list of reviews you are assigned to.

- Select the document you want to review.

- Choose Start working.


You see instructions and the first document to work on. You can use the toolbox to zoom in and out, fit the image, and reposition the document. See the following screenshot.

This UI is specifically designed for document-processing tasks. On the right side of the preceding screenshot, the key-value pairs are automatically pre-filled with the Amazon Textract response. As a worker, you can quickly refer to this sidebar to make sure the key-values are identified correctly (which is the case for this post).

When you select any field on the right, a corresponding bounding box appears, which highlights its location on the document. See the following screenshot.

In the following screenshot, Amazon Textract did not identify Mail Address. The human review workflow identified this as an important field. Even though Amazon Textract didn’t identify it, the worker task UI asks you to enter Mail Address details on the right side.

- When you are satisfied, submit the work.

Step 5: Seeing results in your S3 bucket

After you submit your review of the document, the results are written back to the Amazon S3 output location you specified in your human review workflow. The following are written to a JSON file in this location:

- The human review response

- The original request

- The response from Amazon Textract
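To correlate these parts in code, you might load output.json and pull out its top-level fields. This sketch assumes the file has top-level humanLoopName, humanAnswers (with one answerContent entry per worker), and inputContent keys; how the original request and the Amazon Textract response are nested inside inputContent is not modeled here, so check a real output file before relying on it. The function names are hypothetical.

```python
import json
from typing import Any


def summarize_a2i_output(output: dict[str, Any]) -> dict[str, Any]:
    """Pick out the parts of an A2I output.json described above (assumed schema)."""
    answers = output.get("humanAnswers", [])
    return {
        "human_loop_name": output.get("humanLoopName"),
        # One entry per worker; answerContent holds the reviewed key-value pairs.
        "human_answers": [a.get("answerContent") for a in answers],
        # Assumed to hold the original request and the Textract response.
        "input_content": output.get("inputContent"),
    }


def load_a2i_output(path: str) -> dict[str, Any]:
    """Read a downloaded output.json and summarize it."""
    with open(path, encoding="utf-8") as f:
        return summarize_a2i_output(json.load(f))
```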

You can use this information to track and correlate ML output with human-reviewed output. To see the results, complete the following steps:

- On the Amazon A2I console, choose the human review workflow you created earlier.

- Scroll to the bottom of the description page. In the Human loops section, you can see the associated human loops and their status.

- To see the details and access the output file, choose the name of the human loop.

The output file (output.json) is structured as follows:

Conclusion

This post has merely scratched the surface of what Amazon A2I can do. As of this writing, the service is available in the US East (N. Virginia) Region.

For more information about use cases like content moderation and sentiment analysis, see the Jupyter notebook page on GitHub. For more information about integrating Amazon A2I into any custom ML workflow, see over 60 pre-built worker templates on the GitHub repo and Use Amazon Augmented AI with Custom Task Types.

About the Authors

Anuj Gupta is the Product Manager for Amazon Augmented AI. He focuses on delivering products that make it easier for customers to adopt machine learning. In his spare time, he enjoys road trips and watching Formula 1.

Pranav Sachdeva is a Software Development Engineer in AWS AI. He is passionate about building high-performance distributed systems to solve real-life problems. He is currently focused on innovating and building capabilities in the AWS AI ecosystem that allow customers to give AI the much-needed human aspect.

Talia Chopra is a Technical Writer in AWS specializing in machine learning and artificial intelligence. She has worked with multiple teams in AWS to create technical documentation and tutorials for customers using Amazon SageMaker, Amazon Augmented AI, MXNet, and AutoGluon. In her spare time, she enjoys taking walks in nature and meditating.
