Coding with R on Amazon SageMaker notebook instances

Many AWS customers already use the popular open-source statistical computing and graphics software environment R for big data analytics and data science. Amazon SageMaker is a fully managed service that lets you build, train, and deploy machine learning (ML) models quickly. Amazon SageMaker removes the heavy lifting from each step of the ML process to make it easier to develop high-quality models. In August 2019, Amazon SageMaker announced the availability of the pre-installed R kernel in all Regions. This capability is available out-of-the-box and comes with the reticulate library pre-installed, which offers an R interface for the Amazon SageMaker Python SDK so you can invoke Python modules from within an R script.

This post describes how to train, deploy, and retrieve predictions from an ML model using R on Amazon SageMaker notebook instances. The model predicts abalone age as measured by the number of rings in the shell. You use the reticulate package as an R interface to the Amazon SageMaker Python SDK to make API calls to Amazon SageMaker. The reticulate package translates between R and Python objects, and Amazon SageMaker provides a serverless data science environment to train and deploy ML models at scale.

To follow this post, you should have a basic understanding of R and be familiar with the following tidyverse packages: dplyr, readr, stringr, and ggplot2.

Creating an Amazon SageMaker notebook instance with the R kernel

To create an Amazon SageMaker Jupyter notebook instance with the R kernel, complete the following steps:

You can create the notebook with the instance type and storage size of your choice. In addition, select the AWS Identity and Access Management (IAM) role that allows you to run Amazon SageMaker and grants access to the Amazon Simple Storage Service (Amazon S3) bucket you need for your project. You can also select a VPC, subnets, and Git repositories, if needed. For more information, see Creating IAM Roles.

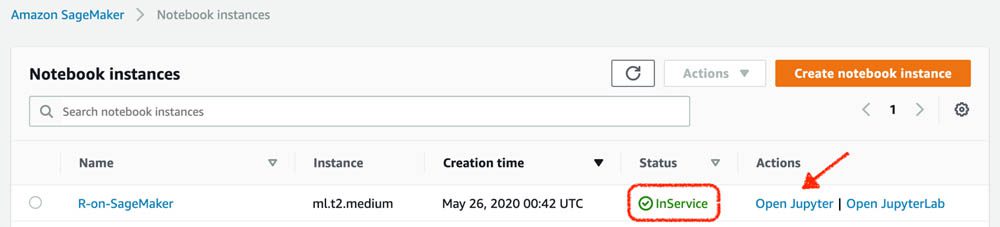

- When the status of the notebook is InService, choose Open Jupyter.

- In the Jupyter environment, from the New drop-down menu, choose R.

The R kernel in Amazon SageMaker is built using the IRKernel package and comes with over 140 standard packages. For more information about creating a custom R environment for Amazon SageMaker Jupyter notebook instances, see Creating a persistent custom R environment for Amazon SageMaker.

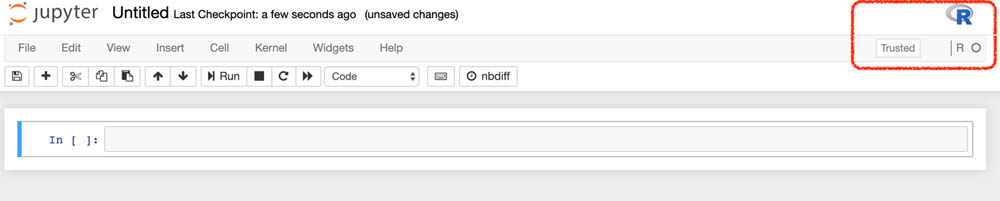

When you create the new notebook, you should see the R logo in the upper right corner of the notebook environment, and also R as the kernel under that logo. This indicates that Amazon SageMaker has successfully launched the R kernel for this notebook.

End-to-end ML with R on Amazon SageMaker

The sample notebook in this post is available on the Using R with Amazon SageMaker GitHub repo.

Load the reticulate library and import the sagemaker Python module. See the following code:
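A minimal sketch of this step, assuming the reticulate package and the sagemaker Python module are both available in the notebook's environment, as they are on the pre-installed R kernel described earlier:

```r
library(reticulate)

# Import the SageMaker Python SDK; the result is an R object wrapping the Python module.
sagemaker <- import('sagemaker')
```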

After the module loads, use the $ notation in R instead of the . notation in Python to use available classes.

Creating and accessing the data storage

The Session class provides operations for working with the following boto3 resources with Amazon SageMaker:

For this use case, you create an S3 bucket using the default bucket for Amazon SageMaker. The default_bucket function creates a unique S3 bucket with the following name: sagemaker-. See the following code:
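A minimal sketch of creating the session and the default bucket. The variable names `session` and `bucket` are choices made for this example, and the calls need valid AWS credentials, which a notebook instance provides through its IAM role:

```r
library(reticulate)
sagemaker <- import('sagemaker')

# Classes and methods are reached with `$` rather than Python's `.`.
session <- sagemaker$Session()

# Returns the name of the default bucket, creating the bucket if it does not exist yet.
bucket <- session$default_bucket()
```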

Specify the IAM role’s ARN to allow Amazon SageMaker to access the S3 bucket. You can use the same IAM role used to create this notebook. See the following code:
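One way to obtain the ARN, sketched under the assumption that the code runs on a notebook instance with an execution role attached (the variable name `role_arn` is a choice made for this example):

```r
library(reticulate)
sagemaker <- import('sagemaker')

# Returns the ARN of the IAM role attached to this notebook instance.
role_arn <- sagemaker$get_execution_role()
```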

Downloading and processing the dataset

The model uses the Abalone dataset from the UCI Machine Learning Repository. Download the data and start the exploratory data analysis. Use tidyverse packages to read the data, plot the data, and transform the data into an ML format for Amazon SageMaker. See the following code:
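The read step could look like the following sketch. Only sex, height, and rings are named in this post; the remaining column names, the helper `read_abalone`, and the download URL in the usage comment are assumptions made for this example:

```r
library(readr)

# Column names are assumptions chosen for this sketch; the raw file has no header row.
abalone_cols <- c('sex', 'length', 'diameter', 'height', 'whole_weight',
                  'shucked_weight', 'viscera_weight', 'shell_weight', 'rings')

# Read a headerless CSV (local path or URL) into a tibble:
# sex as character, seven numeric measurements, rings as integer.
read_abalone <- function(source) {
  read_csv(source, col_names = abalone_cols, col_types = 'cdddddddi')
}

# Usage, with the assumed location of the raw file in the UCI repository:
# abalone <- read_abalone('https://archive.ics.uci.edu/ml/machine-learning-databases/abalone/abalone.data')
# head(abalone)
```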

The following table summarizes the output.

The output shows that sex, which should be a factor, is currently stored as a character data type (F is female, M is male, and I is infant). Change sex to a factor and view the statistical summary of the dataset with the following code:
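A minimal sketch of the conversion. The small data frame here is a hypothetical stand-in so the snippet runs on its own; in the notebook you would apply the same two lines to the full abalone data:

```r
# Tiny hypothetical sample standing in for the full abalone data frame.
abalone <- data.frame(
  sex    = c('F', 'M', 'I', 'M'),
  height = c(0.135, 0.095, 0, 0.125),
  rings  = c(9L, 15L, 8L, 10L),
  stringsAsFactors = FALSE
)

# Convert sex from character to factor so summary() reports counts per level.
abalone$sex <- as.factor(abalone$sex)
summary(abalone)
```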

The following screenshot shows the output of this code snippet, which provides the statistical summary of the abalone dataframe.

The summary shows that the minimum value for height is 0. You can visually explore which abalones have a height equal to 0 by plotting the relationship between rings and height for each value of sex using the following code and the ggplot2 library:
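The plot could be produced along these lines. The sample data is hypothetical, and the choice of `geom_jitter` is an assumption about how to keep overlapping points visible:

```r
library(ggplot2)

# Tiny hypothetical sample standing in for the full abalone data frame.
abalone <- data.frame(
  sex    = factor(c('F', 'M', 'I', 'I', 'M')),
  height = c(0.135, 0.095, 0, 0.08, 0.125),
  rings  = c(9L, 15L, 8L, 7L, 10L)
)

# Rings against height, colored by sex; jitter separates overlapping points.
p <- ggplot(abalone, aes(x = height, y = rings, color = sex)) +
  geom_jitter()
print(p)
```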

The following graph shows the plotted data.

The plot shows multiple outliers: two infant abalones with a height of 0 and a few female and male abalones with greater heights than the rest. To filter out the two infant abalones with a height of 0, enter the following code:
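A minimal sketch of the filter with dplyr, again on a hypothetical sample so that it runs on its own:

```r
library(dplyr)

# Tiny hypothetical sample standing in for the full abalone data frame.
abalone <- data.frame(
  sex    = factor(c('F', 'M', 'I', 'I', 'M')),
  height = c(0.135, 0.095, 0, 0.08, 0.125),
  rings  = c(9L, 15L, 8L, 7L, 10L)
)

# Keep only rows whose height is not 0, dropping the zero-height records.
abalone <- abalone %>% filter(height != 0)
```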

Preparing the dataset for model training

The model needs three datasets: training, testing, and validation. Complete the following steps:

- Convert sex into a dummy variable and move the target, rings, to the first column:

The Amazon SageMaker algorithm requires the target to be in the first column of the dataset.

The following table summarizes the output data.

- Sample 70% of the data for training the ML algorithm and split the remaining 30% into two halves, one for testing and one for validation:
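The two steps above can be sketched as follows. The sample data, the dummy column names (female, male, infant), and the fixed seed are assumptions made for this example; the split uses shuffled row indices, which is one of several ways to get a 70/15/15 partition:

```r
library(dplyr)

set.seed(42)  # fixed seed, added only to make this sketch reproducible

# Hypothetical 20-row sample standing in for the cleaned abalone data.
abalone <- data.frame(
  sex    = rep(c('F', 'M', 'I', 'M', 'F'), times = 4),
  height = seq(5, 24) / 100,
  rings  = 5:24
)

# One-hot encode sex, drop the original column, and move the target first.
abalone <- abalone %>%
  mutate(female = as.integer(sex == 'F'),
         male   = as.integer(sex == 'M'),
         infant = as.integer(sex == 'I')) %>%
  select(-sex) %>%
  select(rings, female, male, infant, everything())

# Shuffle the row indices, then cut them into 70% / 15% / 15%.
n       <- nrow(abalone)
idx     <- sample(n)
n_train <- round(0.7 * n)
n_test  <- floor((n - n_train) / 2)

abalone_train <- abalone[idx[seq_len(n_train)], ]
abalone_test  <- abalone[idx[n_train + seq_len(n_test)], ]
abalone_valid <- abalone[idx[(n_train + n_test + 1):n], ]
```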

You can now upload the training and validation data to Amazon S3 so you can train the model. Please note, for CSV training, the XGBoost algorithm assumes that the target variable is in the first column and that the CSV does not have a header record. For CSV inference, the algorithm assumes that CSV input does not have the label column. The code below does not save the column names to the CSV files.

- Write the training and validation datasets to the local file system in .csv format:

- Upload the two datasets to the S3 bucket into the data key:

- Define the Amazon S3 input types for the Amazon SageMaker algorithm:
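The three steps above can be sketched as small helpers. The helper names are hypothetical; `session`, `bucket`, and `sagemaker` are assumed to come from the earlier steps. `s3_input` is the version 1.x name in the SageMaker Python SDK; version 2.x replaced it with `sagemaker.inputs.TrainingInput`.

```r
library(readr)

# Write a data frame as a headerless CSV, the layout XGBoost expects for CSV input.
write_headerless_csv <- function(df, path) {
  write_csv(df, path, col_names = FALSE)
  path
}

# Upload a local file under the `data` key prefix and return its S3 URI.
# `session` is assumed to be the sagemaker Session created earlier.
upload_to_s3 <- function(session, path, bucket) {
  session$upload_data(path = path, bucket = bucket, key_prefix = 'data')
}

# Wrap an S3 URI as a CSV input channel (SDK v1 `s3_input`).
# `sagemaker` is assumed to be the module imported with reticulate.
csv_input <- function(sagemaker, s3_uri) {
  sagemaker$s3_input(s3_data = s3_uri, content_type = 'csv')
}

# Usage, assuming objects from the earlier steps:
# s3_train <- upload_to_s3(session, write_headerless_csv(abalone_train, 'abalone_train.csv'), bucket)
# s3_valid <- upload_to_s3(session, write_headerless_csv(abalone_valid, 'abalone_valid.csv'), bucket)
# s3_train_input <- csv_input(sagemaker, s3_train)
# s3_valid_input <- csv_input(sagemaker, s3_valid)
```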

Training the model

The Amazon SageMaker algorithm is available via Docker containers. To train an XGBoost model, complete the following steps:

- Specify the training containers in Amazon Elastic Container Registry (Amazon ECR) for your Region. See the following code:

- Define an Amazon SageMaker Estimator, which can train any supplied algorithm that has been containerized with Docker. When creating the Estimator, use the following arguments:

- image_name – The container image to use for training

- role – The Amazon SageMaker service role

- train_instance_count – The number of EC2 instances to use for training

- train_instance_type – The type of EC2 instance to use for training

- train_volume_size – The size in GB of the Amazon Elastic Block Store (Amazon EBS) volume for storing input data during training

- train_max_run – The timeout in seconds for training

- input_mode – The input mode that the algorithm supports

- output_path – The Amazon S3 location for saving the training results (model artifacts and output files)

- output_kms_key – The AWS Key Management Service (AWS KMS) key for encrypting the training output

- base_job_name – The prefix for the name of the training job

- sagemaker_session – The Session object that manages interactions with the Amazon SageMaker API

The equivalent to None in Python is NULL in R.

- Specify the XGBoost hyperparameters and fit the model.

- Set the number of rounds for training to 100, which is the default value when using the XGBoost library outside of Amazon SageMaker.

- Specify the input data and job name based on the current timestamp.
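The training steps above can be sketched as two helpers. The helper names, the instance type, the volume size, the timeout, the `output` prefix, and the job-name prefix are illustrative assumptions. Argument names follow version 1.x of the SageMaker Python SDK, matching the list above; version 2.x renamed several of them (for example, `image_name` became `image_uri`, `train_instance_count` became `instance_count`, and `get_image_uri` was replaced by `image_uris.retrieve`).

```r
# Build an Estimator for the built-in XGBoost container (SDK v1 argument names).
# `sagemaker`, `session`, `role_arn`, and `bucket` are assumed to come from earlier steps.
make_xgb_estimator <- function(sagemaker, session, role_arn, bucket) {
  container <- sagemaker$amazon$amazon_estimator$get_image_uri(
    session$boto_region_name, 'xgboost')

  sagemaker$estimator$Estimator(
    image_name           = container,
    role                 = role_arn,
    train_instance_count = 1L,       # the L suffix makes the value a Python int
    train_instance_type  = 'ml.m5.large',
    train_volume_size    = 30L,
    train_max_run        = 3600L,
    input_mode           = 'File',
    output_path          = paste0('s3://', bucket, '/output'),
    output_kms_key       = NULL,     # NULL reaches Python as None
    base_job_name        = NULL,
    sagemaker_session    = NULL
  )
}

# Set the hyperparameters and start training with a timestamp-based job name.
train_xgb <- function(estimator, train_input, valid_input) {
  estimator$set_hyperparameters(num_round = 100L)
  job_name <- paste0('sagemaker-train-xgboost-', format(Sys.time(), '%H-%M-%S'))
  estimator$fit(inputs = list(train = train_input, validation = valid_input),
                job_name = job_name)
  job_name
}

# Usage, assuming objects from the earlier steps:
# estimator <- make_xgb_estimator(sagemaker, session, role_arn, bucket)
# train_xgb(estimator, s3_train_input, s3_valid_input)
```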

When training is complete, Amazon SageMaker copies the model binary (a gzip tarball) to the specified Amazon S3 output location. Get the full Amazon S3 path with the following code:
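A minimal sketch, assuming `estimator` is the fitted Estimator from the training step (the helper name is hypothetical):

```r
# Return the S3 URI of the model artifact produced by the training job.
get_model_artifact <- function(estimator) {
  estimator$model_data
}

# Usage: get_model_artifact(estimator)
```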

Deploying the model

Amazon SageMaker lets you deploy your model by providing an endpoint that you can invoke by a secure and simple API call using an HTTPS request. To deploy your trained model to an ml.t2.medium instance, enter the following code:
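A minimal sketch of the deployment call, assuming `estimator` is the fitted Estimator from the training step. The helper name and the single-instance count are choices made for this example:

```r
# Deploy the trained model to one ml.t2.medium instance and return the predictor.
deploy_model <- function(estimator) {
  estimator$deploy(initial_instance_count = 1L,
                   instance_type = 'ml.t2.medium')
}

# Usage: model_endpoint <- deploy_model(estimator)
```

Deployment creates a running endpoint that is billed until you delete it.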

Generating predictions with the model

You can now use the test data to generate predictions. Complete the following steps:

- Configure the endpoint to accept comma-separated text by specifying text/csv and csv_serializer for the endpoint. See the following code:

- Remove the target column and convert the first 500 observations to a matrix with no column names:

This post uses 500 observations because that many rows do not exceed the endpoint limitation.

- Generate predictions from the endpoint and convert the returned comma-separated string:

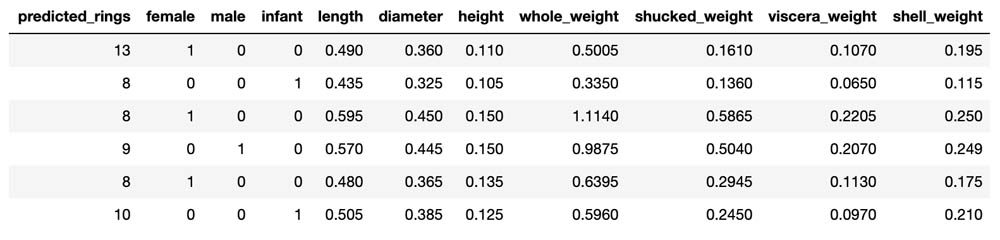

- Column-bind the predicted rings to the test data:
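The prediction steps above can be sketched as one helper. `predict_rings` is a hypothetical name, and `sagemaker` and `model_endpoint` are assumed to come from the earlier steps. `csv_serializer` is the version 1.x name in the SageMaker Python SDK (version 2.x uses `sagemaker.serializers.CSVSerializer`). The check for raw bytes is a precaution, in case the reply does not arrive as a character string:

```r
# Send feature rows to the endpoint as CSV and parse the comma-separated reply.
predict_rings <- function(sagemaker, model_endpoint, test_data, n = 500) {
  model_endpoint$content_type <- 'text/csv'
  model_endpoint$serializer   <- sagemaker$predictor$csv_serializer

  # Drop the target column, keep the first n rows, and strip all dimnames.
  features <- test_data[, names(test_data) != 'rings', drop = FALSE]
  features <- unname(as.matrix(head(features, n)))

  reply <- model_endpoint$predict(features)
  if (is.raw(reply)) reply <- rawToChar(reply)
  as.numeric(strsplit(reply, ',')[[1]])
}

# Usage, assuming objects from the earlier steps:
# predictions  <- predict_rings(sagemaker, model_endpoint, abalone_test)
# abalone_test <- cbind(predicted_rings = predictions, head(abalone_test, 500))
```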

The following table shows the output of the code, which adds predicted_rings to the abalone_test table. Your output will differ from this because the train/validation/test split in step 2 of the “Preparing the dataset for model training” section is random.

Deleting the endpoint

When you’re done with the model, delete the endpoint to avoid incurring deployment costs. See the following code:
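A minimal sketch of the cleanup, assuming `session` and `model_endpoint` come from the earlier steps. The helper name is hypothetical, and the `endpoint` attribute is the version 1.x name in the SageMaker Python SDK (version 2.x calls it `endpoint_name`):

```r
# Delete the endpoint behind a predictor so it stops accruing charges.
delete_model_endpoint <- function(session, model_endpoint) {
  session$delete_endpoint(model_endpoint$endpoint)
}

# Usage: delete_model_endpoint(session, model_endpoint)
```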

Conclusion

This post walked you through an end-to-end ML project, from collecting data, to data processing, training the model, deploying the model as an endpoint, and finally making inferences using the deployed model. For more information about creating a custom R environment for Amazon SageMaker Jupyter notebook instances, see Creating a persistent custom R environment for Amazon SageMaker. For example notebooks for R on Amazon SageMaker, see the Amazon SageMaker examples GitHub repository. You can visit R User Guide to Amazon SageMaker on the developer guide for more details on ways of leveraging Amazon SageMaker features using R. In addition, visit the AWS Machine Learning Blog to read the latest news and updates about Amazon SageMaker and other AWS AI and ML services.

About the author

Nick Minaie is an Artificial Intelligence and Machine Learning (AI/ML) Specialist Solution Architect, helping customers on their journey to well-architected machine learning solutions at scale. In his spare time, Nick enjoys abstract painting and loves to explore nature.

