Developing NER models with Amazon SageMaker Ground Truth and Amazon Comprehend

Named entity recognition (NER) involves sifting through text data to locate noun phrases called named entities and categorizing each with a label, such as person, organization, or brand. For example, in the statement “I recently subscribed to Amazon Prime,” Amazon Prime is the named entity and can be categorized as a brand. Building an accurate in-house custom entity recognizer can be a complex process, and requires preparing large sets of manually annotated training documents and selecting the right algorithms and parameters for model training.

This post explores an end-to-end pipeline to build a custom NER model using Amazon SageMaker Ground Truth and Amazon Comprehend.

Amazon SageMaker Ground Truth enables you to efficiently and accurately label the datasets required to train machine learning (ML) systems. Its built-in labeling workflows take human labelers step by step through tasks and provide the tools needed to build annotated NER datasets.

Amazon Comprehend is a natural language processing (NLP) service that uses machine learning to find insights and relationships in text. Amazon Comprehend processes any text file in UTF-8 format. It develops insights by recognizing the entities, key phrases, language, sentiments, and other common elements in a document. To use its custom entity recognition feature, you need to provide a dataset for model training, in one of two forms: a set of annotated documents, or a list of entities and their type label (such as PERSON) together with a set of documents containing those entities. The service automatically tests for the best and most accurate combination of algorithms and parameters to use for model training.

The following diagram illustrates the solution architecture.

The end-to-end process is as follows:

- Upload a set of text files to Amazon Simple Storage Service (Amazon S3).

- Create a private work team and a NER labeling job in Ground Truth.

- The private work team labels all the text documents.

- On completion, Ground Truth creates an augmented manifest named output.manifest in Amazon S3.

- Parse the augmented output manifest file and create the annotations (CSV) and documents (text) files that Amazon Comprehend accepts. We mainly focus on a pipeline that converts the augmented output manifest file automatically; you can deploy this pipeline with one click using an AWS CloudFormation template. In addition, we show how to use the convertGroundtruthToComprehendERFormat.sh script from the Amazon Comprehend GitHub repo to perform the same conversion. Although you need only one method for the conversion, we highly encourage you to explore both options.

- On the Amazon Comprehend console, launch a custom NER training job, using the dataset generated by the AWS Lambda function.

To minimize the time spent on manual annotations while following this post, we recommend using the small accompanying sample corpus. Although this doesn’t necessarily lead to performant models, you can quickly experience the whole end-to-end process, after which you can experiment with a larger corpus or even replace the Lambda function with another AWS service.

Setting up

You need to install the AWS Command Line Interface (AWS CLI) on your computer. For instructions, see Installing the AWS CLI.

You create a CloudFormation stack that creates an S3 bucket and the conversion pipeline. Although the pipeline converts the manifest automatically, you can also run the conversion script directly by following the setup instructions described later in this post.

Setting up the conversion pipeline

This post provides a CloudFormation template that performs much of the initial setup work for you:

- Creates a new S3 bucket

- Creates a Lambda function with Python 3.8 runtime and a Lambda layer for additional dependencies

- Configures the S3 bucket to auto-trigger the Lambda function when output.manifest files arrive

The pipeline source code is hosted in the GitHub repo. To deploy from the template, in another browser window or tab, sign in to your AWS account in us-east-1.

Launch the following stack:

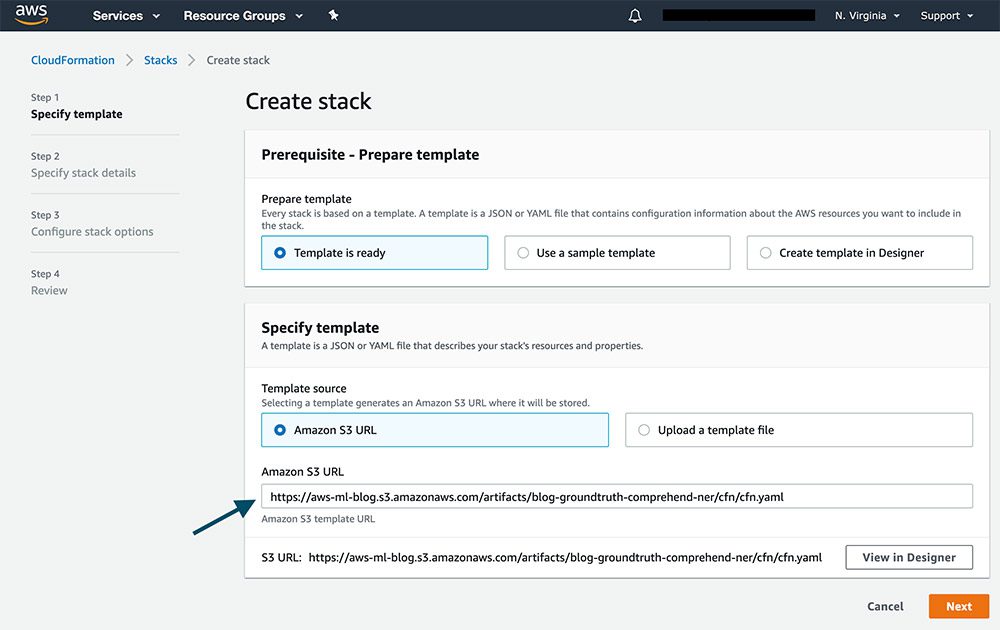

Complete the following steps:

- For Amazon S3 URL, enter the URL for the template.

- Choose Next.

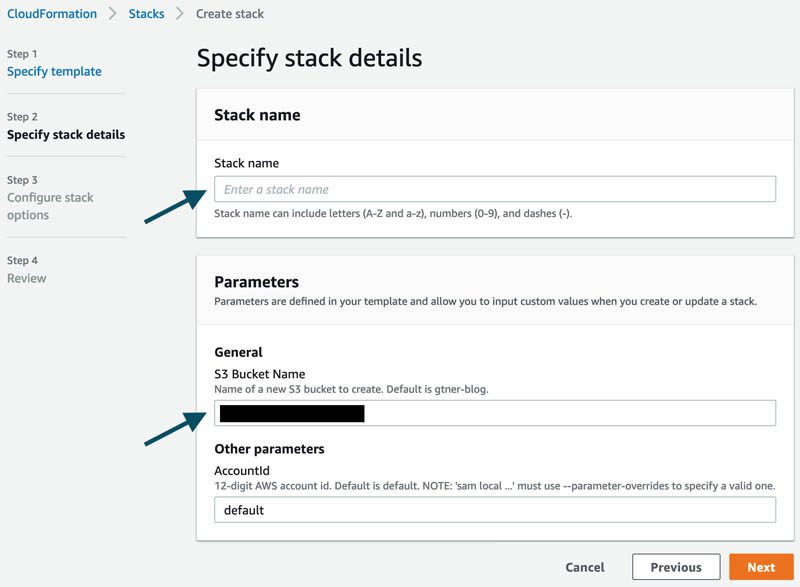

- For Stack name, enter a name for your stack.

- For S3 Bucket Name, enter a name for your new bucket.

- Leave the remaining parameters at their default.

- Choose Next.

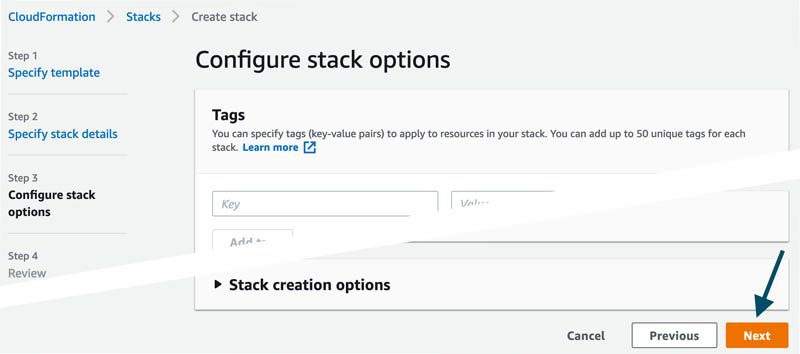

- On the Configure stack options page, choose Next.

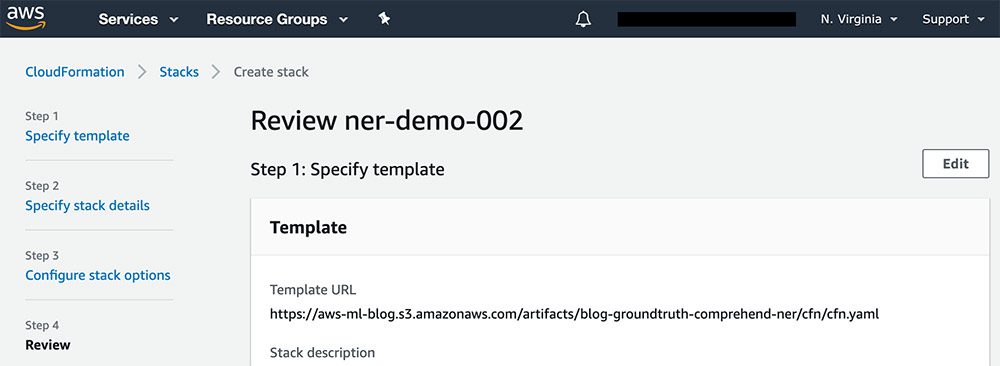

- Review your stack details.

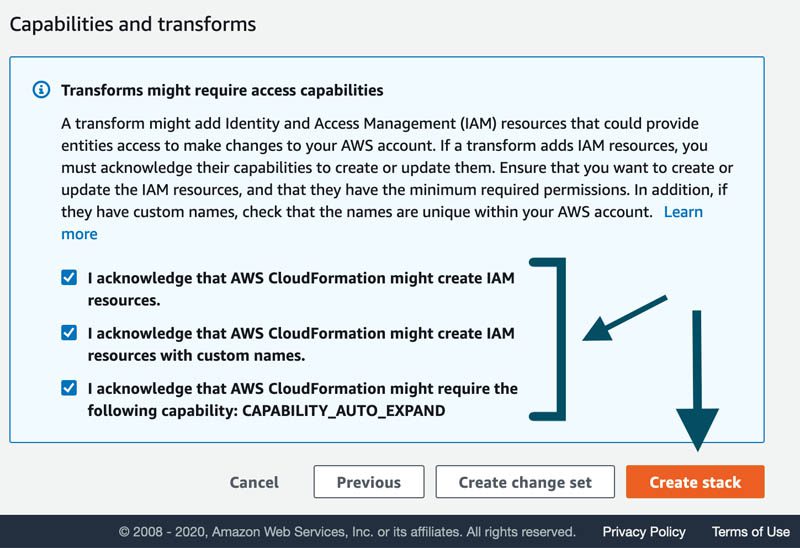

- Select the three check-boxes acknowledging that AWS CloudFormation might create additional resources and capabilities.

- Choose Create stack.

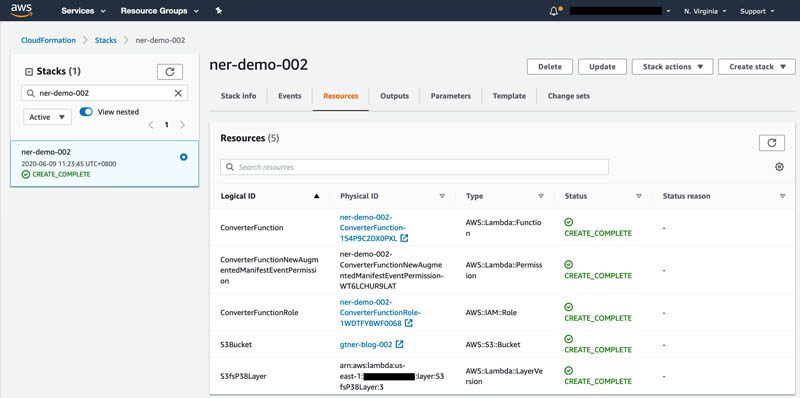

CloudFormation is now creating your stack. When it completes, you should see something like the following screenshot.

Setting up the conversion script

To set up the conversion script on your computer, complete the following steps:

- Download and install Git on your computer.

- Decide where you want to store the repository on your local machine. We recommend making a dedicated folder so you can easily navigate to it using the command prompt later.

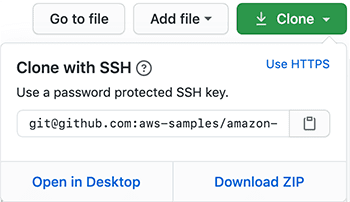

- In your browser, navigate to the Amazon Comprehend GitHub repo.

- Under Contributors, choose Clone or download.

- Under Clone with HTTPS, choose the clipboard icon to copy the repo URL.

To clone the repository using an SSH key, including a certificate issued by your organization’s SSH certificate authority, choose Use SSH and choose the clipboard icon to copy the repo URL to your clipboard.

- In the terminal, navigate to the location in which you want to store the cloned repository. You can do so by entering $ cd followed by the path to that location.

- Clone the repository by entering git clone followed by the repo URL that you copied.

After you clone the repository, follow the steps in the README file on how to use the script to integrate the Ground Truth NER labeling job with Amazon Comprehend custom entity recognition.

Uploading the sample unlabeled corpus

Run the following commands to copy the sample data files to your S3 bucket:

The sample data shortens the time you spend annotating, and isn’t necessarily optimized for best model performance.

Running the NER labeling job

This stage involves three manual steps:

- Create a private work team.

- Create a labeling job.

- Annotate your data.

You can reuse the private work team over different jobs.

When the job is complete, it writes an output.manifest file, which a Lambda function picks up automatically. The function converts this augmented manifest file into two files: .csv and .txt. Assuming that the output manifest is s3://, the two files for Amazon Comprehend are located under s3://.

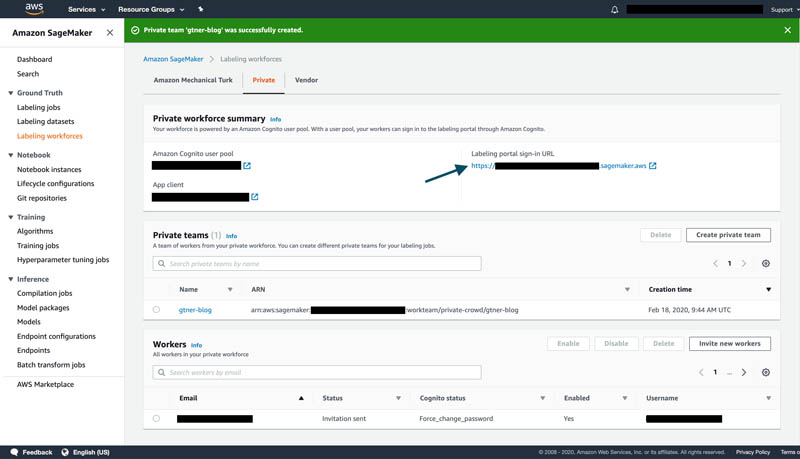

Creating a private work team

For this use case, you form a private work team with your own email address as the only worker. Ground Truth also allows you to use Amazon Mechanical Turk or a vendor workforce.

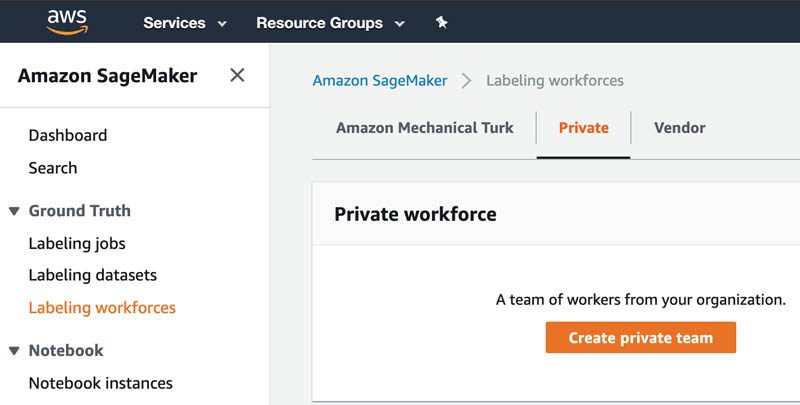

- On the Amazon SageMaker console, under Ground Truth, choose Labeling workforces.

- On the Private tab, choose Create private team.

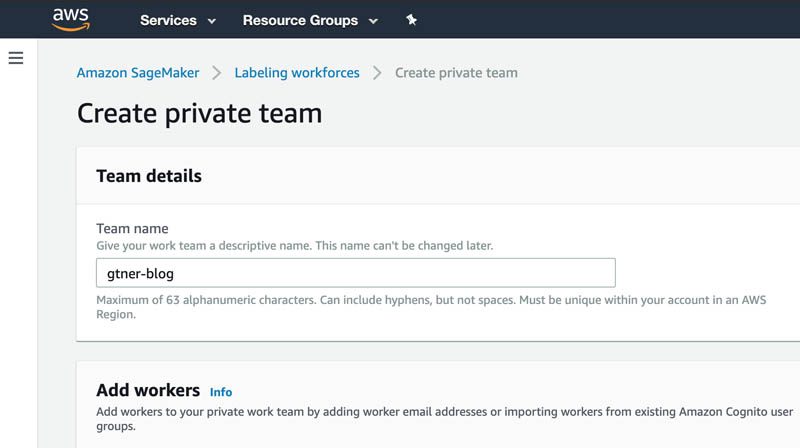

- For Team name, enter a name for your team.

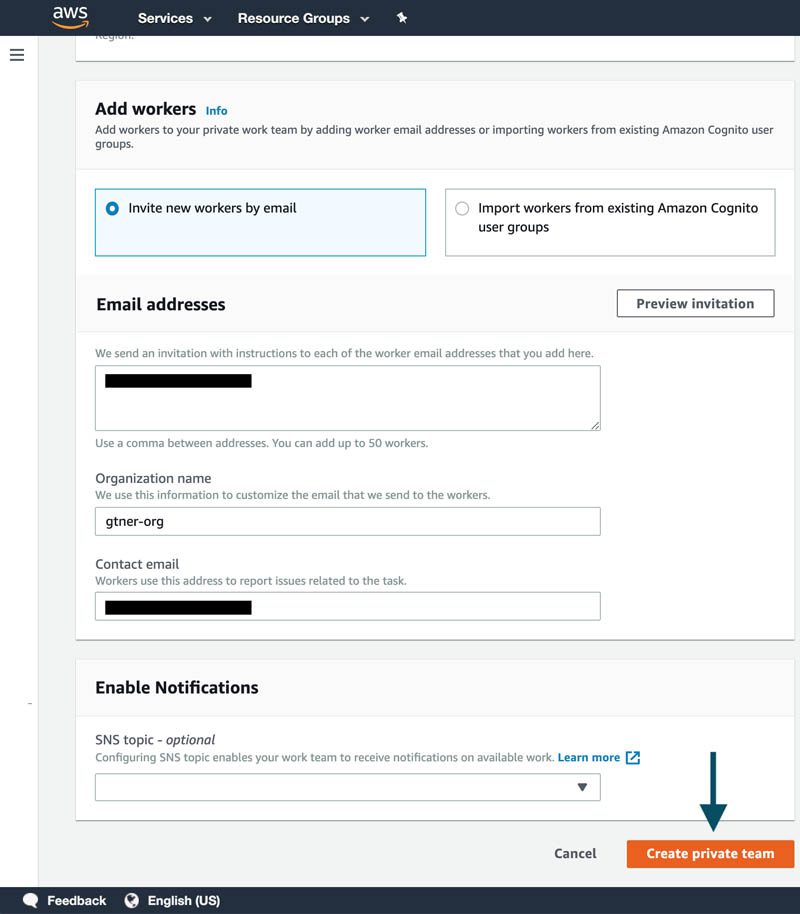

- For Add workers, select Invite new workers by email.

- For Email addresses, enter your email address.

- For Organization name, enter a name for your organization.

- For Contact email, enter your email.

- Choose Create private team.

- Go to the new private team to find the URL of the labeling portal.

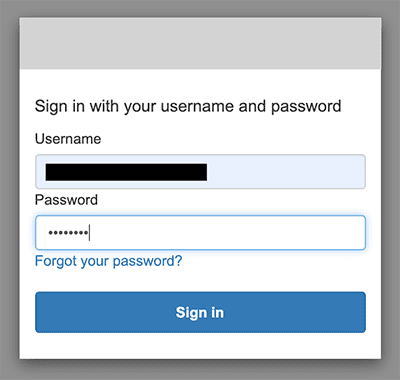

You also receive an enrollment email with the URL, user name, and a temporary password, if this is your first time being added to this team (or if you set up Amazon Simple Notification Service (Amazon SNS) notifications).

- Sign in to the labeling URL.

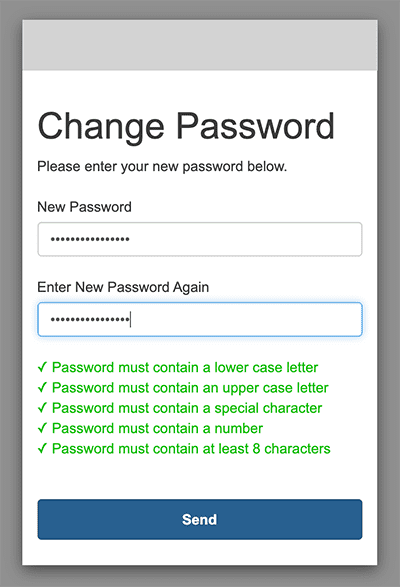

- Change the temporary password to a new password.

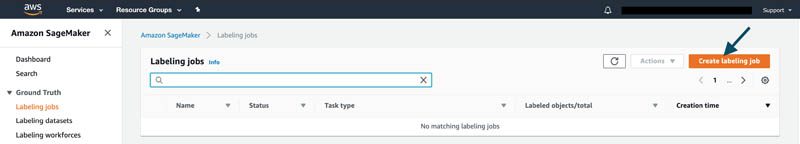

Creating a labeling job

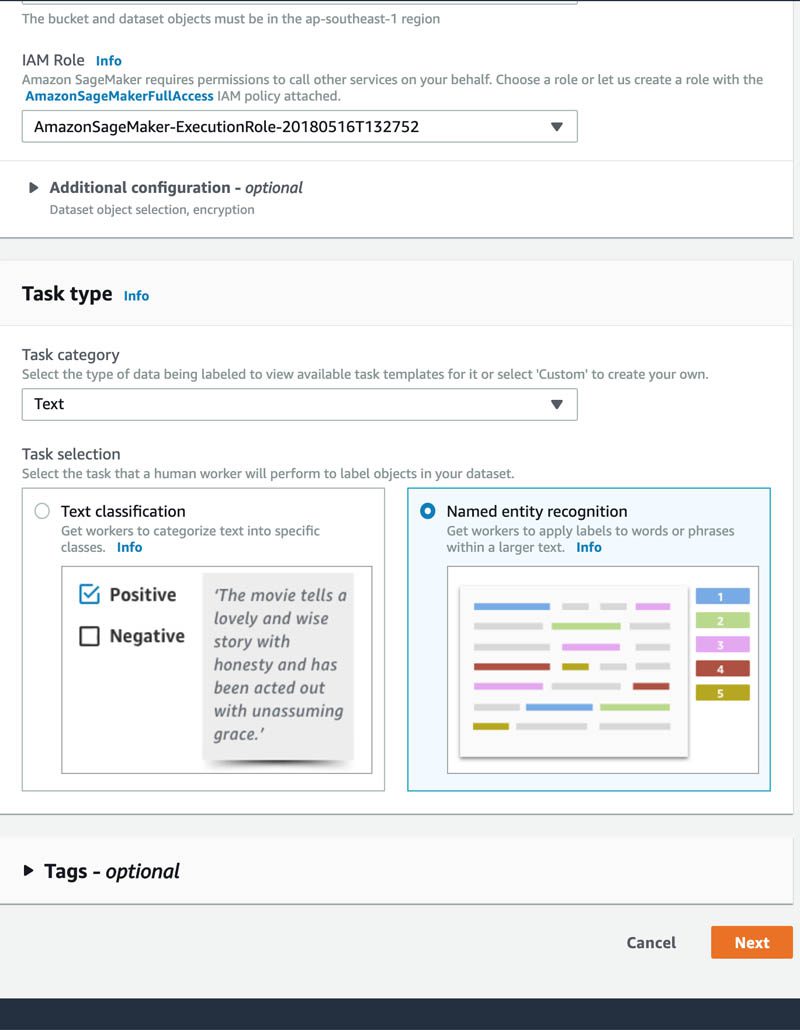

The next step is to create a NER labeling job. This post highlights the key steps. For more information, see Adding a data labeling workflow for named entity recognition with Amazon SageMaker Ground Truth.

To reduce the amount of annotation time, use the sample corpus that you have copied to your S3 bucket as the input to the Ground Truth job.

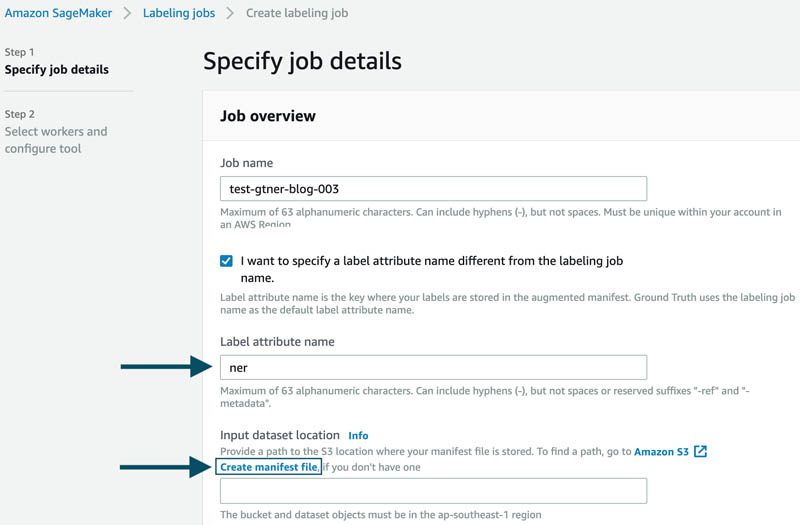

- On the Amazon SageMaker console, under Ground Truth, choose Labeling jobs.

- Choose Create labeling job.

- For Job name, enter a job name.

- Select I want to specify a label attribute name different from the labeling job name.

- For Label attribute name, enter ner.

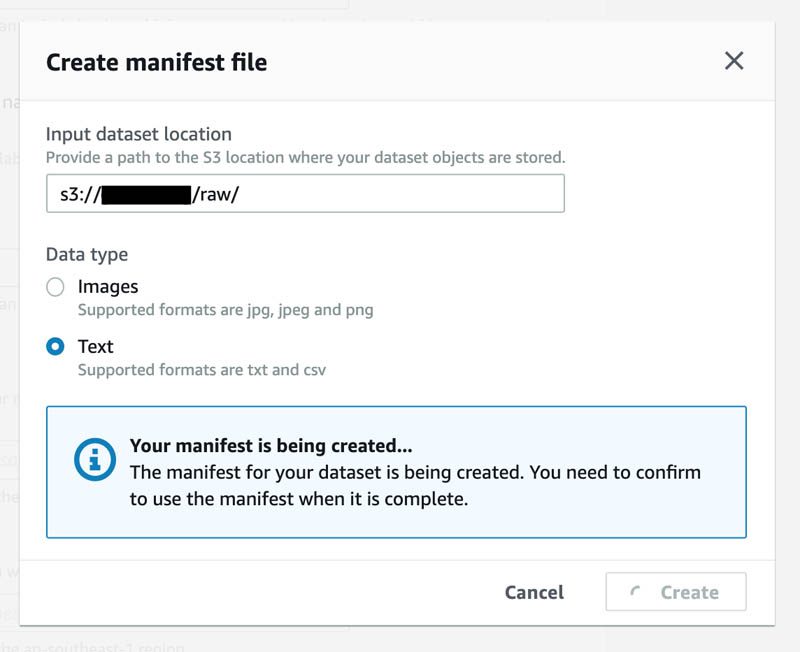

- Choose Create manifest file.

This step lets Ground Truth automatically convert your text corpus into a manifest file.

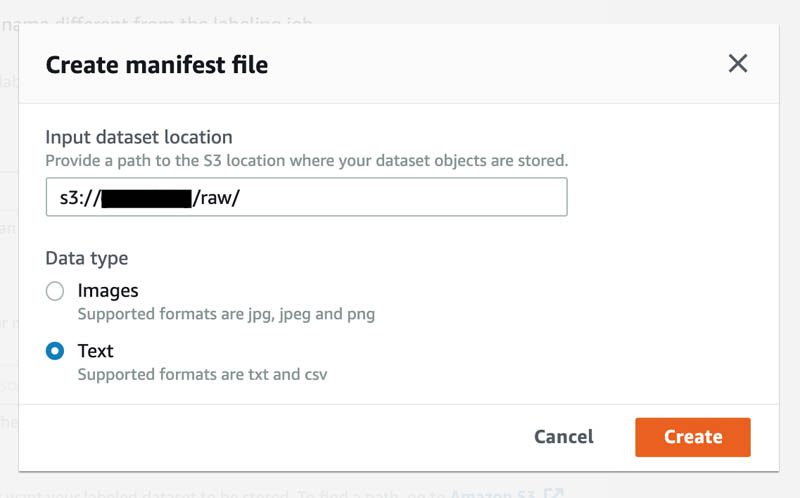

A pop-up window appears.

- For Input dataset location, enter the Amazon S3 location.

- For Data type, select Text.

- Choose Create.

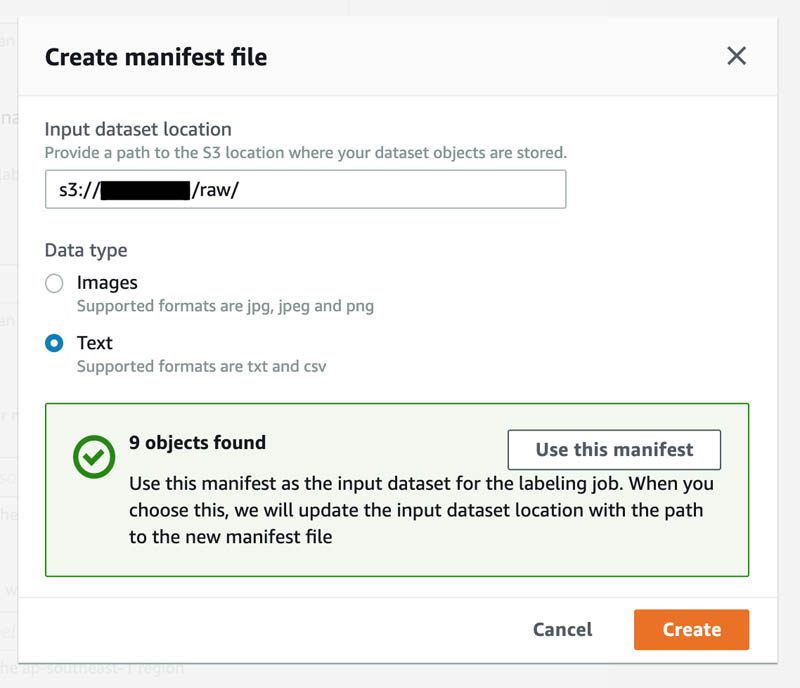

You see a message that your manifest is being created.

- When the manifest creation is complete, choose Create.

You can also prepare the input manifest yourself. Be aware that the NER labeling job requires its input manifest in the {"source": "embedded text"} format rather than the reference style {"source-ref": "s3://bucket/prefix/file-01.txt"}. In addition, the generated input manifest automatically detects the line break \n and produces one JSON line for each line of each document, whereas if you generate the manifest on your own, you may opt for one JSON line per document (although you may still need to preserve the \n for your downstream processing).
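If you prepare the input manifest yourself, the two layouts described above can be sketched in Python as follows. This is a minimal illustration, not the logic Ground Truth uses: the function name build_input_manifest and the choice to skip blank lines are assumptions made for this example.

```python
import json


def build_input_manifest(documents, split_lines=True):
    """Return JSON Lines text in the {"source": "embedded text"} format.

    documents: iterable of document strings.
    split_lines=True  -> one record per non-empty line of each document.
    split_lines=False -> one record per document; json.dumps escapes the
                         embedded line breaks so each record stays on one line.
    """
    records = []
    for doc in documents:
        if split_lines:
            # Assumption of this sketch: blank lines carry nothing to label.
            chunks = [line for line in doc.splitlines() if line.strip()]
        else:
            chunks = [doc]
        records.extend(json.dumps({"source": chunk}) for chunk in chunks)
    return "".join(record + "\n" for record in records)


if __name__ == "__main__":
    sample = ["I recently subscribed to Amazon Prime.\nIt arrived on Monday."]
    print(build_input_manifest(sample), end="")
    print(build_input_manifest(sample, split_lines=False), end="")
```

With split_lines=False, the line break survives inside the JSON string, so a downstream consumer can still recover the original line structure after parsing each record.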

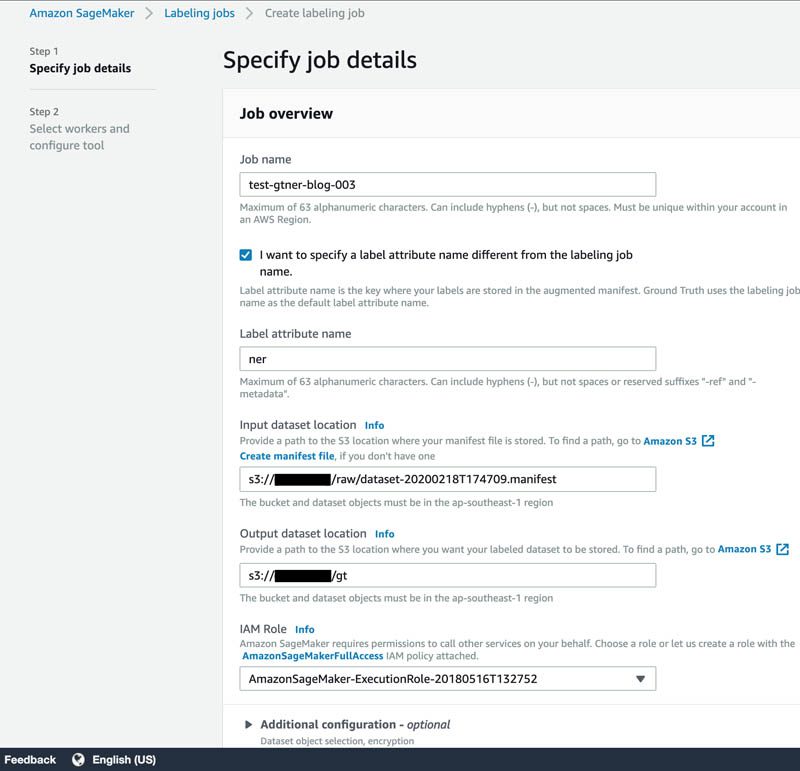

- For IAM role, choose the role the CloudFormation template created.

- For Task selection, choose Named entity recognition.

- Choose Next.

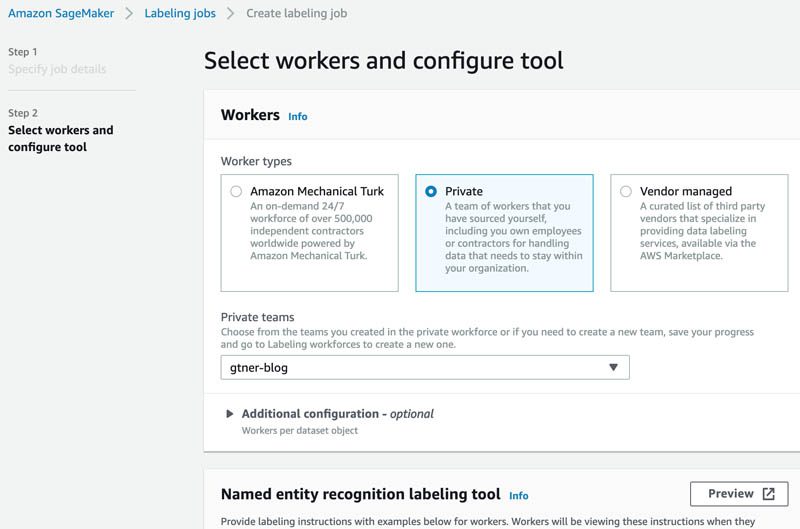

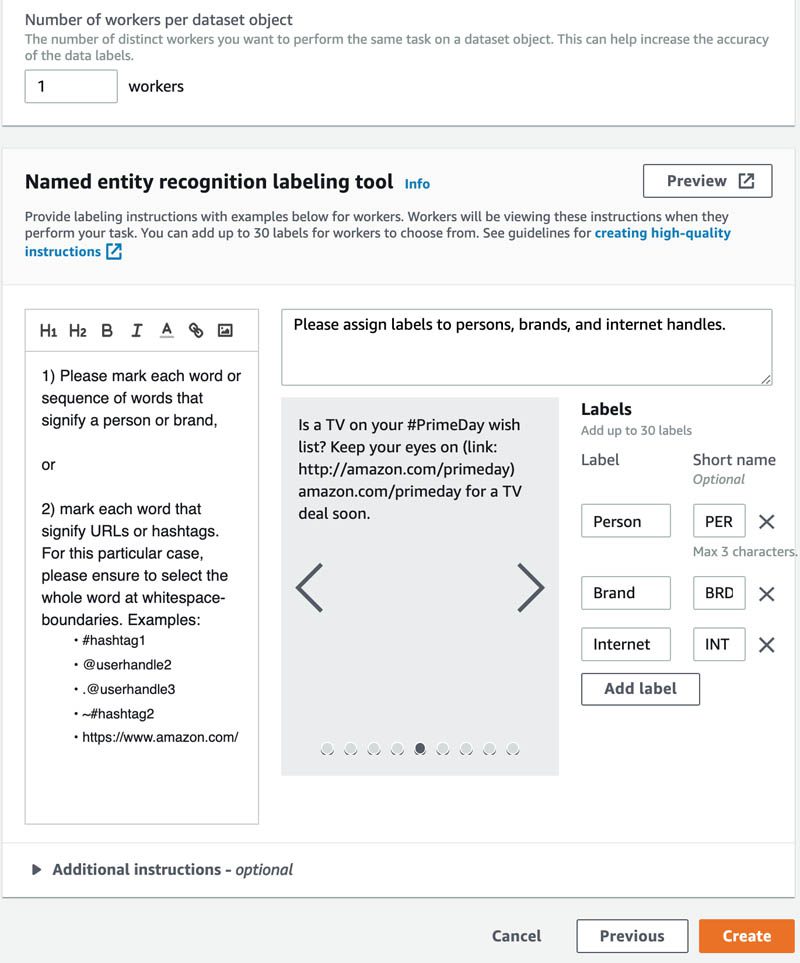

- On the Select workers and configure tool page, for Worker types, select Private.

- For Private teams, choose the private work team you created earlier.

- For Number of workers per dataset object, make sure the number of workers (1) matches the size of your private work team.

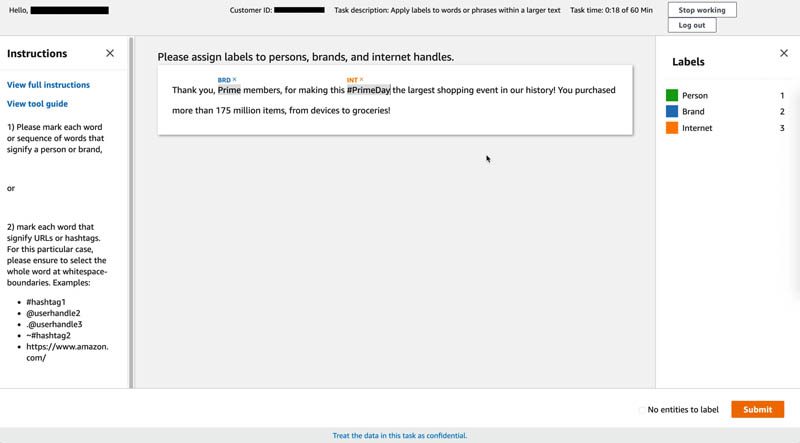

- In the text box, enter labeling instructions.

- Under Labels, add your desired labels. See the next screenshot for the labels needed for the recommended corpus.

- Choose Create.

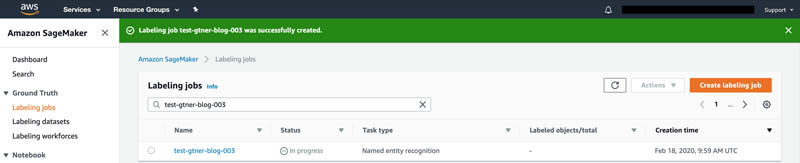

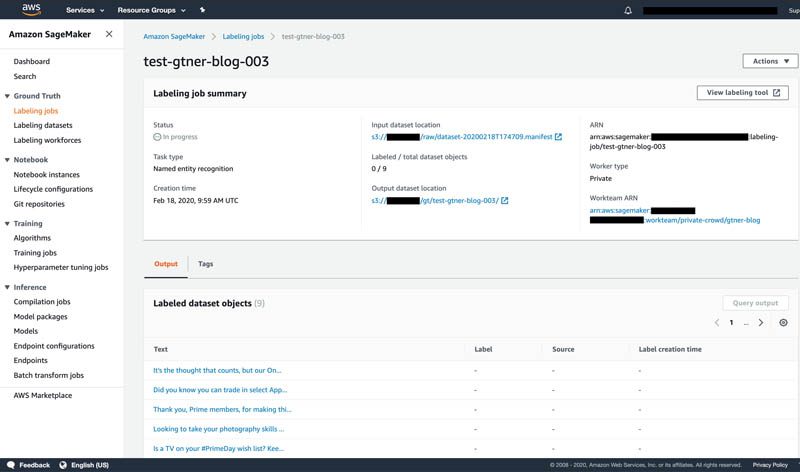

The job has been created, and you can now track the status of the individual labeling tasks on the Labeling jobs page.

The following screenshot shows the details of the job.

Labeling data

If you use the recommended corpus, you should be able to complete this section in a few minutes.

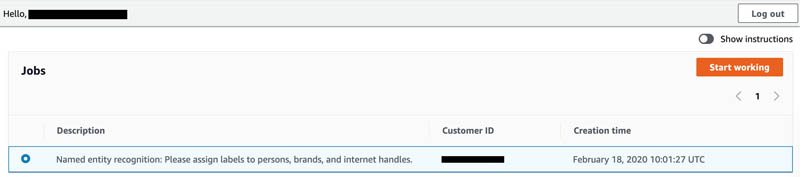

After you create the job, the private work team can see this job listed in their labeling portal, and can start annotating the tasks assigned.

The following screenshot shows the worker UI.

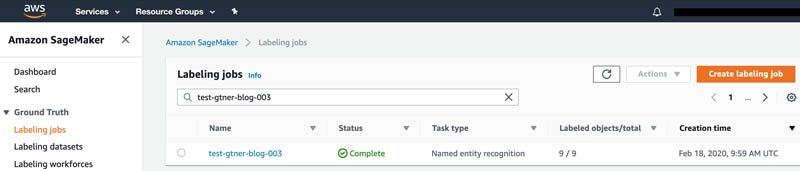

When all tasks are complete, the labeling job status shows as Completed.

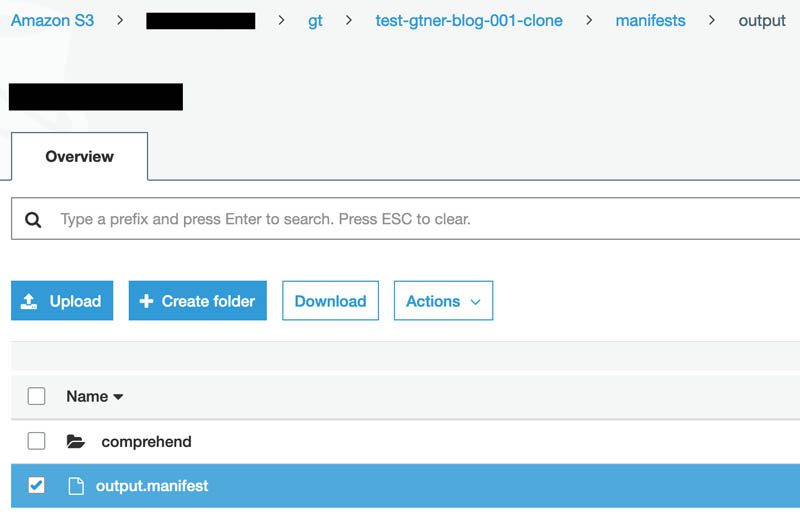

Post-check

The CloudFormation template configures the S3 bucket to emit an Amazon S3 put event to a Lambda function whenever a new object whose key ends with manifests/output/output.manifest lands in the bucket. In addition, AWS recently added Amazon CloudWatch Events support for labeling jobs, which you can use as another mechanism to trigger the conversion. For more information, see Amazon SageMaker Ground Truth Now Supports Multi-Label Image and Text Classification and Amazon CloudWatch Events.
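To make the trigger more concrete, the following sketch shows how a Lambda handler might pick output manifests out of an S3 put event. It is not the code deployed by the template: the names manifests_in_event and MANIFEST_SUFFIX are hypothetical, and the sketch assumes that matching on the end of the object key is sufficient.

```python
from urllib.parse import unquote_plus

# Assumed filter: Ground Truth writes the augmented manifest under this path.
MANIFEST_SUFFIX = "manifests/output/output.manifest"


def manifests_in_event(event):
    """Return (bucket, key) pairs for output manifests found in an S3 event."""
    found = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(s3.get("object", {}).get("key", ""))
        if bucket and key.endswith(MANIFEST_SUFFIX):
            found.append((bucket, key))
    return found


def lambda_handler(event, context):
    """Entry point sketch: report the manifests that would be converted."""
    manifests = manifests_in_event(event)
    for bucket, key in manifests:
        print(f"Would convert s3://{bucket}/{key}")
    return {"manifests": len(manifests)}
```

Keeping the event parsing in its own function makes it possible to exercise the filter locally with a hand-written event dictionary before deploying anything.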

The Lambda function loads the augmented manifest and converts it into comprehend/output.csv and comprehend/output.txt, located under the same prefix as output.manifest. For example, s3:// yields the following:

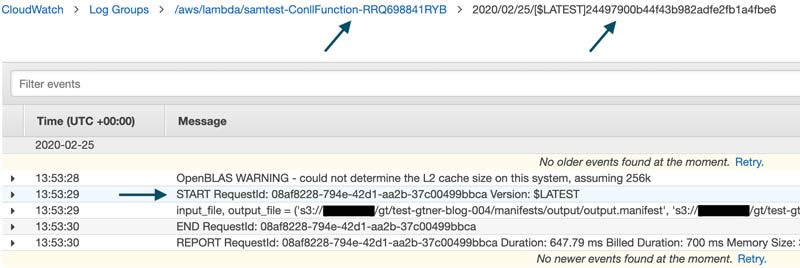

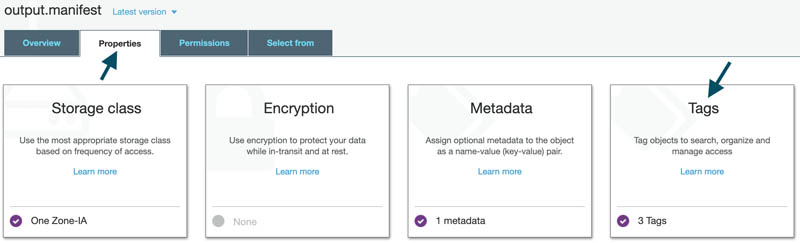

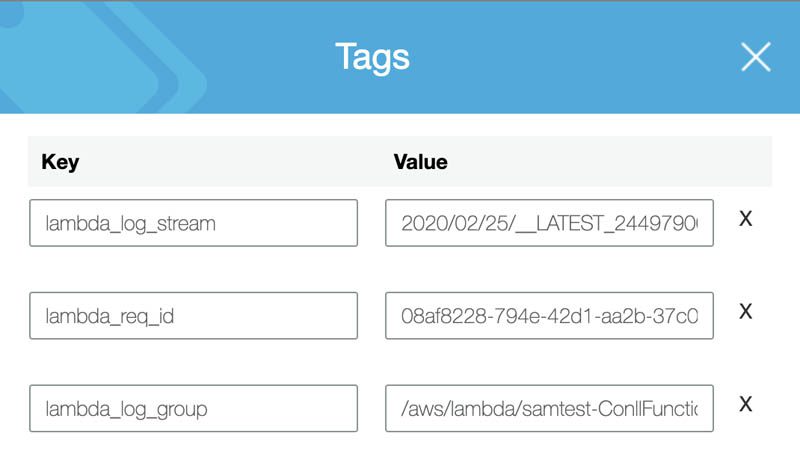
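The conversion itself can be sketched in a few lines of Python. This is an illustrative approximation rather than the Lambda function's actual source. It assumes that each augmented manifest line stores its text under source and its entities under the label attribute name (ner in this post) as annotations.entities with startOffset, endOffset, and label fields, and that Amazon Comprehend expects an annotations CSV with the header File, Line, Begin Offset, End Offset, Type. The function name convert_manifest is hypothetical.

```python
import csv
import io
import json

CSV_HEADER = ["File", "Line", "Begin Offset", "End Offset", "Type"]


def convert_manifest(manifest_text, label_attribute="ner", documents_file="output.txt"):
    """Convert augmented manifest text into (annotations_csv, documents_txt)."""
    csv_buffer = io.StringIO()
    writer = csv.writer(csv_buffer, lineterminator="\n")
    writer.writerow(CSV_HEADER)
    documents = []
    for raw in manifest_text.splitlines():
        if not raw.strip():
            continue
        record = json.loads(raw)
        line_number = len(documents)  # zero-based line in the documents file
        # Swap line breaks for spaces: the document stays on one line and
        # the character offsets of the annotations remain unchanged.
        documents.append(record["source"].replace("\n", " "))
        annotations = record.get(label_attribute, {}).get("annotations", {})
        entities = annotations.get("entities", [])
        for entity in sorted(entities, key=lambda e: e["startOffset"]):
            writer.writerow([
                documents_file,
                line_number,
                entity["startOffset"],
                entity["endOffset"],
                entity["label"],
            ])
    documents_txt = "".join(doc + "\n" for doc in documents)
    return csv_buffer.getvalue(), documents_txt


if __name__ == "__main__":
    text = "I recently subscribed to Amazon Prime"
    start = text.index("Amazon Prime")
    entity = {"label": "BRAND", "startOffset": start, "endOffset": start + len("Amazon Prime")}
    line = json.dumps({"source": text, "ner": {"annotations": {"entities": [entity]}}})
    annotations_csv, documents_txt = convert_manifest(line)
    print(annotations_csv, end="")
    print(documents_txt, end="")
```

The sketch carries the offsets over unchanged, which assumes Ground Truth and Amazon Comprehend use the same offset convention; verify this against a few labeled examples before relying on it.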

You can further track the Lambda execution context in CloudWatch Logs, starting by inspecting the tags that the Lambda function adds to output.manifest, which you can do on the Amazon S3 console or AWS CLI.

To track on the Amazon S3 console, complete the following steps:

- On the Amazon S3 console, navigate to the folder that contains output.manifest.

- Select output.manifest.

- On the Properties tab, choose Tags.

- View the tags added by the Lambda function.

To use the AWS CLI, retrieve the object tags of output.manifest, for example with aws s3api get-object-tagging. The log stream tag __LATEST_xxx denotes the CloudWatch log stream [$LATEST]xxx: the characters [, $, and ] aren’t valid in Amazon S3 tags, so the Lambda function substitutes them.

You can now go to the CloudWatch console and trace the actual log groups, log streams, and the RequestId. See the following screenshot.
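When you look up the log stream from a tag value, it helps to see the character substitution spelled out. The following sketch reproduces the mapping between [$LATEST]xxx and __LATEST_xxx. It is an assumption about how the substitution works, not the Lambda function's code: both function names are hypothetical, the allowed character set is an approximation of the S3 tag rules, and the reverse mapping only handles the $LATEST version marker.

```python
import re

# Assumed set of characters accepted in S3 tag values; anything else is replaced.
_INVALID_TAG_CHARS = re.compile(r"[^A-Za-z0-9 +\-=._:/@]")


def to_tag_value(log_stream_name):
    """Replace characters that S3 tags reject with underscores."""
    return _INVALID_TAG_CHARS.sub("_", log_stream_name)


def to_log_stream_name(tag_value):
    """Restore the [$LATEST] marker so the name can be searched in CloudWatch Logs."""
    return tag_value.replace("__LATEST_", "[$LATEST]", 1)


if __name__ == "__main__":
    tag = to_tag_value("[$LATEST]xxx")
    print(tag)
    print(to_log_stream_name(tag))
```

The forward mapping is lossy in general, because an underscore in the tag could stand for any replaced character, which is why the reverse function restores only the known marker.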

Training a custom NER model on Amazon Comprehend

Amazon Comprehend requires the input corpus to obey these minimum requirements per entity:

- 1000 samples

- Corpus size of 5120 bytes

- 200 annotations

The sample corpus you used for Ground Truth doesn’t meet this minimum requirement. Therefore, we have provided you with additional pre-generated Amazon Comprehend input. This sample data is meant to let you quickly start training your custom model, and is not necessarily optimized for model performance.
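Before you start a training job, you can check a converted dataset against the thresholds listed above. The following is a rough sketch under stated assumptions: it reads the annotations CSV through a Type column, measures the corpus size as the UTF-8 length of the documents file, and leaves out the 1,000-sample check because this post doesn't define what counts as a sample. The function name check_minimums is hypothetical.

```python
import csv
import io
from collections import Counter

MIN_ANNOTATIONS_PER_ENTITY = 200
MIN_CORPUS_BYTES = 5120


def check_minimums(annotations_csv, documents_txt):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    size = len(documents_txt.encode("utf-8"))
    if size < MIN_CORPUS_BYTES:
        problems.append(f"corpus is {size} bytes, below {MIN_CORPUS_BYTES}")
    # Count how many annotations each entity type has.
    rows = csv.DictReader(io.StringIO(annotations_csv))
    counts = Counter(row["Type"] for row in rows)
    for entity_type, count in sorted(counts.items()):
        if count < MIN_ANNOTATIONS_PER_ENTITY:
            problems.append(
                f"{entity_type} has {count} annotations, "
                f"below {MIN_ANNOTATIONS_PER_ENTITY}"
            )
    return problems


if __name__ == "__main__":
    header = "File,Line,Begin Offset,End Offset,Type\n"
    for problem in check_minimums(header + "output.txt,0,25,37,BRAND\n", "short corpus\n"):
        print(problem)
```

Running the check on the small Ground Truth sample would be expected to report problems, which is consistent with the need for the additional pre-generated input.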

On your computer, enter the following code to upload the pre-generated data to your bucket:

Your s3:// folder should end up with two files: output.txt and output-x112.txt.

Your s3:// folder should contain output.csv and output-x112.csv.

You can now start your custom NER training.

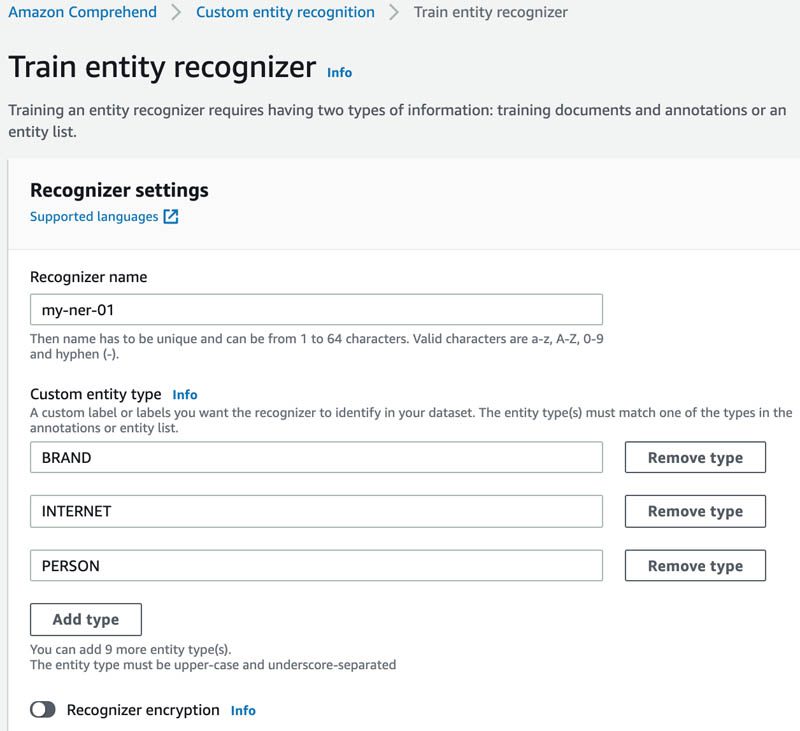

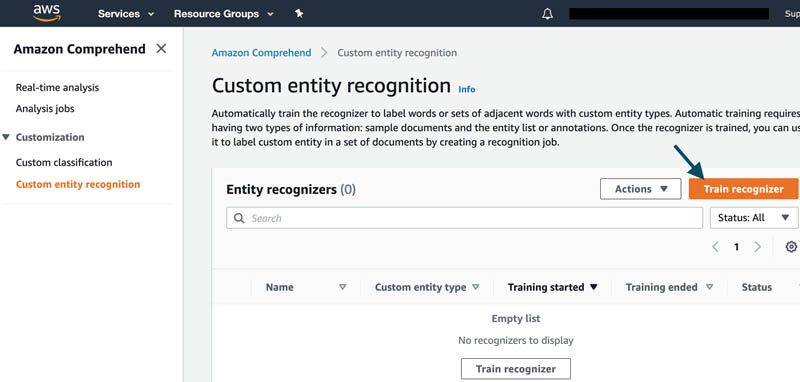

- On the Amazon Comprehend console, under Customization, choose Custom entity recognition.

- Choose Train recognizer.

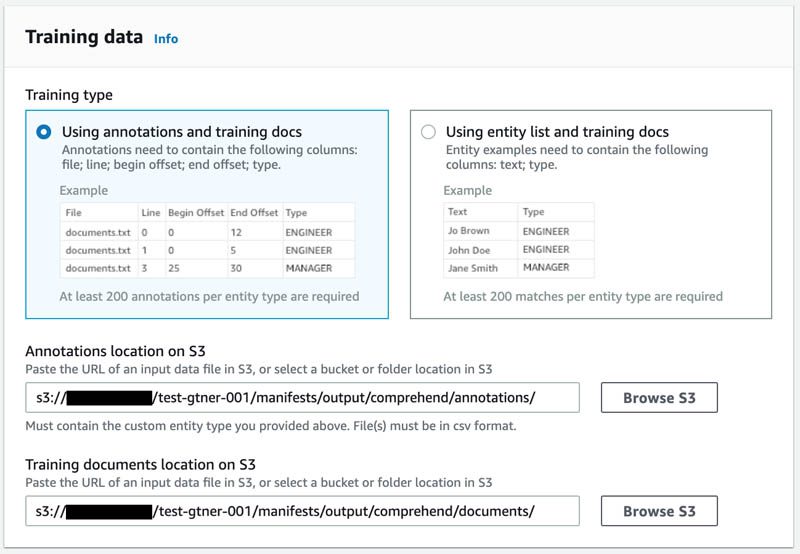

- For Recognizer name, enter a name.

- For Custom entity type, enter your labels.

Make sure the custom entity types match what you used in the Ground Truth job.

- For Training type, select Using annotations and training docs.

- Enter the Amazon S3 locations for your annotations and training documents.

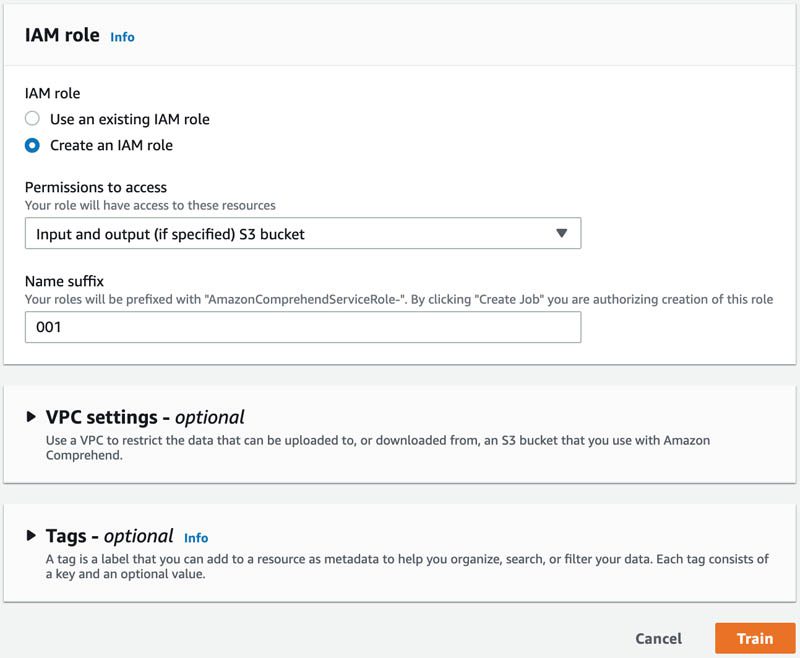

- For IAM role, if this is the first time you’re using Amazon Comprehend, select Create an IAM role.

- For Permissions to access, choose Input and output (if specified) S3 bucket.

- For Name suffix, enter a suffix.

- Choose Train.

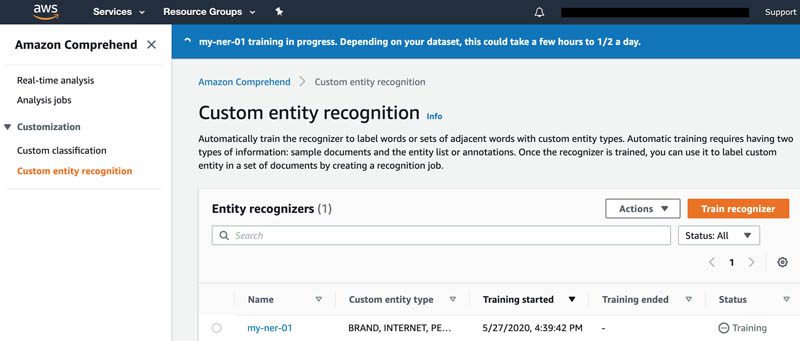

You can now see your recognizer listed.

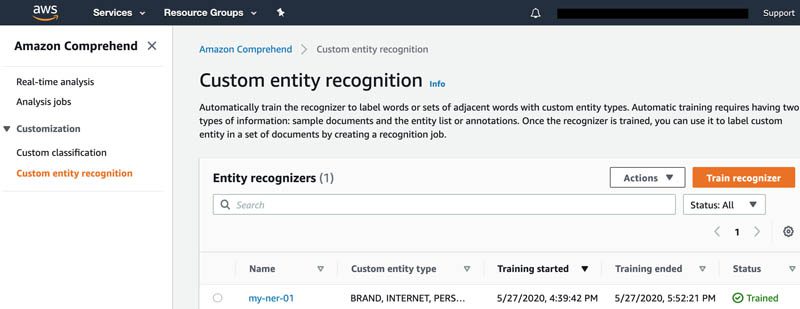

The following screenshot shows your view when training is complete, which can take up to 1 hour.
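If you prefer scripting over the console, the same training job can be described as a request to the Amazon Comprehend CreateEntityRecognizer API. The following is a minimal sketch, not a tested deployment script: the helper names, recognizer name, role ARN, and S3 URIs are placeholders for illustration, and the boto3 call requires AWS credentials and an IAM role that Amazon Comprehend can assume.

```python
def build_recognizer_request(name, role_arn, entity_types,
                             documents_uri, annotations_uri, language_code="en"):
    """Build keyword arguments for Comprehend's create_entity_recognizer call."""
    return {
        "RecognizerName": name,
        "DataAccessRoleArn": role_arn,
        "LanguageCode": language_code,
        "InputDataConfig": {
            # Entity types must match the labels used in the Ground Truth job.
            "EntityTypes": [{"Type": entity_type} for entity_type in entity_types],
            "Documents": {"S3Uri": documents_uri},
            "Annotations": {"S3Uri": annotations_uri},
        },
    }


def train_recognizer(request):
    """Submit the training job; needs boto3 and valid AWS credentials."""
    import boto3  # imported lazily so the request builder works without the SDK

    comprehend = boto3.client("comprehend")
    return comprehend.create_entity_recognizer(**request)["EntityRecognizerArn"]


if __name__ == "__main__":
    # All values below are hypothetical placeholders.
    request = build_recognizer_request(
        name="my-ner-recognizer",
        role_arn="arn:aws:iam::123456789012:role/my-comprehend-role",
        entity_types=["BRAND"],
        documents_uri="s3://my-bucket/comprehend/output.txt",
        annotations_uri="s3://my-bucket/comprehend/output.csv",
    )
    print(request)
```

Separating the request builder from the API call lets you review the exact entity types and S3 locations before any training cost is incurred.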

Cleaning up

When you finish this exercise, remove your resources with the following steps:

- Empty the S3 bucket (or delete the bucket)

- Delete the CloudFormation stack

Conclusion

You have learned how to use Ground Truth to build a NER training dataset and automatically convert the produced augmented manifest into the format that Amazon Comprehend can readily digest.

As always, AWS welcomes feedback. Please submit any comments or questions.

About the Authors

Verdi March is a Senior Data Scientist with AWS Professional Services, where he works with customers to develop and implement machine learning solutions on AWS. In his spare time, he enjoys honing his coffee-making skills and spending time with his family.


Jyoti Bansal is a software development engineer on the AWS Comprehend team where she is working on implementing and improving NLP based features for Comprehend Service. In her spare time, she loves to sketch and read books.


Nitin Gaur is a Software Development Engineer with AWS Comprehend, where he works on implementing and improving NLP-based features for AWS. In his spare time, he enjoys recording and playing music.

