Building a Pictionary-style game with AWS DeepLens and Amazon Alexa

Are you bored of the same old board games? Tired of going through the motions with charades week after week? In need of a fun and exciting way to mix up game night? Well we have a solution for you!

From the makers of AWS DeepLens, Guess My Drawing with DeepLens is a do-it-yourself recipe for building your very own Machine Learning (ML)-enabled Pictionary-style game! In this post, you learn how to harness the power of AWS DeepLens, the AWS programmable video camera for developers to learn ML, and Amazon Alexa, the Amazon cloud-based voice service.

You start by learning to deploy a trained model to AWS DeepLens that can recognize sketches drawn on a whiteboard and pair it with an Alexa skill that serves as the official scorekeeper.

When your recipe is complete, the fun begins!

Solution overview

Guess My Drawing with AWS DeepLens uses Alexa to host a multi-player drawing challenge game. To get started, gather your game supplies mentioned in the Prerequisites section.

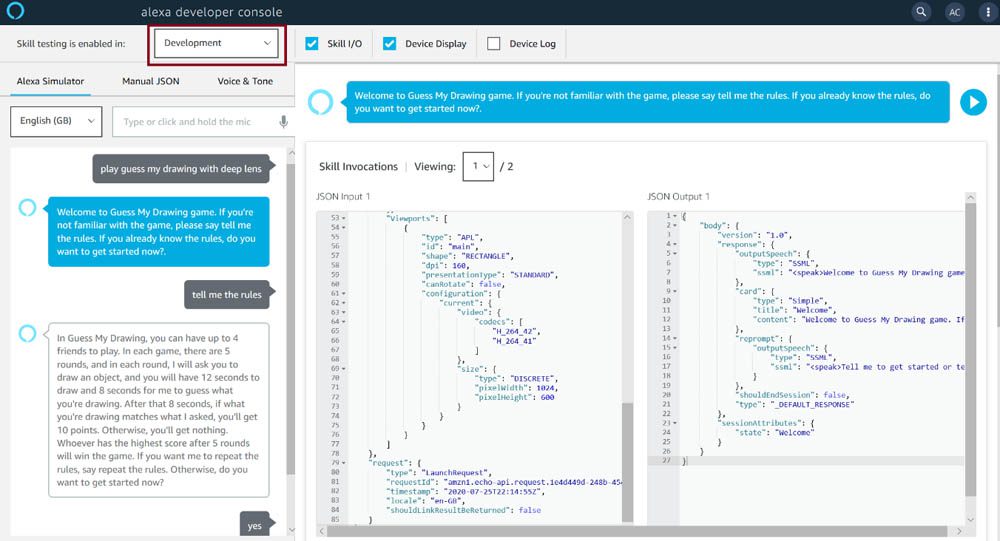

To initiate gameplay, simply say, “Alexa, play Guess My Drawing with DeepLens.” Alexa explains the game rules and asks how many players are playing the game. The players decide the turn order.

Alexa provides each player with a common word. For example, Alexa may say, “Your object to draw is bowtie.” The player has 12 seconds to draw it on a whiteboard without writing letters or words.

When time runs out, the player stops drawing and asks Alexa to share the results. The ML model running on AWS DeepLens predicts the object that you drew. If the object matches with what Alexa asks, Alexa awards 10 points. If DeepLens can’t correctly guess the drawing or the player takes more than 12 seconds to draw, no points are earned.

Alexa prompts the next participant with their word, repeating until all participants have taken a turn. After each round, Alexa provides a score update. The game ends after five rounds, and whoever has the highest score wins the game!

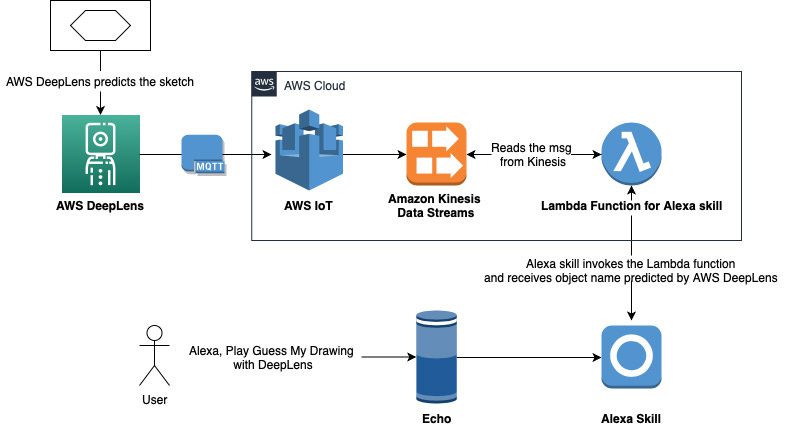

The following diagram shows the architecture of our solution.

This tutorial includes the following steps:

- Create an AWS DeepLens inference AWS Lambda function to isolate the drawing area and feed each camera frame into the model to generate predictions on the sketches.

- Deploy a pre-trained trained model included in this post to AWS DeepLens to perform image classification.

- Create an AWS IoT Core rule to send the results to Amazon Kinesis Data Streams.

- Create a custom Alexa skill with a different Lambda function to retrieve the detected objects from the Kinesis data stream and have Alexa verbalize the result to you.

Prerequisites

Before you begin this tutorial, make sure you have the following prerequisites:

- An AWS account

- An AWS DeepLens device, available from the following:

- Amazon.com (US)

- Amazon.ca (Canada)

- Amazon.co.jp (Japan)

- Amazon.de (Germany)

- Amazon.co.uk (UK)

- Amazon.fr (France)

- Amazon.es (Spain)

- Amazon.it (Italy)

- An Amazon Alexa device

- A whiteboard or paper pad (unlined)

- A marker or writing utensil

Creating an AWS DeepLens inference Lambda function

In this section, you create an inference function that you deploy to AWS DeepLens. The inference function isolates the drawing area, optimizes the model to run on AWS DeepLens, and feeds each camera frame into the model to generate predictions.

To create your function, complete the following steps:

- Download aws-deeplens-pictionary-lambda.zip.

- On the Lambda console, choose Create function.

- Choose Author from scratch and choose the following options:

- For Runtime, choose Python 2.7.

- For Choose or create an execution role, choose Use an existing role.

- For Existing role, enter

service-role/AWSDeepLensLambdaRole.

- After you create the function, go to the Function code

- From the Actions drop-down menu in Function code, choose Upload a .zip file.

- Upload the

aws-deeplens-pictionary-lambda.zipfile you downloaded earlier. - Choose Save.

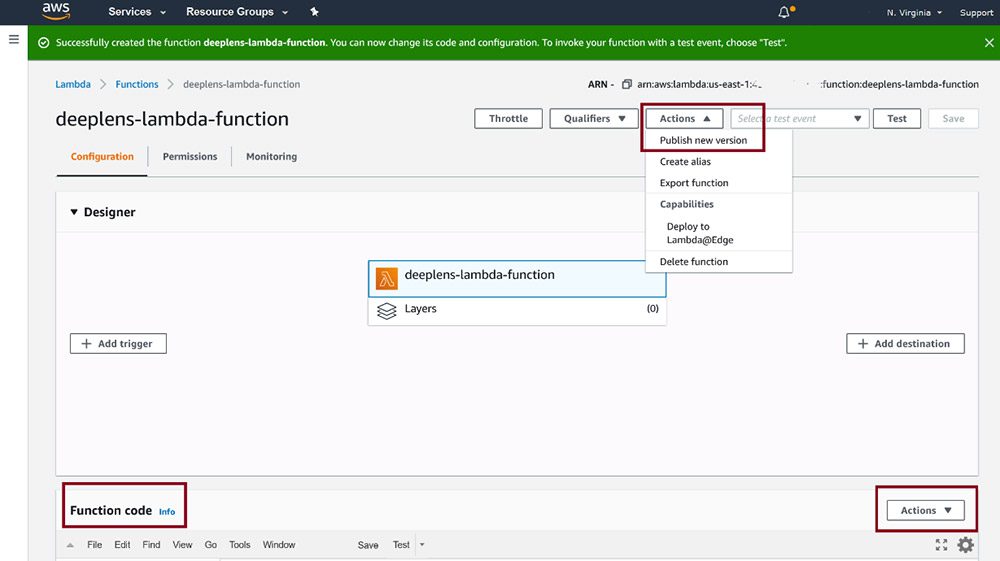

- From the Actions drop-down menu, choose Publish new version.

- Enter a version number and choose Publish.

Publishing the function makes it available on the AWS DeepLens console so you can add it to your custom project.

Understanding the Lambda function

You should pay attention to the following two files:

- labels.txt – This file is for the inference function to translate the numerical result from the model into human readable labels used in our game. It contains a list of 36 objects on which the model has been trained to recognize sketches.

- lambda_function.py – This file contains the preprocessing algorithm and the function being called to generate predictions on drawings and send back results.

Because the model was trained on digital sketches with clean, white backgrounds, we have a preprocessing algorithm that helps isolate the drawing and remove any clutter in the background. You can find the algorithm to do this in the isolate_image() function inside the lambda_function.py file. In this section, we walk you through some important parts of the preprocessing algorithm.

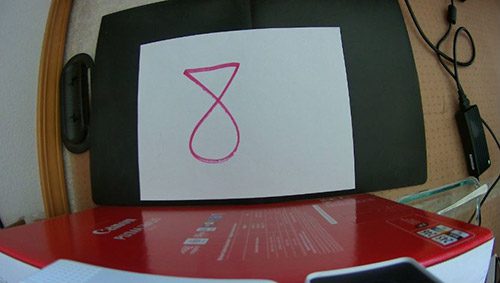

Fisheye calibration

AWS DeepLens uses a wide-angle lens to get as much information as possible in the frame. As a result, any input frame is distorted, especially for the rectangular shape of a whiteboard. Therefore, you need to perform fisheye calibration to straighten the edges. As part of this post, we provide the calibration code to undistort your AWS DeepLens images. The following code straightens the edges and eliminates the distortion:

The following screenshots shows the raw image captured by AWS DeepLens.

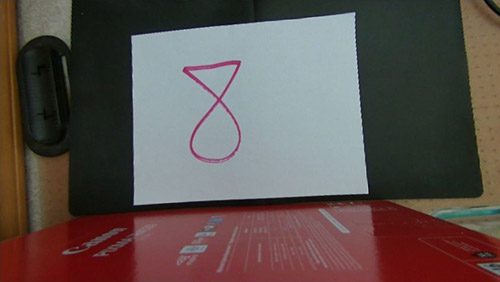

The following screenshot shows the results of the undistort function with fisheye calibration.

The next code section enhances the images to eliminate the effects caused by different lighting conditions:

The following screenshot shows the results of the contrast enhancement.

Canny Edge Detection

The next part of the preprocessing algorithm uses OpenCV’s Canny Edge Detection technique to find the edges in the image. See the following code:

The following screenshot shows the results from applying the Canny Edge Detector.

For more information about how Canny Edge Detection works, see the Canny Edge Detection tutorial on the OpenCV website.

Finding contours

After the edges are found, you can use OpenCV’s findContours() function to extract the polygon contours from the image. This function returns a list of polygon shapes that are closed and ignores any open edges or lines. See the following code:

For more information, see Contours: Getting Started.

Perspective transformation

Finally, the preprocessing algorithm does perspective transformation to correct for any skew. The following code helps achieve perspective transformation and crop a rectangular area:

The following image is the input of the preprocessing algorithm.

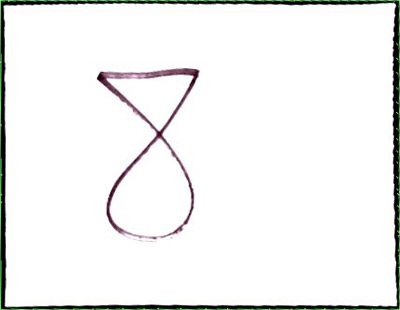

The following image is the final result.

Performing inference

In this section, you learn how to perform inference with an ML model and send back results from AWS DeepLens.

AWS DeepLens uses the Intel OpenVino model optimizer to optimize the ML model to run on DeepLens hardware. The following code optimizes a model to run locally:

The following code loads the model:

The following code helps run the model frame-per-frame over the images from the camera:

Viewing the text results in the cloud is a convenient way to make sure the model is working correctly. Each AWS DeepLens device has a dedicated iot_topic automatically created to receive the inference results. The following code sends the messages from AWS DeepLens to the IoT Core console:

Deploying the model to AWS DeepLens

In this section, you set up your AWS DeepLens device, import a pre-trained model, and deploy the model to AWS DeepLens.

Setting up your AWS DeepLens device

You first need to register your AWS DeepLens device, if you haven’t already.

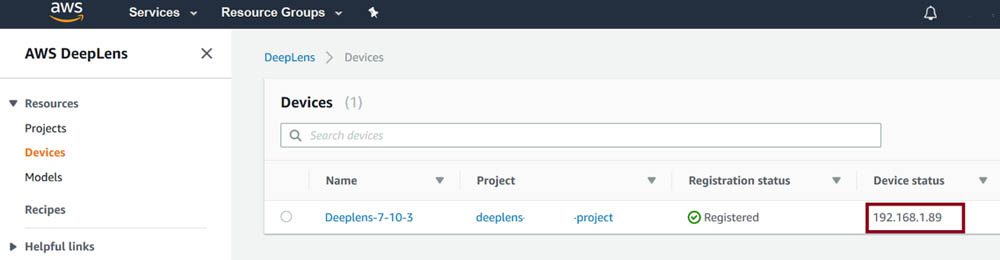

After you register your device, you need to install the latest OpenCV (version 4.x) packages and Pillow libraries to enable the preprocessing algorithm in the DeepLens inference Lambda function. To do so, you need the IP address of AWS DeepLens on the local network, which is listed in the Device details section. You also need to ensure that Secure Shell (SSH) is enabled for your device. For more information about enabling SSH on your device, see View or Update Your AWS DeepLens 2019 Edition Device Settings.

Open a terminal application on your computer. SSH into your DeepLens by entering the following code into your terminal application:

Then enter the following commands in the SSH terminal:

Importing the model to AWS DeepLens

For this post, you use a pre-trained model. We trained the model for 36 objects on The Quick Draw Dataset made available by Google, Inc., under the CC BY 4.0 license. For each object, we took 1,600 images for training and 400 images for testing the model from the dataset. Holding back 400 images for testing allows us to measure the accuracy of our model against images that it has never seen.

For instructions on training a model using Amazon SageMaker as the development environment, see AWS DeepLens Recipes and Amazon SageMaker: Build an Object Detection Model Using Images Labeled with Ground Truth.

To import your model, complete the following steps:

- Download the model aws-deeplens-pictionary-game.tar.gz.

- Create an Amazon Simple Storage Service (Amazon S3) bucket to store this model. For instructions, see How do I create an S3 Bucket?

The S3 bucket name must contain the term deeplens. The AWS DeepLens default role has permission only to access the bucket with the name containing deeplens.

- After the bucket is created, upload

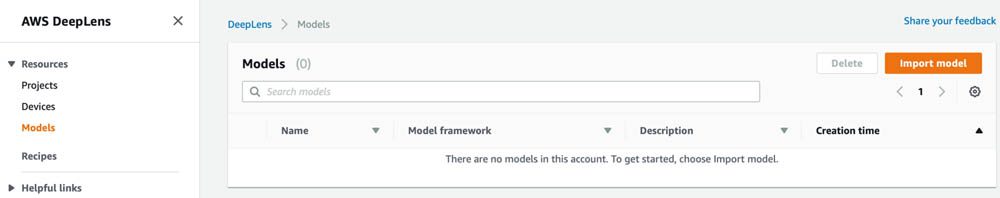

aws-deeplens-pictionary-game.tar.gzto the bucket and copy the model artifact path. - On the AWS DeepLens console, under Resources, choose Models.

- Choose Import model.

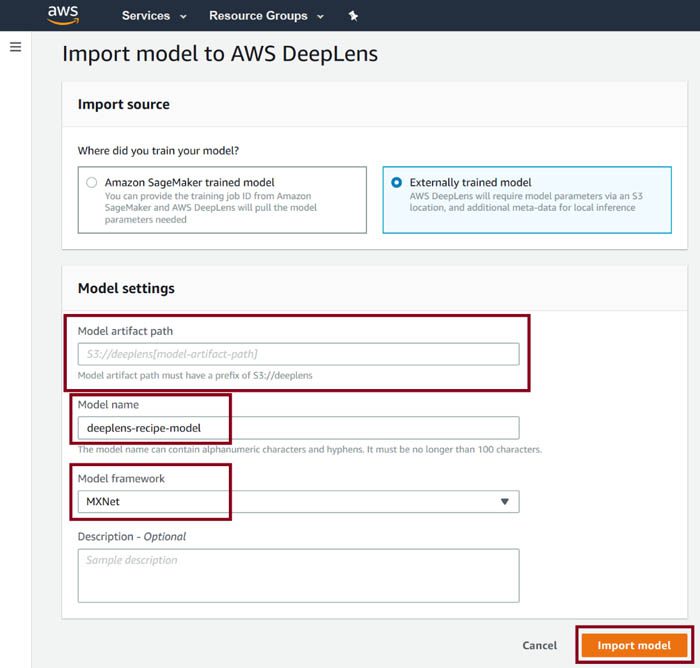

- On the Import model to AWS DeepLens page, choose Externally trained model.

- For Model artifact path, enter the Amazon S3 location for the model you uploaded earlier.

- For Model name, enter a name.

- For Model framework, choose MXNet.

- Choose Import model.

Deploying the model to your AWS DeepLens device

To deploy your model, complete the following steps:

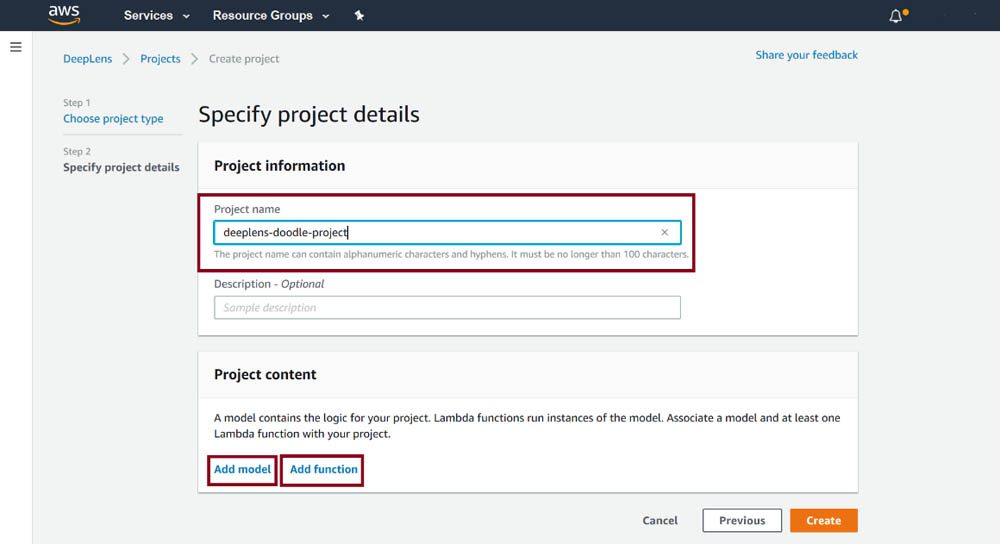

- On the AWS DeepLens console, under Resources, choose Projects.

- Choose Create new project.

- Choose Create a new blank project.

- For Project name, enter a name.

- Choose Add model and choose the model you imported earlier.

- Choose Add function and choose the Lambda function you created earlier.

- Choose Create.

- Select your newly created project and choose Deploy to device.

- On the Target device page, select your device from the list.

- On the Review and deploy page, choose Deploy.

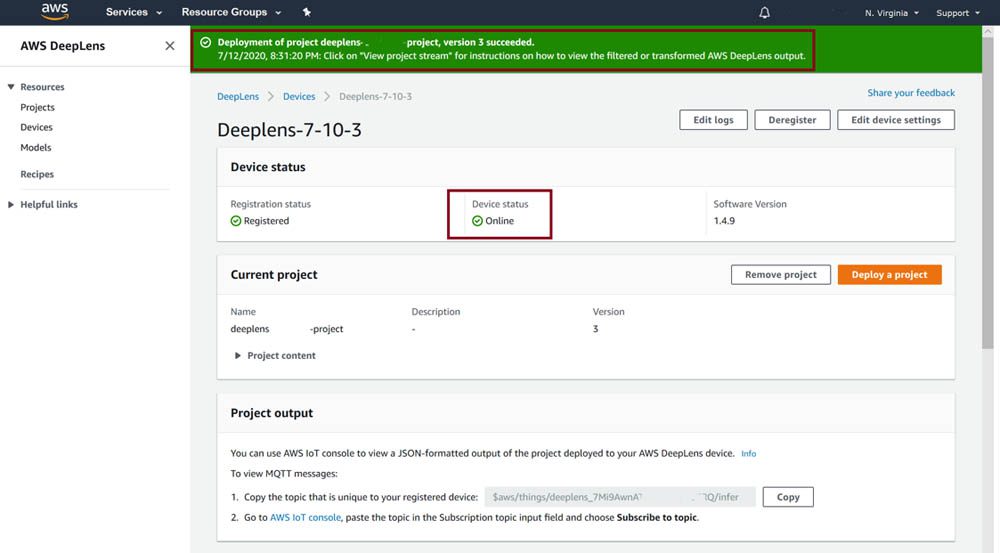

The deployment can take up to 5 minutes to complete, depending on the speed of the network your AWS DeepLens is connected to. When the deployment is complete, you should see a green banner message that the deployment succeeded.

To verify that the project was deployed successfully, you can check the text prediction results sent to the cloud via AWS IoT Greengrass. For instructions, see Using the AWS IoT Greengrass Console to View the Output of Your Custom Trained Model (Text Output).

In addition to the text results, you can view the pose detection results overlaid on top of your AWS DeepLens live video stream. For instructions, see Viewing AWS DeepLens Output Streams.

Sending results from AWS DeepLens to a data stream

In this section, you learn how to send messages from AWS DeepLens to a Kinesis data stream by configuring an AWS IoT rule.

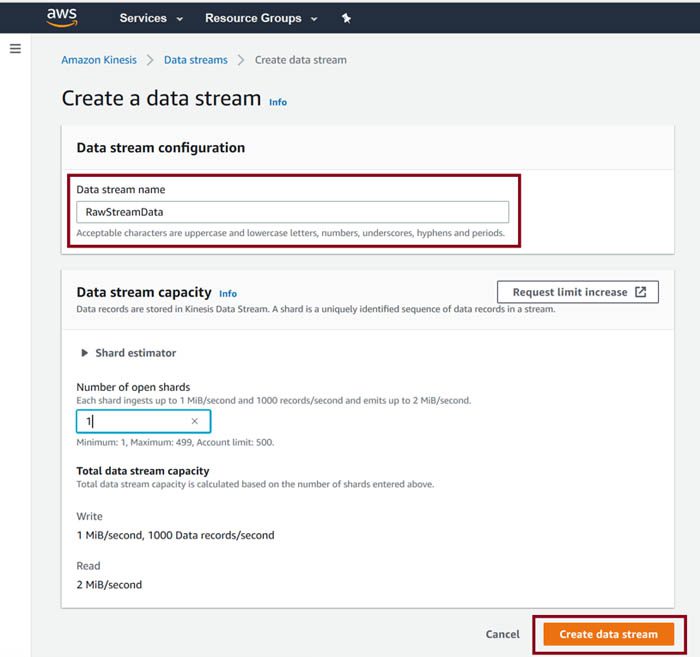

- On the Kinesis console, create a new data stream.

- For Data stream name, enter a name.

- For Number of shards, choose 1.

- Choose Create data stream.

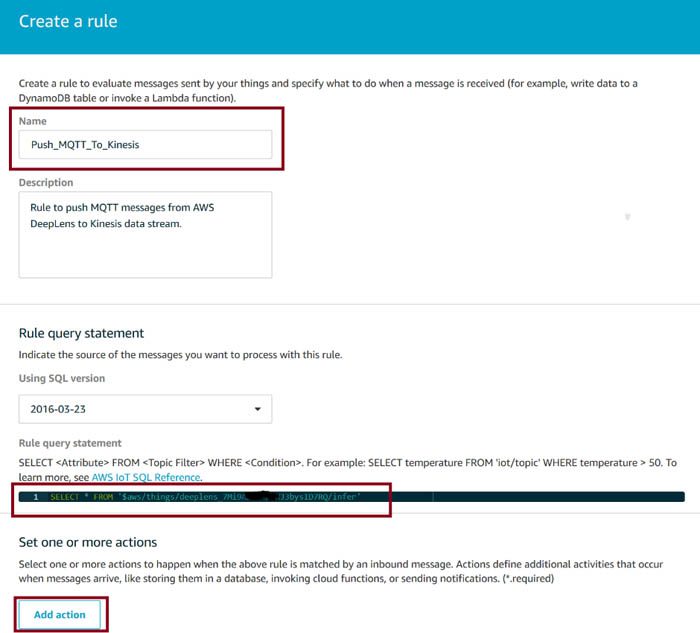

- On the AWS IoT console, under Act, choose Rules.

- Choose Create to set up a rule to push MQTT messages from AWS DeepLens to the newly created data stream.

- On the Create a rule page, enter a name for your rule.

- For Rule query statement, enter the DeepLens device MQTT topic.

- Choose Add action.

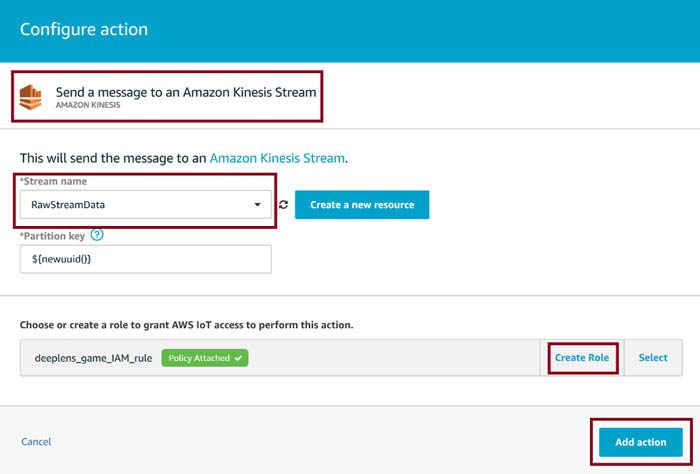

- Choose Send a message to an Amazon Kinesis Stream.

- Choose Configuration.

- Choose the data stream you created earlier.

- For Partition key, enter

${newuuid()}. - Choose Create a new role or Update role.

- Choose Add action.

- Choose Create rule to finish the setup.

Now that the rule is set up, MQTT messages are loaded into the data stream.

Amazon Kinesis Data Streams is not currently available in the AWS Free Tier, which offers a free trial for a group of AWS services. For more information about the pricing of Amazon Kinesis Data Streams, see link.

We recommend that you delete the data stream after completing the tutorial because charges occur on an active data stream even when you aren’t sending and receiving messages.

Creating an Alexa skill

In this section, you first create a Lambda function that queries a data stream and returns the sketches detected by AWS DeepLens to Alexa. Then, you create a custom Alexa skill to start playing the game.

Creating a custom skill with Lambda

To create your custom skill in Lambda, complete the following steps:

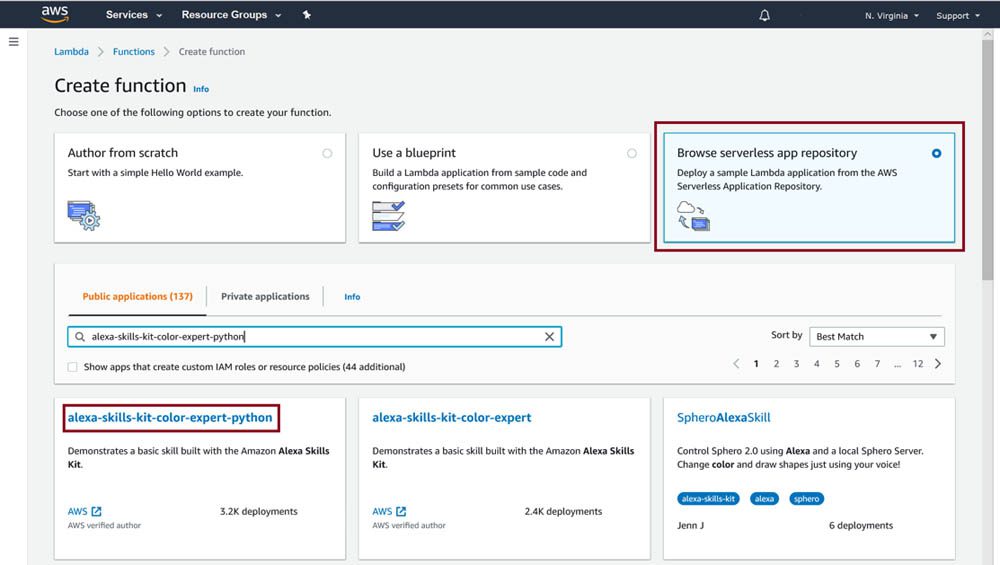

- On the Lambda console, create a new function.

The easiest way to create an Alexa skill is to create the function from the existing blueprints or serverless app repository provided by Lambda and overwrite the code with your own.

- For Create function, choose Browse serverless app repository.

- For Public repositories, search for and choose alexa-skills-kit-color-expert-python.

- Under Application settings, enter an application name and

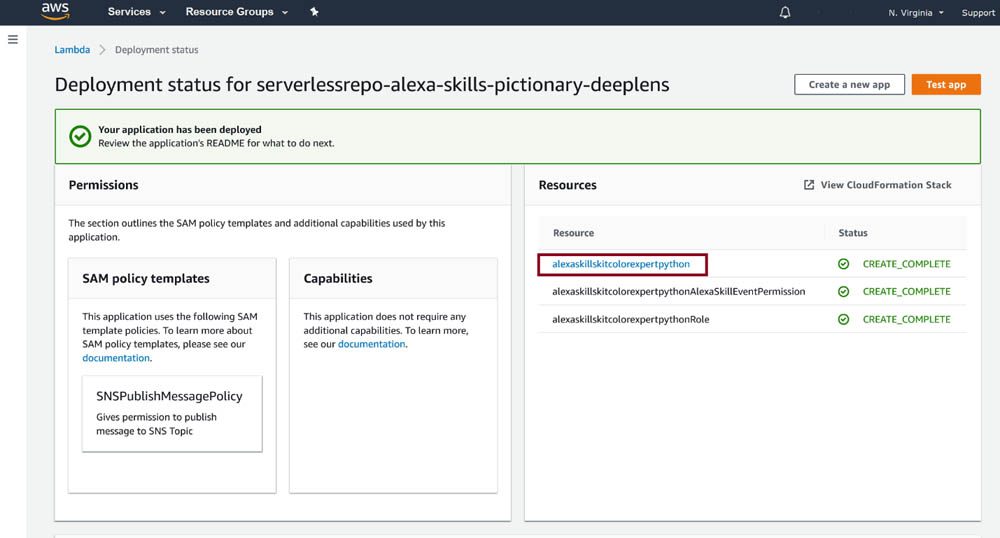

TopicNameParameter. - Choose Deploy.

- When the application has been deployed, open the Python file.

- Download the alexa-lambda-function.py file onto your computer.

- Copy the Python code from the file and replace the sample code in the

lambda_function.pyfile in the Function code section.

This function includes the entire game logic, reads data from the data stream, and returns the result to Alexa. Be sure to change the Region from the default (us-east-1) if you’re in a different Region. See the following code:

- Set the Timeout value to 20 seconds.

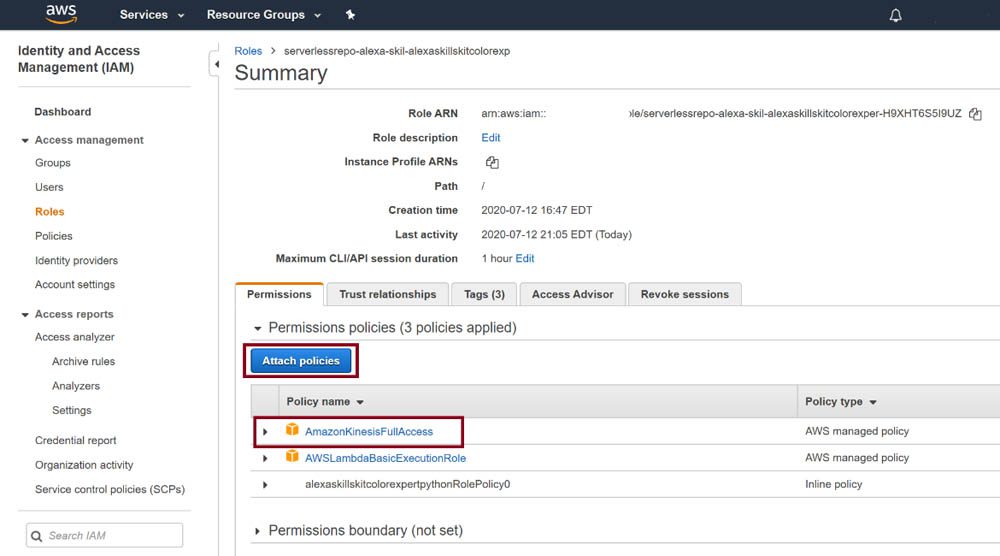

You now need to give your Lambda function IAM permissions to read data from the data stream.

- In your Lambda function editor, choose Permissions.

- Choose the Role name under the Execution role.

You’re directed to the IAM role editor.

- In the editor, choose Attach policies.

- Enter

Kinesisand choose AmazonKinesisFullAccess. - Choose Attach policy.

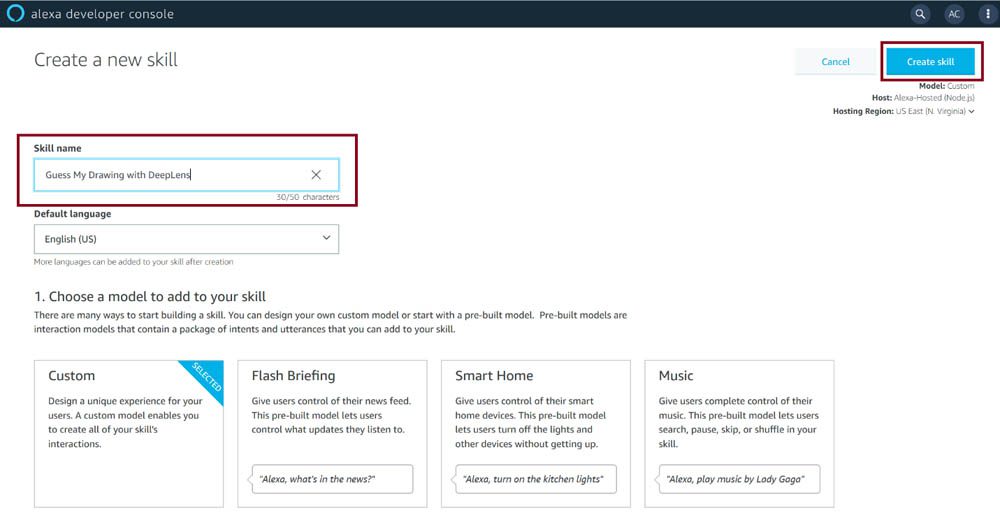

Creating a custom skill to play the game

To create your second custom skill to start playing the game, complete the following steps:

- Log in to the Alexa Developer Console.

- Create a new custom Alexa skill.

- On the Create a new skill page, for Skill name, enter a skill name

- For Choose a model to add to your skill, choose Custom.

- For Choose a method to host your skill’s backend resources, choose Provision your own.

- Choose Create skill.

- On the next page, choose the default template to add your skill.

- Choose Continue with template.

After about 1–2 minutes, your skill appears on the console.

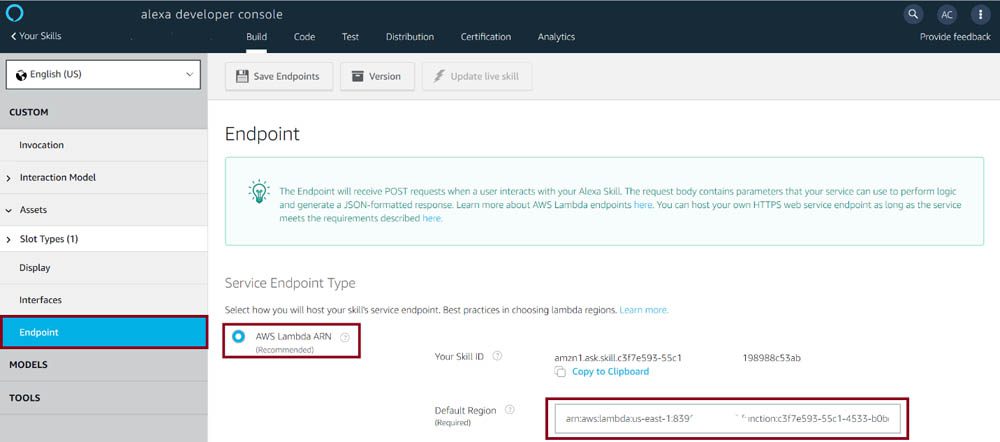

- In the Endpoint section, enter the Amazon Resource Name (ARN) of the Lambda function created for the Alexa skill in the previous step.

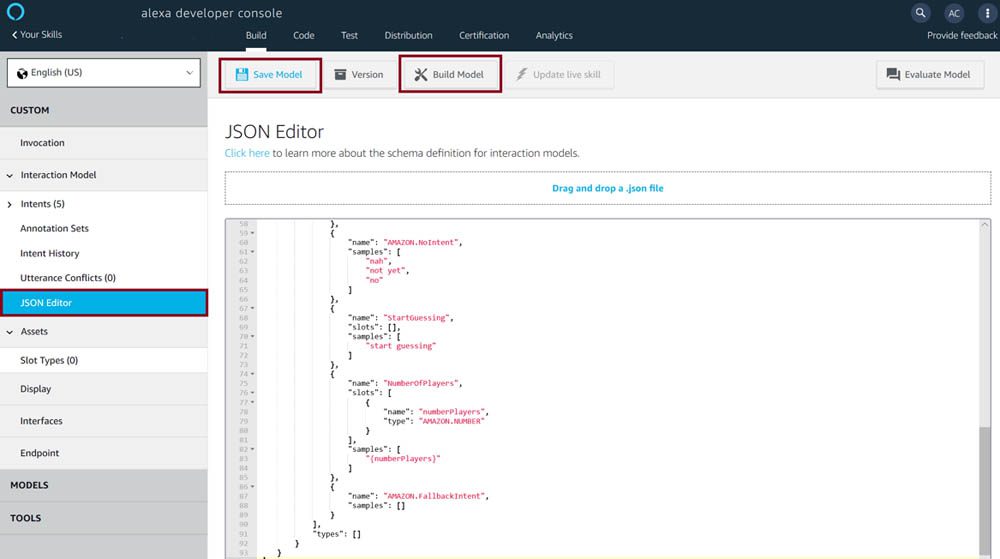

- Download alexa-skill-json-code.txt onto your computer.

- Copy the code from the file and paste in the Alexa skill JSON editor to automatically configure intents and sample utterances for the custom skill.

In the Alexa architecture, intents can be thought of as distinct functions that a skill can perform. Intents can take arguments that are known here as slots.

- Choose Save Model to apply the changes.

- Choose Build Model.

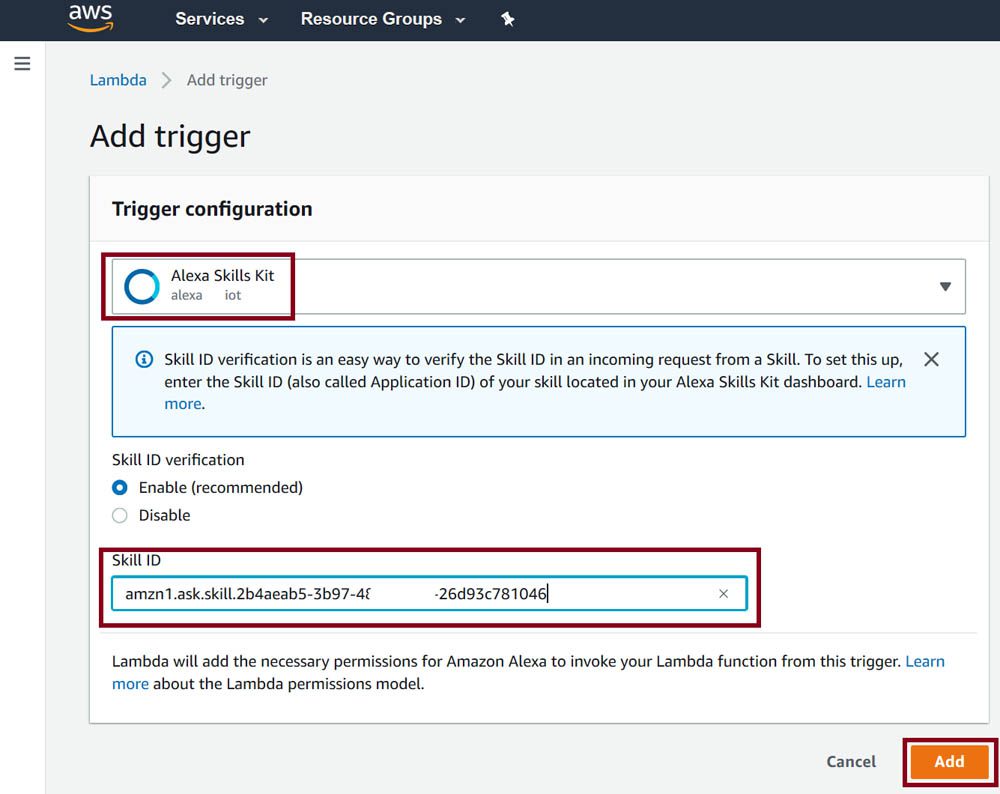

- On the Lambda console, open the Lambda function for the Alexa skill you created earlier.

You need to enable the skill by adding a trigger to the Lambda function.

- Choose Add trigger.

- Choose Alexa Skills Kit.

- For Skill ID, enter the ID for the Alexa skill you created.

- Choose Add.

Testing the skill

Your Alexa skill is now ready to tell you the drawings detected by AWS DeepLens. To test with an Alexa-enabled device (such as an Amazon Echo), register the device with the same email address you used to sign up for your developer account on the Amazon Developer Portal. You can invoke your skill with the wake word and your invocation name: “Alexa, Play Guess My Drawing with DeepLens.”

The language in your Alexa companion app should match with the language chosen in your developer account. Alexa considers English US and English UK to be separate languages.

Alternatively, the Test page includes a simulator that lets you test your skill without a device. For Skill testing is enabled in, choose Development. You can test your skill with the phrase, “Alexa, Play Guess My Drawing with DeepLens.”

Windows 10 users can download the free Alexa app from the Microsoft Store and interact with it from their PC.

For more information on testing your Alexa skill, see Test Your Skill. For information on viewing the logs, check Amazon CloudWatch logs for AWS Lambda.

The following diagram shows the user interaction flow of our game.

The following images show the prediction outputs of our model with the name of an object and its probability. You need to have your AWS DeepLens located in front of a rectangular-shaped whiteboard or a piece of white paper to ensure that the edges are visible in the frame.

Conclusion

In this post, you learned about the preprocessing algorithm to isolate a drawing area and how to deploy a pre-trained model onto AWS DeepLens to recognize sketches. Next, you learned how to send results from AWS IoT to Kinesis Data Streams. Finally, you learned how to create a custom Alexa skill with Lambda to retrieve the detected objects in the data stream and return the results to players via Alexa.

For other tutorials, samples, and project ideas with AWS DeepLens, see AWS DeepLens Recipes.

About the Authors

Amit Choudhary is a Senior Product Manager Technical Intern. He loves to build products that help developers learn about various machine learning techniques in a fun and hands-on manner.

Amit Choudhary is a Senior Product Manager Technical Intern. He loves to build products that help developers learn about various machine learning techniques in a fun and hands-on manner.

Phu Nguyen is a Product Manager for AWS DeepLens. He builds products that give developers of any skill level an easy, hands-on introduction to machine learning.

Phu Nguyen is a Product Manager for AWS DeepLens. He builds products that give developers of any skill level an easy, hands-on introduction to machine learning.

Brian Nguyen is an undergraduate senior in majoring Electrical Engineering with a concentration of Digital Signal & Image Processing at University of Washington Seattle.

Hanwen Guo is an undergraduate senior majoring in Electrical Engineering with a concentration of Digital Signal & Image Processing at University of Washington Seattle.

Jack Ma is an undergraduate senior majoring in Electrical Engineering with a concentration of Embedded Systems at University of Washington Seattle.

Sairam Tabibu is pursuing a master’s degree in Electrical Engineering with an interest in machine learning/deep learning at University of Washington Seattle

Aaron Liang is pursuing a master’s degree in Electrical Engineering with an interest in software engineering at University of Washington Seattle

Tags: Archive

Leave a Reply