Build more effective conversations on Amazon Lex with confidence scores and increased accuracy

In the rush of our daily lives, we often have conversations that contain ambiguous or incomplete sentences. For example, when talking to a banking associate, a customer might say, “What’s my balance?” This request is ambiguous: it is unclear whether the customer wants the balance on her credit card or her checking account. Perhaps she only has a checking account with the bank. The agent can provide a good customer experience by looking up the account details and identifying that the customer is referring to the checking account. In the customer service domain, agents often have to resolve such uncertainty in interpreting the user’s intent by using the contextual data available to them. Bots face the same ambiguity and need to determine the correct intent by supplementing the user’s request with the contextual data available about the customer.

Today, we’re launching natural language understanding improvements and confidence scores on Amazon Lex. We continuously improve the service based on customer feedback and advances in research. These improvements enable better intent classification accuracy. You can also achieve better detection of user inputs not included in the training data (out-of-domain utterances). In addition, we provide confidence score support to indicate the likelihood of a match with a certain intent. The confidence scores for the top five intents are surfaced as part of the response. This better equips you to handle ambiguous scenarios such as the one we described. In such cases, where two or more intents are matched with reasonably high confidence, intent classification confidence scores can help you determine when you need to use business logic to clarify the user’s intent. If the user only has a credit card, then you can trigger the intent to surface the balance on the credit card. Alternatively, if the user has both a credit card and a checking account, you can pose a clarification question such as “Is that for your credit card or checking account?” before proceeding with the query. You now have better insights to manage the conversation flow and create more effective conversations.

This post shows how you can use these improvements with confidence scores to trigger the best response based on business knowledge.

Building a Lex bot

This post uses the following conversations to model a bot.

If the customer has only one account with the bank:

User: What’s my balance?

Agent: Please enter your ATM card PIN to confirm

User: 5555

Agent: Your checking account balance is $1,234.00

If the customer has multiple types of accounts, the conversation takes an alternate path:

User: What’s my balance?

Agent: Sure. Is this for your checking account, or credit card?

User: My credit card

Agent: Please enter your card’s CVV number to confirm

User: 1212

Agent: Your credit card balance is $3,456.00

The first step is to build an Amazon Lex bot with intents to support transactions such as balance inquiry, funds transfer, and bill payment. The GetBalanceCreditCard, GetBalanceChecking, and GetBalanceSavings intents provide account balance information. The PayBills intent processes payments to payees, and TransferFunds enables the transfer of funds from one account to another. Lastly, you can use the OrderChecks intent to replenish checks. When a user makes a request that the Lex bot can’t process with any of these intents, the fallback intent is triggered to respond.

Deploying the sample Lex bot

To create the sample bot, perform the following steps. For this post, you create an Amazon Lex bot called BankingBot and an AWS Lambda function called BankingBot_Handler.

- Download the Amazon Lex bot definition and Lambda code.

- On the Lambda console, choose Create function.

- Enter the function name BankingBot_Handler.

- Choose the latest Python runtime (for example, Python 3.8).

- For Permissions, choose Create a new role with basic Lambda permissions.

- Choose Create function.

- When your new Lambda function is available, in the Function code section, choose Actions and Upload a .zip file.

- Choose the BankingBot.zip file that you downloaded.

- Choose Save.

- On the Amazon Lex console, choose Actions, Import.

- Choose the file

BankingBot.zipthat you downloaded and choose Import. - Select the

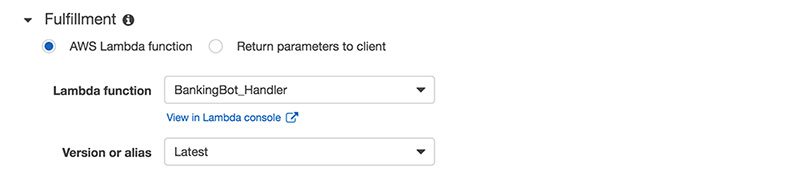

BankingBotbot on the Amazon Lex console. - In the Fulfillment section, for each of the intents, including the fallback intent (

BankingBotFallback), choose AWS Lambda function and choose theBankingBot_Handlerfunction from the drop-down menu.

- When prompted to Add permission to Lambda function, choose OK.

- When all the intents are updated, choose Build.

At this point, you should have a working Lex bot.

Setting confidence score thresholds

You’re now ready to set an intent confidence score threshold. This setting controls when Amazon Lex will default to Fallback Intent based on the confidence scores of intents. To configure the settings, complete the following steps:

- On the Amazon Lex console, choose Settings, and then choose General.

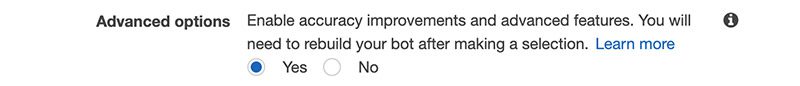

- For us-east-1, us-west-2, ap-southeast-2, or eu-west-1, scroll down to Advanced options and select Yes to opt in to the accuracy improvements and features to enable the confidence score feature.

These improvements and confidence score support are enabled by default in other Regions.

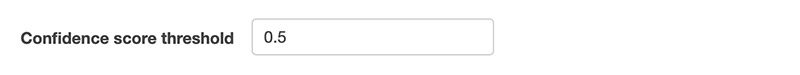

- For Confidence score threshold, enter a number between 0 and 1. You can choose to leave it at the default value of 0.4.

- Choose Save and then choose Build.

When the bot is configured, Amazon Lex surfaces the confidence scores and alternative intents in the PostText and PostContent responses.
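As a rough illustration, the following sketch reads the scores out of a dictionary shaped like a PostText response. The field names (intentName, nluIntentConfidence, alternativeIntents) are our understanding of the Lex runtime response and should be checked against the Amazon Lex documentation. The sample values are invented for illustration and are not output from the sample bot, and ranked_intents is a hypothetical helper.

```python
# Illustrative PostText-style response. The scores are invented values,
# not output captured from the BankingBot sample.
sample_response = {
    "intentName": "GetBalanceChecking",
    "nluIntentConfidence": {"score": 0.75},
    "alternativeIntents": [
        {"intentName": "GetBalanceCreditCard", "nluIntentConfidence": {"score": 0.72}},
        {"intentName": "GetBalanceSavings", "nluIntentConfidence": {"score": 0.41}},
    ],
}


def ranked_intents(response):
    """Return (intent name, score) pairs, highest confidence first.

    Hypothetical helper: it assumes the response carries the top intent at
    the top level and the alternatives under "alternativeIntents".
    """
    candidates = [(response["intentName"], response["nluIntentConfidence"]["score"])]
    for alt in response.get("alternativeIntents", []):
        candidates.append((alt["intentName"], alt["nluIntentConfidence"]["score"]))
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


for name, score in ranked_intents(sample_response):
    print(f"{name}: {score:.2f}")
```

Listing the intents this way makes it easy to see when the top two scores are close together.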

Using a Lambda function and confidence scores to identify the user intent

When the user makes an ambiguous request such as “Can I get my account balance?” the Lambda function parses the list of intents that Amazon Lex returned. If multiple intents are returned, the function checks whether the top intents have similar scores as defined by an AMBIGUITY_RANGE value. For example, if one intent has a confidence score of 0.95 and another has a score of 0.65, the first intent is probably correct. However, if one intent has a score of 0.75 and another has a score of 0.72, you may be able to discriminate between the two intents using business knowledge in your application. In our use case, if the customer holds multiple accounts, the function is configured to respond with a clarification question such as, “Is this for your credit card or for your checking account?” But if the customer holds only a single account (for example, checking), the balance for that account is returned.
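The comparison described above can be sketched in a few lines. The value 0.1 for AMBIGUITY_RANGE is an assumption chosen for illustration (the sample bot’s actual setting may differ), and is_ambiguous is a hypothetical helper name.

```python
# Assumed value for illustration; the sample bot's actual setting may differ.
AMBIGUITY_RANGE = 0.1


def is_ambiguous(top_score, other_score, ambiguity_range=AMBIGUITY_RANGE):
    """Return True when the runner-up score is within range of the top score."""
    return (top_score - other_score) <= ambiguity_range


# The two examples from the text above:
print(is_ambiguous(0.95, 0.65))  # False: the top intent is a clear winner
print(is_ambiguous(0.75, 0.72))  # True: too close to call without business logic
```

A wider range sends more requests through the clarification path, and a narrower one trusts the top intent more often.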

When you use confidence scores, Amazon Lex returns the most likely intent and up to four alternative intents with their associated scores in each response. If all the confidence scores are less than the threshold you defined, Amazon Lex includes the AMAZON.FallbackIntent intent, the AMAZON.KendraSearchIntent intent, or both. You can use the default threshold or you can set your own threshold value.

The Lambda code you downloaded when you deployed this sample bot implements this logic. You can adapt it for use with any Amazon Lex bot.

The Lambda function’s dispatcher function forwards requests to handler functions, but for the GetBalanceCreditCard, GetBalanceChecking, and GetBalanceSavings intents, it forwards to determine_intent instead.
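A minimal sketch of that routing might look like the following. It is not the downloaded code: the handler functions are hypothetical stubs, and the event layout (currentIntent.name) is assumed from the Lambda input event format for Amazon Lex.

```python
# Intents that need disambiguation before they can be fulfilled.
BALANCE_INTENTS = {"GetBalanceCreditCard", "GetBalanceChecking", "GetBalanceSavings"}


# Hypothetical stub handlers; a real handler would return a Lex response.
def determine_intent(event):
    return "determine_intent"


def pay_bills(event):
    return "pay_bills"


def transfer_funds(event):
    return "transfer_funds"


def order_checks(event):
    return "order_checks"


def fallback(event):
    return "fallback"


HANDLERS = {
    "PayBills": pay_bills,
    "TransferFunds": transfer_funds,
    "OrderChecks": order_checks,
}


def dispatch(event):
    """Route a Lex event to its handler based on the current intent name."""
    intent_name = event["currentIntent"]["name"]
    if intent_name in BALANCE_INTENTS:
        # Balance requests may be ambiguous, so they are resolved first.
        return determine_intent(event)
    # Anything unrecognized goes to the fallback handler.
    return HANDLERS.get(intent_name, fallback)(event)
```

Keeping the balance intents in one set means a new balance-type intent only needs to be added in one place.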

The determine_intent function inspects the top intent as reported by Lex, as well as any alternative intents. If an alternative intent is valid for the user (based on their accounts), and is within the AMBIGUITY_RANGE of the top intent, it is added to a list of possible intents.
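The following sketch shows one way to build that list. Several details are assumptions rather than the sample’s actual code: the AMBIGUITY_RANGE value, the mapping from intents to account types, the possible_intents name, the choice to also check the top intent against the user’s accounts, and the nluIntentConfidenceScore field name in the Lambda event.

```python
AMBIGUITY_RANGE = 0.1  # assumed value for illustration

# Hypothetical mapping from each balance intent to the account type it needs.
INTENT_TO_ACCOUNT = {
    "GetBalanceCreditCard": "CreditCard",
    "GetBalanceChecking": "Checking",
    "GetBalanceSavings": "Savings",
}


def possible_intents(event, accounts, ambiguity_range=AMBIGUITY_RANGE):
    """Collect intents that are valid for the user and close to the top score."""
    top = event["currentIntent"]
    top_score = top["nluIntentConfidenceScore"]
    possible = []
    # Assumption: the top intent also has to match an account the user holds.
    if INTENT_TO_ACCOUNT.get(top["name"]) in accounts:
        possible.append(top["name"])
    for alt in event.get("alternativeIntents", []):
        valid = INTENT_TO_ACCOUNT.get(alt["name"]) in accounts
        close = (top_score - alt["nluIntentConfidenceScore"]) <= ambiguity_range
        if valid and close:
            possible.append(alt["name"])
    return possible


# Invented event for illustration.
sample_event = {
    "currentIntent": {"name": "GetBalanceChecking", "nluIntentConfidenceScore": 0.75},
    "alternativeIntents": [
        {"name": "GetBalanceCreditCard", "nluIntentConfidenceScore": 0.72},
        {"name": "GetBalanceSavings", "nluIntentConfidenceScore": 0.41},
    ],
}

print(possible_intents(sample_event, {"Checking", "CreditCard"}))
```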

If there is only one possible intent for the user, it is fulfilled (after first eliciting any missing slots).

If there are multiple possible intents, the function asks the user for clarification.

If there are no possible intents for the user, the fallback intent is triggered.
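These three outcomes can be summarized in a small decision function. This is a simplified sketch: next_action is a hypothetical name, and it returns plain labels, whereas a real Lambda function would build the corresponding Lex dialog responses (for example, eliciting missing slots before fulfilling).

```python
def next_action(possible):
    """Map the list of possible intents to the next conversational step."""
    if len(possible) == 1:
        # Exactly one candidate: fulfill it (after eliciting any missing slots).
        return {"action": "fulfill", "intent": possible[0]}
    if len(possible) > 1:
        # Several candidates: ask the user which one they meant.
        return {"action": "clarify", "options": list(possible)}
    # No candidate is valid for this user.
    return {"action": "fallback"}


print(next_action(["GetBalanceChecking"]))
print(next_action(["GetBalanceChecking", "GetBalanceCreditCard"]))
print(next_action([]))
```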

To test this, you can change the test user configuration in the code by changing the return value of the check_available_accounts function.
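The exact signature and return type of check_available_accounts in the downloaded code are not shown here, so the following is only a guess at its shape: a hard-coded lookup whose return line you swap to simulate different customers. The user_id parameter and the account type labels are assumptions.

```python
def check_available_accounts(user_id):
    """Return the account types held by the test user (hard-coded for testing)."""
    # Single-account customer: uncomment to test the direct-answer path.
    # return ["Checking"]
    # Multi-account customer: triggers the clarification question.
    return ["Checking", "CreditCard"]


accounts = check_available_accounts("test-user")
print(f"Test user holds {len(accounts)} account type(s): {', '.join(accounts)}")
```

With two account types returned, an ambiguous balance request should lead to the clarification question; with one, the bot should answer directly.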

You can see the Lex confidence scores in the Amazon Lex console, or in your Lambda function’s CloudWatch Logs.

Confidence scores can also be used to test different versions of your bot. For example, if you add new intents, utterances, or slot values, you can test the bot and inspect the confidence scores to see if your changes had the desired effect.

Conclusion

Although people aren’t always precise in their wording when they interact with a bot, we still want to provide them with a natural user experience. With natural language understanding improvements and confidence scores now available on Amazon Lex, you have additional information available to design a more intelligent conversation. You can couple the machine learning-based intent matching capabilities of Amazon Lex with your own business logic to zero in on your user’s intent. You can also use the confidence scores while testing during bot development, to determine if changes to the sample utterances for intents have the desired effect. These improvements enable you to design more effective conversation flows. For more information about incorporating these techniques into your bots, see the Amazon Lex documentation.

About the Authors

Trevor Morse works as a Software Development Engineer at Amazon AI. He focuses on building and expanding the NLU capabilities of Lex. When not at a keyboard, he enjoys playing sports and spending time with family and friends.

Brian Yost is a Senior Consultant with the AWS Professional Services Conversational AI team. In his spare time, he enjoys mountain biking, home brewing, and tinkering with technology.

As a Product Manager on the Amazon Lex team, Harshal Pimpalkhute spends his time trying to get machines to engage (nicely) with humans.
