Relevance tuning with Amazon Kendra

Amazon Kendra is a highly accurate and easy-to-use enterprise search service powered by machine learning (ML). As your users begin to perform searches using Amazon Kendra, you can fine-tune which search results they receive. For example, you might want to prioritize results from certain data sources that are more actively curated and therefore more authoritative. Or if your users frequently search for documents like quarterly reports, you may want to display the more recent quarterly reports first.

Relevance tuning allows you to change how Amazon Kendra processes the importance of certain fields or attributes in search results. In this post, we walk through how you can manually tune your index to achieve the best results.

It’s important to understand the three main response types of Amazon Kendra: matching to FAQs, reading comprehension to extract suggested answers, and document ranking. Relevance tuning impacts document ranking. Additionally, relevance tuning is just one of many factors that impact search results for your users. You can’t change specific results, but you can influence how much weight Amazon Kendra applies to certain fields or attributes.
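To make the three response types concrete, here is a minimal sketch that groups the items in a Query API response by type. It assumes the `Type` values `QUESTION_ANSWER`, `ANSWER`, and `DOCUMENT` on each result item; the sample response is hand-written for illustration and is not real output.

```python
from collections import defaultdict

# Result item types from the Query API, mapped to the three response types.
RESPONSE_TYPES = {
    "QUESTION_ANSWER": "FAQ match",
    "ANSWER": "suggested answer",
    "DOCUMENT": "ranked document",
}


def group_by_response_type(query_response):
    """Group result titles by response type."""
    groups = defaultdict(list)
    for item in query_response.get("ResultItems", []):
        label = RESPONSE_TYPES.get(item.get("Type"), "other")
        title = item.get("DocumentTitle", {}).get("Text", "")
        groups[label].append(title)
    return dict(groups)


# Hand-written sample shaped like a Query response (titles are placeholders).
sample_response = {
    "ResultItems": [
        {"Type": "ANSWER", "DocumentTitle": {"Text": "Whitepaper A"}},
        {"Type": "DOCUMENT", "DocumentTitle": {"Text": "Blog post B"}},
        {"Type": "DOCUMENT", "DocumentTitle": {"Text": "Blog post C"}},
    ]
}
grouped = group_by_response_type(sample_response)
```

Only the items in the "ranked document" group are affected by relevance tuning.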

Faceting

Because you’re tuning based on fields, you need to have those fields faceted in your index. For example, if you want to boost the signal of the author field, you need to make the author field a searchable facet in your index. For more information about adding facetable fields to your index, see Creating custom document attributes.
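As a rough sketch of what making a field facetable looks like through the API, the following builds one entry for the `DocumentMetadataConfigurationUpdates` parameter of `update_index`. The field name `Author` is an assumption for illustration, and the `kendra` argument is a boto3 Kendra client that you create yourself.

```python
def facetable_string_field(name):
    """Build one DocumentMetadataConfigurationUpdates entry for a string field."""
    return {
        "Name": name,
        "Type": "STRING_VALUE",
        "Search": {
            "Facetable": True,
            "Searchable": True,
            "Displayable": True,
        },
    }


def make_field_facetable(kendra, index_id, name):
    """Apply the update using a boto3 Kendra client supplied by the caller."""
    return kendra.update_index(
        Id=index_id,
        DocumentMetadataConfigurationUpdates=[facetable_string_field(name)],
    )


# "Author" is an assumed field name; use the name defined in your own index.
author_update = facetable_string_field("Author")
```

Calling `make_field_facetable(boto3.client("kendra"), "<your-index-id>", "Author")` would send the update; nothing is sent until you call it.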

Performing relevance tuning

You can perform relevance tuning in several different ways, such as on the AWS Management Console through the Amazon Kendra search console or with the Amazon Kendra API. You can also use several different types of fields when tuning:

- Date fields – Boost more recent results

- Number fields – Amplify content based on number fields, such as total view counts

- String fields – Elevate results based on string fields, for example those that are tagged as coming from a more authoritative data source
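The field types above map to different settings in the API's `Relevance` structure. The table below reflects our reading of the API reference and should be checked against it; `check_relevance` is a hypothetical helper, not part of any SDK.

```python
# Relevance settings that apply to each attribute type (our reading of the
# API reference). Importance (1-10) applies to every type.
RELEVANCE_OPTIONS = {
    "DATE_VALUE": {"Importance", "Freshness", "Duration"},
    "LONG_VALUE": {"Importance", "RankOrder"},
    "STRING_VALUE": {"Importance", "ValueImportanceMap"},
}


def check_relevance(field_type, relevance):
    """Return the settings in `relevance` that do not apply to `field_type`."""
    allowed = RELEVANCE_OPTIONS[field_type]
    return sorted(set(relevance) - allowed)


# A numeric boost uses Importance and RankOrder, so nothing is flagged.
numeric_problems = check_relevance(
    "LONG_VALUE", {"Importance": 10, "RankOrder": "ASCENDING"}
)
# Freshness is a date setting, so it is flagged on a string field.
string_problems = check_relevance(
    "STRING_VALUE", {"Importance": 5, "Freshness": True}
)
```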

Prerequisites

This post requires you to complete the following prerequisites: set up your environment, upload the example dataset, and create an index.

Setting up your environment

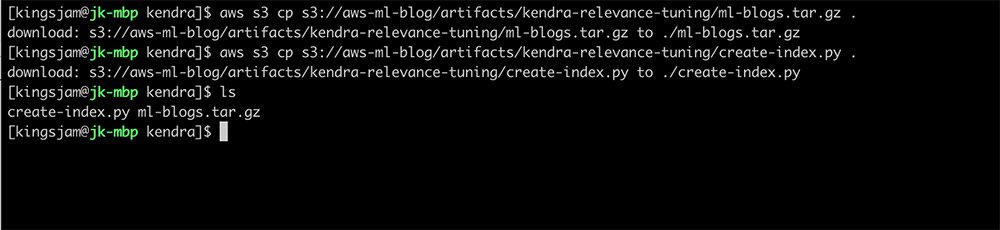

Ensure you have the AWS CLI installed. Open a terminal window and create a new working directory. From that directory, download the following files:

- The sample dataset, available from: s3://aws-ml-blog/artifacts/kendra-relevance-tuning/ml-blogs.tar.gz

- The Python script to create your index, available from: s3://aws-ml-blog/artifacts/kendra-relevance-tuning/create-index.py

The following screenshot shows how to download the dataset and the Python script.
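The post downloads these files with the AWS CLI. If you prefer Python, a roughly equivalent sketch with boto3 might look like the following. The helper names are our own, and `s3_client` is assumed to be a client you create with `boto3.client("s3")`.

```python
from urllib.parse import urlparse

SOURCE_URIS = [
    "s3://aws-ml-blog/artifacts/kendra-relevance-tuning/ml-blogs.tar.gz",
    "s3://aws-ml-blog/artifacts/kendra-relevance-tuning/create-index.py",
]


def split_s3_uri(uri):
    """Split an s3:// URI into (bucket, key, local file name)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3":
        raise ValueError(f"not an S3 URI: {uri}")
    key = parsed.path.lstrip("/")
    return parsed.netloc, key, key.rsplit("/", 1)[-1]


def download_all(s3_client, uris=SOURCE_URIS):
    """Download each object into the current working directory."""
    for uri in uris:
        bucket, key, filename = split_s3_uri(uri)
        s3_client.download_file(bucket, key, filename)
```

Running `download_all(boto3.client("s3"))` from your working directory would fetch both files.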

Uploading the dataset

For this use case, we use a dataset that is a selection of posts from the AWS Machine Learning Blog. If you want to use your own dataset, make sure you have a variety of metadata. You should ideally have varying string fields and date fields. In the example dataset, the different fields include:

- Author name – Author of the post

- Content type – Blog posts and whitepapers

- Topic and subtopic – The main topic is Machine Learning and subtopics include Computer Vision and ML at the Edge

- Content language – English, Japanese, and French

- Number of citations in scientific journals – These are randomly fabricated numbers for this post
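If you bring your own dataset, each document in an S3 data source can carry its fields in a companion metadata JSON file. The sketch below builds one such file body. `Type`, `PublishDate`, and `Citations` are the field names used later in this post; the other attribute names and all of the values are placeholders we made up.

```python
import json


def build_metadata(title, attributes):
    """Build the body of a metadata JSON file for one document."""
    return {"Title": title, "Attributes": dict(attributes)}


example = build_metadata(
    "Example post title",  # placeholder, not a real post from the dataset
    {
        "Author": "Jane Doe",                   # assumed attribute name
        "Type": "Blog",                         # assumed value for blog posts
        "Topic": "Machine Learning",            # assumed attribute name
        "Subtopic": "Computer Vision",          # assumed attribute name
        "Language": "English",                  # assumed attribute name
        "PublishDate": "2020-05-15T00:00:00Z",  # dates as ISO 8601 strings
        "Citations": 42,                        # made-up number
    },
)
metadata_json = json.dumps(example, indent=2)
```

Writing `metadata_json` next to the document it describes gives the data source the string, date, and number fields that relevance tuning relies on.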

To get started, create two Amazon Simple Storage Service (Amazon S3) buckets. Make sure to create them in the same Region as your index. Our index has two data sources.

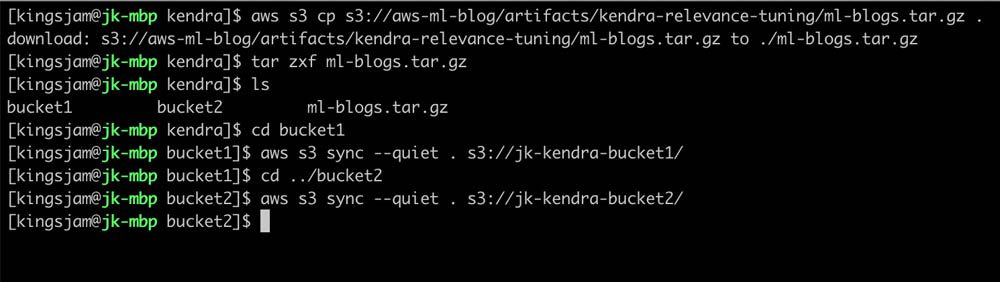

Within the ml-blogs.tar.gz tarball there are two directories. Extract the tarball and sync the contents of the first directory, ‘bucket1’ to your first S3 bucket. Then sync the contents of the second directory, ‘bucket2’, to your second S3 bucket.

The following screenshot shows how to download the dataset and upload it to your S3 buckets.
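For readers scripting this step, here is a minimal Python sketch of extracting the tarball and uploading each directory. It is not a full replacement for `aws s3 sync`: it uploads every file and never skips unchanged files or deletes remote ones. The function names are our own, and `s3_client` is assumed to come from `boto3.client("s3")`.

```python
import os
import tarfile


def extract_dataset(tar_path, dest_dir="."):
    """Extract the dataset tarball into dest_dir."""
    with tarfile.open(tar_path, "r:gz") as archive:
        archive.extractall(dest_dir)


def upload_plan(local_dir, bucket):
    """List (local path, bucket, key) for every file under local_dir."""
    plan = []
    for root, _dirs, files in os.walk(local_dir):
        for name in files:
            path = os.path.join(root, name)
            key = os.path.relpath(path, local_dir).replace(os.sep, "/")
            plan.append((path, bucket, key))
    return sorted(plan)


def upload_directory(s3_client, local_dir, bucket):
    """Upload every file under local_dir to the bucket."""
    for path, bucket_name, key in upload_plan(local_dir, bucket):
        s3_client.upload_file(path, bucket_name, key)
```

After `extract_dataset("ml-blogs.tar.gz")`, you would call `upload_directory` once for `bucket1` with your first bucket name and once for `bucket2` with your second.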

Creating the index

Using your preferred code editor, open the Python script ‘create-index.py’ that you downloaded previously. You will need to set your bucket name variables to the names of the Amazon S3 buckets you created earlier. Make sure you uncomment those lines.

Once this is done, run the script by typing python create-index.py. This does the following:

- Creates an AWS Identity and Access Management (IAM) role to allow your Amazon Kendra index to read data from Amazon S3 and write logs to Amazon CloudWatch Logs

- Creates an Amazon Kendra index

- Adds two Amazon S3 data sources to your index

- Adds new facets to your index, which allows you to search based on the different fields in the dataset

- Initiates a data source sync job
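We have not reproduced `create-index.py` here, but the index, data source, and sync steps in the list above could be sketched roughly as follows. This is an illustration under assumptions, not the script itself: it takes an existing IAM role ARN instead of creating one, it leaves out the facet definitions, and the resource names are placeholders.

```python
import time


def wait_until_active(describe, poll_seconds=30, **ids):
    """Poll a describe_* call until the resource reports ACTIVE."""
    while True:
        status = describe(**ids)["Status"]
        if status == "ACTIVE":
            return
        if status == "FAILED":
            raise RuntimeError(f"resource failed: {ids}")
        time.sleep(poll_seconds)


def create_index_sketch(kendra, role_arn, bucket_names, index_name="ml-blogs-index"):
    """Create an index, add one S3 data source per bucket, and start syncs."""
    index_id = kendra.create_index(Name=index_name, RoleArn=role_arn)["Id"]
    # Index creation is asynchronous, so wait before adding data sources.
    wait_until_active(kendra.describe_index, Id=index_id)

    data_source_ids = []
    for number, bucket in enumerate(bucket_names, start=1):
        ds_id = kendra.create_data_source(
            Name=f"{index_name}-source-{number}",  # placeholder name
            IndexId=index_id,
            Type="S3",
            Configuration={"S3Configuration": {"BucketName": bucket}},
            RoleArn=role_arn,
        )["Id"]
        wait_until_active(kendra.describe_data_source, Id=ds_id, IndexId=index_id)
        kendra.start_data_source_sync_job(Id=ds_id, IndexId=index_id)
        data_source_ids.append(ds_id)
    return index_id, data_source_ids
```

Passing the client in as an argument keeps the function easy to exercise with a stub before you run it against a real account.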

Working with relevance tuning

Now that our data is properly indexed and our metadata is facetable, we can test different settings to understand how relevance tuning affects search results. In the following examples, we will boost based on several different attributes. These include the data source, document type, freshness, and popularity.

Boosting your authoritative data sources

The first kind of tuning we look at is based on data sources. Perhaps you have one data source that is well maintained and curated, and another with information that is less accurate and dated. You want to prioritize the results from the first data source so your users get the most relevant results when they perform searches.

When we created our index, we created two data sources. One contains all our blog posts—this is our primary data source. The other contains only a single file, which we’re treating as our legacy data source.

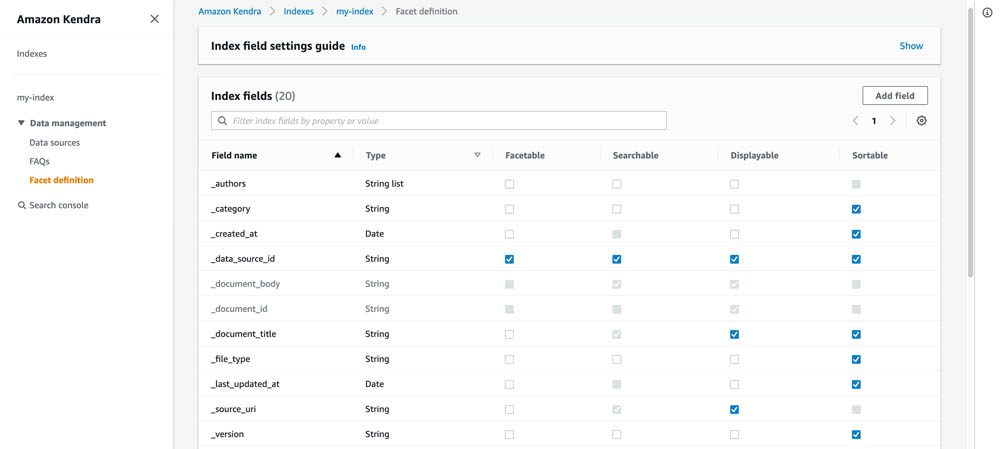

Our index creation script set the field _data_source_id to be facetable, searchable, and displayable. This is an essential step in boosting particular data sources.

The following screenshot shows the index fields of our Amazon Kendra index.
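You can also check these settings programmatically. The following sketch reads a `describe_index` response and reports whether a field has all three flags set. The sample response is hand-written to show the shape we expect, and the helper names are our own.

```python
REQUIRED_FLAGS = ("Facetable", "Searchable", "Displayable")


def search_settings(describe_index_response, field_name):
    """Return the Search settings for one field, or None if it is not defined."""
    configs = describe_index_response.get("DocumentMetadataConfigurations", [])
    for config in configs:
        if config["Name"] == field_name:
            return config.get("Search", {})
    return None


def has_all_flags(settings):
    """True if the field is facetable, searchable, and displayable."""
    return bool(settings) and all(settings.get(flag) for flag in REQUIRED_FLAGS)


# Hand-written sample shaped like a describe_index response.
sample = {
    "DocumentMetadataConfigurations": [
        {
            "Name": "_data_source_id",
            "Type": "STRING_VALUE",
            "Search": {"Facetable": True, "Searchable": True, "Displayable": True},
        }
    ]
}
ready = has_all_flags(search_settings(sample, "_data_source_id"))
```

With a real client, you would pass in the result of `kendra.describe_index(Id="<your-index-id>")` instead of `sample`.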

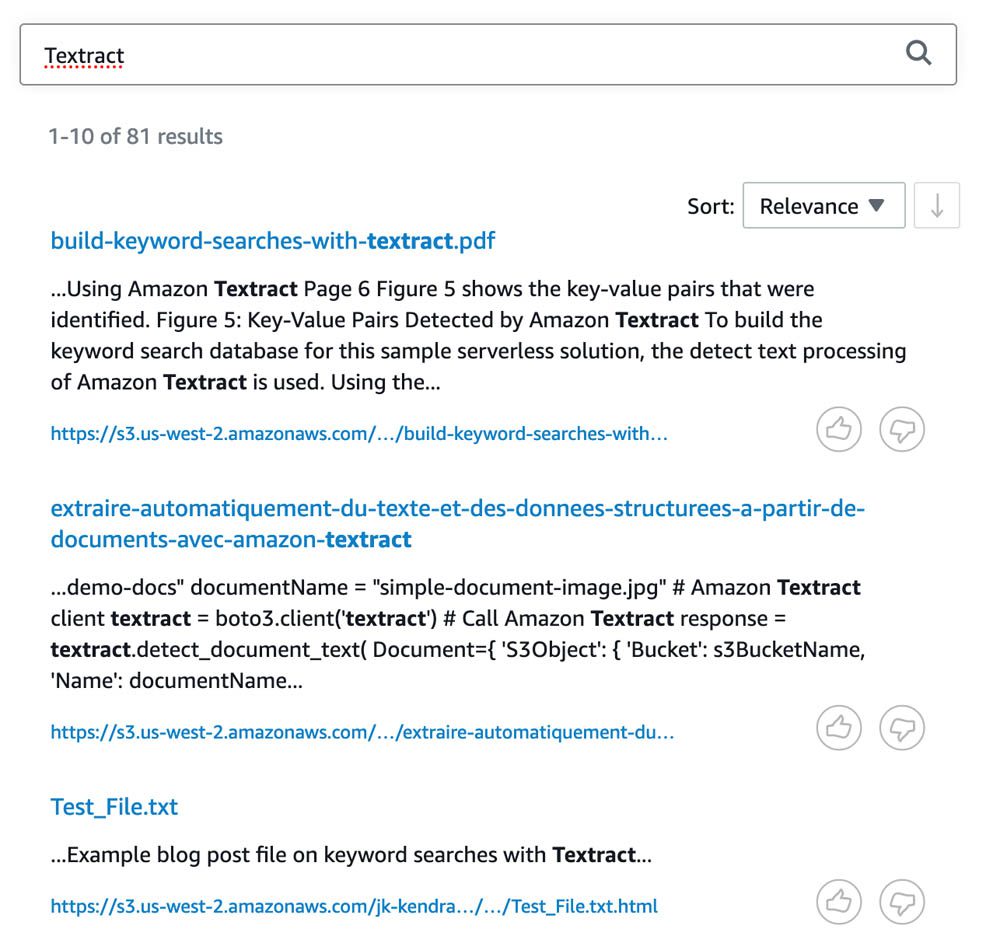

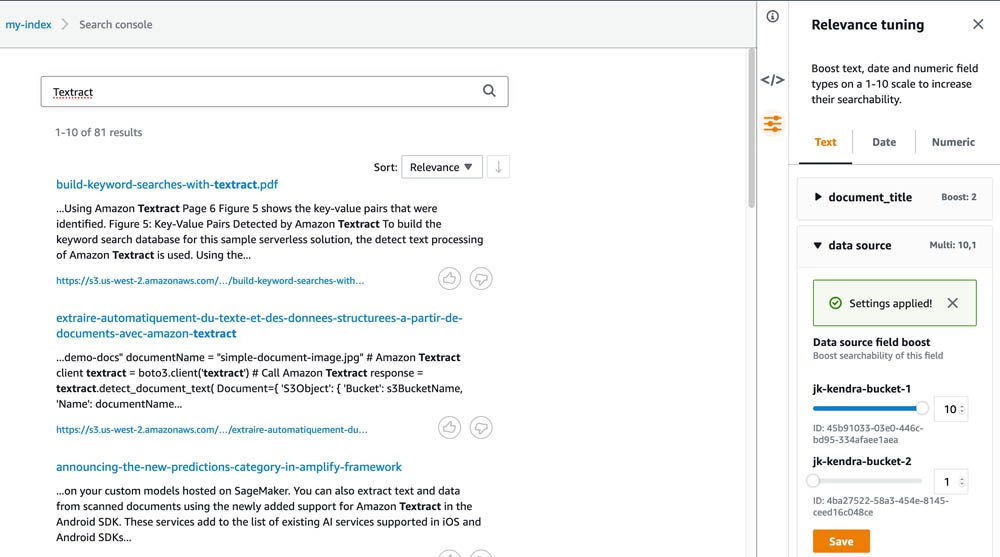

- On the Amazon Kendra search console, search for Textract.

Your results should reference posts about Amazon Textract, a service that can automatically extract text and data from scanned documents.

The following screenshot shows the results of a search for ‘Textract’.

Also in the results should be a file called Test_File.txt. This is a file from our secondary, less well-curated data source. Make a note of where this result appears in your search results. We want to de-prioritize this result and boost the results from our primary source.

- Choose Tuning to open the Relevance tuning panel.

- Under Text fields, expand data source.

- Drag the slider for your first data source to the right to boost the results from this source. For this post, we start by setting it to 8.

- Perform another search for Textract.

You should find that the file from the second data source has moved down the search rankings.

- Drag the slider all the way to the right, so that the boost is set to 10, and perform the search again.

You should find that the result from the secondary data source has disappeared from the first page of search results.

The following screenshot shows the relevance tuning panel with data source field boost applied to one data source, and the search results excluding the results from our secondary data source.

Although we used this approach with S3 buckets as our data sources, you can use it to prioritize any data source available in Amazon Kendra. You can boost the results from your Amazon S3 data lake and de-prioritize the results from your Microsoft SharePoint system, or vice-versa.
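The same boost can be expressed through the API. The sketch below assumes that the per-data-source sliders in the console correspond to entries in `ValueImportanceMap` on the `_data_source_id` field, which you should confirm against the API reference. The data source ID shown is a placeholder.

```python
def data_source_boost(value_boosts, importance=1):
    """Build an update_index entry that boosts specific data source IDs."""
    for ds_id, boost in value_boosts.items():
        if not 1 <= boost <= 10:
            raise ValueError(f"boost for {ds_id} must be between 1 and 10")
    return {
        "Name": "_data_source_id",
        "Type": "STRING_VALUE",
        "Relevance": {
            "Importance": importance,
            "ValueImportanceMap": dict(value_boosts),
        },
        # Keep the search settings that the index creation script applied.
        "Search": {"Facetable": True, "Searchable": True, "Displayable": True},
    }


def apply_boost(kendra, index_id, entry):
    """Send the update using a boto3 Kendra client supplied by the caller."""
    return kendra.update_index(
        Id=index_id, DocumentMetadataConfigurationUpdates=[entry]
    )


# "primary-data-source-id" is a placeholder; use the ID of your own data source.
entry = data_source_boost({"primary-data-source-id": 8})
```

You can look up the real IDs with `kendra.list_data_sources(IndexId="<your-index-id>")`, which returns a summary (including `Name` and `Id`) for each data source.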

Boosting certain document types

In this use case, we boost the results of our whitepapers over the results from the AWS Machine Learning Blog. We first establish a baseline search result.

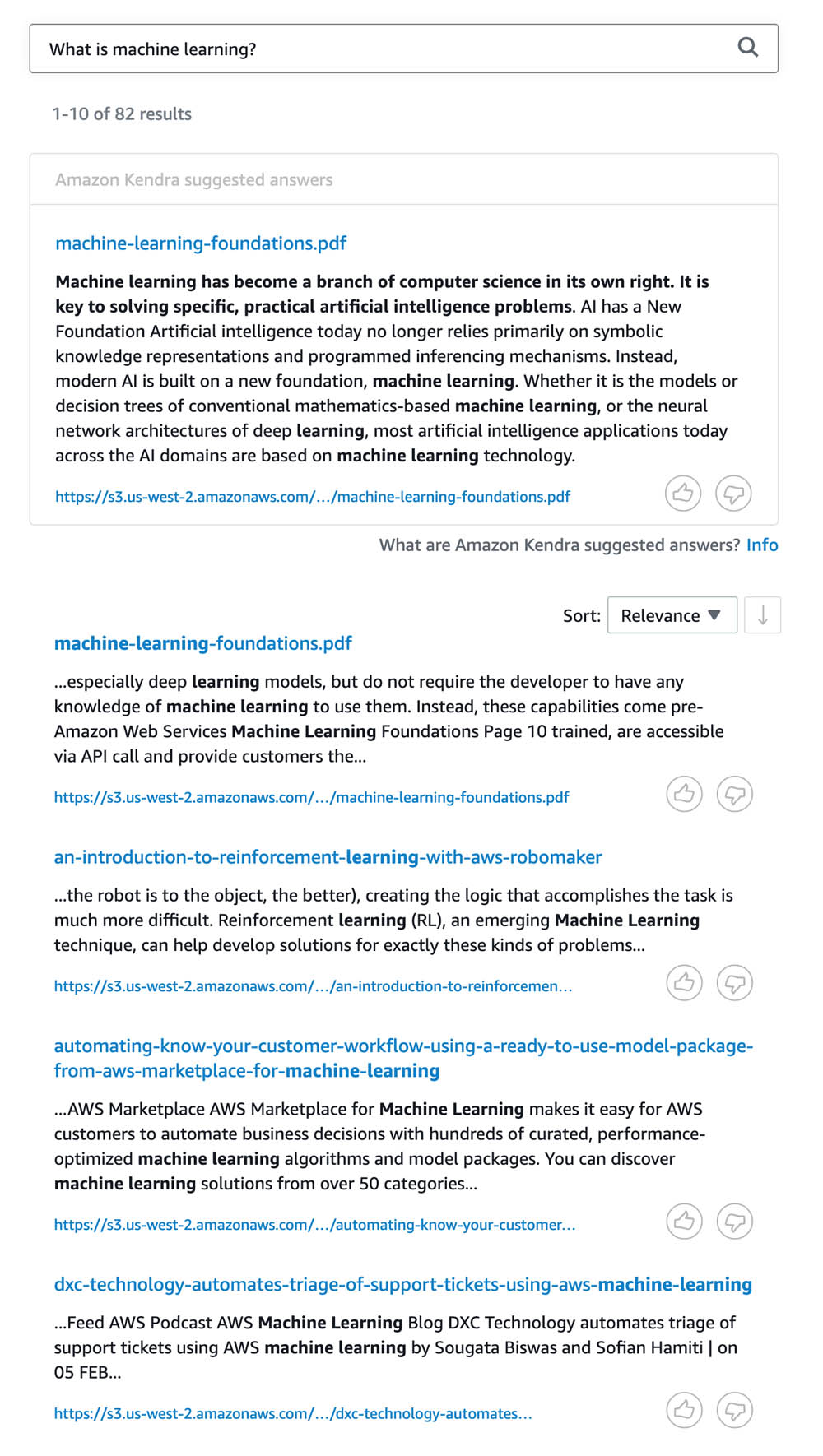

- Open the Amazon Kendra search console and search for What is machine learning?

Although the top result is a suggested answer from a whitepaper, the next results are likely from blog posts.

The following screenshot shows the results of a search for ‘What is machine learning?’

How do we influence Amazon Kendra to push whitepapers towards the top of its search results?

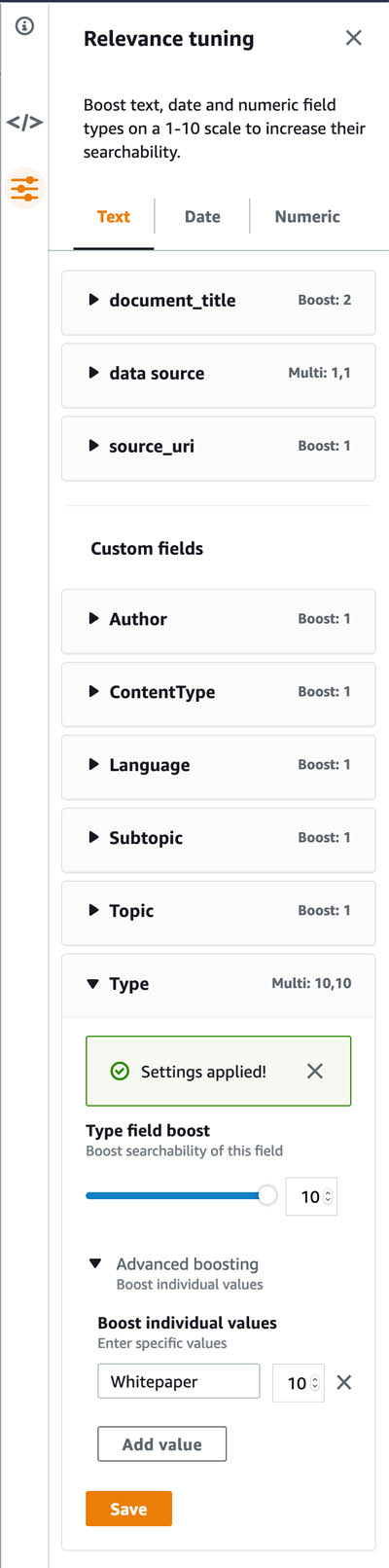

First, we want to tune the search results based on the content Type field.

- Open the Relevance tuning panel on the Amazon Kendra console.

- Under Custom fields, expand Type.

- Drag the Type field boost slider all the way to the right to set the relevancy of this field to 10.

We also want to boost the importance of a particular Type value, namely Whitepapers.

- Expand Advanced boosting and choose Add value.

- Whitepapers are indicated in our metadata by the field “Type”: “Whitepaper”, so enter a value of Whitepaper and set the value to 10.

- Choose Save.

The following screenshot shows the relevance tuning panel with type field boost applied to the ‘Whitepaper’ document type.

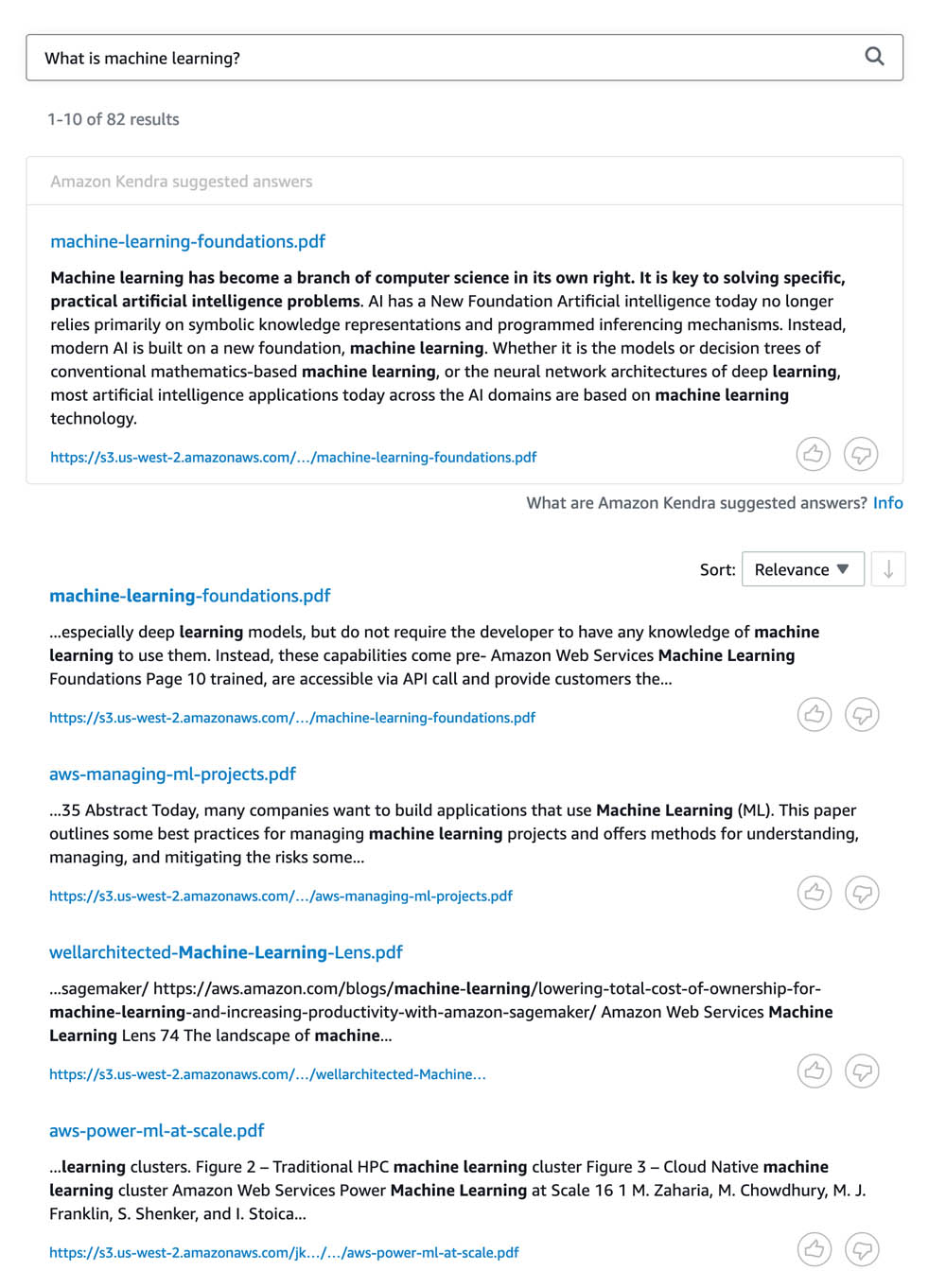

Wait for up to 10 seconds before you rerun your search. The top results should all be whitepapers, and blog post results should appear further down the list.

The following screenshot shows the results of a search for ‘What is machine learning?’ with type field boost applied.

- Return your Type field boost settings back to their normal values.
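If you want to experiment with a boost like this without saving it to the index, the Query API accepts `DocumentRelevanceOverrideConfigurations`, which override the index-level tuning for a single query. The following is a minimal sketch with helper names of our own; `kendra` is assumed to be a boto3 Kendra client.

```python
def type_override(value="Whitepaper", importance=10, value_importance=10):
    """Build a per-query relevance override for the Type field."""
    return {
        "Name": "Type",
        "Relevance": {
            "Importance": importance,
            "ValueImportanceMap": {value: value_importance},
        },
    }


def query_with_override(kendra, index_id, text, overrides):
    """Run one query with relevance overrides; index settings are unchanged."""
    return kendra.query(
        IndexId=index_id,
        QueryText=text,
        DocumentRelevanceOverrideConfigurations=overrides,
    )


override = type_override()
```

Because the override applies only to the query that carries it, there is nothing to reset afterward.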

Boosting based on document freshness

You might have a large archive of documents spanning multiple decades, but the more recent answers are more useful. For example, if your users ask, “Where is the IT helpdesk?” you want to make sure they’re given the most up-to-date answer. To achieve this, you can give a freshness boost based on date attributes.

In this use case, we boost the search results to include more recent posts.

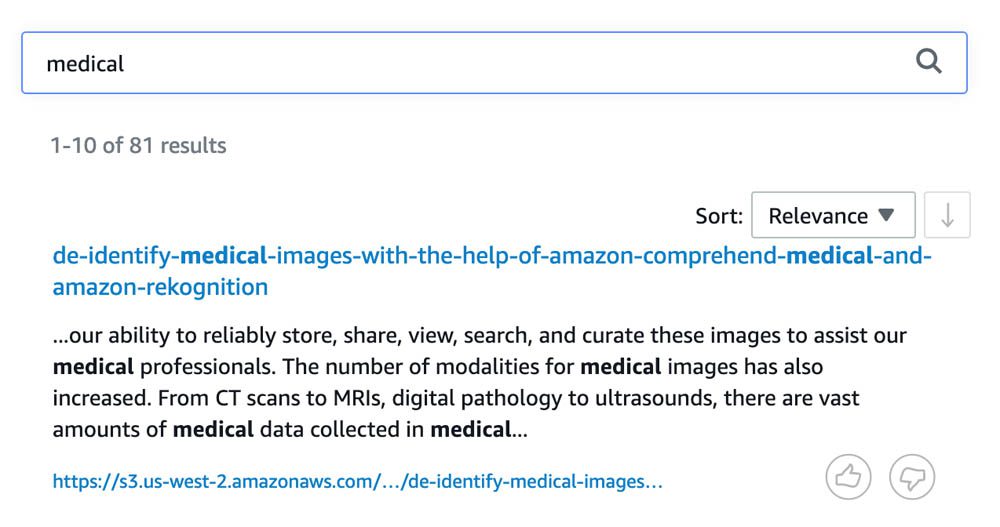

- On the Amazon Kendra search console, search for medical.

The first result is De-identify medical images with the help of Amazon Comprehend Medical and Amazon Rekognition, published March 19, 2019.

The following screenshot shows the results of a search for ‘medical’.

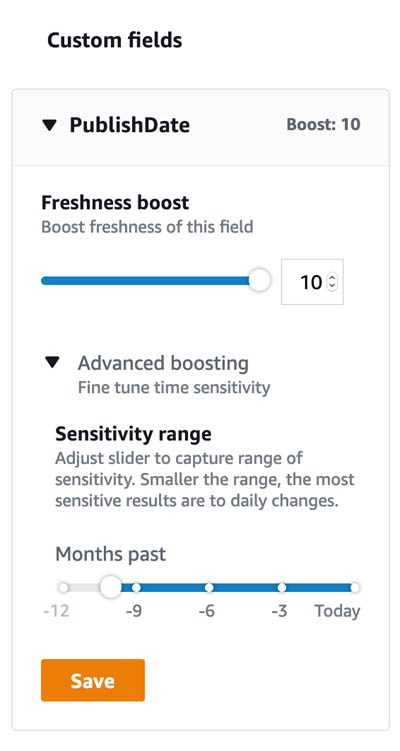

- Open the Relevance tuning panel again.

- On the Date tab, open Custom fields.

- Adjust the Freshness boost of PublishDate to 10.

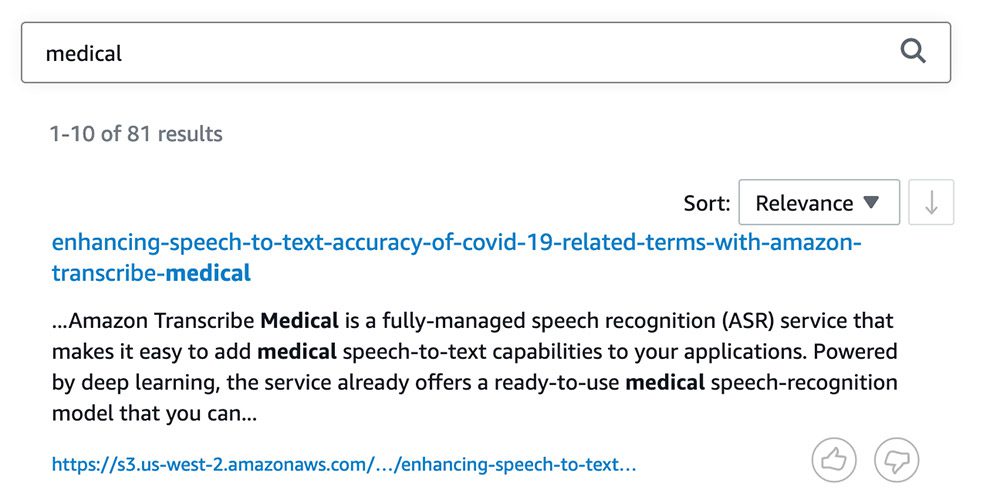

- Search again for medical.

This time the first result is Enhancing speech-to-text accuracy of COVID-19-related terms with Amazon Transcribe Medical, published May 15, 2020.

The following screenshot shows the results of a search for ‘medical’ with freshness boost applied.

You can also expand Advanced boosting to boost results from a particular period of time. For example, if you release quarterly business results, you might want to set the sensitivity range to the last 3 months. This boosts documents released in the last quarter so users are more likely to find them.

The following screenshot shows the freshness boost section of the relevance tuning panel, including the Sensitivity slider that sets the sensitivity range.
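In the API, a freshness boost is a `Relevance` setting on a date field. The sketch below assumes that the console's sensitivity range corresponds to the `Duration` setting, which is a number of seconds followed by `s`. It leaves out the `Search` block; whether omitting it changes the field's existing search settings is something to verify before applying the update.

```python
SECONDS_PER_DAY = 86400


def duration_string(days):
    """Format a number of days as a Duration value such as '86400s'."""
    return f"{days * SECONDS_PER_DAY}s"


def freshness_boost(field="PublishDate", importance=10, days=None):
    """Build an update_index entry that boosts recent documents."""
    relevance = {"Freshness": True, "Importance": importance}
    if days is not None:
        relevance["Duration"] = duration_string(days)
    return {"Name": field, "Type": "DATE_VALUE", "Relevance": relevance}


# Roughly the last quarter, approximated here as 90 days.
quarterly = freshness_boost(days=90)
```

You would pass `quarterly` in the `DocumentMetadataConfigurationUpdates` list of an `update_index` call.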

Boosting based on document popularity

The final scenario is tuning based on numerical values. In this use case, we assigned a random number to each post to represent the number of citations it received in scientific journals. (It’s important to reiterate that these are just random numbers, not actual citation numbers!) We want the most frequently cited posts to surface.

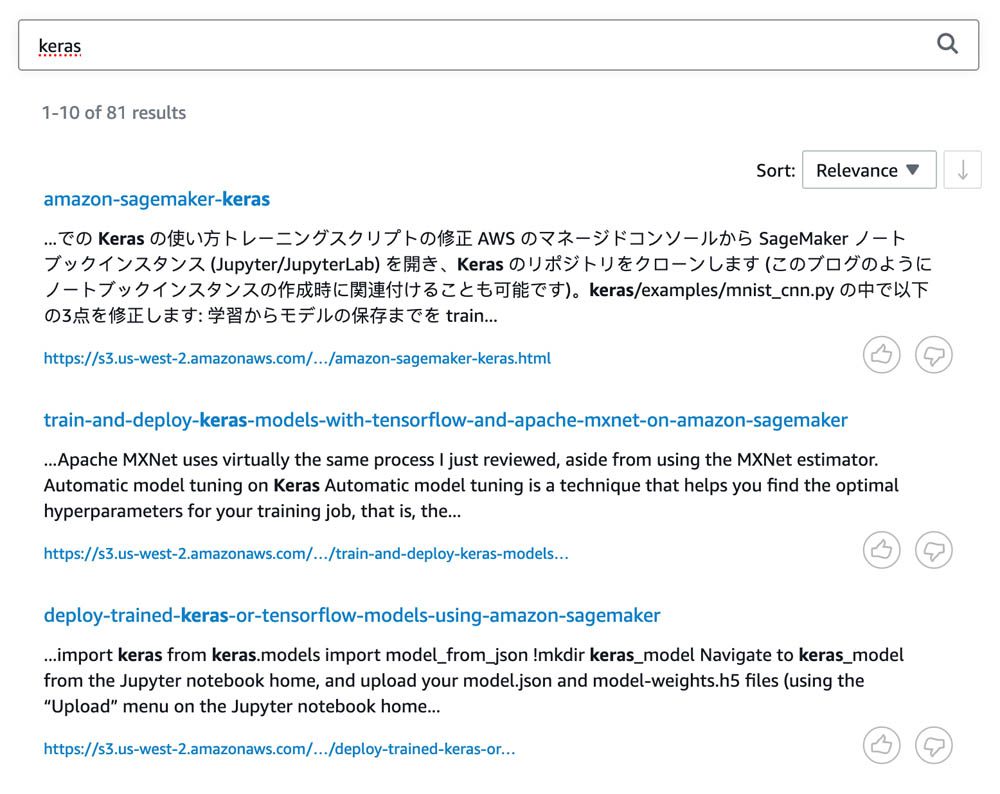

- Run a search for keras, which is the name of a popular library for ML.

You might see a suggested answer from Amazon Kendra, but the top results (and their synthetic citation numbers) are likely to include:

- Amazon SageMaker Keras – 81 citations

- Deploy trained Keras or TensorFlow models using Amazon SageMaker – 57 citations

- Train Deep Learning Models on GPUs using Amazon EC2 Spot Instances – 68 citations

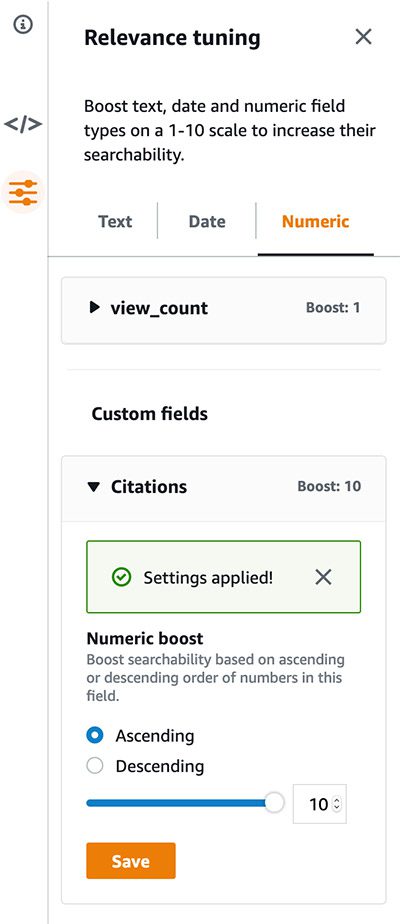

- On the Relevance tuning panel, on the Numeric tab, pull the slider for Citations all the way to 10.

- Select Ascending to boost the results that have more citations.

The following screenshot shows the relevance tuning panel with numeric boost applied to the Citations custom field.

- Search for keras again and see which results appear.

At the top of the search results are:

- Train and deploy Keras models with TensorFlow and Apache MXNet on Amazon SageMaker – 1,197 citations

- Using TensorFlow eager execution with Amazon SageMaker script mode – 2,434 citations

Amazon Kendra prioritized the results with more citations.
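The equivalent API setting for a numeric boost is `RankOrder` on a number field, where `ASCENDING` means higher values rank better. A minimal sketch, with a helper name of our own:

```python
def numeric_boost(field="Citations", importance=10, higher_is_better=True):
    """Build an update_index entry that boosts by a numeric field."""
    return {
        "Name": field,
        "Type": "LONG_VALUE",
        "Relevance": {
            "Importance": importance,
            # ASCENDING: larger numbers are better; DESCENDING: smaller are.
            "RankOrder": "ASCENDING" if higher_is_better else "DESCENDING",
        },
    }


citations_boost = numeric_boost()
```

Setting `higher_is_better=False` would suit fields where a low number matters more, such as a priority level.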

Conclusion

This post demonstrated how to use relevance tuning to adjust your users’ Amazon Kendra search results. We used a small and somewhat synthetic dataset to give you an idea of how relevance tuning works. Real datasets have a lot more complexity, so it’s important to work with your users to understand which types of search results they want prioritized. With relevance tuning, you can get the most value out of enterprise search with Amazon Kendra! For more information about Amazon Kendra, see AWS re:Invent 2019 – Keynote with Andy Jassy on YouTube, Amazon Kendra FAQs, and What is Amazon Kendra?

Thanks to Tapodipta Ghosh for providing the sample dataset and technical review. This post couldn’t have been written without his assistance.

About the Author

James Kingsmill is a Solution Architect in the Australian Public Sector team. He has a longstanding interest in helping public sector customers achieve their transformation, automation, and security goals. In his spare time, you can find him canyoning in the Blue Mountains near Sydney.

