Build alerting and human review for images using Amazon Rekognition and Amazon A2I

The volume of user-generated content (UGC) and third-party content has been increasing substantially in sectors like social media, ecommerce, online advertising, and photo sharing. However, such content needs to be reviewed to ensure that end-users aren’t exposed to inappropriate or offensive material, such as nudity, violence, adult products, or disturbing images. Today, some companies simply react to user complaints to take down offensive images, ads, or videos, whereas many employ teams of human moderators to review small samples of content. However, human moderators alone can’t scale to meet these needs, leading to a poor user experience or even a loss of brand reputation.

With Amazon Rekognition, you can automate or streamline your image and video analysis workflows using machine learning (ML). Amazon Rekognition provides an image moderation API that can detect unsafe or inappropriate content containing nudity, suggestiveness, violence, and more. You get a hierarchical taxonomy of labels that you can use to define your business rules, without needing any ML experience. Each detection by Amazon Rekognition comes with a confidence score between 0–100, which provides a measure of how confident the ML model is in its prediction.
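To make the label taxonomy more concrete, the following minimal sketch groups moderation labels under their parent label and drops anything below a confidence floor. It assumes the response shape of DetectModerationLabels (a ModerationLabels list whose entries carry Name, ParentName, and Confidence). The helper name group_by_parent, the 50 percent floor, and the sample values are illustrative assumptions, not part of the service.

```python
from collections import defaultdict


def group_by_parent(response, min_confidence=50.0):
    """Group labels at or above min_confidence under their parent label."""
    grouped = defaultdict(list)
    for label in response.get("ModerationLabels", []):
        if label["Confidence"] < min_confidence:
            continue
        # Top-level labels have an empty ParentName, so they are filed under their own name.
        parent = label.get("ParentName") or label["Name"]
        grouped[parent].append((label["Name"], label["Confidence"]))
    return dict(grouped)


# Hypothetical response fragment, for illustration only.
sample = {
    "ModerationLabels": [
        {"Name": "Suggestive", "ParentName": "", "Confidence": 91.2},
        {"Name": "Female Swimwear Or Underwear", "ParentName": "Suggestive", "Confidence": 91.2},
        {"Name": "Violence", "ParentName": "", "Confidence": 12.5},
    ]
}
print(group_by_parent(sample))
```

A business rule can then be written against the parent names (for example, treating everything under one category the same way) rather than against every individual label.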

Content moderation still requires human reviewers to audit results and judge nuanced situations where AI may not be certain in its prediction. Combining machine predictions with human judgment and managing the infrastructure needed to set up such workflows is hard, expensive, and time-consuming to do at scale. This is why we built Amazon Augmented AI (Amazon A2I), which lets you implement a human review of ML predictions and is directly integrated with Amazon Rekognition. Amazon A2I allows you to use in-house, private, or third-party vendor workforces with a web interface that has instructions and tools they need to complete their review tasks.

You can easily set up the criteria that trigger a human review of a machine prediction; for example, you can send an image for further human review if Amazon Rekognition’s confidence score is between 50–90. Amazon Rekognition handles the bulk of the work and makes sure that every image gets scanned, and Amazon A2I helps send the remaining content for further review to best utilize human judgment. Together, this helps ensure that you get full moderation coverage while maintaining very high accuracy, at a fraction of the cost to review each image manually.
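The routing idea can be sketched as a small pure function. In the actual integration, Amazon A2I evaluates the activation conditions for you; this sketch only illustrates the logic. The 50–90 band comes from the example above, while the function name route and the outcomes outside the band ("pass" and "flag") are assumptions about what a business rule might do.

```python
def route(labels, low=50.0, high=90.0):
    """Return 'pass', 'human_review', or 'flag' for a list of moderation labels."""
    # Use the most confident detection; an empty list means nothing was detected.
    top = max((label["Confidence"] for label in labels), default=0.0)
    if top > high:
        return "flag"          # model is confident the content is unsafe
    if top >= low:
        return "human_review"  # uncertain band: defer to a reviewer
    return "pass"              # nothing detected with meaningful confidence


print(route([{"Name": "Suggestive", "Confidence": 72.0}]))
```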

In this post, we show you how to use Amazon Rekognition image moderation APIs to automatically detect explicit adult, suggestive, violent, and disturbing content in an image and use Amazon A2I to onboard human workforces, set up human review thresholds of the images, and define human review tasks. When these conditions are met, images are sent to human reviewers for further review, which is performed according to the instructions in the human review task definition.

Prerequisites

This post requires you to complete the following prerequisites:

- Create an AWS Identity and Access Management (IAM) role. To create a human review workflow, you need to provide an IAM role that grants Amazon A2I permission to access Amazon Simple Storage Service (Amazon S3) for reading objects to render in a human task UI and writing the results of the human review. This role also needs an attached trust policy to give Amazon SageMaker permission to assume the role. This allows Amazon A2I to perform actions in accordance with permissions that you attach to the role. For example policies that you can modify and attach to the role you use to create a flow definition, see Add Permissions to the IAM Role Used to Create a Flow Definition.

- Configure permission to invoke the Amazon Rekognition DetectModerationLabels API. You need to attach the AmazonRekognitionFullAccess policy to the execution role of the AWS Lambda function that calls the Amazon Rekognition detect_moderation_labels API.

- Provide Amazon S3 Access, Put, and Get permission to Lambda if you wish to have Lambda use Amazon S3 to access images for analysis.

- Give the Lambda function AmazonSageMakerFullAccess access to the Amazon A2I services for the human review.
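As a rough illustration of the first prerequisite, the following sketch builds the two policy documents as Python dictionaries: a trust policy that lets Amazon SageMaker assume the role, and a permissions policy for reading and writing objects in one bucket. The bucket name and the helper s3_access_policy are hypothetical, and the S3 statement is a minimal assumption; refer to the linked documentation for the policies AWS recommends.

```python
import json

# Trust policy: allows the SageMaker service principal to assume the role.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "sagemaker.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def s3_access_policy(bucket):
    """Minimal read/write policy for objects in one bucket (assumed scope)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


print(json.dumps(trust_policy, indent=2))
print(json.dumps(s3_access_policy("your-bucket-name"), indent=2))  # hypothetical bucket
```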

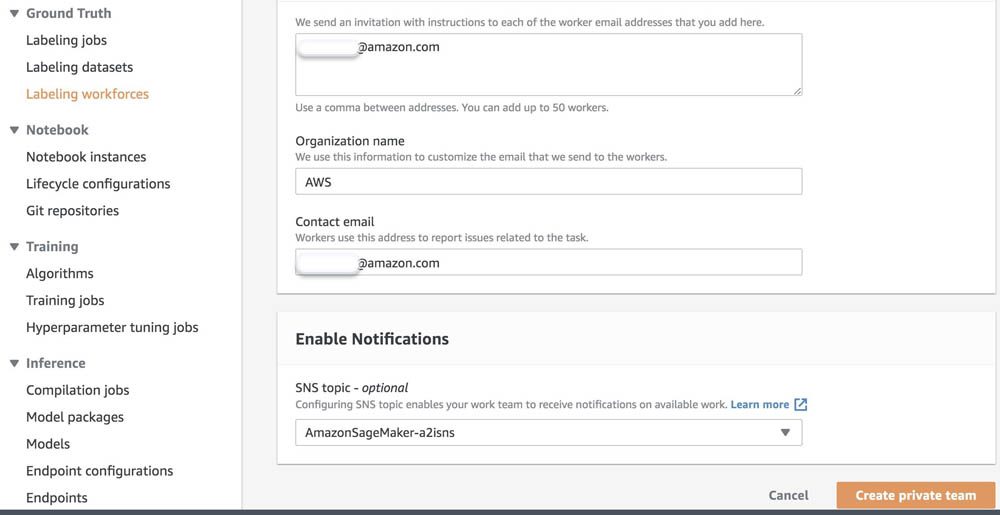

Creating a private work team

A work team is a group of people that you select to review your images. You can create a work team from a workforce, which is made up of Amazon Mechanical Turk workers, vendor-managed workers, or your own private workers that you invite to work on your tasks. Whichever workforce type you choose, Amazon A2I takes care of sending tasks to workers. For this post, you create a work team using a private workforce and add yourself to the team to preview the Amazon A2I workflow.

To create your private work team, complete the following steps:

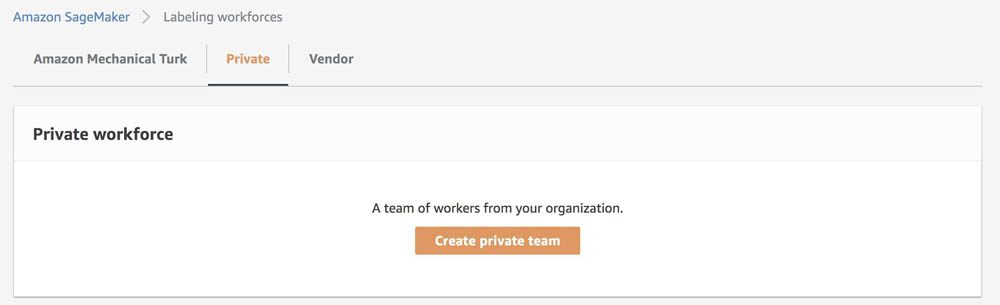

- Navigate to the Labeling workforces page on the Amazon SageMaker console.

- On the Private tab, choose Create private team.

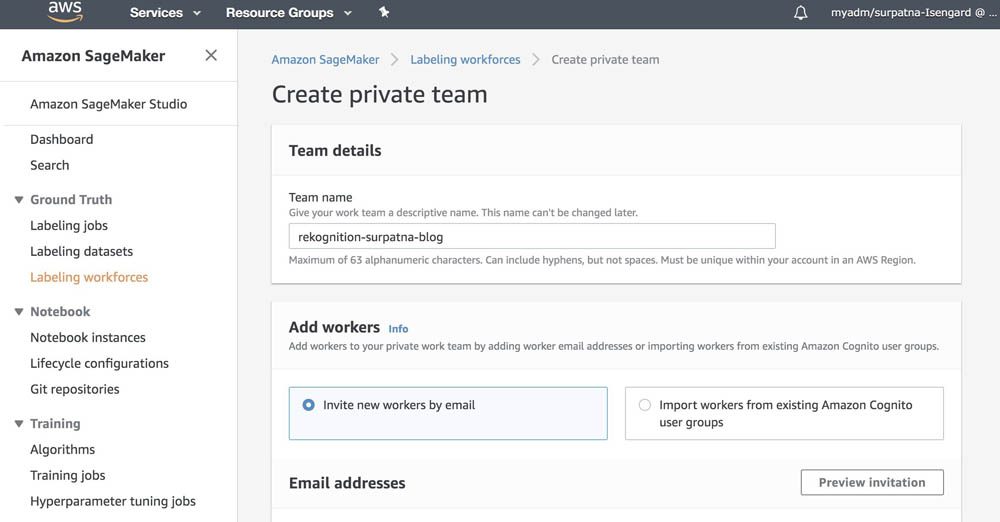

- For Team name, enter an appropriate team name.

- For Add workers, you can choose to add workers to your workforce by importing workers from an existing user group in Amazon Cognito or by inviting new workers by email.

For this post, we suggest adding workers by email. If you create a workforce using an existing Amazon Cognito user group, be sure that you can access an email in that workforce to complete this use case.

- Choose Create private team.

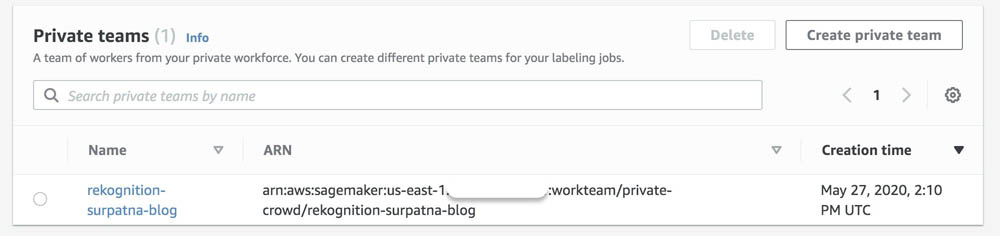

- On the Private tab, choose the work team you just created to view your work team ARN.

- Record the ARN to use when you create a flow definition in the next section.
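If you prefer to look up the work team ARN programmatically rather than copying it from the console, a sketch along these lines may help. It assumes a boto3 SageMaker client and its describe_workteam call; the helper name get_workteam_arn and the team name in the usage comment are hypothetical.

```python
def get_workteam_arn(sagemaker_client, team_name):
    """Return the ARN of an existing work team, looked up by name."""
    response = sagemaker_client.describe_workteam(WorkteamName=team_name)
    return response["Workteam"]["WorkteamArn"]


# Usage (requires AWS credentials and the boto3 package):
#   import boto3
#   arn = get_workteam_arn(boto3.client("sagemaker"), "my-private-team")
```

Passing the client in as an argument keeps the helper easy to exercise with a stub when no AWS credentials are available.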

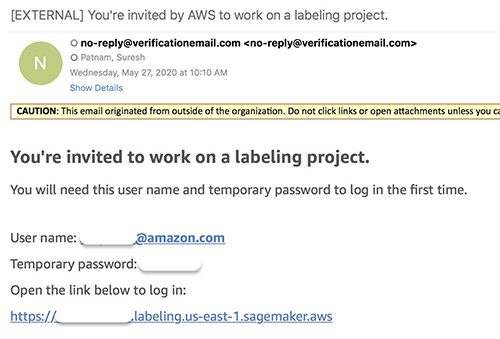

After you create the private team, you get an email invitation. The following screenshot shows an example email.

- Choose the link to log in and change your password.

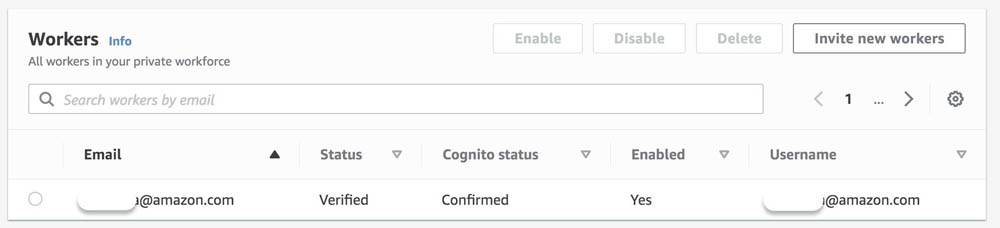

You’re now registered as a verified worker for this team. The following screenshot shows the updated information on the Private tab.

Your one-person team is now ready, and you can create a human review workflow.

Creating a human review workflow

In this step, you create a human review workflow, where you specify your work team, identify where you want output data to be stored in Amazon S3, and create instructions to help workers complete your image review task.

To create a human review workflow, complete the following:

- In the Augmented AI section on the Amazon SageMaker console, navigate to the Human review workflows page.

- Choose Create human review workflow.

On this page, you configure your workflow.

- Enter a name for your workflow.

- Choose an S3 bucket where you want Amazon A2I to store the output of the human review.

- Choose an IAM role for the workflow.

You can create a new role automatically with Amazon S3 access and an Amazon SageMaker execution policy attached, or you can choose a role that already has these permissions attached.

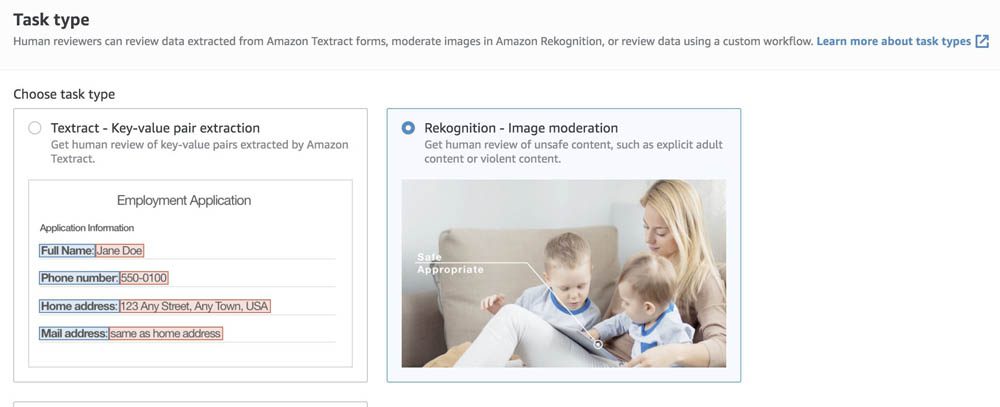

- In the Task type section, select Rekognition – Image moderation.

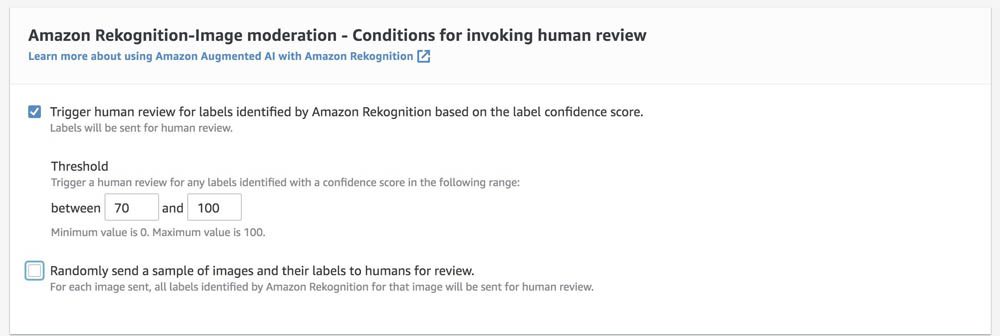

- In the Amazon Rekognition-Image Moderation – Conditions for invoking human review section, you can specify conditions that trigger a human review.

For example, if the confidence of the output label produced by Amazon Rekognition falls within the range provided (70–100, for this use case), the image is sent to the portal for human review. You can also select different confidence thresholds for each image moderation output label through Amazon A2I APIs.
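For per-label thresholds set through the API, the activation conditions are expressed as a JSON document. The following sketch builds one with Python, assuming the ModerationLabelConfidenceCheck condition type and its ModerationLabelName, ConfidenceGreaterThan, and ConfidenceLessThan parameters, combined with And and Or. The helper confidence_band, the label names, and the thresholds are illustrative assumptions; check the Amazon A2I documentation for the full schema before relying on it.

```python
import json


def confidence_band(label_name, low, high):
    """Condition that fires when label_name is detected with low < confidence < high."""
    def check(bound, value):
        return {
            "ConditionType": "ModerationLabelConfidenceCheck",
            "ConditionParameters": {"ModerationLabelName": label_name, bound: value},
        }
    return {"And": [check("ConfidenceGreaterThan", low), check("ConfidenceLessThan", high)]}


# Hypothetical labels and thresholds: a different band for each label.
conditions = {
    "Conditions": [
        {"Or": [confidence_band("Suggestive", 50, 90), confidence_band("Violence", 40, 95)]}
    ]
}

# The flow definition expects the conditions as a JSON string.
conditions_json = json.dumps(conditions)
print(conditions_json)
```

When creating the workflow with the SageMaker CreateFlowDefinition operation, this string is what you would supply as the human loop activation conditions.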

- In the Worker task template creation section, if you already have an A2I worker task template, you can choose Use your own template. Otherwise, select Create from a default template and enter a name and task description. For this use case, you can use the default worker instructions provided.

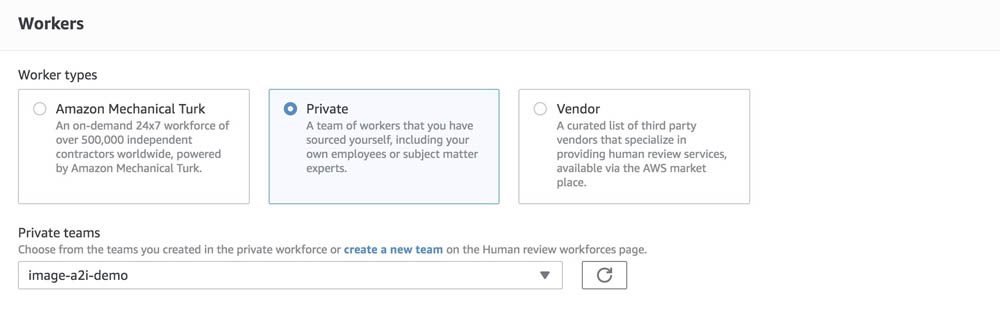

- In the Workers section, select Private.

- For Private teams, choose the private work team you created earlier.

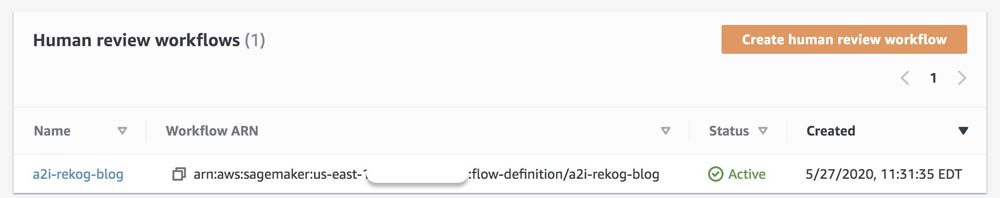

- Choose Create.

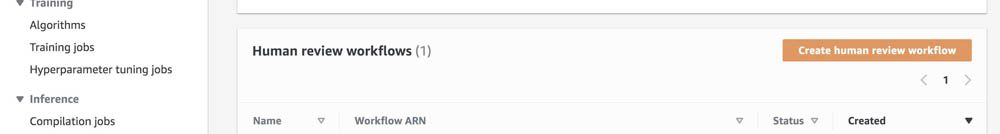

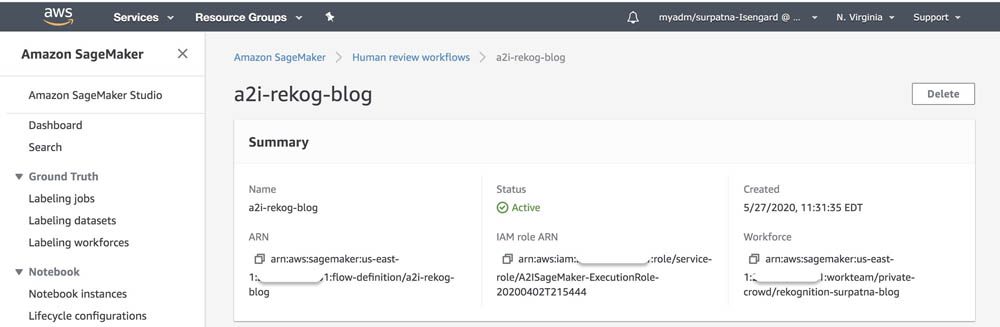

You’re redirected to the Human review workflows page, where you can see the name and ARN of the human review workflow you just created.

- Record the ARN to use in the next section.

Configuring Lambda to run Amazon Rekognition

In this step, you create a Lambda function to call the Amazon Rekognition API detect_moderation_labels. You use the HumanLoopConfig parameter of detect_moderation_labels to integrate an Amazon A2I human review workflow into your Amazon Rekognition image moderation job.

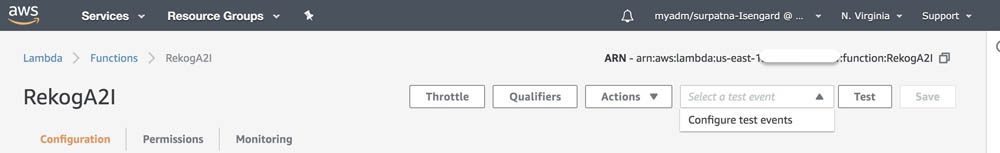

- On the Lambda console, create a new function called A2IRegok.

- For Runtime, choose Python 3.7.

- Under Permission, choose Use an existing role.

- Choose the role you created.

- In the Function code section, remove the function code and replace it with the following code.

  - Inside the Lambda function, import two libraries: uuid and boto3.

  - Modify the function code as follows:

    - Replace the FlowDefinitionArn in line 12 with one you saved in the last step.

    - On line 13, provide a unique name to the HumanLoopName or use uuid to generate a unique ID.

    - You use the detect_moderation_labels API operation to analyze the picture (JPG, PNG). To use the picture from the Amazon S3 bucket, specify the bucket name and key of the file inside the API call as shown in lines 7 and 8.
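A minimal sketch of such a handler follows. It is an illustration under stated assumptions rather than the post's original listing, so the line numbers mentioned above do not map onto it. The bucket, key, and flow definition ARN are hypothetical placeholders, build_request is a helper introduced here, and boto3 is imported lazily only so that the request-building logic can be exercised without the AWS SDK installed.

```python
import uuid

# Hypothetical placeholders; substitute your own values.
BUCKET = "your-bucket-name"
KEY = "your-image.jpg"
FLOW_DEFINITION_ARN = "arn:aws:sagemaker:REGION:ACCOUNT_ID:flow-definition/your-workflow"


def build_request(bucket, key, flow_definition_arn, loop_name=None):
    """Assemble the keyword arguments for detect_moderation_labels."""
    return {
        # Image stored in Amazon S3 (JPG or PNG).
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        "HumanLoopConfig": {
            "FlowDefinitionArn": flow_definition_arn,
            # Each human loop needs a unique name; a UUID is one easy way to get it.
            "HumanLoopName": loop_name or str(uuid.uuid4()),
        },
    }


def lambda_handler(event, context, client=None):
    if client is None:
        import boto3  # available by default in the Lambda Python runtime
        client = boto3.client("rekognition")
    response = client.detect_moderation_labels(
        **build_request(BUCKET, KEY, FLOW_DEFINITION_ARN)
    )
    # Return only the parts of the response that matter for this walkthrough.
    return {
        "ModerationLabels": response.get("ModerationLabels", []),
        "HumanLoopActivationOutput": response.get("HumanLoopActivationOutput", {}),
    }
```

The optional client argument is a convenience for testing; Lambda itself calls the handler with only event and context.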


Calling Amazon Rekognition using Lambda

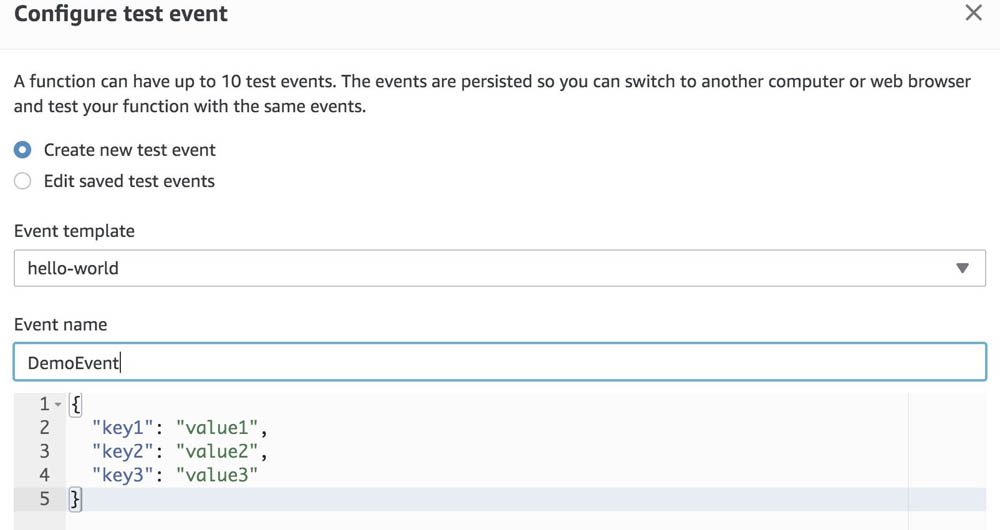

To configure and run a serverless function, complete the following steps:

- On the Lambda console, choose your function.

- Choose Configure test events from the drop-down menu.

An editor appears in which you can enter an event to test your function.

- On the Configure test event page, select Create new test event.

- For Event template, choose hello-world.

- For Event name, enter a name; for example, DemoEvent.

- You can change the values in the sample JSON. For this use case, no change is needed.

For more information, see Run a Serverless “Hello, World!” and Create a Lambda function with the console.

- Choose Create.

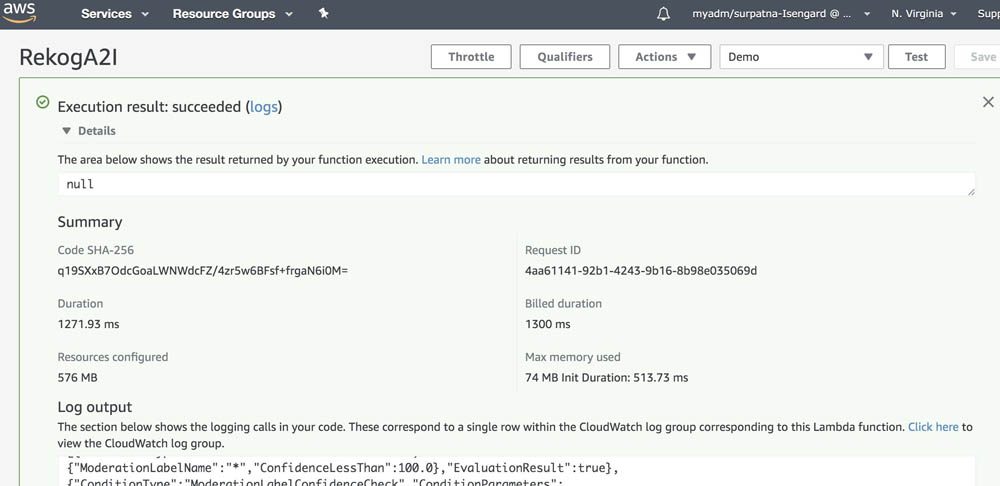

- To run the function, choose Test.

When the test is complete, you can view the results on the console:

- Execution result – Verifies that the test succeeded

- Summary – Shows the key information reported in the log output

- Log output – Shows the logs the Lambda function generated

The response to this call contains the inference from Amazon Rekognition and the evaluated activation conditions that may or may not have led to a human loop creation. If a human loop is created, the output contains HumanLoopArn. You can track its status using the Amazon A2I API operation DescribeHumanLoop.
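As a sketch of how you might act on that response, the two helpers below check whether a human loop was started and then look up its status. They assume the HumanLoopActivationOutput field of the Rekognition response and the describe_human_loop call on the boto3 sagemaker-a2i-runtime client, which takes the loop name. The helper names are hypothetical.

```python
def human_loop_arn(response):
    """Return the HumanLoopArn if a human loop was started, else None."""
    return response.get("HumanLoopActivationOutput", {}).get("HumanLoopArn")


def human_loop_status(a2i_client, human_loop_name):
    """Look up the current status of a human loop by name."""
    return a2i_client.describe_human_loop(HumanLoopName=human_loop_name)["HumanLoopStatus"]


# Usage (requires AWS credentials and the boto3 package):
#   import boto3
#   a2i = boto3.client("sagemaker-a2i-runtime")
#   if human_loop_arn(response):
#       print(human_loop_status(a2i, "the-human-loop-name-you-passed"))
```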

Completing a human review of your image

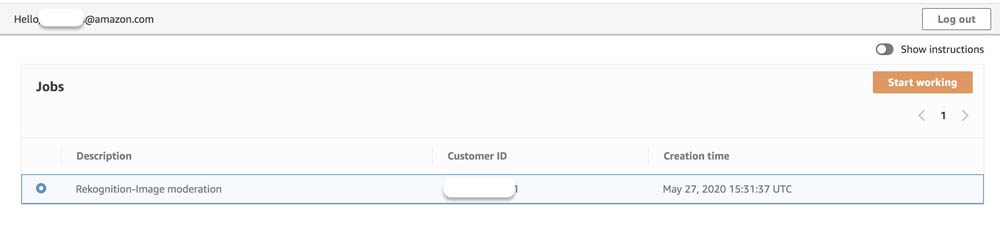

To complete a human review of your image, complete the following steps:

- Open the URL in the email you received.

You see a list of reviews you are assigned to.

- Choose the image you want to review.

- Choose Start working.

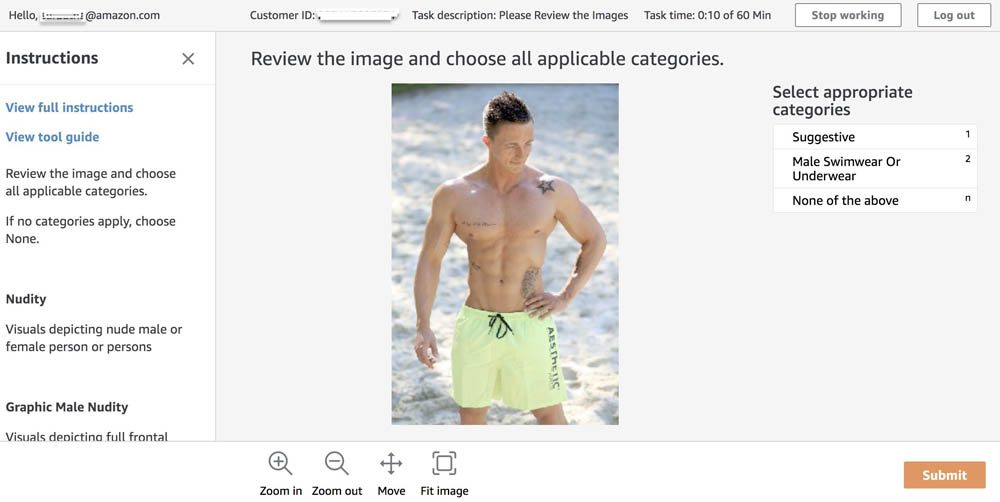

After you start working, you must complete the task within 60 minutes.

- Choose an appropriate category for the image.

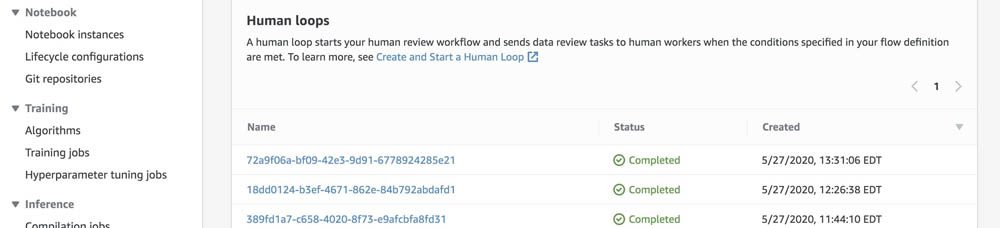

Before choosing Submit, if you go to the Human review workflows page on the Amazon SageMaker console and choose the human review workflow you created, you can see a Human loops summary section for that workflow.

- In your worker portal, when you’re done working, choose Submit.

After you complete your job, the status of the human loop workflow is updated.

If you navigate back to the Human review workflows page, you can see the human loop you just completed has the status Completed.

Processing the output

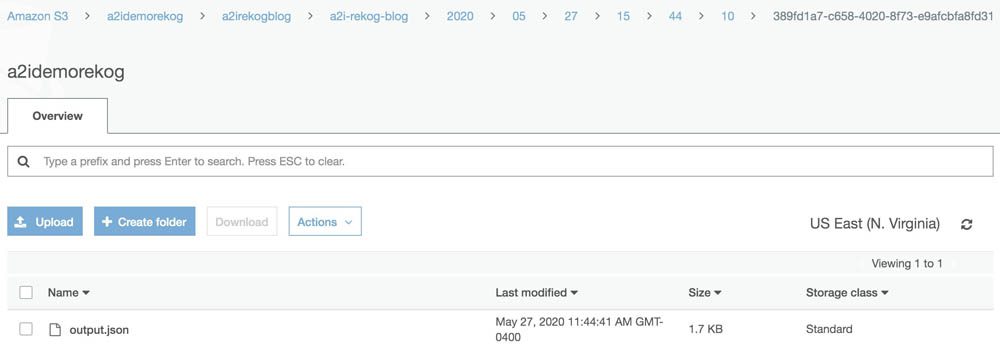

The output data from your review is located in the S3 bucket you specified when you configured your human review workflow on the Amazon A2I console. The path to the data uses the following pattern: YYYY/MM/DD/hh/mm/ss.

The output file (output.json) is structured as follows:

In this JSON object, you have all the input and output content in one place so that you can parse one file to get the following:

- humanAnswers – Contains answerContent, which lists the labels chosen by the human reviewer, and workerMetadata, which contains information that you can use to track private workers

- inputContent – Contains information about the input data object that was reviewed, the label category options available to workers, and the responses workers submitted

For more information about the location and format of your output data, see Monitor and Manage Your Human Loop.
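Once you have downloaded output.json, a short sketch like the following can pull out each reviewer's answer. It relies only on the keys named above (humanAnswers, answerContent, workerMetadata) and assumes humanAnswers is a list with one entry per worker. The helper name summarize_review and the sample values are hypothetical, and the inner structure of answerContent depends on your worker task template.

```python
import json


def summarize_review(output):
    """Return one {'worker', 'answer'} record per human answer in the output."""
    return [
        {"worker": answer.get("workerMetadata"), "answer": answer.get("answerContent")}
        for answer in output.get("humanAnswers", [])
    ]


# Hypothetical, heavily simplified stand-in for a real output.json.
sample_output = json.loads("""
{
  "humanAnswers": [
    {
      "answerContent": {"placeholder-field": "placeholder-value"},
      "workerMetadata": {"placeholder-id": "worker-1"}
    }
  ],
  "inputContent": {}
}
""")

for record in summarize_review(sample_output):
    print(record["worker"], record["answer"])
```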

Conclusion

This post has merely scratched the surface of what Amazon A2I can do. Amazon A2I is available in 12 Regions. For more information, see Region Table. To learn more about the Amazon Rekognition DetectModerationLabels API integration with Amazon A2I, see Use Amazon Augmented AI with Amazon Rekognition.

For video presentations, sample Jupyter notebooks, or more information about use cases like document processing, object detection, sentiment analysis, text translation, and others, see Amazon Augmented AI Resources.

About the Author

Suresh Patnam is a Solutions Architect at AWS. He helps customers innovate on the AWS platform by building highly available, scalable, and secure architectures on Big Data and AI/ML. In his spare time, Suresh enjoys playing tennis and spending time with his family.

