Collaborating with AI to create Bach-like compositions in AWS DeepComposer

AWS DeepComposer provides a creative and hands-on experience for learning generative AI and machine learning (ML). We recently launched the Edit melody feature, which allows you to add, remove, or edit specific notes, giving you full control of the pitch, length, and timing for each note. In this post, you can learn to use the Edit melody feature to collaborate with the autoregressive convolutional neural network (AR-CNN) algorithm and create interesting Bach-style compositions.

Through human-AI collaboration, we can surpass what humans and AI systems can create independently. For example, you can seek inspiration from AI to create art or music outside your area of expertise, or offload the more routine tasks, like creating variations on a melody, and focus on the more interesting and creative tasks. Alternatively, you can assist the AI by correcting mistakes or removing artifacts it creates. You can also influence the output generated by the AI system by controlling the various training and inference parameters.

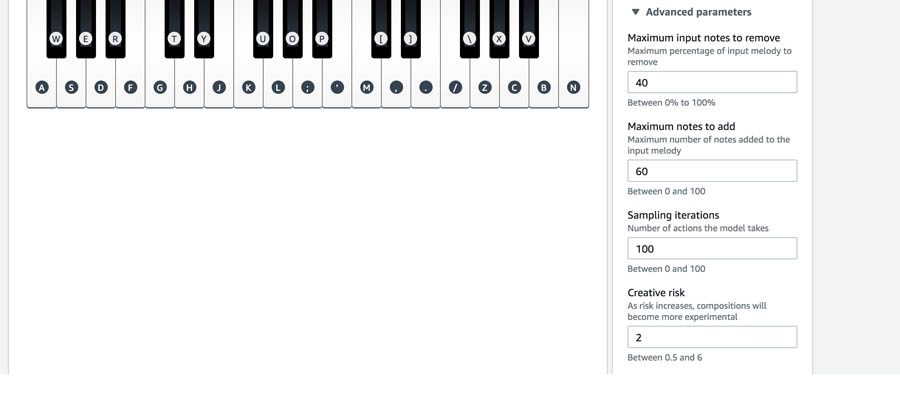

You can co-create music in the AWS DeepComposer Music Studio by collaborating with the AI (AR-CNN) model using the Edit melody feature. The AR-CNN Bach model modifies a melody note by note to guide the track towards sounding more Bach-like. You can modify four advanced parameters when you perform inference to influence how the input melody is modified:

- Maximum notes to add – Changes the maximum number of notes added to your original melody

- Maximum notes to remove – Changes the maximum number of notes removed from your original melody

- Sampling iterations – Changes the exact number of times the model adds or removes a note based on the note-likelihood distributions it infers

- Creative risk – Allows the AI model to deviate from creating Bach-like harmonies

The values you choose directly impact the composition the model creates by nudging it in one direction or another. For more information about these parameters, see the AWS DeepComposer Learning Capsule on using the AR-CNN model.
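To build intuition for how the four parameters interact, the following is a minimal toy sketch in Python. It is not the AR-CNN model or any AWS DeepComposer API: `toy_score` is a hypothetical stand-in for the model's note likelihoods (it simply favors C-major pitches), the two maximums are assumed to be absolute note counts, and Creative risk is assumed to behave like a sampling temperature.

```python
import math
import random

C_MAJOR = {0, 2, 4, 5, 7, 9, 11}  # pitch classes of the C-major scale


def toy_score(note):
    """Hypothetical stand-in for a model's note likelihood (higher = more likely)."""
    _, pitch = note
    return 2.0 if pitch % 12 in C_MAJOR else -2.0


def sample(options, logits, temperature, rng):
    """Draw one option from a softmax over logits / temperature."""
    scaled = [logit / temperature for logit in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(options, weights=weights, k=1)[0]


def edit_melody(notes, candidates, max_add, max_remove,
                iterations, creative_risk, seed=0):
    """Add or remove one (time_step, pitch) note per iteration, within budgets."""
    if creative_risk <= 0:
        raise ValueError("creative_risk must be positive")
    rng = random.Random(seed)
    notes = set(notes)
    added = removed = 0
    for _ in range(iterations):
        can_add = added < max_add and any(c not in notes for c in candidates)
        can_remove = removed < max_remove and len(notes) > 0
        if not (can_add or can_remove):
            break  # both budgets are exhausted
        if can_add and (not can_remove or rng.random() < 0.5):
            # Adding: likely notes are favored; higher risk flattens the odds.
            options = sorted(c for c in candidates if c not in notes)
            logits = [toy_score(n) for n in options]
            notes.add(sample(options, logits, creative_risk, rng))
            added += 1
        else:
            # Removing: unlikely notes are favored.
            options = sorted(notes)
            logits = [-toy_score(n) for n in options]
            notes.discard(sample(options, logits, creative_risk, rng))
            removed += 1
    return notes, added, removed


melody = {(0, 60), (1, 61), (2, 64)}
grid = [(step, pitch) for step in range(4) for pitch in range(60, 72)]
result, added, removed = edit_melody(
    melody, grid, max_add=3, max_remove=1, iterations=10, creative_risk=1.0)
print(f"added {added}, removed {removed}, notes now: {sorted(result)}")
```

In this sketch, raising `creative_risk` makes out-of-scale notes more likely to be added, while the two maximums cap how far the result can drift from the input regardless of how many iterations run.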

Although the advanced parameters allow you to guide the output the AR-CNN model creates, they don’t provide note-level control over the music produced. For example, the AR-CNN model allows you to control the number of notes to add or remove during inference, but you don’t have control over the exact notes the model adds or removes.

The Edit melody feature bridges this gap by providing an interactive view of the generated melody so you can add missing notes, remove out-of-tune notes, or even change a note’s pitch and length. This granular level of editing facilitates better human-AI collaboration. It enables you to correct mistakes the model makes and harmonize the output to your liking, giving you more ownership of the creation process.

For this post, we explore the use case of co-creating Bach-like background music to match the following video.

Collaborating with AI using the AWS DeepComposer Music Studio

To start composing your melody, complete the following steps:

- Open the AWS DeepComposer Music Studio console.

- Choose an Input melody.

You can record a custom melody, import a melody, or choose a sample melody on the console. For this post, we experimented with two melodies: the New World sample melody and a custom melody we created using the MIDI keyboard.

New World melody:

Custom melody:

- Choose the Autoregressive generative AI technique.

- Choose the Autoregressive CNN Bach model.

There are several considerations when choosing the advanced parameters. First, we wanted the original input melody to be recognizable. After some iterating, we found that setting the Maximum notes to add to 60 and Maximum notes to remove to 40 created a desirable outcome. For Creative risk, we wanted the model to create something interesting and adventurous. At the same time, we realized that a very high Creative risk value would deviate too much from the Bach style, so we took a moderate approach and chose a Creative risk of 2.

- You can repeat these steps a few times to iteratively create music.

Editing your input melody

After the AR-CNN model has generated a composition to your satisfaction, you can use the Edit melody feature to modify the melody and try to match the video’s transitions as much as possible.

- Choose the right arrow to open the input melody section.

- Choose Edit melody.

- On the Edit melody page, edit your track in any of the following ways:

- Double-click a cell to add or remove a note at that pitch and time.

- Drag a cell up or down to change a note’s pitch.

- Drag the edge of a cell left or right to change a note’s length.

- When finished, choose Apply changes.
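The three editing gestures above map onto simple operations on a list of notes. The following sketch is only an illustration of that idea under assumed names: the `Note` class and the `toggle_note`, `change_pitch`, and `change_length` helpers are hypothetical and are not part of AWS DeepComposer.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Note:
    pitch: int   # MIDI pitch number (0-127)
    start: int   # start time, in grid steps
    length: int  # duration, in grid steps


def toggle_note(melody, pitch, start, length=1):
    """Double-click: remove the note covering this cell, or add a new one."""
    for note in melody:
        if note.pitch == pitch and note.start <= start < note.start + note.length:
            return [n for n in melody if n != note]
    return melody + [Note(pitch, start, length)]


def change_pitch(melody, note, semitones):
    """Vertical drag: shift one note's pitch, clamped to the MIDI range."""
    new_pitch = min(127, max(0, note.pitch + semitones))
    return [replace(n, pitch=new_pitch) if n == note else n for n in melody]


def change_length(melody, note, new_length):
    """Edge drag: set one note's duration in grid steps."""
    if new_length < 1:
        raise ValueError("a note must last at least one grid step")
    return [replace(n, length=new_length) if n == note else n for n in melody]


melody = [Note(60, 0, 2)]
melody = toggle_note(melody, 64, 2)                    # add E4 at step 2
melody = change_pitch(melody, Note(64, 2, 1), 1)       # raise it to F4
melody = change_length(melody, Note(65, 2, 1), 4)      # stretch it to 4 steps
melody = toggle_note(melody, 60, 1)                    # remove the C4 note
print(melody)
```

Each helper returns a new list rather than mutating the input, which mirrors the way edits in the console only take effect after you choose Apply changes.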

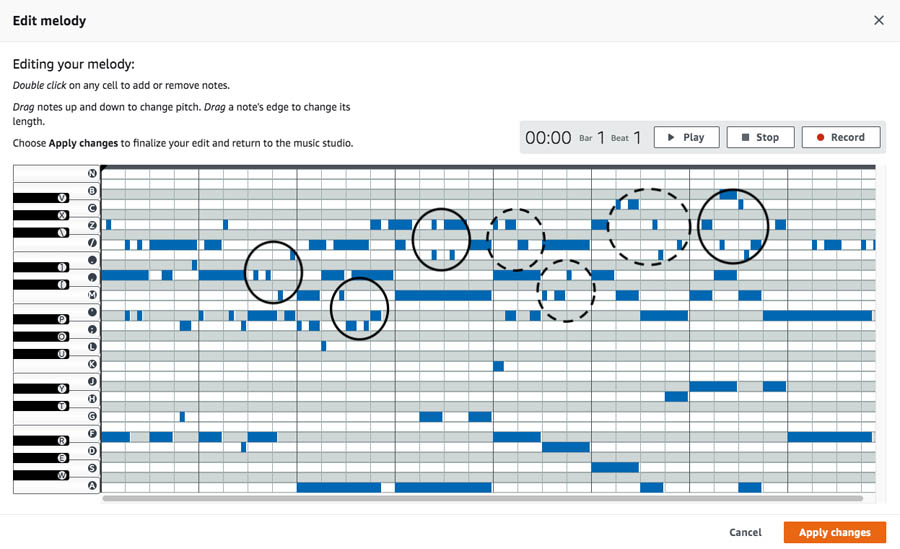

We drew inspiration from the AI-generated notes in different ways. For the New World melody, we noticed the model added short and bouncy notes (the circles with solid lines in the following screenshot), which made the composition sound similar to an American folk song. To match that style, we added a few notes in the second half of the composition (the dotted-lined circles).

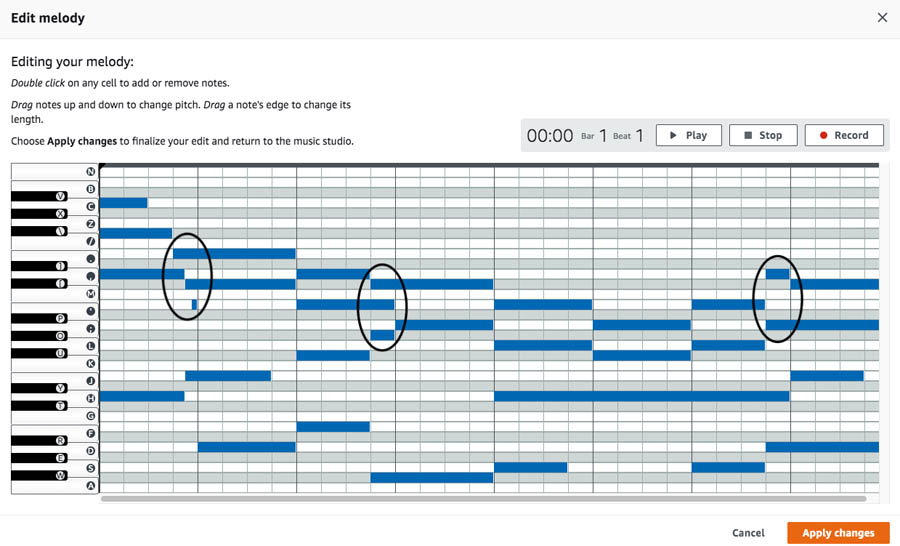

For our custom melody, we noticed the model changed the chords slightly earlier than expected (see the following screenshot). This created lingering and overlapping sounds that we liked for the mountain road scenes.

On the other hand, we noticed the AI model needed our help to remove some notes that sounded out of place. After we listened to the track a few times, we decided to change some pitches manually to nudge the track towards something that sounded a bit more harmonious.

Generating accompaniments using the GAN generative AI technique

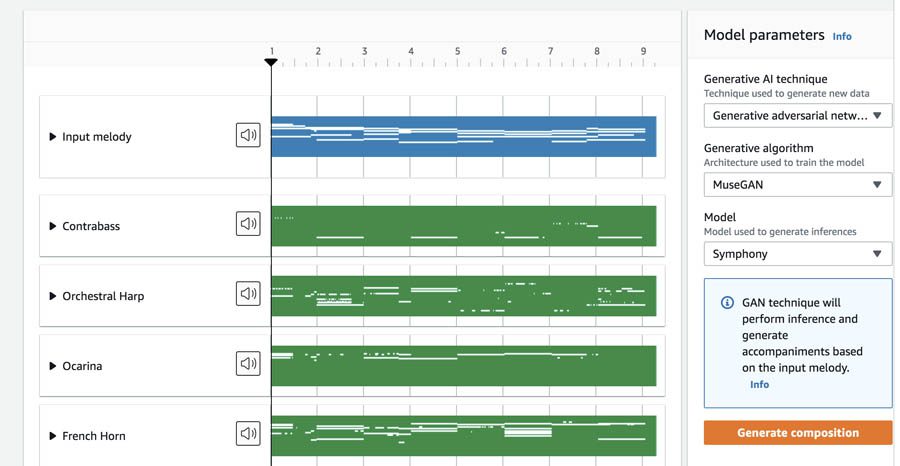

After using the AR-CNN Bach model to explore options for our melody track, we decided to try using a different generative AI model (GAN) to create musical accompaniments.

- Under Model parameters, for Generative AI technique, choose Generative adversarial network.

- Feed the edited compositions to the GAN model to generate accompaniments.

We chose the MuseGAN generative algorithm and the Symphony model because we wanted to create accompaniments to match the serene and somber setting in the video.

- You can optionally export your compositions into a music-editing tool of your choice to change the instrument set and perform post-processing.

Let’s watch the videos containing our AI-inspired creations in the background.

The first video uses the New World melody.

The following video uses our custom melody.

Conclusion

In this post, we demonstrated how to use the Edit melody feature in the AWS DeepComposer Music Studio to collaborate with generative AI models and create interesting Bach-style compositions. You can modify a melody to your liking by adding, removing, and editing specific notes. This gives you full control of the pitch, length, and timing for each note to produce an original melody.

About the Authors

Rahul Suresh is an Engineering Manager with the AWS AI org, where he has been working on AI-based products that make machine learning accessible to all developers. Prior to joining AWS, Rahul was a Senior Software Developer at Amazon Devices and helped launch highly successful smart home products. Rahul is passionate about building machine learning systems at scale and is always looking to get these advanced technologies into the hands of customers. In addition to his professional career, Rahul is an avid reader and a history buff.

Enoch Chen is a Senior Technical Program Manager for AWS AI Devices. He is a big fan of machine learning and loves to explore innovative AI applications. Recently he helped bring DeepComposer to thousands of developers. Outside of work, Enoch enjoys playing piano and listening to classical music.

Carlos Daccarett is a Front-End Engineer at AWS. He loves bringing design mocks to life. In his spare time, he enjoys hiking, golfing, and snowboarding.

Dylan Jackson is a Senior ML Engineer and AI Researcher at AWS. He works to build experiences which facilitate the exploration of AI/ML, making new and exciting techniques accessible to all developers. Before AWS, Dylan was a Senior Software Developer at Goodreads where he leveraged both a full-stack engineering and machine learning skillset to protect millions of readers from spam, high-volume robotic traffic, and scaling bottlenecks. Dylan is passionate about exploring both the theoretical underpinnings and the real-world impact of AI/ML systems. In addition to his professional career, he enjoys reading, cooking, and working on small crafts projects.
