Using Transformers to create music in AWS DeepComposer Music studio

AWS DeepComposer provides a creative and hands-on experience for learning generative AI and machine learning (ML). We recently launched a Transformer-based model that extends your input melody by up to 20 seconds at a time. The extension uses the style and musical motifs found in your input melody to create additional notes that sound like they belong to it. In this post, we show you how the Transformer model extends the duration of your existing compositions. You can create new and interesting musical scores by using various parameters, including the Edit melody feature.

Introduction to Transformers

The Transformer is a recent deep learning model for use with sequential data such as text, time series, music, and genomes. Whereas older sequence models such as recurrent neural networks (RNNs) or long short-term memory networks (LSTMs) process data sequentially, the Transformer processes data in parallel. This allows it to process massive amounts of available training data by using powerful GPU-based compute resources.

Furthermore, traditional RNNs and LSTMs can have difficulty modeling the long-term dependencies of a sequence because they can forget earlier parts of the sequence. Transformers use an attention mechanism to overcome this memory shortcoming by directing each step of the output sequence to pay “attention” to relevant parts of the input sequence. For example, when a Transformer-based conversational AI model is asked “How is the weather now?” and the model replies “It is warm and sunny today,” the attention mechanism guides the model to focus on the word “weather” when answering with “warm” and “sunny,” and to focus on “now” when answering with “today.” This is different from traditional RNNs and LSTMs, which process sentences from left to right and forget the context of each word as the distance between the words increases.
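To make the attention idea more concrete, the following is a minimal sketch of scaled dot-product attention for a single query. The two-dimensional vectors are made-up toy values and the function names are hypothetical; this illustrates the general mechanism and is not the AWS DeepComposer implementation.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # The output is the attention-weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

# Toy embeddings for the question tokens (illustrative values only).
tokens = ["how", "is", "the", "weather", "now"]
keys = [[0.1, 0.0], [0.0, 0.1], [0.1, 0.1], [2.0, 0.0], [0.0, 2.0]]
values = keys
query_for_warm = [3.0, 0.0]  # hypothetical query while producing "warm"

weights, _ = attention(query_for_warm, keys, values)
most_attended = tokens[weights.index(max(weights))]
print(most_attended)  # prints "weather"
```

With these toy values, the query for "warm" places most of its weight on "weather", which mirrors the example in the paragraph above.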

Training a Transformer model to generate music

To work with musical datasets, the first step is to convert the data into a sequence of tokens. Each token represents a distinct musical event in the score. A token might represent something like the timestamp for when a note is struck, or the pitch of a note. The relationship between these tokens and a musical score is similar to the relationship between words and a sentence or paragraph: tokens in music can represent notes or other musical features, just as tokens in language can represent words or punctuation. This differs from previous models supported by AWS DeepComposer such as GAN and AR-CNN, which treat music generation like an image generation problem.
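As a rough sketch of what tokenization can look like, the following example flattens a list of notes into event tokens and back. The vocabulary used here (`NOTE_ON`, `NOTE_OFF`, `TIME_SHIFT`) and the 0.25-second step are assumptions chosen for illustration; the post does not describe the exact encoding that AWS DeepComposer uses.

```python
from typing import List, Tuple

Note = Tuple[int, float, float]  # (MIDI pitch, start in seconds, end in seconds)

def notes_to_tokens(notes: List[Note], step: float = 0.25) -> List[str]:
    """Flatten notes into NOTE_ON / NOTE_OFF / TIME_SHIFT event tokens."""
    events = []
    for pitch, start, end in notes:
        events.append((start, 1, f"NOTE_ON_{pitch}"))
        events.append((end, 0, f"NOTE_OFF_{pitch}"))
    events.sort()  # at equal times, note-offs (0) sort before note-ons (1)
    tokens, clock = [], 0.0
    for time, _, name in events:
        steps = round((time - clock) / step)
        if steps > 0:
            tokens.append(f"TIME_SHIFT_{steps}")
            clock += steps * step
        tokens.append(name)
    return tokens

def tokens_to_notes(tokens: List[str], step: float = 0.25) -> List[Note]:
    """Rebuild notes from the event tokens produced above."""
    clock, sounding, notes = 0.0, {}, []
    for token in tokens:
        kind, _, value = token.rpartition("_")
        if kind == "TIME_SHIFT":
            clock += int(value) * step
        elif kind == "NOTE_ON":
            sounding[int(value)] = clock
        elif kind == "NOTE_OFF":
            notes.append((int(value), sounding.pop(int(value)), clock))
    return notes

melody = [(60, 0.0, 0.5), (64, 0.5, 1.0)]  # C4 then E4, half a second each
tokens = notes_to_tokens(melody)
print(tokens)
print(tokens_to_notes(tokens))
```

The round trip from notes to tokens and back is what lets a sequence model work on music: the model only ever sees the token sequence.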

These sequences of tokens are then used to train the Transformer model. During training, the model attempts to learn a distribution that matches the underlying distribution of the training dataset. During inference, the model generates a sequence of tokens by sampling from the distribution learned during training. The new musical score is created by turning the sequence of tokens back into music. Music Transformer and MuseNet are examples of other algorithms that use the Transformer architecture for music generation.
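The train-then-sample loop can be illustrated with a much simpler stand-in model. The sketch below swaps the Transformer for a bigram counter (a deliberately tiny substitute, not what AWS DeepComposer uses): training estimates a distribution over the next token, and inference samples from it. The token names and training sequences are invented for the example.

```python
import random
from collections import Counter, defaultdict

def train_bigram(sequences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    """Sample a new sequence token by token from the learned counts."""
    seq = [start]
    while len(seq) < length and counts[seq[-1]]:
        options = counts[seq[-1]]
        candidates = list(options)
        weights = [options[t] for t in candidates]
        seq.append(rng.choices(candidates, weights=weights, k=1)[0])
    return seq

# Invented training data: short sequences of pitch-name tokens.
training = [["C", "E", "G", "C"], ["C", "E", "G", "E"], ["E", "G", "C", "E"]]
model = train_bigram(training)
new_sequence = generate(model, "C", 8, random.Random(0))
print(new_sequence)
```

Every transition in the generated sequence was seen in training, but the sequence as a whole is new. A Transformer does the same kind of thing with a far richer view of the preceding context.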

In AWS DeepComposer, we use the TransformerXL architecture to generate music because it’s capable of capturing long-term dependencies that are 4.5 times longer than a traditional Transformer. Furthermore, it has been shown to be 18 times faster than a traditional Transformer during inference. This means that AWS DeepComposer can provide you with higher quality musical compositions at lower latency when generating new compositions.

Extending your input melody using AWS DeepComposer

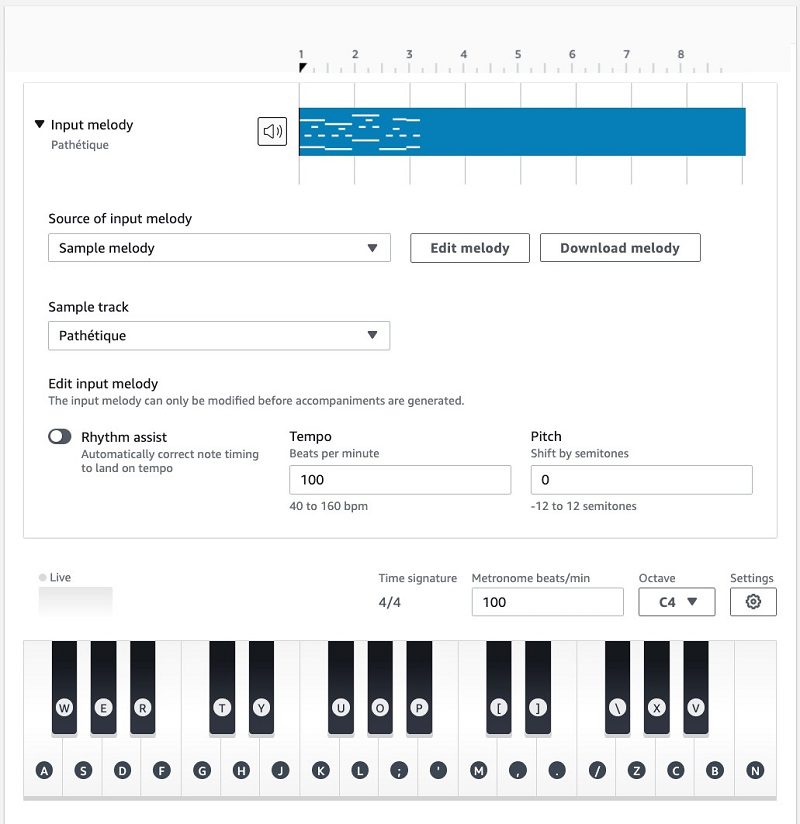

The Transformers technique extends your input melody by up to 20 seconds. The following screenshot shows the view of your input melody on the AWS DeepComposer console.

To extend your input melody, complete the following steps:

- On the AWS DeepComposer console, in the navigation pane, choose Music studio.

- Choose the arrow next to Input melody to expand that section.

- For Sample track, choose a melody specifically recommended for the Transformers technique.

These options represent the kinds of complex classical-genre melodies that will work best with the Transformer technique. You can also import a MIDI file or create your own melody using a MIDI keyboard.

- Under Generative AI technique, for Model parameters, choose Transformers.

The available model, TransformerXLClassical, is preselected.

- Under Advanced parameters, for Model parameters, you have seven parameters that you can adjust (more details about these parameters are provided in the next section of this post).

- Choose Extend input melody.

- To listen to your new composition, choose Play (►).

This model works by extending your input melody by up to 20 seconds.

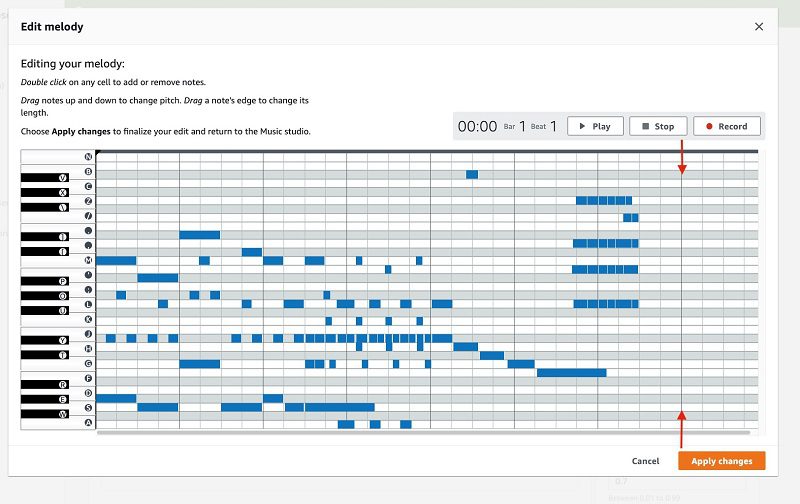

- After performing inference, you can use the Edit melody tool to add or remove notes, or change the pitch or the length of notes in the track generated.

- You can repeat these steps to create compositions up to 2 minutes long.

The following compositions were created using the TransformerXLClassical model in AWS DeepComposer:

Beethoven:

Mozart:

Bach:

In the next section of this post, we look at how different inference parameters affect your output and how we can effectively use these parameters to create interesting and diverse music.

Configuring the advanced parameters for Transformers

In AWS DeepComposer Music studio, you can choose from seven different Advanced parameters that can be used to change how your extended melody is created:

- Sampling technique and sampling threshold

- Creative risk

- Input duration

- Track extension duration

- Maximum rest time

- Maximum note length

Sampling technique and sampling threshold

You have three sampling techniques to choose from: TopK, Nucleus, and Random. You can also set the Sampling threshold value for your chosen technique. We first discuss each technique and provide some examples of how it affects the output below.

TopK sampling

When you choose the TopK sampling technique, the model samples only from the K tokens that have the highest probability of occurring. To set the value for K, change the Sampling threshold.

If your sampling threshold is set high, the number of available tokens (K) is large. A large number of available tokens means the model can choose from a wider variety of musical tokens. In your extended melody, this means the generated notes are likely to be more diverse, but it comes at the cost of potentially creating less coherent music.

On the other hand, if you choose a threshold value that is too low, the model is limited to choosing from a smaller set of tokens (those that the model believes have a higher probability of being correct). In your extended melody, you might notice less musical diversity and more repetitive results.
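The following is a minimal sketch of TopK filtering, assuming the sampling threshold is a fraction of the vocabulary size so that K = threshold × number of tokens. The rounding rule, the function names, and the probabilities are assumptions for illustration.

```python
import random

def top_k_filter(probs, threshold):
    """Keep the K most likely tokens, with K = threshold * vocabulary size."""
    k = max(1, round(threshold * len(probs)))  # assumed rounding rule
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept = ranked[:k]
    total = sum(probs[i] for i in kept)
    # Renormalize so the kept probabilities sum to 1 again.
    return {i: probs[i] / total for i in kept}

def sample(filtered, rng):
    """Draw one token id from the filtered distribution."""
    ids = list(filtered)
    return rng.choices(ids, weights=[filtered[i] for i in ids], k=1)[0]

probs = [0.05, 0.3, 0.1, 0.3, 0.2, 0.05]  # made-up distribution over 6 tokens
narrow = top_k_filter(probs, 0.1)  # K = 1: always the same token
wide = top_k_filter(probs, 0.9)    # K = 5: much more variety

rng = random.Random(0)
print(sorted(narrow), sorted(wide))
print([sample(wide, rng) for _ in range(5)])
```

With a threshold of 0.1, only one token survives, which is why low thresholds tend to produce repetitive patterns. With 0.9, five of the six tokens remain available.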

Nucleus sampling

At a high level, Nucleus sampling is very similar to TopK: setting a higher sampling threshold allows for more diversity at the cost of coherence or consistency. There is a subtle difference between the two approaches. Nucleus sampling keeps the highest-probability tokens whose cumulative probability first reaches or exceeds the sampling threshold. We do this by sorting the probabilities from greatest to least and calculating a running cumulative sum, stopping at the first token that meets the threshold.

For example, we might have six musical tokens with the probabilities {0.3, 0.3, 0.2, 0.1, 0.05, 0.05}. If we choose TopK with a sampling threshold equal to 0.5, we choose three tokens (six total musical tokens * 0.5). Then we sample between the tokens with probabilities equal to 0.3, 0.3, and 0.2. If we choose Nucleus sampling with a 0.5 sampling threshold, we only sample between two tokens {0.3, 0.3} as the cumulative probability (0.6) exceeds the threshold (0.5).
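The worked example above can be reproduced with a short sketch. The function names are hypothetical, and the small tolerance in the comparison is an assumption added to guard against floating-point rounding.

```python
def top_k_ids(probs, threshold):
    """TopK: keep threshold * vocabulary-size tokens, most likely first."""
    k = max(1, round(threshold * len(probs)))
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return ranked[:k]

def nucleus_ids(probs, threshold):
    """Nucleus: keep top tokens until their cumulative probability reaches the threshold."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= threshold - 1e-12:  # tolerance for float rounding
            break
    return kept

probs = [0.3, 0.3, 0.2, 0.1, 0.05, 0.05]
print(top_k_ids(probs, 0.5))    # [0, 1, 2]
print(nucleus_ids(probs, 0.5))  # [0, 1]
print(len(nucleus_ids(probs, 1.0)))  # 6
```

TopK always keeps the same number of tokens for a given threshold, whereas Nucleus keeps fewer tokens when the distribution is peaked and more when it is flat.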

Random sampling

Random sampling is the most basic sampling technique. With random sampling, the model samples from all the available tokens according to the full output distribution. The output of this sampling technique is equivalent to that of TopK or Nucleus sampling when the sampling threshold is set to 1. The following are some audio clips generated using different sampling thresholds paired with the TopK sampling technique.

The following audio uses TopK and a Sampling threshold equal to 0.1:

Notice how the notes quickly start to form a pattern.

The following audio uses TopK and a Sampling threshold equal to 0.9:

You can decide which one sounds better, but did you hear the difference?

The notes are very diverse, but as a whole the notes lose coherence and sound somewhat random at times. This general trend holds for Nucleus sampling as well, but the results differ from TopK depending on the shape of the output distribution. Play around and see for yourself!

Creative risk

Creative risk is a parameter used to control the randomness of predictions. A low creative risk makes the model more confident but also more conservative in its samples (it’s less likely to sample from unlikely candidate tokens). On the other hand, a high creative risk produces a softer (flatter) probability distribution over the list of musical tokens, so the model takes more risks in its samples (it’s more likely to sample from unlikely candidate tokens), resulting in more diversity and probably more mistakes. Mistakes might include creating longer or shorter notes, longer or shorter periods of rest in the generated melody, or adding wrong notes to the generated melody.
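The flattening effect described above matches how a softmax temperature behaves. The following sketch assumes creative risk acts like a temperature that divides the model's raw scores before the softmax; the post doesn't confirm the exact formula, and the scores are made up.

```python
import math

def apply_creative_risk(scores, risk):
    """Softmax over scores divided by `risk` (treated here as a temperature)."""
    scaled = [s / risk for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, 1.0, 0.0]  # made-up raw scores for three candidate tokens
cautious = apply_creative_risk(scores, 0.5)
risky = apply_creative_risk(scores, 2.0)

print([round(p, 3) for p in cautious])
print([round(p, 3) for p in risky])
```

At low risk, almost all of the probability sits on the top-scoring token. At high risk, the distribution flattens and the least likely token gets sampled far more often.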

Input duration

This parameter tells the model what portion of the input melody to use during inference. The portion used is defined as the number of seconds selected counting backwards from the end of the input track. When extending the melody, the model conditions the output it generates based on the portion of the input melody you provide. For example, if you choose 5 seconds as the input duration, the model only uses the last 5 seconds of the input melody for conditioning and ignores the remaining portion when performing inference. The following audio clips were generated using different input durations.

The following audio has an input duration of 5 seconds:

The following audio has an input duration of 30 seconds:

The output conditioned on 30 seconds of input draws more inspiration from the input melody.
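Selecting the conditioning portion can be sketched as a simple windowing step. The note representation `(pitch, start, end)` and the choice to clip notes that straddle the cutoff are assumptions for illustration.

```python
def conditioning_window(notes, input_duration):
    """Keep only what sounds during the last `input_duration` seconds of the track."""
    track_end = max(end for _, _, end in notes)
    cutoff = max(0.0, track_end - input_duration)
    window = []
    for pitch, start, end in notes:
        if end > cutoff:
            # Clip notes that begin before the cutoff and re-base times to zero.
            window.append((pitch, max(start, cutoff) - cutoff, end - cutoff))
    return window

# A made-up 10-second melody: (MIDI pitch, start, end) in seconds.
melody = [(60, 0.0, 2.0), (62, 2.0, 4.0), (64, 4.0, 8.0), (65, 8.0, 10.0)]
print(conditioning_window(melody, 5))
print(conditioning_window(melody, 30))
```

With a 5-second input duration, the first two notes are ignored entirely. With 30 seconds, the whole 10-second melody is used because the track is shorter than the window.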

Track extension duration

When extending the melody, the Transformer continuously generates tokens until the generated portion reaches the track extension duration you have selected. The model sometimes generates less than the value you selected because it generates output in terms of tokens, not time, and tokens can represent different lengths of time. For example, a token could represent a note duration of 0.1 seconds or 1 second depending on what the model thinks is appropriate. Either token, however, takes the same amount of run time for the model to generate. Because the model can generate hundreds of tokens, this difference adds up. To avoid extreme runtime latencies, the model sometimes stops before generating your entire output.
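One way to picture this behavior is a generation loop with two stopping conditions: the requested duration and a cap on the number of tokens. The cap (`max_tokens`) and the `next_token` callback are hypothetical; the post only says that generation sometimes stops early to limit latency.

```python
def extend(next_token, target_seconds, max_tokens):
    """Generate tokens until the target duration or the token budget is reached."""
    generated, elapsed = [], 0.0
    while elapsed < target_seconds and len(generated) < max_tokens:
        name, seconds = next_token()  # each call costs about the same run time
        generated.append(name)
        elapsed += seconds
    return generated, elapsed

# Two stand-in "models": one emits 1-second notes, the other 0.1-second notes.
long_tokens, long_elapsed = extend(lambda: ("NOTE", 1.0), 20, 100)
short_tokens, short_elapsed = extend(lambda: ("NOTE", 0.1), 20, 100)

print(len(long_tokens), long_elapsed)
print(len(short_tokens), round(short_elapsed, 2))
```

With 1-second tokens, the loop reaches the full 20 seconds in 20 tokens. With 0.1-second tokens, it exhausts the 100-token budget after only about 10 seconds of music.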

Maximum rest time

During inference, the Transformers model can create musical artifacts. Changing the value of maximum rest time limits the periods of silence, in seconds, the model can generate while performing inference.

Maximum note length

Changing the value of maximum note length limits the amount of time a single note can be held for while performing inference. The following audio clips are some example tracks generated using different maximum rest time and maximum note length.

In the first example audio, we set the maximum note length to 10 seconds.

In the second sample, we set it to 1 second, but set the maximum rest period to 11 seconds.

In the third sample, we set the maximum note length to 1 second and maximum rest period to 2 seconds.

The first sample contains extremely long notes. The second sample doesn’t contain long notes, but contains many long gaps in the music. On the other hand, the third sample contains both shorter notes and shorter gaps.
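The effect of these two limits can be approximated with a post-processing sketch on a monophonic melody. This is an assumption about the behavior, not a description of how AWS DeepComposer enforces the limits during inference; the note values are invented.

```python
def limit_notes_and_rests(notes, max_note, max_rest):
    """Clamp note lengths and silences in a time-ordered, monophonic melody."""
    limited, cursor, previous_end = [], 0.0, 0.0
    for pitch, start, end in notes:
        rest = min(max(start - previous_end, 0.0), max_rest)  # shorten long silences
        length = min(end - start, max_note)                   # shorten long notes
        new_start = cursor + rest
        limited.append((pitch, new_start, new_start + length))
        cursor = new_start + length
        previous_end = end
    return limited

# One 10-second note, an 11-second silence, then a 1-second note.
raw = [(60, 0.0, 10.0), (62, 21.0, 22.0)]
print(limit_notes_and_rests(raw, max_note=10, max_rest=11))
print(limit_notes_and_rests(raw, max_note=1, max_rest=2))
```

With the looser limits, the long note and long silence pass through unchanged. With a maximum note length of 1 second and a maximum rest of 2 seconds, both are shortened.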

Creating compositions using different AWS DeepComposer techniques

What’s great about AWS DeepComposer is that you can mix and match the new Transformers technique with the other techniques found in AWS DeepComposer, such as the AR-CNN and GAN techniques.

To create a sample, we completed the following steps:

- Choose the sample melody Pathétique.

- Use the Transformers technique to extend the melody.

For this track, we extended the melody to 11 bars. Transformers tries to extend the melody up to the value you choose for the extension duration.

- The AR-CNN and GAN techniques only work with eight bars of input, so we use the Edit melody feature to cut the track down to eight bars.

- Use the AR-CNN technique to fill in notes and enhance the melody.

For this post, we set Sampling iterations equal to 100.

- We use the GAN technique, paired with the MuseGAN algorithm and the Rock model, to generate accompaniments.

The following audio is our final output:

We think the output sounds pretty impressive. What do you think? Play around and see what kind of composition you can create yourself!

Conclusion

You’ve now learned about the Transformer model and how AWS DeepComposer uses it to extend your input melody. You can also better understand how each parameter for the Transformers technique can affect the characteristics of your composition.

To continue exploring AWS DeepComposer, consider some of the following:

- Choose a different input melody. You can try importing a track or recording your own.

- Use the Edit melody feature to assist your AI or correct mistakes.

- Try feeding the output of the AR-CNN model into the Transformers model.

- Iteratively extend your melody to create a musical composition up to 2 minutes long.

Although you don’t need a physical device to experiment with AWS DeepComposer, you can take advantage of a limited-time offer and purchase the AWS DeepComposer keyboard at a special price of $79.20 (20% off) on Amazon.com. The pricing includes the keyboard and a 3-month free trial of AWS DeepComposer.

We’re excited for you to try out various combinations to generate your creative musical piece. Start composing in the AWS DeepComposer Music Studio now!

About the Authors

Rahul Suresh is an Engineering Manager with the AWS AI org, where he has been working on AI based products for making machine learning accessible for all developers. Prior to joining AWS, Rahul was a Senior Software Developer at Amazon Devices and helped launch highly successful smart home products. Rahul is passionate about building machine learning systems at scale and is always looking for getting these advanced technologies in the hands of customers. In addition to his professional career, Rahul is an avid reader and a history buff.

Wayne Chi is an ML Engineer and AI Researcher at AWS. He works on researching interesting Machine Learning problems to teach new developers and then bringing those ideas into production. Prior to joining AWS, he was a Software Engineer and AI Researcher at JPL, NASA, where he worked on AI Planning and Scheduling systems for the Mars 2020 Rover (Perseverance). In his spare time, he enjoys playing tennis, watching movies, and learning more about AI.

Liang Li is an AI Researcher at AWS, where she works on AI based products to bring new cutting-edge ideas about Deep Learning to teach developers. Prior to joining AWS, Liang graduated from the University of Tennessee Knoxville with a Ph.D. in EE, and she has been focusing on ML projects since graduation. In her spare time, she enjoys cooking and hiking.

Suri Yaddanapudi is an AI Researcher and ML Engineer at AWS. He works on researching and implementing modern machine learning algorithms across different domains and teaching them to customers in a fun way. Prior to joining AWS, Suri graduated with his Ph.D. degree from University of Cincinnati and his thesis was focused on implementing AI techniques to Drug Repurposing. In his spare time, he enjoys reading, watching anime and playing futsal.

Aashiq Muhamed is an AI Researcher and ML Engineer at AWS. He believes that AI can change the world and that democratizing AI is key to making this happen. At AWS, he works on creating meaningful AI products and translating ideas from academia into industry. Prior to joining AWS, he was a graduate student at Stanford where he worked on model reduction in robotics, learning and control. In his spare time, he enjoys playing the violin and thinking about healthcare on Mars.

Patrick L. Cavins is a Programmer Writer for DeepComposer and DeepLens. Previously, he worked in radiochemistry using isotopically labelled compounds to study how plants communicate. In his spare time, he enjoys skiing, playing the piano, and writing.

Maryam Rezapoor is a Senior Product Manager with AWS AI Devices team. As a former biomedical researcher and entrepreneur, she finds her passion in working backward from customers’ needs to create new impactful solutions. Outside of work, she enjoys hiking, photography, and gardening.
