Optimizing ML models for iOS and macOS devices with Amazon SageMaker Neo and Core ML

Core ML is a machine learning (ML) model format created and supported by Apple for compiling, deploying, and running models on Apple devices. Developers who train their models in popular frameworks such as TensorFlow and PyTorch convert them to Core ML format to deploy them on Apple devices.

AWS has automated model conversion to Core ML in the cloud with Amazon SageMaker Neo. Neo is an ML model compilation service on AWS that automatically converts models trained in TensorFlow, PyTorch, MXNet, and other popular frameworks and optimizes them for the target of your choice. With automated conversion to Core ML, Neo makes it easier to take models built with libraries such as TensorFlow and PyTorch and use them in apps for Apple platforms.

In this post, we show how to set up automatic model conversion, add a model to your app, and deploy and test your new model.

Prerequisites

To get started, you first need to create an AWS account and create an AWS Identity and Access Management (IAM) administrator user and group. For instructions, see Set Up Amazon SageMaker. You also need Xcode 12 or later installed on your Mac.

Converting models automatically

One of the biggest benefits of using Neo is automating model conversion from a framework format such as TensorFlow or PyTorch to Core ML format by hosting the coremltools library in the cloud. You can do this via the AWS Command Line Interface (AWS CLI), Amazon SageMaker console, or SDK. For more information, see Use Neo to Compile a Model.

You can train your models in SageMaker and convert them to Core ML format with the click of a button. To set up your notebook instance, generate a Core ML model, and create your compilation job on the SageMaker console, complete the following steps:

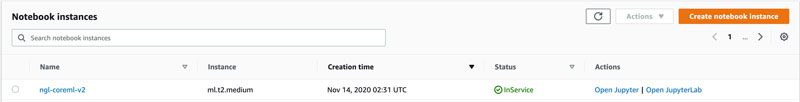

- On the SageMaker console, under Notebook, choose Notebook instances.

- Choose Create notebook instance.

- For Notebook instance name, enter a name for your notebook.

- For Notebook instance type, choose your instance type (for this post, the default ml.t2.medium is sufficient).

- For IAM role, choose your role or let AWS create a role for you.

After the notebook instance is created, the status changes to InService.

- Choose Open Jupyter or Open JupyterLab to open the instance.

You’re ready to start with your first Core ML model.

- Begin your notebook by importing some libraries:

- Right-click the following image and save it.

You use this image later to test the model, and also as the sample input when you trace the segmentation model.

- Upload the image to your working directory. If you’re using a SageMaker notebook instance, you can upload it by choosing Upload.

- To use this image, preprocess it into the normalized tensor format that the segmentation model expects, both for tracing and for testing the model’s output. See the following code:

You now need a model to work with. For this post, we use the TorchVision deeplabv3 segmentation model, which is publicly available.

The deeplabv3 model returns a dictionary, but we want only a specific tensor for our output.

- To remedy this, wrap the model in a module that extracts the output we want:

- Trace the PyTorch model using a sample input:
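Tracing runs the model once on the sample input and records the operations it executes as TorchScript. A minimal sketch, with a hypothetical helper name, might look like the following; the sample input should have the same shape you later give Neo (here `[1, 3, 448, 448]`).

```python
import torch
import torch.nn as nn


def trace_model(model: nn.Module, example_input: torch.Tensor) -> torch.jit.ScriptModule:
    """Traces `model` on `example_input` and returns the TorchScript module."""
    model = model.eval()          # inference behavior for dropout and batch norm
    with torch.no_grad():         # no autograd bookkeeping needed for tracing
        traced = torch.jit.trace(model, example_input)
    return traced
```

Called with the wrapped segmentation model and the preprocessed image batch, this returns the traced module that you save in the next step.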

Your model is now ready.

- Save your model. The following code saves it with the .pth file extension:
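A minimal sketch of the save step, assuming `DeeplabV3.pth` as a hypothetical filename. `ScriptModule.save` writes a TorchScript archive that can be reloaded with `torch.jit.load` without the original Python class definitions.

```python
import torch


def save_traced(traced: torch.jit.ScriptModule, path: str = "DeeplabV3.pth") -> str:
    """Writes the traced model to `path` as a TorchScript archive."""
    traced.save(path)
    return path
```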

Your model artifacts must be in a compressed tarball file format (.tar.gz) for Neo.

- Package the saved model as a .tar.gz archive with the following code:
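A minimal packaging sketch using only the standard library `tarfile` module. The archive name is a placeholder, and `arcname` places the .pth file at the top level of the archive rather than under its local directory path.

```python
import os
import tarfile


def package_model(model_path: str, archive_path: str = "DeeplabV3.tar.gz") -> str:
    """Compresses a single model file into a gzip tarball for Neo."""
    with tarfile.open(archive_path, "w:gz") as tar:
        # Store the file at the archive root instead of nesting its directories.
        tar.add(model_path, arcname=os.path.basename(model_path))
    return archive_path
```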

- Upload the model to your Amazon Simple Storage Service (Amazon S3) bucket.

If you don’t have an S3 bucket, SageMaker can create one for you: create a sagemaker.Session() and call its default_bucket() method. The bucket name has the format sagemaker-{region}-{aws-account-id}. Neo reads the model from Amazon S3, so your model must be saved in an S3 bucket.

- Choose the S3 key prefix (the folder within the bucket) where you want to store the model.

- Upload the model to Amazon S3 and print its S3 URI for future reference:
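The following sketch wraps the upload in a hypothetical helper, `upload_model`, that takes a `sagemaker.Session` as an argument. `default_bucket()` returns the `sagemaker-{region}-{aws-account-id}` bucket (creating it if it doesn't exist), and `upload_data()` returns the S3 URI of the uploaded file. The archive name and the `DeeplabV3` prefix are placeholders.

```python
def upload_model(session, archive_path: str = "DeeplabV3.tar.gz",
                 prefix: str = "DeeplabV3") -> str:
    """Uploads the Neo input archive and returns its S3 URI.

    `session` is expected to be a sagemaker.Session (or an object with the
    same default_bucket()/upload_data() methods).
    """
    bucket = session.default_bucket()  # created on first use if missing
    model_path = session.upload_data(path=archive_path, bucket=bucket,
                                     key_prefix=prefix)
    print(model_path)  # keep this URI for the compilation job's input location
    return model_path
```

In the notebook, you would call it as `model_path = upload_model(sagemaker.Session())` after `import sagemaker`. Passing the session in as a parameter keeps the helper testable without AWS credentials.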

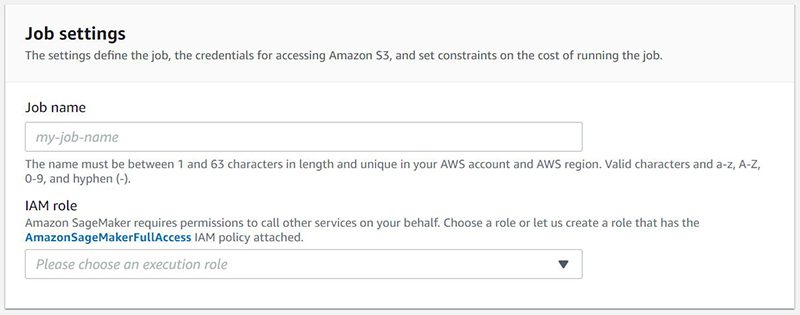

- On the SageMaker console, under Inference, choose Compilation jobs.

- Choose Create compilation job.

- In the Job settings section, for Job name, enter a name for your job.

- For IAM role, choose a role.

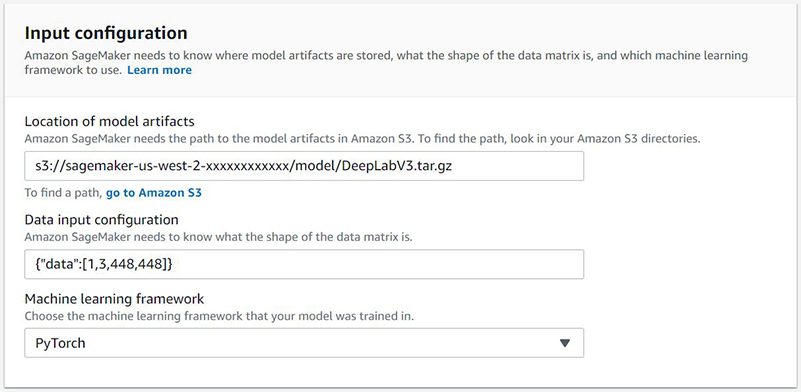

- In the Input configuration section, for Location of model artifacts, enter the location of your model. Use the path from the print statement earlier (print(model_path)).

- For Data input configuration, enter the shape of the model's input tensor. For this post, the TorchVision deeplabv3 segmentation model has the input shape [1,3,448,448].

- For Machine learning framework, choose PyTorch.

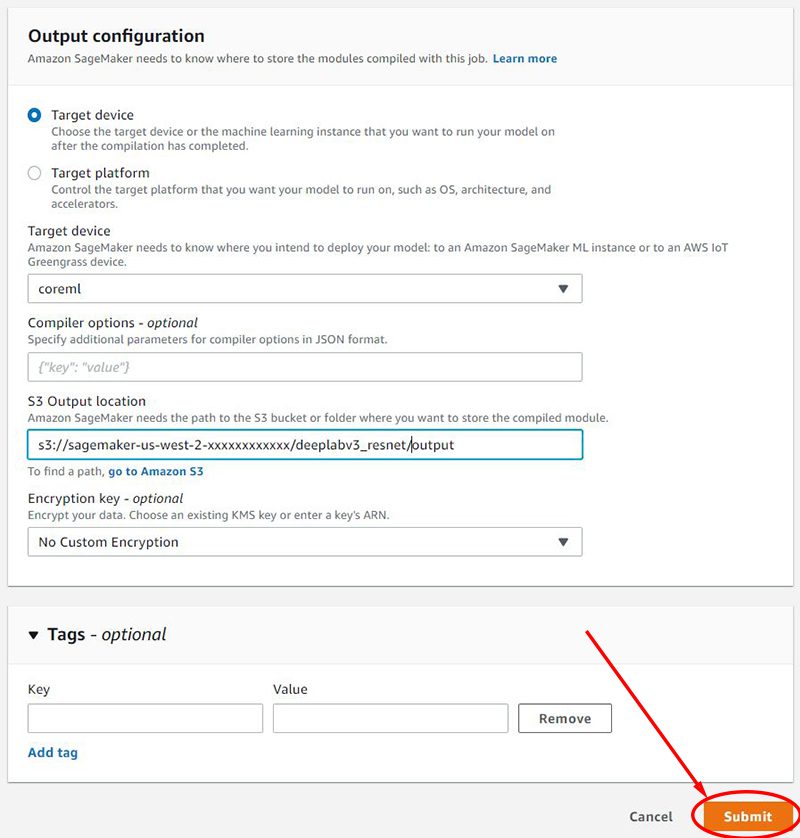

- In the Output configuration section, select Target device.

- For Target device, choose coreml.

- For S3 Output location, enter the output location of the compilation job (for this post, /output).

- Choose Submit.

You’re redirected to the Compilation jobs page on the SageMaker console. When the compilation job is complete, you see the status COMPLETED.

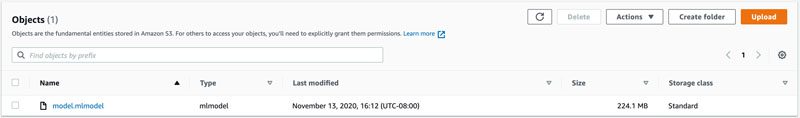

If you go to your S3 bucket, you can see the output of the compilation job. The output has an .mlmodel file extension.
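The same compilation job can also be created programmatically through the CreateCompilationJob API. The following is a minimal sketch for a boto3 SageMaker client (`boto3.client("sagemaker")`). The helper names are hypothetical; the job name, role ARN, S3 URIs, and 15-minute stopping condition are placeholders; and the input shape is given in Neo's dictionary form for PyTorch, `{"input0": [1,3,448,448]}`.

```python
import json
import time

TERMINAL_STATUSES = {"COMPLETED", "FAILED", "STOPPED"}


def build_compilation_request(job_name: str, role_arn: str, model_s3_uri: str,
                              output_s3_uri: str, input_shape=(1, 3, 448, 448),
                              max_runtime_seconds: int = 900) -> dict:
    """Builds CreateCompilationJob arguments for a PyTorch -> Core ML job."""
    return {
        "CompilationJobName": job_name,
        "RoleArn": role_arn,
        "InputConfig": {
            "S3Uri": model_s3_uri,  # the uploaded .tar.gz
            "DataInputConfig": json.dumps({"input0": list(input_shape)}),
            "Framework": "PYTORCH",
        },
        "OutputConfig": {
            "S3OutputLocation": output_s3_uri,
            "TargetDevice": "coreml",
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_seconds},
    }


def compile_and_wait(sm_client, request: dict, poll_seconds: float = 30) -> dict:
    """Submits the job, then polls DescribeCompilationJob until it finishes."""
    sm_client.create_compilation_job(**request)
    while True:
        desc = sm_client.describe_compilation_job(
            CompilationJobName=request["CompilationJobName"])
        if desc["CompilationJobStatus"] in TERMINAL_STATUSES:
            return desc
        time.sleep(poll_seconds)
```

When the returned status is COMPLETED, `desc["ModelArtifacts"]["S3ModelArtifacts"]` points at the compiled output; when it is FAILED, `desc["FailureReason"]` explains why.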

The output of the Neo CreateCompilationJob API is a model in Core ML format, which you can download from the S3 bucket location to your Mac. You can use this conversion process with any type of model that coremltools supports, from image classification and segmentation to object detection, question answering, and text recognition.
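As an alternative to downloading from the Amazon S3 console, the following sketch fetches every .mlmodel object under the job's output location with a boto3 S3 client (`boto3.client("s3")`). The helper names are hypothetical, and for brevity it reads only the first page of `list_objects_v2` results (up to 1,000 keys).

```python
import os
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> tuple:
    """Splits 's3://bucket/key/prefix' into ('bucket', 'key/prefix')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def download_mlmodels(s3_client, output_uri: str, dest_dir: str = ".") -> list:
    """Downloads each .mlmodel object under `output_uri` into `dest_dir`."""
    bucket, prefix = split_s3_uri(output_uri)
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    paths = []
    for obj in response.get("Contents", []):  # first page only
        key = obj["Key"]
        if key.endswith(".mlmodel"):
            local_path = os.path.join(dest_dir, os.path.basename(key))
            s3_client.download_file(bucket, key, local_path)
            paths.append(local_path)
    return paths
```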

Adding the model to your app

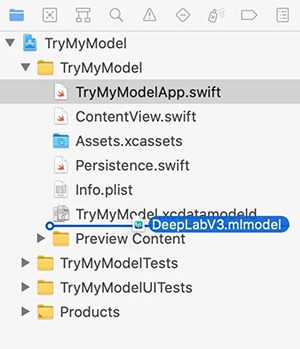

Make sure that you have installed Xcode version 12.0 or later. For more information about using Xcode, see the Xcode documentation. To add the converted Core ML model to your app in Xcode, complete the following steps:

- Download the model from the S3 bucket location.

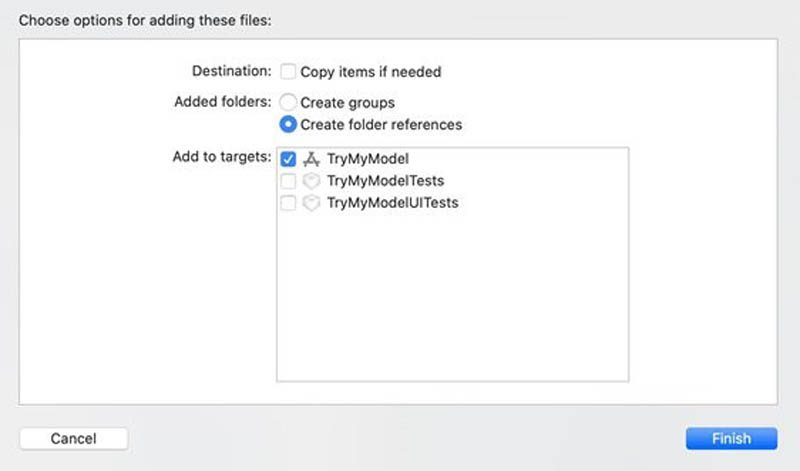

- Drag the model to the Project Navigator in your Xcode app.

- Select the options you want, such as Copy items if needed and the targets to add the model to.

- Choose Finish.

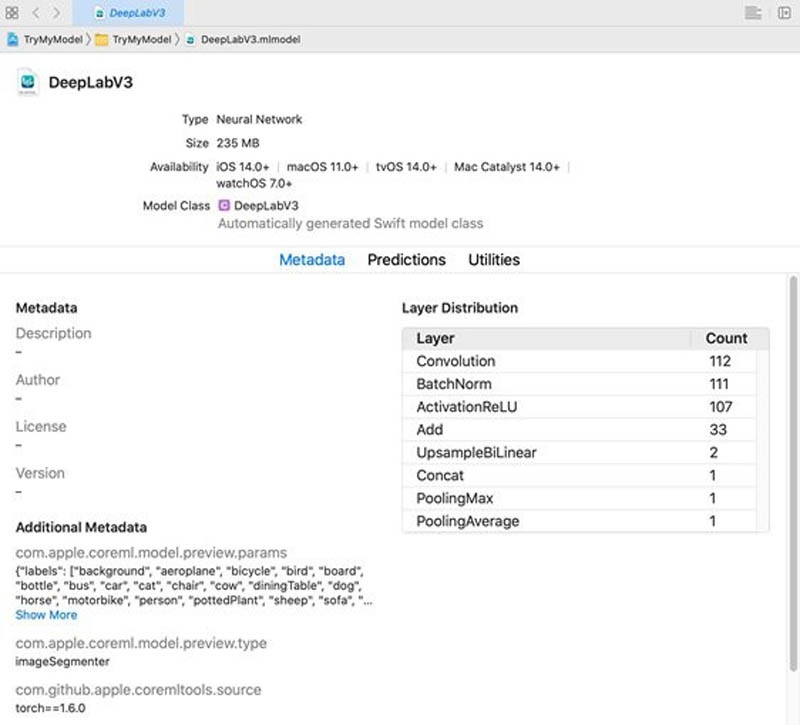

- Choose the model in the Xcode Project Navigator to see its details, including metadata, predictions, and utilities.

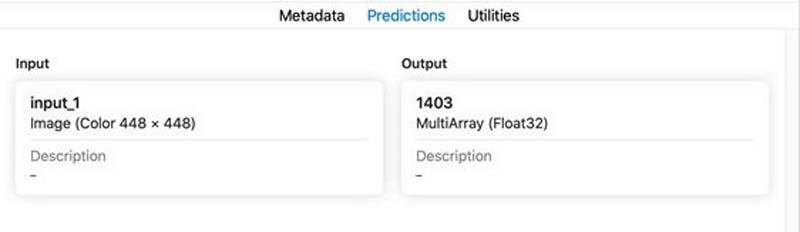

- Choose the Predictions tab to see the model’s input and output.

Deploying and testing the model

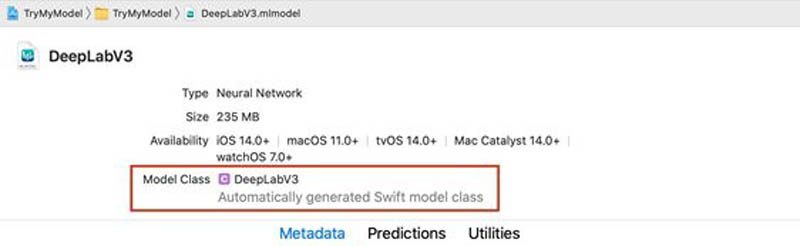

Xcode automatically generates a Swift model class for the Core ML model, which you can use in your code to pass inputs.

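For example, Xcode names the generated class after the model file, so you load the model in your code by creating an instance of that class.

You can now pass input values to the model through this class. To test that your app works as expected with the model, launch the app in the Xcode simulator with a specific target device. The simulator is a useful first check, but it runs on your Mac's hardware, so also test on a physical device before relying on the model's behavior and performance there.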

Conclusion

Neo provides a way to generate Core ML models from TensorFlow and PyTorch models. After you convert your model to Core ML format, there is a well-defined path to compile and deploy it to iOS devices and Macs. If you’re already a SageMaker customer, you can train your model in SageMaker and convert it to Core ML format using Neo with the click of a button.

For more information, see the following resources:

- Amazon SageMaker Neo

- Use Neo to Compile a Model

- Set Up Amazon SageMaker

- DeepLabv3-Resnet 101 model

- Converting a PyTorch Segmentation Model to Core ML

- Core ML developer documentation

- Coremltools documentation

- Xcode documentation

- Integrating a Core ML Model into Your App

- coremltools GitHub repo

About the Author

Lokesh Gupta is a Software Development Manager at AWS AI service.
