Training a reinforcement learning Agent with Unity and Amazon SageMaker RL

Unity is one of the most popular game engines and has been adopted not only for video game development but also by industries such as film and automotive. Unity offers tools to create virtual simulated environments with customizable physics, landscapes, and characters. The Unity Machine Learning Agents Toolkit (ML-Agents) is an open-source project that enables developers to train reinforcement learning (RL) agents against environments created in Unity.

Reinforcement learning is an area of machine learning (ML) that teaches a software agent how to take actions in an environment in order to maximize a long-term objective. For more information, see Amazon SageMaker RL – Managed Reinforcement Learning with Amazon SageMaker. ML-Agents is becoming an increasingly popular tool among many gaming companies for use cases such as game level difficulty design, bug fixing, and cheat detection. Currently, ML-Agents is used to train agents locally, and can’t scale to efficiently use more computing resources. You have to train RL agents on a local Unity engine for an extensive amount of time before obtaining the trained model. The process is time-consuming and not scalable for processing large amounts of data.

In this post, we demonstrate a solution by integrating the ML-Agents Unity interface with Amazon SageMaker RL, allowing you to train RL agents on Amazon SageMaker in a fully managed and scalable fashion.

Overview of solution

SageMaker is a fully managed service that enables fast model development. It provides many built-in features to assist you with training, tuning, debugging, and model deployment. SageMaker RL builds on top of SageMaker, adding pre-built RL libraries and making it easy to integrate with different simulation environments. You can use built-in deep learning frameworks such as TensorFlow and PyTorch with various built-in RL algorithms from the RLlib library to train RL policies. The infrastructure for training and inference is fully managed by SageMaker, so you can focus on RL formulation. SageMaker RL also provides a set of Jupyter notebooks that demonstrate a variety of RL applications in domains such as robotics, operations research, and finance.

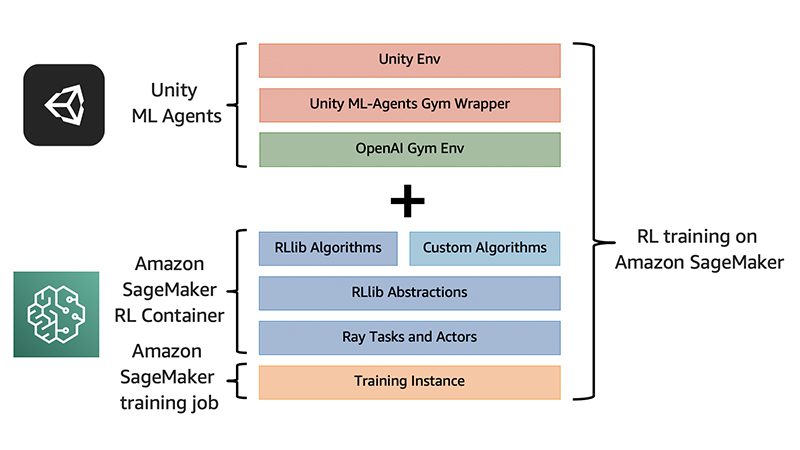

The following diagram illustrates our solution architecture.

In this post, we walk through the specifics of training an RL agent on SageMaker by interacting with the sample Unity environment. To access the complete notebook for this post, see the SageMaker notebook example on GitHub.

Setting up your environments

To get started, we import the needed Python libraries and set up environments for permissions and configurations. The following code contains the steps to set up an Amazon Simple Storage Service (Amazon S3) bucket, define the training job prefix, specify the training job location, and create an AWS Identity and Access Management (IAM) role:
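
A minimal sketch of these setup steps follows. It is not the notebook's exact code: the helper names, the job prefix rl-unity-ray, and the instance type are illustrative assumptions. The SageMaker calls sit inside a function with a lazy import, so they run only where the SageMaker Python SDK and AWS credentials are available.

```python
def s3_uri(bucket, *parts):
    """Join a bucket name and optional key parts into an s3:// URI."""
    key = "/".join(p.strip("/") for p in parts if p)
    return f"s3://{bucket}/{key}" if key else f"s3://{bucket}/"


def setup_training_context(job_name_prefix="rl-unity-ray", local_mode=False):
    """Collect the output path, instance type, and IAM role for a training job.

    Requires the SageMaker Python SDK and valid AWS credentials.
    """
    import sagemaker  # imported lazily so s3_uri works without the SDK

    session = sagemaker.Session()
    bucket = session.default_bucket()      # default S3 bucket for this account and Region
    role = sagemaker.get_execution_role()  # IAM role attached to the notebook instance

    return {
        "s3_output_path": s3_uri(bucket),
        "job_name_prefix": job_name_prefix,
        # "local" runs the container on the notebook instance itself;
        # the instance type is an assumed example, not a recommendation.
        "instance_type": "local" if local_mode else "ml.c5.2xlarge",
        "role": role,
    }
```

Keeping the path helper separate from the SDK calls makes it possible to check the S3 locations before any AWS resources are touched.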

Building a Docker container

SageMaker uses Docker containers to run scripts, train algorithms, and deploy models. A Docker container is a standalone package of software that bundles the code and all its dependencies, so it includes everything needed to run an application. We start by building on top of a pre-built SageMaker Docker image that contains dependencies for Ray, then install the required core packages:

- gym-unity – A wrapper provided by Unity that exposes a Unity environment through the gym interface. Gym is an open-source library that gives you access to a set of classic RL environments

- mlagents-envs – Package that provides a Python API to allow direct interaction with the Unity game engine

Depending on the status of the machine, the Docker building process may take up to 10 minutes. For all pre-built SageMaker RL Docker images, see the GitHub repo.

Unity environment example

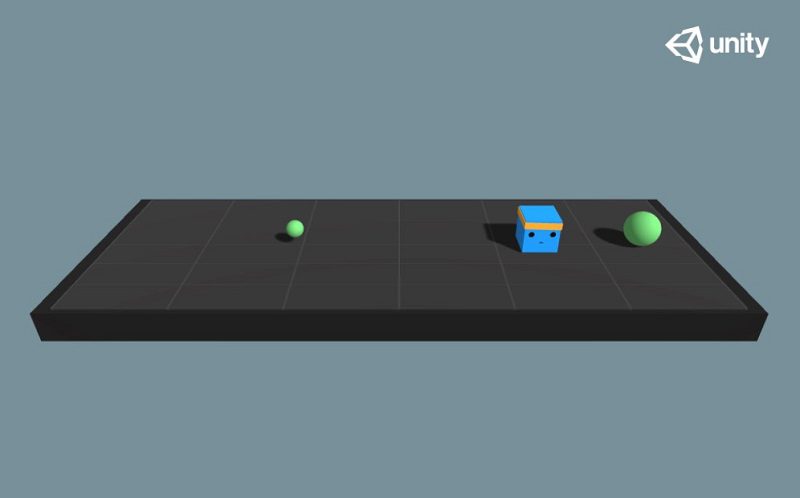

In this post, we use a simple example Unity environment called Basic. In the following visualization, the agent we’re controlling is the blue box that moves left or right. For each step it takes, it costs the agent some energy, incurring small negative rewards (-0.01). Green balls are targets with fixed locations. The agent is randomly initialized between the green balls, and collects rewards when it collides with the green balls. The large green ball offers a reward of +1, and the small green ball offers a reward of +0.1. The goal of this task is to train the agent to move towards the ball that offers the most cumulative rewards.
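
To make the reward structure concrete, the following toy model reproduces the arithmetic of a single episode. It is a sketch under assumptions, not the Unity implementation: the integer corridor, the ball positions (small ball at 7, large ball at 17), and the function name are hypothetical. Only the reward values come from the description of the environment.

```python
STEP_COST = -0.01    # energy cost per move
SMALL_REWARD = 0.1   # reward for reaching the small green ball
LARGE_REWARD = 1.0   # reward for reaching the large green ball

# Hypothetical 1-D layout: integer positions with one ball on each side.
SMALL_BALL_POS = 7
LARGE_BALL_POS = 17


def episode_return(start, direction):
    """Total reward when the agent keeps moving in one direction.

    direction is -1 (left, towards the small ball) or +1 (right, towards
    the large ball); start must lie strictly between the two balls.
    """
    if not SMALL_BALL_POS < start < LARGE_BALL_POS:
        raise ValueError("start must lie strictly between the two balls")
    if direction not in (-1, 1):
        raise ValueError("direction must be -1 or +1")

    position, total = start, 0.0
    while True:
        position += direction
        total += STEP_COST               # every move costs energy
        if position == SMALL_BALL_POS:
            return total + SMALL_REWARD  # the episode ends at a ball
        if position == LARGE_BALL_POS:
            return total + LARGE_REWARD


# Seven steps to the large ball: 1.0 - 7 * 0.01 = 0.93
print(round(episode_return(10, +1), 2))
# Three steps to the small ball: 0.1 - 3 * 0.01 = 0.07
print(round(episode_return(10, -1), 2))
```

Under these assumed positions, heading for the large ball is the better choice from every start position, because even the longest path (nine steps) costs only 0.09.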

Model training, evaluation, and deployment

In this section, we walk you through the steps to train, evaluate, and deploy models.

Writing a training script

Before launching the SageMaker RL training job, we need to specify the configuration of the training process. This is usually done in a single script outside the notebook. The training script defines the input (the Unity environment) and the algorithm for RL training. The following code shows what the script looks like:

The training script has two components:

- UnityEnvWrapper – The Unity environment is stored as a binary file. To load the environment, we need to use the Unity ML-Agents Python API. UnityEnvironment takes the name of the environment and returns an interactive environment object. We then wrap the object with UnityToGymWrapper and return an object that is trainable using Ray-RLlib and SageMaker RL.

- MyLauncher – This class inherits the SageMakerRayLauncher base class for SageMaker RL applications to use Ray-RLlib. Inside the class, we register the environment to be recognized by Ray and specify the configurations we want during training. Example hyperparameters include the name of the environment, discount factor in cumulative rewards, learning rate of the model, and number of iterations to run the model. For a full list of commonly used hyperparameters, see Common Parameters.
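
The experiment configuration specified inside MyLauncher can be sketched as a plain dictionary. This is a minimal sketch, assuming Ray Tune's run/stop/config experiment layout and RLlib's common parameter names (env, env_config, gamma, lr, num_workers). The function name, the experiment name training, the registered name unity_env, and the values for gamma and lr are illustrative assumptions rather than the notebook's settings. The stopping criterion of 10,000 timesteps matches the training run described in this post.

```python
def build_experiment_config(env_name="Basic", num_cpus=4):
    """Return a Ray Tune-style experiment spec for PPO on a Unity environment."""
    if num_cpus < 2:
        raise ValueError("need at least 2 CPUs: one driver plus one rollout worker")
    return {
        "training": {                            # experiment name (assumed)
            "run": "PPO",                        # RLlib algorithm to train with
            "stop": {"timesteps_total": 10000},  # stop after 10,000 agent steps
            "config": {
                "env": "unity_env",              # name the environment is registered under (assumed)
                "env_config": {"env_name": env_name},
                "gamma": 0.995,                  # discount factor (assumed value)
                "lr": 0.0001,                    # learning rate (assumed value)
                "num_workers": num_cpus - 1,     # keep one CPU for the driver
            },
        }
    }
```

Building the configuration in a function keeps the worker count tied to the CPUs of the training instance instead of hard-coding it.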

Training the model

After setting up the configuration and model customization, we’re ready to start the SageMaker RL training job. See the following code:

Inside the code, we specify a few parameters:

- entry_point – The path to the training script we wrote that specifies the training process

- source_dir – The path to the directory with other training source code dependencies aside from the entry point file

- dependencies – A list of paths to directories with additional libraries to be exported to the container

In addition, we specify the container image name, training instance information, output path, and selected metrics. We can also customize any Ray-related parameters using the hyperparameters argument. We launch the SageMaker RL training job by calling estimator.fit, which starts the model training process based on the specifications in the training script.
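
The estimator arguments described above can be assembled as in the following sketch. It is not the notebook's code: the names train-unity.py, src, and common/sagemaker_rl, the helper functions, and the hyperparameter key are placeholders. The keyword names image_uri, instance_type, and instance_count assume version 2 of the SageMaker Python SDK (version 1 used image_name, train_instance_type, and train_instance_count).

```python
def build_estimator_kwargs(image_uri, role, instance_type, s3_output_path,
                           job_name_prefix="rl-unity-ray"):
    """Keyword arguments for sagemaker.rl.RLEstimator (SDK v2 names assumed)."""
    return {
        "entry_point": "train-unity.py",          # training script (placeholder name)
        "source_dir": "src",                      # directory that holds the script
        "dependencies": ["common/sagemaker_rl"],  # extra libraries copied to the container
        "image_uri": image_uri,                   # custom Docker image built earlier
        "role": role,
        "instance_type": instance_type,
        "instance_count": 1,
        "output_path": s3_output_path,
        "base_job_name": job_name_prefix,
        # Ray-related overrides are passed as hyperparameters; this key
        # is a hypothetical example of such an override.
        "hyperparameters": {"rl.training.config.lr": 0.0001},
    }


def launch_training(estimator_kwargs, wait=True):
    """Create the estimator and start the training job (needs the SageMaker SDK)."""
    from sagemaker.rl import RLEstimator, RLToolkit  # lazy import

    params = dict(estimator_kwargs)
    # Fall back to the SDK's default Ray metrics if none were selected.
    params.setdefault("metric_definitions",
                      RLEstimator.default_metric_definitions(RLToolkit.RAY))
    estimator = RLEstimator(**params)
    estimator.fit(wait=wait)
    return estimator
```

Separating the argument dictionary from the launch call lets you inspect or log the job settings before any training resources are started.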

At a high level, the training job initializes a neural network and gradually updates it in the direction in which the agent collects higher rewards. Through multiple trials, the agent eventually learns how to navigate to the high-reward location efficiently. SageMaker RL handles the entire process and allows you to view the training job status on the Training jobs page on the SageMaker console.

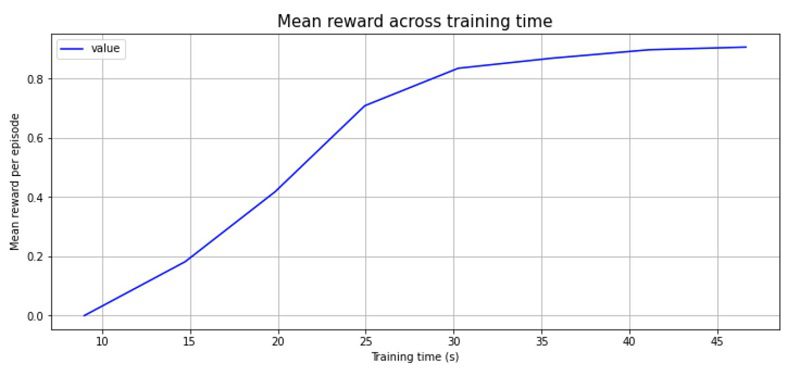

It’s also possible to monitor model performance by examining the training logs recorded in Amazon CloudWatch. Due to the simplicity of the task, the model completes training (10,000 agent movements) with roughly 800 episodes (the number of times the agent reaches a target ball) in under 1 minute. The following plot shows that the average reward collected converges to around 0.9. The maximum reward the agent can get from this environment is 1, and each step costs 0.01, so a mean reward of around 0.9 is consistent with an optimal policy, indicating that our training process is successful!

Evaluating the model

When model training is complete, we can load the trained model to evaluate its performance. Similar to the setup in the training script, we wrap the Unity environment with a gym wrapper. We then create an agent by loading the trained model.

To evaluate the model, we run the trained agent multiple times against the environment with fixed agent and target initializations, and sum the rewards the agent collects at each step of each episode.

Out of five episodes, the average episode reward is 0.92 with the maximum reward of 0.93 and minimum reward of 0.89, suggesting the trained model indeed performs well.
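
The evaluation loop can be sketched generically for any environment that follows the classic gym interface, where reset() returns an observation and step(action) returns a tuple of observation, reward, done flag, and info. This is an illustrative sketch: evaluate, CorridorStub, and always_right are hypothetical stand-ins for the wrapped Unity environment and the trained agent, and the numbers the stub produces are not results from this post.

```python
def evaluate(env, policy, episodes=5):
    """Run the policy for several episodes and return each episode's total reward."""
    returns = []
    for _ in range(episodes):
        obs, done, total = env.reset(), False, 0.0
        while not done:
            obs, reward, done, _info = env.step(policy(obs))
            total += reward  # accumulate the reward collected at each step
        returns.append(total)
    return returns


class CorridorStub:
    """Tiny stand-in environment: start at 0, goal at +3, dead end at -3."""

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        self.pos += 1 if action == 1 else -1
        done = self.pos in (-3, 3)
        reward = -0.01 + (1.0 if self.pos == 3 else 0.0)
        return self.pos, reward, done, {}


def always_right(_obs):
    """Stand-in policy that always picks the 'move right' action."""
    return 1


returns = evaluate(CorridorStub(), always_right)
print("mean:", round(sum(returns) / len(returns), 2),
      "min:", round(min(returns), 2),
      "max:", round(max(returns), 2))
```

With the real setup, the stub would be replaced by the gym-wrapped Unity environment and the policy by a function that queries the restored agent for an action.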

Deploying the model

We can deploy the trained RL policy with just a few lines of code using the SageMaker model deployment API. You can pass an input and get back the optimal action based on the policy. The input shape needs to match the observation input shape from the environment.

For the Basic environment, we deploy the model and pass an input to the predictor:

The model predicts an indicator corresponding to moving left or right. The recommended direction of movement for the blue box agent always points towards the larger green ball.

Cleaning up

When you’re finished running the model, call predictor.delete_endpoint() to delete the model deployment endpoint to avoid incurring future charges.

Customizing training algorithms, models, and environments

In addition to the preceding use case, we encourage you to explore the customization capabilities this solution supports.

In the preceding code example, we specify Proximal Policy Optimization (PPO) as the training algorithm. PPO is a popular RL algorithm that performs comparably to state-of-the-art approaches but is much simpler to implement and tune. Depending on your use case, you can choose the most suitable algorithm for training by either selecting from the comprehensive list of algorithms already implemented in RLlib or building a custom algorithm from scratch.

By default, RLlib applies a pre-defined convolutional neural network or fully connected neural network. However, you can create a custom model for training and testing. Following the examples from RLlib, you can register the custom model by calling ModelCatalog.register_custom_model, then refer to the newly registered model using the custom_model argument.

In our code example, we invoke a predefined Unity environment called Basic, but you can experiment with other pre-built Unity environments. However, as of this writing, our solution only supports single-agent environments. When you build a new environment, register it by calling register_env and refer to it with the env parameter.

Conclusion

In this post, we walk through how to train an RL agent to interact with Unity game environments using SageMaker RL. We use a pre-built Unity environment example for the demonstration, but encourage you to explore using custom or other pre-built Unity environments.

SageMaker RL offers a scalable and efficient way of training RL gaming agents to play game environments powered by Unity. For the notebook containing the complete code, see Unity 3D Game with Amazon SageMaker RL.

If you’d like help accelerating your use of ML in your products and processes, please contact the Amazon ML Solutions Lab.

About the Authors

Yohei Nakayama is a Deep Learning Architect at Amazon Machine Learning Solutions Lab, where he works with customers across different verticals to accelerate their use of artificial intelligence and AWS Cloud services to solve their business challenges. He is interested in applying ML/AI technologies to the space industry.

Henry Wang is a Data Scientist at Amazon Machine Learning Solutions Lab. Prior to joining AWS, he was a graduate student at Harvard in Computational Science and Engineering, where he worked on healthcare research with reinforcement learning. In his spare time, he enjoys playing tennis and golf, reading, and watching StarCraft II tournaments.

Yijie Zhuang is a Software Engineer with Amazon SageMaker. He did his MS in Computer Engineering from Duke. His interests lie in building scalable algorithms and reinforcement learning systems. He contributed to Amazon SageMaker built-in algorithms and Amazon SageMaker RL.
