Translating JSON documents using Amazon Translate

JavaScript Object Notation (JSON) is a schema-less, lightweight format for storing and transporting data. It’s a text-based, self-describing representation of structured data that is based on key-value pairs. JSON is supported either natively or through libraries in most major programming languages, and is commonly used to exchange information between web clients and web servers. Over the last 15 years, JSON has become ubiquitous on the web and is the format of choice for most web services.

To reach more users, you often want to localize your content and applications, which may be in JSON format. This post shows you a serverless approach for easily translating JSON documents using Amazon Translate. A serverless architecture suits this task because it is event-driven and scales automatically, which makes it cost-effective. In this approach, the JSON keys are left as they are and only the text values are translated. This preserves the context of the text, so that translations can be handled with greater precision. The approach presented here was recently used by a large higher education customer of AWS for translating media documents that are in JSON format.

Amazon Translate is a neural machine translation service that delivers fast, high-quality, affordable, and customizable language translation. Neural machine translation uses deep learning models to deliver more accurate and natural-sounding translation than traditional statistical and rule-based translation algorithms. The translation service is trained on a wide variety of content across different use cases and domains to perform well on many kinds of content. Its asynchronous batch processing capability enables you to translate a large collection of text, HTML, and OOXML documents with a single API call.

In this post, we walk you through creating an automated and serverless pipeline for translating JSON documents using Amazon Translate.

Solution overview

Amazon Translate currently supports ignoring tags and translating only the text content in XML documents. In this solution, we therefore first convert JSON documents to XML documents, use Amazon Translate to translate the text content in the XML documents, and then convert the XML documents back to JSON.
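To make the JSON-to-XML step concrete, here is a minimal sketch in Python using only the standard library. The element names (`root`, `object`, `array`, `string`, `literal`) and the `key` attribute are hypothetical choices for illustration, not necessarily the format used in the GitHub repo. JSON keys go into attributes and only string values become element text, on the assumption that Amazon Translate translates element text and leaves attribute values untouched; numbers, booleans, and null are also parked in an attribute so they cannot be altered.

```python
import json
import xml.etree.ElementTree as ET


def _append(parent, value, key=None):
    """Append an element describing one JSON value under `parent`."""
    if isinstance(value, dict):
        el = ET.SubElement(parent, "object")
        for k, v in value.items():
            _append(el, v, key=k)
    elif isinstance(value, list):
        el = ET.SubElement(parent, "array")
        for item in value:
            _append(el, item)
    elif isinstance(value, str):
        el = ET.SubElement(parent, "string")
        el.text = value  # the only text the translator is meant to see
    else:
        # Numbers, booleans, and null are kept in an attribute so they are not translated.
        el = ET.SubElement(parent, "literal", value=json.dumps(value))
    if key is not None:
        el.set("key", key)  # the JSON key is stored as an attribute, never as element text
    return el


def json_to_xml(json_text):
    """Convert a JSON document (given as a string) to an XML string."""
    root = ET.Element("root")
    _append(root, json.loads(json_text))
    return ET.tostring(root, encoding="unicode")
```

For example, `json_to_xml('{"title": "Hello world", "year": 2020}')` produces a `<string key="title">` element holding the text to translate and a `<literal>` element carrying the year in its `value` attribute.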

The solution uses serverless technologies and managed services to provide maximum scalability and cost-effectiveness. In addition to Amazon Translate, the solution uses the following services:

- AWS Lambda – Runs code in response to triggers such as changes in data, changes in application state, or user actions. Because services like Amazon S3 and Amazon SNS can directly trigger a Lambda function, you can build a variety of real-time serverless data-processing systems.

- Amazon Simple Notification Service (Amazon SNS) – Enables you to decouple microservices, distributed systems, and serverless applications with a highly available, durable, secure, fully managed publish/subscribe messaging service.

- Amazon Simple Storage Service (Amazon S3) – Stores your documents and allows for central management with fine-tuned access controls.

- AWS Step Functions – Coordinates multiple AWS services into serverless workflows.

Solution architecture

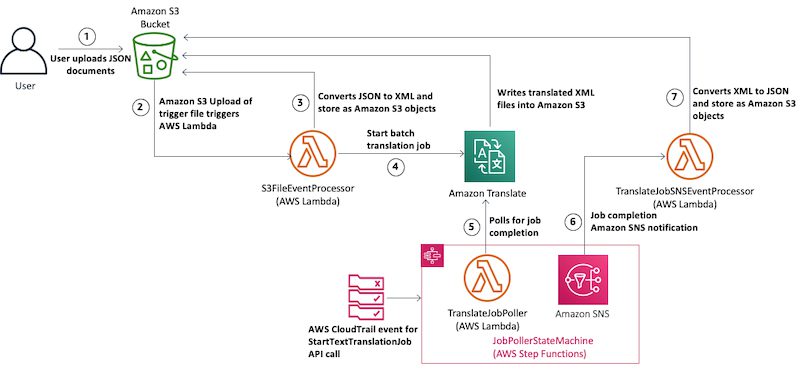

The architecture workflow contains the following steps:

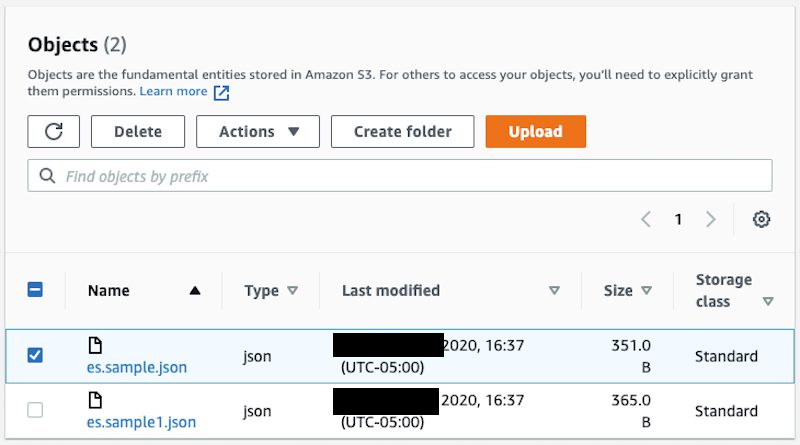

- Users upload one or more JSON documents to Amazon S3.

- The Amazon S3 upload triggers a Lambda function.

- The function converts the JSON documents into XML, stores them in Amazon S3, and invokes Amazon Translate in batch mode to translate the text in the XML documents into the target language.

- The Step Functions-based job poller polls until the translation job completes.

- Step Functions sends an SNS notification when the translation is complete.

- A Lambda function reads the translated XML documents in Amazon S3, converts them to JSON documents, and stores them back in Amazon S3.
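The job-polling step in this workflow runs in Step Functions; as a local stand-in, the following Python sketch shows the same check. It assumes a boto3 Amazon Translate client (or any object with the same method) and uses `describe_text_translation_job`, whose `JobStatus` field moves through values such as `SUBMITTED`, `IN_PROGRESS`, and `COMPLETED`. The function names, delay, and poll limit are hypothetical; in the deployed solution, `get_job_status` corresponds to the task a state machine would call between Wait and Choice states.

```python
import time

# Statuses after which the job will not change any further.
TERMINAL_STATES = {"COMPLETED", "COMPLETED_WITH_ERROR", "FAILED", "STOPPED"}


def get_job_status(translate_client, job_id):
    """One poll: return the current status of a batch translation job."""
    response = translate_client.describe_text_translation_job(JobId=job_id)
    return response["TextTranslationJobProperties"]["JobStatus"]


def wait_for_job(translate_client, job_id, delay_seconds=30, max_polls=240, sleep=time.sleep):
    """Poll until the job reaches a terminal state, like a Wait/Choice loop in Step Functions."""
    for _ in range(max_polls):
        status = get_job_status(translate_client, job_id)
        if status in TERMINAL_STATES:
            return status
        sleep(delay_seconds)  # injectable so the loop can be tested without waiting
    raise TimeoutError(f"translation job {job_id} still running after {max_polls} polls")
```

Usage would look like `wait_for_job(boto3.client("translate"), job_id)`. Step Functions is preferable in production because waiting inside a Lambda function is billed for the full duration and is capped by the function timeout.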

The following diagram illustrates this architecture.

Deploying the solution with AWS CloudFormation

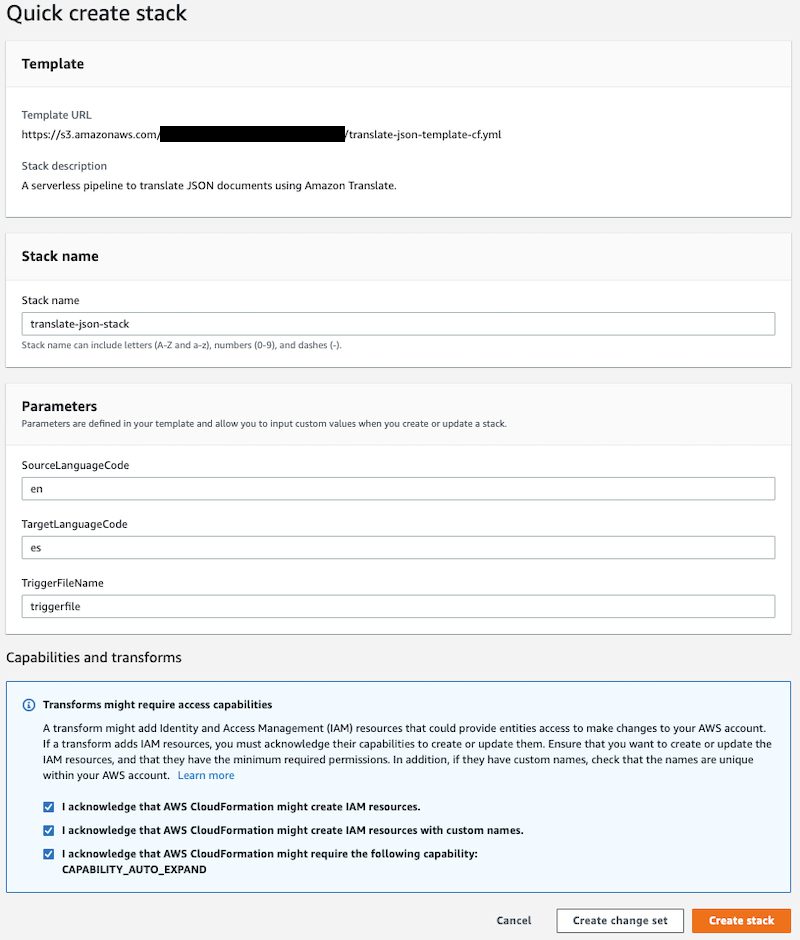

The first step is to use an AWS CloudFormation template to provision the resources the solution needs, including the AWS Identity and Access Management (IAM) roles, IAM policies, and SNS topics.

- Launch the AWS CloudFormation template by choosing Launch Stack (this creates the stack in the us-east-1 Region).

- For Stack name, enter a unique stack name for this account; for example, translate-json-document.

- For SourceLanguageCode, enter the language code for the current language of the JSON documents; for example, en for English.

- For TargetLanguageCode, enter the language code that you want your translated documents in; for example, es for Spanish.

For more information about supported languages, see Supported Languages and Language Codes.

- For TriggerFileName, enter the name of the file that triggers the translation serverless pipeline; the default is triggerfile.

- In the Capabilities and transforms section, select the check boxes to acknowledge that AWS CloudFormation will create IAM resources and transform the AWS Serverless Application Model (AWS SAM) template.

AWS SAM templates simplify the definition of resources needed for serverless applications. When deploying AWS SAM templates in AWS CloudFormation, AWS CloudFormation performs a transform to convert the AWS SAM template into a CloudFormation template. For more information, see Transform.

- Choose Create stack.
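If you prefer scripting to the console, the same stack parameters can be passed programmatically. This is a minimal sketch assuming a boto3 CloudFormation client; `template_url` is a hypothetical placeholder for the template behind the Launch Stack button, which is not reproduced in this post, and the template may define parameters beyond the three shown. The two capabilities correspond to the console check boxes: `CAPABILITY_NAMED_IAM` covers IAM resource creation (named or unnamed) and `CAPABILITY_AUTO_EXPAND` permits the AWS SAM transform.

```python
def create_translation_stack(cfn_client, template_url, stack_name="translate-json-document",
                             source_lang="en", target_lang="es", trigger_file_name="triggerfile"):
    """Create the stack with the same parameter values used in the console walkthrough."""
    parameters = {
        "SourceLanguageCode": source_lang,
        "TargetLanguageCode": target_lang,
        "TriggerFileName": trigger_file_name,
    }
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,  # hypothetical: the template behind the Launch Stack button
        Parameters=[{"ParameterKey": k, "ParameterValue": v} for k, v in parameters.items()],
        # Equivalent of the console check boxes: IAM resources and the AWS SAM transform.
        Capabilities=["CAPABILITY_NAMED_IAM", "CAPABILITY_AUTO_EXPAND"],
    )
    return response["StackId"]
```

To match the walkthrough, create the client with `boto3.client("cloudformation", region_name="us-east-1")`.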

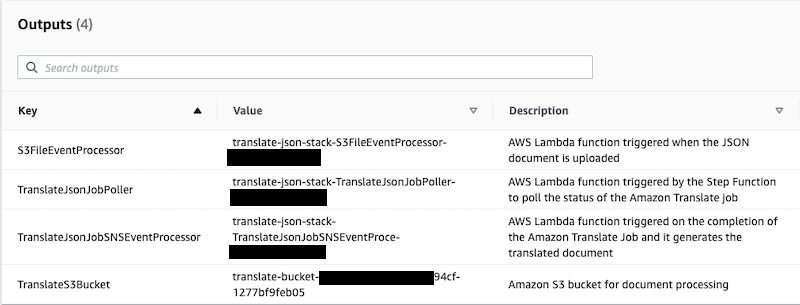

The stack creation may take up to 20 minutes, after which the status changes to CREATE_COMPLETE. You can see the name of the newly created S3 bucket on the Outputs tab.
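The wait for CREATE_COMPLETE and the lookup on the Outputs tab can also be scripted. This sketch assumes a boto3 CloudFormation client and uses its built-in `stack_create_complete` waiter, which raises an error if creation fails. Because the exact output key names are not given here, the function returns every output as a dictionary rather than guessing which key holds the bucket name.

```python
def get_stack_outputs(cfn_client, stack_name):
    """Block until the stack reaches CREATE_COMPLETE, then return its outputs as a dict."""
    # The waiter polls DescribeStacks and raises if the stack ends up in a failure state.
    cfn_client.get_waiter("stack_create_complete").wait(StackName=stack_name)
    stack = cfn_client.describe_stacks(StackName=stack_name)["Stacks"][0]
    return {out["OutputKey"]: out["OutputValue"] for out in stack.get("Outputs", [])}
```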

Translating JSON documents

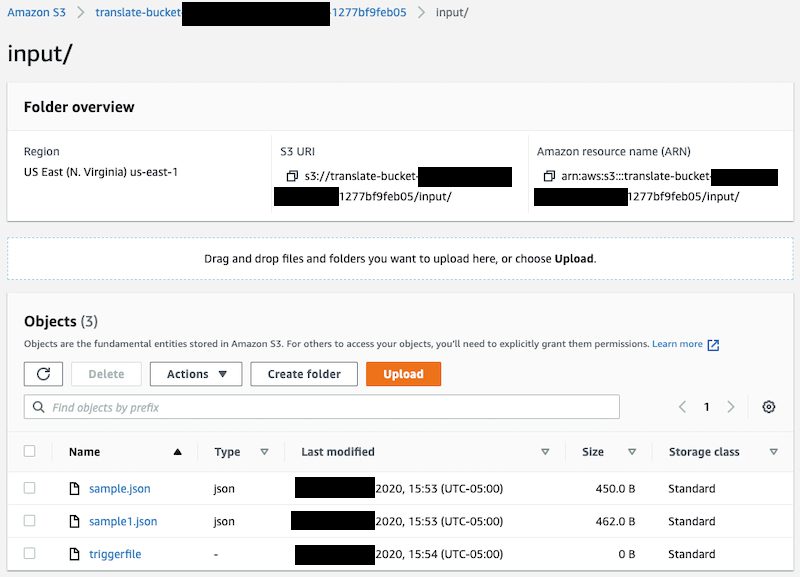

To translate your documents, upload one or more JSON documents to the input folder of the S3 bucket you created in the previous step. For this post, we use the following JSON file:

After you upload all the JSON documents, upload the file that triggers the translation workflow. This file can be a zero-byte file, but the filename should match the TriggerFileName parameter in the CloudFormation stack. The default name for the file is triggerfile.
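The upload order matters: the documents go first and the trigger file last. A minimal sketch with a boto3 S3 client follows; the `input/` key prefix is an assumption about how the "input folder" maps to S3 keys, and the bucket name is whatever your stack's Outputs tab reports.

```python
from pathlib import Path


def upload_and_trigger(s3_client, bucket, json_paths, prefix="input/", trigger_file_name="triggerfile"):
    """Upload JSON documents, then the zero-byte trigger file; return the keys written."""
    keys = []
    for path in map(Path, json_paths):
        key = prefix + path.name  # assumption: the input folder is the "input/" key prefix
        s3_client.upload_file(str(path), bucket, key)
        keys.append(key)
    # The trigger file goes last so every document is in place when the pipeline starts.
    trigger_key = prefix + trigger_file_name
    s3_client.put_object(Bucket=bucket, Key=trigger_key, Body=b"")
    keys.append(trigger_key)
    return keys
```

The `trigger_file_name` argument must match the TriggerFileName stack parameter.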

This upload event triggers a Lambda function that converts the uploaded JSON documents into XML and stores them in the xmlin folder of the S3 bucket. The function then invokes the Amazon Translate startTextTranslationJob API, with the xmlin folder of the S3 bucket as the input location and the xmlout folder as the output location for the translated XML files.
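The batch job call itself can be sketched with boto3's `start_text_translation_job`. This is an illustration, not the repo's `processRequest` code: the job-name format and default language codes are hypothetical, `data_access_role_arn` must be a role that lets Amazon Translate read and write the bucket, and submitting the XML with the `text/html` content type (so markup is not translated) is an assumption about how the solution configures the job.

```python
import uuid


def start_xml_translation(translate_client, bucket, data_access_role_arn,
                          source_lang="en", target_lang="es",
                          input_prefix="xmlin/", output_prefix="xmlout/"):
    """Start one asynchronous batch job over every document under the xmlin/ prefix."""
    response = translate_client.start_text_translation_job(
        JobName=f"translate-json-{uuid.uuid4().hex[:8]}",  # hypothetical naming scheme
        InputDataConfig={
            "S3Uri": f"s3://{bucket}/{input_prefix}",
            # Assumption: XML is submitted as text/html so that tags are left untranslated.
            "ContentType": "text/html",
        },
        OutputDataConfig={"S3Uri": f"s3://{bucket}/{output_prefix}"},
        DataAccessRoleArn=data_access_role_arn,  # role Translate assumes to access the bucket
        SourceLanguageCode=source_lang,
        TargetLanguageCodes=[target_lang],
    )
    return response["JobId"]
```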

The following code is the processRequest method in the

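The Amazon Translate job completion SNS notification from the job poller triggers the Lambda function whose name contains TranslateJsonJobSNSEventProcessor. This function converts the translated XML documents back to JSON and stores them in the S3 bucket, with the target language code as a file name prefix (TargetLanguageCode-….json).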
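The reverse conversion can be sketched in Python with the standard library. This sketch assumes a hypothetical XML layout (not necessarily the repo's): a `root` element wraps one of `<object>`, `<array>`, `<string>`, or `<literal>`; object members carry their JSON key in a `key` attribute; and numbers, booleans, and null are stored as JSON text in a `value` attribute. It also assumes the translated XML is still well formed.

```python
import json
import xml.etree.ElementTree as ET


def _parse(el):
    """Rebuild the JSON value described by one element."""
    if el.tag == "object":
        return {child.get("key"): _parse(child) for child in el}
    if el.tag == "array":
        return [_parse(child) for child in el]
    if el.tag == "string":
        return el.text or ""  # an empty <string/> element parses with text None
    if el.tag == "literal":
        return json.loads(el.get("value"))  # numbers, booleans, and null
    raise ValueError(f"unexpected element <{el.tag}>")


def xml_to_json(xml_text):
    """Convert translated XML back into a JSON string, keeping non-ASCII text readable."""
    root = ET.fromstring(xml_text)
    return json.dumps(_parse(root[0]), ensure_ascii=False)
```

Passing `ensure_ascii=False` keeps translated characters such as "ñ" readable in the output file instead of escaping them as `\u00f1`.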

The following code shows the JSON document translated into Spanish.

The following code is the processRequest method containing the logic in the -TranslateJsonJobSNSEventProcessor-

If the pipeline fails, check the Amazon CloudWatch Logs for the corresponding Lambda function and look for errors that caused the failure.
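As a starting point for that search, this sketch queries a function's log group with the CloudWatch Logs `filter_log_events` API through a boto3 client. It assumes the function writes to the default `/aws/lambda/<function name>` log group and that failures are logged with the word ERROR; the function name you pass in is whatever the stack created.

```python
def recent_errors(logs_client, function_name, filter_pattern="ERROR", limit=50):
    """Return log messages from a Lambda function's log group that match filter_pattern."""
    response = logs_client.filter_log_events(
        logGroupName=f"/aws/lambda/{function_name}",  # default log group for a Lambda function
        filterPattern=filter_pattern,
        limit=limit,
    )
    # Only the first page is read; follow response["nextToken"] to page through more events.
    return [event["message"] for event in response.get("events", [])]
```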

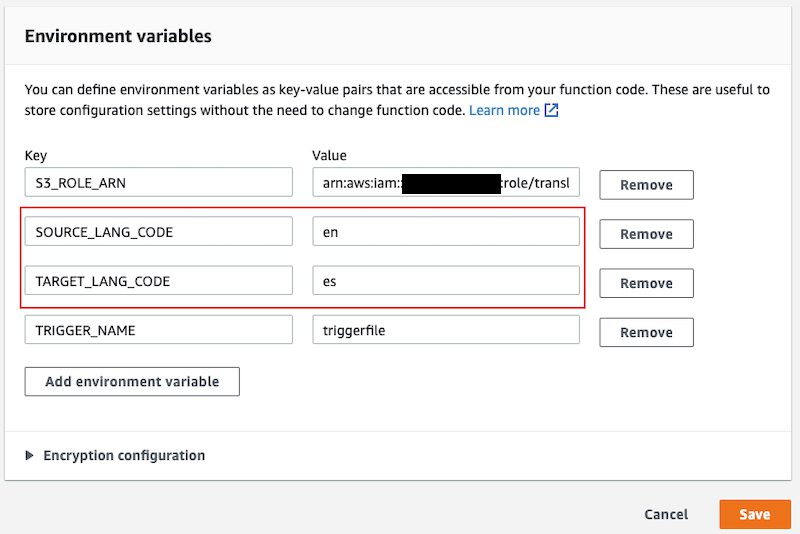

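To translate between a different source and target language pair, update the SOURCE_LANG_CODE and TARGET_LANG_CODE environment variables of the Lambda function that starts the translation job, and then trigger the pipeline again by uploading your JSON documents and the TriggerFileName file into the input folder of the S3 bucket.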
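Changing the language pair can be scripted with a boto3 Lambda client, with one caveat worth showing: `update_function_configuration` replaces the function's entire environment-variable map, so the sketch reads the current variables first and merges the new codes in. The function name is a hypothetical placeholder for the function the stack created.

```python
def set_language_pair(lambda_client, function_name, source_lang, target_lang):
    """Update the language-code variables without discarding the function's other settings."""
    config = lambda_client.get_function_configuration(FunctionName=function_name)
    variables = dict(config.get("Environment", {}).get("Variables", {}))
    variables.update({"SOURCE_LANG_CODE": source_lang, "TARGET_LANG_CODE": target_lang})
    # The Environment argument overwrites the whole map, so every existing variable is sent back.
    lambda_client.update_function_configuration(
        FunctionName=function_name, Environment={"Variables": variables}
    )
    return variables
```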

All code used in this post is available in the GitHub repo. If you want to build your own pipeline without the provided CloudFormation template, you can use the file s3_event_handler.py under the directory translate_json in the GitHub repo. That file contains code to convert a JSON file into XML and to call the Amazon Translate API. The code for converting translated XML back to JSON format is in the file sns_event_handler.py.

Conclusion

In this post, we demonstrated how to translate JSON documents using Amazon Translate asynchronous batch processing.

You can easily integrate the approach into your own pipelines and handle large volumes of JSON text, given the scalable serverless architecture. This approach works for translating JSON documents between any of the more than 70 languages supported by Amazon Translate (as of this writing). Because this solution uses asynchronous batch processing, you can customize your machine translation output using parallel data. For more information on using parallel data, see Customizing Your Translations with Parallel Data (Active Custom Translation). For low-latency translation of smaller JSON documents, where throughput matters less, you can instead perform the translation through the real-time Amazon Translate API.
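For the real-time alternative, no XML round trip is needed: you can walk the parsed JSON and translate each string value in place, leaving keys, numbers, booleans, and null untouched. This is a minimal sketch assuming a boto3 Amazon Translate client and its `translate_text` operation; it sends one request per non-blank string, so each value must fit within the real-time API's per-request size limit, and large documents can run into request-rate quotas.

```python
import json


def translate_json_values(value, translate_client, source_lang, target_lang):
    """Recursively translate string values in parsed JSON, leaving keys and non-strings alone."""
    if isinstance(value, dict):
        return {k: translate_json_values(v, translate_client, source_lang, target_lang)
                for k, v in value.items()}
    if isinstance(value, list):
        return [translate_json_values(v, translate_client, source_lang, target_lang) for v in value]
    if isinstance(value, str) and value.strip():
        response = translate_client.translate_text(
            Text=value, SourceLanguageCode=source_lang, TargetLanguageCode=target_lang
        )
        return response["TranslatedText"]
    return value  # numbers, booleans, null, and blank strings pass through unchanged


def translate_json_document(json_text, translate_client, source_lang="en", target_lang="es"):
    """Translate a whole JSON document given as a string; return the translated JSON string."""
    document = json.loads(json_text)
    translated = translate_json_values(document, translate_client, source_lang, target_lang)
    return json.dumps(translated, ensure_ascii=False)
```

Usage would be `translate_json_document(text, boto3.client("translate"))`. The one-call-per-value design keeps the code simple; for documents with many short strings, the batch pipeline described in this post is likely the better fit.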

For further reading, we recommend the following:

- Asynchronous Batch Processing with Amazon Translate

- Translating documents with Amazon Translate, AWS Lambda, and the new Batch Translate API

- Getting a batch job completion message from Amazon Translate

- Amazon Translate FAQs

About the Authors

Siva Rajamani is a Boston-based Enterprise Solutions Architect for AWS. He enjoys working closely with customers and supporting their digital transformation and AWS adoption journey. His core areas of focus are serverless, application integration, and security. Outside of work, he enjoys outdoors activities and watching documentaries.

Raju Penmatcha is a Senior AI/ML Specialist Solutions Architect at AWS. He works with education, government, and non-profit customers on machine learning and artificial intelligence related projects, helping them build solutions using AWS. When not helping customers, he likes traveling to new places with his family.
