Translate All: Automating multiple file type batch translation with AWS CloudFormation

This is a guest post by Cyrus Wong, an AWS Machine Learning Hero. You can learn more about and connect with AWS Machine Learning Heroes at the community page.

On July 29, 2020, AWS announced that Amazon Translate now supports Microsoft Office documents, including .docx, .xlsx, and .pptx.

The world is full of bilingual countries and cities like Hong Kong. I find myself always needing to prepare Office documents and presentation slides in both English and Chinese. Previously, preparing the translated documents manually was quite time-consuming and error-prone. If I just select all, copy, and paste into a translation tool, then copy and paste the result into a new file, I lose all the formatting and images! My old method was to copy content piece by piece, translate it, then paste it back into the original document, over and over again. The new support for Office documents in Amazon Translate is really great news for teachers like me. It saves a lot of time!

Still, Amazon Translate has to be called separately for each file type. For example, if I have notes in .docx files, presentations in .pptx files, and data in .xlsx files, I have to sort them by file type and start a separate batch translation job (the StartTextTranslationJob API) for each type. In this post, I show how to sort content by document type and make batch translation calls. This solution automates the undifferentiated task of sorting files.
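To make the one-job-per-file-type constraint concrete, here is a minimal sketch of the request for a single batch job, assuming the boto3 start_text_translation_job call. The job name, bucket URIs, and role ARN are hypothetical placeholders, not values from this solution.

```python
def build_job_request(job_name, content_type, input_uri, output_uri,
                      role_arn, source_language, target_languages):
    """Build keyword arguments for one StartTextTranslationJob call."""
    return {
        "JobName": job_name,
        # A batch job accepts exactly one content type for its input folder.
        "InputDataConfig": {"S3Uri": input_uri, "ContentType": content_type},
        "OutputDataConfig": {"S3Uri": output_uri},
        "DataAccessRoleArn": role_arn,
        "SourceLanguageCode": source_language,
        "TargetLanguageCodes": target_languages,
    }


def start_job(request, translate_client=None):
    """Submit the request and return the new job's ID."""
    if translate_client is None:
        import boto3  # imported lazily so the builder works without AWS access
        translate_client = boto3.client("translate")
    return translate_client.start_text_translation_job(**request)["JobId"]


# Hypothetical values for a folder that holds only .docx files.
docx_request = build_job_request(
    job_name="course-notes-docx",
    content_type="application/vnd.openxmlformats-officedocument.wordprocessingml.document",
    input_uri="s3://example-input-bucket/docx/",
    output_uri="s3://example-output-bucket/docx/",
    role_arn="arn:aws:iam::123456789012:role/ExampleTranslateRole",
    source_language="en",
    target_languages=["zh-TW"],
)
```

Because the content type is fixed per job, a folder that mixes .docx, .pptx, and .xlsx files needs three such requests, each pointing at a folder that contains only one type.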

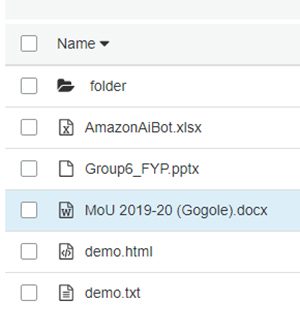

For my workflow, I need to upload all my course materials in a single Amazon Simple Storage Service (Amazon S3) bucket. This bucket often includes different types of files in one folder and subfolders.

However, when I start the Amazon Translate job on the console, I have to choose the file content type. The problem arises when different file types are in one folder without subfolders.
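The sorting that the console leaves to the user can be sketched as a small grouping function. This is an illustration under assumptions, not the solution's actual code: the function name and the sample keys are hypothetical, and the mapping lists the content types that Amazon Translate batch jobs accept for plain text, HTML, and the three Office formats.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# File extension -> content type expected by an Amazon Translate batch job.
EXTENSION_TO_CONTENT_TYPE = {
    ".txt": "text/plain",
    ".html": "text/html",
    ".docx": "application/vnd.openxmlformats-officedocument.wordprocessingml.document",
    ".pptx": "application/vnd.openxmlformats-officedocument.presentationml.presentation",
    ".xlsx": "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet",
}


def group_keys_by_content_type(keys):
    """Group S3 object keys by content type, skipping unsupported extensions."""
    groups = defaultdict(list)
    for key in keys:
        suffix = PurePosixPath(key).suffix.lower()
        content_type = EXTENSION_TO_CONTENT_TYPE.get(suffix)
        if content_type is not None:
            groups[content_type].append(key)
    return dict(groups)


# Hypothetical keys from a mixed course-materials folder.
sample_keys = ["week1/notes.docx", "week1/slides.PPTX", "grades.xlsx", "img/logo.png"]
grouped = group_keys_by_content_type(sample_keys)
```

Each resulting group can then be copied to its own folder and submitted as one job.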

Therefore, our team developed a solution that we’ve called Translate All—a simple AWS serverless application to resolve those challenges and make it easier to integrate with other projects. A serverless architecture is a way to build and run applications and services without having to manage infrastructure. Your application still runs on servers, but all the server management is done by AWS. You no longer have to provision, scale, and maintain servers to run your applications, databases, and storage systems. For more information about serverless computing, see Serverless on AWS.

Solution overview

We use the following AWS services to run this solution:

- AWS Lambda – This serverless compute service lets you run code without provisioning or managing servers, creating workload-aware cluster scaling logic, maintaining event integrations, or managing runtimes. With Lambda, you can run code for virtually any type of application or backend service—all with zero administration.

- Amazon Simple Notification Service – Amazon SNS is a fully managed messaging service for both application-to-application (A2A) and application-to-person (A2P) communication. The A2A pub/sub functionality provides topics for high-throughput, push-based, many-to-many messaging between distributed systems, microservices, and event-driven serverless applications.

- Amazon Simple Queue Service – Amazon SQS is a fully managed message queuing service that enables you to decouple and scale microservices, distributed systems, and serverless applications. Amazon SQS eliminates the complexity and overhead associated with managing and operating message-oriented middleware, and empowers developers to focus on differentiating work.

- AWS Step Functions – This serverless function orchestrator makes it easy to sequence Lambda functions and multiple AWS services into business-critical applications. Through its visual interface, you can create and run a series of checkpointed and event-driven workflows that maintain the application state. The output of one step acts as an input to the next. Each step in your application runs in order, as defined by your business logic.

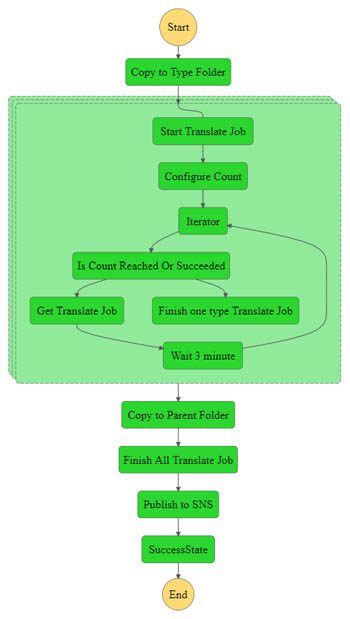

In our solution, if a JSON message is sent to the SQS queue, it triggers a Lambda function to start a Step Functions state machine (see the following diagram).
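A minimal sketch of such a trigger function follows, assuming the standard SQS event shape that Lambda receives and the boto3 Step Functions client. The STATE_MACHINE_ARN environment variable name is hypothetical, and the solution's real handler may differ.

```python
import json
import os


def handler(event, context, sfn_client=None):
    """Start one state machine execution per SQS record."""
    if sfn_client is None:
        import boto3  # imported lazily so the sketch can be exercised with a stub
        sfn_client = boto3.client("stepfunctions")

    # STATE_MACHINE_ARN is a hypothetical environment variable name.
    state_machine_arn = os.environ["STATE_MACHINE_ARN"]
    execution_arns = []
    for record in event["Records"]:
        message = json.loads(record["body"])  # fails fast on a non-JSON message
        response = sfn_client.start_execution(
            stateMachineArn=state_machine_arn,
            input=json.dumps(message),
        )
        execution_arns.append(response["executionArn"])
    return execution_arns
```

Parsing the body before starting the execution means a malformed message raises in the function instead of failing later inside the state machine.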

The state machine includes the following high-level steps:

- In the Copy to Type Folder stage, the state machine gets all the keys under

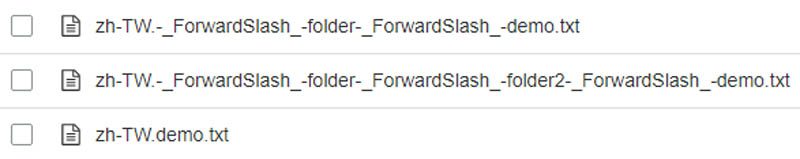

InputS3Uriand copies each type of file into a type-specific, individual folder. If subfolders exist, it replaces / with_ForwardSlash_.

The following screenshot shows the code for this step.

The following screenshot shows the output files.

- The Parallel Map stage arranges the data according to contentTypes and starts the translation job workflow in parallel.

- The Start Translation Job workflow polls the job status in a loop until all translation jobs are complete.

- In the Copy to Parent Folder stage, the state machine reconstructs the original input folder structure and generates a presigned URL that remains valid for 7 days.

- The final stage publishes the results to Amazon SNS.
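The key renaming in the Copy to Type Folder stage and its reversal in the Copy to Parent Folder stage can be sketched as a pair of pure functions. This is an assumption-laden illustration: the function names and the type folder name are hypothetical, and only the _ForwardSlash_ token comes from the step description above.

```python
SLASH_TOKEN = "_ForwardSlash_"


def to_type_folder_key(relative_key, type_folder):
    """Flatten a nested key so it can sit directly inside a per-type folder."""
    return f"{type_folder}/{relative_key.replace('/', SLASH_TOKEN)}"


def to_original_key(type_folder_key):
    """Undo the flattening and recover the original nested key."""
    flat_name = type_folder_key.split("/", 1)[1]
    return flat_name.replace(SLASH_TOKEN, "/")


# Hypothetical key and folder name.
flattened = to_type_folder_key("week1/notes/intro.docx", "document")
restored = to_original_key(flattened)
```

The round trip is only lossless if the token never occurs in a real file or folder name, which is why a scheme like this has to reserve it.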

Additional considerations

When implementing this solution, consider the following:

- As of this writing, the solution handles only the happy path and assumes that all jobs are in Completed status at the end.

- The default job completion maximum period is 180 minutes; you can change the NumberOfIteration variable to extend it as needed.

- You can’t use the following reserved words in file or folder names: !!plain!!, !!html!!, !!document!!, !!presentation!!, !!sheet!!, or -_ForwardSlash_-.
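The bounded wait described above can be pictured as a polling loop with a fixed number of iterations. The sketch below is plain Python for illustration only; in the solution the loop lives in the Step Functions state machine. The split of 180 minutes into 180 iterations of 60 seconds is an assumption, as are the function names. The status lookup assumes the boto3 describe_text_translation_job call.

```python
import time

# Job statuses after which Amazon Translate stops working on a batch job.
TERMINAL_STATUSES = {"COMPLETED", "COMPLETED_WITH_ERROR", "FAILED", "STOPPED"}


def translate_job_status(job_id, translate_client):
    """Look up the current status of one batch translation job."""
    response = translate_client.describe_text_translation_job(JobId=job_id)
    return response["TextTranslationJobProperties"]["JobStatus"]


def wait_for_jobs(get_status, job_ids, number_of_iteration=180,
                  wait_seconds=60, sleep=time.sleep):
    """Poll until every job is terminal; return False if iterations run out."""
    pending = set(job_ids)
    for _ in range(number_of_iteration):
        pending = {j for j in pending if get_status(j) not in TERMINAL_STATUSES}
        if not pending:
            return True
        sleep(wait_seconds)
    return False
```

Unlike the happy path noted above, this sketch also treats failed and stopped jobs as finished, so a caller would still need to inspect the final statuses.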

Deploy the solution

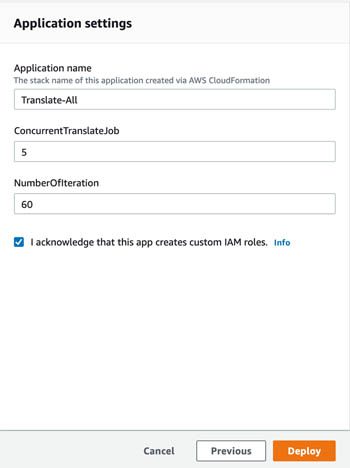

To deploy this solution, complete the following steps:

- Open the Serverless Application Repository link.

- Select I acknowledge that this app creates custom IAM roles.

- Choose Deploy.

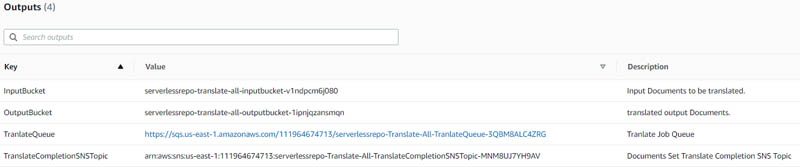

- When the AWS CloudFormation console appears, note the input and output parameters on the Outputs tab.

Test the solution

In this section, we walk you through using the application.

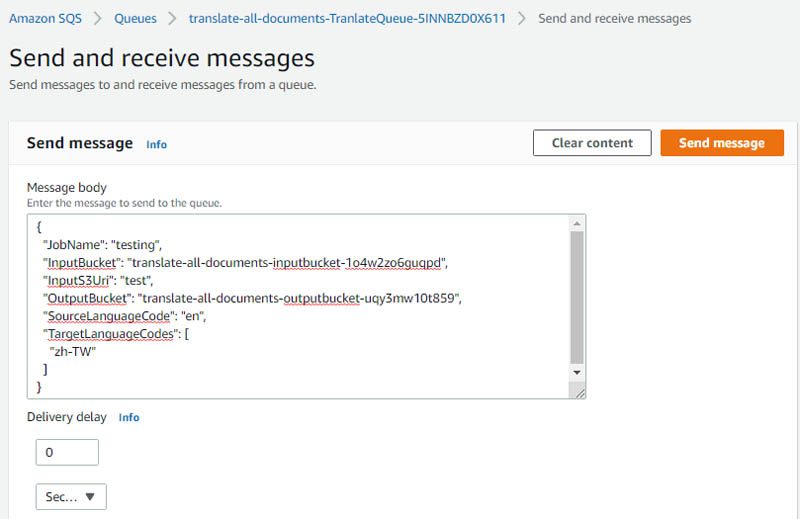

- On the Amazon SQS console, subscribe your email to

TranslateCompletionSNSTopic. - Upload all files into the folder

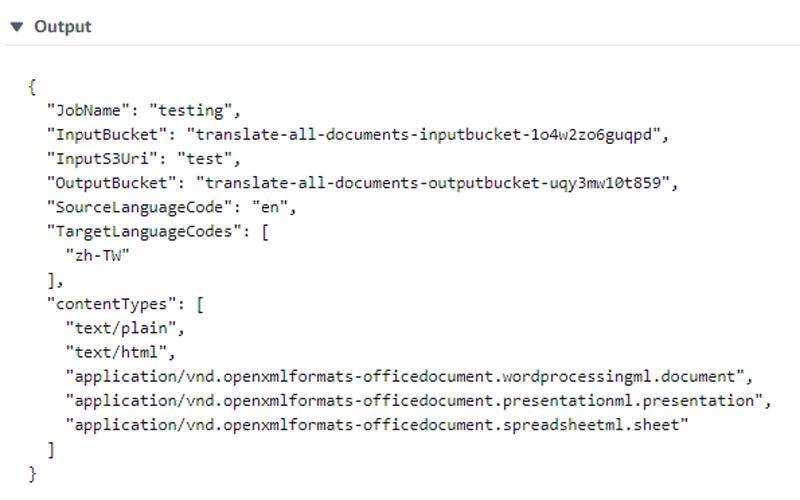

InputBucket. - Send a message to

TranlateQueue. See the following example code:
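The exact message schema depends on the deployed stack, so what follows is only a sketch of sending a JSON message with the boto3 SQS client. Every field name other than InputS3Uri is a hypothetical placeholder, as are the queue URL and the sample values; check the stack's parameters for the real ones.

```python
import json


def build_translation_message(input_s3_uri, source_language, target_languages):
    """Serialize a translation request as a JSON message body."""
    # All keys except InputS3Uri are hypothetical; the real schema is
    # defined by the deployed application.
    return json.dumps({
        "InputS3Uri": input_s3_uri,
        "SourceLanguageCode": source_language,
        "TargetLanguageCodes": target_languages,
    })


def send_translation_request(queue_url, body, sqs_client=None):
    """Send the message to the queue and return the SQS message ID."""
    if sqs_client is None:
        import boto3  # imported lazily so the builder works without AWS access
        sqs_client = boto3.client("sqs")
    response = sqs_client.send_message(QueueUrl=queue_url, MessageBody=body)
    return response["MessageId"]


# Hypothetical request: translate everything under one folder into Chinese.
body = build_translation_message("course-materials", "en", ["zh-TW"])
```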

You receive the translation job result as an email.

The email contains a presigned URL that is valid for 7 days. You can share the translated file without having to sign in to the AWS Management Console.
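A presigned download link of this kind can be produced with the boto3 generate_presigned_url call. The sketch below is an illustration under assumptions: the function name, bucket, and key are hypothetical, and the solution's own code may build the link differently.

```python
SEVEN_DAYS_IN_SECONDS = 7 * 24 * 60 * 60


def presign_download(bucket, key, s3_client=None,
                     expires_in=SEVEN_DAYS_IN_SECONDS):
    """Return a time-limited download URL for one S3 object."""
    if s3_client is None:
        import boto3  # imported lazily so the sketch can be exercised with a stub
        s3_client = boto3.client("s3")
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

Seven days is also the maximum lifetime that Signature Version 4 allows for a presigned URL, so the link cannot be made to last longer by raising this value.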

Conclusion

With this solution, my colleagues and I easily resolved our course materials translation problem. We saved a lot of time compared to opening the files one by one and copying and pasting repeatedly. The translation quality is good, and the automated workflow removes the errors that often come with undifferentiated manual work. Now we can just use the AWS Command Line Interface (AWS CLI) to run the Amazon S3 sync command to upload our files into an S3 bucket and translate all the course materials at once. Using this tool to leverage a suite of powerful AWS services has empowered my team to spend less time processing course materials and more time educating the next generation of cloud technology professionals!

Project collaborators include Mike Ng, Technical Program Intern at AWS, Brian Cheung, Sam Lam, and Pearly Law from the IT114115 Higher Diploma in Cloud and Data Centre Administration. This post was edited with Greg Rushing’s contribution.

The content and opinions in this post are those of the third-party author and AWS is not responsible for the content or accuracy of this post.

About the Author

Cyrus Wong is a Data Scientist at the Cloud Innovation Centre in the IT Department of the Hong Kong Institute of Vocational Education (Lee Wai Lee). He has achieved all 13 AWS Certifications and actively promotes the use of AWS in different media and events. His projects received four Hong Kong ICT Awards in 2014, 2015, and 2016, and all winning projects are running solely on AWS with Data Science and Machine Learning.
