Create a serverless pipeline to translate large documents with Amazon Translate

In our previous post, we described how to translate documents using the real-time translation API from Amazon Translate and AWS Lambda. However, this method may not work for files that are too large: they may take too much time to process, triggering the 15-minute timeout limit of Lambda functions. You can use the batch API instead, but it is available only in seven AWS Regions (as of this blog’s publication). To enable translation of large files in Regions where Batch Translation is not supported, we created the following solution.

In this post, we walk you through performing translation of large documents.

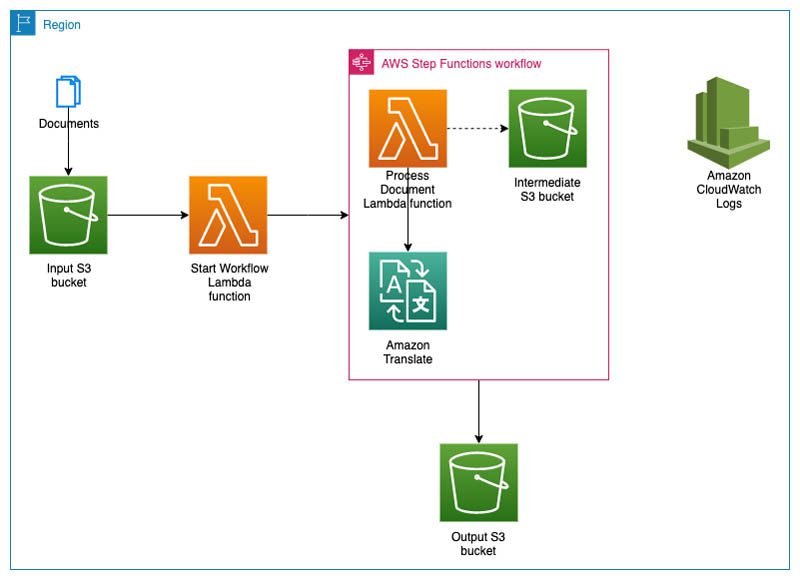

Architecture overview

Compared to the architecture featured in the post Translating documents with Amazon Translate, AWS Lambda, and the new Batch Translate API, our architecture has one key difference: the presence of AWS Step Functions, a serverless function orchestrator that makes it easy to sequence Lambda functions and multiple services into business-critical applications. Step Functions allows us to keep track of the running translation, manage retries in case of errors or timeouts, and orchestrate event-driven workflows.

The following diagram illustrates our solution architecture.

This event-driven architecture shows the flow of actions when a new document lands in the input Amazon Simple Storage Service (Amazon S3) bucket. This event triggers the first Lambda function, which acts as the starting point of the Step Functions workflow.
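As a rough illustration of that first step, the following sketch shows how a Lambda handler might read the S3 event and start a state machine execution. It is not the code shipped with the solution: the `STATE_MACHINE_ARN` environment variable, the shape of the execution input, and the extra `sfn_client` and `state_machine_arn` parameters (added so the handler can be exercised without AWS access) are all assumptions.

```python
import json
import os
from urllib.parse import unquote_plus


def build_execution_input(event):
    """Extract the bucket name and object key from an S3 event notification."""
    record = event["Records"][0]["s3"]
    return {
        "bucket": record["bucket"]["name"],
        # Object keys arrive URL-encoded in S3 event notifications.
        "key": unquote_plus(record["object"]["key"]),
    }


def lambda_handler(event, context, sfn_client=None, state_machine_arn=None):
    """Start one state machine execution per uploaded document."""
    if sfn_client is None:
        import boto3  # imported lazily so the helper above has no AWS dependency

        sfn_client = boto3.client("stepfunctions")
    if state_machine_arn is None:
        # Hypothetical environment variable; the real template may differ.
        state_machine_arn = os.environ["STATE_MACHINE_ARN"]

    response = sfn_client.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(build_execution_input(event)),
    )
    return {"executionArn": response["executionArn"]}
```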

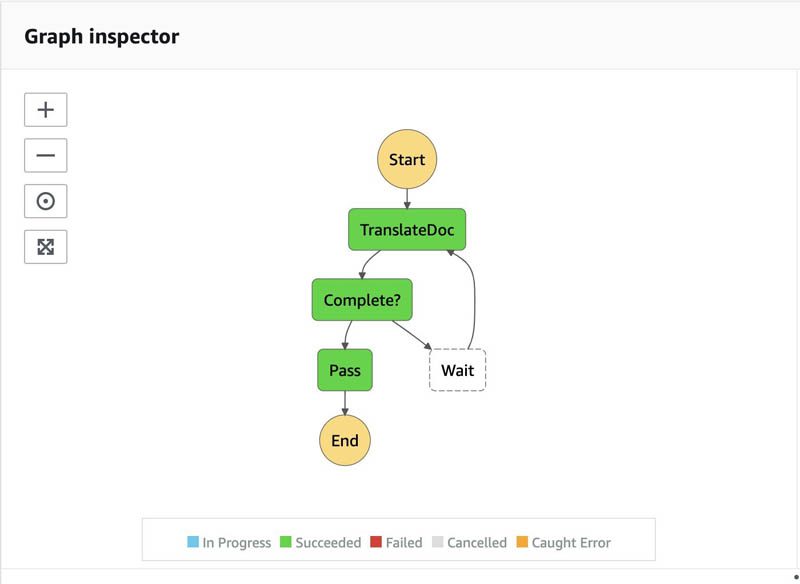

The following diagram illustrates the state machine and the flow of actions.

The Process Document Lambda function is triggered when the state machine starts; this function performs all the activities required to translate the documents. It accesses the file from the S3 bucket, downloads it locally in the environment in which the function is run, reads the file contents, extracts short segments from the document that can be passed through the real-time translation API, and uses the API’s output to create the translated document.

Other mechanisms are implemented within the code to avoid failures, such as handling an Amazon Translate throttling error and Lambda function timeout by taking action and storing the progress that was made in a /temp folder 30 seconds before the function times out. These mechanisms are critical for handling large text documents.
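The two safeguards described above can be sketched roughly as follows. This is an assumption-laden outline rather than the solution's actual code: `ThrottledError` is a stand-in for whatever throttling exception the SDK raises, `translate` is any callable that translates one segment, and `remaining_ms` is expected to behave like the Lambda context's `get_remaining_time_in_millis` method. Persisting the partial result is left to the caller.

```python
import time


class ThrottledError(Exception):
    """Stand-in for a throttling error raised by the translation call."""


def translate_with_retry(translate, text, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call translate(text), backing off exponentially when throttled."""
    for attempt in range(max_attempts):
        try:
            return translate(text)
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


def process_segments(segments, translate, remaining_ms, start=0,
                     safety_ms=30_000, sleep=time.sleep):
    """Translate segments from index `start`, stopping early when time runs short.

    Returns (translated, next_index). When next_index equals len(segments)
    the document is finished; otherwise the caller stores the progress and
    resumes from next_index on the next invocation.
    """
    translated = []
    index = start
    while index < len(segments):
        if remaining_ms() <= safety_ms:
            break  # leave enough time to save progress before the timeout
        translated.append(translate_with_retry(translate, segments[index], sleep=sleep))
        index += 1
    return translated, index
```

Inside a Lambda function, `remaining_ms` could be `context.get_remaining_time_in_millis`, and the 30-second margin mirrors the behavior described above.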

When the function has successfully finished processing, it uploads the translated text document in the output S3 bucket inside a folder for the target language code, such as en for English. The Step Functions workflow ends when the Lambda function moves the input file from the /drop folder to the /processed folder within the input S3 bucket.
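The key layout described above might be expressed as in the sketch below. The exact output file name used by the solution is not stated in this post, so reusing the input file name is an assumption, as are the helper names. Amazon S3 has no native move operation, so the sketch copies the object and then deletes the original.

```python
import posixpath


def output_key(input_key, target_language_code):
    """Place the translated file under a folder named after the target language."""
    return f"{target_language_code}/{posixpath.basename(input_key)}"


def processed_key(input_key, drop_prefix="drop/", processed_prefix="processed/"):
    """Map a key under drop/ to the matching key under processed/."""
    if not input_key.startswith(drop_prefix):
        raise ValueError(f"expected key under {drop_prefix!r}: {input_key!r}")
    return processed_prefix + input_key[len(drop_prefix):]


def move_to_processed(s3_client, bucket, input_key):
    """Copy the input object to processed/ and delete the original."""
    new_key = processed_key(input_key)
    s3_client.copy_object(
        Bucket=bucket,
        Key=new_key,
        CopySource={"Bucket": bucket, "Key": input_key},
    )
    s3_client.delete_object(Bucket=bucket, Key=input_key)
    return new_key
```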

We now have all the pieces in place to try this in action.

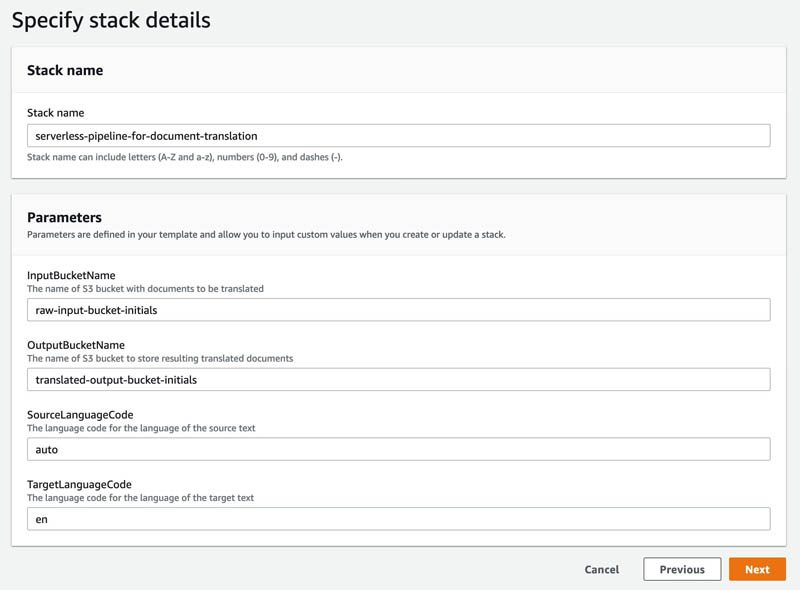

Deploy the solution using AWS CloudFormation

You can deploy this solution in your AWS account by launching the provided AWS CloudFormation stack. The CloudFormation template provisions the resources needed for the solution. The template creates the stack in the us-east-1 Region, but you can use the template to create your stack in any Region where Amazon Translate is available. As of this writing, Amazon Translate is available in 16 commercial Regions and AWS GovCloud (US-West). For the latest list of Regions, see the AWS Regional Services List.

To deploy the application, complete the following steps:

- Launch the CloudFormation template by choosing Launch Stack:

- Choose Next.

Alternatively, on the AWS CloudFormation console, choose Create stack with new resources (standard), choose Amazon S3 URL as the template source, enter https://s3.amazonaws.com/aws-ml-blog/artifacts/create-a-serverless-pipeline-to-translate-large-docs-amazon-translate/translate.yml, and choose Next.

- For Stack name, enter a unique stack name for this account; for example, serverless-document-translation.

- For InputBucketName, enter a unique name for the S3 bucket the stack creates; for example, serverless-translation-input-bucket.

The documents are uploaded to this bucket before they are translated. Use only lower-case characters and no spaces when you provide the name of the input S3 bucket. This operation creates a new bucket, so don’t use the name of an existing bucket. For more information, see Bucket naming rules.

- For OutputBucketName, enter a unique name for your output S3 bucket; for example, serverless-translation-output-bucket.

This bucket stores the documents after they are translated. Follow the same naming rules as your input bucket.

- For SourceLanguageCode, enter the language code that your input documents are in; for this post we enter auto to detect the dominant language.

- For TargetLanguageCode, enter the language code that you want your translated documents in; for example, en for English.

For more information about supported language codes, see Supported Languages and Language Codes.

- Choose Next.

- On the Configure stack options page, set any additional parameters for the stack, including tags.

- Choose Next.

- Select I acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Choose Create stack.

Stack creation takes about a minute to complete.
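If you prefer to script the deployment instead of using the console, the same parameters can be passed through the AWS SDK for Python. The following is a minimal sketch, not an official deployment script: the helper name and default values are illustrative, and `CAPABILITY_NAMED_IAM` is assumed to be the capability that corresponds to the acknowledgment in the console steps.

```python
TEMPLATE_URL = (
    "https://s3.amazonaws.com/aws-ml-blog/artifacts/"
    "create-a-serverless-pipeline-to-translate-large-docs-amazon-translate/translate.yml"
)


def build_stack_request(stack_name, input_bucket, output_bucket,
                        source_lang="auto", target_lang="en"):
    """Build keyword arguments for CloudFormation's create_stack call."""
    parameters = {
        "InputBucketName": input_bucket,
        "OutputBucketName": output_bucket,
        "SourceLanguageCode": source_lang,
        "TargetLanguageCode": target_lang,
    }
    return {
        "StackName": stack_name,
        "TemplateURL": TEMPLATE_URL,
        "Parameters": [
            {"ParameterKey": key, "ParameterValue": value}
            for key, value in parameters.items()
        ],
        # Assumed equivalent of acknowledging IAM resources with custom names.
        "Capabilities": ["CAPABILITY_NAMED_IAM"],
    }


def deploy(region_name="us-east-1"):
    """Create the stack; requires boto3 and AWS credentials."""
    import boto3

    client = boto3.client("cloudformation", region_name=region_name)
    request = build_stack_request(
        "serverless-document-translation",
        "serverless-translation-input-bucket",
        "serverless-translation-output-bucket",
    )
    return client.create_stack(**request)["StackId"]
```

Bucket names must be globally unique, so replace the example names before calling `deploy()`.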

Translate your documents

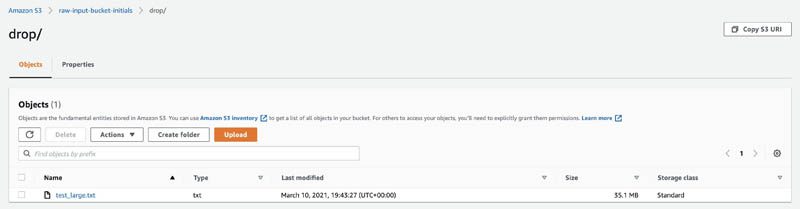

You can now upload a text document that you want to translate into the input S3 bucket, under the drop/ folder.
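The upload can also be done programmatically. The sketch below assumes the input bucket name you chose during deployment and uses hypothetical helper names; the S3 client is passed in so the key logic can be checked without AWS access.

```python
import os


def drop_key(local_path, drop_prefix="drop/"):
    """Build the S3 key under the drop/ folder for a local file."""
    return drop_prefix + os.path.basename(local_path)


def upload_for_translation(s3_client, local_path, input_bucket):
    """Upload a local text file to the drop/ folder of the input bucket."""
    key = drop_key(local_path)
    s3_client.upload_file(local_path, input_bucket, key)
    return key
```

With boto3 installed, a call might look like `upload_for_translation(boto3.client("s3"), "sample.txt", "serverless-translation-input-bucket")`.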

The following screenshot shows our sample document, which contains a sentence in Greek.

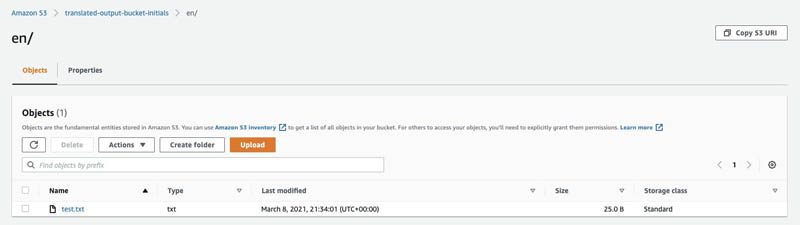

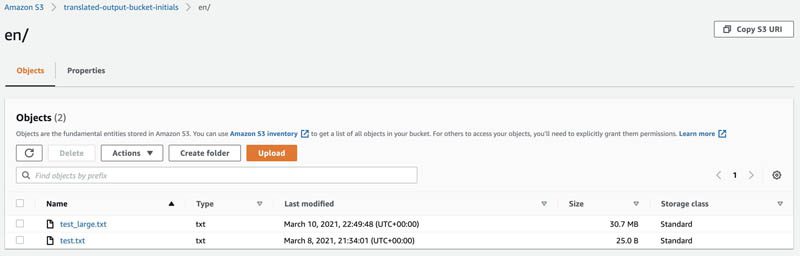

This action starts the workflow, and the translated document automatically shows up in the output S3 bucket, in the folder for the target language (for this example, en). The length of time for the file to appear depends on the size of the input document.

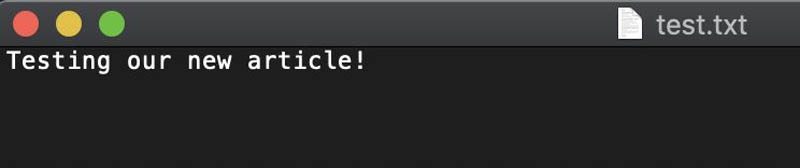

Our translated file looks like the following screenshot.

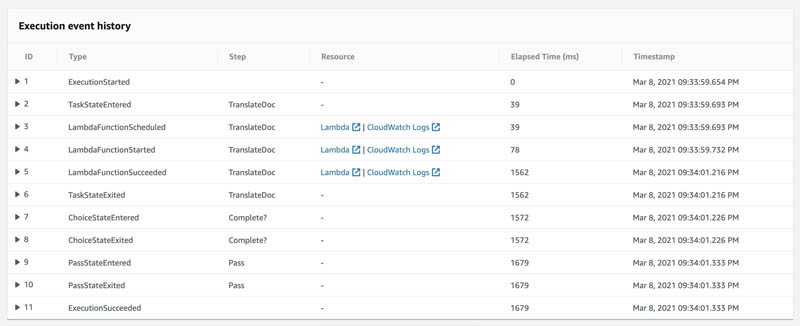

You can also track the state machine’s progress on the Step Functions console, or with the relevant API calls.

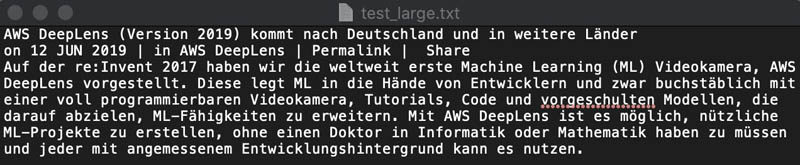

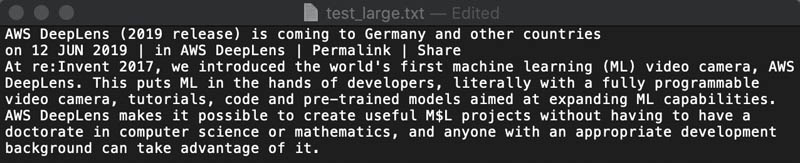
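For the API route, one option is to poll the execution status until it leaves the `RUNNING` state. This is a small sketch under the assumption that you already have the execution ARN (for example, from the call that started the execution); the function name and polling interval are illustrative.

```python
import time


def wait_for_execution(sfn_client, execution_arn, poll_seconds=10, sleep=time.sleep):
    """Poll a Step Functions execution until it is no longer running.

    Returns the final status string reported by describe_execution,
    such as SUCCEEDED or FAILED.
    """
    while True:
        status = sfn_client.describe_execution(executionArn=execution_arn)["status"]
        if status != "RUNNING":
            return status
        sleep(poll_seconds)
```

In practice, `sfn_client` would be `boto3.client("stepfunctions")`.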

Let’s try the solution with a larger file. The test_large.txt file contains content from multiple AWS blog posts and other content written in German (for example, we use all the text from the post AWS DeepLens (Version 2019) kommt nach Deutschland und in weitere Länder).

This file is much bigger than the file in the previous test. We upload the file to the drop/ folder of the input bucket.

On the Step Functions console, you can confirm that the pipeline is running by checking the status of the state machine.

On the Graph inspector page, you can get more insights on the status of the state machine at any given point. When you choose a step, the Step output tab shows the completion percentage.

When the state machine is complete, you can retrieve the translated file from the output bucket.

The following screenshot shows that our file is translated into English.

Troubleshooting

If you don’t see the translated document in the output S3 bucket, check Amazon CloudWatch Logs for the corresponding Lambda function and look for potential errors. To optimize cost, the solution uses 256 MB of memory by default for the Process Document Lambda function. If you see Runtime.ExitError for the function in the CloudWatch Logs while processing a large document, increase the function memory.

Other considerations

It’s worth highlighting the power of the automatic language detection feature of Amazon Translate, captured as auto in the SourceLanguageCode field that we specified when deploying the CloudFormation stack. In the previous examples, we submitted a file containing text in Greek and another file in German, and they were both successfully translated into English. With our solution, you don’t have to redeploy the stack (or manually change the source language code in the Lambda function) every time you upload a source file with a different language. Amazon Translate detects the source language and starts the translation process. Post deployment, if you need to change the target language code, you can either deploy a new CloudFormation stack or update the existing stack.
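To see what automatic detection looks like at the API level, the following sketch passes `auto` as the source language to the real-time `translate_text` call and returns the language code that Amazon Translate reports back. The helper name is hypothetical and the client is passed in as a parameter.

```python
def translate_auto(translate_client, text, target_language_code="en"):
    """Translate text and return (detected_source_language, translated_text)."""
    response = translate_client.translate_text(
        Text=text,
        SourceLanguageCode="auto",  # let Amazon Translate detect the language
        TargetLanguageCode=target_language_code,
    )
    return response["SourceLanguageCode"], response["TranslatedText"]
```

With boto3 installed, you could call it as `translate_auto(boto3.client("translate"), "Guten Morgen")`.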

This solution uses the Amazon Translate synchronous real-time API. It handles the maximum document size limit (5,000 bytes) by splitting the document into paragraphs (ending with a newline character). If needed, it further splits each paragraph into sentences (ending with a period). You can modify these delimiters based on your source text. This solution can support a maximum of 5,000 bytes for a single sentence, and it only handles UTF-8 encoded text documents with .txt or .text file extensions. You can modify the Python code in the Process Document Lambda function to handle different file formats.
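The splitting strategy can be sketched as follows. This is a simplified illustration, not the code from the Process Document function: it drops blank lines, returns only the segments to translate, and leaves reassembly of the translated document out of scope. The function name and the choice to raise an error for oversized sentences are assumptions.

```python
MAX_BYTES = 5000  # size limit per request, measured in UTF-8 bytes


def _size(text):
    """Return the length of text in UTF-8 bytes."""
    return len(text.encode("utf-8"))


def split_document(text, max_bytes=MAX_BYTES):
    """Split text into segments that each fit within max_bytes.

    Paragraphs (newline-delimited) are kept whole when they fit; otherwise
    they are split into sentences (period-delimited).
    """
    segments = []
    for paragraph in text.split("\n"):
        if not paragraph.strip():
            continue  # skip blank lines
        if _size(paragraph) <= max_bytes:
            segments.append(paragraph)
            continue
        parts = paragraph.split(".")
        # Re-attach the period that split() removed from each full sentence.
        sentences = [part + "." for part in parts[:-1]]
        if parts[-1].strip():
            sentences.append(parts[-1])
        for sentence in sentences:
            sentence = sentence.strip()
            if not sentence:
                continue
            if _size(sentence) > max_bytes:
                raise ValueError("a single sentence exceeds the size limit")
            segments.append(sentence)
    return segments
```

Sizes are measured on the encoded bytes rather than the character count because non-ASCII text, such as the Greek sample used earlier, takes more than one byte per character in UTF-8.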

In addition to Amazon S3 costs, the solution incurs usage costs from Amazon Translate, Lambda, and Step Functions. For more information, see Amazon Translate pricing, Amazon S3 pricing, AWS Lambda pricing, and AWS Step Functions pricing.

Conclusion

In this post, we showed the implementation of a serverless pipeline that translates large documents using the real-time translation feature of Amazon Translate, with Step Functions orchestrating the Lambda functions. This solution allows for more control and for adding sophisticated functionality to your applications. Come build your advanced document translation pipeline with Amazon Translate!

For more information, see the Amazon Translate Developer Guide and Amazon Translate resources. If you’re new to Amazon Translate, try it out using our Free Tier, which offers 2 million characters per month for free for the first 12 months, starting from your first translation request.

About the Authors

Jay Rao is a Senior Solutions Architect at AWS. He enjoys providing technical guidance to customers and helping them design and implement solutions on AWS.

Seb Kasprzak is a Solutions Architect at AWS. He spends his days at Amazon helping customers solve their complex business problems through use of Amazon technologies.

Nikiforos Botis is a Solutions Architect at AWS. He enjoys helping his customers succeed in their cloud journey, and is particularly interested in AI/ML technologies.

Bobbie Couhbor is a Senior Solutions Architect for Digital Innovation at AWS, helping customers solve challenging problems with emerging technology, such as machine learning, robotics, and IoT.
