Amazon Lookout for Vision Accelerator Proof of Concept (PoC) Kit

Amazon Lookout for Vision is a machine learning service that spots defects and anomalies in visual representations using computer vision. With Amazon Lookout for Vision, manufacturing companies can increase quality and reduce operational costs by quickly identifying differences in images of objects at scale.

Basler and Amazon Lookout for Vision have collaborated to launch the “Amazon Lookout for Vision Accelerator PoC Kit” (APK) to help customers complete a Lookout for Vision PoC in less than six weeks. The APK is an “out-of-the-box” vision system (hardware + software) to capture and transmit images to the Lookout for Vision service and train/evaluate Lookout for Vision models. The APK simplifies camera selection/installation and capturing/analyzing images, enabling you to quickly validate Lookout for Vision performance before moving to a production setup.

Most manufacturing and industrial customers have multiple use cases (such as multiple production lines or multiple product SKUs) in which Amazon Lookout for Vision can support automated visual inspection. The APK lets customers test Lookout for Vision functionality for one use case first and then decide on purchasing a customized vision solution for multiple lines. Without the APK, you would have to procure and set up a vision system that integrates with Amazon Lookout for Vision, which is resource-intensive and time-consuming and can delay PoC starts. The integrated hardware and software design of the APK comprises an automated AWS Cloud connection, image preprocessing, and direct image transmission to Amazon Lookout for Vision, saving you time and resources.

The APK is intended to be set up and installed by technical staff with easy-to-follow instructions.

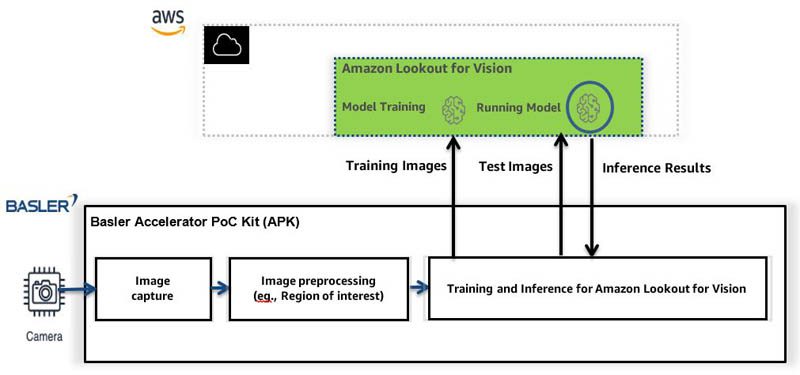

The APK enables you to quickly capture and transmit images, train Amazon Lookout for Vision models, run inferences to detect anomalies, and assess model performance. The following diagram illustrates our solution architecture.

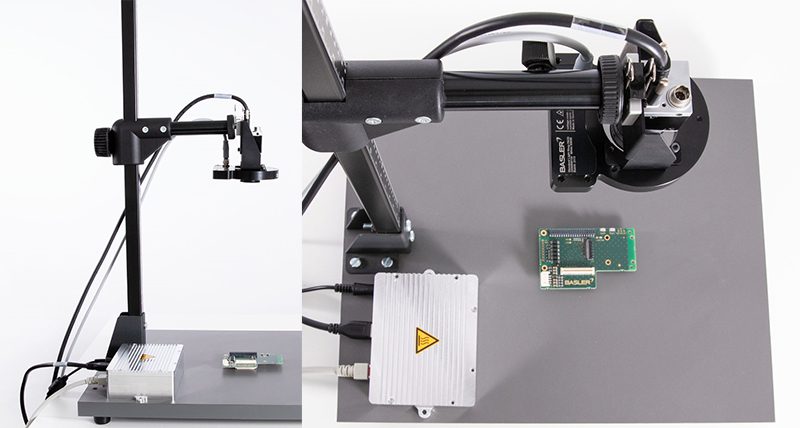

The kit comes equipped with the following:

- Basler ace camera

- Camera lens

- USB cable

- Network cable

- Power cable for the ring light

- Basler standard ring light

- Basler camera mount

- NVIDIA Jetson Nano development board (in its housing)

- Development board power supply

See corresponding items in the following image:

In the next section, we walk through the steps for acquiring an image, extracting the region of interest (ROI) with image preprocessing, uploading training images to an Amazon Simple Storage Service (Amazon S3) bucket, training an Amazon Lookout for Vision model, and running inference on test images. The training and test images are of a printed circuit board. The Lookout for Vision model learns to classify images as normal or anomalous (scratches, bent pins, bad solder, and missing components). In this post, we create a training dataset using the Lookout for Vision auto-split feature on the console with a single dataset. You can also set up separate training and test datasets using the kit.

Kit Setup

After you unbox the kit, complete the following steps:

- Firmly screw the lens onto the camera mount.

- Connect the camera to the board with the supplied USB cable.

- For poorly lit areas, use the supplied ring light. Note: If you use the ring light for training images, you should also use it to capture inference images.

- Connect the board to the network using a network cable (you can optionally use the supplied cable).

- Connect the board to its power supply and plug it in. In the image below, please note the camera stand and the base platform show an example set, but they are not provided as part of the APK.

- Attach a monitor, keyboard, and mouse before turning on the system for the first time.

- On the first boot, accept the end user license agreement from NVIDIA. You will see a series of prompts to set up the location, user name, password, and other settings. For more information, see the first boot section on the initial setup.

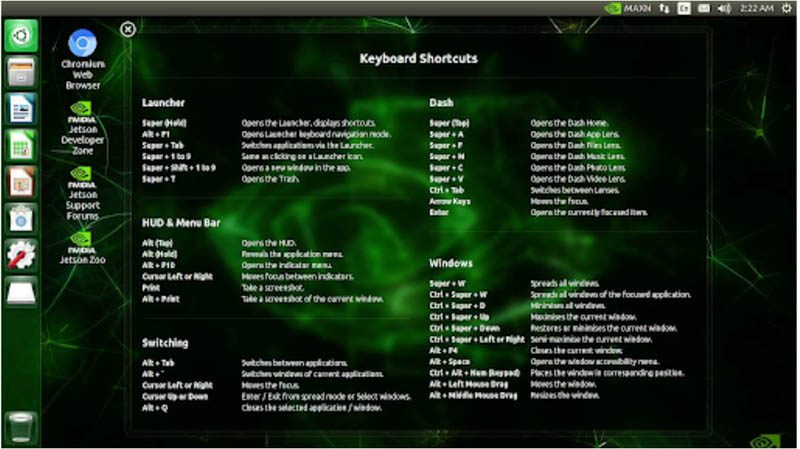

- Log in to the APK with the user name and password. You will see the following screen. Bring up the Linux terminal window using the search icon (green icon on the top left).

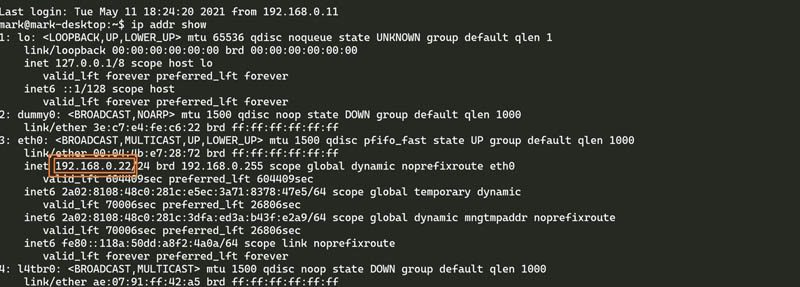

- Enter the command “ip addr show”. This displays the APK IP address (for example, 192.168.0.22, as shown in the following screenshot).

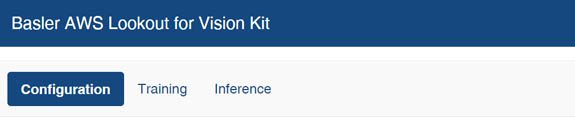

- Go to your Chrome browser on a machine on the same network and enter the APK IP address. The kit’s webpage should come up with a live stream from the camera.
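As a small illustration of the address lookup in the steps above, the following Python sketch pulls the IPv4 address out of `ip addr show` output and builds the address to enter in the browser. The sample output, the function names, and the use of plain `http://` are assumptions made for illustration; interface names and addresses will differ on your network.

```python
import re
from typing import List

# Matches the IPv4 address on an "inet" line of `ip addr show` output,
# for example "inet 192.168.0.22/24 brd 192.168.0.255 scope global eth0".
# IPv6 lines start with "inet6" and are not matched.
INET_PATTERN = re.compile(r"^\s*inet (\d{1,3}(?:\.\d{1,3}){3})/\d+", re.MULTILINE)


def ipv4_addresses(ip_addr_output: str) -> List[str]:
    """Return the non-loopback IPv4 addresses found in `ip addr show` output."""
    found = INET_PATTERN.findall(ip_addr_output)
    return [address for address in found if not address.startswith("127.")]


def kit_url(ip_address: str) -> str:
    """Build the address to open in the browser (plain HTTP is an assumption)."""
    return f"http://{ip_address}/"


# Illustrative output only; real output depends on the board and the network.
SAMPLE_OUTPUT = """\
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    inet 127.0.0.1/8 scope host lo
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP
    inet 192.168.0.22/24 brd 192.168.0.255 scope global eth0
"""

if __name__ == "__main__":
    for address in ipv4_addresses(SAMPLE_OUTPUT):
        print(kit_url(address))
```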

Now we can do the optical setup (as described in the next section), and start taking pictures.

Image acquisition, preprocessing, and cloud connection setup

- With the browser running and showing the webpage of the kit, choose Configuration.

In a few seconds, a live image from the camera appears.

- Create an AWS account if you don’t have one. Creating an account is free, and new users have access to the AWS Free Tier for the first 12 months. For more information, see creating and activating a new AWS account.

- Next, set up the cloud connection to your AWS account.

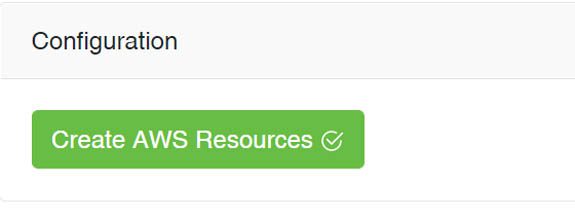

- Choose Create AWS Resources.

- In the dialog box that appears, choose Create AWS Resources.

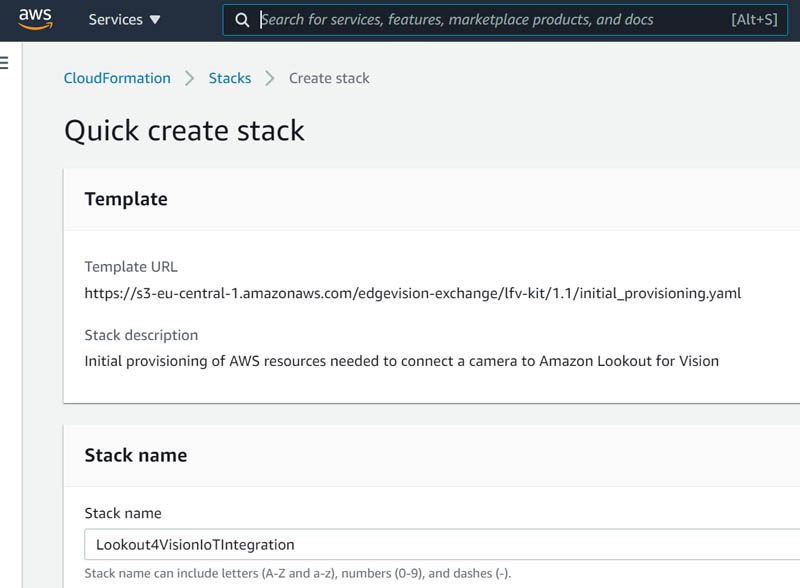

You are redirected to the AWS Management Console, where you are asked to run the AWS CloudFormation stack.

- As part of creating the stack, create an S3 bucket in your specified region. Accept the check box to create AWS Identity and Access Management (IAM) resources.

- Choose Create Stack.

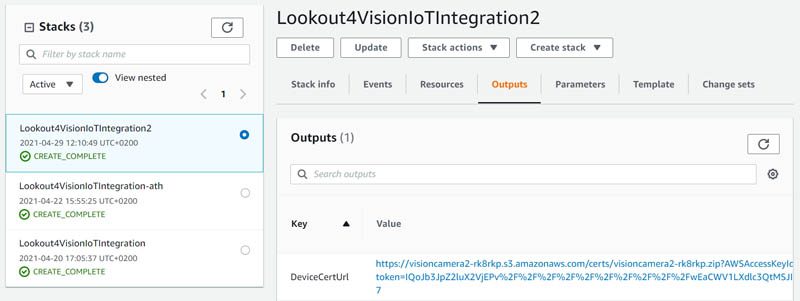

- When the stack is created, on the Outputs tab, copy the value for DeviceCertUrl.

- Return to the kit’s webpage and enter the URL value.

- Choose OK.

- You are redirected back to the live image; the setup is now complete.
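The DeviceCertUrl value copied from the Outputs tab in the steps above can also be read programmatically. The following is a minimal sketch, assuming you have `boto3` installed and AWS credentials configured; the helper names are hypothetical, and the stack name and Region you pass in depend on how the stack was created.

```python
from typing import Any, Dict


def stack_output(describe_stacks_response: Dict[str, Any], key: str) -> str:
    """Return the value of one named output from a describe_stacks response."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        raise LookupError("response contains no stacks")
    for output in stacks[0].get("Outputs", []):
        if output.get("OutputKey") == key:
            return output["OutputValue"]
    raise KeyError(f"stack has no output named {key!r}")


def fetch_device_cert_url(stack_name: str, region: str) -> str:
    """Query CloudFormation for the DeviceCertUrl output of the given stack."""
    import boto3  # imported here so stack_output() works without boto3 installed

    client = boto3.client("cloudformation", region_name=region)
    response = client.describe_stacks(StackName=stack_name)
    return stack_output(response, "DeviceCertUrl")
```

Calling `fetch_device_cert_url` with your stack name and Region returns the same URL you would otherwise copy from the console and paste into the kit’s webpage.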

- Place the camera some distance away from the object to be inspected so that the object is fully in the live camera view and fills up the view as much as possible.

- As a general guideline, the operator should be able to see the anomaly in the image so that the Amazon Lookout for Vision model can learn to distinguish defects from normal images. Because the supplied lens has a minimum object distance of 100 millimeters, place the object at least that far from the lens.

- If the object at this distance doesn’t fill up the image, you can cut out the background using the region-of-interest (ROI) tool described below.

- Check the focus, and either change the object’s distance to the lens or turn the focus on the lens (most likely a combination).

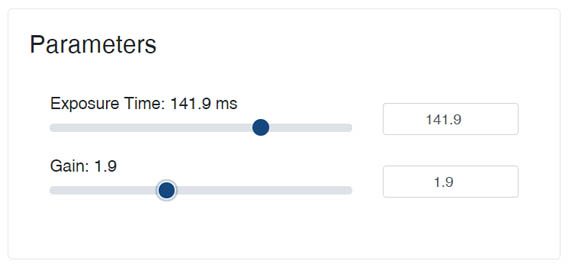

- If the live image appears too dark or too light, adjust the Gain and Exposure Time. Note: Too much gain causes more noise in the image, and a long exposure time causes blurriness if the object is moving.

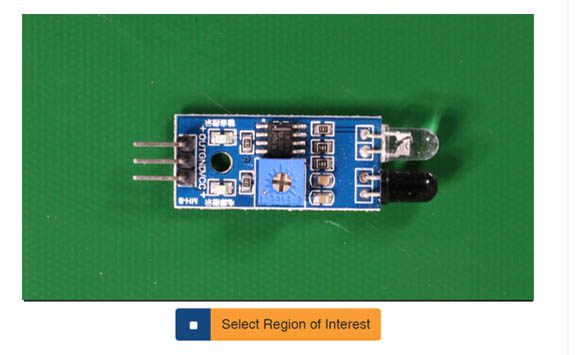

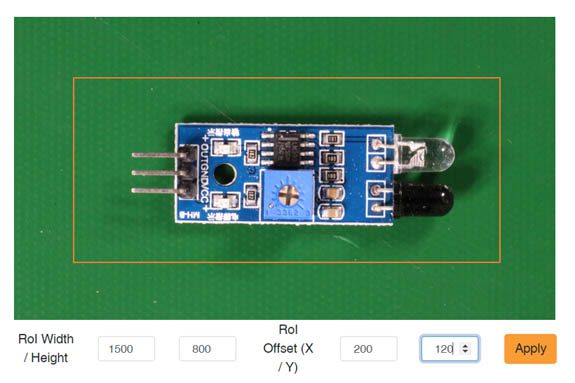

- If the object is in focus and takes up a large part of the picture, use the ROI tool to cut out unnecessary background information.

- The ROI tool selects the relevant part of the image and reduces background information. The image in the ROI is sent to the Amazon S3 bucket and will be used for Lookout for Vision training and inference.

- Choose Apply to reconfigure the camera to concentrate on this region.

- You can see the ROI on the live view. If you change the camera angle or distance to the object, you may need to change or reset the ROI. You can do this by choosing “Select Region of Interest” again and repeating the process.
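To make the idea of a region of interest concrete, here is a conceptual Python sketch of cropping an image to a rectangle. It is not the kit’s implementation: the kit applies the ROI through its webpage and camera configuration. The image is modeled as a list of pixel rows, and the function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]  # rows of grayscale pixel values


def clamp_roi(x: int, y: int, width: int, height: int,
              image_width: int, image_height: int) -> Tuple[int, int, int, int]:
    """Clip an (x, y, width, height) rectangle so it lies inside the image."""
    left = max(0, min(x, image_width))
    top = max(0, min(y, image_height))
    right = max(left, min(x + width, image_width))
    bottom = max(top, min(y + height, image_height))
    return left, top, right - left, bottom - top


def crop_to_roi(image: Image, x: int, y: int, width: int, height: int) -> Image:
    """Return only the pixels inside the ROI, discarding the background."""
    image_height = len(image)
    image_width = len(image[0]) if image else 0
    left, top, roi_width, roi_height = clamp_roi(
        x, y, width, height, image_width, image_height
    )
    return [row[left:left + roi_width] for row in image[top:top + roi_height]]


if __name__ == "__main__":
    # A 4x4 test image whose pixel value encodes its position (row * 10 + column).
    picture = [[row * 10 + col for col in range(4)] for row in range(4)]
    print(crop_to_roi(picture, 1, 1, 2, 2))
```

Because only the cropped region is kept, changing the camera angle or distance shifts the object relative to the rectangle, which is why the ROI may need to be reselected.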

Upload training images

We are now ready to upload our training images.

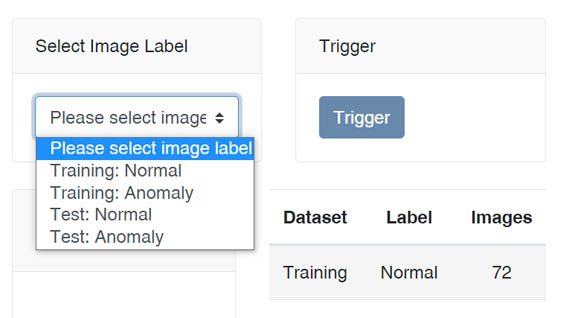

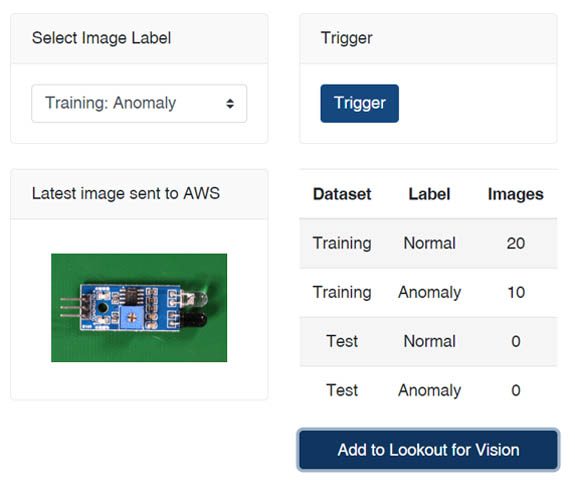

- Choose the Training tab on the browser webpage.

- On the drop-down menu, choose Training: Normal or Training: Anomaly. Images are sent to the appropriate folder in the Amazon S3 bucket.

- Choose Trigger to capture images of objects with and without anomalies. The camera can also be triggered by a hardware trigger connected directly to its I/O pins. For more information, see connector pin numbering and assignments.

It’s essential that each image captured is of a unique object and not the same object captured multiple times. If you repeat the same image, the model will not learn normal, defect-free variations of your object, and it could negatively impact model performance.

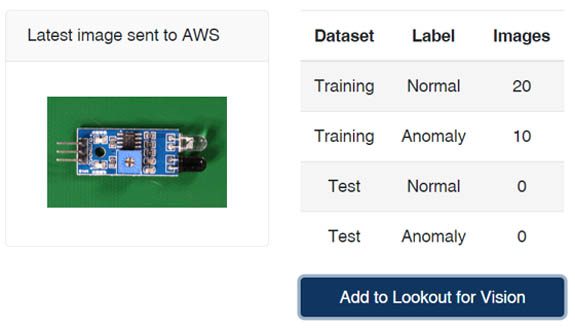

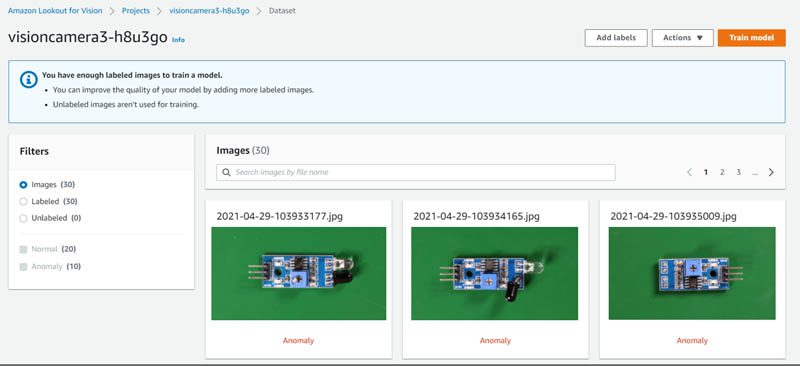

- After every trigger, the image is sent to the S3 bucket. At a minimum, you need to capture 20 normal and 10 anomalous images to use the single dataset auto-split option on the Amazon Lookout for Vision console. In general, the more images you capture, the better model performance you can expect. A table on the website shows the last image sent as a thumbnail and the number of images in each category.
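The minimum image counts above can be checked with a short script before you move on to dataset creation. The following is a minimal sketch, assuming the images sit in folders named `normal` and `anomaly` under some prefix in the bucket; the exact folder layout the kit uses, the `training/` prefix in the example, and the function names are assumptions.

```python
from typing import Dict, Iterable, List

MIN_NORMAL = 20   # minimum normal images for the single dataset auto-split option
MIN_ANOMALY = 10  # minimum anomalous images for the same option


def count_labels(keys: Iterable[str]) -> Dict[str, int]:
    """Count object keys whose parent folder is 'normal' or 'anomaly'."""
    counts = {"normal": 0, "anomaly": 0}
    for key in keys:
        parts = key.lower().split("/")
        # parts[-1] is the file name; skip "folder" placeholder keys ending in "/".
        if len(parts) >= 2 and parts[-2] in counts and parts[-1]:
            counts[parts[-2]] += 1
    return counts


def ready_for_auto_split(counts: Dict[str, int]) -> bool:
    """True when both categories meet the minimum image counts."""
    return counts["normal"] >= MIN_NORMAL and counts["anomaly"] >= MIN_ANOMALY


def list_keys(bucket: str, prefix: str) -> List[str]:
    """List object keys under a prefix (needs boto3 and AWS credentials)."""
    import boto3  # imported here so the helpers above work without boto3

    paginator = boto3.client("s3").get_paginator("list_objects_v2")
    keys: List[str] = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        keys.extend(item["Key"] for item in page.get("Contents", []))
    return keys
```

For example, `ready_for_auto_split(count_labels(list_keys(bucket, "training/")))` reports whether the bucket already holds enough images, where `bucket` is the name of the S3 bucket created by the stack.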

Lookout for Vision Model Dataset and Training

In this step, we prepare the dataset and start training.

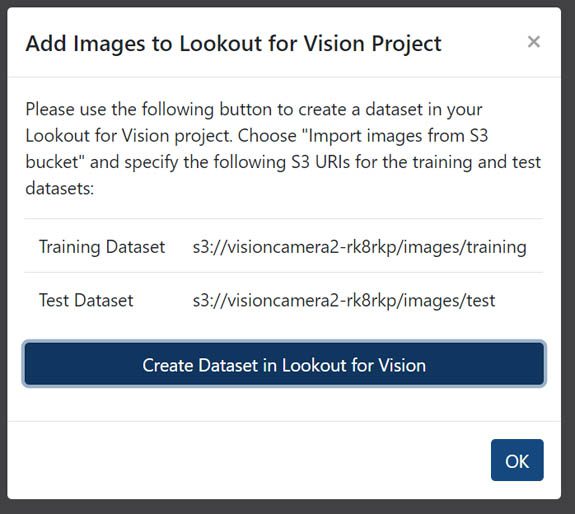

- Choose the Add to Lookout for Vision button when you have a minimum of 20 normal and 10 anomalous images. Because we’re using the single dataset and the auto-split option, it’s OK to have no test images. The auto-split option automatically divides the 30 images into a training and a test dataset internally.

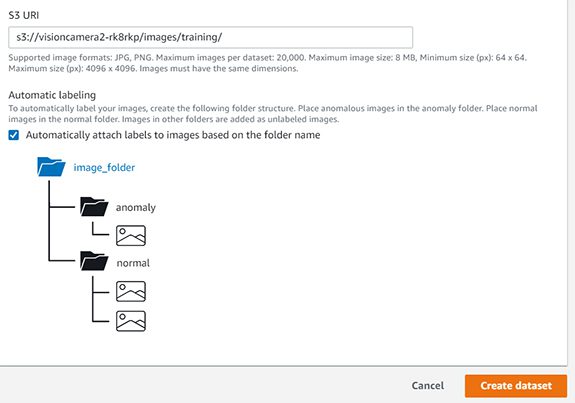

- Choose Create Dataset in Lookout for Vision.

- You are redirected to the Amazon Lookout for Vision console.

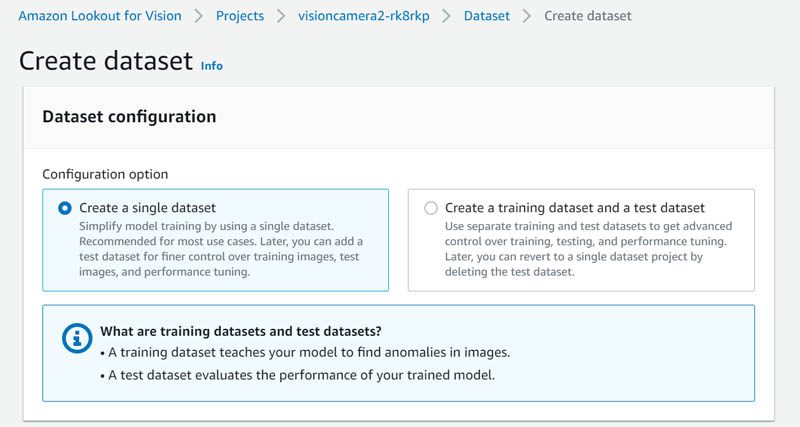

- Select Create a single dataset.

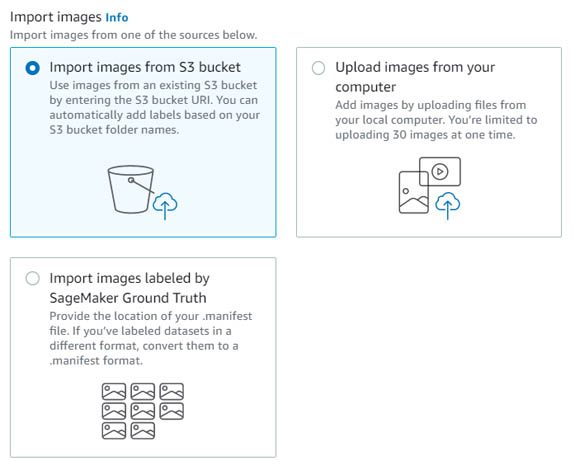

- Select Import images from S3 bucket.

- For S3 URL, enter the URL for the S3 training images directory as shown in the following picture.

- Select Automatically attach labels to images based on the folder name.

- This option imports the images with the correct labels in the dataset.

- Choose Create dataset.

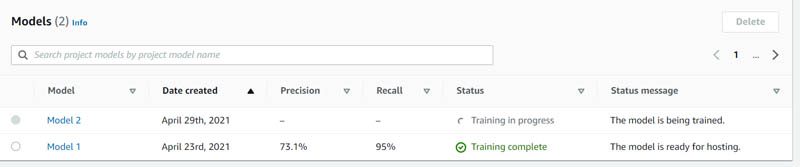

- Choose the Train model button to start training.

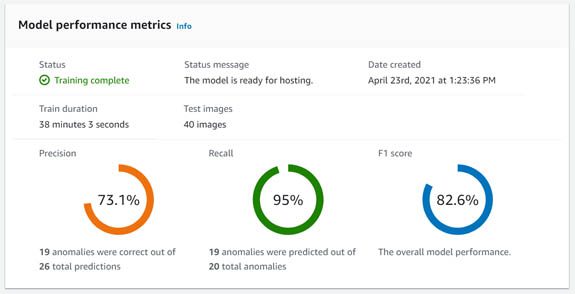

On the Models page, the status shows Training in progress and changes to Training complete when the model is trained.
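If you prefer not to watch the console, the training status can also be polled from a script. The sketch below assumes the `boto3` Lookout for Vision client and its `describe_model` call, which reports a status such as `TRAINING`, `TRAINED`, or `TRAINING_FAILED`; the function names, polling interval, and poll limit are illustrative choices, and the project name and model version are whatever you see on the console.

```python
import time
from typing import Any, Callable, Dict


def model_status(describe_model_response: Dict[str, Any]) -> str:
    """Extract the status string from a describe_model response."""
    return describe_model_response["ModelDescription"]["Status"]


def wait_for_training(describe: Callable[[], Dict[str, Any]],
                      poll_seconds: float = 60.0,
                      max_polls: int = 120,
                      sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll until the model leaves the TRAINING state and return the new status."""
    for _ in range(max_polls):
        status = model_status(describe())
        if status != "TRAINING":
            return status
        sleep(poll_seconds)
    raise TimeoutError("model was still training after the last poll")


def make_describe(project_name: str, model_version: str) -> Callable[[], Dict[str, Any]]:
    """Build a describe callable backed by boto3 (needs AWS credentials)."""
    import boto3  # imported here so the helpers above work without boto3

    client = boto3.client("lookoutvision")
    return lambda: client.describe_model(
        ProjectName=project_name, ModelVersion=model_version
    )
```

A call such as `wait_for_training(make_describe(project_name, "1"))` blocks until training ends; passing the describe step as a callable keeps the polling logic testable without an AWS connection.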

- Choose your model to see the model performance.

The console reports the model’s precision, recall, and F1 score. Precision is the fraction of images predicted as anomalous that actually contain an anomaly. Recall is the fraction of anomalous images that the model correctly identifies as anomalous. The F1 score is the harmonic mean of precision and recall.
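As a worked illustration of these metrics, the following Python sketch computes them from counts of test images, treating anomaly as the positive class. The function names and the example counts are made up for illustration and are not results from the kit.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Fraction of images predicted as anomalous that really are anomalous."""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Fraction of truly anomalous images that the model flagged."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


def f1_score(precision_value: float, recall_value: float) -> float:
    """Harmonic mean of precision and recall."""
    total = precision_value + recall_value
    return 2 * precision_value * recall_value / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical test set: 6 anomalies caught, 2 false alarms, 4 anomalies missed.
    p = precision(6, 2)
    r = recall(6, 4)
    print(f"precision={p:.2f} recall={r:.2f} f1={f1_score(p, r):.2f}")
```

With these example counts, precision is 6/8, recall is 6/10, and the F1 score falls between the two, closer to the lower value, which is the characteristic behavior of a harmonic mean.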

In general, you can improve model performance by adding more training images and providing a consistent lighting setup. Note that lighting can change during the day depending on your environment (for example, sunlight coming through the windows). You can control the lighting by closing the curtains and using the provided ring light. For more information, see how to light up your vision system.

Run Inference on new images

To run inferences on new images, complete the following steps:

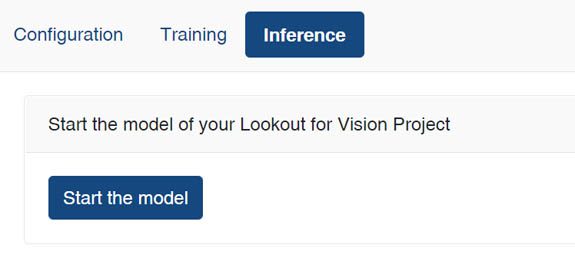

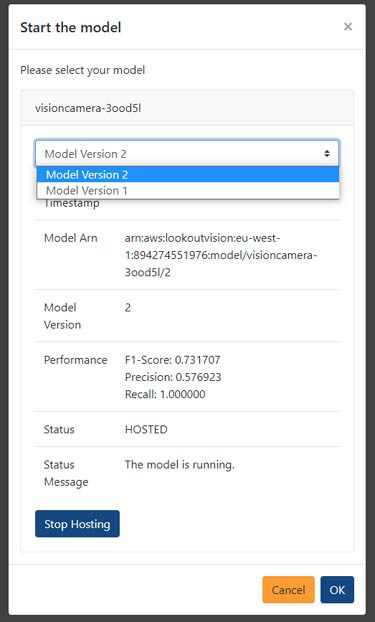

- On the kit webpage, choose the Inference tab.

- Choose Start the model to host the Lookout for Vision model.

- On the drop-down menu, choose the project you want to use and the model version.

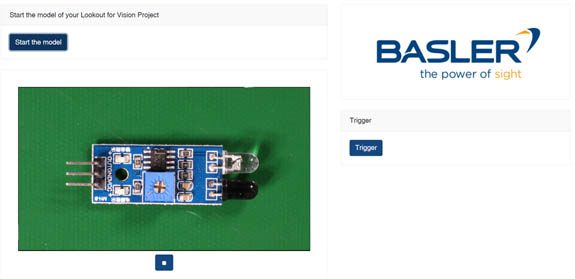

- Place a new object that the model hasn’t seen before in front of the camera, and choose Trigger on the kit’s webpage.

Make sure the object pose and lighting are similar to those used for the training images. This is important to prevent the model from identifying a false anomaly due to lighting or pose changes.

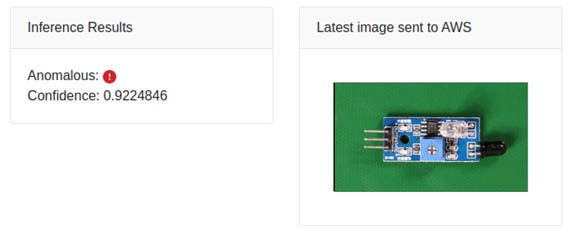

Inference results for the current image are shown in the browser window. You can repeat this exercise with new objects and test your model performance on different anomaly types.

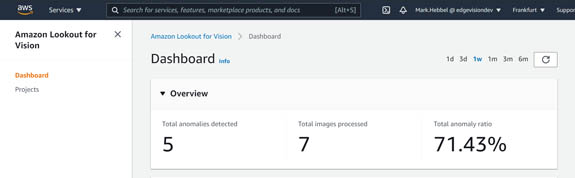

The cumulative inference results are available on the Dashboard page of the Amazon Lookout for Vision console.

In most cases, you can expect to implement these steps in a few hours, get a quick assessment of your use case fit by running inferences on unseen test images, and correlate the inference results with the model precision, recall, and F1 scores.
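The inference steps above can also be scripted against a hosted model. The following is a minimal sketch, assuming the `boto3` Lookout for Vision client and its `detect_anomalies` call; the function names are hypothetical, the image is assumed to be a JPEG file on disk, and the project name and model version are the ones shown on the console.

```python
from typing import Any, Dict, Tuple


def summarize_result(detect_anomalies_response: Dict[str, Any]) -> Tuple[str, float]:
    """Turn a detect_anomalies response into a (label, confidence) pair."""
    result = detect_anomalies_response["DetectAnomalyResult"]
    label = "anomaly" if result["IsAnomalous"] else "normal"
    return label, result["Confidence"]


def classify_image(image_path: str, project_name: str,
                   model_version: str) -> Tuple[str, float]:
    """Send one JPEG image to a hosted model (needs boto3 and AWS credentials)."""
    import boto3  # imported here so summarize_result() works without boto3

    client = boto3.client("lookoutvision")
    with open(image_path, "rb") as image_file:
        response = client.detect_anomalies(
            ProjectName=project_name,
            ModelVersion=model_version,
            Body=image_file.read(),
            ContentType="image/jpeg",
        )
    return summarize_result(response)
```

The model has to be started before `classify_image` can return a prediction, which is what the Start the model step on the kit’s webpage does. Collecting the returned labels for a set of images with known ground truth lets you compare observed results against the reported precision and recall.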

Conclusion

Basler and Amazon Web Services collaborated on an “Amazon Lookout for Vision Accelerator PoC Kit” (APK). The APK is a testing camera system that customers can use for fast prototyping of their Lookout for Vision application. It includes out-of-the-box vision hardware (camera, processing unit, lighting, and accessories) with integrated software components to quickly connect to the AWS Cloud and Lookout for Vision.

Through direct integration with Lookout for Vision, the APK offers you a new and efficient approach for rapid prototyping and shortens your proof-of-concept evaluation by weeks. The APK can give you the confidence to evaluate your anomaly detection model performance before moving to production. Because the kit is a bundle of fixed components, changes to the hardware and software may be necessary for the next step, depending on your application. After you complete your PoC with the APK, Basler and AWS will offer a gap analysis to determine whether the scope of the kit met your use case requirements or whether a customized solution is needed.

Note: To help ensure the highest level of success in your prototyping efforts, we require you to have a kit qualification discussion with Basler before purchase.

Contact Basler today to discuss your use case fit for APK: AWSBASLER@baslerweb.com

Learn more | Basler Tools for Component Selection

About the Authors

Amit Gupta is an AI Services Solutions Architect at AWS. He is passionate about enabling customers with well-architected machine learning solutions at scale.

Mark Hebbel is Head of IoT and Applications at Basler AG. He and his team implement camera based solutions for customers in the machine vision space. He has a special interest in decentralized architectures.
