How lekker got more insights into their customer churn model with Amazon SageMaker Debugger

With over 400,000 customers, lekker Energie GmbH is a leading supraregional provider of electricity and gas on the German energy market. lekker is customer- and service-oriented and regularly scores top marks in comparison tests. As one of the most important suppliers of green electricity to private households, the company, with its 220 employees, stands for environmentally friendly and consumer-friendly products.

Germany’s energy market was liberalized in the 1990s. Since then, customers have been free to choose their energy and gas supplier. During the liberalization, the German government standardized the switching processes, so switching your energy or gas supplier is easy. As a result, keeping churn rates low is a challenge for lekker. Preventing existing customers from leaving is several times cheaper than acquiring new ones. The best way to achieve low churn rates is to keep customers satisfied. Knowing a customer’s churn risk is helpful for targeted campaigns, because it allows lekker to focus on the customers who are most likely to churn.

This post discusses how lekker used Amazon SageMaker Debugger to get deep insights into their customer churn model. Debugger automatically collects data during model training and provides built-in rules to automatically detect issues in model training.

Data preprocessing

lekker has a wide range of systems with different databases and data structures, and uses Spark and AWS Step Functions to create a data lake on AWS. In preparation for the churn model, lekker creates a Spark processing job that gathers customer-specific information such as duration, sales channel, and consumption, along with the information needed for label creation. lekker distinguishes between active and passive churn. Active churn describes customers canceling their contract. Passive churn describes customers who are no longer in lekker’s delivery area or whose contract was canceled due to late payment. For the model introduced here, lekker uses active churn as the label, which better fits marketing’s expectations for retention campaigns.
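To make the label definition concrete, here is a minimal plain-Python sketch of how active churn could be separated from passive churn. The field name `end_reason` and the reason codes are hypothetical, since lekker’s Spark job and schema are not shown in this post. The sketch also assumes that passive churners receive the label 0; they could just as well be excluded from training.

```python
# Hypothetical reason codes; lekker's real schema is not part of this post.
ACTIVE_REASONS = {"customer_cancelled"}
PASSIVE_REASONS = {"moved_out_of_delivery_area", "cancelled_late_payment"}


def churn_type(record):
    """Classify a contract record as 'active', 'passive', or None (no churn)."""
    reason = record.get("end_reason")
    if reason in ACTIVE_REASONS:
        return "active"
    if reason in PASSIVE_REASONS:
        return "passive"
    return None


def active_churn_label(record):
    """Binary training label: 1 only for active churn, 0 otherwise (assumption)."""
    return 1 if churn_type(record) == "active" else 0


# Three made-up customers: one active churner, one passive churner, one still under contract.
customers = [
    {"customer_id": 1, "end_reason": "customer_cancelled"},
    {"customer_id": 2, "end_reason": "cancelled_late_payment"},
    {"customer_id": 3, "end_reason": None},
]
labels = [active_churn_label(c) for c in customers]
```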

Create a customer churn model

Before lekker started with AWS, data came from an Oracle database, which was used as a business intelligence (BI) platform. The BI team and analysts were organized in different departments and had different access rights. Data scientists needed to access data by schema-on-read. Models were trained on local machines or non-scalable servers, and computational limits were reached quickly. Once a model was trained, model monitoring and debugging were hard to perform, while management’s skepticism of potential closed-box models grew. Model deployment was also difficult because of missing orchestration tools and limited server availability and capacity.

When lekker decided to use SageMaker, most of these problems were solved, because SageMaker offers solutions along the whole machine learning workflow. lekker can now easily scale computing capacity as needed and access all available data on Amazon S3. Their data scientists can now explore and prepare data in the same notebook, and find it easier to create and train models using SageMaker Estimators. Additionally, lekker frequently uses SageMaker automatic model tuning, which finds the best model by running training jobs with different hyperparameter configurations. This raised model quality considerably. lekker uses Debugger to evaluate and communicate models’ results and to get model insights.

Set up training on Amazon SageMaker

To run the XGBoost training on SageMaker, lekker uses the SageMaker Estimator API. It takes the instance type for the model training (ml.m5.4xlarge). It also takes the image URI of the training image and a dictionary for the model hyperparameters. See the following code:
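A minimal sketch of such a setup with the SageMaker Python SDK might look like the following. The hyperparameter values, the XGBoost container version, and the `build_estimator` helper are assumptions for illustration; only the instance type comes from this post. The SDK import sits inside the function, so the sketch can be read and loaded without AWS access, but calling it requires the SDK, credentials, and an IAM role.

```python
# Assumed hyperparameters for a binary churn classifier; lekker's values are not listed in the post.
HYPERPARAMETERS = {
    "objective": "binary:logistic",
    "eval_metric": "error",
    "num_round": 100,
    "max_depth": 5,
    "eta": 0.2,
}
INSTANCE_TYPE = "ml.m5.4xlarge"  # instance type named in the post


def build_estimator(role_arn, output_path, xgboost_version="1.2-1"):
    """Create an Estimator for the built-in XGBoost container.

    Requires the SageMaker Python SDK and AWS credentials.
    The container version is an assumption.
    """
    import sagemaker
    from sagemaker import image_uris
    from sagemaker.estimator import Estimator

    session = sagemaker.Session()
    # Look up the URI of the XGBoost training image for the current Region.
    image_uri = image_uris.retrieve(
        framework="xgboost",
        region=session.boto_region_name,
        version=xgboost_version,
    )
    return Estimator(
        image_uri=image_uri,
        role=role_arn,
        instance_count=1,
        instance_type=INSTANCE_TYPE,
        output_path=output_path,
        hyperparameters=HYPERPARAMETERS,
        sagemaker_session=session,
    )
```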

Configure Debugger and rules

lekker uses Debugger in three ways:

- Use built-in rules to identify underperforming training jobs

- Create automatic visualizations

- Collect important metrics from training jobs

The following code shows the Debugger hook configuration to collect metrics such as feature importance and Shapley values from churn model training:
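As a hedged sketch, a hook configuration along these lines could be built with `DebuggerHookConfig` and `CollectionConfig` from the SageMaker Python SDK. The collection names are the ones Debugger offers for XGBoost; the save interval of 5 and the helper names are assumptions, not lekker’s settings.

```python
SAVE_INTERVAL = 5  # assumption: save tensors every 5 boosting rounds
COLLECTIONS = ("metrics", "feature_importance", "full_shap", "average_shap")


def collection_specs(names=COLLECTIONS, save_interval=SAVE_INTERVAL):
    """Describe each collection to save as a plain dict (name plus parameters)."""
    return [
        {"name": name, "parameters": {"save_interval": str(save_interval)}}
        for name in names
    ]


def build_hook_config(s3_output_path):
    """Turn the specs into a DebuggerHookConfig. Requires the SageMaker Python SDK."""
    from sagemaker.debugger import CollectionConfig, DebuggerHookConfig

    return DebuggerHookConfig(
        s3_output_path=s3_output_path,
        collection_configs=[
            CollectionConfig(name=spec["name"], parameters=spec["parameters"])
            for spec in collection_specs()
        ],
    )
```

The resulting object would be passed to the Estimator through its `debugger_hook_config` argument.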

Debugger provides built-in rules that check for model training issues such as overfitting or loss not decreasing. Those rules run as a SageMaker processing job in a separate container and instance, so the rule analysis doesn’t interfere with the actual training. Users don’t pay to run these built-in rules. lekker frequently uses the loss_not_decreasing and xgboost_report rules. The first rule monitors the loss curves and triggers if the loss doesn’t decrease by a certain percentage. The xgboost_report rule captures XGBoost model data and creates a static HTML report with visualizations such as ROC curves and error plots, and provides key insights and recommendations. See the following code:
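A sketch of the rule list, assuming the SageMaker Python SDK’s `Rule.sagemaker` and `rule_configs` helpers, could look like this. The parameter values are illustrative assumptions. The `loss_stalled` function is a deliberately simplified illustration of the idea behind the loss check, not the built-in rule’s implementation.

```python
# Assumed rule parameters; the values lekker uses are not given in the post.
LOSS_RULE_PARAMETERS = {
    "collection_names": "metrics",
    "num_steps": "10",
    "diff_percent": "0.1",
}


def build_rules():
    """Return the two built-in rules named in the post. Requires the SageMaker Python SDK."""
    from sagemaker.debugger import Rule, rule_configs

    return [
        Rule.sagemaker(
            rule_configs.loss_not_decreasing(),
            rule_parameters=LOSS_RULE_PARAMETERS,
        ),
        Rule.sagemaker(rule_configs.create_xgboost_report()),
    ]


def loss_stalled(losses, num_steps=10, diff_percent=0.1):
    """Simplified illustration only: True if the latest loss is not at least
    diff_percent percent below the loss recorded num_steps evaluations earlier."""
    if len(losses) <= num_steps:
        return False  # not enough history yet
    previous, current = losses[-num_steps - 1], losses[-1]
    required = previous * (1 - diff_percent / 100.0)
    return current > required
```

The list returned by `build_rules()` would be passed to the Estimator through its `rules` argument.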

After the Debugger hook configuration and list of rules are specified, you start the SageMaker training job with estimator.fit(). The fit function takes as input the paths to the training and validation data in Amazon S3. See the following code:
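The call could be sketched as follows, assuming CSV data stored under `train` and `validation` prefixes. The bucket layout, the content type, and both helper names are assumptions for illustration.

```python
def channel_uris(bucket, prefix):
    """Build the S3 URIs for the two input channels (hypothetical layout)."""
    base = f"s3://{bucket}/{prefix.strip('/')}"
    return {"train": f"{base}/train", "validation": f"{base}/validation"}


def start_training(estimator, bucket, prefix):
    """Start the training job. Requires the SageMaker Python SDK and AWS credentials."""
    from sagemaker.inputs import TrainingInput

    inputs = {
        channel: TrainingInput(s3_data=uri, content_type="text/csv")
        for channel, uri in channel_uris(bucket, prefix).items()
    }
    # Blocks until the training job finishes unless wait=False is passed.
    estimator.fit(inputs)
```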

SageMaker automatically spins up the ml.m5.4xlarge training instance, downloads the training container and datasets, and runs the model training. It also spins up an instance to run the rule analysis as a SageMaker processing job. You can go to SageMaker Studio and check the rule status or check the status from the Python SDK.
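From the Python SDK, the rule status can be read from the latest training job. The following sketch assumes an `estimator` on which `fit()` has already been called; the helper names are hypothetical, while `rule_job_summary()` and the dictionary keys come from the SDK.

```python
def summarize(summaries):
    """Map each rule's configuration name to its evaluation status."""
    return {
        s["RuleConfigurationName"]: s["RuleEvaluationStatus"] for s in summaries
    }


def rule_statuses(estimator):
    """Fetch and summarize the rule evaluations of the estimator's latest training job."""
    return summarize(estimator.latest_training_job.rule_job_summary())
```

A status such as `InProgress`, `NoIssuesFound`, or `IssuesFound` tells you whether a rule is still running or has triggered.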

Visualize and perform real-time monitoring

When the training is running, lekker uses Debugger’s open-source smdebug library to fetch and query the data that is uploaded in real time to Amazon S3. The first step is to create a trial object that takes either a local or S3 path:
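A minimal sketch, assuming the `smdebug` package is installed: `create_trial` accepts the path where Debugger writes its output, which you can obtain from `estimator.latest_job_debugger_artifacts_path()`. The `open_trial` wrapper and its `factory` argument are hypothetical and exist only so the sketch can be tried without smdebug.

```python
def open_trial(path, factory=None):
    """Open Debugger output stored at a local directory or an s3:// path.

    `factory` is a hypothetical hook for substituting a stand-in;
    by default smdebug's create_trial is used.
    """
    if factory is None:
        from smdebug.trials import create_trial  # requires `pip install smdebug`

        factory = create_trial
    return factory(path)
```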

Now you can access and query the data. To plot the loss curves, retrieve the metrics collection and the number of recorded steps:
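As a sketch of that query, the following helpers read every tensor in the `metrics` collection from a trial object and plot it. They rely on the smdebug trial methods `tensor_names`, `tensor`, `steps`, and `value`; the helper names and the use of matplotlib are assumptions.

```python
def metric_series(trial, collection="metrics"):
    """Return {tensor_name: (steps, values)} for every tensor in the collection."""
    series = {}
    for name in trial.tensor_names(collection=collection):
        tensor = trial.tensor(name)
        steps = tensor.steps()  # steps at which this tensor was saved
        values = [tensor.value(step) for step in steps]
        series[name] = (steps, values)
    return series


def plot_series(series):
    """Plot one curve per metric, for example train-error and validation-error."""
    import matplotlib.pyplot as plt  # requires matplotlib

    for name, (steps, values) in series.items():
        plt.plot(steps, values, label=name)
    plt.xlabel("Step")
    plt.ylabel("Metric value")
    plt.legend()
    plt.show()
```

Because the data is uploaded while training runs, calling `metric_series` again later returns the additional steps recorded in the meantime.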

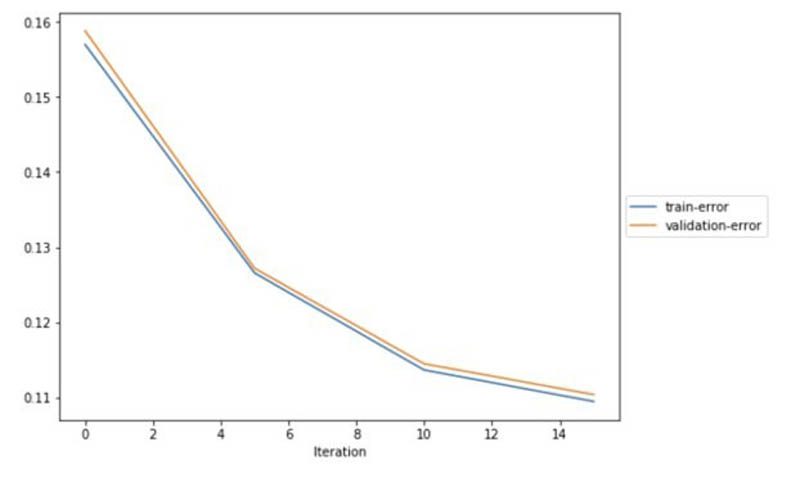

The following figure shows that both the training and validation errors fall while training the customer churn model. That’s a sign of a well-trained model, because the falling validation error shows that the model also performs well on unseen data. Debugger makes this visualization easy to create.

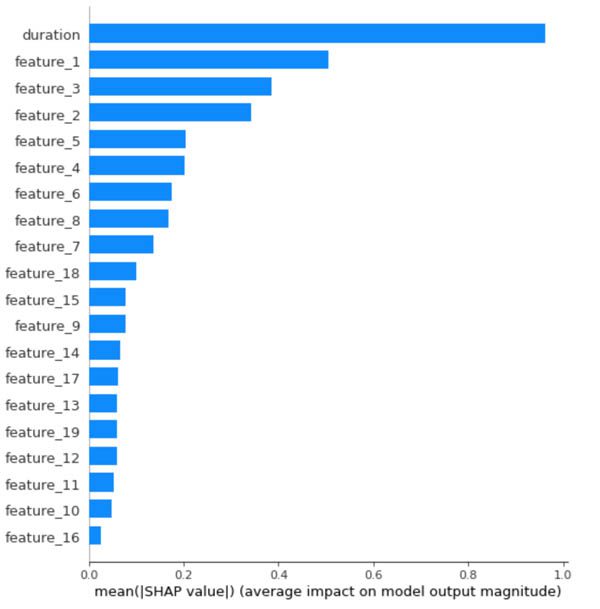

When the training job has completed, lekker uses the output of the xgboost_report rule to get further insights into the customer churn model. The following figure shows the model’s feature importance for the training job. The most important feature is customer duration (membership in months). lekker offers contracts with a fixed duration, such as 12 or 24 months. If customers cancel their contract, the churn shows at the end of the fixed duration period. That’s why most churn appears at month 12 and 24.

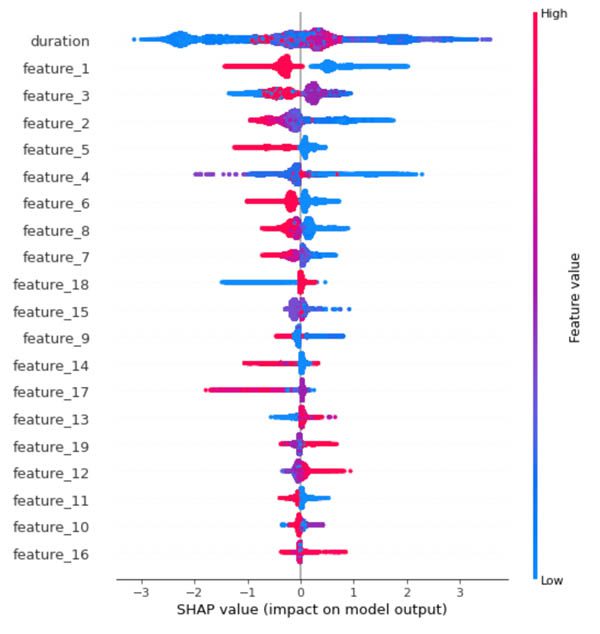

Knowledge about what influences the model’s outcome is important because it helps explain the model. lekker uses SHapley Additive exPlanations (SHAP) values recorded by Debugger during training. SHAP was made for local interpretability of a predictive model. It uses a game theoretic approach to explain the output of machine learning models.
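To illustrate the game theoretic idea, the following self-contained sketch computes exact Shapley values by enumerating all feature subsets for a toy scoring function. This is a brute-force illustration, not the algorithm that Debugger or the SHAP library runs for XGBoost, and the toy model, feature names, and numbers are made up.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x, relative to a baseline input.

    Features outside a coalition are replaced by their baseline value.
    The cost grows exponentially with the number of features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return predict(mixed)

    contributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {i}) - value(coalition))
        contributions.append(total)
    return contributions


def toy_churn_score(features):
    """Made-up linear score; not lekker's model."""
    duration_months, consumption_mwh, online_channel = features
    return 0.02 * duration_months - 0.05 * consumption_mwh + 0.3 * online_channel


customer = [24, 3.0, 1]
average_customer = [12, 4.0, 0]
contributions = shapley_values(toy_churn_score, customer, average_customer)
```

The contributions add up to the difference between the customer’s score and the baseline score, which is the property that makes a per-feature explanation of a single prediction possible.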

In the following figure, blue represents low feature values and red represents high feature values. The x-axis shows the SHAP value, which describes the impact on the outcome. High values indicate an increase in the predicted value; low values indicate a decrease. A line’s thickness represents how many customers are at this specific point. In the churn model, customers with low duration have low predicted churn probabilities. That’s a result of their contract structure, because customer churn can be determined after 12 months at the earliest.

Users running on Amazon SageMaker can obtain SHAP values for their model either through SageMaker Debugger or SageMaker Clarify. The key difference is that Debugger records those values during training, while Clarify captures them after the model has been trained. Inspecting SHAP values during the training phase helps to further improve the model by identifying and removing irrelevant input features.

Once the model is trained, you can use Clarify to get SHAP values for any dataset. Once you deploy the model as an endpoint, you can use Clarify to monitor the SHAP values for captured data from the endpoint. Another key difference is that Debugger can collect SHAP values during training for XGBoost models whereas Clarify is model agnostic and can work with any model.

Results

With all the tools and services SageMaker provides, lekker was able to raise churn model accuracy by nearly 20%. In addition, the model is more stable than earlier versions. As a result, the F1 score rose to over 80% and the AUC to 96%.

“Since we got all this information about model insights, we are able to get a clear understanding about what’s happening,” says Steffen Kremers, a data scientist at lekker. “Especially the concept of feature gains, which is fully integrated in the Debugger report, gave us useful information about the most influencing features. Important information for both feature engineering and feature selection.”

Since the churn model was deployed, lekker has moved three more models to SageMaker and integrated them into operations. lekker applied what they learned to all these models and has seen that all of them yield better results than before. Once lekker saw the insights ML can bring, they began expanding their ML activities.

Conclusion

This post demonstrated how lekker moved workloads from on premises to SageMaker, and how it helped their data science teams accelerate and innovate faster. lekker extensively uses Debugger to get deeper insights into their models, which helps improve and better explain them. To learn more about Debugger features and how this service can help your business, see Amazon SageMaker Debugger. To learn more about optimizing for customer churn, check out the blog post Preventing customer churn by optimizing incentive programs using stochastic programming.

About the Authors

Steffen Kremers is a data scientist at lekker based in Germany. He accompanies the whole machine learning process – from developing use case ideas to model building up to model deployment.

Nathalie Rauschmayr is an Applied Scientist at AWS, where she helps customers develop deep learning applications.

Lu Huang is a Senior Product Manager on the AWS Deep Engine team, managing Amazon SageMaker Debugger.
