Build XGBoost models with Amazon Redshift ML

Amazon Redshift ML allows data analysts, developers, and data scientists to train machine learning (ML) models using SQL. In previous posts, we demonstrated how customers can use the automatic model training capability of Amazon Redshift to train their classification and regression models. Redshift ML provides several capabilities for data scientists. It allows you to create a model using SQL and specify your algorithm as XGBoost. It also lets you bring your pre-trained XGBoost model into Amazon Redshift for local inference. You can let users remotely invoke any model deployed in Amazon SageMaker for inference with SQL.

In this post, we illustrate how data scientists can train models using the XGBoost algorithm. Specifically, we discuss how you can use Redshift ML to train ML models with the CREATE MODEL command by providing advanced parameters such as preprocessors, problem type, and hyperparameters.

In the post Create, train, and deploy machine learning models in Amazon Redshift using SQL with Amazon Redshift ML, we reviewed the benefits of Redshift ML and how it simplifies your ML pipeline without the complexity of exporting your data from the data warehouse for use with ML. You don’t have to worry about the governance of data that you export from your data warehouse.

Prerequisites

To get started, we need an Amazon Redshift cluster with the ML feature enabled. Redshift ML is generally available, and you can use it in a cluster with the latest maintenance version. For an introduction to Redshift ML and instructions on setting it up, see Create, train, and deploy machine learning models in Amazon Redshift using SQL with Amazon Redshift ML.

Introducing XGBoost

The XGBoost algorithm (eXtreme Gradient Boosting) is an optimized open-source implementation of the gradient boosted trees algorithm. XGBoost is designed from the ground up to handle many data science problems in a highly efficient, flexible, portable, and accurate way. XGBoost can be used for regression, binary classification, multi-class classification, and ranking problems. For details on XGBoost and SageMaker, see Introducing the open-source Amazon SageMaker XGBoost algorithm container.

The Redshift ML CREATE MODEL with AUTO OFF option currently supports only XGBoost as the MODEL_TYPE. As a data scientist or ML expert, you can provide relevant information such as objective, preprocessors, and hyperparameters as part of the CREATE MODEL command based on your use case.

Use case

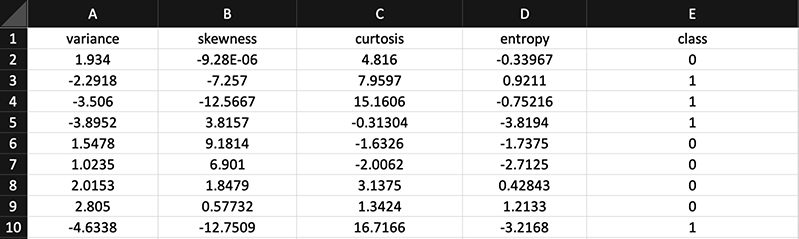

For this post, we use the banknote authentication dataset, which is a classic binary classification problem to predict whether a given banknote is genuine or forged. With analytical methods, you can use the Intaglio structures of circulating banknotes for authentication. Intaglio is a special printing technique that adds a unique structure to banknotes. Image recognition and the Wavelet Transform tool were used to obtain spectral features like variance, skewness, kurtosis, and entropy (of an image) from banknote specimens used as the input parameters for the model. Based on these measurements, you can employ ML methodologies to predict an original note from a forged one.

The following screenshot shows a sample dataset for this use case. The last column, class, is the one we’re going to predict. Zero (0) corresponds to a genuine banknote and one (1) is counterfeit.

Setup and data preparation

We split the dataset into two sets (80% and 20%) to use for training and testing purposes. To get arbitrariness in the dataset, we use a random number when we split the data. The test data is available in the public Amazon Simple Storage Service (Amazon S3) bucket s3://redshiftbucket-ml-sagemaker/banknote_authentication.

The following are the DDL and COPY commands for both training and test datasets. If you prefer, you can also keep the dataset in a single table and use a where clause in the SQL statement to split the training and test data. For simple illustration purposes, we divide the dataset to be used in two different tables. You can use the Amazon Redshift Query Editor or your preferred SQL editor to run these SQL statements.

For loading data, use the appropriate AWS Identity and Access Management (IAM) role for your Amazon Redshift cluster.

Create the ML Model

Now that we have set up the data , let’s create the model in Redshift ML with AUTO OFF and XGBoost as the model type using the CREATE MODEL command:

We specified the class column as the target (label) that we want to predict, and specified func_model_banknoteauthentication_xgboost_binary as the function.

Make the appropriate changes in the CREATE MODEL command to specify the IAM_ROLE and S3_BUCKET . Refer to the previous posts or the documentation on the requirements for the IAM role. The S3 bucket specified is used for exporting the training dataset and storing other ML-related artifacts.

We use the table banknoteauthentication_train for training data, and the MODEL_TYPE is set to xgboost with AUTO OFF.

The CREATE MODEL command is asynchronous, and the model creation happens in the background after you run the command. It may take 8–10 minutes for the model to be trained and ready; and you can monitor the progress using the SHOW MODEL model_name; command:

Objectives and hyperparameters

As a data scientist, you might want to specify training objectives and hyperparameters for tuning your model. Let’s discuss briefly how you can use OBJECTIVE and HYPERPARAMETERS options in the CREATE MODEL with AUTO OFF.

An objective in any ML problem is a measure of the quality of the solution. An objective of a problem aims to either minimize or maximize the function specified. For example, reg:squarederror is used as the objective for regression problems, and the smaller the value (closer to zero) this function is, the better the accuracy of the model.

Redshift ML currently supports the following OBJECTIVE options that go along with XGBoost: reg:squarederror, reg:squaredlogerror, reg:logistic, reg:pseudohubererror, reg:tweedie, binary:logistic, binary:hinge, and multi:softmax. For more information, see Learning Task Parameters in the XGBoost documentation.

Hyperparameters are problem- and algorithm-specific properties that govern and control the behavior of the training and can have a direct impact on the efficiency of the model. If no hyperparameters are specified, the defaults for XGBoost are used. The values should be enclosed in single quotes. For our hyperparameters, NUM_ROUND (the number of rounds to run the training) is set to 100. For a complete list of supported hyperparameters, see CREATE XGBoost models with AUTO OFF.

The following code is a representative output from show model after the create model command starts. It reflects the inputs presented in the create model command and more information like model state (TRAINING, READY, FAILED) and the max runtime to finish. Leaving the model to run to completion with the default time allows you to tune the model to yield better accuracy.

After the create model command is complete, the show model command output looks like the following code. The model state is now READY and has additional information like Estimated Cost and train:error. For models created with Amazon SageMaker Autopilot (AUTO ON), we see validation as one metric that gives the model’s accuracy, whereas AUTO OFF with XGBoost provides train:error, which is a measure of accuracy. In the following code, the value 0.000000 indicates the model is close to 100% accurate.

Inference

For binary and multi-class classification problems, we compute the accuracy as the model metric. Accuracy can be calculated based on the following:

Let’s apply the preceding code to our use case to find the accuracy of the model. We use the banknoteauthentication_test table to test the accuracy, and use the newly created function func_model_banknoteauthentication_xgboost_binary to predict and take the columns other than the target and label as the input.

The inference query output (0.9923 *100 = 99.23 %) matches the output from the show model command.

Let’s run the prediction query on the banknoteauthentication_test to get the count of original vs. counterfeit banknotes:

Troubleshooting

Please check the Redshift ML regression blog for troubleshooting tips.

Conclusion

Redshift ML provides an easy and seamless platform for database users to create, train, and tune models using the SQL interface. This post showed how data scientists can train an XGBoost model using Redshift ML with the CREATE MODEL command and use the model for inference using SQL.

About the Authors

Satish Sathiya is a Senior Product Engineer at Amazon Redshift. He is an avid big data enthusiast who collaborates with customers around the globe to achieve success and meet their data warehousing and data lake architecture needs.

Satish Sathiya is a Senior Product Engineer at Amazon Redshift. He is an avid big data enthusiast who collaborates with customers around the globe to achieve success and meet their data warehousing and data lake architecture needs.

Debu Panda, a principal product manager at AWS, is an industry leader in analytics, application platform, and database technologies and has more than 25 years of experience in the IT world.

Debu Panda, a principal product manager at AWS, is an industry leader in analytics, application platform, and database technologies and has more than 25 years of experience in the IT world.

Jiayuan Chen is a Software Development Engineer at AWS. He is passionate about designing and building data-intensive applications, and has been working in the areas of data lake, query engine, ingestion, and analytics. He keeps up with latest technologies and innovates things that spark joy.

Jiayuan Chen is a Software Development Engineer at AWS. He is passionate about designing and building data-intensive applications, and has been working in the areas of data lake, query engine, ingestion, and analytics. He keeps up with latest technologies and innovates things that spark joy.

Lokesh Gupta is a Software Development Manager at AWS and manages the Machine Learning Compiler Technologies team. He leads the effort to optimize ML models for best inference performance on the Cloud and Edge targets.

Lokesh Gupta is a Software Development Manager at AWS and manages the Machine Learning Compiler Technologies team. He leads the effort to optimize ML models for best inference performance on the Cloud and Edge targets.

Tags: Archive

Leave a Reply