Generate a jazz rock track using AWS DeepComposer with machine learning

At AWS, we love sharing our passion for technology and innovation, and AWS DeepComposer is no exception. This service is designed to help everyone learn about generative artificial intelligence (AI) through the language of music. You can use a sample melody, upload your own melody, or play a tune on the virtual keyboard or a real one. Best of all, you don’t have to write any code. But what exactly is generative AI, and why is it useful? In this post, we discuss generative AI, illustrate its features with some use cases, and walk you through composing a track in AWS DeepComposer.

Generative AI overview

Generative AI is a field of AI that focuses on generating new material with minimal human interaction. It’s one of the newest and most exciting areas of AI, with a wide range of possible applications. You can use generative techniques to create new works of art, write your next symphony, or even discover new drug combinations.

Generative AI uses mathematical models that take an input and produce a new output. This output approximates something real, but it is synthetically generated. You can feed this synthetic data into other AI models, extending the range of creative possibilities and helping find new and unique solutions to challenging problems.
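
To make the input-to-output idea concrete, here is a deliberately tiny Python sketch. It uses a first-order Markov chain, a far simpler technique than the neural networks AWS DeepComposer uses, so treat it only as an illustration of "learn from an input, then sample something new that resembles it." The melody and the function names `train_markov` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def train_markov(melody):
    """Record which pitch follows which in the input melody (a first-order Markov chain).

    Repeated successors stay in the list, so frequent transitions are sampled more often.
    """
    transitions = defaultdict(list)
    for current, nxt in zip(melody, melody[1:]):
        transitions[current].append(nxt)
    return dict(transitions)


def generate(transitions, start, length, seed=None):
    """Sample a new pitch sequence by walking the learned transitions at random."""
    rng = random.Random(seed)
    output = [start]
    while len(output) < length:
        choices = transitions.get(output[-1])
        if not choices:  # no observed successor for this pitch, so stop early
            break
        output.append(rng.choice(choices))
    return output


# Hypothetical input: MIDI pitch numbers for a short C minor phrase (60 = middle C).
input_melody = [60, 62, 63, 65, 67, 65, 63, 62, 60, 63, 67, 72, 67, 63, 60]
model = train_markov(input_melody)
new_melody = generate(model, start=60, length=12, seed=1)
print(new_melody)  # a new 12-note sequence built only from transitions seen in the input
```

The output is new, yet every step in it was observed in the input, which is the same "synthetic but plausible" property described above, in miniature.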

For example, Autodesk uses generative design techniques to build new and innovative designs for interplanetary landing craft.

AWS DeepComposer overview

AWS DeepComposer provides a fun and hands-on way for developers to get started with generative AI. It includes a range of built-in melodies that serve as the input to the generative AI modeling process. You can also play in your own melodies using the virtual keyboard, connect your own MIDI-compatible keyboard, or upload your own MIDI file.

AWS DeepComposer lets you learn about generative AI in a fun and engaging way. You may need to run a model 5–10 times before it generates at least one musically pleasing example. That’s normal: in data science, it’s not uncommon to run an AI model hundreds or thousands of times to get the best results. Plus, you’ll have fun listening to how the output of each run differs. To hear a range of compositions that our customers have created, check out the top 10 entries of a recent AWS DeepComposer Chartbusters competition.
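
Why do repeated runs pay off? If you assume each run independently has the same probability p of producing something you like, the chance of at least one keeper in n runs is 1 − (1 − p)^n. The following Python sketch works through that arithmetic; the value p = 0.2 is purely an illustrative guess, not a measured AWS DeepComposer figure, and the function names are hypothetical.

```python
import math


def chance_of_a_keeper(p, runs):
    """Probability that at least one of `runs` independent attempts succeeds,
    when each attempt succeeds with the same probability p."""
    return 1 - (1 - p) ** runs


def runs_needed(p, target):
    """Smallest number of runs whose chance of at least one success reaches `target`."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    if not 0 < target < 1:
        raise ValueError("target must be in (0, 1)")
    if p == 1:
        return 1
    return math.ceil(math.log(1 - target) / math.log(1 - p))


# p = 0.2 is an assumption for illustration only.
for runs in (1, 5, 10):
    print(runs, round(chance_of_a_keeper(0.2, runs), 3))
print(runs_needed(0.2, 0.9))
```

Under that assumption, five runs give roughly a 67% chance of at least one pleasing result and ten runs roughly 89%, and 11 runs are needed to pass 90%.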

The core mission of the AWS DeepComposer product team is to empower everyone to learn about generative AI, and we believe that music is a great vehicle for learning.

AWS DeepComposer built-in ML models

The first step is to choose a built-in machine learning (ML) model. The model takes your melody and, depending on the model characteristics and the parameters you set, automatically generates new melodies, adds harmonies, or adds notes to your existing melody. You can choose from three models:

- Autoregressive Convolutional Neural Network (AR-CNN) – Uses a ready-to-go neural network that takes your input melody and adds or removes notes in a style similar to Bach. This is a great starting point for beginners and produces good results in a short time.

- Generative Adversarial Network (GAN) – Uses a generator and a discriminator to produce genre-based accompaniment tracks, controlled by various parameters. In other words, the original input melody is harmonized, and instruments are selected for the accompaniment. This model requires more work and is likely to produce mediocre musical results. However, you can use it to create new chords or harmonies based on the input track.

- Transformers – Converts your track into tokens that it uses to add notes to your input track. It’s great for creating new musical material, but the quality of the melody varies enormously depending on the input track and how you tune the model’s parameters. For me, as a musician, this is the most exciting model, because it can generate high-quality content in a short time. Tight timelines are common when writing commercial music, particularly for TV, radio, and film. Generated content can help shorten those timelines and, in the hands of capable musicians, is likely to improve the quality of the music.
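
The Transformers entry above mentions converting a track into tokens. This post doesn’t describe the exact vocabulary AWS DeepComposer uses, so the following Python sketch shows one common event-based scheme used by music language models (note-on, note-off, and time-shift events) as an illustrative assumption. The `Note` class, the `tokenize` function, and the time-step unit are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    pitch: int     # MIDI pitch number (60 = middle C)
    start: int     # onset, in time steps (for example, 16th notes)
    duration: int  # length, in the same time steps


def tokenize(notes):
    """Flatten notes into NOTE_ON / NOTE_OFF / TIME_SHIFT event tokens."""
    events = []
    for n in notes:
        events.append((n.start, 1, f"NOTE_ON_{n.pitch}"))
        events.append((n.start + n.duration, 0, f"NOTE_OFF_{n.pitch}"))
    # Sort by time; at the same time step, NOTE_OFF (0) comes before NOTE_ON (1).
    events.sort()
    tokens, now = [], 0
    for time, _, name in events:
        if time > now:
            tokens.append(f"TIME_SHIFT_{time - now}")
            now = time
        tokens.append(name)
    return tokens


melody = [Note(60, 0, 2), Note(63, 2, 2), Note(67, 4, 4)]  # C, Eb, G
tokens = tokenize(melody)
# A model works with integer IDs, so map each distinct token to an index.
vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
ids = [vocab[t] for t in tokens]
print(tokens)
```

Once a melody is a token sequence, "adding notes" becomes predicting the next tokens, which is the kind of task Transformer models are built for.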

Create a composition in AWS DeepComposer

Let’s walk through the process of creating a new musical composition. For this post, we use the AR-CNN model with the default parameters. This model has been trained using a range of different Bach chorales as inputs.

In the mid-1990s, studying Bach chorales was a prerequisite for my music degree and essential for learning the basics of tonal harmony. For this reason, it makes sense to start with the AR-CNN model, because it’s more likely to create tonal harmony (based on the major and minor scale system) that is pleasing to the ear. Many of the chord progressions used in Bach chorales are still used in modern popular music.

First, I played in a simple melody based on the C minor scale. I purposely chose a scale that would have been typical of the Bach era, but I added a little swing and a jazz rhythm to it, to observe how well the model would cope with that rhythm.
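
The melody in this post was played in by hand, but the two ingredients described above are easy to express in code. The following Python sketch builds the C natural minor scale as MIDI pitch numbers and applies a simple swing to straight eighth notes. The 480 ticks per beat and the 2/3 (triplet-style) swing ratio are assumptions, as are the helper names; note also that harmonic and melodic minor, which raise the sixth and/or seventh degrees, are common variants.

```python
NATURAL_MINOR_STEPS = [0, 2, 3, 5, 7, 8, 10]  # semitones above the tonic


def minor_scale(tonic=60, octaves=1):
    """MIDI pitches of the natural minor scale on `tonic` (60 = middle C), ending on the top tonic."""
    pitches = [tonic + 12 * o + step for o in range(octaves) for step in NATURAL_MINOR_STEPS]
    return pitches + [tonic + 12 * octaves]


def swing(onsets, ticks_per_beat=480, ratio=2 / 3):
    """Push each off-beat eighth note later so the beat splits long-short.

    ratio=2/3 gives a triplet-style swing; 0.5 leaves the rhythm straight.
    """
    eighth = ticks_per_beat // 2
    swung = []
    for t in onsets:
        beat, offset = divmod(t, ticks_per_beat)
        if offset == eighth:  # only notes exactly on the straight off-beat move
            t = beat * ticks_per_beat + round(ratio * ticks_per_beat)
        swung.append(t)
    return swung


c_minor = minor_scale(60)                          # C, D, Eb, F, G, Ab, Bb, C
straight = [i * 240 for i in range(len(c_minor))]  # one eighth note per pitch
swung = swing(straight)
print(list(zip(c_minor, swung)))
```

With these settings, every second eighth note moves from tick 240 to tick 320 within its beat, which produces the long-short lilt the model had to cope with.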

You can use one of the melodies built into the tool, such as Twinkle, Twinkle, Little Star, or use the virtual keyboard to play in your own tune. If you like a tune with a jazzy lilt, try Bass Riff for AWS DeepComposer Demo on SoundCloud. The original MIDI file is available on GitHub.
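
If you’d rather prepare your own input file than play it in, the following standard-library Python sketch writes a minimal single-track Standard MIDI File (format 0) that you could then upload. It is not the Bass Riff file from GitHub; the melody, tempo, file name, and the helpers `vlq` and `melody_to_midi` are hypothetical, and we haven’t checked the output against any length or format limits the AWS DeepComposer console may enforce.

```python
import struct


def vlq(value):
    """Encode a non-negative int as a MIDI variable-length quantity (7 bits per byte)."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)  # set the high bit on every byte except the last
        value >>= 7
    return bytes(reversed(out))


def melody_to_midi(notes, ticks_per_beat=480, bpm=100, channel=0):
    """Build a single-track (format 0) Standard MIDI File.

    notes: list of (pitch, start_tick, duration_ticks, velocity) tuples.
    """
    events = []
    for pitch, start, duration, velocity in notes:
        events.append((start, 1, bytes([0x90 | channel, pitch, velocity])))       # note on
        events.append((start + duration, 0, bytes([0x80 | channel, pitch, 0])))  # note off
    events.sort()  # by time; note-offs sort before note-ons at the same tick

    tempo = round(60_000_000 / bpm)  # microseconds per quarter note
    track = b"\x00\xff\x51\x03" + tempo.to_bytes(3, "big")  # set-tempo meta event
    now = 0
    for time, _, message in events:
        track += vlq(time - now) + message  # delta time, then the MIDI message
        now = time
    track += b"\x00\xff\x2f\x00"  # end-of-track meta event

    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + track


# Hypothetical melody: five quarter notes rising through C minor (C, D, Eb, F, G).
notes = [(pitch, i * 480, 480, 90) for i, pitch in enumerate([60, 62, 63, 65, 67])]
data = melody_to_midi(notes)

if __name__ == "__main__":
    with open("c_minor_sketch.mid", "wb") as f:
        f.write(data)
```

Delta times are stored between consecutive events rather than as absolute ticks, which is why the loop tracks `now` and encodes only the difference.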

In this example, we use the same tune that we supplied on GitHub.

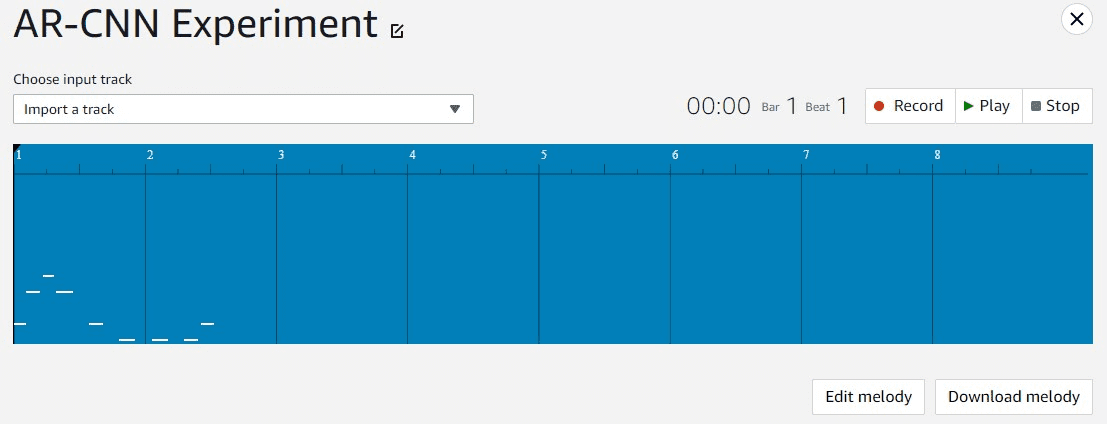

After you import the tune, it should look similar to the following screenshot.

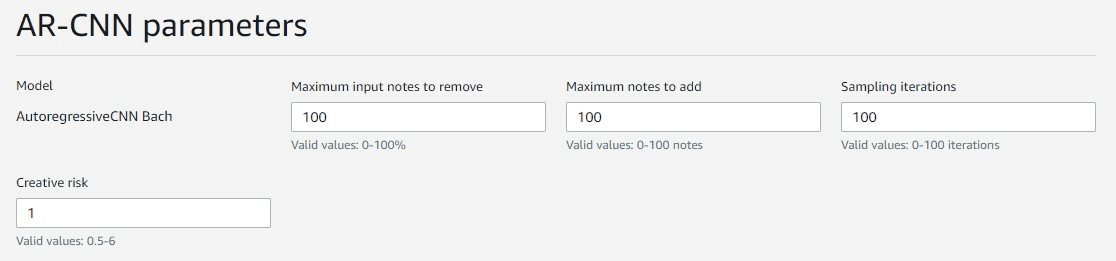

Next, choose the AR-CNN model with the default parameters.

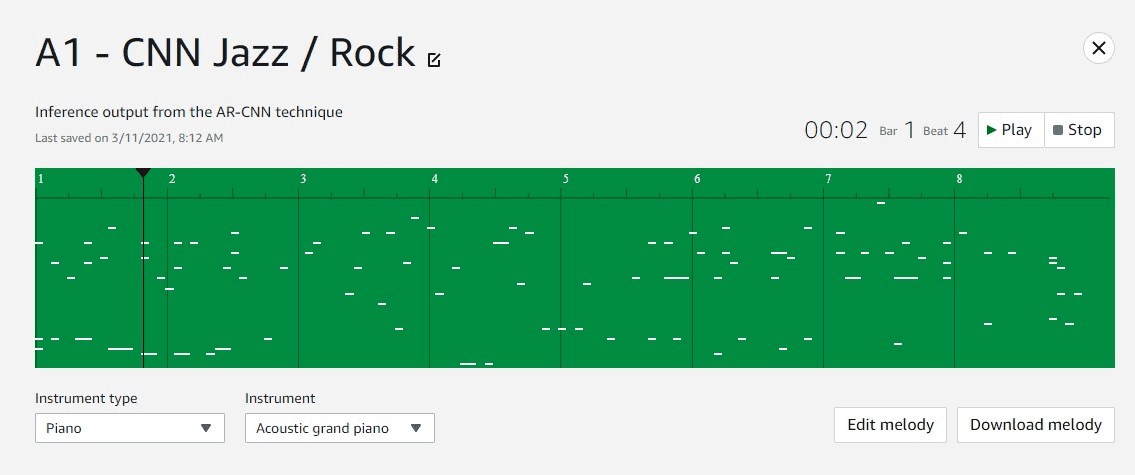

Then generate the composition and listen to the result. Each time you run the model, it produces a unique set of results. In our experiment, it produced an interesting musical pattern.

It took the jazz lilt of the original input melody and carried that rhythmic feel through the entire composition, which is a very interesting result. Because the model was trained on Bach chorales, it has a strong grasp of how tonal harmony works. I found that the harmonies worked very well with the custom input melody and were pleasing to the ear. This was the second of five experiments, so don’t be afraid to run the model many times and select the best musical composition.

Share your composition

Finally, you can upload your creative masterpiece to SoundCloud. If you don’t already have a SoundCloud account, you need to sign up for one; then you can share your composition with all your friends on social media.

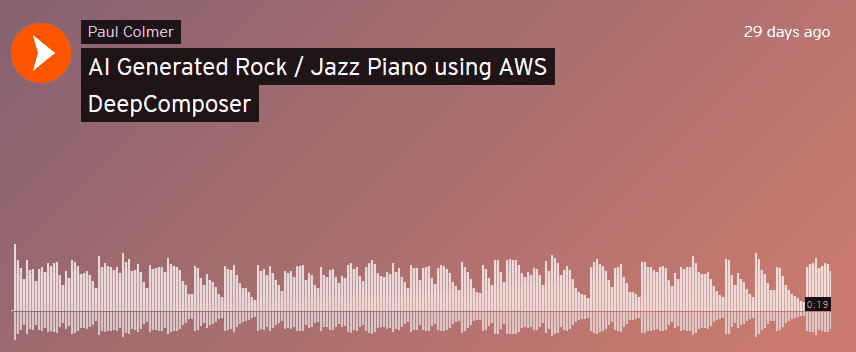

The following is the musical output of the experiment that we just showed you, using the AR-CNN model.

The following is another experiment using the Transformer model.

Lastly, the following is an experiment using the GAN model.

But why stop at sharing with your friends, when you could enter the AWS DeepComposer Chartbusters Challenge with your latest masterpiece and share with the whole world!

For more information about the 2021 Chartbusters season, see Announcing the AWS DeepComposer Chartbusters challenges 2021 season launch.

Conclusion

In this post, we defined generative AI and described some of its use cases. We introduced you to the AWS DeepComposer tool and pointed you to musical examples from the AWS DeepComposer Chartbusters competition.

We explained the basics of the three built-in models in AWS DeepComposer and walked you through an example of how you can create your own music with the AR-CNN model. Lastly, we showed you how to upload your masterpiece to SoundCloud, so you can share it with your family and friends or compete against other composers.

Want to learn more? Try training a custom model in the AWS DeepComposer Studio. Let me know your thoughts in the comments section. Most importantly, have fun!

About the Author

Paul Colmer is a Technical Account Manager at Amazon Web Services. His passion is helping customers, partners, and employees develop and grow through compelling storytelling and by sharing experiences and knowledge. With over 25 years in the IT industry, he specializes in agile cultural practices and machine learning solutions. Paul is a Fellow of the London College of Music and a Fellow of the British Computer Society.