Automate continuous model improvement with Amazon Rekognition Custom Labels and Amazon A2I: Part 2

In Part 1 of this series, we walked through a continuous model improvement machine learning (ML) workflow with Amazon Rekognition Custom Labels and Amazon Augmented AI (Amazon A2I). We explained how we use AWS Step Functions to orchestrate model training and deployment, and custom label detection backed by a human labeling private workforce. We described how we use AWS Systems Manager Parameter Store to parameterize the ML workflow to provide flexibility without needing development rework.

In this post, we provide step-by-step instructions to deploy the solution with AWS CloudFormation.

Prerequisites

You need to complete the following prerequisites before deploying the solution:

- Have an AWS account with AWS Identity and Access Management (IAM) permissions to launch the provided CloudFormation template.

- Decide on one of the Amazon Rekognition Custom Labels supported Regions in which to deploy this solution.

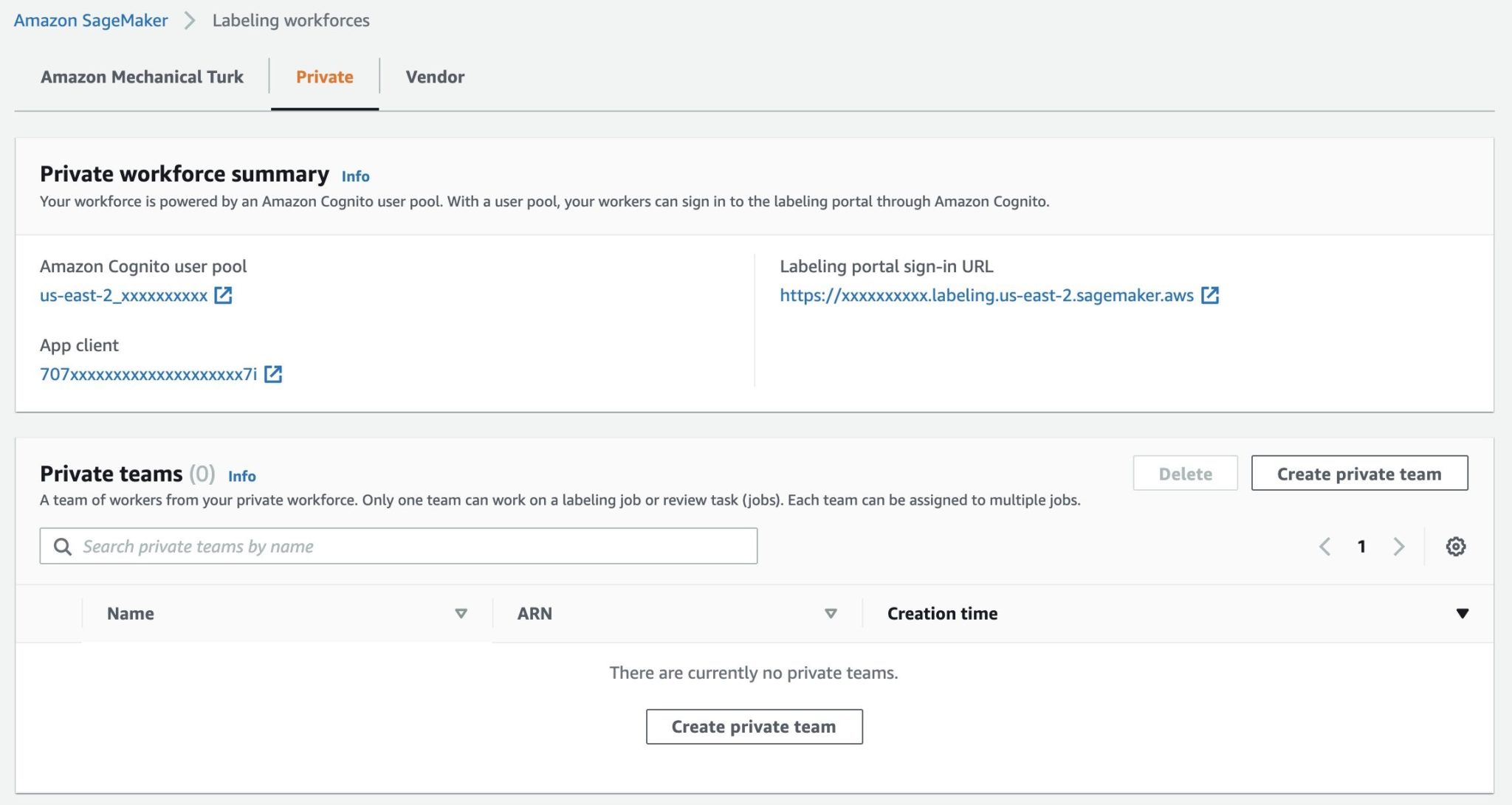

- As part of the CloudFormation deployment process, you can optionally deploy an Amazon SageMaker Ground Truth private workforce with an associated Amazon Cognito user pool. Because you can only have one private workforce per Region, you can’t deploy over an existing private workforce. To verify whether you have a private workforce already, go to the Labeling workforces page on the Amazon SageMaker console, choose the Region that you want to use, and choose the Private tab. You should see one of three possible setups:

- No private workforce – In this setup, SageMaker deploys a private workforce and a private team for you. When you’re prompted to enter a value for A2IPrivateTeamName in the CloudFormation template, leave it blank.

- Private workforce without private teams – In this setup, you need to create a private team first. Choose Create private team and follow the instructions to create a team. When you’re prompted to enter a value for A2IPrivateTeamName in the CloudFormation template, enter your newly created private team name.

- Private workforce with private teams – In this setup, you can use your existing private team or create a new one. We recommend that you create a new private team. When you’re prompted to enter a value for A2IPrivateTeamName in the CloudFormation template, enter your private team name.

- You need to initialize Amazon Rekognition Custom Labels for your selected Region before you can use Amazon Rekognition Custom Labels. On the Amazon Rekognition Custom Labels console, choose Projects in the navigation pane and take one of the following actions:

- If you see the Projects page with or without projects, then your Region is initialized.

- If you’re prompted with a message for first-time setup, follow the instructions to create the default Amazon Simple Storage Service (Amazon S3) bucket. We don’t use the default S3 bucket with this solution, but it’s part of the Amazon Rekognition Custom Labels initialization process.
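The three private workforce setups above can also be checked programmatically. The following is a minimal sketch, not part of the solution's code: it assumes a boto3 SageMaker client (or any object exposing list_workforces and list_workteams with the same response shape) is passed in, and the function name is hypothetical. It reads only the first page of results.

```python
def a2i_private_team_name(sagemaker_client, team_name=None):
    """Return the value to enter for the A2IPrivateTeamName stack parameter.

    sagemaker_client is assumed to be a boto3 SageMaker client for the
    Region you plan to deploy in. Only the first page of results is read.
    """
    workforces = sagemaker_client.list_workforces().get("Workforces", [])
    if not workforces:
        # No private workforce: leave the parameter blank so the stack
        # deploys a private workforce and team for you.
        return ""

    teams = {
        team["WorkteamName"]
        for team in sagemaker_client.list_workteams().get("Workteams", [])
    }
    if team_name is None or team_name not in teams:
        # A workforce exists, so a private team must be created or chosen first.
        raise ValueError(
            f"Create or choose a private team first; existing teams: {sorted(teams)}"
        )
    # Enter the team name, not its ARN.
    return team_name
```

With boto3 installed, you could call it as a2i_private_team_name(boto3.client("sagemaker", region_name="us-east-1")), where the Region shown is only an example.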

Deploy the solution resources

Now that we have explained how this solution works, we show you how to use AWS CloudFormation to deploy the required and optional AWS resources for this solution.

- Sign in to the AWS Management Console with your IAM username and password.

- Choose Launch Stack.

- Choose your AWS Region (as noted in the prerequisites).

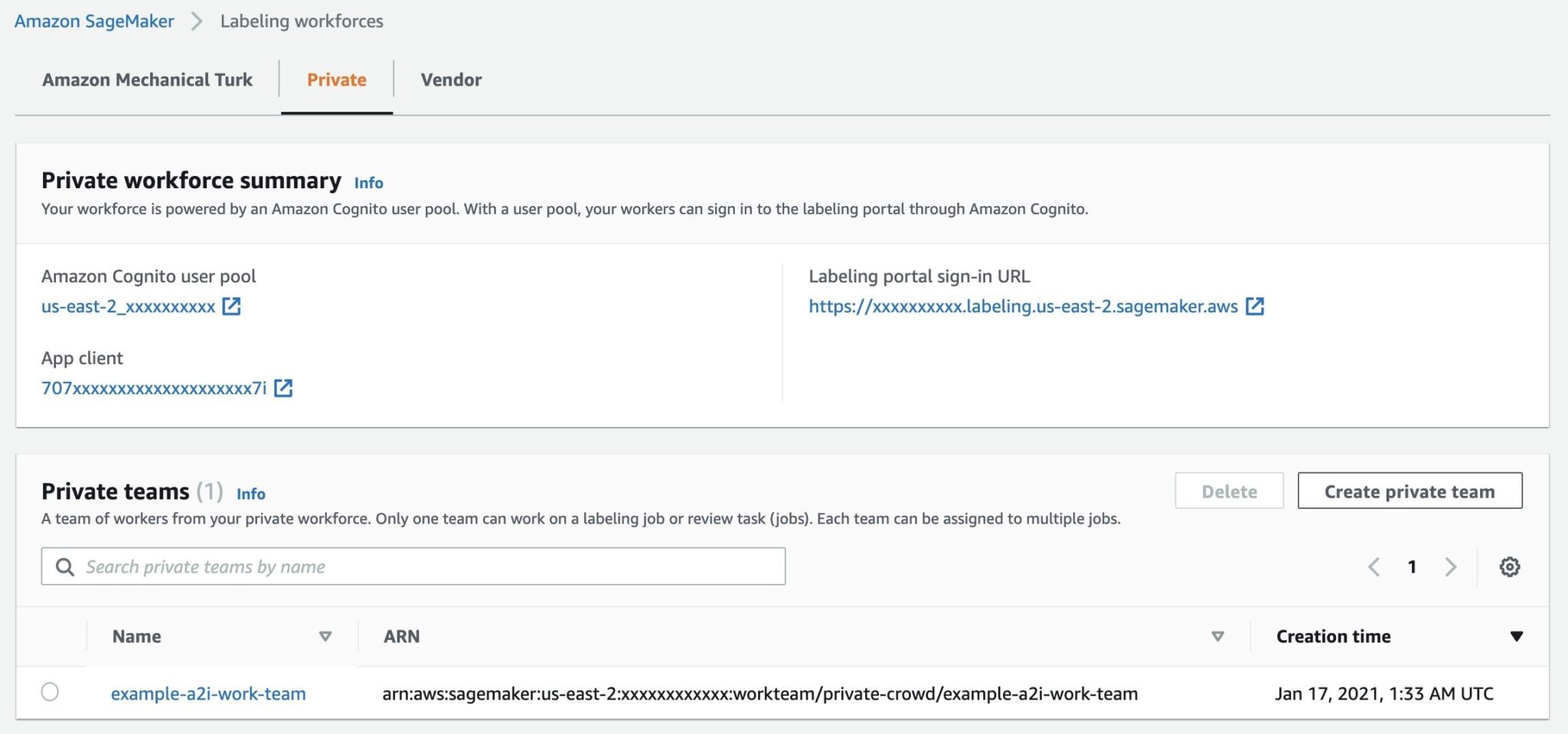

- For Stack name, enter a name.

- For A2IPrivateTeamName, leave it blank if you don’t have a SageMaker private workforce. If you have a private workforce, enter the private team name, not the ARN.

- For SNSEmail, enter a valid email address.

- Select I acknowledge that AWS CloudFormation might create IAM resources with custom names.

- Choose Create stack.

The CloudFormation stack takes about 5 minutes to complete. You should receive an email “AWS Notification – Subscription Confirmation” asking you to confirm a subscription. Choose Confirm subscription in the email to confirm the subscription. Additionally, if AWS CloudFormation deployed the private work team for you, you should receive an email “Your temporary password” with a username and temporary password. You need this information later to access the Amazon A2I web UI to perform the human labeling tasks.
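If you prefer to script the deployment instead of using the console, the same inputs map onto a CloudFormation CreateStack request. The sketch below only builds the request; the function name and the template URL argument are hypothetical (the template location behind the Launch Stack button is not given here), and it assumes the template's other parameters have usable defaults.

```python
def build_create_stack_request(stack_name, template_url, sns_email, private_team_name=""):
    """Build keyword arguments for CloudFormation's create_stack call.

    template_url is a placeholder you must supply yourself.
    private_team_name stays blank when no private workforce exists.
    """
    if "@" not in sns_email:
        raise ValueError("SNSEmail must be a valid email address")
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        "Parameters": [
            {"ParameterKey": "A2IPrivateTeamName", "ParameterValue": private_team_name},
            {"ParameterKey": "SNSEmail", "ParameterValue": sns_email},
        ],
        # Equivalent to selecting the acknowledgement that the stack might
        # create IAM resources with custom names.
        "Capabilities": ["CAPABILITY_NAMED_IAM"],
    }
```

The resulting dictionary could then be passed to a boto3 CloudFormation client as client.create_stack(**request) in the Region you chose in the prerequisites.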

Solution overview

In the following sections, we walk you through the end-to-end process of using this solution. The process includes the following high-level steps:

- Prepare your initial training images.

- Train and deploy your model.

- Use your model for logo detection.

- Complete a human labeling task.

- Run automated model training.

Prepare your initial training images

In this post, we use Amazon and AWS logos for our initial training images.

- Download the sample images .zip file and expand it.

- As a best practice, review the training images in the amazon_logo and aws_logo folders.

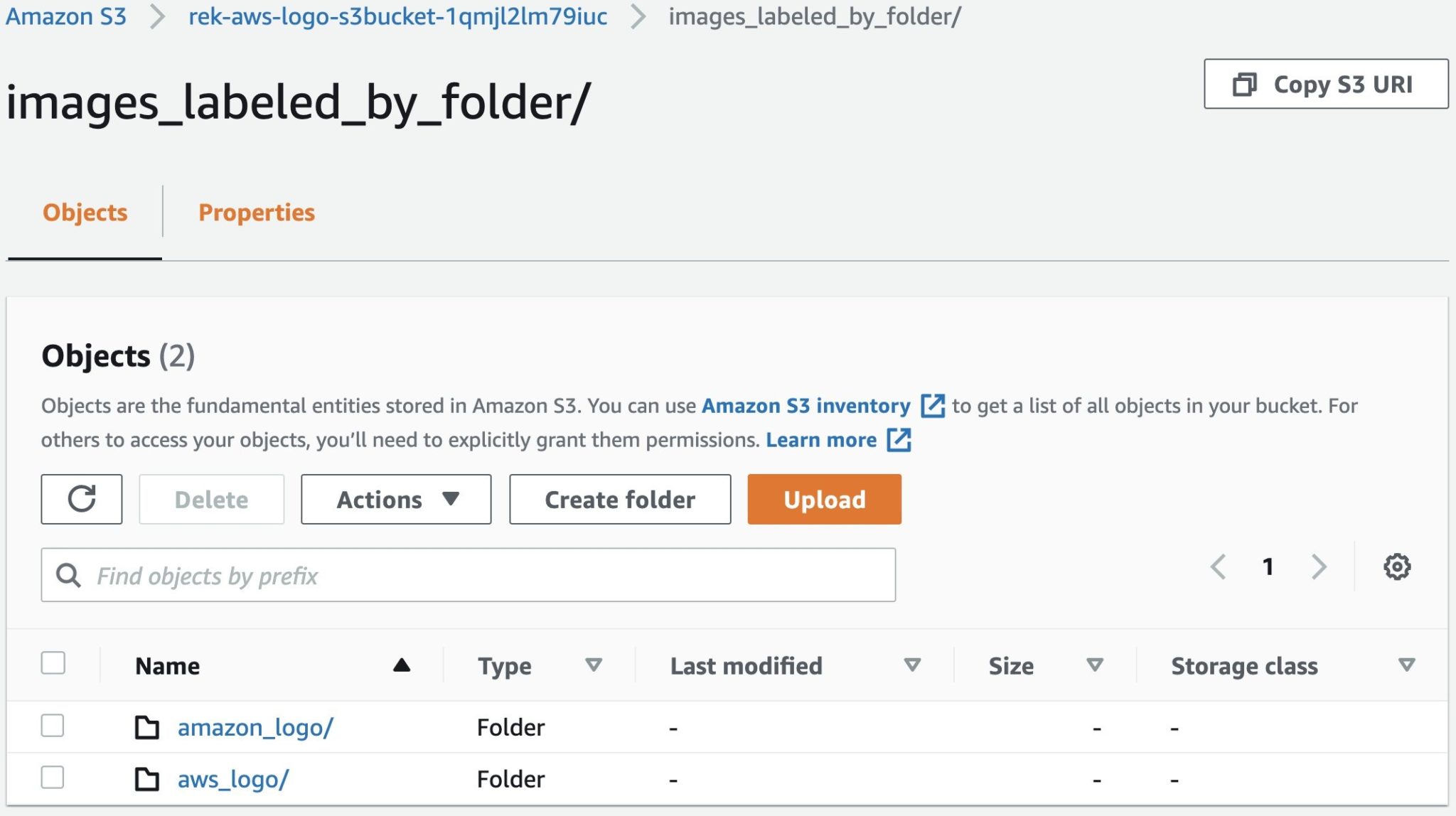

Amazon Rekognition Custom Labels supports two methods of labeling datasets: object-level labeling and image-level labeling. This solution uses the image-level labeling method. Specifically, we use the folder name as the label such that images in the amazon_logo and aws_logo folders are labeled amazon_logo and aws_logo, respectively.
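To illustrate the folder-name-as-label convention, here is a small sketch that derives the label from an S3 object key. It assumes keys follow the layout images_labeled_by_folder/<label>/<file>; the function name is hypothetical and this is not the solution's own code.

```python
from pathlib import PurePosixPath

LABELED_ROOT = "images_labeled_by_folder"


def label_from_key(key):
    """Return the image-level label implied by an S3 object key.

    Assumes the layout images_labeled_by_folder/<label>/<file>.
    """
    parts = PurePosixPath(key).parts
    if len(parts) < 3 or parts[0] != LABELED_ROOT:
        raise ValueError(f"Key is not under {LABELED_ROOT}/<label>/: {key}")
    # The folder directly under the root is used as the label.
    return parts[1]
```

For example, label_from_key("images_labeled_by_folder/aws_logo/logo1.png") yields aws_logo.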

Train and deploy your model

In this section, we walk you through the steps to add training images to Amazon S3 and explain how the automatic model training process works.

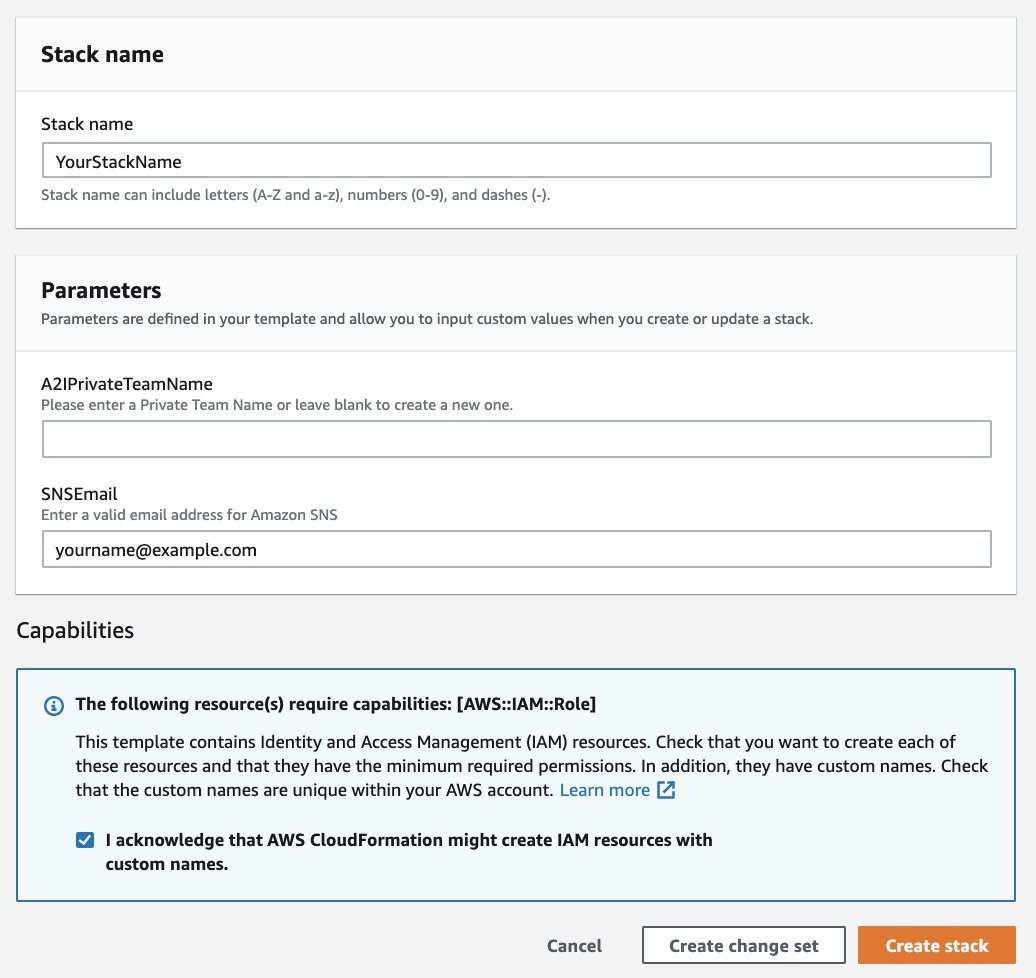

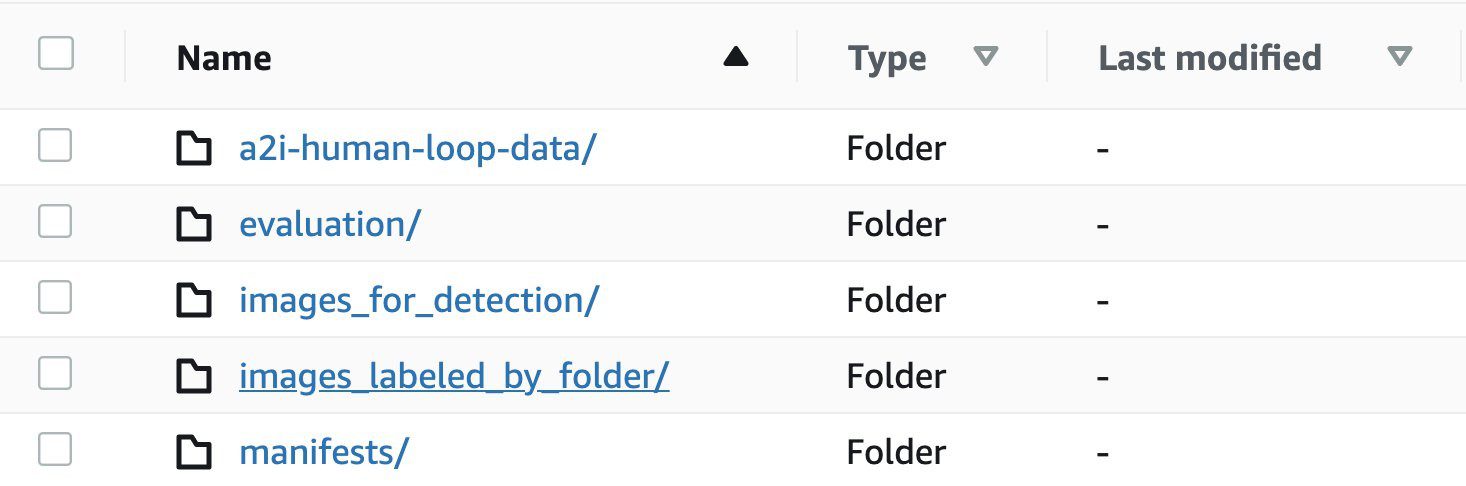

- On the Amazon S3 console, choose the bucket with the name similar to yourstackname-s3bucket-esxhty0wyhqu.

You should see five folders, as in the following screenshot.

- Choose the folder images_labeled_by_folder.

- Choose Upload and then Add folder.

- When prompted to select images from your computer, choose the amazon_logo folder, not the images inside the folder.

- Repeat these steps to add the aws_logo folder.

- Choose Upload.

You should now have two folders and 10 images, as shown in the S3 images_labeled_by_folder folder.

At this point, we have 10 training images, which meets the minimum of 10 new images (as currently set in Parameter Store) to qualify for new model training. When the Amazon EventBridge schedule rule fires, it invokes the Step Functions state machine to check for new training, which initiates a full model training and deployment process. Because the current schedule rule polling frequency is 600 minutes, the schedule rule doesn’t trigger in the time frame of this demo, so we trigger it manually for the purposes of this demo only.

- On the Parameter Store console, choose the Enable-Automatic-Training parameter.

- Choose Edit.

- Update the value to false and choose Save changes.

- Choose Edit.

- Update the value back to true and choose Save changes.

This resets the schedule rule to initiate an event to send to Step Functions to check the conditions for new model training. You should also receive three separate emails, two indicating that you updated a parameter and one indicating that automatic training is checked.
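The same off-and-on toggle can be scripted. The following is a minimal sketch, assuming a boto3 Systems Manager (SSM) client is passed in and that the parameter is named exactly Enable-Automatic-Training; the deployed parameter may carry a stack-specific prefix, so check its full name in Parameter Store first. The function name is hypothetical.

```python
def retrigger_training_check(ssm_client, parameter_name="Enable-Automatic-Training"):
    """Set the parameter to false and then back to true.

    ssm_client is assumed to be a boto3 SSM client. parameter_name is an
    assumption; use the full name shown on the Parameter Store console.
    """
    for value in ("false", "true"):
        # Overwrite=True updates the existing parameter in place.
        ssm_client.put_parameter(Name=parameter_name, Value=value, Overwrite=True)
```

With boto3 installed, this could be called as retrigger_training_check(boto3.client("ssm")) in the Region where you deployed the stack.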

Wait for the model training and deployment to complete. This should take approximately 1 hour. You should receive periodic emails on model training and deployment status. The model is deployed when you receive an email with the message “Status: RUNNING.”

- As an optional step, go to the Projects page on the Amazon Rekognition Custom Labels console to review the model status and training details.

If you’re redirected to a different Region, switch to the correct Region.

- As another optional step, choose the state machine on the Step Functions console.

You should see a list of runs and associated statuses.

- Choose any run to review the details and choose Graph inspector.

The state machine records every run so you can always refer back to troubleshoot issues.

Now that your initial model has been deployed, let’s test out the model with some images.

Use your model for logo detection

In this section, we walk you through the steps to detect custom logos and explain how an Amazon A2I human labeling task is created.

- Navigate to the S3 bucket and choose the images_for_detection folder.

- Upload an image that you used previously for training.

The upload process triggers an S3 PutObject event, which invokes an AWS Lambda function to run the Amazon Rekognition Custom Labels detection process. You should receive an email with a detection result indicating a confidence score of at least 70, which is as expected because you’re using the same image you used for training.

- Upload all the images in the inference_images folder.

You should receive emails with detection results with varying confidence scores, and some should indicate that Amazon A2I human loops have been created. When a detection result has a confidence score less than the acceptance level, which is currently set at 70, a human labeling task is created to label that image.

- Optionally, go to the Step Functions console to review the state machine runs of the custom label detection events.
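The acceptance-level rule described above can be sketched as a small function. It takes the CustomLabels list from a DetectCustomLabels response and decides whether a human labeling task would be needed. Treating an empty result as needing review is an assumption of this sketch, not something stated in this post, and the function name is hypothetical.

```python
def needs_human_review(custom_labels, acceptance_level=70.0):
    """Return True when a detection should go to a human labeling task.

    custom_labels is the CustomLabels list from DetectCustomLabels, where
    each entry has a Confidence value between 0 and 100.
    """
    if not custom_labels:
        # Assumption: no detected label is treated as low confidence.
        return True
    top_confidence = max(label["Confidence"] for label in custom_labels)
    # Scores below the acceptance level (currently 70) require review.
    return top_confidence < acceptance_level
```

For example, a result of [{"Name": "aws_logo", "Confidence": 55.1}] would be sent for review, whereas a confidence of 92.5 would not.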

Complete a human labeling task

In the last section, we staged some human labeling tasks. In this section, we walk you through the steps to complete a human labeling task and explain how the human labeling results are processed for new model training.

- On the Amazon S3 console, navigate to the images_labeled_by_folder folder.

- Make a note of the names of the folders and the total number of images.

At this stage, you should have two folders and a total of 10 images.

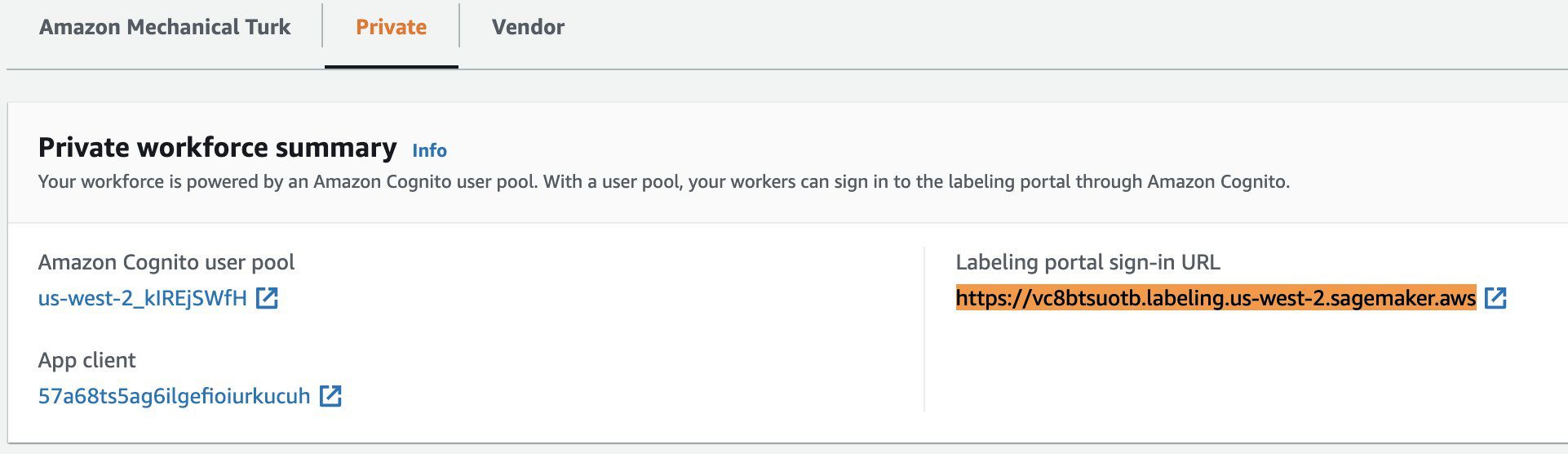

- On the Ground Truth console, go to your labeling workforce.

If you’re redirected to a different Region, switch to the correct Region.

- On the Private tab, choose the URL under Labeling portal sign-in URL.

This opens the Amazon A2I web portal for the human labeling task.

- Sign in to the web interface with your username and password.

If the private team was deployed for you, the username and temporary password are in the email that was sent. If you created your own private team, you should have the information already.

- Choose Start working to begin the process.

- For each image, review the image and choose the correct Amazon company logo or None of the Above to use as the label.

- Keep a tally of the options you choose in each task.

- Choose Submit to advance to the next image until you have completed all the labeling tasks.

- Return to the images_labeled_by_folder folder and review the changes.

For each image you labeled, a Lambda function makes a copy of the original image, adds the prefix humanLoopName and a UUID to the original S3 object key, and places the copy in the corresponding labeled folder. If the folder doesn’t exist, the Lambda function creates it. For the None of the Above labels, nothing is done. This is how newly captured and labeled images are added to the training dataset.
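As a rough illustration of this copy step, the sketch below computes a destination key for a labeled image. The exact key format used by the solution's Lambda function is not shown in this post, so the naming pattern here (human loop name, then UUID, then the original file name, joined with hyphens) is an assumption, as is the function name.

```python
import uuid
from pathlib import PurePosixPath

SKIP_LABEL = "None of the Above"


def labeled_copy_key(original_key, label, human_loop_name, unique_id=None):
    """Return the destination key for a human-labeled image, or None to skip.

    The hyphen-joined naming pattern is a guess for illustration only.
    """
    if label == SKIP_LABEL:
        # Nothing is done for images labeled None of the Above.
        return None
    unique_id = unique_id or str(uuid.uuid4())
    file_name = PurePosixPath(original_key).name
    # In S3, writing to a new prefix is what "creates" the labeled folder.
    return f"images_labeled_by_folder/{label}/{human_loop_name}-{unique_id}-{file_name}"
```

The returned key could then be used as the destination of an S3 copy operation within the same bucket.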

Run automated model training

In the previous sections, you uploaded 10 training images and manually invoked model training for the purpose of this demo only. The model was trained and automatically deployed because the F1 score was greater than the minimum of 0.8 as set in Parameter Store. In addition, the model was deployed with a minimum inference unit of 1, as set in Parameter Store.

Next, you uploaded some inference images for detection. Based on the minimum detection confidence score of 70% (as set in Parameter Store), images with a confidence score below 70% required human review. For each image requiring review, a human review task was created, because the Amazon A2I workflow process was enabled in the parameter. You completed the human review tasks and some labeled images were added to the total training dataset.

For new model training to begin, three conditions must be met, in the following order:

- Automatic training is enabled in Parameter Store. If disabled, the schedule rule isn’t triggered to check the training.

- The schedule rule triggers next to check for training based on the set frequency. Because the frequency is currently set at 600 minutes, the schedule rule doesn’t trigger in the timeline of this demo.

- When checking for training, the number of new images added since the last successful training must be at least the minimum number of untrained images (currently set at 10) for new training to start. Because you trained 10 images in the initial training, you need 10 new labeled images, for a total of 20 images.
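The three conditions can be summarized as an ordered check. This is a simplified sketch for illustration, not the state machine's actual logic; the class and function names are hypothetical, and the image-count comparison follows the worked example (10 new images satisfy a minimum of 10).

```python
from dataclasses import dataclass


@dataclass
class TrainingCheck:
    automatic_training_enabled: bool
    schedule_rule_fired: bool
    new_images_since_last_training: int
    minimum_untrained_images: int = 10  # current Parameter Store setting


def should_start_training(check):
    """Evaluate the conditions in order; return (decision, reason)."""
    if not check.automatic_training_enabled:
        return False, "automatic training is disabled"
    if not check.schedule_rule_fired:
        return False, "schedule rule has not fired yet"
    if check.new_images_since_last_training < check.minimum_untrained_images:
        return False, "not enough new labeled images"
    return True, "all conditions met"
```

For example, should_start_training(TrainingCheck(True, True, 10)) returns (True, "all conditions met"), whereas the same check with only 4 new images returns (False, "not enough new labeled images").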

Clean up

Complete the following steps to clean up the AWS resources that we created as part of this post to avoid potential recurring charges.

- On the AWS CloudFormation console, choose the stack you used in this post.

- Choose Delete to delete the stack.

- Note that the following resources are not deleted by AWS CloudFormation:

- S3 bucket

- Amazon Rekognition Custom Labels project

- Ground Truth private workforce

- On the Amazon S3 console, choose the bucket you used for this post.

- Choose Empty.

- Choose Delete.

- On the Amazon Rekognition Custom Labels console, choose the running model.

- On the Evaluate and use model tab, expand the API Code section.

- Run the AWS Command Line Interface (AWS CLI) stop model command.

- On the Projects page, select the project and choose Delete.

- To delete the private workforce, enter the following code (leave the workforce name as default and provide your Region):
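One way to do this from Python is sketched below. It assumes a boto3 SageMaker client created for your Region is passed in; the function name is hypothetical, and the existence check reads only the first page of workforces.

```python
def delete_private_workforce(sagemaker_client, workforce_name="default"):
    """Delete the private workforce if it exists; return True if deleted.

    sagemaker_client is assumed to be a boto3 SageMaker client created with
    region_name set to the Region you deployed in.
    """
    existing = {
        workforce["WorkforceName"]
        for workforce in sagemaker_client.list_workforces().get("Workforces", [])
    }
    if workforce_name not in existing:
        # Nothing to delete in this Region.
        return False
    sagemaker_client.delete_workforce(WorkforceName=workforce_name)
    return True
```

With boto3 installed, this could be called as delete_private_workforce(boto3.client("sagemaker", region_name="us-east-1")), replacing the example Region with your own.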

Conclusions

In Part 1 of this series, we provided an overview of a continuous model improvement ML workflow with Amazon Rekognition Custom Labels and Amazon A2I.

In this post, we walked through the steps to deploy the solution with AWS CloudFormation and completed an end-to-end process to train and deploy an Amazon Rekognition Custom Labels model, perform custom label detection, and create and complete Amazon A2I human labeling tasks.

For an in-depth explanation and the code sample in this post, see the AWS Samples GitHub repository.

About the Authors

Les Chan is a Sr. Partner Solutions Architect at Amazon Web Services. He helps AWS Partners enable their AWS technical capacities and build solutions around AWS services. His expertise spans application architecture, DevOps, serverless, and machine learning.

Daniel Duplessis is a Sr. Partner Solutions Architect at Amazon Web Services, based out of Toronto. He helps AWS Partners and customers in enterprise segments build solutions using AWS services. His favorite technical domains are serverless and machine learning.
