Enable scalable, highly accurate, and cost-effective video analytics with Axis Communications and Amazon Rekognition

With the number of cameras and sensors deployed growing exponentially, companies across industries are consuming more video than ever before. Additionally, advancements in analytics have expanded potential use cases, and these devices are now used to improve business operations and intelligence. In turn, the ability to effectively process video at these rapidly expanding volumes is now critical—but too often, it still falls to manual review. This is unreliable, poorly scalable, and costly, and underscores the need for automation to process video accurately, at scale.

In this post, we show you how to enable your Axis Communications cameras with Amazon Rekognition. Combining Axis edge technology with Amazon Rekognition provides an efficient and scalable solution capable of delivering the high-quality video analysis needed to generate actionable business and security insights.

Proactively analyzing video helps drive business outcomes

Video surveillance has typically been utilized in a reactive way, with security personnel monitoring banks of wall monitors for anything amiss or reviewing footage after the fact for evidence. Advances in edge capabilities and artificial intelligence (AI) and machine learning (ML) in the cloud have enabled today’s cameras to respond to incidents in real time.

High-quality cameras can now capture more accurate and detailed images for analysis in the cloud, which has made analytics more accessible. Edge processing can also make this analysis more feasible, reducing bandwidth by lowering the amount of data that must be transmitted for effective analysis. This combination of improved processing, image quality, and AI and ML capabilities has made serious breakthroughs possible. These advances, including object detection and tracking and pan/tilt/zoom cameras, have improved the quality of images for processing and analysis. Reviewing these images is also easier than ever, with searchable image and video libraries tagged with relevant markers.

The opportunity to apply advanced analytics has therefore never been greater. Companies across industries, including enterprise security, retail, manufacturing, hospitality, travel, and more, are using a hybrid edge plus cloud approach to scale use cases like person of interest detection, automated access management, people and vehicle counting, heat mapping, PPE compliance analysis, sentiment analysis, and product defect and anomaly detection. With these advanced analytics, companies can improve their business KPIs, whether it be improving customer safety, making customer experiences more seamless, or diminishing product defects.

Enhance video analysis with Axis Communications and Amazon Rekognition

Axis Communications is the industry leader in IP cameras and network solutions that provide insights for improving security and new ways of doing business. Axis offers network products and services for intelligent video surveillance, access control, intercom, and audio.

Amazon Rekognition is an AI service that uses deep learning technology to allow you to extract meaningful metadata from images and videos – including identifying objects, people, text, scenes, and activities, and potentially inappropriate content – with no ML expertise required. Amazon Rekognition also provides highly accurate facial analysis and facial search capabilities that you can use to detect, analyze, and compare faces for a wide variety of user verification, people counting, and safety use cases. Lastly, with Amazon Rekognition Custom Labels, you can use your own data to build your own object detection and image classification models.

The combination of Axis technology for video ingestion and edge preprocessing with Amazon Rekognition for computer vision provides a highly scalable workflow for video analytics. Ease of use is a significant positive factor here because adding Amazon Rekognition to existing systems isn’t difficult – it’s as simple as integrating an API into a workflow. No need to be an ML scientist; simply send captured frames to AWS and receive a result that can be entered into a database.

Serverless computing, through AWS Lambda, also makes life easier for both customers and integrators. It means less hardware needs to be deployed, which also reduces the cost of deployment. And because Axis cameras are processing video at the network edge, you can set intelligent rules to determine when these devices should send captured images to Amazon Rekognition for further analysis – saving considerable bandwidth. With just a few lines of code, you can create the glue that attaches received images to Amazon Rekognition.

This further underscores the dramatic improvement over manual review. Better results can be achieved faster and with greater accuracy – without the need for costly, unnecessary manhours. With so many potential use cases, the combination of Axis devices and Amazon Rekognition has the potential to provide today’s businesses with significant and immediate ROI.

Solution overview

First, preprocessing is performed at the edge using an Axis camera. You can use a variety of different events to trigger when to capture an image and send it to AWS for further image analysis:

- Axis native camera event triggers – These include the following:

  - Digital I/O input

  - Scheduled event

  - Virtual input (input from other sensors to trigger the image upload)

  - Tampering

  - Shock detection

  - Audio detection

- Axis analytic event triggers – The following is a list of Axis analytical camera applications that you can use to trigger an image capture. You can also develop your own apps to run on the camera to generate an event. For more information, see AXIS Camera Application Platform.

  - Motion detection, which is installed on all cameras by default

  - Axis Object Analytics captures a person or vehicle in a scene

  - Axis Face Detector captures faces found in a scene

  - Axis License Plate Verifier reads license plates

  - Axis Live Privacy Shield masks people for privacy

  - Axis Fence Guard allows you to set up virtual fences in a camera’s field of view

  - Axis Loitering Guard captures a person or vehicle loitering in a scene

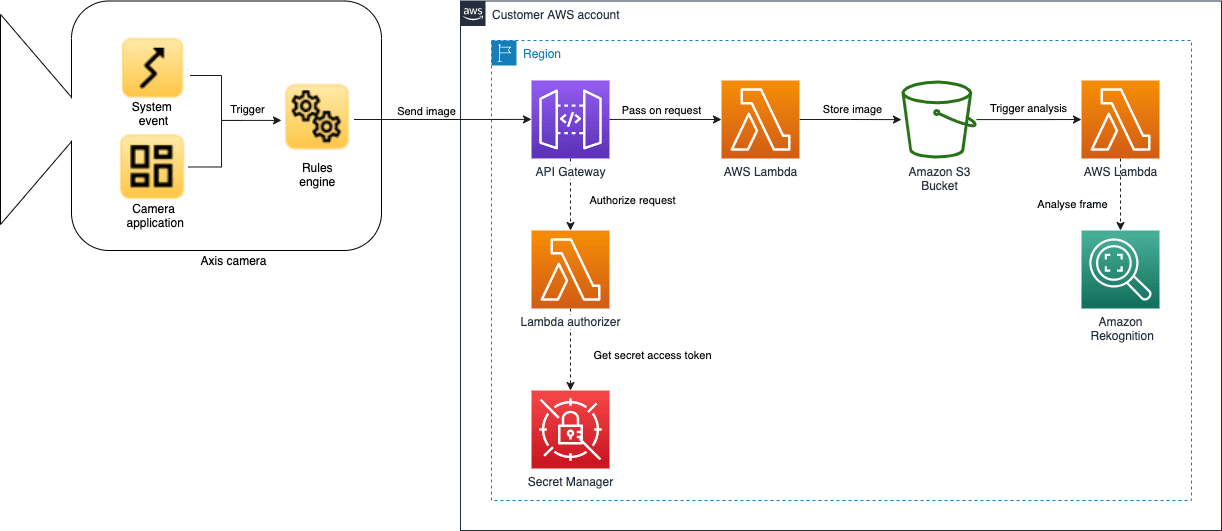

Second, combining Amazon API Gateway, Lambda, and Amazon Simple Storage Service (Amazon S3) with an Axis network camera and its event system allows you to securely upload images to the AWS Cloud.

Third, you can send those images to Amazon Rekognition for analysis of what’s in the frames (such as people, faces, and vehicles).

The following diagram illustrates this architecture.

Now let’s dive into how to set up the cloud services needed and configure the event system in the Axis network camera to upload the images.

The solution setup is divided into two sections: one for the AWS setup and one for the Axis camera setup.

The AWS services and camera configurations needed in order to send an image to Amazon S3 are managed via an example application that can be downloaded from an Axis Communications GitHub repository. The application consists of the following AWS resources:

- API Gateway

- Lambda function

- Secret stored in AWS Secrets Manager

- S3 bucket

Because the camera can’t sign requests using AWS Signature Version 4, we need to include a Lambda function to handle this step. Rather than sending images directly from the Axis camera to Amazon S3, we instead send them to an API Gateway. The API Gateway delegates authorization to a Lambda authorizer that compares the provided access token to an access token stored in Secrets Manager. If the provided access token is deemed valid, the API Gateway forwards the request to a Lambda function that uploads the provided image to an S3 bucket.
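The token check performed by the Lambda authorizer can be sketched in Python as follows. This is a minimal illustration, not the code from the example application: the query parameter name `accessToken`, the function names, and the simple `isAuthorized` response shape (the format used by API Gateway HTTP API authorizers; REST API authorizers return an IAM policy document instead) are all assumptions. In a real authorizer, the stored token would be read from Secrets Manager rather than passed in as an argument.

```python
import hmac


def is_token_valid(provided_token: str, stored_token: str) -> bool:
    """Compare two tokens in constant time so timing does not leak information."""
    if not provided_token or not stored_token:
        return False
    return hmac.compare_digest(provided_token.encode(), stored_token.encode())


def authorize(event: dict, stored_token: str) -> dict:
    """Return an allow/deny decision for an incoming API Gateway request.

    Assumption: the camera sends the token as a query string parameter
    named "accessToken"; the real application may use a different name.
    """
    params = event.get("queryStringParameters") or {}
    provided = params.get("accessToken", "")
    return {"isAuthorized": is_token_valid(provided, stored_token)}
```

Using `hmac.compare_digest` instead of `==` is a common precaution when comparing secrets, because the comparison time does not depend on how many leading characters match.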

Prerequisites

Before you get started, make sure you have the following prerequisites:

- A network camera from Axis Communications

- The AWS Command Line Interface (AWS CLI) installed

- The AWS Serverless Application Model (AWS SAM) installed

- Node.js installed

AWS setup

For your AWS-side configuration, complete the following steps:

- Clone the GitHub repo.

- In your AWS CLI terminal, start by authenticating access to AWS. Depending on your organization’s setup, several authentication options may be valid. For this post, we use multi-factor authentication (MFA) to authenticate access to AWS. Modify and run the following command:

When you’re authenticated against AWS, you can start building and deploying the AWS services that receive the snapshots sent from a network camera. The service resources are described in template.yaml using AWS SAM.

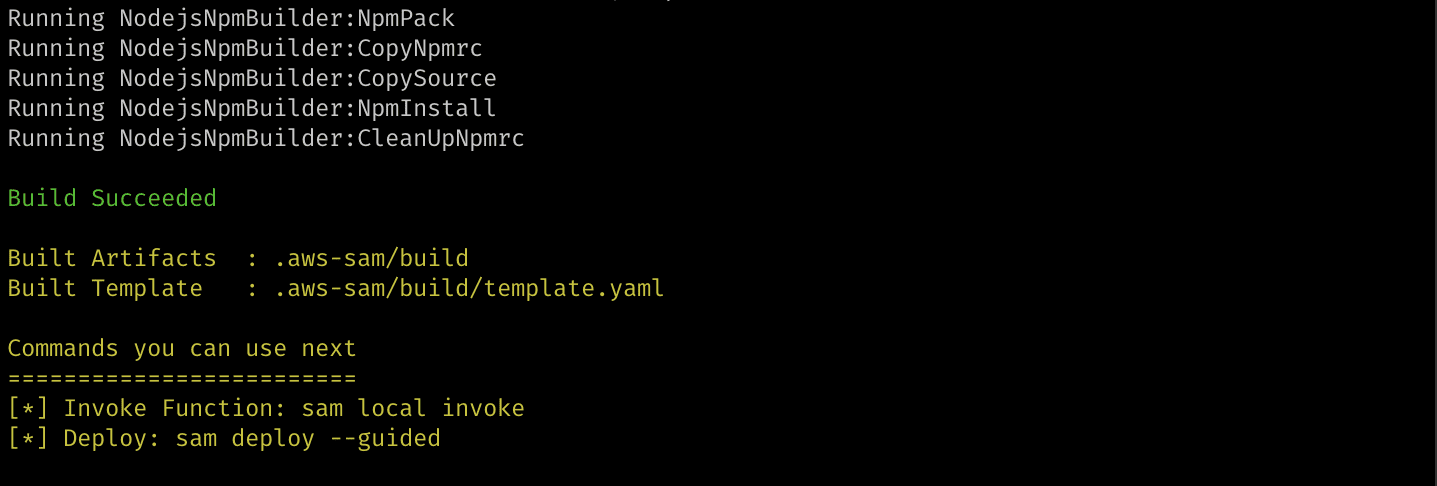

- Run the following commands:

The first command builds the source of your application. The second command packages and deploys your application to AWS, with a series of prompts:

- Stack Name – The name of the stack to deploy to AWS CloudFormation. This should be unique to your account and Region; a good starting point would be images-to-aws-s3 or something similar.

- AWS Region – The Region you want to deploy your app to.

- Confirm changes before deploy – If set to yes, any change sets are shown to you before execution for manual review. If set to no, the AWS SAM CLI automatically deploys application changes.

- Allow SAM CLI IAM role creation – This AWS SAM template creates AWS Identity and Access Management (IAM) roles required for the Lambda function to access AWS services. By default, these are scoped down to minimum required permissions. Choose Y to have the AWS SAM CLI automatically create the roles.

- Save arguments to samconfig.toml – If set to Y, your choices are saved to a configuration file inside the project, so that in the future you can just rerun sam deploy without parameters to deploy changes to your application.

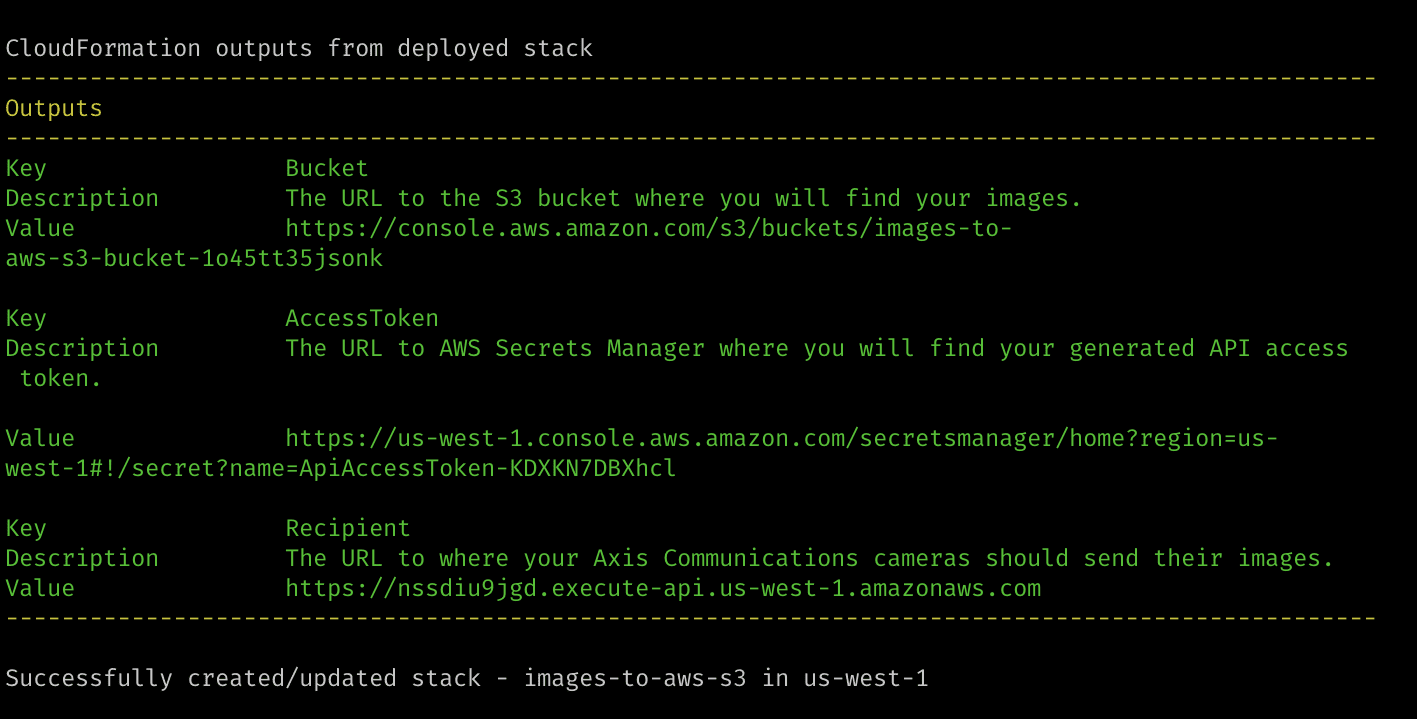

- After a successful deployment, navigate to your newly created CloudFormation stack on the AWS CloudFormation console.

You’ll find the API Gateway forwarding requests to Lambda, and the Lambda function saves the image snapshots to an S3 bucket.

The deployed CloudFormation stack created two output parameters that we use when configuring our Axis camera:

- Recipient – Defines the URL of the API Gateway where cameras should send their snapshots.

- AccessToken – Contains the URL to the secret API access token found in Secrets Manager. This API access token authorizes the camera and allows it to send snapshots.
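If you prefer to read the two output parameters programmatically rather than from the console, a sketch along the following lines may help. It assumes boto3 is installed and credentials are configured; the helper name `stack_outputs` and the stack name in the usage comment are illustrative only.

```python
def stack_outputs(cloudformation_client, stack_name: str) -> dict:
    """Return the outputs of a CloudFormation stack as a {key: value} dict."""
    response = cloudformation_client.describe_stacks(StackName=stack_name)
    outputs = response["Stacks"][0].get("Outputs", [])
    return {item["OutputKey"]: item["OutputValue"] for item in outputs}


# Example usage (requires boto3 and AWS credentials; stack name is an assumption):
#   import boto3
#   outputs = stack_outputs(boto3.client("cloudformation"), "images-to-aws-s3")
#   print(outputs["Recipient"])
#   print(outputs["AccessToken"])
```

The client is passed in as an argument so the helper can be exercised with a stub object before pointing it at a real account.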

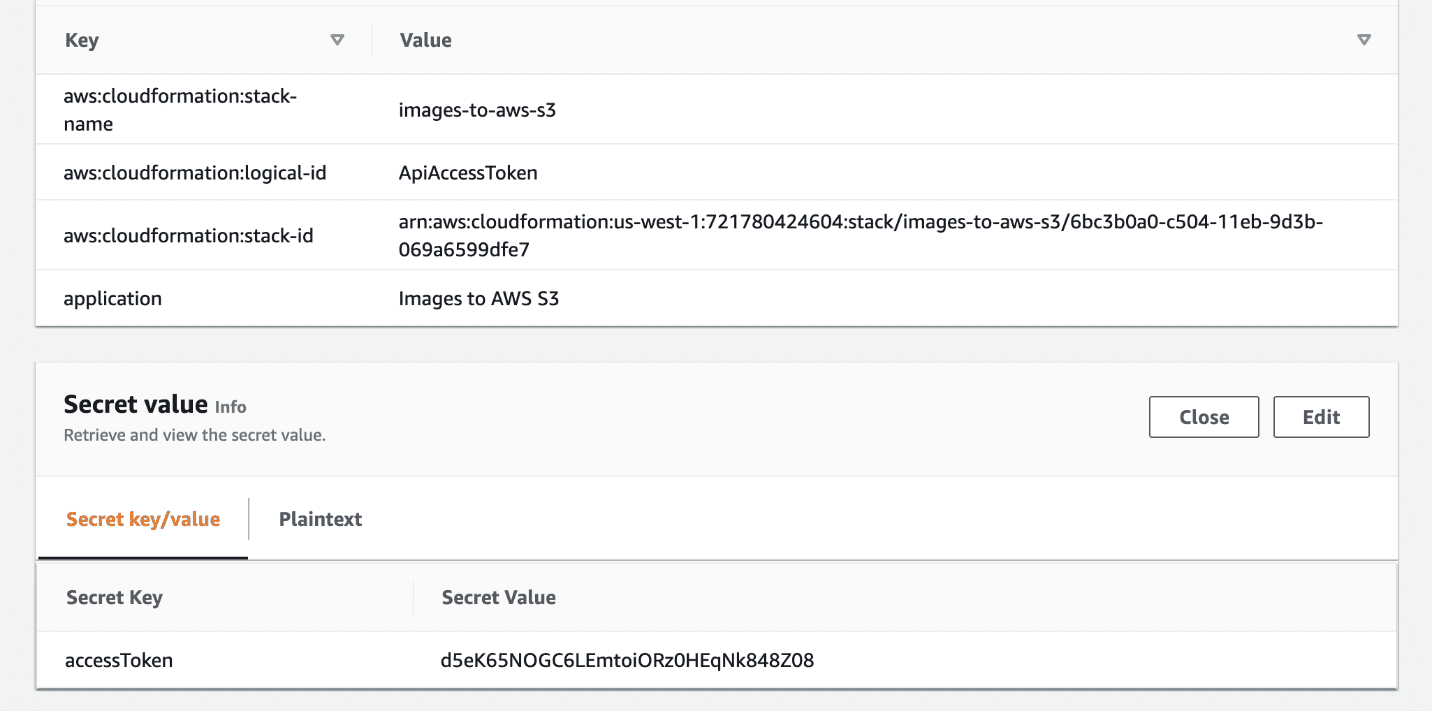

- Enter the link to the AccessToken in your browser to go directly to where you can find the secret.

You use the secret in your API Gateway to authenticate the image upload.

Requests to the API Gateway without this access token are denied access.

Axis camera setup

In the camera, you need to set up an HTTPS recipient to the API Gateway and an event in the camera that’s used as a trigger when an image should be uploaded.

- Log in to the camera.

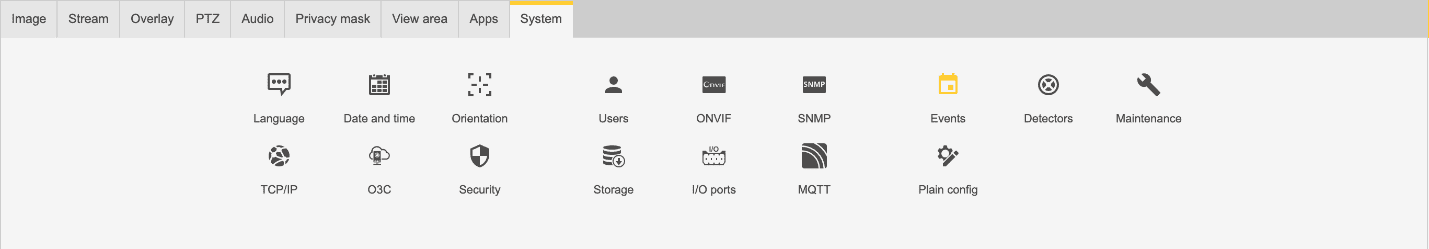

- On the System tab, choose Events.

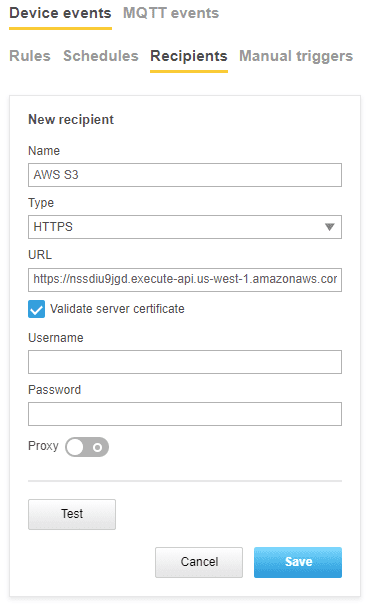

- On the Recipients tab, choose the plus sign to add the API Gateway recipient URL.

No username and password are needed here; the authentication is handled via the access token (AccessToken) that you enter as a custom CGI parameter in a later step.

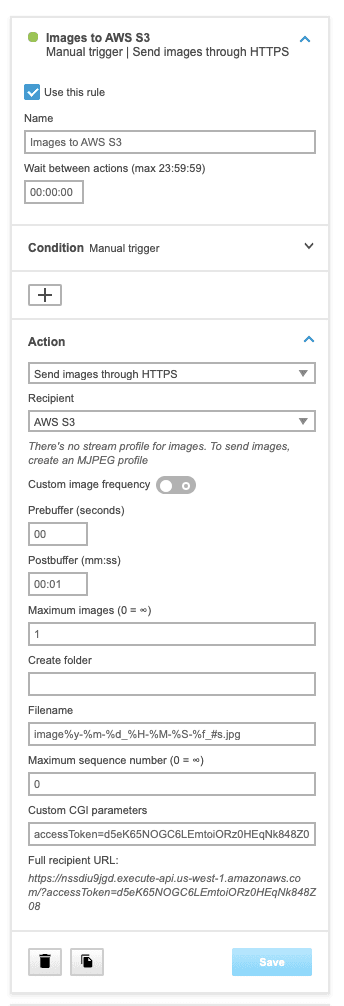

- On the Rules tab, choose the plus sign to add a condition for when to send an image to Amazon S3.

- Choose Manual trigger to manually trigger an upload of a limited number of images to Amazon S3.

- For Postbuffer, enter 00:01.

- For Maximum images, enter 1.

If you need to take images and upload them to Amazon S3 automatically at regular intervals, you can use the pulse type condition. In this case, you can specify a pulse interval like every minute or every second. For more information about how to capture an image snapshot, see AXIS OS Portal User manual.

- For Custom CGI parameters, enter the AccessToken value.

- Choose Save.

Test the setup

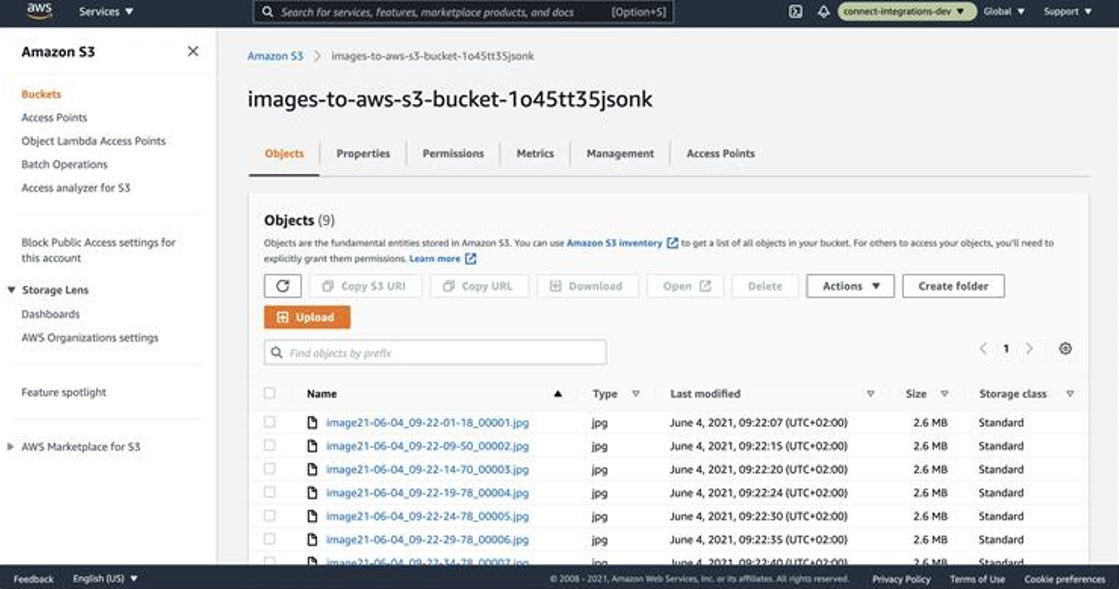

After you send some images (manually or automatically) from the camera, you can open the Amazon S3 console to verify that the images are uploaded correctly.
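As an alternative to the console check, the upload can be verified with a short script. The following is a sketch that assumes boto3 and valid credentials; the function name and the bucket name in the usage comment are placeholders.

```python
def list_uploaded_images(s3_client, bucket: str, max_keys: int = 20) -> list:
    """Return up to max_keys object keys from the bucket, newest first.

    Note: S3 returns keys in lexicographic order, so the sort below only
    reorders the keys contained in this single page of results.
    """
    response = s3_client.list_objects_v2(Bucket=bucket, MaxKeys=max_keys)
    objects = response.get("Contents", [])  # "Contents" is absent for an empty bucket
    newest_first = sorted(objects, key=lambda obj: obj["LastModified"], reverse=True)
    return [obj["Key"] for obj in newest_first]


# Example usage (bucket name is a placeholder):
#   import boto3
#   for key in list_uploaded_images(boto3.client("s3"), "your-snapshot-bucket"):
#       print(key)
```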

Analyze frames with Amazon Rekognition

You can now call Amazon Rekognition APIs to analyze the frames you stored in Amazon S3 by triggering an AWS Lambda function that invokes the Amazon Rekognition API you need. The following instructions show how to create a Lambda function in Python that calls Amazon Rekognition DetectLabels.

Step 1: Create an AWS Lambda function (console)

- Sign in to the AWS Management Console and open the AWS Lambda console at https://console.aws.amazon.com/lambda/.

- Choose Create function. For more information, see Create a Lambda Function with the Console.

- Choose the following options.

- Choose Author from scratch.

- Enter a value for Function name.

- For Runtime, choose Python (version 3.7 to 3.9).

- For Choose or create an execution role, choose Create a new role with basic Lambda permissions.

- Choose Create function to create the AWS Lambda function.

Step 2: Attach Amazon Rekognition and Amazon S3 permissions to AWS Lambda created role

- Open the IAM console at https://console.aws.amazon.com/iam/.

- From the navigation pane, choose Roles.

- From the resources list, choose the IAM role that AWS Lambda created for you. The role name is prepended with the name of your Lambda function.

- In the Permissions tab, choose Attach policies.

- Add the AmazonRekognitionFullAccess and AmazonS3ReadOnlyAccess policies.

- Choose Attach Policy.
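The same two managed policies can also be attached with the IAM API instead of the console. The sketch below assumes boto3; the function name and the role name in the usage comment are hypothetical.

```python
# AWS managed policy ARNs for the two policies named in the steps above.
REKOGNITION_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonRekognitionFullAccess"
S3_READ_POLICY_ARN = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"


def attach_analysis_policies(iam_client, role_name: str) -> list:
    """Attach the Rekognition and S3 read-only managed policies to a role."""
    attached = []
    for policy_arn in (REKOGNITION_POLICY_ARN, S3_READ_POLICY_ARN):
        iam_client.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
        attached.append(policy_arn)
    return attached


# Example usage (role name is a placeholder):
#   import boto3
#   attach_analysis_policies(boto3.client("iam"), "your-lambda-execution-role")
```

These managed policies are broad. For production use, a custom policy limited to the `rekognition:DetectLabels` action and read access to the single snapshot bucket is a narrower option.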

Step 3: Add an Amazon S3 trigger to the AWS Lambda function

- In AWS Lambda, choose your function name.

- In the Function overview panel, choose + Add trigger.

- Select S3 trigger, then select the bucket where the Axis camera is storing the camera frames (in our example, images-to-aws-s3-bucket-1o45tt35jsonk).

- For Event type, choose PUT.

- Flag the Recursive invocation acknowledgment and then click on Add.
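For reference, the trigger created in the console corresponds roughly to a bucket notification configuration like the one sketched below. This is an illustration under assumptions: the helper names are hypothetical, and boto3 is assumed for the actual call.

```python
def build_put_notification(lambda_function_arn: str) -> dict:
    """Build an S3 notification configuration that fires on PUT uploads."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_function_arn,
                "Events": ["s3:ObjectCreated:Put"],
            }
        ]
    }


def add_put_trigger(s3_client, bucket: str, lambda_function_arn: str) -> None:
    """Apply the configuration; this replaces any existing bucket notifications."""
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_put_notification(lambda_function_arn),
    )
```

When you add the trigger in the console, the permission that lets Amazon S3 invoke the function is created for you. With the API, that permission has to be granted separately (for example, with the Lambda `add_permission` call) before the notification configuration is accepted.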

Step 4: Add Python code in AWS Lambda console

- On the AWS Lambda function page, choose the Code tab.

- In the function code editor, add the following to the function’s .py file. This function processes each frame just after the upload from the Axis camera, because of the trigger on the PUT event we configured in step 3.

- Choose Save to save your Lambda function.
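A function of the kind described in this step could look like the following sketch. It is not the original code from this post: the helper names, the `MaxLabels` and `MinConfidence` values, and the shape of the returned result are assumptions chosen for illustration. It reads the bucket and object key from each S3 event record and passes them to the Amazon Rekognition DetectLabels API.

```python
import json
import urllib.parse

_rekognition = None


def _get_rekognition_client():
    """Create the boto3 client on first use and reuse it across invocations."""
    global _rekognition
    if _rekognition is None:
        import boto3  # included in the Lambda Python runtime

        _rekognition = boto3.client("rekognition")
    return _rekognition


def detect_labels_for_record(record, client, max_labels=10, min_confidence=80.0):
    """Call DetectLabels for the S3 object referenced by one event record."""
    bucket = record["s3"]["bucket"]["name"]
    # Object keys arrive URL-encoded in S3 event notifications.
    key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
    response = client.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=max_labels,        # assumed value, tune for your use case
        MinConfidence=min_confidence,  # assumed value, tune for your use case
    )
    labels = [
        {"name": label["Name"], "confidence": label["Confidence"]}
        for label in response["Labels"]
    ]
    return {"bucket": bucket, "key": key, "labels": labels}


def lambda_handler(event, context):
    """Entry point: analyze every uploaded frame listed in the S3 event."""
    client = _get_rekognition_client()
    results = [
        detect_labels_for_record(record, client)
        for record in event.get("Records", [])
    ]
    print(json.dumps(results))  # visible in the function's CloudWatch logs
    return results
```

Passing the client into `detect_labels_for_record` keeps the Rekognition call separate from the Lambda entry point, so the parsing logic can be tried locally with a stub client.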

Step 5: Test your AWS Lambda function (console)

- Choose Test.

- Choose s3-put as Event template.

- Enter a value for Event name.

- Change the bucket name and object key in the test JSON request by providing the bucket name and the name of a frame you already have in Amazon S3.

- Choose Create.

- Choose Test. The Lambda function is invoked. The output is displayed in the Execution results pane of the code editor. The output is a list of labels found by Amazon Rekognition on the given frame.
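The fields you edit in the test event are the bucket name and the object key. As a rough guide, a trimmed version of the s3-put event can be generated as shown below; the console template contains additional fields that are omitted here, and the helper name and example values are placeholders.

```python
import json


def make_s3_put_test_event(bucket: str, key: str) -> dict:
    """Build a minimal S3 PUT event with only the fields a handler typically reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket, "arn": f"arn:aws:s3:::{bucket}"},
                    "object": {"key": key},
                },
            }
        ]
    }


def to_test_json(bucket: str, key: str) -> str:
    """Serialize the event so it can be pasted into the Lambda test dialog."""
    return json.dumps(make_s3_put_test_event(bucket, key), indent=2)


# Example usage (placeholder values):
#   print(to_test_json("your-snapshot-bucket", "your-frame.jpg"))
```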

Conclusion

Getting started with Axis Communications and Amazon Rekognition is easy. Integrators are a critical delivery mechanism for this technology, and its countless integrations provide opportunities to offer expanding services to new and existing customers. The application has been released to the public via GitHub for integrators to review. You can find a code sample on how to send images to S3 below, or click on this GitHub link to get started now.

Please go to this link to find out more information on how to get started with Amazon Rekognition. Additionally, details on Amazon Rekognition pricing can be found here. Amazon Rekognition image APIs come with a free tier that lasts 12 months and allows you to analyze 5,000 images per month and store 1,000 pieces of face metadata per month.

If you are interested in becoming a partner, please visit the channel network at Axis Communications and the partner network at AWS.

About the Author

Oliver Myers is the Principal WW Business Development Manager for Amazon Rekognition (an AI service that allows customers to extract visual metadata from images and videos) at AWS. In this role he focuses on helping customers implement computer vision into their business workflows across industries.
