Perform audio redaction for personally identifiable information with Amazon Transcribe

Amazon Transcribe is an automatic speech recognition (ASR) service that makes it easy to add speech-to-text capabilities to your applications. Raw speech or audio data is difficult for computers to search and analyze directly, so recorded speech usually needs to be converted to text before applications can use it. Automatic content redaction is a feature of Amazon Transcribe that can automatically remove sensitive personally identifiable information (PII) from your transcription results.

A popular content redaction use case is the automatic transcription of customer calls (such as in call centers and telemarketing). To build datasets for downstream analytics and natural language processing (NLP) tasks, such as sentiment analysis, you may need to remove all PII to protect privacy and comply with local laws and regulations. This post follows up on a previous post about redacting PII in Amazon Transcribe and demonstrates an approach for redacting PII from both a text transcription and its source audio file.

Solution overview

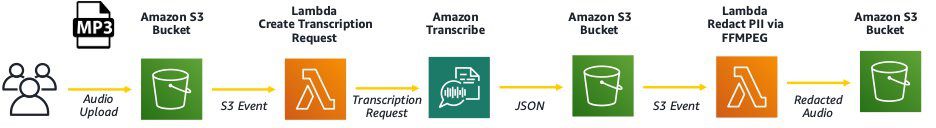

The following figure shows an example architecture for performing PII audio redaction, using Amazon Simple Storage Service (Amazon S3) and AWS Lambda. Additionally, we use the AWS SDK for Python (Boto3) for the Lambda functions.

For this post, we provide an AWS CloudFormation audio redaction template that contains the full implementation details and enables repeatable deployments. If you use the template, you must specify the name of the input S3 bucket where the audio files are uploaded and the name of the output S3 bucket where the created artifacts are saved.
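If you prefer to deploy the template programmatically rather than through the console, the following minimal sketch uses the Boto3 CloudFormation client. The parameter keys `InputBucketName` and `OutputBucketName` are hypothetical placeholders, so check the template for its actual parameter names, and the `CAPABILITY_IAM` acknowledgment assumes the template creates IAM roles for its Lambda functions.

```python
def stack_parameters(input_bucket: str, output_bucket: str) -> list:
    """Build the Parameters list for CloudFormation CreateStack."""
    # Hypothetical parameter keys: the real template may use different names.
    return [
        {"ParameterKey": "InputBucketName", "ParameterValue": input_bucket},
        {"ParameterKey": "OutputBucketName", "ParameterValue": output_bucket},
    ]


def deploy_stack(stack_name: str, template_url: str,
                 input_bucket: str, output_bucket: str) -> str:
    """Create the stack, wait for it to finish, and return its stack ID."""
    import boto3  # imported here so stack_parameters works without the AWS SDK

    cfn = boto3.client("cloudformation")
    response = cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=stack_parameters(input_bucket, output_bucket),
        # Assumes the template creates IAM roles for its Lambda functions.
        Capabilities=["CAPABILITY_IAM"],
    )
    # The waiter polls the stack status and raises if creation fails.
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]
```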

Redact PII from a text transcription

When content redaction is enabled for a transcription job, Amazon Transcribe can natively identify and redact sensitive PII from the text transcription output for supported languages. Supported PII entities include the following:

- Bank account number

- Bank routing number

- Credit or debit card number

- Credit or debit card CVV code

- Credit or debit card expiration date

- Credit or debit card PIN

- Email address

- US mailing address

- Name

- US phone number

- Social Security number

Detected PII entities are replaced with a [PII] tag in the transcribed text. For each entity, Amazon Transcribe also provides a redaction confidence score (instead of the usual ASR confidence score) along with start and end timestamps. These timestamps let you locate the PII in the original audio source file so that you can redact it there as well.

Redact PII from an audio file

As shown in the architecture, the workflow starts when an audio file is uploaded to the input S3 bucket. To try it out, upload the sample MP3 file, which contains simulated personal information, either through the AWS Management Console or with the AWS Command Line Interface (AWS CLI). You can also use lossless audio formats such as FLAC or WAV, which can improve transcription accuracy.
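As a programmatic alternative to the console or the AWS CLI, the following minimal sketch uploads a local audio file with Boto3. The function names, the optional key prefix, and the extension check are illustrative assumptions; the check admits only the formats mentioned in this post, although Amazon Transcribe accepts others as well.

```python
import os

# Formats mentioned in this post; Amazon Transcribe accepts other formats too.
ALLOWED_EXTENSIONS = {".mp3", ".flac", ".wav"}


def object_key(local_path: str, prefix: str = "") -> str:
    """Derive the S3 object key for an audio file, rejecting unexpected formats."""
    name = os.path.basename(local_path)
    ext = os.path.splitext(name)[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise ValueError(f"unsupported audio format: {ext or '(none)'}")
    return prefix + name


def upload_audio(local_path: str, input_bucket: str, prefix: str = "") -> str:
    """Upload the file to the input bucket and return its S3 URI."""
    import boto3  # imported here so object_key works without the AWS SDK

    key = object_key(local_path, prefix)
    boto3.client("s3").upload_file(local_path, input_bucket, key)
    return f"s3://{input_bucket}/{key}"
```

In the architecture described here, the upload itself is what starts the pipeline, so no further call is needed after `upload_audio` returns.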

The next step is to transcribe the source audio file using the StartTranscriptionJob API. The following is a snippet of the Lambda function to create the Amazon Transcribe job:

Full details on the Lambda function can be found in the CloudFormation template.
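For illustration, the following minimal sketch shows how a Lambda function triggered by an S3 upload could call StartTranscriptionJob with PII redaction enabled. It is not the template's code: the `OUTPUT_BUCKET` environment variable, the helper names, and the job-naming scheme are hypothetical, and the media format is assumed to match the file extension.

```python
import os
import re
import urllib.parse
import uuid


def build_transcription_request(bucket: str, key: str,
                                output_bucket: str, job_name: str) -> dict:
    """Build keyword arguments for StartTranscriptionJob with PII redaction."""
    # Assumes the file extension names the media format (mp3, flac, wav, ...).
    media_format = key.rsplit(".", 1)[-1].lower()
    return {
        "TranscriptionJobName": job_name,
        "LanguageCode": "en-US",  # this post lists content redaction for US English
        "Media": {"MediaFileUri": f"s3://{bucket}/{key}"},
        "MediaFormat": media_format,
        "OutputBucketName": output_bucket,
        "ContentRedaction": {
            "RedactionType": "PII",
            # "redacted" writes only the redacted transcript;
            # "redacted_and_unredacted" writes both versions.
            "RedactionOutput": "redacted_and_unredacted",
        },
    }


def job_name_for(key: str, suffix: str) -> str:
    """Hypothetical naming scheme: file name plus a unique suffix."""
    # Job names may contain only letters, digits, '.', '_' and '-'.
    base = re.sub(r"[^0-9a-zA-Z._-]", "-", key.rsplit("/", 1)[-1])
    return f"{base}-{suffix}"


def lambda_handler(event, context):
    import boto3  # provided by the Lambda Python runtime

    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    # Object keys in S3 event notifications are URL-encoded.
    key = urllib.parse.unquote_plus(record["object"]["key"])
    request = build_transcription_request(
        bucket, key, os.environ["OUTPUT_BUCKET"],
        job_name_for(key, uuid.uuid4().hex[:8]),
    )
    boto3.client("transcribe").start_transcription_job(**request)
    return {"TranscriptionJobName": request["TranscriptionJobName"]}
```

Setting `RedactionOutput` to `"redacted"` instead would produce only the redacted transcript.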

The transcription is a JSON document containing detailed information about each word. The full transcript portion of the JSON document is shown in the following code:

For each transcription job with automatic content redaction enabled, you can generate either the redacted transcript only or both the redacted and unredacted transcripts (see the ContentRedaction settings in the Lambda function code snippet). Both transcripts are stored in the output S3 bucket you specify, or in the default S3 bucket managed by the service. Because the redacted and unredacted versions are stored separately, you can control who can access each one through user-defined permission groups, which adds a further layer of protection for sensitive customer information.
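To work with a transcript stored in the output bucket, a minimal sketch such as the following could download the JSON document and extract the full transcript text. It assumes the documented output layout, in which `results.transcripts` holds the complete text and `results.items` holds the per-word details; the function names are illustrative, and the bucket and key are whatever your job wrote.

```python
import json


def full_transcript(doc: dict) -> str:
    """Return the full transcript text from a parsed Amazon Transcribe output document."""
    # results.transcripts holds the complete text; results.items holds per-word details.
    return " ".join(t["transcript"] for t in doc["results"]["transcripts"])


def load_transcript(bucket: str, key: str) -> dict:
    """Download and parse a transcript JSON object from S3."""
    import boto3  # imported here so full_transcript works without the AWS SDK

    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()
    return json.loads(body)
```

For a redacted transcript, `full_transcript(load_transcript(bucket, key))` returns the text with each detected entity replaced by the [PII] tag.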

As expected, the PII has been redacted from the text transcript and replaced with the [PII] tag. Upon delivery of the Amazon Transcribe JSON file to an S3 bucket, a second Lambda function is triggered, which identifies the timestamps of the PII entities (see the following code):
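The following minimal sketch shows one way such a second Lambda function could collect the PII timestamps. It is an illustration rather than the template's code, and it assumes the function is triggered by an S3 event for the transcript JSON and that each redacted item in `results.items` has the content `[PII]` with string-valued `start_time` and `end_time` fields.

```python
import json
import urllib.parse


def pii_segments(doc: dict) -> list:
    """Return (start_time, end_time) pairs in seconds for every redacted item."""
    segments = []
    for item in doc["results"]["items"]:
        # Punctuation items carry no timestamps, so only pronunciation items qualify.
        if item.get("type") != "pronunciation":
            continue
        if item["alternatives"][0]["content"] == "[PII]":
            # Timestamps are stored as strings in the JSON output.
            segments.append((float(item["start_time"]), float(item["end_time"])))
    return segments


def lambda_handler(event, context):
    import boto3  # provided by the Lambda Python runtime

    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = urllib.parse.unquote_plus(record["object"]["key"])
    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()
    return {"segments": pii_segments(json.loads(body))}
```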

After the start_time and end_time of each PII entity have been extracted, we use FFmpeg to suppress the audio volume during the identified PII time segments. FFmpeg is a free and open-source software project consisting of a large suite of libraries and programs for handling video, audio, and other multimedia files and streams.
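One way to suppress the audio is FFmpeg's `volume` filter combined with its timeline `enable` option, which applies the filter only while an expression is nonzero. The helper below is a sketch of that approach (the name `mute_filter` is illustrative, and the template's actual command may differ); it takes `(start_time, end_time)` pairs in seconds and builds a single `-af` filter string that covers every segment.

```python
def mute_filter(segments) -> str:
    """Build an FFmpeg -af filter that silences audio during each (start, end) segment."""
    if not segments:
        raise ValueError("no PII segments to redact")
    # between(t,a,b) is 1 while playback time t lies in [a, b]; summing the terms
    # gives a nonzero value inside any segment, which enables the volume filter there.
    expr = "+".join(f"between(t,{start},{end})" for start, end in segments)
    # Single quotes keep the commas in the expression from being read as
    # filtergraph separators.
    return f"volume=enable='{expr}':volume=0"
```

For example, `mute_filter([(1.1, 1.48), (5.0, 6.25)])` produces `volume=enable='between(t,1.1,1.48)+between(t,5.0,6.25)':volume=0`.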

In this post, we describe the FFmpeg command format along with the actual command for the sample audio file. An implementation is also included in the Lambda function in the CloudFormation template. The start_time and end_time of each PII entity in the JSON output file produced by Amazon Transcribe are passed as parameters to the FFmpeg executable. Note that you can pass all the PII time ranges in a single command; the sample audio contains seven PII entities.
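To apply such a filter, the Lambda function can invoke the FFmpeg executable as a subprocess. In this sketch, `ffmpeg_command` and `redact_audio` are hypothetical helper names, the filter string is assumed to be built from the PII timestamps (for example, `volume=enable='between(t,1.1,1.48)':volume=0`), and the `ffmpeg_bin` default assumes the binary is on the `PATH`. In Lambda, FFmpeg typically comes from a layer or a bundled binary, so its location is an assumption.

```python
import subprocess


def ffmpeg_command(input_path: str, output_path: str, audio_filter: str,
                   ffmpeg_bin: str = "ffmpeg") -> list:
    """Assemble the FFmpeg argument list for muting the PII segments."""
    # -y overwrites an existing output file; -af applies the audio filter.
    # Passing a list (no shell) means the filter string needs no shell quoting.
    return [ffmpeg_bin, "-y", "-i", input_path, "-af", audio_filter, output_path]


def redact_audio(input_path: str, output_path: str, audio_filter: str,
                 ffmpeg_bin: str = "ffmpeg") -> None:
    """Run FFmpeg; raises CalledProcessError if it exits with a nonzero status."""
    cmd = ffmpeg_command(input_path, output_path, audio_filter, ffmpeg_bin)
    subprocess.run(cmd, check=True)
```

Because all time ranges go into one filter expression, a single FFmpeg run produces the redacted file instead of one pass per PII entity. In Lambda, both paths would normally be under `/tmp`, the only writable directory.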

We use the following command format:

The following code is the command used for the sample audio:

You can listen to the final redacted version of the sample MP3 file.

Conclusion

This post demonstrated how to redact PII from both text transcriptions and source audio files. The Amazon Transcribe content redaction feature is available for US English in the following Regions:

- US East (N. Virginia), US East (Ohio), US West (N. California), US West (Oregon)

- Asia Pacific (Hong Kong), Asia Pacific (Mumbai), Asia Pacific (Seoul), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Tokyo)

- Canada (Central)

- Europe (Frankfurt), Europe (Ireland), Europe (London), Europe (Paris)

- Middle East (Bahrain)

- South America (São Paulo)

- AWS GovCloud (US-West)

Take a look at the pricing page, give the feature a try, and send us feedback either in the AWS forum for Amazon Transcribe or through your usual AWS support contacts.

About the Authors

Erwin Gilmore is a Senior Specialist Technical Account Manager in Artificial Intelligence and Machine Learning at Amazon Web Services. He provides technical guidance and helps customers accelerate their ability to innovate by showing the art of the possible on AWS. In his spare time, he enjoys spending time with his family, hiking, and traveling.

Esther Lee is a Product Manager for AWS Language AI Services. She is passionate about the intersection of technology and education. Out of the office, Esther enjoys long walks along the beach, dinners with friends and friendly rounds of Mahjong.
