Extract custom entities from documents in their native format with Amazon Comprehend

Industries such as finance, mortgage, and insurance face the challenge of extracting information from documents and acting on it to drive business processes. Intelligent document processing (IDP) helps extract information locked within documents that is important to business operations. Customers are always looking for new ways to use artificial intelligence (AI) to build IDP solutions that quickly and accurately process diverse document types in all the layouts they come in. Although many people think document processing is a simple task, companies with millions of documents know there is no simple way to do it. Beyond varying layouts and formats, manual document processing poses other challenges: it’s time-consuming, error-prone, and expensive.

Amazon Comprehend provides features such as personally identifiable information (PII) redaction, entity extraction, document classification, and sentiment analysis, which help you find insights within unstructured text such as email, dense paragraphs, or social media feeds. Additionally, Amazon Comprehend Custom lets you build custom entity extraction and document classification models specific to your business or domain. One pain point we heard from customers is that converting other document formats, such as PDF, into plain text before using Amazon Comprehend is challenging and time-consuming.

Starting today, you can use custom entity recognition in Amazon Comprehend on more document types without converting files to plain text. Amazon Comprehend can now process varying document layouts, such as dense text and lists or bullets, in PDF and Word documents while extracting entities (specific words or phrases) from them. Previously, you could only use Amazon Comprehend on plain text documents, which required you to flatten your documents into machine-readable text. You can now use natural language processing (NLP) to extract custom entities from PDF, Word, and plain text documents using the same API, with less document preprocessing. You need only 250 documents and 100 annotations per entity type to train a model and get started. To extract text and the spatial locations of text from scanned PDF documents, Amazon Comprehend calls Amazon Textract on your behalf before performing custom entity recognition. You are billed separately for the Amazon Textract calls; for details, see Amazon Textract pricing.
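As a quick pre-flight check before training, the minimums above can be expressed as a small validation helper. This is a hypothetical sketch, not part of any AWS SDK: `check_training_minimums` and its inputs (a document count and per-entity-type annotation counts that you compute yourself) are assumptions, and the thresholds simply restate the numbers in this post, so confirm the current limits in the Amazon Comprehend documentation.

```python
from typing import Dict, List

# Minimums quoted in this post (assumption: confirm current values in the
# Amazon Comprehend documentation before relying on them).
MIN_DOCUMENTS = 250
MIN_ANNOTATIONS_PER_TYPE = 100


def check_training_minimums(num_documents: int,
                            annotations_per_type: Dict[str, int]) -> List[str]:
    """Return a list of problems; an empty list means the minimums are met."""
    problems: List[str] = []
    if num_documents < MIN_DOCUMENTS:
        problems.append(
            f"need at least {MIN_DOCUMENTS} documents, found {num_documents}")
    # Sort so the report order is stable regardless of dict insertion order.
    for entity_type, count in sorted(annotations_per_type.items()):
        if count < MIN_ANNOTATIONS_PER_TYPE:
            problems.append(
                f"{entity_type}: need at least {MIN_ANNOTATIONS_PER_TYPE} "
                f"annotations, found {count}")
    return problems


# Hypothetical counts for two illustrative entity types.
print(check_training_minimums(300, {"OFFERING_PRICE": 140, "UNDERWRITER": 80}))
# → ['UNDERWRITER: need at least 100 annotations, found 80']
```

Running this check before starting a training job catches an under-annotated entity type early, instead of after a failed or weak training run.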

This feature can help with document processing workflows in business verticals such as insurance, mortgage, and finance. The variety of document layouts and formats across these verticals makes it challenging to extract just the information you need, because you might not need every data point on the page. With this new feature, you can use machine learning to extract custom entities with a single model and API call. For example, you can process automotive or health insurance claims and extract entities such as the claim amount, co-pay amount, or primary and dependent names. You can also apply this solution to mortgage applications and other financial documents to extract entities such as the applicant name, co-signer, or down payment amount. Finally, in financial services, you can process documents such as SEC filings and extract entities such as proxy proposals, earnings reports, or the names of board directors. You can use these extracted entities in downstream applications such as enhanced semantic search, knowledge graph generation, and personalized content recommendation.

This post gives an overview of the capabilities of Amazon Comprehend custom entity recognition by showing how to train a model, evaluate model performance, and perform document inference. As an example, we use documents from the financial domain (SEC S-3 filings and prospectus filings).

- The SEC dataset is available for download at s3://aws-ml-blog/artifacts/custom-document-annotation-comprehend/sources/.

- The annotations are available at s3://aws-ml-blog/artifacts/custom-document-annotation-comprehend/annotations/.

- The manifest file to use for training a model is available at s3://aws-ml-blog/artifacts/custom-document-annotation-comprehend/manifests/output.manifest.

Note: You can use this output.manifest directly for training, or you can change its source reference and annotation reference to point to your own S3 bucket before training the model.
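If you copy the sources and annotations into your own bucket, the manifest’s S3 references have to be updated to match. The following is a minimal sketch, assuming the manifest is JSON Lines (one JSON object per line, as Ground Truth produces) and that you have already downloaded it locally. Rather than assuming specific key names, the hypothetical `rewrite_manifest` helper rewrites every string value that starts with the old S3 prefix, which covers both the source reference and the annotation reference.

```python
import json
from typing import Any, Iterable, Iterator


def repoint_s3(value: Any, old_prefix: str, new_prefix: str) -> Any:
    """Recursively swap old_prefix for new_prefix in strings that start with it."""
    if isinstance(value, str):
        if value.startswith(old_prefix):
            return new_prefix + value[len(old_prefix):]
        return value
    if isinstance(value, dict):
        # Only values are rewritten; keys stay as they are.
        return {k: repoint_s3(v, old_prefix, new_prefix) for k, v in value.items()}
    if isinstance(value, list):
        return [repoint_s3(v, old_prefix, new_prefix) for v in value]
    return value  # numbers, booleans, and None pass through unchanged


def rewrite_manifest(lines: Iterable[str], old_prefix: str,
                     new_prefix: str) -> Iterator[str]:
    """Yield JSON Lines manifest records with S3 URIs moved to new_prefix."""
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        yield json.dumps(repoint_s3(record, old_prefix, new_prefix))
```

For example, iterate over the downloaded output.manifest with the old prefix s3://aws-ml-blog/artifacts/custom-document-annotation-comprehend/ and a prefix in your own bucket, write each yielded line plus a newline to a new manifest file, and upload it alongside the copied sources and annotations.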

Amazon Comprehend custom entity recognition model overview

The new Amazon Comprehend custom entity recognition model uses the structural context of text (its placement within a table or page) combined with natural language context to extract custom entities from anywhere in a document, including dense text, numbered lists, and bullets. This combination also lets the model extract discontiguous entities, whose parts aren’t in the same span of text (for example, entities nested within a table with multiple rows and columns).

Train an Amazon Comprehend custom entity recognition model via the console

In the previous post, we showed you how to annotate finance documents via Amazon SageMaker Ground Truth using the custom annotation template provided by Amazon Comprehend. The output of that annotation job was a manifest file that you can use to train an Amazon Comprehend custom entity recognition model within a few minutes. To do so via the Amazon Comprehend console, complete the following steps:

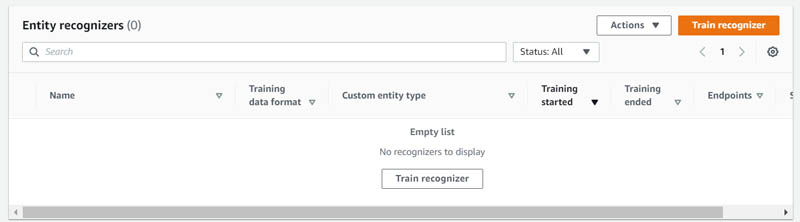

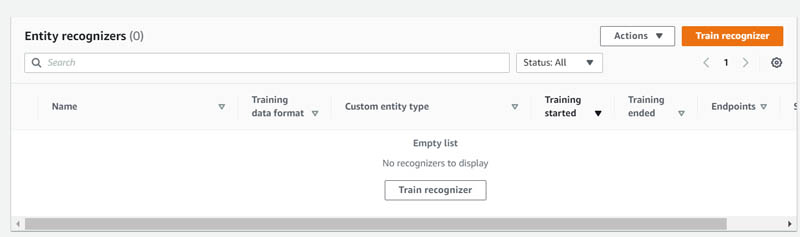

- On the Amazon Comprehend console, choose Custom entity recognition in the navigation pane.

- Choose Train recognizer.

- On the next screen, name your recognizer model, select a language (English is currently the only language supported for custom entity recognition models used on PDF and Word documents), and add the custom entity types for your model to train on.

Each entity type must match one of the types in the annotations or entity list you created when annotating your documents.

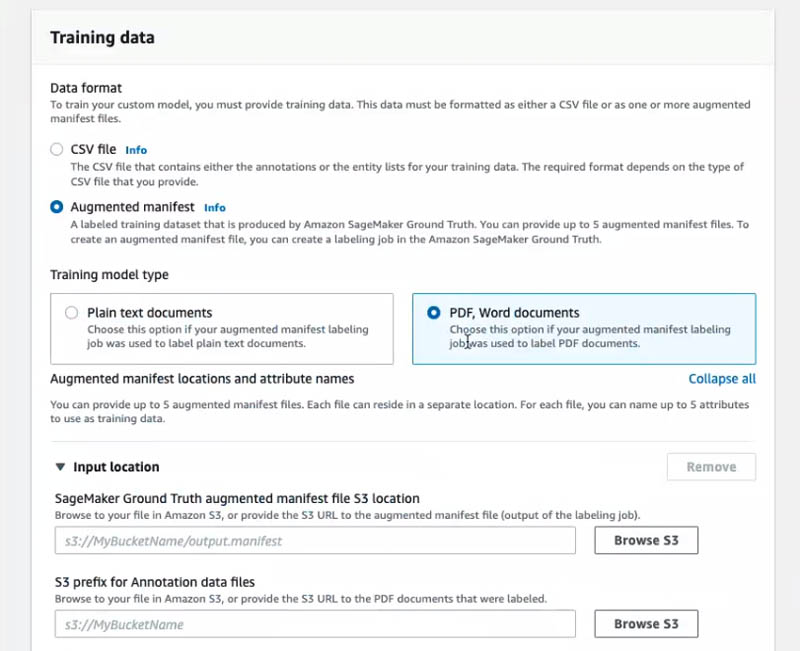

- Under Training data, select Augmented manifest to reference the manifest that was created when annotating your documents.

- Select PDF, Word documents to signify what types of documents you’re using for training and inference.

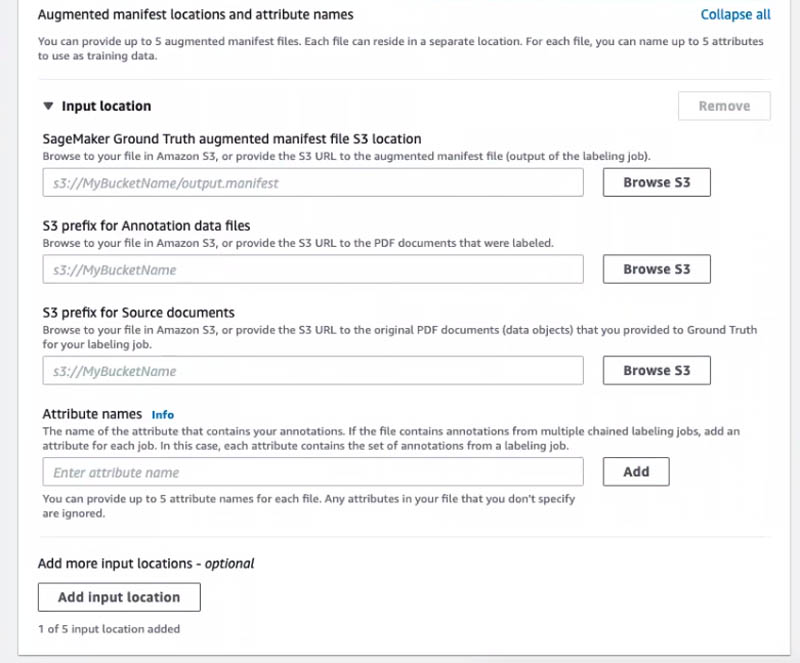

- Under Input location, add the Amazon Simple Storage Service (Amazon S3) location for each of the following:

  - Ground Truth augmented manifest file

  - Annotation data files

  - Source PDF documents that were used for annotation

- Optionally, add an attribute name, which identifies the set of annotations to use and lets you distinguish the labels produced by multiple chained labeling jobs.

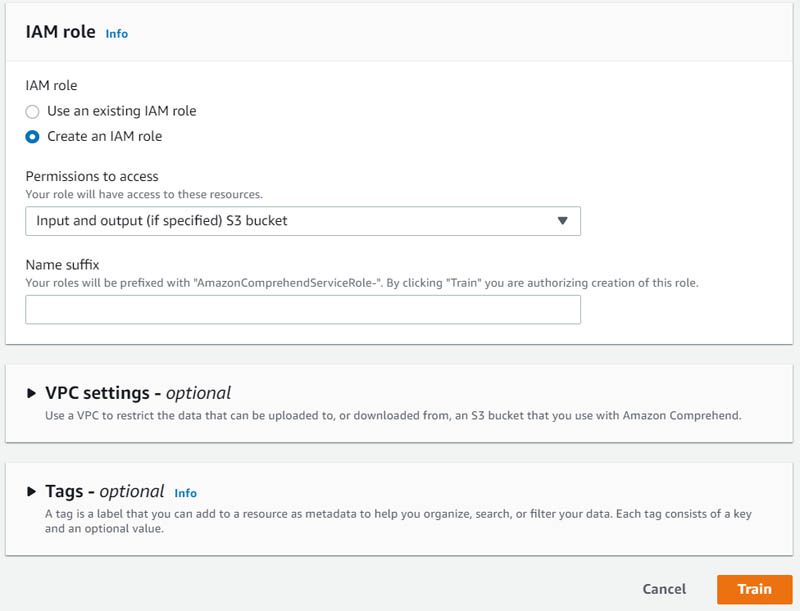

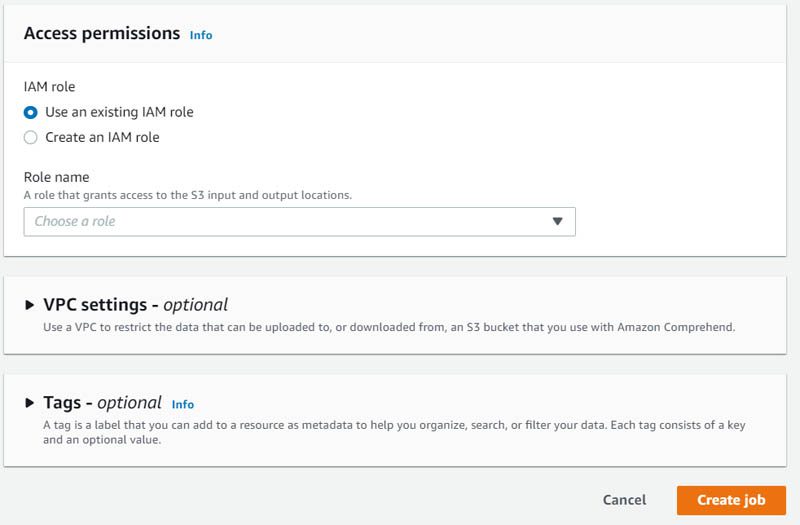

- Configure your AWS Identity and Access Management (IAM) role, using an existing IAM role or creating a new one.

- Optionally, configure VPC settings and tags.

For more information about VPCs, see Protect Jobs by Using an Amazon Virtual Private Cloud; for tagging, see Tagging Custom Entity Recognizers.

- Choose Train to train your recognizer model.
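The same training configuration can also be submitted programmatically. The sketch below assumes the AWS SDK for Python (Boto3) and its Comprehend `create_entity_recognizer` operation; the helper names, recognizer name, role ARN, entity types, and S3 URIs are hypothetical placeholders. Each field mirrors a console step: `AUGMENTED_MANIFEST` is the Augmented manifest option, `SEMI_STRUCTURED_DOCUMENT` corresponds to PDF, Word documents, and `AttributeNames` holds the label attribute name from your Ground Truth job.

```python
from typing import Any, Dict, List


def build_recognizer_request(
    recognizer_name: str,
    role_arn: str,
    entity_types: List[str],
    manifest_s3_uri: str,
    annotations_s3_uri: str,
    sources_s3_uri: str,
    attribute_names: List[str],
    language_code: str = "en",
) -> Dict[str, Any]:
    """Keyword arguments for CreateEntityRecognizer (augmented manifest, PDF/Word)."""
    return {
        "RecognizerName": recognizer_name,
        "DataAccessRoleArn": role_arn,  # IAM role Comprehend assumes to read S3
        "LanguageCode": language_code,  # English only for PDF/Word models
        "InputDataConfig": {
            "DataFormat": "AUGMENTED_MANIFEST",
            # Must match the entity types used during annotation.
            "EntityTypes": [{"Type": t} for t in entity_types],
            "AugmentedManifests": [
                {
                    "S3Uri": manifest_s3_uri,  # Ground Truth augmented manifest
                    "AttributeNames": attribute_names,  # label attribute(s)
                    "AnnotationDataS3Uri": annotations_s3_uri,  # annotation files
                    "SourceDocumentsS3Uri": sources_s3_uri,  # source PDFs
                    "DocumentType": "SEMI_STRUCTURED_DOCUMENT",  # PDF, Word
                }
            ],
        },
    }


def start_training(client: Any, request: Dict[str, Any]) -> str:
    """Submit the request with a Boto3 Comprehend client; return the recognizer ARN."""
    response = client.create_entity_recognizer(**request)
    return response["EntityRecognizerArn"]
```

For example, `start_training(boto3.client("comprehend"), request)` submits the job and returns the ARN you can use to check on it. Keeping the request builder separate from the call makes it easy to review or log the exact parameters before starting a training run.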

All recognizers appear in the dashboard along with their training status and training metrics.
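To follow training outside the console, you can poll the recognizer and read the same kind of metrics the dashboard shows. This sketch assumes a Boto3 Comprehend client and the `describe_entity_recognizer` response shape (`EntityRecognizerProperties`, with `Status` and `RecognizerMetadata.EvaluationMetrics`); `wait_for_recognizer` and `summarize_recognizer` are hypothetical helpers, and the 60-second polling interval is an arbitrary choice.

```python
import time
from typing import Any, Dict

# Statuses after which training will not progress further.
TERMINAL_STATES = {"TRAINED", "IN_ERROR", "STOPPED"}


def summarize_recognizer(properties: Dict[str, Any]) -> Dict[str, Any]:
    """Extract status and evaluation metrics from EntityRecognizerProperties."""
    metadata = properties.get("RecognizerMetadata", {})
    overall = metadata.get("EvaluationMetrics", {})
    return {
        "status": properties.get("Status"),
        "precision": overall.get("Precision"),
        "recall": overall.get("Recall"),
        "f1": overall.get("F1Score"),
        # Per-entity-type F1, when the service reports it.
        "f1_by_type": {
            item["Type"]: item.get("EvaluationMetrics", {}).get("F1Score")
            for item in metadata.get("EntityTypes", [])
        },
    }


def wait_for_recognizer(client: Any, recognizer_arn: str,
                        poll_seconds: float = 60) -> Dict[str, Any]:
    """Poll DescribeEntityRecognizer until training reaches a terminal status."""
    while True:
        response = client.describe_entity_recognizer(
            EntityRecognizerArn=recognizer_arn)
        properties = response["EntityRecognizerProperties"]
        if properties.get("Status") in TERMINAL_STATES:
            return summarize_recognizer(properties)
        time.sleep(poll_seconds)
```

Metrics are only present once training has finished, which is why every lookup in `summarize_recognizer` falls back to `None` instead of raising.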

Use your custom entity recognition model via the console

When you’re ready to start using your model, keep in mind the following:

- A custom entity recognition model trained on PDF documents can extract custom entities from PDF, Word, and plain text documents.

- Custom entity recognition models trained on PDF documents support only batch (asynchronous) processing.

To create a batch job using the Amazon Comprehend console, complete the following steps:

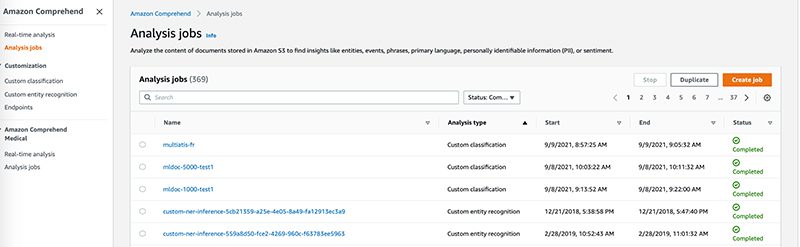

- Choose Analysis jobs in the navigation pane.

- Choose Create job.

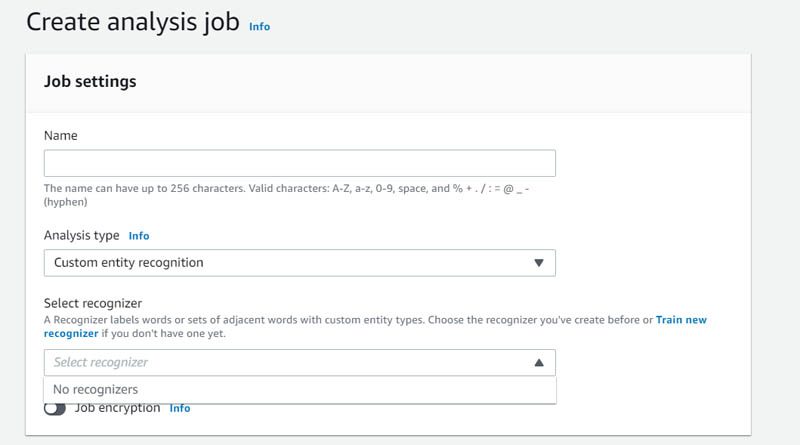

- For Name, enter a job name.

- For Analysis type, choose Custom entity recognition.

- For Select recognizer, choose the recognizer model you trained on PDF documents.

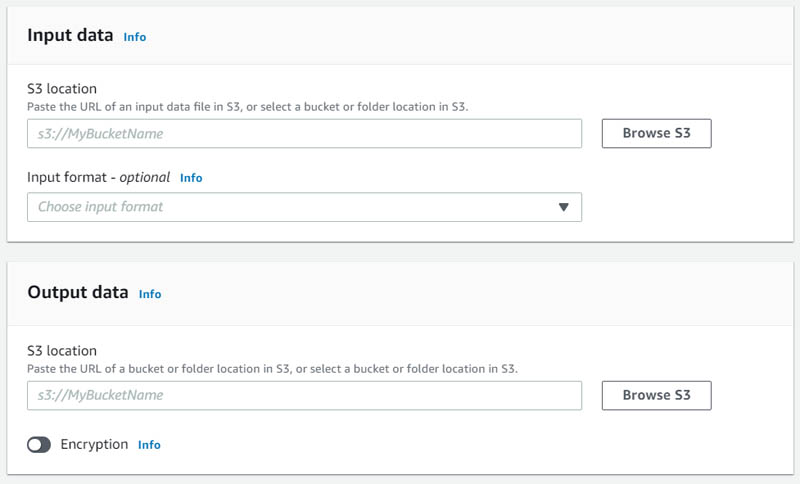

- Under Input data, enter the S3 location of the inference dataset you plan to process.

- Under Output data, enter the S3 location where you want the results written.

- Under Access permissions, you can configure your IAM role (using an existing role or creating a new one).

- Optionally, configure VPC settings and tags.

- Choose Create job to process your documents.
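The batch job can likewise be started with Boto3’s `start_entities_detection_job`. This is a minimal sketch with a hypothetical job name, ARNs, and S3 URIs: `ONE_DOC_PER_FILE` is used because each PDF or Word file is a single document, and `LanguageCode` defaults to `en` because English is the only supported language for these models. The helper names are illustrative, not part of the SDK.

```python
from typing import Any, Dict


def build_detection_job_request(job_name: str, recognizer_arn: str,
                                role_arn: str, input_s3_uri: str,
                                output_s3_uri: str,
                                language_code: str = "en") -> Dict[str, Any]:
    """Keyword arguments for StartEntitiesDetectionJob (asynchronous batch job)."""
    return {
        "JobName": job_name,
        "EntityRecognizerArn": recognizer_arn,  # recognizer trained on PDFs
        "DataAccessRoleArn": role_arn,  # role with access to input and output
        "LanguageCode": language_code,
        "InputDataConfig": {
            "S3Uri": input_s3_uri,  # inference dataset
            "InputFormat": "ONE_DOC_PER_FILE",  # each file is one document
        },
        "OutputDataConfig": {"S3Uri": output_s3_uri},  # where results land
    }


def start_detection_job(client: Any, request: Dict[str, Any]) -> str:
    """Start the job with a Boto3 Comprehend client and return its JobId."""
    response = client.start_entities_detection_job(**request)
    return response["JobId"]
```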

After your documents are processed, you can review the output in the S3 output location you specified. The output is in JSON format, with each line encoding all the extraction predictions for a single document. The output schema includes the entity text, the bounding boxes of detected entities, and their text offsets.
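Once the results are in S3 (and extracted from any archive the job writes), you can load them line by line. This is a sketch under stated assumptions: each line is treated as one document whose `Entities` list contains items with `Type`, `Text`, and `Score` keys, so check the field names against your own output before relying on it. The hypothetical `entities_by_type` helper groups entity texts by type and can drop low-confidence predictions.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable, List


def entities_by_type(lines: Iterable[str],
                     min_score: float = 0.0) -> List[Dict[str, List[str]]]:
    """For each JSON line (one document), map entity type -> entity texts.

    Assumes each record has an "Entities" list whose items carry "Type",
    "Text", and "Score" keys; verify against your own job output.
    """
    results: List[Dict[str, List[str]]] = []
    for line in lines:
        if not line.strip():
            continue  # ignore blank lines
        record = json.loads(line)
        grouped: Dict[str, List[str]] = defaultdict(list)
        for entity in record.get("Entities", []):
            # Entities without a score are kept (treated as fully confident).
            if entity.get("Score", 1.0) >= min_score:
                grouped[entity["Type"]].append(entity["Text"])
        results.append(dict(grouped))
    return results
```

Filtering on `min_score` is a simple way to trade recall for precision when the extracted entities feed an automated downstream step.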

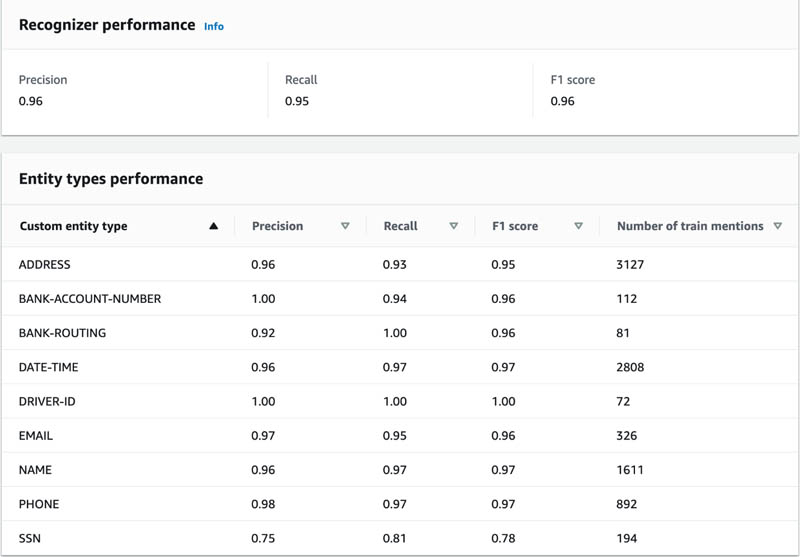

Custom entity recognition model performance

Previously, Amazon Comprehend custom entity recognition models used only natural language context to extract entities. With the new models, which use both structural and natural language context, we observed significant improvements in model accuracy (F1 score) across diverse datasets of semi-structured and structured documents.

For example, we trained the new models on financial, insurance, and banking documents containing dense paragraph text, lists, bullets, and other semi-structured elements to extract entities such as NAME, DATE-TIME, EMAIL, ADDRESS, SSN, PHONE, BANK-ACCOUNT-NUMBER, BANK-ROUTING, and DRIVER-ID. The new models achieved an F1 score of 0.96, significantly higher than a traditional custom plain text named entity recognition (NER) model trained on the same documents after flattening them to text.
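F1 is the harmonic mean of precision and recall, so it rewards models that both find most of the true entities and avoid false positives. The helper below is a generic illustration computed from true positive, false positive, and false negative counts; it is not Amazon Comprehend’s evaluation code, and how predicted and reference entities are matched is left to the caller as an assumption.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F1 from entity-level match counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean; algebraically equal to 2*tp / (2*tp + fp + fn).
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1
```

With hypothetical counts of 9 correct predictions, 1 spurious prediction, and 3 missed entities, precision is 0.9, recall is 0.75, and F1 is about 0.82, which shows how missed entities pull the score down even when precision is high.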

Conclusion

This post demonstrated the flexibility and accuracy of the new Amazon Comprehend custom entity recognition API for processing varying document layouts, such as dense text and lists or bullets, in PDF, Word, and plain text documents. This new feature lets you efficiently extract entities in semi-structured contexts with a single managed model in Amazon Comprehend, without first converting your documents to plain text. For more information, read our documentation and follow the code in GitHub.

About the Authors

Anant Patel is a Sr. Product Manager-Tech on the Amazon Comprehend team within AWS AI/ML.

Andrea Morton-Youmans is a Product Marketing Manager on the AI Services team at AWS. Over the past 10 years she has worked in the technology and telecommunications industries, focused on developer storytelling and marketing campaigns. In her spare time, she enjoys heading to the lake with her husband and Aussie dog Oakley, tasting wine and enjoying a movie from time to time.
