Calculate inference units for an Amazon Rekognition Custom Labels model

Amazon Rekognition Custom Labels allows you to extend the object and scene detection capabilities of Amazon Rekognition to extract information from images that is uniquely helpful to your business. For example, you can find your logo in social media posts, identify your products on store shelves, classify machine parts in an assembly line, distinguish healthy and infected plants, or detect your animated characters in videos.

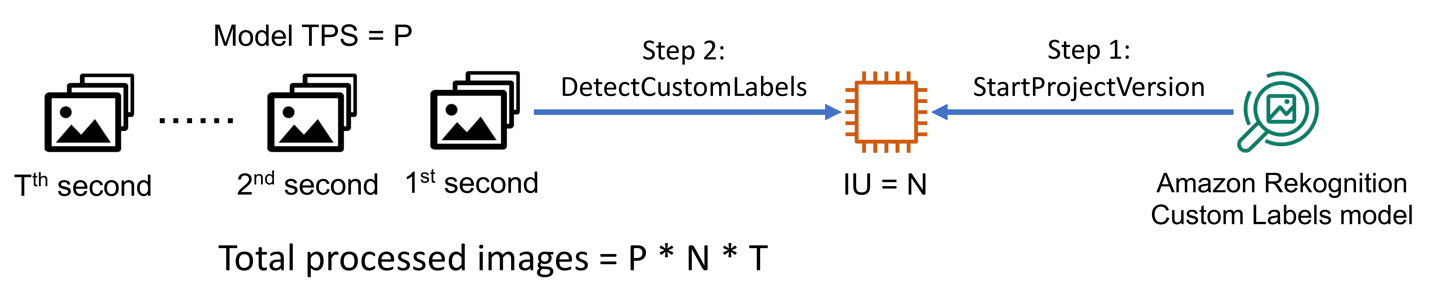

Amazon Rekognition Custom Labels provides a simple end-to-end experience where you start by labeling a dataset. Amazon Rekognition Custom Labels then builds a custom machine learning (ML) model for you by inspecting the data and selecting the right ML algorithm. After your model is trained, you can start using it immediately for image analysis. You start a model by calling the StartProjectVersion API and providing the minimum number of inference units (IUs) to use. A single IU represents one unit of compute power. The number of images you can process with a single IU in a certain time frame depends on many factors, such as the size of the images processed and the complexity of the custom model. As you start the model, you can provide a higher number of IUs to increase the transactions per second (TPS) throughput of your model. Amazon Rekognition Custom Labels then provisions multiple compute resources in parallel to process your images more quickly.

However, determining the right number of IUs for your workload is tricky: over-provisioning IUs incurs unnecessary cost, and provisioning too few IUs causes requests to exceed the provisioned throughput. Because there is little guidance on how to calculate the appropriate number of IUs, some customers over-provision to make sure their workloads run without exception errors, which can be quite costly. Other customers spend a lot of time adding IUs until their workloads run smoothly. In this post, we show you how to calculate the IUs needed to meet your workload performance requirement at the lowest possible cost.

Understanding inference units

For this post, we use a commonly seen customer scenario to explain the concept of IUs.

For a specific use case in object/scene detection or classification, you train an Amazon Rekognition Custom Labels model. After the model is trained, you need to start the model for inference. Let’s assume you start your custom model at 2:00 PM, stop it at 5:00 PM, and provision 1 IU. Your total inference hours billed is then 3 hours. Assuming that the model lets you analyze five images per second (5 TPS), you can process 54,000 (5*3,600*3) images in 3 hours.

Now let’s assume that you want to process twice the number of images (108,000) in 3 hours using the same model. You can start the model for the same duration of 3 hours and provision 2 IUs. Similarly, if you need to process 54,000 images but want to reduce the processing time from 3 hours to 1 hour, you can provision 3 IUs, which means processing 15 images per second and then stopping the model after 1 hour. Your total inference hours billed would be 6 hours (3 hours elapsed * 2 IUs) and 3 hours (1 hour elapsed * 3 IUs), respectively. Because your throughput and cost are based on the provisioned IUs per hour, it’s important to calculate the right IUs needed for your workload.
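The arithmetic in these scenarios can be reproduced with a short sketch. It assumes, as the examples above do, that throughput scales linearly with the number of IUs and that billing is elapsed hours multiplied by provisioned IUs. The function names are illustrative and not part of any AWS SDK.

```python
def images_processed(tps_per_iu: float, inference_units: int, hours: float) -> int:
    """Images a model can analyze, assuming throughput scales linearly with IUs."""
    return int(tps_per_iu * inference_units * 3600 * hours)


def billed_inference_hours(inference_units: int, hours: float) -> float:
    """Inference hours billed: elapsed hours multiplied by provisioned IUs."""
    return inference_units * hours


if __name__ == "__main__":
    # (IUs, elapsed hours) for the three scenarios, all at 5 TPS per IU.
    for ius, hours in [(1, 3), (2, 3), (3, 1)]:
        print(
            f"{ius} IU(s) for {hours} h: "
            f"{images_processed(5, ius, hours)} images, "
            f"{billed_inference_hours(ius, hours)} inference hours billed"
        )
```

Note that the third scenario processes the same 54,000 images as the first for the same 3 billed inference hours, only in a third of the elapsed time.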

After you train an Amazon Rekognition Custom Labels model, you can start the model with one or more IUs. If your request load is higher than the maximum TPS supported by the provisioned IUs, Amazon Rekognition Custom Labels returns a ProvisionedThroughputExceededException for all requests over the max TPS, which indicates that the model is fully utilized. In general, max TPS depends on the trained custom model, the input images, and the number of IUs provisioned. Therefore, you can determine the required IUs by measuring the max TPS of a single IU. To do this, start the model with 1 IU and progressively increase input requests until ProvisionedThroughputExceededException is raised. After you get the max TPS throughput of the model, you can use it to calculate the overall IUs needed for your workload. For example, if the max TPS throughput is 5 TPS and you need to process 15 images per second, you have to start the model with 3 IUs.
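As a minimal sketch of that calculation, the required number of IUs is the target throughput divided by the measured max TPS of one IU, rounded up. The helper name is hypothetical, and the sketch assumes throughput scales linearly with IUs.

```python
import math


def required_inference_units(target_tps: float, max_tps_per_iu: float) -> int:
    """Smallest whole number of IUs whose combined max TPS covers target_tps."""
    if target_tps <= 0 or max_tps_per_iu <= 0:
        raise ValueError("target_tps and max_tps_per_iu must be positive")
    # Round up: a fractional IU cannot be provisioned, and at least 1 IU is needed.
    return max(1, math.ceil(target_tps / max_tps_per_iu))


if __name__ == "__main__":
    print(required_inference_units(15, 5))  # the example from the text
```

Rounding up matters when the target is not an exact multiple of the per-IU throughput: a target of 16 images per second at 5 TPS per IU needs 4 IUs, not 3.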

Solution overview

In the following sections, we discuss how to calculate max TPS throughput of an Amazon Rekognition Custom Labels model. Then you can calculate the exact IUs needed as you start the model to process your images.

We walk through the following high-level steps:

- Train a model using Amazon Rekognition Custom Labels.

- Start your model.

- Launch an Amazon Elastic Compute Cloud (Amazon EC2) instance and set up your test environment.

- Create a test script.

- Add sample image(s) and run the script.

- Review the program output.

Train a model using Amazon Rekognition Custom Labels

You start by training a model in Amazon Rekognition Custom Labels for your use case. To learn more about how to create, train, evaluate, and use a model that detects objects, scenes, and concepts in images, refer to Getting started with Amazon Rekognition Custom Labels.

Start your model

After your model is trained, start the model with 1 IU. You can use the following command from the AWS Command Line Interface (AWS CLI) to start your model:

In addition to the AWS CLI, the following code snippet shows how you can also use the API to start your Amazon Rekognition Custom Labels model:
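A minimal sketch of the API route, assuming the AWS SDK for Python (boto3): the Rekognition client exposes `start_project_version`, which takes the model's `ProjectVersionArn` and `MinInferenceUnits`. The helper name `start_model` and the ARN in the usage comment are placeholders for illustration.

```python
def start_model(client, project_version_arn: str, min_inference_units: int = 1) -> str:
    """Start a trained model version and return the status reported by the service.

    `client` is expected to be a boto3 Rekognition client, for example
    boto3.client("rekognition").
    """
    response = client.start_project_version(
        ProjectVersionArn=project_version_arn,
        MinInferenceUnits=min_inference_units,
    )
    return response["Status"]


# Example usage (requires boto3 and AWS credentials; the ARN is a placeholder):
#
#   import boto3
#   rekognition = boto3.client("rekognition")
#   status = start_model(rekognition, "<your-project-version-arn>", 1)
#   print(status)
```

Starting a model is asynchronous, so the returned status typically indicates that the model is still starting. Wait until the model is running before sending test traffic.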

Launch an EC2 instance and set up your test environment

Launch an EC2 instance that you use to run a script that uses a sample image to call the model we started in the previous step. You can follow the steps in the quick start guide to launch an EC2 instance. Although the guide uses an instance type of t2.micro, you should use a compute-optimized instance type such as C5 to run this test.

After you connect to the EC2 instance, run the following commands from the terminal to install the required dependencies:

Create a test script

Create a Python file named tps.py with the following code:

Add sample image(s) and run the script

Create a folder named images and add at least one sample image. This folder of images is used for inference using the model trained with Amazon Rekognition Custom Labels.

Run the Python script to calculate model TPS throughput:

Review the program output

This program gradually increases the load of requests using the representative images to maximally utilize the model. It creates multiple threads in parallel, and adds new threads over time. It runs for a few minutes and prints tabular formatted statistical information including number of requests, number of failed requests, latency statistics (average, min, max, median), average requests per second, and average failures per second. When it completes all the runs, it prints the max TPS (Max supported TPS) and 95th percentile latency in milliseconds (95th percentile response time).
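The ramp-up behavior described above could be sketched as follows. This is not the tps.py script itself, only an illustration of the technique using the standard library: it adds one thread per step, stops at the first rejected request, and reports the best observed TPS with its 95th percentile latency. The names `run_step` and `find_max_tps` are hypothetical. The `call` argument is any function that sends one inference request and returns `False` when the request is rejected.

```python
import statistics
import threading
import time
from typing import Callable, List, Tuple


def run_step(call: Callable[[], bool], workers: int, duration_s: float) -> Tuple[int, int, List[float]]:
    """Run `workers` threads for `duration_s` seconds.

    Returns (successful requests, failed requests, success latencies in ms).
    """
    lock = threading.Lock()
    ok, failed, latencies = 0, 0, []
    deadline = time.monotonic() + duration_s

    def worker() -> None:
        nonlocal ok, failed
        while time.monotonic() < deadline:
            started = time.monotonic()
            succeeded = call()
            elapsed_ms = (time.monotonic() - started) * 1000
            with lock:
                if succeeded:
                    ok += 1
                    latencies.append(elapsed_ms)
                else:
                    failed += 1

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return ok, failed, latencies


def find_max_tps(call: Callable[[], bool], max_workers: int = 16,
                 step_duration_s: float = 5.0) -> Tuple[float, float]:
    """Add one thread per step until a request fails; return (max TPS, p95 latency ms)."""
    best_tps, best_p95 = 0.0, 0.0
    for workers in range(1, max_workers + 1):
        ok, failed, latencies = run_step(call, workers, step_duration_s)
        tps = ok / step_duration_s
        if tps > best_tps and len(latencies) >= 2:
            best_tps = tps
            # quantiles(n=20) yields 19 cut points; the last one is the 95th percentile.
            best_p95 = statistics.quantiles(latencies, n=20)[-1]
        if failed:
            break  # the model rejected requests, so it is saturated
    return best_tps, best_p95


# With boto3, `call` might wrap detect_custom_labels (assumption, not from the post):
#
#   def call() -> bool:
#       try:
#           client.detect_custom_labels(ProjectVersionArn=arn, Image={"Bytes": image_bytes})
#           return True
#       except client.exceptions.ProvisionedThroughputExceededException:
#           return False
```

Passing the request function in as a parameter keeps the ramp-up logic independent of AWS, so it can be tried against a stub before pointing it at a running model.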

Let’s assume that the measured max TPS throughput of the model with 1 IU is 3, and you plan to process 12 images per second. In this case, you can start the model with 4 IUs (12 / 3) to achieve the desired throughput.

Conclusion

In this post, we showed how to calculate the IUs needed to meet your workload performance requirement at the lowest possible cost. By right-sizing the IUs for your model, you can process images at the required throughput without paying extra for over-provisioned resources. To learn more about Amazon Rekognition Custom Labels use cases and other features, refer to Key features.

In addition to optimizing the number of IUs, if your workload requires processing images in batches (such as once a day or week, or at scheduled times during the day), you can provision your custom model at scheduled times. The post Batch image processing with Amazon Rekognition Custom Labels shows you how to build a cost-optimal batch solution with Amazon Rekognition Custom Labels that provisions your custom model at scheduled times, processes all your images, and deprovisions your resources to avoid incurring extra cost.

About the Authors

Ditesh Kumar is a Software Developer working for Amazon Rekognition Custom Labels. He is focused on building scalable Computer Vision services and adding new features to enhance usability and adoption of Custom Labels. In his spare time Ditesh is a big fan of hiking and traveling and enjoys spending time with his family.

Kashif Imran is a Principal Solutions Architect at Amazon Web Services. He works with some of the largest AWS customers who are taking advantage of AI/ML to solve complex business problems. He provides technical guidance and design advice to implement computer vision applications at scale. His expertise spans application architecture, serverless, containers, NoSQL, and machine learning.

Sherry Ding is a Senior AI/ML Specialist Solutions Architect. She has extensive experience in machine learning with a PhD degree in Computer Science. She mainly works with Public Sector customers on various AI/ML related business challenges, helping them accelerate their machine learning journey on the AWS Cloud. When not helping customers, she enjoys outdoor activities.
